
Volume 21, Number 3

Min Young Doo¹, Curtis J. Bonk², and Heeok Heo³

¹College of Education, Kangwon National University; ²School of Education, Indiana University, USA; ³School of Education, Sunchon National University, Korea

The significance of scaffolding in education has received considerable attention. Many studies have examined the effects of scaffolding with diverse groups of participants, purposes, learning outcomes, and learning environments. The purpose of this research was to conduct a meta-analysis of the effects of scaffolding on learning outcomes in online learning environments in higher education. This meta-analysis included 64 effect sizes from 18 journal articles published in English from 2010 to 2019, reporting studies conducted in eight countries. The meta-analysis revealed that scaffolding in an online learning environment has a large and statistically significant effect on learning outcomes. Scaffolding yielded a larger effect size for meta-cognitive outcomes than for affective and cognitive outcomes. In terms of scaffolding purposes, meta-cognitive scaffolding outnumbered other types of scaffolding. Computers were also more prevalent than human instructors as a scaffolding source in online learning environments. In addition, scholars in the United States produced a large portion of the scaffolding research. Finally, language and literature is the academic area that has adopted scaffolding most widely. Given that effective scaffolding can improve the quality of learning in online environments, the current findings are expected to help improve online learning outcomes and learning experiences.

Keywords: scaffolding, online learning, higher education, meta-analysis, effect size

Online learning has become prevalent in higher education with increasing numbers of students taking online courses (Seaman, Allen, & Seaman, 2018). Despite the rapid growth of online learning, approximately 23% of students were concerned about the “quality of instruction and academic support” for online courses in higher education (BestColleges, 2019, p. 9). A MOOC instructor survey (Doo, Tang, Bonk, & Zhu, 2020) indicated the significance of appropriate academic support and the need to implement effective instructional strategies, such as scaffolding, to enhance the quality of learning in an online learning environment.

Wood, Bruner, and Ross (1976) defined scaffolding as a “process that enables a child or novice to solve a problem, carry out a task or achieve a goal which would be beyond his unassisted efforts” (p. 90). The first author’s favorite metaphor for scaffolding is teaching a child to ride a bike. At first, when the learner starts pedaling, an assistant needs to hold the bike seat firmly because the child may lose control. Next, while the learner is learning to balance, the assistant alternates between holding and releasing the seat as learning progresses. Finally, once the learner has a sense of balance, the assistant lets go of the bike, a step known as fading. Supporting learning in such small, gradually withdrawn steps is the essence of scaffolding.

Scaffolding has received considerable attention as an effective instructional strategy because it helps students engage in learning and enhances learning outcomes (Belland, Walker, Kim, & Lefler, 2017). Due to increasing interest in scaffolding as an instructional strategy, many scholars have researched this process; however, the research findings, to date, have been inconsistent and even conflicting. For example, Gašević, Adesope, Joksimović, and Kovanović (2015) found that conceptual scaffolding has positive effects in asynchronous online discussions, whereas Barzilai and Blau (2014) reported that conceptual scaffolding had little or no significant effect on learning. One way to synthesize these inconsistent research findings is to conduct a meta-analysis of the literature. Glass (1976) first introduced this approach and defined it as “the statistical analysis of a large collection of analysis results from individual studies for the purpose of integrating the findings” (p. 3). The purpose of a meta-analysis is to combine data from several individual studies to summarize or identify the common effects and to assess the dispersion among the findings (Borenstein, Hedges, Higgins, & Rothstein, 2009; Glass, 1976).

Numerous studies, including meta-analyses (e.g., Belland et al., 2017; Kim, Belland, & Walker, 2018), have investigated the effects of scaffolding on learning outcomes. However, previous studies have focused only on a particular subject matter (i.e., STEM) or on specific scaffolding sources, such as computer-based scaffolding. In addition, few studies have examined the influence of scaffolding on undergraduate and graduate students (i.e., higher education) in online learning environments. According to Brown et al. (2020), recent trends in higher education include (a) increased student diversity, (b) alternative pathways to education, and (c) the sustainable growth of online education. These three trends are not mutually exclusive; rather, they are interconnected. As student populations become more diverse and require alternative ways to learn, there is an increasing need for online learning in higher education. Despite the proliferation of online learning in higher education and the significance of scaffolding for learning, research on scaffolding that focuses exclusively on higher education contexts is lacking. In response, this study examined the effects of scaffolding on learning outcomes in online courses in higher education by conducting a meta-analysis of the existing research.

Based on the definition of scaffolding by Wood et al. (1976), the three distinctive features of scaffolding are (a) contingency, (b) intersubjectivity, and (c) transfer of responsibility (Belland, 2017; Pea, 2004). Contingency refers to the ongoing assessment of students’ abilities on specific tasks so that the teacher can tailor scaffolding activities to those abilities and provide them at appropriate times. Belland (2014) viewed these scaffolding activities as providing temporary support for learners.

Intersubjectivity often refers to a temporary shared collective understanding or common framework among learners or problem-solving participants. As teams or groups of learners find common ground (Rogoff, 1995) or experience episodes of shared thoughts (Levine & Moreland, 1991), they can more easily exchange their evolving ideas, build or augment new knowledge, and negotiate meaning (Bonk & Cunningham, 1998). Explicit displays of shared knowledge, such as those found in discussion forums, wikis, social media, and collaborative technologies, should foster participant intersubjectivity and perspective taking (Bonk & Cunningham, 1998). Teams and groups of learners with enhanced levels of intersubjectivity should be able to identify solutions to problems for successful learning. Finally, transfer of responsibility means that scaffolding must encourage learners to gradually take over responsibility for, or ownership of, learning from those who provide the scaffolding (i.e., instructors or peers). To satisfy the purpose of scaffolding, learners should eventually be able to perform tasks independently. Thus, transfer of responsibility emphasizes the importance of reducing scaffolding activities (i.e., withdrawing support) over time.

Critical questions to ask when designing scaffolding activities as an instructional strategy include “what to scaffold, when to scaffold, how to scaffold and when to fade scaffolding” (Lajoie, 2005, p. 542). In terms of what to scaffold, Hannafin, Land, and Oliver (1999) divided scaffolding into four types: (a) conceptual scaffolding helps learners identify essential themes and related knowledge; (b) meta-cognitive scaffolding helps learners monitor and reflect on the learning process; (c) strategic scaffolding provides alternative ways to work on a task; and (d) procedural scaffolding helps learners use resources and tools for learning, for example, by providing an orientation to system functions and features. The reported effect sizes of these four types of scaffolding have varied widely. Kim et al. (2018) found small-to-moderate effect sizes for meta-cognitive (g = 0.384) and strategic scaffolding (g = 0.345) on students’ learning outcomes in science, technology, engineering, and math (STEM), whereas the effect size of conceptual scaffolding (g = 0.126) was small. They suggested that these differences may arise because problem solving demands meta-cognitive and strategic scaffolding more than conceptual scaffolding. These results imply that, when providing scaffolding, instructors need to consider factors that influence learning outcomes, such as the type of learning and the characteristics of learners.

The type of scaffolding also depends on who provides it (e.g., instructors, tutors, or peers) and on the technology used (e.g., computers, intelligent tutoring systems, or artificial intelligence) (Belland, 2014; Kim & Hannafin, 2011). In the past, teachers and tutors were the primary designers of scaffolding activities, but technologies such as computers have recently grown in popularity as alternative sources of learning support (Devolder, van Braak, & Tondeur, 2012). Emerging technologies, such as artificial intelligence and learning analytics using big data, have substantially advanced computer-based scaffolding. Jill Watson, the world’s first artificially intelligent (AI) teaching assistant, developed at Georgia Tech (the Georgia Institute of Technology), is a cutting-edge example of computer-based scaffolding (Maderer, 2017). Jill Watson was a popular teaching assistant because of her kind and prompt replies to students’ inquiries throughout the semester. At the end of the semester, students were surprised to learn that she was a chatbot created by their instructor, Professor Goel (McFarland, 2016). AI-based teaching assistants like Jill Watson are expected to be adopted more widely in higher education to facilitate learning as an alternative to human instructors or teaching assistants (Maderer, 2017).

By considering the core characteristics of scaffolding (i.e., learning diagnosis, fading, and contingent support for learners), educators can determine when to scaffold and when to fade scaffolding. In principle, all scaffolds are gradually removed as learners develop. Diagnostic methods, such as dynamic assessment and monitoring learners’ understanding while they carry out tasks or solve problems, can capture relevant data (Lajoie, 2005) and provide the basic information needed to determine the need for scaffolding. However, dynamic assessment of learning processes and the consequences of fading scaffolds have not been explored sufficiently in online learning contexts, and research so far has indicated mixed effects on learning outcomes (Ge, Law, & Huang, 2012). For example, Wu and Pedersen (2011) found that students who received faded computer-based procedural scaffolds did not perform well in science inquiries, and Belland, Walker, Olsen, and Leary (2015) reported larger effects for scaffolding without fading.

Scaffolding strategies have been more rigorously designed and implemented in the cognitive learning domain than in other domains, and many researchers have reported on their effectiveness (Proske, Narciss, & McNamara, 2012; van Merriënboer & Kirschner, 2012). In particular, Belland et al. (2017) analyzed 144 experimental studies examining the effects of computer-based scaffolding on STEM learners’ cognitive learning and reported a small-to-moderate effect size (g = 0.46). Although the effect size was only small to moderate, the effects of scaffolding on cognitive learning were statistically significant.

Online learning is predicted to continue increasing rapidly in higher education in the coming decade (Blumenstyk, 2018), particularly because online learning environments typically provide access to learning resources, tools, and communication media wherever students live and travel. Importantly, providing sufficient infrastructure for easy and convenient access to these learning tools across a university or institution can promote flexible and self-directed learning. For example, the rapid growth of MOOCs has expanded online learning options and educational opportunities that address learners’ motivations and needs (Milligan & Littlejohn, 2017). Thanks to technological advances, students now have more learning opportunities with fewer restrictions. However, recent surveys and research findings have identified concerns about online learning, including low learner engagement and low-quality instruction (Doo et al., 2020). To enhance online learning outcomes, learners need appropriate instructional support, such as timely scaffolding that encourages them to construct their own knowledge in the online learning environment (Oliver & Herrington, 2003), which, in turn, makes learning more meaningful and engaging.

Several recent studies have explored the effects of scaffolding in online learning in different countries. Ak (2016) examined the effects of computer-based scaffolding in problem-based online asynchronous discussions in Turkey. The findings indicated that students in scaffolding groups were qualitatively and quantitatively more productive in message posting and in communication than the non-scaffolding group. Ak’s study also reported that simple types of scaffolding, such as message labels and sentence openers in asynchronous discussions, facilitated students’ task-related interaction. Ak concluded that technology-based scaffolding in a problem-based online asynchronous discussion enhances students’ task orientation and facilitates task-related learning activities.

More recently, Kim and Lim (2019) compared the effects of supportive (i.e., conceptual) and reflective (i.e., meta-cognitive) scaffolding on problem-solving performance and learning outcomes in online ill-structured problem solving in Korea. The results indicated that the reflective scaffolding group outperformed the supportive scaffolding group in problem-solving performance and learning outcomes. The authors also found that there was a significant interaction between the type of scaffolding employed and the meta-cognitive effects in an online learning environment.

Yilmaz and Yilmaz (2019) also examined the effects of meta-cognitive support provided by a pedagogical agent on task and group awareness in computer-supported collaborative learning. Their findings indicated that such support positively influenced learners’ motivation, meta-cognitive awareness, and group processing. Given the pervasive global influence of online learning today across every educational sector and region of the world (Bonk, 2009), it is necessary to examine the effects of scaffolding as an instructional strategy to enhance learning outcomes in online learning.

The current research aimed to synthesize, using a meta-analysis, the effects of scaffolding strategies on learning in online courses in higher education. The specific research questions explored here are as follows:

Borenstein et al. (2009) explained that the primary purpose of conducting a meta-analysis is “to synthesize evidence on the effects of intervention or to support evidence-based policy or practice” (p. xxiii). A key strength of meta-analysis is generalizability: by synthesizing findings across numerous studies, it yields conclusions about the topics of interest that extend beyond any single study.

To conduct this meta-analysis, we selected studies that explored the effects of scaffolding on learning in online learning environments in higher education. To address our two primary research questions, we established inclusion criteria for the literature search to identify eligible studies. The inclusion criteria reflected the overriding purpose of the research and the associated research questions (Lipsey & Wilson, 2001). We set the criteria in terms of the main theme and outcome variables of the research, publication period, publication language, and eligibility for meta-analysis (Berkeljon & Baldwin, 2009). The specific inclusion criteria were that studies (a) examined the effects of scaffolding; (b) were written in English; (c) were published since the start of 2010; (d) were confined to an online learning environment; (e) were implemented in a higher education setting; (f) employed rigorous research designs; (g) focused on undergraduate or graduate students in higher education; (h) measured learning outcomes quantitatively with test results, student self-reports, activities, or observation; and (i) included sufficient information for effect-size calculations (e.g., means, standard deviations, F values, t-test results, or correlations).
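The nine criteria act as a conjunctive filter: a study is retained only if it meets all of them. The Python sketch below expresses that rule. The `CandidateStudy` record and its field names are hypothetical, criteria (e) and (g) are merged into one flag, and the 2019 upper bound is taken from the 2010 through 2019 search window the authors report rather than from criterion (c).

```python
from dataclasses import dataclass


@dataclass
class CandidateStudy:
    """Hypothetical screening record; field names are illustrative only."""
    examines_scaffolding: bool   # (a)
    language: str                # (b)
    year: int                    # (c), upper bound from the search window
    online: bool                 # (d)
    higher_education: bool       # (e) and (g): undergraduate or graduate learners
    rigorous_design: bool        # (f)
    quantitative_outcome: bool   # (h): tests, self-reports, activities, observation
    reports_es_statistics: bool  # (i): means/SDs, F, t, or correlations


def is_eligible(study: CandidateStudy) -> bool:
    """A study is included only if it satisfies every criterion."""
    return (
        study.examines_scaffolding
        and study.language == "English"
        and 2010 <= study.year <= 2019
        and study.online
        and study.higher_education
        and study.rigorous_design
        and study.quantitative_outcome
        and study.reports_es_statistics
    )


ok = CandidateStudy(True, "English", 2014, True, True, True, True, True)
too_old = CandidateStudy(True, "English", 2008, True, True, True, True, True)
print(is_eligible(ok), is_eligible(too_old))  # True False
```

Because every criterion is a hard requirement, failing any single one (here, the publication year) excludes the study.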

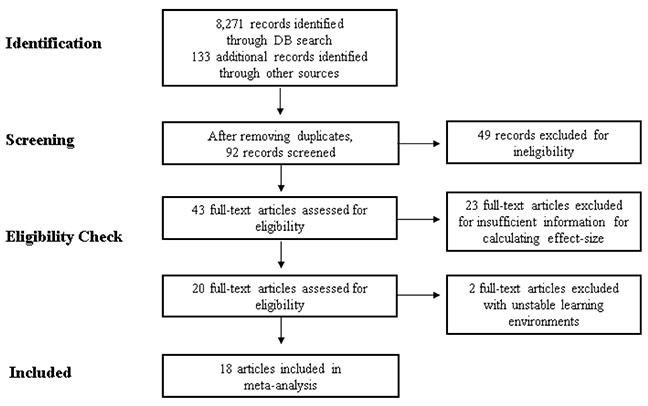

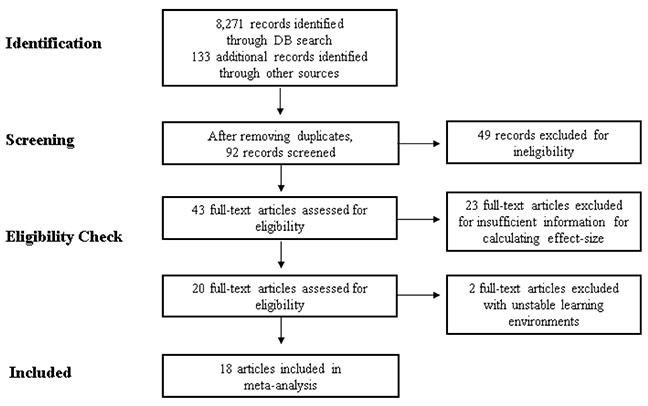

The literature search combined a computer-based database search with a manual search of major relevant journals. The following scholarly electronic databases specializing in the education field were searched using keywords: Academic Search Complete, Education Source (EBSCOhost), ERIC (ProQuest), PsycINFO, JSTOR, ProQuest Dissertations & Theses, and Google Scholar. In addition, to reduce the possibility of missing eligible studies in the database search, we manually searched top-tier journals on online learning and distance education, educational technology, educational psychology, and higher education. Combinations of the following keywords were used to search these sources: (a) scaffolding; (b) scaffolds or prompts; (c) online learning or distance education; (d) undergraduates, graduates, or higher education; and (e) learning outcomes or learning achievement. We limited the literature search to the years 2010 through 2019 to reflect more contemporary research trends on scaffolding and online learning. The literature search yielded 64 eligible effect sizes from 18 relevant articles published between 2010 and 2019. The search and exclusion process is illustrated in Figure 1.

Figure 1. Search and exclusion process.

From the 18 articles, we extracted information on four types of variables: (a) independent, (b) moderating, (c) dependent, and (d) other variables (see Table 1).

Table 1

Coding Information for Meta-Analysis

| Type of variable | Variable category | Sub-category coding |
|---|---|---|
| Independent variable | Scaffolding | Control group or treatment group |
| Moderating variables | Scaffolding purposes | Conceptual, meta-cognitive, strategic, or procedural |
| | Scaffolding sources | Computers, instructors, or peers |
| Dependent variable | Learning outcomes | Effect sizes (sample size, mean, correlation, p-value, and F- or t-values); types of learning outcomes (cognitive, meta-cognitive, or affective) |
| Other variables | Publication | Title, author, year, and name of journal |
| | Research design | Experimental, quasi-experimental, pre-experimental, or non-experimental |
| | Learning disciplines | Language and literature, science, education, communication, computing, and others |

Each variable category was coded using sub-categories (Table 1). First, learning outcomes were coded into three types: cognitive, meta-cognitive, or affective (van de Pol, Volman, & Beishuizen, 2010). The cognitive domain of learning includes content knowledge and the development of intellectual skills (Anderson & Krathwohl, 2000). The meta-cognitive domain of learning refers to knowledge of one’s own cognitive processes and the monitoring and control of one’s thinking (Flavell, 1979; Gagné, Briggs, & Wager, 1988). The meta-cognitive domain includes self-regulation, referring to “a learner’s cognitive, behavioral, and emotional mechanisms for sustaining goal-directed behavior” (Richey, 2013, p. 278). Finally, the affective domain of learning refers to students’ feelings or psychological states during the learning process, such as emotions, motivations, values, satisfaction, and attitudes (Anderson & Krathwohl, 2000).

The scaffolding purposes in this research were coded following the four types of scaffolding outlined by Hannafin et al. (1999): (a) conceptual, (b) meta-cognitive, (c) strategic, and (d) procedural scaffolding. When a study employed more than one scaffolding purpose (e.g., meta-cognitive and procedural scaffolding), only the purpose used most frequently was coded, rather than both. We classified the research designs of the studies into four groups: (a) experimental design (e.g., pre-test/post-test control group design or post-test-only control group design); (b) quasi-experimental design (e.g., multiple time-series design or non-equivalent control group design); (c) pre-experimental design (e.g., one-group pre-test/post-test design); and (d) non-experimental design or correlational studies (Campbell & Stanley, 1963).
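The single-purpose coding rule can be sketched as taking the most frequent purpose among a study's scaffolds. The input format (one purpose label per scaffold) is a hypothetical assumption, and so is the tie-breaking: the original does not say how ties were resolved, and this sketch simply keeps the purpose listed first.

```python
from collections import Counter


def code_purpose(purposes_used: list[str]) -> str:
    """Return the single scaffolding purpose coded for a study.

    `purposes_used` lists the purpose of each scaffold in the study
    (hypothetical input format). Ties go to the purpose listed first,
    because Counter.most_common orders equal counts by first appearance.
    """
    if not purposes_used:
        raise ValueError("study has no coded scaffolds")
    purpose, _count = Counter(purposes_used).most_common(1)[0]
    return purpose


print(code_purpose(["meta-cognitive", "procedural", "meta-cognitive"]))  # meta-cognitive
```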

Publication data. The 64 effect sizes came from 18 peer-reviewed scholarly articles published in English; the largest shares were published in 2014 (25.0%) and 2010 (15.6%). Articles meeting our inclusion criteria were published in every year from 2010 to 2019 except 2011 (see Table 2).

Table 2

Number of Effect Size Studies and Associated Journals

| Journal name | # of studies (% of total) |
|---|---|
| Instructional Science | 11 (17.2%) |
| Interdisciplinary Journal of Problem-Based Learning | 10 (15.6%) |
| Computers & Education | 9 (14.1%) |
| Educational Technology Research and Development | 6 (9.4%) |
| Journal of Adolescent & Adult Literacy | 5 (7.8%) |
| Journal of Research in Reading | 5 (7.8%) |
| Educational Technology & Society | 4 (6.3%) |
| Internet & Higher Education | 4 (6.3%) |
| Journal of College Science Teaching | 3 (4.7%) |
| British Journal of Educational Technology | 2 (3.1%) |
| Journal of Moral Education | 2 (3.1%) |
| Journal of Online Learning and Teaching | 2 (3.1%) |
| Australasian Journal of Educational Technology | 1 (1.6%) |

Studies using an experimental design (67.2%) far outnumbered those using a quasi-experimental design (26.6%). Specific designs included the randomized post-test control group design (54.7%), the pre-test/post-test design (25.0%), and the repeated-measures design (3.1%).

Participants and settings. Overall, the sample studies involved 4,852 participants, with an average sample size of 71.82 (range: 31 to 158). The ages of the participants ranged from 18 to 33.2 years, with a mean age of 21.57 (SD = 3.45). Highlighting the popularity of scaffolding research around the world, the sample studies were conducted in eight countries: the US (42.2%), Canada (14.1%), Turkey (12.5%), Taiwan (10.9%), Germany (7.8%), Greece (6.25%), the Netherlands (3.13%), and South Korea (3.13%). The studies were set in a diverse range of disciplines across several learning domains, including language and literature (e.g., vocabulary, writing, language; 25.0%), science (e.g., physics, biology, energy; 17.2%), education (e.g., instructional design, educational psychology; 18.8%), clinical communications (15.6%), computing (12.5%), health and medical (7.8%), and economics (3.1%).

Scaffolding. In terms of scaffolding purposes, meta-cognitive scaffolding (60.9%) was the most common, followed by procedural scaffolding (23.5%), conceptual scaffolding (7.8%), and strategic scaffolding (7.8%). As scaffolding sources, computers or embedded systems (68.8%) outnumbered instructors (23.4%) and peers (7.8%). The duration of scaffolding also varied widely across studies, from 80 minutes to 10 weeks; because some studies in the sample came from independent courses, their durations differed considerably. Learning outcomes were classified into three domains: affective (14.1%), cognitive (64.0%), and meta-cognitive (21.9%). Notably, more than 60% of the effect sizes concerned cognitive learning outcomes.

Once all the authors had confirmed the coding scheme, the first author alone performed the actual coding. To compensate for the weakness of relying on a single coder, the coding was completed twice, five months apart, to check coding reliability. The second round identified four incorrectly coded items, which were then corrected.
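The authors report the number of corrections (four) but not an agreement statistic. One common way to quantify intra-coder consistency between two coding rounds is Cohen’s kappa, which discounts the agreement expected by chance. The sketch below is our illustration rather than the authors’ procedure, and the codes it uses are hypothetical.

```python
from collections import Counter


def cohens_kappa(round1, round2):
    """Chance-corrected agreement between two codings of the same items."""
    if len(round1) != len(round2) or not round1:
        raise ValueError("both rounds must code the same non-empty set of items")
    n = len(round1)
    observed = sum(a == b for a, b in zip(round1, round2)) / n
    counts1, counts2 = Counter(round1), Counter(round2)
    # Agreement expected by chance from each round's marginal category frequencies
    expected = sum(counts1[c] * counts2[c] for c in counts1.keys() | counts2.keys()) / n**2
    if expected == 1:  # only one category used in both rounds, so agreement is perfect
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical outcome-domain codes for four effect sizes in two rounds
first = ["cognitive", "cognitive", "meta-cognitive", "affective"]
second = ["cognitive", "meta-cognitive", "meta-cognitive", "affective"]
print(round(cohens_kappa(first, second), 3))  # 0.636
```

Raw agreement in this toy example is 75%, but kappa is lower (about 0.64) because some of that agreement would occur by chance.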

We adopted the random-effects model because the fixed-effect model rests on two key assumptions with associated limitations (Borenstein et al., 2009). First, a fixed-effect model estimates the effect size only for the studies at hand, so its results do not generalize to a broader population of studies. Second, a fixed-effect model assumes that all studies share the same true effect size, so that any observed differences among them reflect sampling error alone. Given these restrictive assumptions, the random-effects model was better suited to this research, because the sample studies differed in their numbers of participants and mean ages, as well as in academic discipline, country, and research design.
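To make the contrast concrete, the sketch below pools effect sizes both ways with inverse-variance weights. It assumes the DerSimonian-Laird estimator of the between-study variance τ², a common default; the original does not state which estimator was used, and the two example studies are hypothetical.

```python
def pool(effects, variances):
    """Return (fixed-effect mean, random-effects mean, tau^2)."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two effect sizes with matching variances")
    k = len(effects)
    w = [1 / v for v in variances]  # fixed-effect (inverse-variance) weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    # DerSimonian-Laird method-of-moments estimate of between-study variance
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Random-effects weights add tau^2 to every within-study variance
    w_re = [1 / (v + tau2) for v in variances]
    random_mean = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return fixed, random_mean, tau2


# Hypothetical: one precise study (g = 0.2) and one imprecise study (g = 1.0)
fixed, random_mean, tau2 = pool([0.2, 1.0], [0.01, 0.25])
print(round(fixed, 3), round(random_mean, 3), round(tau2, 3))  # 0.231 0.45 0.19
```

The fixed-effect mean sits close to the precise study, whereas adding τ² to each variance evens out the weights and pulls the random-effects mean toward the less precise study.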

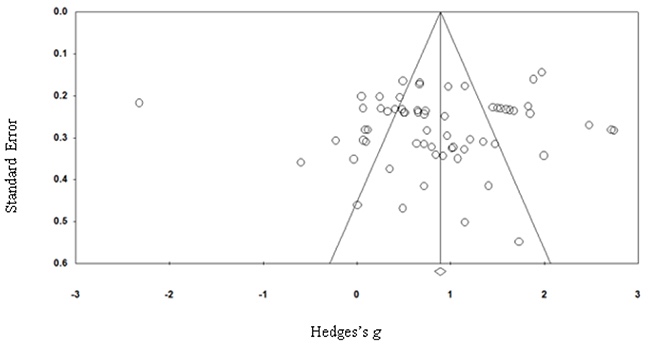

To calculate the effect sizes, we used Hedges’s g (Hedges & Olkin, 1985). The standardized mean difference between the groups, Cohen’s d, was first obtained as the outcome measure for each study, and the Cohen’s d estimates were then converted to Hedges’s g to correct for the upward bias of d in small samples (Hedges & Olkin, 1985). To estimate the potential influence of publication bias on the results, a funnel plot was created, as shown in Figure 2 (Harbord, Egger, & Sterne, 2006). The funnel plot for the effects of scaffolding on learning outcomes showed more samples with large effect sizes, but the plot was moderately symmetrical, indicating that the findings were not greatly influenced by publication bias. All analyses were conducted using Comprehensive Meta-Analysis 3.0 for Windows (Borenstein et al., 2009).
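For studies that report group means and standard deviations, the d-to-g conversion can be sketched as follows. The formulas are the standard ones given by Borenstein et al. (2009); the group statistics are hypothetical, and studies reporting only F, t, or correlations would need other conversion formulas not shown here.

```python
import math


def hedges_g(m1, m2, sd1, sd2, n1, n2):
    """Hedges' g and its sampling variance for two independent groups.

    Uses the pooled-SD Cohen's d and the correction J = 1 - 3 / (4*df - 1),
    with df = n1 + n2 - 2.
    """
    df = n1 + n2 - 2
    sd_pooled = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    d = (m1 - m2) / sd_pooled
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    j = 1 - 3 / (4 * df - 1)  # shrinks d slightly toward zero
    return j * d, j**2 * var_d


# Hypothetical treatment (scaffolded) vs. control group statistics
g, var_g = hedges_g(80, 75, 10, 10, 30, 30)
print(round(g, 3), round(var_g, 4))  # 0.494 0.067
```

With 30 learners per group, d = 0.5 shrinks only slightly, to g ≈ 0.494; the correction matters more for the smallest samples in the review (31 participants).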

Figure 2. Funnel plot for the effects of scaffolding on learning outcomes.

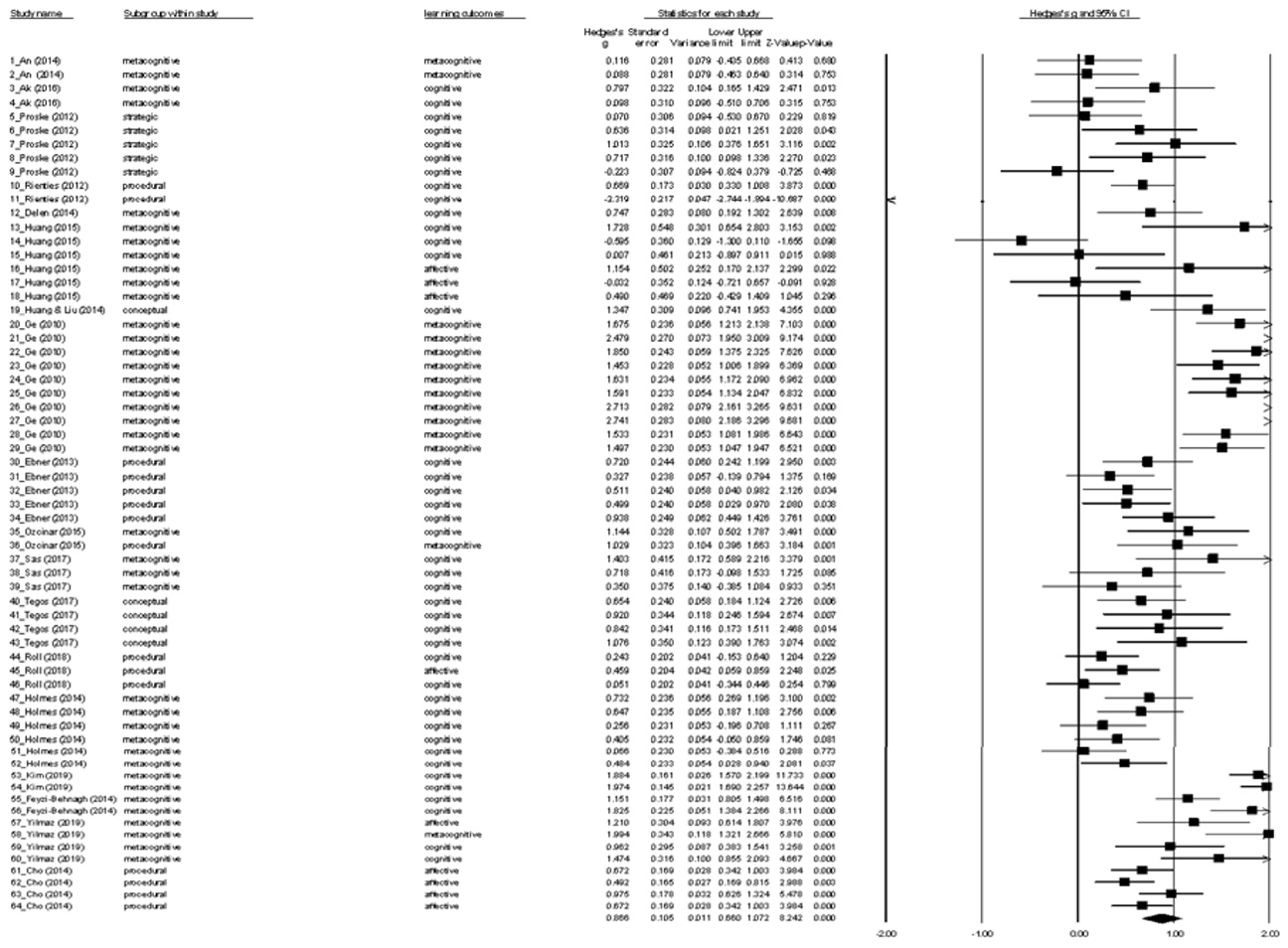

Using the random-effects model, the overall effect size of scaffolding on learning outcomes across the 64 effect sizes was g = .866 (95% CI [.660, 1.072], p < .001, k = 64). This is a large effect according to Cohen’s (1988) benchmarks, under which .2 is a small effect size, .5 a medium effect size, and .8 or above a large effect size.
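The reported interval and test statistic can be cross-checked. Assuming a symmetric, normal-theory 95% interval, the standard error is the interval width divided by 2 × 1.96, and z = g/SE. With the reported values this gives SE ≈ 0.105 and z ≈ 8.24, in line with the z = 8.242 reported below.

```python
from statistics import NormalDist


def summarize_from_ci(g, ci_low, ci_high, level=0.95):
    """Back out SE, z, and two-sided p from a symmetric normal-theory CI."""
    crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    se = (ci_high - ci_low) / (2 * crit)
    z = g / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return se, z, p


def cohen_label(g):
    """Cohen's (1988) benchmarks: .2 small, .5 medium, .8 large."""
    size = abs(g)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"


se, z, p = summarize_from_ci(0.866, 0.660, 1.072)
print(round(se, 3), round(z, 2), cohen_label(0.866))  # 0.105 8.24 large
```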

The overall effect size was significantly greater than zero, z = 8.242, p < .001, indicating that learners who received scaffolding achieved better learning outcomes than those who did not. The heterogeneity test (Q = 699.991, I² = 91.0%, p < .001) showed considerable variation among the effect size estimates, which supported our decision to use the random-effects model (Borenstein et al., 2009; Higgins & Green, 2008); by convention, I² values of about 25%, 50%, and 75% indicate small, moderate, and large amounts of heterogeneity, respectively. Thus, it was necessary to conduct sub-group analyses to examine systematically which factors moderated the effects of scaffolding on learning outcomes.
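I² expresses the share of the total variation in effect sizes that reflects true heterogeneity rather than sampling error: I² = (Q − df)/Q, with df = k − 1. Plugging in the reported Q = 699.991 and k = 64 reproduces the reported 91.0%. The benchmark labels follow the rough thresholds stated above; the cutoffs used between them are our simplification.

```python
def i_squared(q, k):
    """Higgins' I^2 (%) from Cochran's Q and the number of effect sizes k."""
    df = k - 1
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100


def heterogeneity_label(i2):
    """Rough benchmarks: about 25% small, 50% moderate, 75% large."""
    if i2 >= 75:
        return "large"
    if i2 >= 50:
        return "moderate"
    if i2 >= 25:
        return "small"
    return "below small"


i2 = i_squared(699.991, 64)
print(f"{i2:.1f}% {heterogeneity_label(i2)}")  # 91.0% large
```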

To estimate the effects of scaffolding on different types of learning outcomes (meta-cognitive, cognitive, and affective), we calculated effect sizes for each outcome type using a sub-group analysis under the random-effects model. The effect of scaffolding on meta-cognitive outcomes (g = 1.600) was larger than its effects on affective outcomes (g = 0.672) and cognitive outcomes (g = 0.652), Q(2) = 16.493, p < .001. Table 3 summarizes the results.

Table 3

Effects of Scaffolding by Learning Outcome

| Type of outcome | k | n | ES(g) | SE | 95% CI | z | p |
|---|---|---|---|---|---|---|---|
| Affective | 9 | 975 | .672 | .258 | [.166, 1.179] | 2.601 | .009 |
| Cognitive | 41 | 2693 | .652 | .121 | [.415, .890] | 5.37 | .000 |
| Meta-Cognitive | 14 | 1184 | 1.600 | .042 | [1.198, 2.001] | 7.87 | .000 |

Note. k = number of effect sizes; n = cumulative number of participants; ES = effect size (Hedges’s g); SE = standard error; 95% CI = 95% confidence interval; z = z-test statistic for the effect size (g/SE); p = significance level.

We also analyzed the effects of scaffolding on learning outcomes by scaffolding purpose (meta-cognitive, procedural, conceptual, and strategic). Meta-cognitive scaffolding (g = 1.104) and conceptual scaffolding (g = 0.964) had stronger effects on learning outcomes than did procedural scaffolding (g = 0.393) and strategic scaffolding (g = 0.440). According to the Q-test, the differences in effect sizes among scaffolding purposes were statistically significant (Q(3) = 11.584, p < .05; see Table 4).

Table 4

Effect Sizes and Confidence Intervals for Scaffolding Purposes

| Purpose | k | n | ES(g) | SE | 95% CI | z | p |
|---|---|---|---|---|---|---|---|
| Meta-Cognitive | 39 | 2788 | 1.104 | .122 | [.864, 1.344] | 9.108 | .000 |
| Procedural | 15 | 884 | .393 | .190 | [.021, .766] | 2.069 | .039 |
| Conceptual | 5 | 338 | .964 | .345 | [.287, 1.640] | 2.792 | .005 |
| Strategic | 5 | 210 | .440 | .344 | [-.235, 1.115] | 1.277 | .202 |

To examine the effects of scaffolding sources on learning outcomes, we calculated the effect sizes of scaffolding provided by computers, instructors, and peers. The effect size of scaffolding from peers (g = 1.813) was larger than that of scaffolding from instructors (g = 0.837) or computers (g = 0.764), and the effect sizes differed significantly across the three sources (Q(2) = 7.979, p < .05; see Table 5).

Table 5

Effect Sizes of Scaffolding Sources on Learning Outcomes

| Source | k | n | ES(g) | SE | 95% CI | z | p |
|---|---|---|---|---|---|---|---|
| Computers | 44 | 3063 | .764 | .121 | [.526, 1.01] | 6.304 | .000 |
| Instructors | 15 | 677 | .837 | .206 | [.433, 1.242] | 4.223 | .000 |
| Peers | 5 | 480 | 1.813 | .352 | [1.124, 2.503] | 5.152 | .000 |
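Table 5 contains enough information to cross-check the between-source test. The sketch below assumes the standard Q-between test on subgroup summaries, weighting each subgroup by 1/SE²; Comprehensive Meta-Analysis may differ in detail. With the rounded table values it gives Q ≈ 7.97 on 2 degrees of freedom, close to the reported Q(2) = 7.979.

```python
import math


def q_between(estimates, standard_errors):
    """Cochran's Q for differences among subgroup summary effects.

    Each subgroup is weighted by 1/SE^2; Q sums the weighted squared
    deviations of the subgroup effects from their weighted mean.
    """
    weights = [1 / se**2 for se in standard_errors]
    grand = sum(w * g for w, g in zip(weights, estimates)) / sum(weights)
    q = sum(w * (g - grand) ** 2 for w, g in zip(weights, estimates))
    df = len(estimates) - 1
    return q, df


# Rounded values from Table 5: computers, instructors, peers
q, df = q_between([0.764, 0.837, 1.813], [0.121, 0.206, 0.352])
# For df = 2 the chi-square upper-tail probability has the closed form exp(-Q/2)
p = math.exp(-q / 2) if df == 2 else float("nan")
print(round(q, 2), df, round(p, 3))  # 7.97 2 0.019
```

Almost all of Q comes from the peer subgroup, whose estimate lies far from the weighted mean even though its small k gives it the least weight.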

As Table 6 shows, we also examined the effects of other variables on learning outcomes, including research design, the country where the study took place, and learning discipline. In terms of research design, studies using an experimental design (g = 1.045) yielded stronger effects than those with a quasi-experimental (g = 0.422) or non-experimental design (g = 0.702) (Q(2) = 7.057, p < .05). The 64 effect sizes came from studies conducted in eight countries; however, about 42% came from the US, while some countries contributed only a few (e.g., 3.13% each for South Korea and the Netherlands). Thus, we combined the seven other countries into a single non-US category for comparison. The effect size of scaffolding studies conducted in the US (g = 1.162) was significantly larger than that of studies conducted outside the US (g = 0.641) (z Diff = 2.515, p < .05).
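The z Diff statistic can be reproduced from the US and non-US rows of Table 6 with the usual test for the difference between two independent estimates, z = (g₁ − g₂)/√(SE₁² + SE₂²). This assumes the two subgroups share no studies; with the rounded table values it gives z ≈ 2.52, matching the reported 2.515 up to rounding.

```python
import math
from statistics import NormalDist


def z_difference(g1, se1, g2, se2):
    """z test for two independent subgroup effects, with two-sided p."""
    z = (g1 - g2) / math.sqrt(se1**2 + se2**2)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# US vs. non-US values from Table 6
z, p = z_difference(1.162, 0.156, 0.641, 0.136)
print(round(z, 2), round(p, 3))  # 2.52 0.012
```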

A comparison of learning disciplines revealed that scaffolding in communications-related courses had the largest effect size (g = 1.905), followed by scaffolding in computing courses (g = 1.135) and in the field of education (g = 0.846). Effect sizes differed significantly among learning disciplines (Q(5) = 32.995, p < .001).

Table 6

Effect Sizes of Learning Outcomes: Research Design, Location, and Learning Discipline

| Variable | k | n | ES (g) | SE | 95% CI | z | p |
|---|---|---|---|---|---|---|---|
| Research design | | | | | | | |
| Experimental | 43 | 3511 | 1.045 | .123 | [.804, 1.286] | 8.492 | < .001 |
| Quasi-experimental | 17 | 709 | .422 | .203 | [.023, .820] | 2.072 | .038 |
| Non-experimental | 4 | 632 | .702 | .390 | [-.062, 1.467] | 1.800 | .072 |
| Location | | | | | | | |
| US | 27 | 2341 | 1.162 | .156 | [.856, 1.468] | 7.443 | < .001 |
| Non-US | 37 | 2511 | .641 | .136 | [.374, .908] | 4.711 | < .001 |
| Learning discipline | | | | | | | |
| Language and literature | 16 | 950 | .478 | .184 | [.118, .838] | 2.605 | .009 |
| Science | 11 | 919 | .485 | .208 | [.076, .893] | 2.326 | .020 |
| Education | 12 | 1150 | .846 | .200 | [.454, 1.238] | 4.229 | < .001 |
| Communications | 10 | 960 | 1.905 | .220 | [1.475, 2.336] | 8.672 | < .001 |
| Computing | 8 | 488 | 1.135 | .256 | [.634, 1.635] | 4.440 | < .001 |
| Other | 7 | 385 | .516 | .269 | [-.011, 1.043] | 1.918 | .055 |
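Each row of Tables 5 and 6 ties g, SE, the 95% CI, z, and p together under a normal approximation: CI = g ± 1.96·SE, z = g/SE, and p is the two-sided normal tail probability of z. The sketch below recomputes these quantities from g and SE alone, which is a quick way to spot transcription errors in a row. It assumes the reported intervals are Wald-type normal intervals, as the tabled values suggest; `row_stats` is a hypothetical helper name.

```python
import math

def row_stats(g, se, z_crit=1.96):
    """Wald-type 95% CI, z, and two-sided p for an effect size g with standard error se.

    Assumption: the sampling distribution of g is approximately normal.
    """
    lower, upper = g - z_crit * se, g + z_crit * se
    z = g / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return (lower, upper), z, p

# Non-experimental row of Table 6: g = .702, SE = .390.
(lo, hi), z, p = row_stats(0.702, 0.390)
print(f"95% CI [{lo:.3f}, {hi:.3f}], z = {z:.3f}, p = {p:.3f}")
```

For the non-experimental row this yields a CI of about [-0.062, 1.466], z = 1.800, and p ≈ .072, matching the table to within rounding. The interval includes zero exactly when p exceeds .05, which is another quick check to apply to every row.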

Since Wood et al. (1976) first defined scaffolding as support from experts that enables learners to accomplish what is beyond their current ability, scaffolding has been widely implemented as an effective instructional strategy (Belland, 2017; Kim & Hannafin, 2011). The current research was a meta-analysis of the effects of scaffolding on learning outcomes in online learning in higher education. The results indicated that scaffolding in an online learning environment has a large and statistically significant effect on learning outcomes, confirming the effectiveness of scaffolding as an instructional strategy and supporting previous meta-analyses of scaffolding. Notably, the current research yielded a larger effect size (g = 0.866) than previous meta-analyses did. For example, Belland et al. (2017) reported a medium effect size for computer-based scaffolding in STEM education (g = 0.46). Steenbergen-Hu and Cooper (2014) reported a moderate effect size for intelligent tutoring systems with college students (g = 0.32 to 0.37). Kim et al. (2018) conducted a Bayesian meta-analysis of computer-based scaffolding in problem-based learning for STEM and reported a small-to-moderate effect size (g = 0.385).

The large effect size in our research may be explained by the characteristics of its population: a key inclusion criterion was that the study be conducted in higher education. Belland et al. (2017) compared the effect sizes of scaffolding on cognitive learning outcomes across diverse participants, ranging from primary school students to adult learners, and reported findings similar to those of the current study. Interestingly, they reported that the effect sizes for scaffolded instruction with graduate students and adult learners were greater than those with younger learners, lending credence to our findings regarding scaffolded learning in higher education. Belland et al. (2017) also noted that “scaffolding’s strongest effects are in populations the furthest from the target learner population in the original scaffolding definition” (pp. 331-332), in which an expert assists children in extending their problem solving or strategic performance beyond what they could accomplish independently (Wood et al., 1976).

Steenbergen-Hu and Cooper’s (2013, 2014) two meta-analyses of different age groups offer another example of the influence of age. They investigated the effects of intelligent tutoring systems (ITS) on K-12 students’ mathematical learning in 2013 and on college students’ academic learning in 2014. They found that the overall effect size of ITS on K-12 students’ mathematical learning ranged from 0.01 to 0.09, whereas the effect size for college students was moderate (g = 0.32 to 0.37). They attributed the difference to (a) the types of intervention and methodologies; (b) the degree of intervention implementation (i.e., laboratory environments vs. real environments); and (c) learners’ age or educational level. They explained that

it is likely that ITS may function better for more mature students who have sufficient prior knowledge, self-regulation skills, learning motivation, and experiences with computers than for younger students who may still need to develop the above characteristics and need more human inputs to learn. (Steenbergen-Hu & Cooper, 2014, p. 342)

The large effect size in our research may also be related to the research designs of the individual scaffolding studies. More studies used experimental designs (k = 43, g = 1.045) than quasi-experimental (k = 17, g = 0.422) or non-experimental designs (k = 4, g = 0.702). Steenbergen-Hu and Cooper (2014) likewise noted that educational interventions in laboratory environments usually produce larger effects than those in real environments. Because our meta-analysis included substantially more experimental studies, which offer high internal validity and yielded larger effects in this sample, this design mix likely contributed to the large overall effect size.

In terms of the types of learning outcomes, few studies in our sample (9 of 64) examined the affective domain. The affective domain includes important learning outcomes, such as learning satisfaction and learning engagement. Given that satisfaction and engagement are strong predictors of students’ learning achievement (Coates, 2005; Kuh, 2003), more scaffolding should be provided to students in the affective domain. The meta-cognitive domain yielded a larger effect size than did the affective and cognitive domains. Because students in higher education are likely to engage in more activities requiring higher-order thinking and self-regulation than K-12 students, scaffolded instruction is expected to play an important role in the meta-cognitive domain.

The findings also indicated that peers are a strong source of scaffolding (g = 1.813); however, this finding should be interpreted with caution because few studies focused on peers (k = 5). The effects of scaffolding by instructors (g = 0.837) were only slightly larger than those of scaffolding by computers (g = 0.764), and their confidence intervals overlapped considerably (see Table 5). More studies in this analysis used computers (k = 44) than human instructors (k = 15) as a scaffolding source in an online learning environment.

This result is promising because it suggests that scaffolding by computers can improve the quality of online learning nearly as well as scaffolding by instructors. For example, MOOCs, which typically have larger class sizes and more heterogeneous participants than traditional classrooms, are expanding rapidly (Shah, 2019). Doo et al. (2020) found that key frustrations among MOOC instructors included a lack of interaction with students and difficulty providing timely feedback. If the effects of scaffolding by computers in an online learning environment are equivalent to those of human instructors, more social interaction and scaffolding that lead to learning outcomes will be available to online learners. AI-based scaffolding is expected to be implemented extensively in higher education in the near future, given the considerable progress in research on artificial intelligence and learning analytics (e.g., Adams Becker et al., 2018; Metz & Satariano, 2018).

Another key finding is that scaffolding studies have more often employed experimental designs than quasi-experimental designs. Experimental designs also yielded a large effect size (g = 1.045), compared with the small-to-moderate effect sizes of quasi-experimental and non-experimental designs (g = 0.422 to 0.702). The strength of an experimental design lies in its high internal validity (Vogt, 1999): it measures the effects of scaffolding as a treatment while controlling extraneous variables.

Among the eight countries included in this analysis, the US has been the most productive in terms of the quantity of scaffolding research in online learning environments (42.19% of effect sizes). US studies also had larger average sample sizes (about 87 vs. 68 participants per effect size) and a larger effect size than non-US studies. Future qualitative and quantitative studies might examine how scaffolding implementation differs by country from both instructor and student perspectives.

Among learning disciplines, scaffolding has been most widely adopted in language and literature (k = 16). However, the effect size in this area was small to moderate (g = 0.478). By comparison, the effects of scaffolding in computing, communications, and education were larger. Further investigation is needed into how scaffolding is implemented in each learning discipline in online higher education, and into guidelines for effective scaffolding strategies and features in different disciplines.

Given the importance of scaffolded support in online learning environments across the studies reviewed in this meta-analysis, combined with the proliferation of forms and types of technological support, it is now time to carry out more fine-tuned and focused research on this topic. Researchers should explore issues that yield more practical and strategic results for instructors and instructional designers, such as the timing of scaffolding, including decisions about fading it and eliminating it altogether.

It is important to explain the limitations of this meta-analysis for future researchers. A limited number of studies were included because only quantitative studies with sufficient information to calculate effect sizes were eligible. Restricting the literature search to 2010 through 2019, to reflect more contemporary research trends on scaffolding and online learning, also limited the pool of relevant studies. In addition, many studies identified in the search process were excluded because they did not meet our inclusion criteria. In terms of publication bias, studies with large effect sizes were overrepresented in the meta-analysis. Because eligible studies were difficult to locate, missing studies that could have corrected the asymmetry of the funnel plot were likely not included. Further limiting the generalizability of our findings, our search was limited to scholarly works published in English.

To strengthen external validity and obtain more robust findings, we recommend that future research include scaffolding studies published in local languages. Additional exploration could further identify specific instructional approaches that have been effective in online environments. Given the millions of learners enrolled in online learning in the US alone, the impact of such investigations would be substantial (Seaman et al., 2018). Another limitation of this research is that coding was completed by a single coder, so inter-coder reliability could not be analyzed. This limitation remains despite our effort to mitigate it by coding the data twice, with a time interval between the two rounds. Future researchers could use multiple coders to estimate inter-coder reliability.

Given that online learning has become a common and accepted learning environment over the past two decades (Bonk, 2009), the importance of the quality of online learning cannot be overemphasized. In particular, scaffolding can improve the quality of learning, including learning outcomes. This research confirmed the large effects of scaffolding on learning outcomes in online higher education environments. By providing additional analyses of scaffolded instruction research in the discussion, this research has implications for online instructors, online learners, and higher education administrators who manage online learning programs and degree options.

Vygotsky (1978) emphasized the importance of social interaction and critical support for learning. From this perspective, scaffolding is expected to substantially improve online learners’ outcomes and learning experiences (Bonk & Cunningham, 1998). Clearly, this research provides strong support for this hypothesis. The variety of studies included in this review indicates that educators are commonly embedding scaffolded support for learners across a wide spectrum of age groups and content areas. This meta-analysis adds to the support for these practices. Simply put, scaffolded instruction is beneficial, and the benefits seem to increase as learners age.

*Note: References marked with an asterisk (*) indicate studies included in the meta-analysis.

Adams Becker, S., Brown, M., Dahlstrom, E., Davis, A., DePaul, K., Diaz, V., & Pomerantz, J. (2018). NMC horizon report: 2018 higher education edition. Louisville, CO: EDUCAUSE. Retrieved from https://library.educause.edu/~/media/files/library/2018/8/2018horizonreport.pdf

*Ak, S. (2016). The role of technology-based scaffolding in problem-based online asynchronous discussion. British Journal of Educational Technology, 47(4), 680-693. https://doi.org/10.1111/bjet.12254

*An, Y.-J., & Cao, L. (2014). Examining the effects of metacognitive scaffolding on students’ design problem solving and metacognitive skills in an online environment. Journal of Online Learning and Teaching, 10(4), 552-568. Retrieved from https://jolt.merlot.org/vol10no4/An_1214.pdf

Anderson, L. W., & Krathwohl, D. R. (Eds.). (2000). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. Boston, MA: Addison Wesley Longman.

Barzilai, S., & Blau, I. (2014). Scaffolding game-based learning: Impact on learning achievements, perceived learning, and game experiences. Computers & Education, 70, 65-79. https://doi.org/10.1016/j.compedu.2013.08.003

Belland, B. R. (2014). Scaffolding: Definition, current debates, and future directions. In J. M. Spector, M. D. Merrill, J. Elen, & M. J. Bishop (Eds.), Handbook of research on educational communications and technology (4th ed., pp. 505-518). New York, NY: Springer.

Belland, B. R. (2017). Instructional scaffolding in STEM education: Strategies and efficacy evidence. Cham, Switzerland: Springer. https://doi.org/10.1007/978-3-319-02565-0

Belland, B. R., Walker, A., Kim, N. J., & Lefler, M. (2017). Synthesizing results from empirical research on computer-based scaffolding in STEM education: A meta-analysis. Review of Educational Research, 87(2), 309-344. https://doi.org/10.3102/0034654316670999

Belland, B. R., Walker, A., Olsen, M. W., & Leary, H. (2015). A pilot meta-analysis of computer-based scaffolding in STEM education. Educational Technology & Society, 18(1), 183-197. https://doi.org/10.7771/1541-5015.1093

BestColleges. (2019). Online education trends report. Retrieved from https://www.bestcolleges.com/research/annual-trends-in-online-education/

Doo, M. Y., Tang, Y., Bonk, C. J., & Zhu, M. (2020). MOOC instructor motivation and career and professional development. Distance Education, 41(1), 26-47. https://doi.org/10.1080/01587919.2020.1724770

Glass, G. V. (1976). Primary, secondary, and meta-analysis of research. Educational Researcher, 5(10), 3-8. https://doi.org/10.3102/0013189X005010003

Hannafin, M., Land, S., & Oliver, K. (1999). Open learning environments: Foundations, methods, and models. In C. M. Reigeluth (Ed.), Instructional-design theories and models: A new paradigm of instructional theory (Vol. II, pp. 115-140). Mahwah, NJ: Lawrence Erlbaum Associates.

Harbord, R. M., Egger, M., & Sterne, J. A. (2006). A modified test for small study effects in meta-analyses of controlled trials with binary endpoints. Statistics in Medicine, 25, 3443-3457. https://doi.org/10.1002/sim.2380

Hedges, L. V., & Olkin, I. (1985). Statistical methods for meta-analysis. New York, NY: Academic Press.

Higgins, J. P. T., & Green, S. (Eds.). (2008). Cochrane handbook for systematic reviews of interventions. Chichester, United Kingdom: John Wiley & Sons.

*Holmes, N. G., Day, J., Park, A. H. K., Bonn, D., & Roll, I. (2014). Making the failure more productive: Scaffolding the invention process to improve inquiry behaviors and outcomes in invention activities. Instructional Science, 42, 523-538. https://doi.org/10.1007/s11251-013-9300-7

*Huang, Y., & Huang, Y.-M. (2015). A scaffolding strategy to develop handheld sensor-based vocabulary games for improving students’ learning motivation and performance. Educational Technology Research and Development, 63(5), 691-708. https://doi.org/10.1007/s11423-015-9382-9

*Huang, Y., Liu, M., Chen, N., Kinshuk, & Wen, D. (2014). Facilitating learners’ web-based information problem-solving by query expansion-based concept mapping. Australasian Journal of Educational Technology, 30(5), 517-532. https://doi.org/10.14742/ajet.613

*Kim, J. Y., & Lim, K. Y. (2019). Promoting learning in online, ill-structured problem solving: The effects of scaffolding type and metacognition level. Computers & Education, 138, 116-129. https://doi.org/10.1016/j.compedu.2019.05.001

Kim, M., & Hannafin, M. (2011). Scaffolding 6th graders’ problem solving in technology-enhanced science classrooms: A qualitative case study. Instructional Science, 39, 255-282. https://doi.org/10.1007/s11251-010-9127-4

Kim, N. J., Belland, B. R., & Walker, A. E. (2018). Effectiveness of computer-based scaffolding in the context of problem-based learning for STEM education: Bayesian meta-analysis. Educational Psychology Review, 30(2), 397-429. https://doi.org/10.1007/s10648-017-9419-1

Kuh, G. D. (2003). What we’re learning about student engagement from NSSE. Change, 35(2), 24-32. https://doi.org/10.1080/00091380309604090

Lajoie, S. P. (2005). Extending the scaffolding metaphor. Instructional Science, 33, 541-557. https://doi.org/10.1007/s11251-005-1279-2

Levine, J. M., & Moreland, R. L. (1991). Culture and socialization in work groups. In L. B. Resnick, J. M. Levine, & S. D. Teasley (Eds.), Perspectives on socially shared cognition (pp. 257-279). Washington, DC: American Psychological Association.

Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. Thousand Oaks, CA: Sage.

Maderer, J. (2017, January 9). Jill Watson, round three, Georgia Tech course prepares for third semester with virtual teaching assistants. Georgia Tech News Center. Retrieved from http://www.news.gatech.edu/2017/01/09/jill-watson-round-three

McFarland, M. (2016, May 11). What happened when a professor built a chatbot to be his teaching assistant? Washington Post. Retrieved from https://www.washingtonpost.com/news/innovations/wp/2016/05/11/this-professor-stunned-his-students-when-he-revealed-the-secret-identity-of-his-teaching-assistant/

Metz, C., & Satariano, A. (2018, July 3). Silicon Valley’s giants take their talent hunt to Cambridge. The New York Times. Retrieved from https://www.nytimes.com/2018/07/03/technology/cambridge-artificial-intelligence.html

Milligan, C., & Littlejohn, A. (2017). Why study on a MOOC? The motives of learners and professionals. The International Review of Research in Open and Distributed Learning, 18(2), 92-102. https://doi.org/10.19173/irrodl.v18i2.3033

Oliver, R., & Herrington, J. (2003). Exploring technology-mediated learning from a pedagogical perspective. Interactive Learning Environments, 11(2), 111-126. https://doi.org/10.1076/ilee.11.2.111.14136

*Özçinar, H. (2015). Scaffolding computer-mediated discussion to enhance moral reasoning and argumentation quality in pre-service teachers. Journal of Moral Education, 44(2), 232-251. https://doi.org/10.1080/03057240.2015.1043875

Pea, R. D. (2004). The social and technological dimensions of scaffolding and related theoretical concepts for learning, education, and human activity. The Journal of the Learning Sciences, 13(3), 423-451. https://doi.org/10.1207/s15327809jls1303_6

*Proske, A., Narciss, S., & McNamara, D. S. (2012). Computer-based scaffolding to facilitate students’ development of expertise in academic writing. Journal of Research in Reading, 35(2), 136-152. https://doi.org/10.1111/j.1467-9817.2010.01450.x

Richey, R. C. (2013). Encyclopedia of terminology for educational communications and technology. New York, NY: Springer.

*Rienties, B., Giesbers, B., Tempelaar, D. T., Lygo-Baker, S., Segers, M., & Gijselaers, W. H. (2012). The role of scaffolding and motivation in CSCL. Computers & Education, 59(3), 893-906. https://doi.org/10.1016/j.compedu.2012.04.010

Rogoff, B. (1995). Observing sociocultural activity: Participatory appropriation, guided participation, and apprenticeship. In J. V. Wertsch, P. D. Rio, & A. Alvarez (Eds.), Sociocultural studies of mind (pp. 139-164). New York, NY: Cambridge University Press.

*Roll, I., Butler, D., Yee, N., Welsh, A., Perez, S., Briseno,... Bonn, D. (2017). Understanding the impact of guiding inquiry: The relationship between directive support, student attributes, and transfer of knowledge, attitudes, and behaviors in inquiry learning. Instructional Science, 46, 77-104. https://doi.org/10.1007/s11251-017-9437-x

*Sas, M., Bendixen, L. D., Crippen, K. J., & Saddler, S. (2017). Online collaborative misconception mapping strategy enhanced health science students’ discussion and knowledge of basic statistical concepts. Journal of College Science Teaching, 46(6), 88-99. Retrieved May 29, 2020, from https://www.jstor.org/stable/44579950

Seaman, J. E., Allen, I. E., & Seaman, J. (2018). Grade increase: Tracking online education in the United States. Babson Survey Research Group. Retrieved from https://onlinelearningsurvey.com/reports/gradeincrease.pdf

Shah, D. (2019, January 6). Year of MOOC-based degrees: A review of MOOC stats and trends in 2018. Class Central. Retrieved from https://www.class-central.com/report/moocs-stats-and-trends-2018/

Steenbergen-Hu, S., & Cooper, H. (2013). A meta-analysis of the effectiveness of intelligent tutoring systems on K-12 students’ mathematical learning. Journal of Educational Psychology, 105(4), 970-987. https://doi.org/10.1037/a0032447

Steenbergen-Hu, S., & Cooper, H. (2014). A meta-analysis of the effectiveness of intelligent tutoring systems on college students’ academic learning. Journal of Educational Psychology, 106(2), 331-347. https://doi.org/10.1037/a0034752

*Tegos, S., & Demetriadis, S. (2017). Conversational agents improve peer learning through building on prior knowledge. Educational Technology & Society, 20(1), 99-111. https://eric.ed.gov/?id=EJ1125830

van de Pol, J., Volman, M., & Beishuizen, J. (2010). Scaffolding in teacher-student interaction: A decade of research. Educational Psychology Review, 22, 271-296. https://doi.org/10.1007/s10648-010-9127-6

van Merriënboer, J. J. G., & Kirschner, P. A. (2012). Ten steps to complex learning: A systematic approach to four-component instructional design (2nd ed.). New York, NY: Routledge.

Vogt, W. P. (1999). Dictionary of statistics & methodology (2nd ed.). Thousand Oaks, CA: Sage.

Vygotsky, L. S. (1978). Mind in society. Cambridge, MA: Harvard University Press.

Wood, D., Bruner, J. S., & Ross, G. (1976). The role of tutoring in problem solving. Journal of Child Psychology and Psychiatry, and Allied Disciplines, 17(2), 89-100. https://doi.org/10.1111/j.1469-7610.1976.tb00381.x

Wu, H. L., & Pedersen, S. (2011). Integrating computer- and teacher-based scaffolds in science inquiry. Computers & Education, 57(4), 2352-2363. https://doi.org/10.1016/j.compedu.2011.05.011

*Yilmaz, F. G. K., & Yilmaz, R. (2019). Impact of pedagogic agent-mediated metacognitive support towards increasing task and group awareness in CSCL. Computers & Education, 134, 1-14. https://doi.org/10.1016/j.compedu.2019.02.001

A Meta-Analysis of Scaffolding Effects in Online Learning in Higher Education by Min Young Doo, Curtis J. Bonk, and Heeok Heo is licensed under a Creative Commons Attribution 4.0 International License.