This article reports on an exploratory research effort in which the extent of MBA student learning on twelve specific competencies relevant to effective business performance was assessed. The article focuses on the extent to which differences in student learning outcomes may be influenced by one of three different types of instructional delivery: on-campus, distance, and executive MBA. It affirms the high quality of learning that can occur via distance education and proposes a strategy to conduct summative, program-level assessment. Specific findings include participants in all three groups self-reporting significantly higher scores at program exit than at entry on seven of twelve outcomes (i.e., goal setting, help, information gathering, leadership, quantitative, theory, and technology skills). It also notes that distance MBA students self-reported significantly higher scores than on-campus students on the learning outcomes related to technology, quantitative, and theory skills, and higher scores on technology skills than the executive MBA group. Implications for further research are discussed.

Keywords: distance learning; outcomes assessment; management education; MBA program evaluation

During the 1990s, there was more serious discussion about the need to fundamentally reform higher education than at any time in the previous 100 years (Angelo, 1996). There was growing concern that the escalating cost of higher education was not linked to an increase in educational quality (Bragg, 1995). This sentiment persists and is reflected in the continuing pressure on universities, colleges, and academic departments to demonstrate the effectiveness of their efforts through some sort of performance measurement or “outcomes assessment” (Angelo and Cross, 1993; Banta, 1993; Ewell, 1997, 2000; Palomba and Banta, 1999; Palomba and Palomba, 1999).

The Association to Advance Collegiate Schools of Business – International (AACSB) adopted a position that requires business schools to measure the outcomes of their curriculum (Edwards and Brannen, 1990; AACSB, 1996). Previously, accreditation efforts focused on input measures such as teacher-student ratios, numbers of terminally degreed faculty, number of volumes in the library, and the breadth and depth of curricular offerings (AACSB, 1980, 1984, 1987). In many of the specialized accrediting bodies, such as AACSB International, the focus has now shifted to value-added measures that assess what students have actually learned as a result of their participation in specific courses or in an entire academic program (Davenport, 2001).

As a result, in the late 1980s, colleges of business throughout the United States began to conduct frequent outcome assessments at both the undergraduate and graduate levels (Edwards and Brannen, 1990). Although there are no generally accepted or preferred ways to measure student learning and educational outcomes (AACSB, 1980, 1989; Beale, 1993; Kretovics, 1999), at the Master of Business Administration (MBA) level, historically the most common assessment techniques included student evaluations, employer perceptions/opinions, objective tests, and student exit interviews (Edwards and Brannen, 1990; Palomba and Palomba, 1999).

Each of these assessments typically occurs after a course is completed, as students are graduating, or in some cases, after they are in the workplace. However, the question remains as to whether students would demonstrate similar scores on an outcome measure before they enrolled in a course or program of study. Is it possible that students who already possess much of the knowledge and skills the program wishes to impart are already being admitted to MBA programs? We do not mean to suggest that students do not learn specific content in each of their classes; rather, we are concerned about the nature and extent of changes that may (or may not) occur on more global learning outcome measures.

This article reports on an exploratory research effort in which the extent of student learning outcomes on twelve specific competencies relevant to effective business performance was assessed. In addition, it discusses the extent to which student learning was influenced by one of three distinct types of instructional delivery: traditional on-campus, face-to-face (f2f) instruction; distance education (in this case, distribution of video recordings of on-campus classes combined with online faculty/student and student/student interaction), and executive education (f2f, cohort).

This research effort was undertaken as part of the college of business's preparations for its re-accreditation review. Because our MBA program is presented using three forms of delivery, we were interested in determining whether the learning outcomes in each of the three programs, as measured by an established assessment instrument, were equivalent. The curriculum is approximately the same, meaning the course syllabus for each course across the three forms of delivery was the same, and in two of the three learning contexts, the instructors were typically the same. There are no elective courses in the MBA program. Although students graduating from the program receive the same AACSB-accredited degree, an important question remains unanswered: “Do students receive the same education?” This article summarizes the authors’ efforts to explore how to answer that question, within the context of the larger issue of outcomes assessment.

Clearly, one systematic way to measure student learning would be to compare measures of student competencies at the beginning and end of their educational experience. Another way to achieve similar results would be to identify two groups of demographically equivalent students, one at the beginning of their learning experience and one at the end, and analyze the skill/competency differences between them. Interestingly, according to Albanese (as cited in Boyatzis, Baker, Leonard, Rhee, and Thompson, 1995), few schools of business have conducted outcome studies that compare their graduates to their newly admitted students.

Using an established assessment instrument, this research examined the extent to which students in three contexts developed twelve learning skills/competencies during the time they were enrolled in an MBA program at a large university in the western United States.

These three modes of delivery comprised: 1) a traditional on-campus face-to-face program, conducted synchronously in real time; 2) an executive program (EMBA) conducted in real time and face-to-face; and 3) a distance education program (SURGE) offered online through a dedicated software package called Embanet, and supplemented by video recordings of each on-campus class session.

Students enrolled in the distance program are never on-campus. All interaction with professors and fellow students is via the Embanet communications software that facilitates online chats, virtual team membership, and the sending and receiving of instructional materials. This distance education program is conducted asynchronously; that is, students receive videotapes of on-campus lectures/discussions approximately five days after the on-campus class is recorded. Students are not members of a cohort group but work on project assignments either individually or in virtual project teams. Students may take up to eight years to complete their MBA degree.

For the more traditional on-campus delivery format, students attend on-campus evening classes twice a week. Like SURGE students, they may also move in and out of the program, may or may not take all courses in sequence, and often take more than two years to complete the MBA degree.

The executive education MBA group (EMBA) is a close-knit, cohort group that spends approximately two years together in a lock-step program. This group clearly develops significantly more cohesiveness during their time together than do students in either of the other two formats. They work on multiple projects in and out of class together, eat dinner together at each class meeting, and attend classes at an off-campus location in the heart of a major metropolitan area.

The industrial economies of the world have been transformed into information-based economies, creating a greater need for higher education (Levine, 2001). This increase in demand, combined with technological advances, has had a significant influence on the way higher education is delivered. Correspondence courses, audiotaped lectures, videotaped classes, and online, Web-based courses have all made it possible for education to be delivered in multiple ways to learners throughout the world.

The nature of distance education has changed dramatically over the years as technological advances have led to innovations in distance education delivery (Carter, 1996). The explosive growth of personal computer usage and the Internet has fueled the rise in distance learning available via the World Wide Web. Instruction using such technology is typically asynchronous, allowing students access to course materials whenever time permits and from wherever they may have access to the Internet (Barber and Dickson, 1996). Today, it is possible for students to enroll in and graduate from degree-granting programs at accredited institutions without ever having to be physically present on the campus (Fornaciari, Forte and Mathews, 1999; Kretovics, 1998). We recognize there are many interpretations of what constitutes distance education. In the specific case reported on in this article, we are referring to the mailing of videotapes of classroom instruction to students’ homes or places of work, and subsequent online interaction to accomplish program objectives.

There have been numerous articles and papers written about the effectiveness of distance education for student learning. This research has consistently shown that regardless of discipline (e.g., library science, social work, physical therapy, management education), there are no significant differences in the learning outcomes of students enrolled in distance courses as compared to traditional face-to-face classroom settings (Haga and Heitkamp, 2000; Levine, 2001; Mulligan and Geary, 1999; O’Hanlon, 2001; Ponzurick, France, and Logar, 2000; Weigel, 2000; Worley, 2000). Several other authors have found no significant differences in student satisfaction (Arbaugh, 2000c; Phillips and Peters, 1999; Baldwin, Bedell and Johnson, 1997) or participation rates (Arbaugh, 2000a; 2000b) for distance courses compared to face-to-face courses.

Distance education, once viewed as an anomaly on the traditional campus, has now become an accepted and, in some instances, an expected alternative delivery system (Murphy, 1996; Moore, 1997; Cook, 2000). Institutions with distance education programs have demonstrated that these programs are an effective method to deliver classes to a diverse population.

Regarding its use in colleges of business, Boyatzis, Cowen, and Kolb (1995) suggest that distance education may become accepted as a core activity within graduate management education. “The traditional view of executive education is under exploration at many schools and is being expanded to include distance learning” (Boyatzis, Cowen, and Kolb, 1995, p. 48). Kedia and Harveston (1998) also note that for universities “[t]hrough advances in distance learning, the ability to deliver management education at their own locations rather than having them come to campus is not only a possibility, but a growing trend” (p. 214). Additionally, Moore (1997), Fornaciari, Forte and Mathews (1999), and Arbaugh (2000a; 2000b) indicate that distance education is gaining acceptance as an alternative delivery option to the traditional on-campus experience.

It is important to note that the studies reviewed up to this point have all focused on individual courses rather than entire degree programs. The evidence supports the notion that within individual courses, distant learners learn as well and are just as satisfied with their education as are resident students. However, the literature falls short regarding the assessment of learning outcomes within accredited degree programs offered via different distance education modes (Ponzurick, France, and Logar, 2000; Weigel, 2000). This study focuses on the summative assessment of learning outcomes at the end of a program, as distinct from an individual course focus.

In addition, most studies have focused on synchronous (real-time/interactive) rather than asynchronous (delayed or recorded instruction) media. We compare student learning in two synchronous, face-to-face environments with learning in an asynchronous (distance education) environment.

What Moore (1989) said over a decade ago still appears to hold true today:

. . . the weight of evidence that can be gathered from the literature points overwhelmingly to the conclusion that teaching and studying at a distance, especially that which uses interactive electronic communications media, is effective, when effectiveness is measured by the achievement of learning, by the attitudes of students and teachers and by cost effectiveness (p. 30).

It is also important to note that in the late 1980s, criticisms of traditional management education were increasing at the same time MBA programs were moving toward distance education (AACSB, 1989). Those concerns were met within the academy by calls for increased measurement of student learning outcomes. Within graduate schools of business, the measures that appear to be most utilized are testing procedures, students’ perceptions, and employer observations (Edwards and Brannen, 1990; Palomba and Palomba, 1999). Hilgert (1995) discussed life-changing developmental outcomes related to an executive MBA program experience, and Baruch and Leeming (1996) discussed graduates’ perceptions of their MBA experience. However, these studies did not measure the learning outcomes at the end of an academic program.

The two primary research questions investigated for this study were:

1. Does MBA students’ self-reported performance on a learning outcomes assessment instrument change between the time students begin their MBA program and when they finish it? If so, were those changes uniform ones, or were there significant differences on key outcome measures?

2. Does MBA students’ performance on the learning outcomes assessment differ as a function of the form of instructional delivery methodology (on-campus, SURGE, and EMBA)? If so, what is the nature of those differences?

The sample of participants in this study included those students enrolled in one of the three MBA programs (on-campus, SURGE, and EMBA) offered by the participating institution. Students who enrolled in a specific program were self-selected. SURGE students could complete their MBA without ever needing to be physically present on campus. While the admission requirements differ slightly for the three formats, there were no statistically significant differences between the groups based on pre-admission characteristics such as test scores, years of work experience, age, or gender. The students enrolling in the fall term (n = 222) were used as the entering pool, and those who graduated at the end of the prior spring term (n = 115) comprised the exiting pool.

Of the total of 222 students admitted as new students for the fall semester, 83 students (37 percent) returned usable results and are defined as the entry participants in this study. Of this group, 27 students were female (35 percent of those reporting gender), 50 were male (65 percent), and six did not identify their gender. There were 17 SURGE students (20 percent), 12 EMBA students (15 percent), and 54 on-campus students (65 percent).

Of the 115 students graduating the previous May, 39 students (34 percent) returned usable results. Of the 39 usable results, 16 were traditional on-campus students, 11 were SURGE students, and 12 were EMBAs. Gender data for the responding group indicates that 14 participants were female (36 percent) and 25 were male (64 percent).
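The response rates reported above follow directly from the counts given in the text. As a minimal sketch (the helper name `percent` and the variable names are illustrative and not part of the study), the arithmetic can be reproduced as follows:

```python
def percent(part, whole):
    """Return part/whole as a percentage rounded to the nearest integer."""
    return round(100 * part / whole)

# Counts taken from the text above.
entry_admitted, entry_usable = 222, 83
exit_graduated, exit_usable = 115, 39

entry_rate = percent(entry_usable, entry_admitted)
exit_rate = percent(exit_usable, exit_graduated)

# Usable responses by delivery methodology, as reported in the text.
entry_by_program = {"on-campus": 54, "SURGE": 17, "EMBA": 12}
exit_by_program = {"on-campus": 16, "SURGE": 11, "EMBA": 12}

# Sanity check: program counts should add up to the usable totals.
entry_total = sum(entry_by_program.values())
exit_total = sum(exit_by_program.values())

print(f"Entry response rate: {entry_rate}% ({entry_total} usable)")
print(f"Exit response rate: {exit_rate}% ({exit_total} usable)")
```

A check of this kind is useful mainly for confirming that subgroup counts sum to the reported totals before any group comparisons are made.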

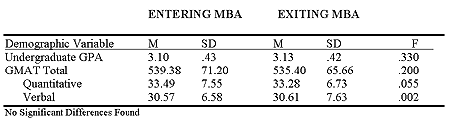

The entering students’ mean GMAT total score of 539.38 was not significantly different from the GMAT of the exiting students (535.40). Nor was there a difference in undergraduate GPAs: entering students’ mean GPA was 3.10 versus 3.13 for the exiting students (Table 1). The gender breakdown in both groups was also similar (35 percent of entering students were female; 36 percent of exiting students were female). Finally, the work experiences of the entry and exit groups were not significantly different. While these two groups are not exactly the same, on dimensions relevant to performance in this MBA program, the two populations may be treated as equivalent.

There were several design challenges associated with this research. For example, without waiting for two or more years for students to complete an MBA program, how does one obtain meaningful performance data on student learning outcomes? In addition, what assessment strategy and/or instrument(s) will provide program-level insights, as distinct from course-level reactions?

Following the modified pretest-posttest or cross-sectional design used by Boyatzis, Baker, Leonard, Rhee, and Thompson (1995) and Kretovics (1999) in similar research efforts, the authors compared scores of different entering and exiting student groups. The differences in the mean scores of each group were treated as group gain scores, as if they were from the same group. Pascarella and Terenzini (1990) and Terenzini (1989) noted that this cross-sectional methodology is common when measuring change in outcomes studies (see Table 1).
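To make the cross-sectional gain score concrete, the sketch below computes it as the difference between the mean of an exiting group and the mean of a separate entering group. The function name and the scores are hypothetical and chosen only for illustration; they are not data from this study.

```python
from statistics import mean

def group_gain(entering_scores, exiting_scores):
    """Cross-sectional gain: exiting-group mean minus entering-group mean.

    The two lists come from different students, so this is a group-level
    difference, not an individual pretest-posttest change.
    """
    return mean(exiting_scores) - mean(entering_scores)

# Hypothetical scores on a single learning skill (not study data).
entering = [20, 24, 22, 26]
exiting = [27, 29, 25, 31]

gain = group_gain(entering, exiting)
print(f"Group gain score: {gain}")
```

Because the two groups contain different people, such a gain is interpretable only to the extent that the groups are equivalent on relevant characteristics, which is why the entry and exit groups were first compared on GMAT, GPA, gender, and work experience.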

Table 1. Analysis of variance of GMAT and undergraduate GPA by time

The Learning Skills Profile (LSP) is a modified Q-sort that utilizes experiential learning skill typology in its analysis. This assessment is a typology of skills based on a foundation of learning styles and experiential learning theory. It is not designed to measure job performance nor specific academic competencies, but rather to measure one’s learning skills. Learning skills are defined as “generic heuristic[s] that enable mastery of a specific domain having two components: a domain of application and a knowledge transformation process” (Boyatzis and Kolb, 1995, p. 5).

The LSP requires respondents to sort 72 learning skill cards twice, once into seven personal skill envelopes (the focus of this study) and a second time into seven job skill envelopes. It measures twelve learning skills considered important in business and management education (Boyatzis and Kolb, 1995). The twelve learning skills are grouped into four major skill areas: 1) interpersonal skills; 2) information gathering skills; 3) analytical skills; and 4) behavioral skills, corresponding to the four phases of experiential learning as seen in Figure 1.

The groupings and definitions of the learning skills are as follows:

1. Interpersonal Skills. a) Help skills: the ability to be sensitive to others in gaining opportunities to grow, to be self-aware. b) Leadership skills: the ability to inspire and motivate others, to sell ideas to others, to negotiate, and build team spirit. c) Relationship skills: the ability to establish trusting relationships with others, to facilitate communication and cooperation.

2. Information Gathering Skills. a) Sense-making skills: the ability to adapt, to change, to deal with new situations, and to define new strategies and solutions. b) Information gathering skills: the ability to be sensitive to and aware of organizational events, to listen with an open mind, and to develop and use various sources for receiving and sharing information. c) Information analysis skills: the ability to assimilate information from various sources, to derive meaning and to translate specialized information for general communication and use.

3. Behavioral Skills. a) Goal setting skills: the ability to establish work standards, to monitor and evaluate progress toward goals, and to make decisions based on cost-benefits. b) Action skills: the ability to commit to objectives, to meet deadlines, to be persistent, and to be efficient. c) Initiative skills: the ability to seek out and take advantage of opportunities, to take risks, and to make things happen.

4. Analytical Skills. a) Theory skills: the ability to adopt a larger perspective, to conceptualize, to integrate ideas into systems or theories, and to use models or theories to forecast trends. b) Quantitative skills: the ability to use quantitative tools to analyze and solve problems, and to derive meaning from quantitative reports. c) Technology skills: the ability to use computers and computer networks to analyze data and organize information, and to build computer models or simulations.

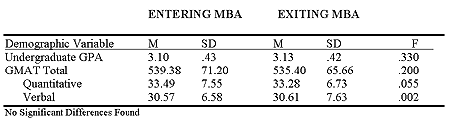

Using reliability information from Boyatzis, Baker, Leonard, Rhee, and Thompson (1995), Boyatzis and Kolb (1991), and Squires (1993) as a comparative base, a reliability analysis (using Cronbach’s alpha) was conducted for this sample. According to Boyatzis and Kolb (1991), the LSP had internal scale reliabilities, as measured by Cronbach’s alpha, ranging from .618 to .917, with an average of .778. Squires (1993) also found the LSP to be internally consistent, with alphas ranging from a low of .800 to a high of .935. The alphas in this study (see Table 2) ranged from a low of .651 (Information Gathering) to a high of .911 (Technology), which is consistent with the previous studies.
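For readers less familiar with the statistic, Cronbach’s alpha for a scale of k items is k/(k - 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The sketch below is a minimal illustration of that standard formula; the function name and the response matrix are hypothetical, and the data are not LSP responses.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    # Transpose so that each tuple holds all respondents' scores on one item.
    items = list(zip(*responses))
    sum_item_variances = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum_item_variances / total_variance)

# Hypothetical responses: four respondents, three items on one scale.
responses = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [5, 4, 5],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha: {alpha:.3f}")
```

Values closer to 1 indicate that the items on a scale vary together, which is the sense in which the alphas reported here are read as evidence of internal consistency.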

Table 2. Reliability coefficients of the LSP learning skills

The LSP is a self-report instrument, and as such, its use is supported in the literature by Kelso, Holland and Gottfredson (1977), Harrington and O’Shea (1993), Harrington and Schafer (1996), and Kempen et al. (1996). These authors concluded that self-estimates of ability and/or aptitude are valid and that self-reported data are as reliable as data gathered by more objective means. Additionally, Boyatzis and Kolb (1991), Boyatzis, Baker, Leonard, Rhee, and Thompson (1995), and Squires (1993) found the LSP to be an accurate measure of the twelve dimensions.

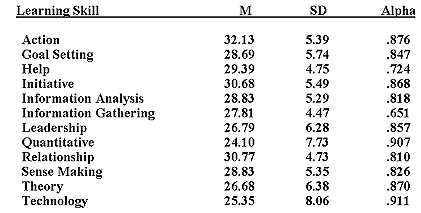

The initial analysis was conducted using a set of 12 independent two-way ANOVAs with time (entry and exit) and delivery methodology (on-campus, SURGE, and EMBA) as independent variables, and each of the twelve LSP learning skills as dependent variables. This analysis allowed simultaneous exploration of both research questions: Does participation in an MBA program have an impact on student learning? Does delivery methodology have an impact on student learning?

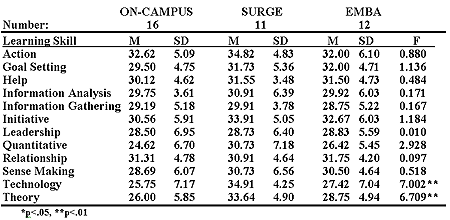

One would hypothesize that students exiting the program would rate themselves higher on relevant outcome measures than students entering a program. Our findings support this expectation. With time as the independent variable, the analysis indicates that exiting students report an absolute increase on each of the twelve LSP learning outcome measures. Statistically significant (p < .05) results were obtained on seven of the twelve measures: goal setting, helping, information gathering, leadership, quantitative, theory, and technology (see Table 3). These results compare favorably with those found in two studies reported by Boyatzis, Baker, et al. (1995).

Table 3. Results from Two-Way ANOVA – Learning skill by method and time

All skill areas within the analytical skills group were found to be significantly higher at exit than they were at entry. Two of the skill areas in the interpersonal group (helping and leadership) were significantly higher, as was one skill area each in the information gathering group (information gathering) and the behavioral group (goal setting). Significant increases over time were not found on five of the outcome measures: action and initiative (behavioral group), sense making and information analysis (information gathering group), and relationship (interpersonal group).

It is not immediately evident why students did not show significant gains on these five skill areas, but did show significant gains on all components of the analytical group. Most of the students studied are also full-time employees and may have experienced significant training in specific areas prior to enrollment. Perhaps the incremental improvement contributed by program participation was marginal in the nonsignificant skill areas and more substantial in the analytical group. Perhaps these particular learning outcome measures were not sensitive enough to detect improvements in the identified outcome categories, or perhaps the small number of respondents was insufficient to reflect accurately the population’s true variance.

The interaction between the variables of time and delivery methodology (see Table 3) was not significant. Students’ scores on the LSP increased from entry to exit regardless of the delivery methodology used. The post hoc analysis (Tukey) for the time variable determined that in each of the seven cases where a significant difference was found, the mean scores increased from entry to exit. Students completing any one of these three MBA programs thus reported significantly higher scores than entering students on the learning outcomes of goal setting, helping, information gathering, leadership, quantitative, technology, and theory skills.

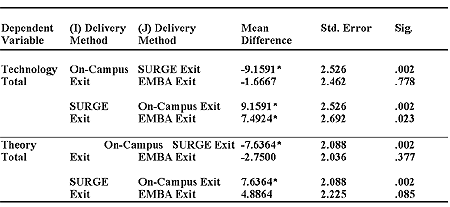

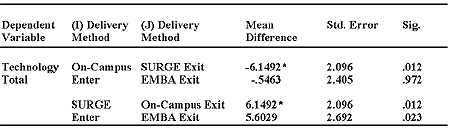

The second research question explored differences in student learning outcomes among the three MBA delivery methodologies. The analysis indicated that only the skills in the analytical group (quantitative, theory, and technology) differed significantly across delivery methodologies (see Table 3). The post hoc analysis determined that the SURGE students rated themselves higher than did students participating in the other two delivery methodologies. Specifically, SURGE students reported significantly higher scores than the on-campus students on the quantitative, theory, and technology outcome measures, and significantly higher scores than the EMBA participants on the technology outcome measure.

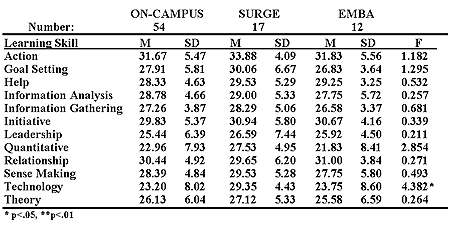

As expected, all three groups of exiting students rated themselves higher than did the three groups of entering students. Were these differences the result of pre-existing differences among the participating students? To answer this question, additional analyses were conducted to determine if the significant differences found for exiting students in the three programs could be attributed to differences that existed prior to program entry.

Data were divided into two independent sets: one containing the entering student data and one containing the exiting student data. Using delivery methodology as the independent variable and each of the twelve learning skills as the dependent variables, one-way ANOVAs enabled detection of any significant differences among entering students across the three methodologies. A similar analysis was completed for students exiting the program.
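As an illustration of the one-way ANOVA used in this step, the sketch below computes the F statistic for a single learning skill across three delivery groups using the standard between-group and within-group sums of squares. The function name and the scores are hypothetical and are not study data; the sketch also stops at the F statistic and its degrees of freedom, leaving the p-value and any Tukey comparisons to a statistics package.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((score - mean(g)) ** 2 for g in groups for score in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical scores on one learning skill for each delivery methodology.
on_campus = [1, 2, 3]
surge = [4, 5, 6]
emba = [2, 3, 4]

f_stat, df_between, df_within = one_way_anova_f([on_campus, surge, emba])
print(f"F({df_between}, {df_within}) = {f_stat:.2f}")
```

Running the same computation separately on the entering and exiting data sets mirrors the logic described above: a group difference that already appears at entry cannot be attributed entirely to the program.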

Significant differences were identified on two of the twelve learning outcome measures: technology and theory, with both findings favoring the distance students. Students entering the SURGE program rated themselves significantly higher on technology (see Tables 6 and 7) than did the on-campus students. The mean difference at entry was 6.15 (p < .05), while the mean difference at exit was 9.16 (p < .01), indicating that a portion of the difference found in exiting students may be attributed to a difference at entry.

The exiting group of students (see Tables 4 and 5) accounts for much of the variance in the main effect differences found on the learning outcome measures of technology and theory. It is not surprising that distance students would rate themselves higher on the technology skill, since they know going into the program that their primary means of communication with faculty and classmates will be via the Internet. Such students may have greater familiarity with technology applications, leading them to feel more comfortable using such tools to obtain their MBA degree. This possibility was not explored here, and is recommended as an area for future research.

Table 4. Analysis of variance of LSP learning skills by method (Exiting Students)

Table 5. Multiple comparisons (Tukey HSD)

Table 6. Analysis of variance of LSP learning skills by method (Entering Students)

Table 7. Multiple comparisons (Tukey HSD)

The increase in theory skills, defined as “the ability to adopt a larger perspective; to conceptualize, to integrate ideas into systems or theories, and to use models/theories to forecast trends,” is not as easily explained. Perhaps distance students, by virtue of their willingness to participate in such a program, may be manifesting the strength of their analytical skill set, which includes quantitative skills, technology skills, and theory skills.

Alternatively, distance students and on-campus/EMBA students may see different paths to achieve their MBA objectives. On the one hand, on-campus and EMBA students meet face-to-face with instructors regularly, and often develop a personal rapport with those individuals. The more intimate interaction that such settings provide is not available to distance students. The necessity for on-campus and EMBA students to apply their analytical skill set (i.e., theory-oriented skills) may be somewhat diminished by the opportunity to develop stronger interpersonal relationships with their instructors. Such relationships may provide avenues for increased class discussion interactions that shift the nature of instruction to a more collaborative one not possible in the distance context. Distance students, on the other hand, may compensate for that lack by increasing the use of their analytical skills, relative to their on-campus counterparts. Their challenge becomes one of demonstrating their competence in less interpersonal and more abstract ways. In most instances, individual performance is the distance student’s only avenue for establishing rapport with a faculty member. We believe this is an area for further study.

The findings and implications of this exploratory study need to be considered in light of its limitations. The low participation rate and small sample size must be taken into account when interpreting these results. A larger sample would possibly have provided more informative insights into these complex relationships.

We believe the low participation was in part due to the nature of the assessment instrument. The card-sort methodology requires participants to physically sort the learning skills ratings (either by hand or online) rather than simply checking or circling a number. The process was time consuming. The students enrolled in these MBA programs have numerous interests competing for their time. With no incentives (e.g., extra credit points, financial incentives) provided to encourage participation, many students likely placed this project low on their priority list. A more aggressive follow-up protocol may have increased the response rate.

In addition to the variables on which we focused, confounding or extraneous variables may have influenced these findings. Among those variables are students’ work experience, the length of time taken to complete a degree, curriculum modifications during program participation, and class size variations. Many of the SURGE students and many on-campus students take courses on a part-time basis, often requiring more than three years to complete their MBA degree. In contrast, the EMBAs are engaged in a “lock-step” program that requires less than three years. These students spend considerably more time together than do students in the other two delivery methodologies. The group dynamics that undoubtedly influence the cohort group’s learning experience are likely quite different, and subject to different factors, than are those for students in the other two delivery methodologies. Finally, faculty may turn over or change their course focus or evaluation methods, and thereby alter student experiences in unintended and unknown ways.

Certainly, too, the particular methodology used in this exploratory effort to conduct program-level assessment may be questioned. While the methodology provides a particularly parsimonious approach to examining what students learn during their program, legitimate questions may be asked about generalizing across populations that appear equivalent on certain dimensions but may be quite dissimilar on others. More exploration in this area is encouraged.

If learning is the ultimate goal of the educational experience, it is up to members of the academy to ensure that this goal is met. “Outcome assessment is perhaps the best vehicle available at this time with great potential for affecting positive change and addressing the issues of accountability within higher education” (Kretovics, 1999, p. 133). That this research represents an attempt at “program-level,” as distinct from “course” or “certificate” level, assessment is an important additional step toward achieving the larger outcome assessment goal.

Summative evaluations are common at the course level. Most faculty conduct some form of end-of-course assessment to determine student satisfaction, identify areas for course improvement, and assign a final grade. In many cases, formative evaluations occur during the progress of courses to determine if they are progressing in the intended direction. Professors conduct mid-course reviews of their courses and may adjust how and what they are teaching as a result. Examinations, peer evaluations, and other forms of assessment are also used to improve student learning and the overall course experience.

Such assessments (both summative and formative) are not as common at the program level. Our effort was to explore how one might do an empirically based, summative evaluation of an entire program and incorporate the critical component of assessing learning outcomes of distance students as well as those experiencing a more traditional learning context. We used an objective learning assessment instrument designed specifically to explore the extent of student learning in twelve key business skill areas identified as critical for our students’ ultimate success. We focused not on individual course evaluations, but on student gain scores at the beginning and at the end of this defined learning experience. Such assessments are necessary in order to identify what students are learning in our MBA program and to identify in what areas additional attention may be required in order to achieve our overall program objectives.

Because of the unique nature of our program, we were also able to investigate differences across instructional delivery formats, adding a level of complexity to the design, but also providing additional insights to consider as we continue to refine our learning outcome assessment strategies in increasingly sophisticated learning environments.

Outcome assessments not only benefit program participants and external stakeholders; they can also provide significant benefit to the institution (Kretovics and McCambridge, 1998). The use of outcome studies can provide systematic measures that are necessary for continuous program improvement. Data gathered in outcome studies can be used to stimulate curriculum revision, guide program development, and improve instructional methodologies. Kirkwood (1981) states: “outcome measurement, examined dispassionately, becomes not a threat but an opportunity” (p. 67). When used properly, outcome measures can effectively bolster an institution’s accountability to its various stakeholders (AACSB, 1989).

As pressure mounts for academic institutions to document learning outcomes, and as the use of distance education methodologies as a pedagogical strategy increases, these insights regarding the assessment of distance program learning outcomes relative to those in more traditional formats become increasingly relevant. These findings challenge the assumption that face-to-face interaction between the student and the instructor is essential to the instructional process. They suggest that a virtual community, if developed properly, can serve student needs as adequately as more traditional notions of classroom community and “seat-time.” Additionally, the results not only support the notion that distance learning is effective, but they also challenge the “no significant difference” research findings by indicating that distance students may, in fact, learn more than traditional classroom-based students.

This study adds to the body of literature that attests to the quality of learning that takes place via distance education programs. Distance education is no longer a trend, nor is it a mode of education to be implemented in the future. It has arrived as a viable pedagogical strategy that expands educational opportunity for untold numbers of individuals seeking to expand their skill levels in meaningful and convenient ways. It is our hope that educators will continue to embrace distance education as an important, additional avenue for student learning to occur beyond the physical boundaries of the university community.

There are at least four areas in which additional research is encouraged: 1) designing and implementing program-level, summative evaluation methodologies; 2) developing valid and reliable, discipline-specific program-level assessment instruments; 3) developing assessment strategies that are uniquely tailored to the emerging Web-based, technology-driven programs; and 4) exploring the relationship of the multitude of demographic and other factors to distance learning outcomes. Future researchers may wish to explore the effects of demographic characteristics such as gender, age, national origin, or prior work experience on distance learning outcomes. There are no limits to the challenges that lie ahead for all of us concerned with maximizing student learning in the exciting world of distance education.

American Assembly of Collegiate Schools of Business. (1980). Accreditation research project: Report of Phase I. AACSB Bulletin 15(2), 1 – 31.

American Assembly of Collegiate Schools of Business. (1984). Outcome measurement project of the accreditation research committee: Phase II report. St. Louis, MO: Author.

American Assembly of Collegiate Schools of Business. (1987). Outcome measurement project: Phase III report. St. Louis, MO: Author.

American Assembly of Collegiate Schools of Business. (1989). Report of the AACSB task force on outcome measurement project. St. Louis, MO: Author.

American Assembly of Collegiate Schools of Business. (1996). Achieving quality and continuous improvement through self-evaluation and peer review. Accreditation handbook of the American Assembly of Collegiate Schools of Business. St. Louis, MO: Author.

Angelo, T. A. (1996). Transforming Assessment: High standards for higher learning. AAHE Bulletin April, 3 – 4.

Angelo, T. A., and Cross, K. P. (1993). Classroom Assessment Techniques: A handbook for college teachers (2nd ed.). San Francisco: Jossey-Bass.

Arbaugh, J. B. (2000b). Virtual classroom versus physical classroom: An exploratory study of class discussion patterns and student learning in an asynchronous Internet-based MBA course. Journal of Management Education 24, 213 – 233.

Arbaugh, J. B. (2000c). An exploratory study of the effects of gender on student learning and class participation in an Internet-based MBA course. Management Learning 31, 503 – 519.

Baldwin, T. T., Bedell, M. D., and Johnson, J. L. (1997). The social fabric of a team-based M.B.A. program: Network effects on student satisfaction and performance. Academy of Management Journal 40, 1369 – 1397.

Banta, T. (1993). Summary and Conclusions: Are we making a difference? In T. Banta, (Ed.) Making a difference: Outcomes of a decade of assessment in higher education. San Francisco: Jossey-Bass.

Banta, T., Lund, J., Black, K., and Olander, F. (1996). Assessment in Practice: Putting principles to work on college campuses. San Francisco: Jossey-Bass.

Baruch, Y., and Leeming, A. (1996). Programming the MBA Programme: The quest for curriculum. Journal of Management Development 15, 27 – 37.

Beale, A. V. (1993). Are your students learning what you think you’re teaching? Adult Learning 4, 18 – 26.

Boyatzis, R. E., Baker, A., Leonard, L., Rhee, K., and Thompson, L. (1995). Will it make a difference? Assessing a value-added, outcome-oriented, competency-based professional program. In R. E. Boyatzis, S. S. Cowen, and D. A. Kolb (Eds.) Innovation in Professional Education: Steps on a journey from teaching to learning. San Francisco: Jossey-Bass.

Boyatzis, R. E., and Kolb, D. A. (1991). Assessing Individuality in Learning: The learning skills profile. Educational Psychology 11, 279 – 295.

Boyatzis, R. E., Cowen, S. S., and Kolb, D. A. (1995). A learning perspective on executive education. Selections 11, 47 – 55.

Boyatzis, R. E., and Kolb, D. A. (1995). From Learning Styles to Learning Skills: The executive skills profile. Journal of Managerial Psychology 10, 3 – 17.

Bragg, D. (1995). Assessing Postsecondary Vocational-Technical Outcomes: What are the alternatives? Journal of Vocational Education Research 20, 15 – 39.

Cook, K. C. (2000). Online professional communication: Pedagogy, instructional design, and student preference in Internet-based distance education. Business Communication Quarterly 63(2), 106 – 110.

Davenport, C. (2001). How frequently should accreditation standards change. In J. Ratcliff, E. Lubinescu, and M. Gaffney (Eds.) How Accreditation Influences Assessment: New directions for higher education. San Francisco: Jossey-Bass.

Edwards, D. E., and Brannen, D. E. (1990). Current status of outcomes assessment at the MBA level. Journal of Education for Business 65, 206 – 212.

Ewell, P. (1997). Strengthening assessment for academic quality improvement. In M. W. Peterson, D. D. Dill, and A. Mets (Eds.) Planning and Management for a Changing Environment (pp. 360 – 381). San Francisco: Jossey-Bass.

Ewell, P. (2000). Accountability with a Vengeance: New mandates in Colorado. Assessment Update 12(5), 14 – 16.

Fornaciari, C. J., Forte, M., and Mathews, C. S. (1999). Distance Education as Strategy: How can your school compete? Journal of Management Education 23, 703 – 718.

Haga, M., and Heitkamp, T. (2000). Bringing social work education to the prairie. Journal of Social Work Education 36, 309 – 324.

Harrington, T., and O’Shea, A. (1993). The Harrington-O’Shea Career Decision-Making System Revised Manual. Circle Pines, MN: Career Planning Associates, Inc. American Guidance Service.

Harrington, T., and Schafer, W. D. (1996). A comparison of self-reported abilities and occupational ability patterns across occupations. Measurement and Evaluation in Counseling and Development 28(4), 180 – 90.

Hilgert, A. D. (1995). Developmental outcomes of an executive MBA programme. Journal of Management Development 14, 64 – 76.

Kedia, B. L., and Harveston, P. D. (1998). Transformation of MBA programs: Meeting the challenge of international competition. Journal of World Business 33, 203 – 217.

Kelso, G. J., Holland, J., and Gottfredson, G. (1977). The relation of self-reported competencies to aptitude test scores. Journal of Vocational Behavior 10, 99 – 103.

Kempen, G. I. J. M., van Heuvelen, M. J. G., van den Brink, R. H. S., Kooijman, A. C., Klein, M., Houx, P. J., and Ormel, J. (1996). Factors affecting contrasting results between self-reported and performance-based levels of physical limitations. Age and Ageing 25, 458 – 65.

Kirkwood, R. (1981). Process or outcomes: A false dichotomy. In T. Stauffer (Ed.) Quality: Higher education’s principal challenge (pp. 63-68). Washington D.C.: American Council on Education.

Kolb, D. A. (1984). Experiential learning: Experience as the source of learning and development. Englewood Cliffs, NJ: Prentice Hall.

Kretovics, M. (1999). Assessing the MBA: What do our students learn? Journal of Management Development 18(2), 125 – 136.

Kretovics, M., and McCambridge, J. (1998). Determining what Employers Really Want: Conducting regional focus groups. Journal of Career Planning and Employment 58(2), 25 – 30.

Levine, A. (2001). The remaking of the American university. Innovative Higher Education 25, 253 – 267.

Moore, M. (1989). Distant Education: A learner’s system. Lifelong Learning 2, 8 – 11.

Moore, T. E. (1997). The Corporate University: Transforming management education. Accounting Horizons 11, 77 – 85.

Mulligan, R., and Geary, S. (1999). Requiring writing, ensuring distance-learning outcomes. International Journal of Instructional Media 26, 387 – 396.

Murphy, T. H. (1996). Agricultural Education and Distance Education: The time is now. The Agricultural Education Magazine 68, 3 – 11.

O’Hanlon, N. (2001). Development, delivery, and outcomes assessment of a distance course for new college studies. Library Trends 59, 8 – 27.

Pascarella, E. T., and Terenzini, P. T. (1990). How College Affects Students. San Francisco: Jossey-Bass.

Phillips, M. R., and Peters, M. J. (1999). Targeting rural students with distance learning courses: A comparative study of determinant attributes and satisfaction levels. Journal of Education for Business 74, 351 – 356.

Palomba, C. A., and Banta, T. W. (1999). Assessment Essentials: Planning, Implementing and Improving Assessment in Higher Education. San Francisco: Jossey-Bass.

Palomba, N. A., and Palomba, C. A. (1999). AACSB accreditation and assessment in Ball State University’s College of Business. Assessment Update 11, 3 – 15.

Ponzurick, T., France, K. R., and Logar, C. (2000). Delivering Graduate Marketing Education: An analysis of face-to-face versus distance education. Journal of Marketing Education 22(3), 180 – 187.

Squires, P. (1993). An application of the Learning Skills Model and the Boyatzis Managerial Competency Model: Competencies that distinguish between superior and average performing managers within public libraries. (Doctoral dissertation, Texas Woman’s University 1993/1994) Dissertation Abstracts International, 55, AAC 9417360.

Switzer, J. S. (1994). Attitudes and Perceptions in a Television-Mediated Environment: A Comparison of on- and off-campus MBA students. Unpublished Master’s thesis. Colorado State University.

Terenzini, P. T. (1989). Assessment with Open Eyes: Pitfalls in studying student outcomes. Journal of Higher Education 60, 644 – 664.

Weigel, V. (2000). E-learning and the tradeoff between richness and reach in higher education. Change 32(5), 10 – 15.

Worley, R. B. (2000). The medium is not the message. Business Communication Quarterly 63(3), 93 – 106.