
Naghmeh Aghaee and Henrik Hansson

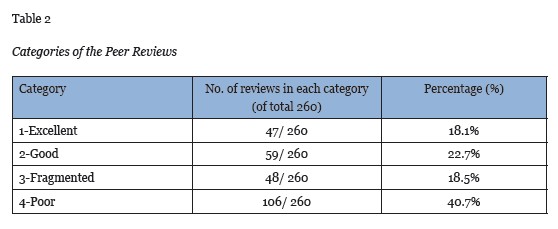

Stockholm University, Sweden

This paper describes a specially developed online peer-review system, the Peer Portal, and the first results of its use for quality enhancement of bachelor’s and master’s thesis manuscripts. The peer-review system is completely student driven and therefore saves time for supervisors and creates a direct interaction between students without interference from supervisors. The purpose is to improve thesis manuscript quality, and thereby use supervisor time more efficiently, since peers review basic aspects of the manuscripts and give constructive suggestions for improvements. The process was initiated in 2012, and, in total, 260 peer reviews were completed between 1st January and 15th May, 2012. All peer reviews for this period have been analyzed with the help of content analysis. The purpose of the analysis is to assess the quality of the students’ work. The results are categorized in four groups: 1) excellent (18.1%), 2) good (22.7%), 3) fragmented (18.5%), and 4) poor (40.7%). The overall result shows that about 40% of the students produced excellent or good peer reviews and almost as many produced poor peer reviews. The result shows that the quality varies considerably. Explanations of these quality variations need further study. However, alternative hypotheses followed by some strategic suggestions are discussed in this study. Finally, a way forward in terms of improving peer reviews is outlined: 1) development of a peer wizard system and 2) rating of received peer reviews based on the quality categories created in this study. A Peer Portal version 2.0 is suggested, which is intended to eliminate the fragmented and poor quality peer reviews while keeping this review system student driven and ensuring autonomous learning.

Keywords: Autonomous learning; online collaboration; computer-mediated communication; SciPro; peer review; peer portal; thesis process

Online collaboration through computer-mediated communication (CMC) is fostered in current educational practices, which reflect the growing adoption of tools in different computer-supported collaborative learning (CSCL) environments (De Wever, Schellens, Valcke, & Van Keer, 2006). In spite of the conceptual variety of online communication tools in educational practices, most environments aim to support students to exchange messages with one another.

Peer reviews are used in many contexts in order to improve the quality of research papers and reports, which is an important and fundamental part of scientific discourse. The purpose of peer reviews can differ; in some cases, the purpose is to validate the authenticity and novelty of a scientific contribution worthy of publication in a research journal. In fact, an important quality “label” of a journal is that it is peer reviewed. The higher the status of the reviewers, the higher the status the journal is accredited with. Several ranking systems of journals are used; see for instance the “Norwegian model” (Norwegian Social Science Data Services (NSD), 2012), which is used by Swedish universities. This is an example of peer review of scientific end products (final articles) by experienced reviewers. However, the peer-review process can also be utilized in other contexts. To read and evaluate someone else’s work is a valuable experience for the reviewer as well as for the author, who receives the review. Therefore, peer review is also useful for educational purposes.

This paper focuses on peer review as part of the quality enhancement process in thesis production (bachelor’s and master’s). Student peer reviews can be conducted face to face and/or by using online technology. As part of a larger support system for thesis writing, SciPro (Hansson, 2012; Hansson & Moberg, 2011; Hallberg, Hansson, Moberg, & Hewagamage, 2011; Larsson & Hansson, 2011; Hansson, Collin, Larsson, & Wettergren, 2010; Hansson, Larsson, & Wettergren, 2009), an online peer review module, was developed as a bachelor’s thesis (Kjellman & Peters, 2011). The purpose of this module (Peer Portal) is to facilitate interaction between student peers during the thesis process, from project proposal to the discussion section, in order to increase the quality of theses. The aim of this paper is to describe the design of the online peer review module, the Peer Portal, and to evaluate students’ performances when using this module to do the peer reviews.

According to some studies (including Woolley & Hatcher, 1986; Gay, 1994; Lightfoot, 1998; Sharp, Olds, Miller, & Dyrud, 1999; Guilford, 2001), students need additional support to understand the scientific writing and peer-review process. They need to learn about the steps of the scientific writing process or at least parts of the strategies for publishing and peer reviewing. Different practitioners have discussed peer reviews previously (including Simkin & Ramarapu, 1997; Iyengar et al., 2008; Brammer & Rees, 2007) as a significant component and critical element of writing, which is one of the major cornerstones of a scholarly and educational publication. The peer review process is an important step, which is usually considered a “gold standard” (Hjørland, 2012). The peer review process is a progressive method, which is now more accepted and commonly used in educational learning and academic writing in higher education. Some studies (Sharp et al., 1999; Guilford, 2001; Venables & Summit, 2003) discuss peer review at the undergraduate and graduate level as a useful tool both to support students in improving the quality of their scientific writing and to reduce supervisors’ workloads.

The peer review process is deeply discussed in some studies (including Cicchetti, 1991; Starbuck, 2005; Chubin & Hackett, 1990) and criticized for its reliability and how well it really works. As mentioned by Brammer and Rees (2007), there are still frequent bitter complaints from students that peer reviews are a waste of time, or some blame their peers for not finding significant mistakes. There are also staff members who grumble about the poor quality of students’ papers and that students do not stay on task during the peer-review process (Brammer & Rees, 2007). Although these issues or observations may not be supported by the theories, they are still challenges in educational systems.

This paper investigates an emerging topic; hence, an explanatory and exploratory study is conducted. Exploratory research initially employs a broad perspective regarding a specific issue and the results crystallize as it progresses (Adams & Schvaneveldt, 1991). In the next section, general information about how to use the peer portal is explained. Then the quality of peer reviews in a sample is explored by using content analysis tools. Content analysis, with the help of computer-mediated and analysis tools, has been a fast-growing, systematic, and objective analysis technique for message characteristics and manifest content of communication (Berelson, 1952; Riffe & Freitag, 1997; Yale & Gilly, 1988; Neuendorf, 2002). This analysis includes the examination of human interactions (Neuendorf, 2002) mostly for quantitative, but also qualitative, data analysis (Elo & Kyngäs, 2008). Content analysis is often used to analyze information from asynchronous computer-mediated communications and discussions in formal educational settings, which is not situated at the surface of the transcripts (De Wever et al., 2006). Content analysis is an appropriate method for analysis of messages in mass communication studies (Lombard, Snyder-Duch, & Bracken, 2002).

In this study, content analysis has been chosen as an analysis tool since this study is related to the examination of human interactions and educational settings. This requires profound analysis of the characteristics of peer reviews to provide a clear explanation about the need for a peer portal in higher education. The focus of this article is on the analysis of the quality of the peer reviews in order to investigate how many students understand the peer review process and follow the instructions to fulfill the requirements. The analysis procedure involves three phases: 1) assessing part of the peer reviews in order to establish the categories, 2) assessing the quality of all peer reviews in the assigned time period, and 3) developing hypotheses and suggestions.

In the first phase, based on 150 peer reviews completed between January 1st and May 15th, 2012, four qualitative and mutually exclusive categories were established: 1) excellent, 2) good, 3) fragmented, and 4) poor. The category concepts and criteria were modified in this phase as part of the qualitative process until a useful grounded theory was created. The criteria for each category and category label were validated by external reviewers and tested empirically with the content of the additional peer reviews in the second phase. These categories and the categorizing criteria of peer reviews are described in detail in the “Peer review quality criteria and analysis” section.

In the second phase, the quality of the remaining peer reviews (110) from the same period was assessed, and the percentage of reviews belonging to each category was determined based on the total number of analyzed peer reviews (260). This supported the validity of the categories developed, since no further modifications were required when the additional 110 peer reviews were analyzed. In this phase, more details are taken into account for each peer review to investigate how many of the peer reviewers understand the review process or follow the criteria (defined in Table 1) and cover the discussed issues in each category (explained in the “Peer review quality criteria and analysis” section). Moreover, how the peer portal helps users to achieve better results and produce higher quality reviews is explored. Finally, in the last phase, a set of hypotheses was formulated in order to tentatively explain the reasons for quality variations found in the online peer reviews. Based on the findings, strategic suggestions for improvements are listed and general conclusions are drawn.

Some reviews were in English and the rest were in Swedish. The language of the reviews mainly depends on the language of the thesis drafts, which in most of the cases reflects whether the thesis belongs to the bachelor’s or master’s level. However, the system does not register the completed peer reviews according to educational levels (bachelor’s or master’s). Female students performed approximately 98 of the 260 reviews (38%). This number is based only on the names of the reviewers, so in some cases there is a risk that it is mistaken. This number also disregards the duplication of individuals, who might have done several peer reviews on different theses. The quality of the reviews was not correlated to gender.

This section describes the design of the online peer review module in SciPro followed by the next section, which evaluates students’ peer review performances when using this module. The aim of developing this module is to support the peer-review process and improve the quality of scientific writings. Specifically, the purpose is to enhance autonomous learning and working independently and to support asynchronous communication among students to learn from each other, so that both thesis peer reviewers and authors (students, who write the thesis) benefit. Moreover, this strategy helps reduce supervisors’ workload because they are not assigned as reviewers and do not have to teach each student about the peer-review process. A description of how the peer-review system works and the purpose of peer reviews in this context is available in SciPro. Students are instructed in the SciPro system for the purpose of the peer review in the following way:

Regard yourself as an advisor rather than an almighty judge. Provide useful critique and constructive feedback, so that the author can improve his/her thesis. Remember, it is not a finished manuscript, but a work in progress. You can help improve the quality and in the process you will learn something as well. Do not waste your time, the author’s time or the supervisor’s time by doing it superficially. Make a deep analysis, and analyze according to the checklist, your methodological skills and creativity. Go beyond the text, what alternatives are there? Can you provide some interesting ideas, URLs or reading material to the author? The peer review process is between peers. However, supervisors will be able to check out the quality of your reviews. By providing good reviews you demonstrate that you understand research concepts, theories, and the subject matter.

Moreover, students are informed about the required amount of work in the following way:

The supervisor decides how many peer reviews you need to do. However, it is mandatory to do at least two peer reviews during the course period. It is recommended to do an initial peer review on the “Project plan” and one on “Background, aim, methods and ethics”. The number of reviews you want to receive equals the number of reviews you need to do. In summary, the more feedback you want on your own work, the more feedback you need to provide.
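The quota rule quoted above can be read as a small function. The sketch below is one possible interpretation, not code from SciPro: the names `reviews_to_perform` and `MIN_REVIEWS` are hypothetical, and it assumes that a student owes one review per review requested, subject to the mandatory minimum and to any higher number set by the supervisor.

```python
MIN_REVIEWS = 2  # mandatory minimum stated in the student instructions


def reviews_to_perform(reviews_wanted: int, supervisor_minimum: int = MIN_REVIEWS) -> int:
    """Return how many peer reviews a student has to write.

    Assumption: one review is owed per review requested, but never
    fewer than the mandatory minimum or the supervisor's requirement.
    """
    if reviews_wanted < 0:
        raise ValueError("reviews_wanted must be non-negative")
    return max(reviews_wanted, supervisor_minimum, MIN_REVIEWS)


# A student who wants feedback on four parts of the thesis writes four reviews.
print(reviews_to_perform(4))
```

Under this reading, a student who requests no feedback at all still has to complete the two mandatory reviews.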

When a student would like to request a peer review, the section “Request peer review” allows her/him as an author to express the need or desire for a review on a specific part of the report. The following steps need to be followed by authors of the thesis in order to fulfill the requirements and understand the process.

Step 1: Read the instructions to get valuable information about how the system works.

Step 2: Attach the file(s) that are supposed to be read by peer reviewers. If there is more than one file, it is recommended to zip them together before uploading.

Step 3: Write review guideline/comment. Here you can add a note to specify any part in your report you want to have reviewed (e.g., the language).

Step 4: Select a suitable review template (optional but recommended). The students can pick one of the available “Questions checklists” as a guide for assessment for the peer review. The checklists are based on a large number of established scientific methods in course literature and on senior supervisors’ experience. The questions in the available checklists focus on methodological and process aspects of the thesis work. System designers determine the selection and structure of the questions once researchers and senior supervisors at the department consult and validate the selection.

Step 5: Submit the request for review by clicking on the button at the bottom of the page.

There are also steps to write a peer review. The more the following steps are taken into consideration by the peer reviewers, the higher the quality of the peer reviews. This is not an anonymous review process. The names of both authors and reviewers are visible in the system. Students are able to choose any thesis within any research area, but only on the same level (i.e., bachelor’s or master’s level). However, once a manuscript is chosen by the first reviewer, it will no longer be available for other students, unless the author uploads the same manuscript several times. The procedure consists of the following steps.

Step 1: To start the peer review process, select the “Perform peer review” tab.

Step 2: Click on the arrow to the right of the thesis report that is to be reviewed.

Step 3: Click on “Review this request” to start the review process.

Step 4: Start by downloading the file available in the “Attachment”.

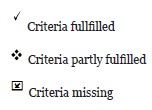

Step 5: If a checklist is chosen by authors to be followed by peer reviewers, the colored circles in the traffic light signals need to be selected for the respective question. Each traffic light signal (see Figure 1) is related to a comment box for additional explanations and motivation for the chosen color. Traffic light signals are used as an overall assessment (green = well-done [OK], yellow = minor corrections required, and red = the requirement is not fulfilled/not OK). If no checklist is available then the authors’ instructions should be followed by the peer reviewer.

Step 6: If a checklist is chosen by authors, peer reviewers need to provide analytic responses related to each pre-defined question in the selected checklist. Otherwise, if no checklist is chosen by authors, the peer reviewers are required to provide their analysis in a free but structured way relating to specific sections in the manuscript.

Step 7 (optional but recommended): Add detailed comments in the original thesis file or a separate file to connect the expressed statements to the related parts. Guide the author by defining what is missing and where in the thesis changes should be made. Then attach the file at the bottom of the page.

Step 8: When the review is completed and the file is uploaded, click on “submit peer review”. Note that the peer review has to be completed within three days after selecting the thesis report.
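The reviewing steps above can be summarized as a small data model. The following is a minimal sketch under stated assumptions, not the SciPro implementation: the class and method names (`TrafficLight`, `ChecklistAnswer`, `PeerReview`, `missing_comments`) are hypothetical, and the three-day limit is assumed to be counted from the moment the thesis report is selected, inclusive of the deadline itself.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from enum import Enum

REVIEW_WINDOW = timedelta(days=3)  # time limit stated in Step 8


class TrafficLight(Enum):
    GREEN = "well done (OK)"
    YELLOW = "minor corrections required"
    RED = "requirement not fulfilled (not OK)"


@dataclass
class ChecklistAnswer:
    question: str
    signal: TrafficLight
    comment: str  # explanation and motivation for the chosen color


@dataclass
class PeerReview:
    reviewer: str
    selected_at: datetime  # when the reviewer picked the thesis report
    answers: list[ChecklistAnswer] = field(default_factory=list)

    @property
    def deadline(self) -> datetime:
        return self.selected_at + REVIEW_WINDOW

    def is_on_time(self, submitted_at: datetime) -> bool:
        """True if the review is submitted within the three-day window."""
        return submitted_at <= self.deadline

    def missing_comments(self) -> list[str]:
        """Questions that received a signal but no written motivation."""
        return [a.question for a in self.answers if not a.comment.strip()]


review = PeerReview("reviewer", datetime(2012, 3, 1, 12, 0))
review.answers.append(
    ChecklistAnswer("Is the aim clearly stated?", TrafficLight.YELLOW,
                    "The aim is stated but too broad.")
)
print(review.deadline, review.missing_comments())
```

Keeping the signal and its comment in one record mirrors the pairing of each traffic light with a comment box, which makes it straightforward to spot answers where a color was chosen without any explanation.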

Under the tab “My requests & reviews”, users have an overview of their request(s), the response(s) to them, and their own reviews. Here users have the possibility of commenting on the reviews they receive from others or of engaging in discussion with the author of the thesis that they have reviewed.

In the peer-review process, there are some significant issues that should be taken into consideration. It is required that reviewers understand the significance of providing clear insight into any deficiencies and explain their judgment process, so that the authors will be able to understand the reasoning behind the given comments. It is also important to consider the thesis grading criteria and hence give comments based on the explanations about the required items needed to be covered by each thesis work. Commentaries should be courteous and constructive, without including any personal remarks or problems regarding the issues discussed in the paper. Reviewers need to indicate whether the given comments are their own opinion or reflect scientific references. If the comments reflect references, the original reference needs to be provided clearly.

Reviewers should regard themselves as advisors rather than almighty professional judges. They need to try to provide useful critique and constructive feedback, plus point out the missing required information, so that based on those comments the authors can improve the quality of their works. Moreover, based on how the system works, it is expected that reviewers provide sufficient feedback, both in respect to positive and negative issues, to motivate authors to enhance the quality of the thesis.

Based on the issues above, the reviewers are expected to 1) provide their general views by going through the optional checklist, traffic light signals (green = well-done, yellow = needs slight revision, red = requires more effort to fulfill the requirements); 2) briefly explain the issues in each comment box; 3) produce a report or comment on the original document to cover key elements of their views on each particular issue to address the point and what is required to be considered or modified. It is important for reviewers to be neutral and to use a professional and scientific manner without involving their personal emotions.

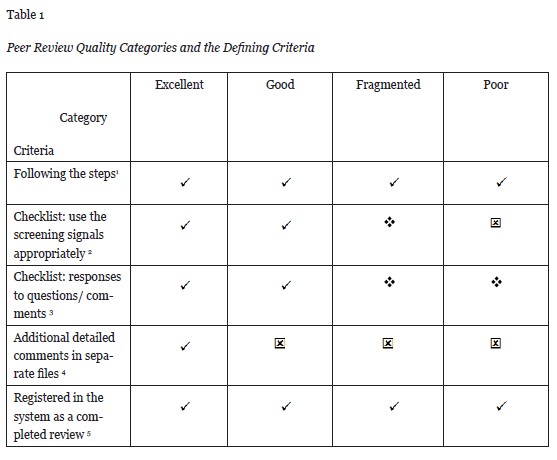

As mentioned above, 260 completed peer reviews were categorized as follows: 1) excellent, 2) good, 3) fragmented, and 4) poor. Table 1 provides a general picture of the categorizing criteria. Further, the criteria and reasons for grouping peer reviews into these four categories are explained in detail for each category.

1 - Excellent peer reviews (18.1%)

In this category, peer reviewers followed the checklist selected by the thesis’ authors. The peer reviewers gave enough effort to identify and analyze the strengths and weaknesses of the thesis manuscript, and, accordingly, they provided support for the author to develop the thesis. The peer reviewers used the traffic light signals correctly and explained general problems. They provided relevant comments based on the grading criteria to answer all or almost all the checklist questions. In most of the reviews in this category, reviewers followed the questions from the optional checklist one by one. Those who did not use the checklist directly made comments based on the questions and covered almost all the required issues. In some cases, reviewers encouraged authors to communicate with them or discuss further the highlighted issues if needed. Moreover, reviewers provided additional details in a separate file to point out specific issues that judgments were based on. With respect to all the issues discussed above, approximately 18% of the reviews in the selected sample belong to this category. Figure 1 is an example of an excellent review, done by a master’s student, to illustrate how the system is supposed to be used.

2 - Good quality peer reviews (22.7%)

In this category, reviewers follow most of the instructions and questions in the checklists. They use the traffic light signals correctly and provide constructive comments in each comment box. However, in this group, reviewers do not point out the details or add any specific comments in the original text to clarify their judgment. In some cases, reviewers use examples or illustrations to point out the issues they bring up. However, further specification about the required modifications, where the subject is mentioned in the original text or what the author should specifically consider, is missing. In this category, most of the reviewers, who provide quite good general comments, do not provide an additional document for the author to specifically show where the required modifications should be applied. In respect to these criteria, approximately 23% of the reviews belong to this category.

3 - Fragmented peer reviews (18.5%)

In this category (18.5% of the peer reviews), peer reviewers fulfill only a few criteria according to the instructions. The peer reviewers briefly discuss only a few issues that need reconsideration. However, there is a need for further explanation about why those changes are required and what should be added. The reviewers do not go through all the checklist questions and skip answering most of them. They do not appear to know about all parts of the thesis and do not touch upon necessary issues. Comments in this category include general remarks from some references, which in most cases are not connected to the original reference. Moreover, no detailed comments or constructive feedback are provided or attached.

4 - Poor peer reviews (40.7%)

In the last category, peer reviewers do not follow the instructions, checklist questions or criteria, and do not properly use the traffic light signals. Reviewers only fill in a few words to answer less than 20% of the checklist questions, without any follow-up discussion or clarification. In this category, the comments are very general (e.g., “the thesis is very poor” or “this point is not discussed”). In most of the cases in this group, the impression is that reviewers do not read the instructions properly and do not spend enough time to read the entire thesis before commenting on it. Some reviewers use the wrong traffic light signal; for instance, they choose the green signal (which means the checklist item is entirely fulfilled) and comment in the comment box, “the issue is not discussed at all”, or vice versa, choose the red signal and say, “it is perfectly done”. This suggests that most of the reviewers in this category, which has the highest percentage of reviews (approximately 41%), do not read the reviewing instructions and apparently only want to finish the task, without concern for the quality of their reviews.

Based on the categories above, the result of the chosen sample (260 peer reviews) is shown in Table 2. This table illustrates the quality of the completed peer reviews of the uncompleted thesis manuscripts done by bachelor’s and master’s students. The four categories, discussed above, are presented in this table with the number and percentage of peer reviews belonging to each category.

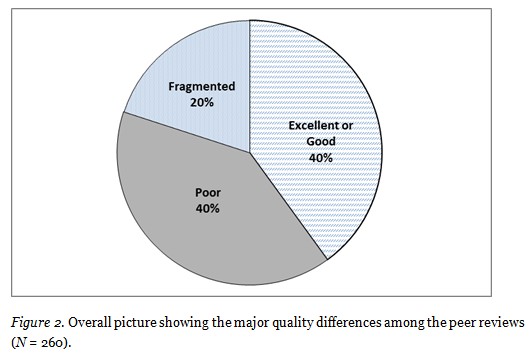

The categories are merged further based on Table 2 into three major parts, “Excellent or Good”, “Fragmented”, and “Poor” to provide a general overview (Figure 2).
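The merging step is simple arithmetic on the category shares reported above. The short sketch below reproduces it from the four percentages given in this paper; the variable names are illustrative only.

```python
# Category shares reported in this study (percent of the 260 peer reviews).
shares = {
    "excellent": 18.1,
    "good": 22.7,
    "fragmented": 18.5,
    "poor": 40.7,
}

# Merge the two highest categories into one group, keep the others as they are.
merged = {
    "Excellent or Good": round(shares["excellent"] + shares["good"], 1),
    "Fragmented": shares["fragmented"],
    "Poor": shares["poor"],
}

for group, pct in merged.items():
    print(f"{group}: {pct:.1f}%")
# Excellent or Good: 40.8%
# Fragmented: 18.5%
# Poor: 40.7%
```

The merged shares make the central contrast visible: the combined "Excellent or Good" group (40.8%) is almost exactly as large as the "Poor" group (40.7%).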

The online peer review module, the Peer Portal, provides opportunities for asynchronous communications, dialogs, and discussions for students during the thesis process. By following the required steps, both the thesis author and the peer reviewer take advantage of the peer review. The reviewer learns about new issues discussed in the thesis and the author receives some feedback to enhance the quality of the thesis. The constructive and relevant feedback that students get from their peer reviewers provides opportunities to enhance the unfinished thesis manuscripts before sending them to their supervisors. Moreover, using the online Peer Portal makes the process more efficient and effective for both students and supervisors. Without this system, supervisors must assign each peer reviewer manually, control the relevance of the topic, check with the students whether or not they would like to review the specific thesis, and consider the language issues (non-Swedish speaking students cannot review Swedish theses). The peer portal supports students to select topics relevant to their own subject area and develop their knowledge, provide opportunities to have open discussions, ask relevant questions, or get help from their reviewers to enhance the quality of their work.

This study explored how the peer review portal is used and what might be the limitations that require further development. Based on the findings from the analysis of 260 peer reviews, approximately 40% of students follow the instructions and take advantage of the peer-review process. Finding some good peer reviews proves that there are students who are interested in learning from others’ experiences and in supporting their fellows to enhance thesis quality. In some cases, the authors ask for further comments or explanations from the reviewers. For instance, a student as an author asks the reviewer: “Could you please dedicate more time in reviewing my paper and send it to me as soon as you can. Hence, the quality of my paper relies on the quality of the review.” Or in another case, the author asks for further references from the reviewer to develop his knowledge about the discussed issue. This means that some students are interested in getting constructive feedback or detailed comments from their peers to enhance the quality of their theses.

As shown in Figure 2, approximately 60% of students deliver fragmented or poor peer reviews. The result shows that a large number of the reviewers in this group do not spend sufficient time to understand the review process and how the system works. They write very short comments (i.e., only a few words or sentences to get the task done, regardless of the quality of the review). Despite the varying results, the Peer Portal system has so far created more than 6,100 structured question-and-answer interactions (the checklists used contained on average 15 questions). Through this process, interactions between students about their thesis manuscripts are taking place, along with positive learning effects that are not visible in the reviews themselves. In order to understand the underlying reasons for the different results from different groups, further studies are required. Yet, some hypotheses have been developed here.
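As a rough plausibility check, the reported number of question-and-answer interactions can be related to the number of reviews. The sketch below assumes that each interaction corresponds to one checklist question answered in one review, which is an interpretation and not stated explicitly in the source.

```python
TOTAL_INTERACTIONS = 6100     # "more than 6,100", as reported
QUESTIONS_PER_CHECKLIST = 15  # reported average per checklist

implied_reviews = TOTAL_INTERACTIONS / QUESTIONS_PER_CHECKLIST
print(f"Implied number of checklist-based reviews: about {implied_reviews:.0f}")
# Implied number of checklist-based reviews: about 407
```

Under that assumption, the figure is in line with the more than 400 peer review interactions reported for 2012 as a whole.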

The developed peer review system, the Peer Portal, facilitated more than 400 autonomous peer review interactions between students during 2012. The purpose was to make a structured, self-managed, student-driven system for quality enhancement of thesis manuscripts. This objective has been fulfilled. About 40% of the students produced excellent or good reviews; however, the rest produced low quality (fragmented or poor) peer reviews (see Figure 2). The underlying philosophy of this peer review approach is that students should manage their involvement without supervisors’ intervention, firstly because supervisors might change the nature of the dialogues (create a power asymmetry) and secondly because the Peer Portal is meant to reduce the workload for supervisors, not create another task to take care of. Yet, as this study clearly shows, leaving the interactions open and unmonitored is not enough. It might be satisfactory when 40% of the students do reasonably acceptable peer reviews. However, since the interaction is asymmetric because more than 40% of the students do not deliver peer reviews up to the standards, further support mechanisms are required to improve the overall quality and make the interactions fair among students.

In line with the results and philosophy, further development might include introducing a rating system with four quality levels representing the categories that have been developed in this study (excellent, good, fragmented, poor). By using such a rating system (e.g., indicated by 1-4 stars), students would be able to rate their received peer reviews, and thereby the poor peer reviews would be filtered out by the system. This development would empower students who want to do good peer reviews and produce the right incentives. This would clearly make best-practice students visible to all, including supervisors, and likewise the bad-quality reviewers would be easily spotted. It is believed that the social pressure introduced would contribute to better results. Moreover, a more structured peer-review process with mandatory steps built in would make students use the available checklists and fill in all required fields. A peer review surveyor might be introduced to follow up, analyze, and give feedback to students about their peer reviews. Furthermore, support from supervisors or the SciPro support-group to introduce face-to-face peer-review workshops for students would also encourage reviewers to learn more and hence improve the quality of their works. Yet, essentially the vision is, the more student driven the Peer Portal activities are, the better it is for students, supervisors, and the department.
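The suggested rating system could be prototyped along the following lines. This is a hedged sketch and not part of the Peer Portal: the mapping of stars to categories (4 = excellent down to 1 = poor), the function names, the follow-up threshold, and the sample data are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

# Assumed mapping of stars to the four quality categories of this study.
STARS_TO_CATEGORY = {4: "excellent", 3: "good", 2: "fragmented", 1: "poor"}


def summarize_ratings(ratings):
    """Average the star ratings that each reviewer received.

    `ratings` is an iterable of (reviewer, stars) pairs with stars in 1-4.
    """
    per_reviewer = defaultdict(list)
    for reviewer, stars in ratings:
        if stars not in STARS_TO_CATEGORY:
            raise ValueError(f"stars must be between 1 and 4, got {stars}")
        per_reviewer[reviewer].append(stars)
    return {reviewer: mean(values) for reviewer, values in per_reviewer.items()}


def needs_follow_up(summary, threshold=2.0):
    """Reviewers whose average rating is at or below the (assumed) threshold."""
    return sorted(r for r, avg in summary.items() if avg <= threshold)


# Hypothetical ratings given by authors to the reviews they received.
ratings = [("anna", 4), ("anna", 3), ("ben", 1), ("ben", 2), ("cia", 3)]
summary = summarize_ratings(ratings)
print(summary)
print(needs_follow_up(summary))
```

Averaging per reviewer rather than per review is one design choice among several; it makes consistently strong and consistently weak reviewers visible, which is the effect the rating proposal aims for.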

In some cases the feedback between students using the Peer Portal is of higher quality than feedback provided by supervisors. Since the Peer Portal is completely student driven, it could be scaled up and become an international crowd sourcing system that benefits a large number of students and supervisors in their common goal of intensifying feedback in the supervision process leading to high quality theses. The whole ethos and understanding when using the Peer Portal should be that of encouragement and exchange of innovative, creative, and critical ideas with the overall goal of creating quality theses and scientific minds.

Based on our findings in this study the following studies would be of interest to pursue:

Thanks to all who helped in the preparation of this paper. This includes the SciPro technical team, especially Jan Moberg, Fredrik Friis, Dan Kjellman and Martin Peters, who developed the Peer Portal. Special thanks to William Jobe for the language improvements of the article. And finally, thanks to the students and faculty at the Department of Computer and Systems Sciences (DSV), Stockholm University, who provided support to develop this article.

Adams, G., & Schvaneveldt, J. (1991). Understanding research methods (2nd ed.). New York: Longman.

Berelson, B. (1952). Content analysis in communication research. Glencoe, IL: Free Press.

Brammer, C., & Rees, M. (2007). Peer review from the students’ perspective: Invaluable or invalid? Composition Studies, 35(2), 71-85.

Chubin, M., & Hackett, E. (1990). Peerless science: Peer review and US science policy. New York, NY, USA: State University of New York Press.

Cicchetti, D. V. (1991). The reliability of peer review for manuscript and grant submissions: A cross-disciplinary investigation. Behavioral and Brain Sciences, 14(1), 119-135.

De Wever, B., Schellens, T., Valcke, M., & Van Keer, H. (2006). Content analysis schemes to analyze transcripts of online asynchronous discussion groups: A review. Computers & Education, 46(1), 6-28.

Elo, S., & Kyngäs, H. (2008). The qualitative content analysis process. Journal of Advanced Nursing, 62(1), 107–115.

Gay, J. T. (1994). Teaching graduate students to write for publication. The Journal of Nursing Education, 33(7), 328-329.

Guilford, W. (2001). Teaching peer review and the process of scientific writing. Advances in Physiology Education, 25(3), 167-175.

Hallberg, D., Hansson, H., Moberg, J., & Hewagamage, H. (2011). SciPro from a mobile perspective: Technology enhanced supervision of thesis work in emerging regions. Aitec East Africa ICT summit at Oshwal Centre, Nairobi, Kenya.

Hansson, H. (2012). 4-excellence: IT system for theses. Going Global: Internationalising higher education. British Council Conference, London, UK.

Hansson, H., & Moberg, J. (2011). Quality processes in technology enhanced thesis work. 24th ICDE World Conference on Open and Distance Learning, Bali, Indonesia.

Hansson, H., Collin, J., Larsson, K., & Wettergren, G. (2010). Sci-Pro improving universities core activity with ICT supporting the scientific thesis writing process. Sixth European Distance and E-Learning Network (EDEN) Research Workshop, Budapest.

Hansson, H., Larsson, K., & Wettergren, G. (2009). Open and flexible ICT: Support for student thesis production: design concept for the future. In A. Gaskell, R. Mills, R & A. Tait (Eds.) The Cambridge International Conference on Open and Distance Learning. Cambridge, UK: The Open University.

Hjørland, B. (2012). Methods for evaluating information sources: An annotated catalogue. Journal of Information Science, 38(3), 258-268.

Iyengar, R., Diverse-pierluissi, M. A., Jenkins, S. L., Chan, A. M., Devi L. A., Sobie, E. A. et al. (2008). Inquiry learning: Integrating content detail and critical reasoning by peer review. Science, 319(5867), 1189-1190.

Kjellman, D., & Peters, M. (2011). Development of peer portal enabling large-scale individualised peer reviews in thesis writing (Unpublished bachelor thesis). Stockholm University, Department of Computer- and Systems Sciences, Sweden.

Larsson, K., & Hansson, H. (2011). The challenge for supervision: Mass individualization of the thesis writing process with less recourses. Online Educa Berlin 2011 - 17th International Conference on Technology Supported Learning & Training (ICWE, 2011).

Lightfoot, J. T. (1998). A different method of teaching peer review systems. Advances in Physiology Education, 19(1), 57-61.

Lombard, M., Snyder-Duch, J., & Bracken, C. C. (2002). Content analysis in mass communication: Assessment and reporting of intercoder reliability. Human Communication Research, 28(4), 587-604.

Neuendorf, K. (2002). The content analysis guidebook. Thousand Oaks, CA: Sage Publications.

Norwegian Social Science Data Services (NSD). (2012). Publiseringskanaler – dokumentasjon. Retrieved from http://dbh.nsd.uib.no/kanaler/hjelp.do.

Riffe, D., & Freitag, A. (1997). A content analysis of content analyses. Journalism and Mass Communication Quarterly, 74(4), 873-882.

Sharp, J. E., Olds, B. M., Miller, R. L., & Dyrud, M. A. (1999). Four effective writing strategies for engineering classes. Journal of Engineering Education, 88(1), 53-57.

Simkin, M. G., & Ramarapu N. K. (1997). Student perceptions of the peer review process in student writing projects. Journal of Technical Writing and Communication, 27(3), 249-263.

Starbuck, W. H. (2005). How much better are the most prestigious journals? The statistics of academic publication. Organization Science, 16(2), 180-200.

Venables, A., & Summit, R. (2003). Enhancing scientific essay writing using peer assessment. Innovations in Education and Teaching International, 40(3), 281-290.

Woolley, A. S., & Hatcher, B. J. (1986). Teaching students to write for publication. The Journal of Nursing Education, 25(7), 300-301.

Yale, L., & Gilly, M. C. (1988). Trends in advertising research: A look at the content of marketing-oriented journals from 1976 to 1985. Journal of Advertising, 17(1), 12-22.