
Chadchadaporn Pukkaew

Asian Institute of Technology, Thailand

This study assesses the effectiveness of internet-based distance learning (IBDL) through the VClass live e-education platform. The research examines (1) the effectiveness of IBDL for regular and distance students and (2) the distance students’ experience of VClass in the IBDL course entitled Computer Programming 1. The study employed the common definitions of evaluation to attain useful statistical results. The measurement instruments used were test scores and questionnaires. The sample consisted of 59 first-year undergraduate students, most of whom were studying computer information systems at Rajamangala University of Technology Lanna Chiang Mai in Thailand. The results revealed that distance students engaged in learning behavior only occasionally but that the effectiveness of learning was the same for distance and regular students. Moreover, the provided computer-mediated communications (CMC) (e.g., live chat, email, and discussion board) were sparingly used, primarily by male distance students. Distance students, regular students, the instructor, and the tutor agreed to use a social networking site, Facebook, rather than the provided CMC during the course. The evaluation results produce useful information that is applicable for developing and improving IBDL practices.

Keywords: Internet-based distance learning; effectiveness of internet-based distance learning; VClass e-education platform; evaluation process

Distance learning involving communication technology such as internet-based distance learning (IBDL) enables institutions to conduct classes on limited budgets and with limited teaching staff while providing the same education quality to both distance and regular students. Royalty-free software applications that are designed for education, such as the VClass learning management system (VClass LMS), are ideal for circumventing budget and teacher shortages, and many universities have adopted IBDL. The effectiveness of online learning environments must be examined, and methodologies for evaluating this effectiveness are necessary. Therefore, this study assesses the effectiveness of the IBDL pedagogy provided by the VClass e-education platform in live mode via a designed evaluation process and discusses its implementation.

The following sections describe the research goal and objectives as well as the design and details of the evaluation process and its implementation for IBDL. Subsequently, a discussion of the results and a summary and conclusion are presented.

IBDL technology is vital to educational institutions, regardless of budget and teaching staff. This technology provides the same quality of education to regular and distance students. Evaluation plays a key role in the utilization of IBDL in educational institutions.

This study assesses the effectiveness of IBDL implemented through the VClass e-education platform in an educational institution to aid in determining its feasibility in universities. Specifically, this study has the following three research objectives:

Several studies have proposed evaluation models for IBDL (Baker, 2003; Passerini & Granger, 2000; Sims, Dobbs, & Hand, 2002). Passerini and Granger (2000) proposed a development framework that comprised a behaviorist step-by-step development process that focused on the constructivist paradigm. This framework consisted of analysis, design, development, evaluation, and delivery processes, which were similar to the phases of the system development life cycle. In evaluating the framework, a variety of methods (e.g., questionnaires, user focus groups, or interviews) could be utilized as formative evaluations, and summative evaluations occurred after the instruction was implemented. These evaluations were designed to assess the overall effectiveness of the instructional layout. Feedback on a variety of criteria, particularly the asynchronous and synchronous communication experience, could be refined from both formative and summative evaluations. Sims, Dobbs, and Hand (2002) proposed a proactive evaluation framework by integrating the production process. The framework identified critical online learning factors and influences in planning, designing, and developing learning resources during formative assessment by focusing on the decision-making process, which was based on the complex interaction between subject-specific content, learning outcomes, and online computer-based learning environments. The online learning factors of the proposed framework, which were considered to be critical to effective online learning, comprised strategic intent, content, learning design, interface design, interactivity, assessment, student support, utility of content, and outcomes. 
For the assessment criteria of this framework, five elements (i.e., assignments, examinations, project work, work placement, and authentication) were suggested to assess teacher-, peer-, or student-directed factors within the context of critical factors, including the assessment of new environments in which the performance data were collected (e.g., real-world workplace environments). Baker (2003) proposed a framework of K-12 curriculum components to evaluate outcomes that are specific to online distance learning courses; this framework integrated an adaptation of Tyler’s principles with the level of cognitive learning in Bloom’s taxonomy.

Baker (2003) indicated three factors by which distance learning courses differ from traditional in-class courses: 1) a personal interaction factor, which refers to the instructor’s opportunity to provide feedback and direction and to observe learning activities in real time; 2) an interaction factor, which refers to interactions among learners as they share experiences; and 3) a class attendance factor, which refers to learners’ responsibility and motivation to perform the required tasks. These factors should be considered in selecting distance learning tools. Ferguson and Wijekumar (2000), as cited in Baker (2003), suggested a variety of web-based tools for distance learning instructional methods and course design strategies, including course outline posting, course notes, course reference materials, chat rooms, email, online tests, group discussion boards, digital drop boxes, interactive activities, feedback, virtual classrooms, and whiteboarding. Additionally, free distance learning tools that address these factors are available on the Internet (e.g., BbCollaborate, VYEW, and WizIQ; see http://lizstover.com/free-tools.html).

Comparative studies of the effectiveness of online learning remain to be reported. Several studies that assessed the effectiveness of distance learning by comparing regular and distance students found no significant differences in learning outcomes (Abraham, 2002; Shachar & Neumann, 2003; Smith & Dillon, 1999; Zhao, Lei, Yan, Lai, & Tan, 2005). Other studies found that internet-based student performance was significantly higher than classroom-based performance for four examined items (i.e., knowledge, comprehension, application, and overall exam scores) because of the increased student-centeredness of IBDL and because students prepared more effectively for the internet-based classroom examination (Thomas, Simmons, Jin, Almeda, & Mannos, 2005). Several meta-analyses have reported that significant differences in several factors were found in studies that compared the effectiveness of regular and distance student performances (Shachar & Neumann, 2003; Zhao, Lei, Yan, Lai, & Tan, 2005). Additionally, several studies have reported that “students who had the opportunity to interact with each other face-to-face performed better than those lacking that opportunity” (Koch & McAdory, 2012).

In general, evaluation methods appear to be acknowledged by scholars in the field. Nevertheless, certain models may not clearly present the meanings or definitions of terms involved in educational evaluation. Therefore, this paper restates the primary terminology used in the evaluation processes as follows.

In relation to evaluation, “measurement” is the act or process of measuring something (Hornby, 1995; Levine, 2005). Educational measurement is the process of calculating the success of an instructional activity using a data set (e.g., test scores, midterm scores, or dropout rate).

Educational “assessment” refers to a process that attempts to understand and improve student learning. This process includes clarifying instructor expectations to students and setting appropriate outcomes for learning by using relevant information (Huitt, Hummel, & Kaeck, 2001; Levine, 2005).

“Evaluation” entails using determined criteria and standards to assess the value of systematically acquired information regarding accuracy, effectiveness, economic efficiency, or satisfactory outcomes, either quantitatively or qualitatively. Evaluations provide relevant feedback to stakeholders (Bloom, 1956, p. 185, cited in Bloom, Hastings, & Madaus, 1971; Levine, 2005; Trochim, 2006).

“Computer-mediated communication” (CMC) is the process by which people create, exchange, and perceive information when using networked telecommunications that facilitate encoding, transmitting, and decoding messages. CMC can be synchronous, for example, chat rooms, or asynchronous, for example, discussion boards and emails (Romiszowski & Mason, 1996).

VClass LMS is a royalty-free e-education platform that was developed by Distributed Education Center (DEC), which is a subunit of the Internet Education and Research Laboratory at the Asian Institute of Technology (AIT) in Thailand. VClass aims to enable the large-scale sharing and archiving of teaching and learning resources among Thai universities, which can be delivered live or non-live using an H.264 high-definition video format with IPv4 and IPv6 technology.

VClass (DEC, 1998) in live mode uses three servers: the VClass LMS server, a conference server, and a session initiation protocol (SIP) server. The VClass LMS server stores learning resources, student and instructor profiles, and recorded video streams, which students and instructors utilize before and after class sessions. The conference and SIP servers are used for video conferencing, streaming multimedia distribution, and instant messaging from the sender to the remote location over the internet networking system. Thus, VClass in live mode allows instructors and distance students to communicate by microphone and instant messaging in real time.

As certain studies have suggested (Ferguson & Wijekumar, 2000, cited in Baker, 2003), the educational technology used in this study was chosen on the basis that it was developed and is available in our country. Thus, VClass, a royalty-free software application developed by DEC at AIT in Thailand, was utilized.

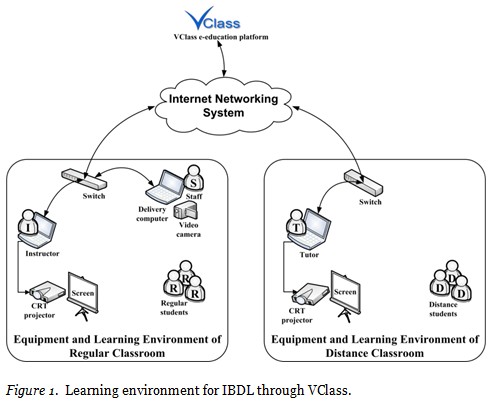

The learning environment for VClass in live mode, as illustrated in Figure 1, is divided into two parts, regular and distance classroom settings. Both settings must have internet access.

In the regular classroom, a few components are added: a switching hub, delivery computer, video camera, and staff. The staff member connects the equipment, logs into VClass 15 minutes before the class starts, and coordinates with the IT department regarding problems with the internet networking system. Moreover, the staff member helps the instructor monitor the communication signal via the audio device or instant messages from the remote location. After each class session, the staff member must convert the recorded video lecture into a compatible format (e.g., flv or mp4) for uploading to VClass LMS. Distance students can review the previous class on demand; this ability represents part of the subject group treatment.

The distance classroom is a normal classroom that connects to the Internet. A tutor is vital to the proper operation of the distance classroom to coordinate among the instructor, distance students, staff of the regular classroom, and IT department on the remote side. The tutor also facilitates classroom operations and solves general problems (e.g., clarifying uncertainties regarding course content, coordinating assignment submissions, and proctoring tests).

For this study, class sessions were delivered from the sender to the remote location via the internet networking system. The remote location received and distributed the live class using the equipment in the distance classroom, including a computer with a web camera and audio device for communication with the sender side. The components of these learning environments were the inputs of the system process.

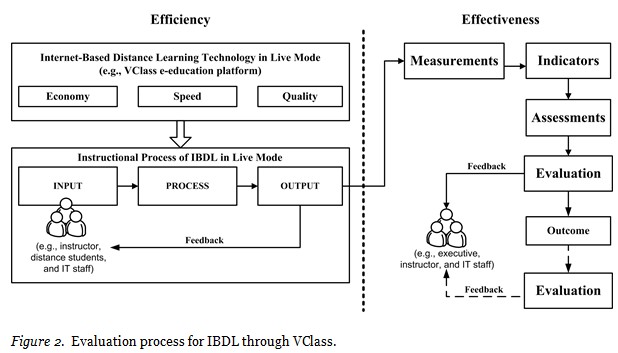

The evaluation process of IBDL through VClass gauges the effectiveness of IBDL in live mode. Figure 2 illustrates how the model measures the effectiveness of IBDL. The evaluation design was based on the definitions above so that it would be understandable, practicable, and applicable, especially for learning through IBDL technology and for evaluating instructional activities. The process considers three main parts (IBDL technology in live mode, the instructional process of IBDL in live mode, and the evaluation of the effectiveness of IBDL), which are discussed below.

IBDL technology is evaluated regarding its efficiency or effectiveness (i.e., economy, speed, and quality). Regarding efficiency, scientific approaches can be used to evaluate the performance of IBDL technology. In contrast, the effectiveness of IBDL technology can be evaluated by measuring users’ attitudes toward its features. Herein, this study adopted the existing resources and equipment of the pilot project, but they were not the primary focus of the evaluation. Nevertheless, open-ended survey questions were provided to measure users’ attitudes concerning the existing resources of the pilot project.

The teaching and learning process is crucial in IBDL activities and consists of input, process, output, and feedback. To evaluate the instructional process, the entities involved should be considered in identifying the input, process, output, and feedback for data collection based on the research goal and objectives.

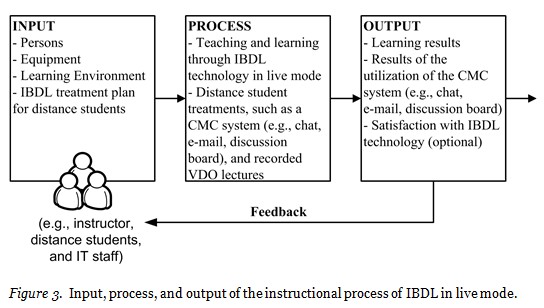

Figure 3 illustrates the entities that are involved in each part of the instructional process of IBDL in live mode. The input component involves several entities: 1) persons, including students, the instructor, and the tutor; 2) the equipment, comprising the computer that sends and receives the video/audio conference, internet connection equipment, projector, digital camera, and microphone; 3) the learning environment, including the lighting, air conditioning, and size of the distance classroom; and 4) the IBDL treatment plan for distance students, comprising the schedule of learning and communicating, grade/score level, assignment submission, and digital learning materials, including recorded video lectures and flexible office hours.

The process component refers to the methodologies or procedures (e.g., teaching and learning through VClass in live mode, selected CMC systems) that operate on input entities through the VClass system. The suggested treatment for the subject group is the adoption of CMC system technology that is free and widely available (in VClass), such as e-mail, chat rooms, and discussion boards. Recorded video lectures, another treatment for distance learning that can be used to review material throughout the course, are also suggested.

Once the operations of the process components are completed, the affected component (i.e., the output component) is produced. In this case, the output consists of the learning results and the utilization of the CMC system; student satisfaction with the learning experience is an optional element. Feedback on the system process (e.g., assignment, test, and midterm scores) is sent to stakeholders as formative results for improving the input and the overall process.

The survey questions on the system process collect the general information (e.g., age, gender, educational level, internet experience, and prior IBDL course) and test results of both student groups. Distance students are also asked to complete a self-assessment and share their IBDL experience; this information is used for comparing the learning behaviors of regular and distance students. A Likert scale is employed for this assessment. The learning behaviors gleaned from the self-assessment are sorted into two categories, before and after class attendance. In the “pre-attendance” survey, the distance students are asked about the frequency of attendance, use of the CMC, downloads of learning material, and review of course content. “After-attendance” questions cover how often course content (recorded video lectures) was downloaded and reviewed and the expression of uncertainty about the course content to the instructor/tutor via each available communication channel. The number of questions that the students asked in their regular and IBDL classes is also included to evaluate the learning behavior of both groups. To evaluate their IBDL experience, the survey asks distance students open- and closed-ended questions on communication between themselves, the instructor, and the tutor through e-mail, live chat, and social media Web sites. The frequency of use of the communication channels, the utility of employing recorded video lectures, distance students’ preferred communication channel, and other opinions and attitudes regarding IBDL and the communication channels are observed.

This component comprises the evaluation process concerning the effectiveness of learning through IBDL technology. In the evaluation process, the outputs of the system process indicate the effectiveness of the learning component and should contain measures of student outcomes (e.g., minimum scores of assigned tasks, grades). The outputs of each student are analyzed statistically (e.g., average grade, standard deviation) to evaluate his or her learning quantitatively and qualitatively. Measurements are the starting point of an evaluation in the effectiveness section (Figure 2). In IBDL, the success of instructional activity hinges on learning results, and the utilization of the CMC system relies on the use of IBDL through VClass. Success is assessed by comparing the measurements against the assigned indicators (e.g., grading scale, pass/fail scale for tests or assignments). The assessments, along with the other results (e.g., the use of the CMC system), are considered summative results. The evaluation results reveal the effectiveness of IBDL through VClass as justified output, or the so-called outcome. The final results are submitted as feedback to stakeholders (e.g., administrators, instructors, and IT staff) to improve the target IBDL application. Outcomes may be evaluated as input for subsequent subjects.

Herein, evaluation is defined as the systematic acquisition and assessment of information aimed at estimating the value of various measurements of student performance. Assigned indicators evaluate student output quantitatively or qualitatively regarding accuracy, effectiveness, economic efficiency, or satisfactory outcomes for learning through VClass in live mode. Evaluation also entails the provision of useful feedback to stakeholders.

The designed evaluation process for IBDL measures, assesses, and evaluates a distance learning system to acquire information that can help to develop, improve, and affirm its effectiveness. However, before the processes are applied, the goal and objectives of the experiment must be defined, and hypotheses must be proposed. Subsequently, inputs should be identified, and a plan should be formed to describe how the system will operate on the inputs to render the desired outputs. For instructional practice, the desired outputs should be identified through testing, and indicators should be prepared to assess the measurement results (e.g., pass or fail), which should then be distributed to stakeholders as formative evaluation feedback to improve teaching and learning. Finally, the experimental hypotheses must be tested with statistics to arrive at the results of the evaluation. Data collection instruments (e.g., questionnaires, interviews, or focus groups) should be prepared accordingly. Questionnaires were used because of the ease of surveying distance students by this method. The quantitative and qualitative evaluations were based on the same measurement instrument. Validity methodology and reliability testing demonstrated the reliability of the questionnaire. Statistical analyses were then used to test the hypotheses. Once evaluation results are attained through statistical analysis, summative evaluation feedback must be given to stakeholders. The following section describes the utilization of the designed evaluation process.

To evaluate the effectiveness of IBDL through VClass in live mode, the second and third research objectives were examined by implementing the designed evaluation process and statistically analyzing its results. However, this study does not evaluate the efficiency of IBDL technology in live mode or the efficiency of the instructional process; only the effectiveness of IBDL is evaluated and reported. Hence, the component covering the instructional process of IBDL in live mode focuses solely on its input, process, and output to assess the effectiveness of IBDL statistically.

The course described in this study represents the first application of IBDL at Rajamangala University of Technology Lanna (RMUTL). The Office of Academic Resource and Information Technology provided a video conference server for VClass on a conferencing channel, and the supporting team of the DEC provided the VClass e-education platform. The VClass LMS server was used to save the recorded video lectures and discussion boards for communications between the instructor and distance students. The Metropolitan Ethernet, with a bandwidth of 100 Mbps on IPv4 technology, connects the main and branch campuses of RMUTL.

Because the distribution of the population could not be calculated, non-probabilistic sampling techniques, such as convenience sampling, were used; nevertheless, the sampling satisfies the research objectives of the experiment (Pongvichai, 2011) as a pilot project of RMUTL. The distance classroom students were first-year undergraduates at Jomthong, a branch campus. The students were studying in the Computer Information Systems Department, Faculty of Business Administration and Liberal Arts, RMUTL, Chiang Mai campus, Thailand. This department has only one regular classroom for undergraduate students on the main campus. Therefore, “distance students” refers to students at the branch campus, and “regular students” refers to students at the main campus. The experiment was conducted during the first semester of the Computer Programming 1 course, from June to September 2011. This department’s curriculum is arranged as a learning package with 25 to 35 students per classroom. A regular classroom from the main campus and a distance classroom from the branch campus were selected in the pilot project. The sample consisted of 59 students: 31 distance students and 28 regular students. According to Krejcie and Morgan’s (1970) table of sample sizes, this small population was sufficient to result in a 95% confidence level and could be examined using measures of central tendency.
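The sample size table of Krejcie and Morgan (1970) is generated by a closed-form formula, so a table lookup can be checked with a few lines of code. The following is a minimal sketch, assuming the defaults usually quoted for that table (a chi-square value of 3.841 for one degree of freedom at the 95% confidence level, a population proportion of 0.5, and a 5% margin of error). The function name is hypothetical, and the sketch is not part of the study's procedure.

```python
def krejcie_morgan_sample_size(population, chi_square=3.841,
                               proportion=0.5, margin=0.05):
    """Required sample size for a finite population.

    Formula from Krejcie and Morgan (1970):
        s = X^2 * N * P * (1 - P) / (d^2 * (N - 1) + X^2 * P * (1 - P))
    The default arguments are assumptions matching the published table.
    """
    spread = chi_square * proportion * (1 - proportion)
    numerator = spread * population
    denominator = margin ** 2 * (population - 1) + spread
    # The published table reports whole numbers, so the result is rounded.
    return round(numerator / denominator)


# Population sizes that appear in the published table.
for size in (60, 100):
    print(size, krejcie_morgan_sample_size(size))
```

Because the required sample approaches the population size for small populations, a full cohort of two classrooms leaves little room for non-response.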

The hypotheses were divided into the following two categories based on research objectives: 1) the effectiveness of IBDL, comparing regular and distance students, and 2) the distance students’ experience with IBDL through VClass. Each category was subdivided into sub-hypotheses to examine the variables in each research objective. The expectations of this study were to acquire new knowledge from the collected information. However, only the null hypotheses are presented.

In the first category, the average score for the effectiveness of learning is an essential variable in evaluating the normal distribution of sampling. This study examines variables such as the proportion of students passing an exam based on an assigned indicator, differences in learning behavior (e.g., a student’s behavior before and after class attendance), the effectiveness of learning, the relationship of overall average scores for learning behaviors with the effectiveness of learning, and the independence of gender and learning behavior from the effectiveness of learning. Therefore, seven hypotheses are set with a 0.05 significance level:

H1.10: The average scores of distance and regular students have a normal distribution.

H1.20: The proportion of distance and regular students who score 50 or higher is 85%.

H1.30: The effectiveness of learning is independent of gender for distance and regular students.

H1.40: The overall average scores of each learning behavior do not differ between distance and regular students.

H1.50: The effectiveness of learning is independent of the average scores of each learning behavior for distance and regular students.

H1.60: The effectiveness of learning for distance and regular students does not differ.

H1.70: The overall average scores of each learning behavior in the effectiveness of learning of distance and regular students do not differ.
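H1.20 compares an observed pass proportion with the 85% target. The paper does not state which test was applied to it, so the sketch below uses a one-sample z-test for a proportion purely as an illustration. The function name and the counts in the usage lines are hypothetical, and the normal approximation is rough for classes of roughly 30 students.

```python
import math


def proportion_z_test(passed, n, target=0.85):
    """Two-sided one-sample z-test of an observed pass proportion.

    Returns (z, p_value). Assumes the normal approximation to the
    binomial distribution, which is an illustrative choice only.
    """
    observed = passed / n
    standard_error = math.sqrt(target * (1 - target) / n)
    z = (observed - target) / standard_error
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts, not taken from the study.
z, p = proportion_z_test(passed=20, n=29)
print("reject H0" if p < 0.05 else "retain H0")
```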

In the second category, the communication between distance students, the instructor, and the tutor is subdivided into two groups—four hypotheses each—to evaluate communication channels (e.g., e-mail, live chats, and the social media Web site). Generally, communication channels are examined regarding the independence and differences between genders, internet experience, and effectiveness of learning. Finally, eight hypotheses are set to investigate distance students’ experience with IBDL with a 0.05 significance level as follows.

H2.10: The overall average usage of each communication channel with the instructor is independent of the gender of distance students.

H2.20: The overall average usage of each communication channel with the instructor is independent of the level of internet experience of distance students.

H2.30: The effectiveness of learning and the overall average usage of each communication channel with the instructor are independent of each other.

H2.40: The overall average usage of each communication channel with the instructor does not differ by the gender of distance students.

H2.50: The overall average usage of each communication channel with the tutor is independent of the gender of distance students.

H2.60: The overall average usage of each communication channel with the tutor is independent of the level of internet experience of distance students.

H2.70: The effectiveness of learning and the overall average usage of each communication channel with the tutor are independent of each other.

H2.80: The overall average usage of each communication channel with the tutor does not differ by the gender of distance students.

Questionnaires were used to assess the performance and opinions of distance and regular students. Punpinij (2010) stated that a questionnaire response rate of 85% is adequate for the analysis and reporting of evaluation results and that the error rate should be no more than 5%. A response rate of 90% or higher is extremely good. In the present paper, online questionnaires were produced using Google Docs. The response rate was 100%; therefore, the responses for both groups can be used for analysis.

For distance students, the measurement instrument is separated into three parts, corresponding to survey question indicators: 1) participant characteristics; 2) self-assessment of learning behaviors; and 3) previous experience with IBDL. For regular students, the measurement instrument is separated into two parts: 1) student characteristics; and 2) self-assessment of learning. Quantitative and qualitative data collection utilized both closed- and open-ended questions to elicit useful information and suggestions from participants.

The validity of each questionnaire was controlled using the content validity method. Questionnaire items were designed based on learning behavior and distance student experience with IBDL. Three experts reviewed the questionnaire items of distance students for content validity. Valid questionnaire items were selected by using the index of concordance or content validity index formula (Pongvichai, 2011) and, therefore, had values greater than +0.50. The items were modified based on the information obtained by the experts. The distance students answered the modified items, and the regular students answered all items except those pertaining to IBDL.
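The item selection rule can be sketched as follows. The source gives only the name of the index and the +0.50 cutoff; the rating scheme used here (each expert scores an item +1 for congruent, 0 for unsure, or -1 for not congruent, and the index is the mean rating) is the common form of this index and is an assumption, as are the function names and the sample ratings.

```python
def congruence_index(ratings):
    """Mean of expert ratings, where each rating is -1, 0, or +1."""
    if not ratings or any(r not in (-1, 0, 1) for r in ratings):
        raise ValueError("each rating must be -1, 0, or +1")
    return sum(ratings) / len(ratings)


def select_valid_items(expert_ratings, cutoff=0.5):
    """Keep the items whose index is greater than the cutoff."""
    return [item for item, ratings in expert_ratings.items()
            if congruence_index(ratings) > cutoff]


# Hypothetical ratings from three experts for three items.
ratings = {"q1": [1, 1, 1], "q2": [1, 0, 0], "q3": [1, 1, 0]}
print(select_valid_items(ratings))
```

With three experts, an item passes only when at least two rate it +1 and none rates it -1.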

Reliability refers to the consistency of a set of measurements by a given instrument. Cronbach’s alpha (Cronbach, 1951) was used to measure the internal consistency reliability of the survey instrument because using the test-retest method was inconvenient (Pongvichai, 2011). Cronbach’s alpha is a coefficient that is used for ordered rating scale survey instruments, such as a Likert scale that measures participants’ attitudes. Wasserman and Bracken (2003) suggested that a Cronbach’s alpha of 0.60 or higher indicates an acceptable coefficient of internal consistency for group assessment. George and Mallery (2003) proposed that a Cronbach’s alpha of 0.7–0.8 is acceptable; 0.8–0.9 is good; and ≥0.9 is excellent. In the current study, Cronbach’s alpha was computed for each set of survey question indicators using SPSS.
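The study computed Cronbach’s alpha in SPSS. For readers without SPSS, the coefficient can be sketched in plain Python using the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function names and the sample responses are hypothetical; the labels follow the thresholds cited above.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for rows of item scores (one row per respondent)."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) and of the per-respondent totals.
    item_variances = [variance(column) for column in zip(*responses)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def describe_alpha(alpha):
    """Label alpha using the thresholds cited in the text."""
    if alpha >= 0.9:
        return "excellent"
    if alpha >= 0.8:
        return "good"
    if alpha >= 0.7:
        return "acceptable"
    if alpha >= 0.6:
        return "acceptable for group assessment"
    return "below the cited thresholds"


# Hypothetical Likert responses: three respondents, two items.
print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))
print(describe_alpha(0.8612))
```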

Part 2, “the self-assessment of learning behaviors,” is divided into two categories that represent the “before” and “after” of student attendance in the IBDL class. Quantitative data were collected from distance students using a Likert scale. Cronbach’s alpha is 0.8612 and 0.9487 for the “before the class” and “after the class” data, respectively, both higher than the values suggested by Wasserman and Bracken (2003) and George and Mallery (2003).

Part 2, “the self-assessment of learning behaviors,” is divided into the following two categories: the “before” and “after” of student attendance in the regular class. Cronbach’s alpha for “before” (0.6870) is higher than the value suggested by Wasserman and Bracken (2003). Cronbach’s alpha for “after” (0.9332) exceeds the values suggested by Wasserman and Bracken (2003) and George and Mallery (2003). A number of items could have been deleted without any substantial negative effect on Cronbach’s alpha, but none were deleted.

The value of reliability might depend on the items in each part of the questionnaire, such that a greater number of items results in higher reliability. Moreover, participants may not answer properly, especially if there are many items. Hence, each part of the questionnaire should include only those items that are necessary to evaluate the research hypotheses. Therefore, validity and reliability play an important role in the questionnaire.

There were 31 students in the distance classroom (17 males, 14 females), with an age range of 18–21 years (38.70%, 48.39%, 9.68%, and 3.23% were 18, 19, 20, and 21 years old, respectively). In all, 54.84% had more than 6 years of internet experience (5–6 years, 22.58%; 3–4 years, 19.35%; and 1–2 years, 3.23%), and 16.13% had experience with distance learning.

There were 28 students in the regular classroom (19 males, 9 females), with an age range of 17–22 years (3.70%, 51.90%, 25.90%, and 3.70% were 17, 18, 19, and 20–22 years old, respectively; 11.10% did not report their age). In all, 55.60% had 5–6 years of internet experience, and 44.40% had 3–4 years.

The three groups of hypotheses were tested using the Pearson chi-square test, independent samples t-test, Levene’s test for equality of variances, frequency, and percentage. The Pearson chi-square test was used to test the independence of variables. The independent samples t-test measured the difference in mean scores between distance students and regular students. Levene’s test for equality of variances checked whether the variances of the two groups’ responses were equal. Frequency and percentage were used to determine the number and percent of responses received.
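As an illustration of the independence test, the sketch below computes the Pearson chi-square statistic for a contingency table from observed and expected counts. It is a stdlib-only stand-in for the SPSS procedure used in the study; the function names and the counts are hypothetical. The p-value helper covers only the 2 × 2 case (one degree of freedom), where the chi-square tail probability has a closed form.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for a table."""
    row_totals = [sum(row) for row in table]
    column_totals = [sum(column) for column in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * column_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(column_totals) - 1)
    return statistic, df


def p_value_one_df(statistic):
    """Upper-tail probability of a chi-square variable with 1 df."""
    return math.erfc(math.sqrt(statistic / 2))


# Hypothetical 2 x 2 table: gender (rows) by pass/fail (columns).
statistic, df = chi_square_independence([[10, 20], [20, 10]])
print(statistic, df, p_value_one_df(statistic) < 0.05)
```

A significance value above 0.05 means the null hypothesis of independence is retained, which is the decision rule applied throughout the results.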

The university grading system consisted of eight grades: A (80–100), B+ (75–79), B (70–74), C+ (65–69), C (60–64), D+ (55–59), D (50–54), and F (0–49). The percentages of distance students who received each grade are as follows: A, 10%; B+, 3%; B, 3%; C+, 19%; C, 7%; D+, 19%; D, 32%; and F, 7%. The corresponding percentages of regular students are as follows: A, 4%; B+, 4%; B, 4%; C+, 7%; C, 11%; D+, 18%; D, 7%; F, 30%; and “Withdraw,” 15%. The majority of distance students received a D (32%), D+ (19%), or C+ (19%), and the majority of regular students received an F (30%), D+ (18%), or C (11%). Several regular students withdrew, whereas no distance students withdrew. The results of normal distribution testing, however, indicated that two outliers in the distance classroom had extremely high scores (≥ 87). Their data were evaluated separately so that the analysis of the remaining distance student scores remained valid and normally distributed. Therefore, the questionnaire responses in the final analysis comprised 29 distance and 23 regular students. Hence, the normal distribution hypothesis H1.10 is accepted, with significance values of 0.080 for distance students and 0.200 for regular students.
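The grade bands listed above can be applied mechanically to raw scores. The following is a minimal sketch of that mapping and of the percentage-per-grade tally; the names `GRADE_BANDS`, `to_grade`, and `grade_percentages` and the score list are hypothetical. It assumes each band starts at its stated lower bound, so a fractional score such as 79.5 would fall in B+.

```python
from collections import Counter

# Lower bound of each grade band as listed in the text (scores run 0-100)
GRADE_BANDS = [(80, "A"), (75, "B+"), (70, "B"), (65, "C+"),
               (60, "C"), (55, "D+"), (50, "D"), (0, "F")]

def to_grade(score):
    """Map a numeric score to its letter grade."""
    if not 0 <= score <= 100:
        raise ValueError("score must lie between 0 and 100")
    for lower, grade in GRADE_BANDS:
        if score >= lower:
            return grade

def grade_percentages(scores):
    """Percentage of students in each grade, in band order."""
    counts = Counter(to_grade(s) for s in scores)
    return {grade: 100 * counts[grade] / len(scores) for _, grade in GRADE_BANDS}

# Hypothetical scores, not study data
scores = [92, 78, 66, 58, 52, 51, 47, 50]
percentages = grade_percentages(scores)
print(percentages)
```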

The hypothesis of the effectiveness of VClass IBDL consists of seven sub-hypotheses. Five null hypotheses (H1.10, H1.30, H1.50, H1.60, and H1.70) were accepted for both distance and regular students. For H1.30, the Pearson chi-square test was used. The significance values (Asymp. Sig. [2-sided]) for distance and regular students were 0.615 and 0.175, respectively. Therefore, H1.30 was accepted. Regarding H1.50, the significance values (Asymp. Sig. [2-sided]) for distance students before and after class attendance were 0.127 and 0.490, respectively. For regular students, the significance values (Asymp. Sig. [2-sided]) before and after class attendance were 0.515 and 0.259, respectively. Additionally, the number of times questions were asked in class was tested. The Pearson chi-square significance values for distance and regular students were 0.581 and 0.913, respectively. Therefore, H1.50 was accepted. For H1.60, the F-value was 1.878, and the significance value, computed using Levene’s test for equality of variances, was 0.177, which is higher than the assigned significance level. The t-test for equality of means indicated that the t-value was 1.076 and the significance value (Sig. [2-tailed]) was 0.287. Thus, H1.60 was accepted. H1.70 was accepted on the basis of the significance values of the individual learning behaviors of (1) distance students (before [0.317] and after [0.562] class attendance, and number of times questions were asked in class [0.063]) and (2) regular students (before [0.515] and after [0.259] class attendance, and number of times questions were asked in class [0.749]).
Nevertheless, the low value for the number of times questions were asked in the distance students’ class (0.063) may be attributable to the average number of questions put to the instructor and to the inefficient use of the communication channels provided to the distance students and the instructor.
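The H1.60 result pairs Levene's test with an independent samples t-test: when Levene's significance value exceeds the assigned level, equal variances are assumed and the pooled t statistic is read. The following is a minimal sketch of the two statistics. It assumes the mean-centred form of Levene's test, which may not be the variant the study's software used, and the function names and scores are hypothetical. Significance values need the F and t distributions from a statistics package and are not computed here.

```python
from statistics import mean

def pooled_t(a, b):
    """Equal-variance independent-samples t statistic and its degrees of freedom."""
    mean_a, mean_b = mean(a), mean(b)
    ss_a = sum((x - mean_a) ** 2 for x in a)
    ss_b = sum((x - mean_b) ** 2 for x in b)
    dof = len(a) + len(b) - 2
    pooled_var = (ss_a + ss_b) / dof
    std_err = (pooled_var * (1 / len(a) + 1 / len(b))) ** 0.5
    return (mean_a - mean_b) / std_err, dof

def levene_f(a, b):
    """Levene's F for two groups: one-way ANOVA on absolute deviations from group means."""
    mean_a, mean_b = mean(a), mean(b)
    dev_a = [abs(x - mean_a) for x in a]
    dev_b = [abs(x - mean_b) for x in b]
    da, db = mean(dev_a), mean(dev_b)
    grand = mean(dev_a + dev_b)
    # Between-group sum of squares (1 degree of freedom for two groups)
    between = len(a) * (da - grand) ** 2 + len(b) * (db - grand) ** 2
    # Within-group sum of squares of the absolute deviations
    within = sum((d - da) ** 2 for d in dev_a) + sum((d - db) ** 2 for d in dev_b)
    return between / (within / (len(a) + len(b) - 2))

# Hypothetical scores for two small groups, not study data
group_a = [52, 54, 56, 58]
group_b = [51, 52, 53, 54]
f_value = levene_f(group_a, group_b)
t_value, dof = pooled_t(group_a, group_b)
print(round(f_value, 3), round(t_value, 3), dof)
```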

Of the remaining hypotheses, the null hypothesis H1.20 was accepted for distance students but rejected for regular students. Thus, the proportion of distance students who could pass to the next subject was 85% or greater, whereas the proportion of regular students was less than 85%. For hypothesis H1.40, differences in the overall averages of each group’s individual learning behaviors (e.g., before and after attending the class, asking questions in class) were evaluated. The null hypothesis H1.40 was rejected for both student groups. The indicators of each learning behavior were then tested to identify differences between the groups. For “before the class,” the t-test for equality of means indicated that the frequency of class attendance (0.002), downloading learning material (0.000), and reviewing lessons (0.001) differed between distance and regular students. That is, regular students engaged more frequently in nearly every learning behavior. For “after the class,” the t-test for equality of means showed that reviewing lessons (0.000) and asking the instructor questions on live chat (0.023) or the discussion board (0.022) also differed between the groups. Again, regular students engaged more frequently in nearly every learning behavior. Distance students were provided with downloadable video lectures of each class in VClass LMS and a tutor who could answer questions on the communication channel, although they utilized these resources only occasionally. Overall, the average number of questions directed at the instructor during the semester was approximately two per distance student and five per regular student.

Eight hypotheses on the distance student experience with IBDL through the VClass e-education platform were tested. Four null hypotheses (H2.10, H2.30, H2.50, and H2.70) were accepted, and the remaining hypotheses (H2.20, H2.40, H2.60, and H2.80) were rejected. For the accepted hypotheses, the Pearson chi-square test was used. The results indicated that the significance values (Asymp. Sig. [2-sided]) of H2.10, H2.30, H2.50, and H2.70 were 0.217, 0.670, 0.206, and 0.433, respectively. For hypotheses H2.20 and H2.60, the independence of prior internet experience and the overall average usage of each communication channel (live chat, e-mail, and the discussion board) was tested. The results indicated that as distance students’ internet experience increased, their use of communication channels with the instructor and tutor decreased. In relation to hypotheses H2.40 and H2.80, the use of live chat and the discussion board differed by gender, as male distance students utilized these channels more often than their female counterparts.

In the qualitative evaluation, the distance students expressed the following opinions and made suggestions in their responses to the open-ended questions:

Assessing the effectiveness of IBDL pedagogy requires an intensive plan. Designing an evaluation process is essential and needs to be done before working on assessment. The proposed evaluation process serves as a set of assessment guidelines. This study focuses on the instruction process of IBDL in the live mode component of the evaluation process. This component represents the input, process, and output elements. Each element indicates items concerning the instruction process. This allows the evaluator to see the items involved in the instruction process as a whole and to identify the outputs required for an evaluation. Once the requisite outputs are rendered, an assessment of effectiveness can be conducted. This study used statistical methods to assess the effectiveness of IBDL. The starting point of the effectiveness part is the measurements component. Input data on the measurements (e.g., test scores and completed questionnaires) were processed using statistical methods. Indicators (e.g., grade and assigned significance level) indicate the output of the effectiveness part, which is the assessments component. To obtain the evaluation result, the researcher analyzes and interprets the assessment results. Then the evaluation results are sent to stakeholders to improve and approve the IBDL. An evaluation should be conducted not only at the end of the study (summative) but also during the ongoing process (formative) in order to improve the process and obtain better results at the end of the study. Regardless of whether the evaluation results are positive, they provide useful information for improving and developing the IBDL pedagogy.

Hence, after the instructional process of IBDL in live mode produced its outputs, statistical methods were used to assess the value of the systematically acquired information. Two areas for improvement were found, which support the second and third objectives of this study. First, according to the second objective, distance students engaged in learning behavior only occasionally. Surprisingly, the effectiveness of learning was the same for both groups, possibly owing to the helpfulness of the tutor, who scheduled extra classes for the distance students before examination day; the distance students’ requests for assistance from instructors at the branch campus; and their fear of failing the exam, which motivated them to study. Second, CMC, such as live chat, e-mail, and discussion boards, was sparingly used by distance students; most of the use was by male distance students. Moreover, social media and MSN Messenger were utilized as communication channels to reduce the problems of distance and stress in communication between instructors, students, and peers. As described in several studies, teachers are highly respected and typically considered knowledgeable and authoritative because traditional Thai culture places a very high value on learning. Additionally, Thai students may not feel as comfortable asking questions and/or voicing their opinions as Western students; however, they listen attentively, take notes very carefully, and are well behaved (Nguyen, 2008; Pagram & Pagram, 2006). Moreover,

[t]he high power distance characterizing Thai culture shapes the behavior of administrators, teachers, students and parents to show unusually high deference (greng jai) toward those of senior status in all social relationships which results in a pervasive, socially legitimated expectation that decisions should be made by those in positions of authority. (Hallinger & Kantamara, 2000)

These factors may explain why the quality of interaction between the instructors and students was insufficient and must be improved. However, a social network, Facebook, was used by the instructor and students. Ultimately, these two areas provide valuable feedback that stakeholders can analyze to improve IBDL in live mode.

In conclusion, assessments of the effectiveness of VClass IBDL did not show significant differences between regular and distance students. The use of educational technologies over the internet may help address teacher shortages and tight budgets while maintaining equal instructional quality at the main and branch campuses. VClass is one example of an educational and communication technology that can be used in live mode. However, other educational technologies must also be studied. Moreover, social networking sites such as Facebook play an important role in the communication between instructor, tutor, and students when they are separated by time and place. Furthermore, social media provide an opportunity for students to communicate under usage agreements for academic purposes, which may also narrow the gap created by the high respect that students have for instructors, particularly in Asia. Therefore, these two observations are useful to stakeholders in developing, approving, and improving VClass IBDL, especially by integrating Facebook into the VClass e-education platform. Additionally, the interactivity in synchronous virtual classroom methodologies may be integrated into IBDL in live mode, as suggested by certain studies (Martin, Parker, & Deale, 2012), to motivate distance students to learn and obtain immediate feedback from the instructors. Nevertheless, other IBDL technologies and CMC should be considered in IBDL pedagogy.

The author wishes to acknowledge the Office of the Higher Education Commission, Thailand, for a support grant under the program Strategic Scholarships for Frontier Research Network for the Joint Ph.D. Program; and Rajamangala University of Technology Lanna for the partial support given to her during her study at the Asian Institute of Technology (AIT), Thailand. Additionally, the author would like to express her greatest appreciation to the three experts for their valuable suggestions during the design of the evaluation instruments.

Abraham, T. (2002). Evaluating the virtual management information systems classroom. Journal of Information Systems Education, 13(2), 125-134.

Baker, R. K. (2003). A framework for design and evaluation of internet-based distance learning courses phase one-framework justification, design and evaluation. Online Journal of Distance Learning, 6(2).

Bloom, B. S., Hastings, J. T., & Madaus, G. F. (1971). Handbook on formative and summative evaluation of student learning. New York: McGraw-Hill.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297-334.

Distributed Education Center, Asian Institute of Technology. (1998). VClass. Retrieved from VClass web site: http://www.vclass.net

Ferguson, L., & Wijekumar, K. (2000). Effective design and use of web-based distance learning environments. Professional Safety, 45(12), 28-32.

George, D., & Mallery, P. (2003). SPSS for Windows step by step: A simple guide and reference, 11.0 update (4th ed.). Boston: Allyn and Bacon.

Hallinger, P., & Kantamara, P. (2000). Educational change in Thailand: Opening a window onto leadership as a cultural process. School Leadership and Management, 189-205.

Hornby, A. S. (1995). Oxford advanced learner’s dictionary of current English (5th ed.). (J. Crowther, K. Kavanagh, & M. Ashby, Eds.). England: Oxford University Press.

Huitt, B., Hummel, J., & Kaeck, D. (2001). Assessment, measurement, evaluation, and research. Retrieved from edpsycinteractive.org website: http://www.edpsycinteractive.org/topics/intro/sciknow.html

Koch, J. V., & McAdory, A. R. (2012). Still no significant difference? The impact of distance learning on student success in undergraduate managerial economics. Journal of Economics and Finance Education, 11(1), 27.

Krejcie, R. V., & Morgan, D. W. (1970). Determining sample size for research activities. Educational and Psychological Measurement, 30, 607-610.

Levine, S. J. (2005). Evaluation in distance education. Retrieved from LearnerAssociates.net website: http://www.learnerassociates.net/debook/evaluate.pdf

Likert, R. (1932). A technique for the measurement of attitudes. Archives of Psychology, 22(140), 1-55.

Martin, F., Parker, M. A., & Deale, D. F. (2012). Examining interactivity in synchronous virtual classrooms. The International Review of Research in Open and Distance Learning, 13(3).

Nguyen, T. (2008). Thailand: Cultural background for ESL/EFL teachers. Retrieved from http://www.hmongstudies.org/ThaiCulture.pdf

Pagram, P., & Pagram, J. (2006). Issue in e-learning: A Thai case study. The Electronic Journal of Information Systems in Developing Countries, 26(6), 1-8.

Passerini, K., & Granger, M. J. (2000, January). A developmental model for distance learning using the Internet. Computers & Education, 34(1), 1-15.

Pongvichai, S. (2011). Data analysis in statistics with computer: Emphasis on research. Bangkok, Thailand: Chulalongkorn University.

Punpinij, S. (2010). Research techniques in social science. Bangkok: Witthayaphat.

Romiszowski, A., & Mason, R. (1996). Computer-mediated communication. In D. Jonassen (Ed.), Handbook of research for educational communications and technology (pp. 438-456). New York: Macmillan.

Shachar, M., & Neumann, Y. (2003). Differences between traditional and distance education academic performances: A meta-analytic approach. International Review of Research in Open and Distance Learning, 4(2), 1-20.

Sims, R., Dobbs, G., & Hand, T. (2002). Enhancing quality in online learning: Scaffolding planning and design through proactive evaluation. Distance Education, 23(2), 135-148.

Smith, P. L., & Dillon, C. L. (1999). Comparing distance learning and classroom learning: Conceptual considerations. The American Journal of Distance Education, 13(2), 6-23.

Thomas, H. F., Simmons, R. J., Jin, G., Almeda, A. A., & Mannos, A. A. (2005). Comparison of student outcomes for a classroom-based vs. an internet-based construction safety course. The American Society of Safety Engineers, 2(1).

Trochim, W. M. (2006, October 20). The research methods knowledge base (2nd ed.). Retrieved from The Research Methods Knowledge Base website: http://www.socialresearchmethods.net/kb/

Wasserman, J. D., & Bracken, B. A. (2003). Psychometric characteristics of assessment procedures. In I. B. Weiner, J. R. Graham & J. A. Naglieri (Eds.), Handbook of psychology: Assessment psychology (Vol. 10, p. 55). Canada: John Wiley and Sons, Inc.

Zhao, Y., Lei, J., Yan, B., Lai, C., & Tan, H. S. (2005). What makes the difference? A practical analysis of research on the effectiveness of distance education. Teachers College Record, 107(8), 1836-1884.