Elizabeth Archer, Yuraisha Bianca Chetty, and Paul Prinsloo

University of South Africa

Student success and retention are primary goals of higher education institutions across the world. The cost of student failure and dropout in higher education is multifaceted and includes, among other things, the loss of revenue, prestige, and stakeholder trust for both institutions and students. Interventions to address this are complex and varied. While the dominant thrust has been to investigate academic and non-academic risk factors, thereby applying a “risk” lens, equal attention should be given to exploring the characteristics of successful students, which expands the focus to include “requirements for success”.

Based on a socio-critical model for understanding student success and retention, the University of South Africa (Unisa) initiated a pilot project to benchmark successful students’ habits and behaviours using a tool employed in business settings, namely Shadowmatch®.

The original focus was on finding a theoretically valid measure of habits and behaviours in order to examine the critical aspect of student agency in the socio-critical model. Although this was not the focus of the pilot, concerns regarding the use of a commercial tool in an academic setting overshadowed the process. This paper provides insights into how academic-business collaboration could allow an institution to be more dynamic and flexible in supporting its student population.

Keywords: Distance education; student success; academic-business collaboration; habits and behaviours; benchmarking

Student success is of major concern to a number of stakeholders in higher education, including governments, policy makers, faculty, and students. An integral element in many models of student success and retention is the impact of students’ habitus as a framework of “lasting, transposable dispositions which, integrating past experiences, functions at every moment as a matrix of perceptions, appreciations, and actions” (Bourdieu in Berger, 2000, p. 99). Incorporating data on habits and behaviours into student success models provides an additional lens which speaks to personal attributes, and this in turn strengthens a student-centred approach.

The University of South Africa (Unisa) developed a comprehensive framework for enhancing student success, based on a socio-critical understanding of student success and retention (Subotzky & Prinsloo, 2011). Central to this understanding is the role of students’ agency, involving the habits and behaviours that flow from their habitus. The student success framework relies on a suite of instruments, as well as systems data, to inform policy and practice. Very little information was, however, available on the habits and behaviours which form part of non-academic risk and success factors. The Shadowmatch® pilot project was launched to provide this data by benchmarking successful students’ habits and behaviours. This paper first examines the epistemological ‘fit’ between the socio-critical model for understanding and predicting student success and Shadowmatch®. We then map the pilot of Shadowmatch® in a higher education environment, highlighting the complexities as well as the benefits of such a higher education-corporate collaboration.

This article focuses on these complexities and benefits of a higher education-corporate collaboration. The details of the Shadowmatch® report and evaluation are addressed in an internal procurement report. This paper is a process article reflecting on how the institution engaged with the pilot with regard to negotiating access and permission. The Shadowmatch® pilot implementation forms part of the background and contextualisation for this discussion. The research therefore has the potential to be transferred to any corporate-academic collaboration beyond the bounds of benchmarking the habits and behaviours of successful students.

If student success is considered to be complex and the result of mostly non-linear, mutually constitutive factors and relations, it follows that it is time-consuming and possibly costly to develop in-house tools to map aspects or specific relations within the context of student success as a complex phenomenon. The aim of this paper is to examine the complexities and benefits of piloting a commercial product in the higher education environment as an alternative to an institution developing its own instrument.

Specifically, this paper examines the piloting of Shadowmatch®, a tool used in the corporate and commercial sector to determine the profiles of effective employees as a basis for planning professional development to increase organisational effectiveness and impact. The instrument was implemented, and its reporting adapted, to profile successful students in various higher education qualifications. This represented a shift towards focusing on requirements for success as opposed to merely identifying students at risk. The aim was to use these profiles of success to increase students’ self-awareness and self-efficacy in order to encourage behaviours that will significantly increase their chances of success.

The literature review provides a brief overview of some of the theoretical models and research on student success and retention, before discussing the conceptual framework by Subotzky and Prinsloo (2011) employed at Unisa for the profiling of students. As this research specifically examines the use of a commercial product to profile students in a higher education institution, we will also briefly refer to the perceived tensions regarding higher education and its response to demands from and its relations to the corporate world (Apple, 2009; Blackmore, 2001; Giroux, 2003; Haigh, 2008; Lynch, 2006).

Attempts to profile students according to potential and risk of failure should be seen against the backdrop of concerns regarding the ‘revolving door’ and low throughput rates in higher education, and specifically in distance education. Student success and failure have been explained, theorised, and researched by various authors (Bean, 1980; Kember, 1989; Spady, 1970; Tinto, 1975, 1988; Subotzky & Prinsloo, 2011). Since the early models explaining student success in face-to-face higher education (Spady, 1970; Tinto, 1975, 1988), there have been numerous models and theoretical frameworks addressing student failure and dropout (Baird, 2000; Bean, 1980, 1982; Cabrera, Nora, & Castaneda, 1992; Johnson, 1996; Kember, 1998). Though these early works on student success are appreciated, more recent research (e.g., Braxton, 2000) questions many of their assumptions and theoretical constructs regarding student success, retention, and failure (Kuh & Love, 2000; Tierney, 2000; Prinsloo, 2009; Subotzky & Prinsloo, 2011).

Subotzky and Prinsloo (2011) classify the different approaches to understanding student success and retention according to the context in which these approaches and models were developed: geopolitical (developing or developed world), theoretical/philosophical/ideological/disciplinary, the type of institution and delivery (e.g., face-to-face, blended, or distance education), and the methodology used (e.g., structural models, bivariate probability models, or logistic regression analysis).

Compared to research in face-to-face contexts, there is less published research regarding student success and retention in distance education contexts. A range of authors (Kember, 1989; Kember, Lee, & Li, 2001; Prinsloo, 2009; Subotzky & Prinsloo, 2011; Woodley, 2004) therefore point to some unique considerations with regard to conceptualising student success in distance education contexts and question the direct transferability of traditional models and theories to distance education contexts.

A further complicating factor affecting the transferability of different models of student success and retention, irrespective of context, is the increasing “unbundling” or “unmooring” of traditional higher education (Watters, 2012) and a blurring of the boundaries between traditional notions and definitions of face-to-face education versus distance education and e-learning (Hanna, 1998; Woo, Gosper, McNeill, Preston, Green, & Phillips, 2008). The fact that both traditional face-to-face institutions and distance education institutions are incorporating various elements of e-learning further complicates the formal, traditional distinctions between face-to-face and distance education delivery models. New forms of educational delivery therefore disrupt traditional models and theories of student success and retention (Clow, 2013; Daniel, 2012).

Though Tinto’s interactionalist theory/model enjoys “near paradigmatic” stature (Braxton, 2000, p. 2), it “is partially supported and lacks empirical internal consistency” (Braxton, 2000, p. 3). Braxton and Lien (2000) therefore state that Tinto’s model needs revision. Prinsloo (2009) also points to a number of other concerns regarding the transferability of current models for understanding and predicting student success and retention, such as the fact that the “tangible and intangible impacts of economic influences” on student persistence in developing world contexts “remain under-researched” (p. 85). “The impact of economic considerations as a psychological stressor may in a developing world context play an even more important role than in other contexts” (Prinsloo, 2009, p. 85). There is also evidence that “student throughput and retention operate differently for students of different ages, and that different factors influence early leavers and later leavers” (Prinsloo, 2009, p. 86).

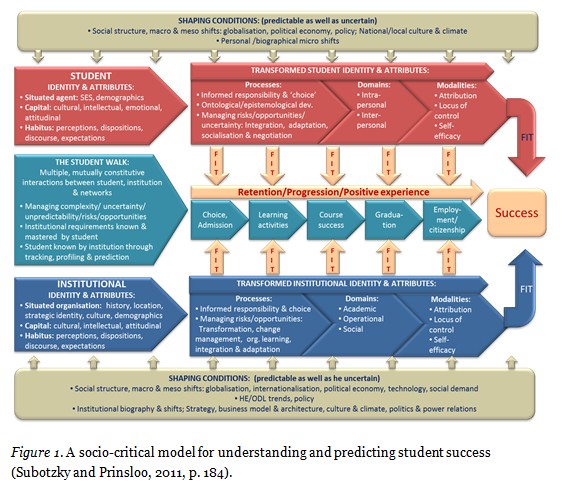

Based on various criticisms of current models for understanding and predicting student success and retention (e.g., Braxton, 2000), Subotzky and Prinsloo (2011) follow Tinto (2006) in proposing a socio-critical model that makes sense of student retention as a complex, layered, and dynamic web of events. This model provides the conceptual basis of efforts at Unisa to predict student success and identify students at risk.

Subotzky and Prinsloo (2011, pp. 184-188) propose several key constructs informing their socio-critical model:

1. Situated agents: student and institution: Although student and institutional attributes and behaviours are strongly shaped by the structural conditions of their historical, geographical, socio-economic, and cultural backgrounds, they enjoy relative freedom within these constraints to develop their attributes in pursuit of success. Students’ identity and attributes include, inter alia, not only various forms of capital, but also dispositions such as intellectual maturity, the ability to think critically, and various other competencies and abilities (Prinsloo, 2009). The institution’s identity entails not only the impact and shape of its location, but also different forms of capital and habitus.

2. The student walk encompasses the numerous ongoing interactions between student and institution throughout the student’s journey. These interactions are “mutually constitutive” and influenced by the situatedness of both agents. In this context, institutional and administrative efficiencies play a crucial role. Engagement during the student walk is mutually transformative, and reciprocal knowledge and understanding are key to the “fit” between the individual student’s aspirations and dispositions and the culture and academic offerings of the institution.

3. Capital: Both students and the institution acquire “various forms of capital partly through the reproductive mechanisms embedded in their socio-economic and cultural contexts and partly through their own individual or institutional/organizational initiatives”.

4. Habitus: The mutual and dynamic engagement between students and the institution is shaped by habitus as “the complex combination of perceptions, experiences, values, practices, discourses, and assumptions that underlies the construction of our worldviews”.

5. Domains and modalities of transformation, which include inter- and intrapersonal domains on the side of students, and academic, social, and operational domains on the side of the institution. Both the student and institutional domains are shaped by locus of control, attribution, and self-efficacy.

6. A broad definition of success which includes not only course success and graduation, but also student and institutional satisfaction, “successful fit between students’ graduate attributes and the requirements of the workplace, civil society, and democratic, participative citizenship” and course success without graduation.

Subotzky and Prinsloo (2011, p. 179) state that factors impacting on student success and retention should be understood as comprising three distinct, but overlapping levels: “individual (academic and attitudinal attributes, and other personal characteristics and circumstances), institutional (quality and relevance of academic, non-academic, and administrative services), and supra-institutional (macro-political and socio-economic factors)”. Figure 1 illustrates these different levels. The “student walk” signifies different interactions in the nexus between the individual student (at the top) and the institution (at the bottom). The “student walk” is, however, not only shaped by the two main protagonists, but also by supra-institutional (macro-political and socio-economic) factors.

Subotzky and Prinsloo (2011) state that “Many, if not most, international models interpret success narrowly as the outcome of students assimilating into prevailing institutional cultures and epistemologies” (p. 190). The increasing diversity of students and the socio-economic challenges inherent in developing countries imply that student success is far more complex than students simply fitting in, as mutual responsibility is a precondition.

Profiling students in higher education is not a recent phenomenon. Admission criteria to higher education and to specific programmes were among the earliest means through which some students were deemed to show the most potential for success, whilst other students, not meeting the criteria, were excluded. One of the unique characteristics of distance education has always been its less stringent admission requirements. One particular gestalt of distance education, open distance learning (ODL), claims to provide ‘open’ education, depending on geopolitical contexts, legislation, and funding frameworks. Recent developments in higher education, such as the massive open online course (MOOC) phenomenon, have highlighted the role of open admission requirements in student success and retention (Clow, 2013), as well as the potential to harvest and analyse students’ digital data in order to offer customised curricula, assessment, and support. Students are therefore not only profiled according to demographic and historical educational data; increasingly, these profiles are enhanced by real-time data such as time-on-task, number of logins, and so on (Booth, 2012; Long & Siemens, 2011; May, 2011; Oblinger, 2012; Siemens, 2011; Wagner & Ice, 2012).

There are many examples of the role and impact of effective student profiling in improving not only student success and retention, but also the allocation of resources (Chansarkar & Michaeloudis, 2001; Diaz & Brown, 2012; Kabakchieva, 2012; Wardley, Bélanger, & Leonard, 2013). Discourses regarding the scope, methods, and impact of profiling in other fields, such as surveillance studies, have shown that profiling opens up a number of ethical dilemmas (Knox, 2010; Mayer-Schönberger, 2009; Mayer-Schönberger & Cukier, 2013; Marx, 1998). Very little research has been done in the context of higher education on the possible negative impact of profiling through institutional research or learning analytics. As Slade and Prinsloo (2013) and Prinsloo and Slade (2013) indicate, the harvesting and use of students’ digital data raise a number of ethical questions and dilemmas for which most higher education institutions are ill-prepared (also see Diaz & Brown, 2012; Knox, 2010; Pounder, 2008).

The provocations offered by Boyd and Crawford (2013) provide a sobering perspective regarding the potential of learning analytics and our profiling to provide authentic, dynamic, and holistic pictures of students. One of the dangers of profiling is that we assume these profiles to be objective and accurate, whilst our algorithms are based on social constructs embedded in current thinking, understanding, and values (Johnson, 2013). While our understanding of the complexities in student success and retention increases as more and more data become available, Boyd and Crawford (2013, p. 6) warn that “…bigger data are not always better data”.

At present, much of the profiling of students focuses on identifying students who are at risk and/or students who need specific support and interventions (Diaz & Brown, 2012). While some examples address both students at risk and students with potential, most institutional profiling strategies are currently aimed at students at risk (Braxton, 2000; Diaz & Brown, 2012).

Modern higher education has always been embedded in existing power relations, whether with organised religion, national governments, industry, the market, or a range of other stakeholders. Authors such as Barnett (2000), Blackmore (2001), Diefenbach (2007), Giroux (2003), Kezar (2004), Washburn (2005), Willmot (2003), and others differ regarding how higher education should respond to the increasing impact of neo-liberal capitalism, managerialism, and the corporatisation of higher education. Amidst concerns regarding “academic capitalism” (Diefenbach, 2007) and claims that higher education has become the “handmaiden” of the corporate world (Giroux, 2003), there are also concerns regarding the increasing outsourcing of essential services and functions (Gupta, Herath, & Mikouiza, 2005; Watters, 2012; Wood, 2000) and the (over-)reliance of higher education institutions on commercially licensed products, and the way these, directly or indirectly, shape curricula, pedagogies, and identity (Beckton, 2012; Cribb & Gewirtz, 2013). Metaphors describing the ways in which higher education is changing refer to the “unbundling and unmooring” of higher education (Watters, 2012) and the “hollowed-out university” (Cribb & Gewirtz, 2013).

Though outsourcing is not a new trend in higher education, the scope and impact of outsourcing are increasing (Gupta et al., 2005; Wood, 2000). Many higher education institutions increasingly use a number of commercial products and licences (e.g., commercial learning management systems and software) or establish partnerships with commercial interests in fulfilling their mandate. Gupta et al. (2005) describe a range of reasons for this increased outsourcing and commercialisation of services including the “slowing economy, declining students’ enrollments, state budget cuts, decreased funding for research, and rapidly increasing costs of higher education” (p. 396).

Although using products and services developed for and by corporate entities is an established practice, this case study, which reports on the use of a commercial tool developed for the corporate environment to profile students, raised a number of concerns and issues (such as student privacy, alignment, and data ownership), to which we return later. Many of these issues and concerns can be traced back to complexities and perceptions regarding not only the established relationship between higher education and commercial entities, but also the increasing dependence originating in licensing regimes.

This research falls into the broader category of instrumental case studies (Rule & John, 2011; Thomas, 2011; Yin, 2009), in which an issue or concern is studied through one bounded case in order to illustrate that issue. In this case, the researchers aimed to gain insight into the institutional processes, challenges, and opportunities involved in adapting a commercial model for assessing students’ potential for success against empirically established benchmarks. The data were analysed using pragmatic eclecticism (Saldaña, 2009), meaning that the researchers remained open during the initial data collection and coding in order to determine the most appropriate coding methods. A number of first cycle (preliminary) coding methods were combined with second cycle coding (categorical, conceptual, and/or theoretical organisation). During the first cycle of coding and recoding, data were analysed according to meaningful units of text, with codes generated through an inductive process and allocated to each unit individually. Once first cycle coding was completed, codes were clustered into meaningful groups to generate themes. The paper employed data from

The methodological norms of this study were established through trustworthiness as first suggested by Guba and Lincoln (1985, pp. 289-331). Trustworthiness was established by ensuring transferability, credibility, dependability, and confirmability. This approach relies on ‘thick’ descriptions to allow other researchers to transfer results to their own context. Prolonged engagement, referential adequacy, and peer debriefing were employed to establish the credibility of the research.

The Shadowmatch® pilot is discussed in this section. The pilot process provided an appropriate platform for examining the academic-corporate collaboration. The data from the implementation itself are not the focus of this article. Instead, the article draws attention to the process of selecting and acquiring Shadowmatch® for the pilot, as this pre-implementation phase produced most of the data relating to the tensions of academic-business collaboration. The second part of this section deals with the pilot implementation.

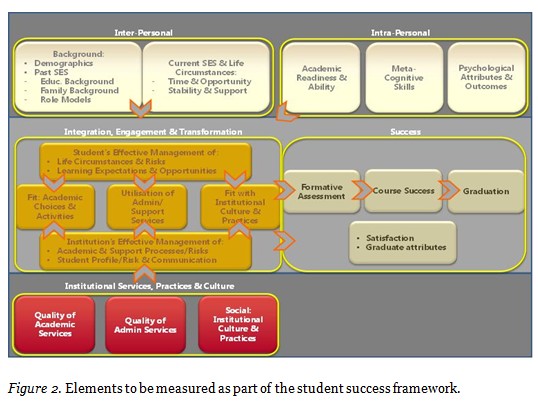

Unisa assesses student risk and success through the student success framework, which was conceptualised and developed by Subotzky and Prinsloo (2011) and is underpinned by a socio-critical model for understanding and predicting student success. The detailed model was discussed earlier. The student success framework relies on a suite of instruments, as well as systems data to inform policy and practice. Figure 2 illustrates the various elements which need to be measured as part of the framework.

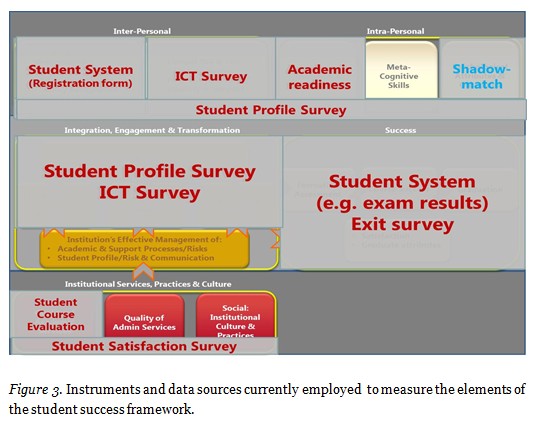

Figure 3 illustrates the tools and instruments that were being used to assess specific elements of the framework prior to the pilot of Shadowmatch®. However, it became evident that very little information was available on the habits and behaviours which form part of non-academic risk and success factors. In 2011, Unisa therefore expressed a need for a well-developed and tested instrument for assessing students’ habits and behaviours.

For this reason, in March 2011 it was proposed at the Senate Teaching and Learning Committee (STLC) that expressions of interest be invited from providers. The proposal to invite expressions of interest was approved by STLC on 28 March 2011. The Department of Institutional Statistics and Analysis (DISA) was tasked with the project and, through rigorous scanning of the local and international higher education environment, identified Shadowmatch® as a possible solution. The provider needed to offer an online solution suited to the ODL environment, preferably with an automated, individualised reporting and feedback system to support students in improving their habits and behaviours for success. The scanning indicated that only a single local provider, namely De Villiers, Bester and Associates (DBA), offered this, through the Shadowmatch® tool. On 26 September 2011, the Shadowmatch® proposal was presented to STLC. The proposal was approved in principle and referred to the Student Success Forum (SSF) for further recommendations regarding the implementation and rollout of the tool. The SSF met on 24 October 2011 to consider the STLC resolutions and agreed to a pilot phase covering selected qualifications in order to test the tool’s appropriateness for the Unisa environment.

Facilitated by DISA, DBA made a formal presentation of the tool to SSF, resulting in a productive session during which constructive insights and suggestions were shared in advance of the implementation of the project. The purpose of the pilot was to investigate the suitability of Shadowmatch® for measuring non-academic risk in the Unisa context. The pilot phase began with the subscription to the Shadowmatch® tool, followed by the formal contractual agreement between DBA as the provider and Unisa. The latter was facilitated by DISA in collaboration with Procurement and Legal Services. Furthermore, given that the procurement of software solutions falls within the ICT domain, the executive director of ICT was consulted during this phase and provided oversight during the finalisation of the contract for the subscription to the tool. Throughout the pilot subscription and implementation process, regular reports were made to SSF, along with additional engagement about the process.

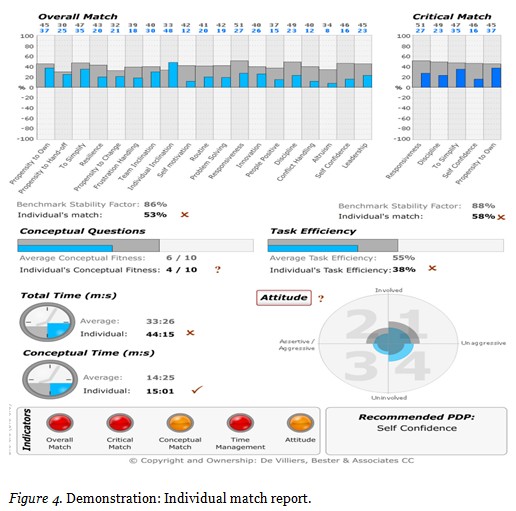

The Shadowmatch® instrument assesses a range of habits and behaviours. These include a propensity to own, a propensity to hand off, the ability to simplify, resilience, a propensity to change, frustration handling, team inclination, individual inclination, self-motivation, routine, problem solving, responsiveness, innovation, people positive, discipline, conflict handling, altruistic, self-confidence, and leadership. In addition, the instrument assesses attitudes and locates them within four quadrants: Quadrant 1 represents those who are “Involved-Unaggressive”; Quadrant 2 those who are “Involved-Assertive/Aggressive”; Quadrant 3 those who are “Uninvolved-Assertive/Aggressive”; and Quadrant 4 those who are “Uninvolved-Unaggressive”. The benchmark of the habits and behaviours of top-performing students is shown in grey, with the individual student’s score indicated in blue (see Figure 4).

Three phases characterised the Shadowmatch® pilot at Unisa. Phase 1 involved assessing successful students’ habits and behaviours in order to establish benchmarks for comparison with the broader student population. Colleges at the institution were requested to nominate qualifications for inclusion in the benchmarking process, with the intention of obtaining a representative sample of both students and qualifications. The sampling frame comprised students who had graduated in these qualifications, with graduation regarded as the indicator of a successful student. The top 25 graduates from the previous year (2011) in each selected qualification were identified for participation in the assessment of habits and behaviours by means of a questionnaire. To ensure fairness and consistency, top performers were identified using three criteria: a) graduation from the qualification in 2011, b) the highest average across all modules within the course, and c) completion of the qualification within twice the minimum time for completion. To boost the sample for the benchmarks, registered students who had performed well in recent examinations were also targeted for inclusion in the assessment. Benchmarks for habits and behaviours per qualification were formed based on the assessments of the top performers in those qualifications. Response rates made it possible to establish benchmarks for 170 qualifications across the colleges; qualifications which yielded poor or no responses were excluded.
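The three selection criteria amount to a simple filter-and-rank procedure. The Python sketch below illustrates one reading of them; it is not the procedure Unisa actually ran. The `Graduate` record, its field names, and the `select_top_performers` function are hypothetical. Completing the qualification “within twice the minimum time” is interpreted as taking no more than twice the prescribed minimum, and the supplementary sample of currently registered students is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Graduate:
    # Hypothetical record; field names are illustrative assumptions only.
    student_id: str
    qualification: str
    graduation_year: int
    module_average: float     # average mark across all modules in the course
    years_to_complete: float
    minimum_years: float      # minimum prescribed time for the qualification


def select_top_performers(graduates, qualification, year=2011, top_n=25):
    """Return up to top_n graduates of one qualification meeting criteria a) to c)."""
    eligible = [
        g for g in graduates
        if g.qualification == qualification
        and g.graduation_year == year                    # criterion a): graduated in 2011
        and g.years_to_complete <= 2 * g.minimum_years   # criterion c): within twice minimum time
    ]
    # Criterion b): rank by highest average across all modules.
    eligible.sort(key=lambda g: g.module_average, reverse=True)
    return eligible[:top_n]


if __name__ == "__main__":
    cohort = [
        Graduate("a", "BCom", 2011, 80.0, 3, 3),
        Graduate("b", "BCom", 2011, 90.0, 7, 3),  # excluded: took longer than 6 years
        Graduate("c", "BCom", 2010, 95.0, 3, 3),  # excluded: graduated in 2010
        Graduate("d", "BCom", 2011, 70.0, 6, 3),
    ]
    print([g.student_id for g in select_top_performers(cohort, "BCom")])  # ['a', 'd']
```

Filtering on criteria a) and c) before ranking on b) ensures that a high average cannot compensate for graduating in the wrong year or taking too long to complete.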

Following the fieldwork to establish habit and behaviour benchmarks for top-performing Unisa students, the same assessment was circulated to current Unisa students in the selected qualifications for completion. This constituted Phase 2 of the pilot. The aim was to determine the fit, or match, between students’ current habits and behaviours (taking into account the qualification for which they were registered) and those of high-performing students in the same qualifications, as determined through the benchmarking process. Phases 1 and 2 of the pilot yielded a combined total of 9,500 responses.

Results proved insightful in that the analysis profiled individual students in a range of qualifications in terms of their “match” to the benchmark for those qualifications. Based on this match, each student was provided with an automatically generated personal development plan for the habits or behaviours requiring development or intervention.

The third and final phase of the pilot involved ascertaining how student participants experienced the Shadowmatch® pilot, which included the Shadowmatch® instrument as well as the individual reports and the personal development plans which were the key outputs. This was determined through an evaluation instrument developed by the researchers and administered as an online survey. A total of 723 students participated in the evaluation. Results indicated that students’ own reports of their experiences were generally positive, with relatively few students reporting that the experience was not beneficial. These survey results cannot be generalised to the broader group of students who participated in the pilot given the response rate. However, the results did provide insights which the university was able to use to determine if the pilot phase could be extended for an additional year.

The case study highlights the complexity of adapting and integrating an existing commercial product into a higher education environment. Although a thorough theoretical exploration and validation of Shadowmatch® had established its potential, a central concern of both academics and students was the use of a tool developed in a corporate environment in a higher education setting. Academics expressed the view that the higher education context is so unique that a tool developed for the corporate environment would be of little use. This manifested in concerns about how success is defined in the academic context. Some academics perceived that the profile of habits and behaviours would be similar across all qualifications, thus negating the need for an instrument that allows for individual benchmarks of success for different qualifications. Others perceived the definition of success to be so intrinsic to each qualification that no instrument would be able to capture appropriate benchmarks. Academics also expressed the view that the business language intrinsic to the tool was not suitable for an academic environment and would require customisation. For students, the academic versus corporate pull manifested in distrust of the origin of the assessments. Despite multiple communications through various channels (learning management system, SMS, e-mail), some students still questioned the authenticity of the assessment and feared that it was a form of phishing (fraudulently obtaining personal information).

The tensions of employing an instrument developed for the corporate environment were also expressed in relation to ownership of the data and the instrument, as well as to validity and reliability. As the instrument was designed by an external company, the academic community was concerned about the potential for that company to publish based on the data. The institution wished to have the opportunity to publish from the data, and this required a contractual agreement with Shadowmatch®. Furthermore, as the instrument was the property of Shadowmatch®, its validity and reliability had been established by an external auditing company. Many academics expressed distrust in the validity, reliability, and cultural appropriateness of the instrument for Unisa students, despite the instrument having been designed for the South African context, with a 10-year track record of success and existing reports on its validity, reliability, and cross-cultural appropriateness.

The negotiation also included discussions around data security and data access. Data security is a high priority for Unisa, and using an external provider to assess students' non-academic risk was perceived as a threat to it. All aspects of data transfer, the handling of student contact information, and data storage had to be thoroughly scrutinised and formalised in the contract before the pilot project could proceed. In this regard, the institution's departments of Information and Communication Technology and Legal Services played an instrumental advisory role. The Shadowmatch® system can be implemented either as a web application with external hosting or integrated into an institution's own systems with local server hosting. The choice between local and off-site hosting brought to the fore the tension between the reduced cost, reduced complexity, and flexibility of off-site hosting on the one hand, and the desire to protect data and keep functions in-house on the other.

Despite the central role of student agency in determining student success and retention (Subotzky & Prinsloo, 2011), much of the resistance to implementing an instrument designed for the corporate sector in the higher education environment stemmed from academics' fear that the two environments were distinctly different. The view was that the requirements of the two environments were so unique that a tool designed for one could not yield positive results in the other. Perhaps two assumptions underpin this view. Firstly, it illustrates how, even though the tool focuses on comparing students with established benchmark requirements for success, academics still evaluated the tool on the assumption that the environment must be assessed and mapped in order to understand student success. Secondly, the perceived disjuncture between the requirements for success in the labour market and those in the academic environment suggests an assumption that the two sets of requirements are distinct. This raises the question of how a higher education institution is to develop employability in its graduates if this disjuncture persists.

Despite the tensions around the fit of the Shadowmatch® instrument in a higher education setting, the institution eventually supported a pilot project to test the value and appropriateness of the instrument. This decision was largely influenced by the placement of the initiative within the institution's overarching student success framework, referred to earlier. Students' habits and behaviours resonated with the non-academic risk factors catered for in the framework, and were regarded as one of many variables that could, over time, help not only to identify students at risk but also to identify the requirements for success in particular qualifications. Researching these habits and behaviours, in this case with the Shadowmatch® instrument, could enable appropriate support interventions to be implemented to enhance students' chances of success.

As discussed earlier, students' own reports of their experiences were generally positive. These experiences were examined through an evaluation instrument developed by the researchers to determine how students experienced the Shadowmatch® instrument, as well as the individual reports and personal development plans that were its key outputs. These results, combined with a comprehensive evaluation of the benefits and challenges of the pilot project and various analyses, will determine future support for the use of the Shadowmatch® instrument at the institution. Within the context of researching student habits and behaviours as a key element of the student success framework, this evaluation will also indicate whether a continued partnership between a corporate organisation and an open distance and e-learning higher education institution is indeed possible and beneficial.

The piloting of the Shadowmatch® instrument at Unisa provided the opportunity to implement a ready-made solution for assessing student habits and behaviours as part of the student success framework. It offered a fresh approach in two respects. Firstly, it examined the profiles of successful students in addition to identifying students at risk. Secondly, rather than trying to establish the requirements for success in a qualification directly, it examined successful candidates to identify clusters of habits and behaviours that contributed to their success. An unexpected outcome of the process was that it exposed some of the underlying tensions and embedded assumptions at the institution, ranging from perceptions of academic versus employability demands to the tension between maintaining control over data and the flexibility, reduced costs, and minimal demands on internal ICT systems offered by external providers.

The pilot project, undertaken by a support department within the institution, provided a lens through which to examine how a corporate-academic partnership could allow an institution to be more dynamic and flexible in supporting its student population. It also offers a glimpse into the complexities that higher education institutions may face in a dynamic landscape where technology is changing so rapidly that support functions will increasingly need to rely on external providers in order to deliver on their mandate of effectively supporting the core functions of teaching and learning, research, and community engagement.

Apple, M. W. (2009). Some ideas on interrupting the right: On doing critical educational work in conservative times. Education, Citizenship and Social Justice, 9, 87-101.

Baird, L. L. (2000). College climate and the Tinto model. In J. M. Braxton (Ed.), Reworking the student departure puzzle (pp. 62-80). Nashville, TN: Vanderbilt University Press.

Barnett, R. (2000). University knowledge in an age of supercomplexity. Higher Education, 40, 409-422.

Bean, J. P. (1980). Dropouts and turnover: The synthesis and test of a causal model of student attrition. Research in Higher Education, 12(2), 155-187.

Bean, J. P. (1982). Student attrition, intentions and confidence: Interaction effects in a path model. Research in Higher Education, 17(4), 291-320.

Beckton, J. (2012). Public technology: Challenging the commodification of knowledge. In H. Stevenson, L. Bell & M. Neary (Eds.), Towards teaching in public: Reshaping the modern university (pp. 118-132). London: Continuum.

Berger, J. B. (2000). Optimising capital, social reproduction, and undergraduate persistence: A sociological perspective. In J. M. Braxton (Ed.), Reworking the student departure puzzle (pp. 95-124). Nashville, TN: Vanderbilt University Press.

Blackmore, J. (2001). Universities in crisis? Knowledge economies, emancipatory pedagogies, and the critical intellectual. Educational Theory, 51(3), 353-370.

Booth, M. (2012, July 18). Learning analytics: The new black. EDUCAUSE Review Online. Retrieved from http://www.educause.edu/ero/article/learning-analytics-new-black

Boyd, D., & Crawford, K. (2013). Six provocations for big data. Retrieved from http://papers.ssrn.com/sol3/papers.cfm?abstract_id=1926431

Braxton, J. M. (Ed.). (2000). Reworking the student departure puzzle. Nashville, TN: Vanderbilt University Press.

Cabrera, A. F., Nora, A., & Castaneda, M. B. (1992). The role of finances in the persistence process: A structural model. Research in Higher Education, 33(5), 571-593.

Chansarkar, B. A., & Michealoudis, A. (2001). Student profiles and factors affecting performance. International Journal of Mathematical Education in Science and Technology, 32(1), 97-104. DOI: 10.1080/00207390120974.

Clow, D. (2013). MOOCs and the funnel of participation. Proceedings of the Third International Conference on Learning Analytics and Knowledge, LAK’13 (pp. 185-189). Retrieved from http://dl.acm.org/citation.cfm?id=2460332

Daniel, J. (2012). Making sense of MOOCs: Musings in a maze of myth, paradox and possibility. Journal of Interactive Media in Education. Retrieved from http://www-jime.open.ac.uk/jime/article/viewArticle/2012-18/html

Diaz, V., & Brown, M. (2012). Learning analytics: A report on the ELI focus session. Retrieved from http://net.educause.edu/ir/library/PDF/ELI3027.pdf

Diefenbach, T. (2007). The managerialistic ideology of organisational change management. Journal of Organisational Change Management, 20(1), 126-144.

Geertz, C. (1975). The interpretation of cultures. New York: Basic Books.

Giroux, H. A. (2003). Selling out higher education. Policy Futures in Education, 1(1), 179-311.

Guba, E. G., & Lincoln, Y. S. (1985). Understanding and doing naturalistic inquiry. Beverly Hills: Sage Publications.

Hanna, D. E. (1998). Higher education in an era of digital competition: Emerging organizational models. Journal of Asynchronous Learning Networks, 2(1), 66-95.

Haigh, M. (2008). Internationalisation, planetary citizenship and Higher Education Inc. Compare: A Journal of Comparative and International Education, 38(4), 427-440.

Johnson, G. M. (1996). Faculty differences in university attrition: A comparison of the characteristics of arts, education and science students who withdraw from undergraduate programmes. Journal of Higher Education Policy and Management, 18(1), 75-91.

Johnson, J. A. (2013). From open data to information justice. Paper presented at the Midwest Political Science Association Annual Conference, April 2013 (Version 1.1). Retrieved from http://dx.doi.org/10.2139/ssrn.2241092

Kabakchieva, D. (2012). Student performance prediction by using data mining classification algorithms. International Journal of Computer Science and Management Research, 1(4), 686-690.

Kember, D. (1989). A longitudinal-process model of drop-out from distance education. The Journal of Higher Education, 60(3), 278-301.

Kember, D., Lee, K., & Li, N. (2001). Cultivating a sense of belonging in part-time students. International Journal of Lifelong Education, 20(4), 326-341.

Kezar, A. J. (2004). Obtaining integrity? Reviewing and examining the charter between higher education and society. The Review of Higher Education, 27(4), 429-459.

Knox, D. (2010). A good horse runs at the shadow of the whip: Surveillance and organizational trust in online learning environments. The Canadian Journal of Media Studies. Retrieved from http://cjms.fims.uwo.ca/issues/07-01/dKnoxAGoodHorseFinal.pdf

Kuh, G. D., & Love, P. G. (2000). A cultural perspective on student departure. In J. M. Braxton (Ed.), Reworking the student departure puzzle (pp. 196-212). Nashville, TN: Vanderbilt University Press.

Long, P., & Siemens, G. (2011). Penetrating the fog: Analytics in learning and education. EDUCAUSE Review, September/October, 31-40.

Lynch, K. (2006). Neo-liberalism and marketisation: The implications for higher education. European Educational Research Journal, 5(1), 1-17.

Marx, G. T. (1998). Ethics for the new surveillance. The Information Society: An International Journal, 14(3), 171-185. DOI: 10.1080/019722498128809

May, H. (2011). Is all the fuss about learning analytics just hype? Retrieved from http://www.loomlearning.com/2011/analytics-schmanalytics

Mayer-Schönberger, V. (2009). Delete: The virtue of forgetting in the digital age. Princeton, NJ: Princeton University Press.

Mayer-Schönberger, V., & Cukier, K. (2013). Big data: A revolution that will transform how we live, work, and think. New York, NY: Houghton Mifflin Publishing Company.

Oblinger, D. G. (2012). Let’s talk analytics. EDUCAUSE Review, July/August, 10-13.

Pounder, C. N. M. (2008). Nine principles for assessing whether privacy is protected in a surveillance society. Identity in the Information Society, 1, 1-22. DOI: 10.1007/s12394-008-0002-2

Prinsloo, P. (2009). Modelling throughput at Unisa: The key to the successful implementation of ODL. Retrieved from http://uir.unisa.ac.za/handle/10500/6035

Prinsloo, P., & Slade, S. (2013). An evaluation of policy frameworks for addressing ethical considerations in learning analytics. Learning Analytics and Knowledge 2013 – Leuven, Belgium, 8-12 April 2013. Retrieved from https://dl.acm.org/citation.cfm?id=2460344

Rule, P., & John, V. (2011). Case study research. Pretoria: Van Schaik Publishers.

Saldana, J. (2009). The coding manual for qualitative researchers. London: SAGE Publications Ltd.

Siemens, G. (2011). Learning analytics: Envisioning a research discipline and a domain of practice. Paper presented at 2nd International Conference on Learning Analytics and Knowledge (LAK12), Vancouver. Retrieved from http://learninganalytics.net/LAK_12_keynote_Siemens.pdf

Slade, S., & Prinsloo, P. (2013). Learning analytics: Ethical issues and dilemmas. American Behavioral Scientist, 57(1), 1509–1528.

Spady, W. G. (1970). Dropouts from higher education: An interdisciplinary review and synthesis. Interchange, 1(1), 64-85.

Subotzky, G., & Prinsloo, P. (2011). Turning the tide: A socio-critical model and framework for improving student success in open distance learning at the University of South Africa. Distance Education, 32(2), 177-19.

Thomas, G. (2011). How to do your case study: A guide for students and researchers. London, UK: SAGE.

Tierney, W. G. (2000). Power, identity, and the dilemma of college student departure. In J. M. Braxton (Ed.), Reworking the student departure puzzle (pp. 213-234). Nashville, TN: Vanderbilt University Press.

Tinto, V. (1975). Dropout from higher education: A theoretical synthesis of recent research. Review of Educational Research, 45, 89-125.

Tinto, V. (1988). Stages of departure: Reflections on the longitudinal character of student leaving. The Journal of Higher Education, 59(4), 438-455.

Wagner, E., & Ice, P. (2012, July 18). Data changes everything: Delivering on the promise of learning analytics in higher education. EDUCAUSE Review Online. Retrieved from http://www.educause.edu/ero/article/data-changes-everything-delivering-promise-learning-analytics-higher-education

Wardley, L. J., Bélanger, C. H., & Leonard, V. M. (2013). Institutional commitment of traditional and non-traditional-aged students: A potential brand measurement? Journal of Marketing for Higher Education, 23(1), 90-112. DOI: 10.1080/08841241.2013.810691

Washburn, J. (2005). University, Inc.: The corporate corruption of higher education. New York, NY: Basic Books.

Watters, A. (2012, September 5). Unbundling and unmooring: Technology and the higher ed tsunami [Web log post]. EDUCAUSE Review Online. Retrieved from http://www.educause.edu/ero/article/unbundling-and-unmooring-technology-and-higher-ed-tsunami

Willmot, H. (2003). Commercialisation of higher education in the UK: The state, industry and peer review. Studies in Higher Education, 28(2), 129-141. DOI: 10.1080/0307507032000058127.

Woo, K., Gosper, M., McNeill, M., Preston, G., Green, D., & Phillips, P. (2008). Web-based lecture technologies: Blurring the boundaries between face-to-face and distance learning. Research in Learning Technology, 16(2), 81-93.

Woodley, A. (2004). Conceptualising student dropout in part-time distance education: Pathologising the normal? Open Learning, 19(1), 48-63.

Yin, R. K. (2009). Case study research. Design and methods (4th ed.). London, UK: Sage.