Joohi Lee

University of Texas Arlington, USA

This exploratory research project used a survey to investigate graduate students’ satisfaction levels with online learning in relation to human factors (professor/instructor and instructional associate) and design factors (course structure and technical aspects). A total of 81 graduate students (master’s students who majored in math and science education) enrolled in an online math methods course (Conceptual Geometry) participated in this study. According to the results of this study, student satisfaction level is closely associated with clear assignment guidelines, rubrics, and constructive feedback. In addition, student satisfaction level is related to the professor’s (or course instructor’s) knowledge of course materials.

Keywords: Education; math education; distance education; satisfaction factors

There has been clear evidence of rapid growth in online learning in U.S. higher education (Christensen, Horn, & Caldera, 2011; Nagel, 2010). According to Ambient Insight Research (2009), it is projected that more than 80% of all higher education students will take at least one online course by 2014. About 65% of higher education institutions in the U.S. have reported that they offer online education options (Allen & Seaman, 2005). Considering the current needs of online programs at the higher education level, the critical concern is how to maximize student learning by providing quality online learning experiences. Studies have shown that affective domains of students such as motivation and/or satisfaction are significantly related to their learning outcomes (Bryant et al., 2005; Eom, Wen, & Ashill, 2006). More importantly, when they are satisfied with online learning, students tend to be more motivated in learning and motivation leads to context (Graham & Scarborough, 2001). It is essential to closely investigate what elements and factors impact the level of student satisfaction. When students have a high level of satisfaction in learning, they obtain a high level of learning (e.g., Alavi, Wheeler, & Valacich, 1995). To provide students with an effective learning environment, Zhu (2012) recommends that student satisfaction levels should be taken into consideration in designing online courses and building online environments. Thus, it is important to investigate what factors are associated with student satisfaction level for online learning in order to maximize student learning. In this study, factors specific to an online mathematics methods course were investigated.

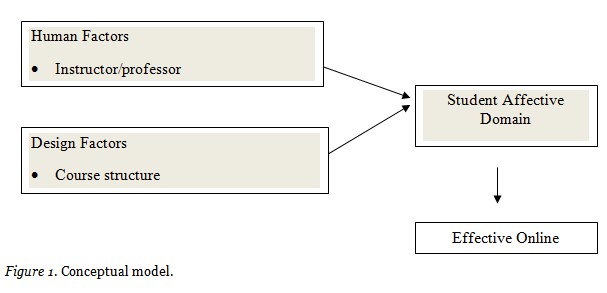

In this study, the researcher used a conceptual model based on the framework suggested by Piccoli, Ahmad, and Ives (2001) that both human and design factors influence student affective and cognitive domains and ultimately lead to effective learning. Figure 1 presents the conceptual model of this proposed study based on Piccoli et al.’s work.

In high-quality online courses, students learn as much as in face-to-face courses (Meyer, 2002). As Figure 1 presents, high-quality online courses are composed of high-quality human (Osmanoglu, Koc, & Isikal, 2013; Russell et al., 2009) and design factors (Nuangchalerm, Prachagool, & Sriputta, 2011). Empirical studies have shown how these factors impact student outcomes or adult learner outcomes. For example, Russell et al. (2009) investigated the effects of online courses for middle school algebra teachers and found significant impacts on teachers’ mathematical understanding, pedagogical beliefs, and instructional practices associated with human factors, in this case math education instructors. When instructors provided teachers with a highly supportive environment, the teachers tended to show positive outcomes in teaching and learning. O’Dwyer et al. (2007) emphasized quality communication as a way to build supportive virtual learning environments for students. They found that the lack of communication between students and instructors negatively affected student satisfaction levels. Osmanoglu et al. (2013) examined the use of online video case discussions with 19 pre-service and 7 in-service mathematics teachers and found that effective communication among peers and with instructors promoted pre- and in-service teachers’ mathematical process skills (problem solving, connection, mathematical communication, reasoning and proof, and representation). In particular, the outcomes were shown to be more significant when the instructor participated in discussions.

In addition, design factors impact student learning (Nuangchalerm, Prachagool, & Sriputta, 2011). Nuangchalerm and his colleagues found that students’ online learning experience was less effective due to the difficulty of access to technical assistance. Ku et al. (2011) reported similar findings with 21 graduate students (in-service teachers), who felt less satisfied with online learning because they lacked the skills to use the technology.

This study focused on the affective domain, specifically student satisfaction level, as associated with these two factors. For the purpose of this study, human factors were the course instructor/professor and the instructional associate (IA)/graduate assistant (GA), and design factors were course structure and technical aspects.

The following specific research questions were addressed in this study:

A cross-sectional study was conducted in which a survey was administered to participants one time. A cross-sectional study is known as an effective method for providing a snapshot of the current behaviors, attitudes, and perspectives of participants (Gay, Mills, & Airasian, 2009). This method was used to identify which factors are associated with student satisfaction level in online learning.

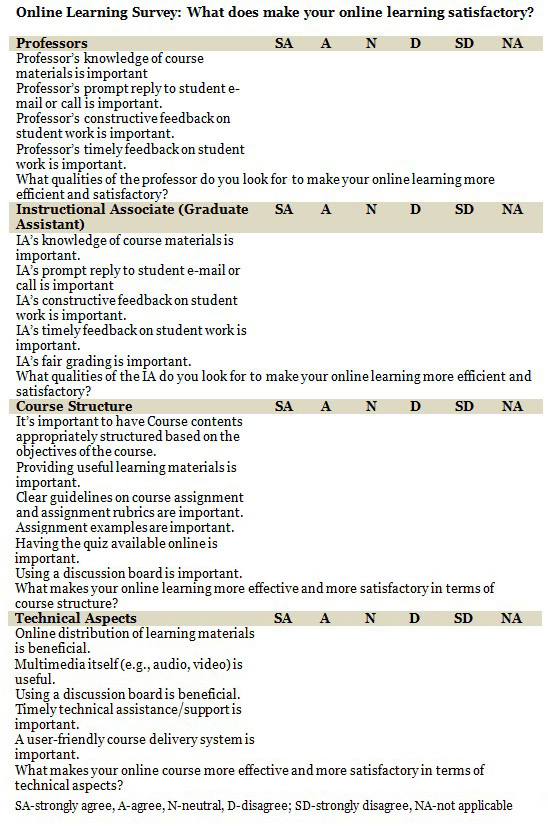

For the purpose of this study, the researcher designed a questionnaire and conducted a pilot study in order to measure reliability of the survey instrument. The survey comprises a total of 24 questions, including four open-ended questions. The items were developed by reviewing online learning design-based research (DBR). DBR is an emerging paradigm which includes pedagogy and tools to help develop and sustain learning environments (Norton & Hathaway, 2008). The major principle of DBR is to produce the most effective learning environment by applying continuous cycles of design, enactment, analysis, and redesign (Cobb, 2001). Following the principle of DBR, the survey measures human and design factors associated with student satisfaction levels. In this study, human factors involve the professor and the instructional associate (IA) or graduate assistant (GA). Design factors include course structure and technical aspects. The survey consists of the following: five items to measure participants’ thoughts on the professor, six items to measure participants’ thoughts on the IA/GA, six items to measure participants’ thoughts on course structure, and seven items to measure participants’ thoughts on technical aspects. Twenty questions are answered on a six-point Likert scale ranging from 1 = strongly agree to 6 = strongly disagree, and four are open-ended questions (see Appendix for instrument).

According to Cox (1996), the term “reliability” is explained by the word “consistency.” When an observed score is strongly consistent, the instrument has high reliability. To measure reliability of the instrument, a pilot study was conducted with 10 graduate students. The results were analyzed using Cronbach’s alpha, which is commonly used to test the reliability of questionnaire items (Cronk, 1999). In the pilot, only the 20 quantitative questions were used to calculate the reliability of the survey. Cronbach’s alpha on all 20 items was .92, which indicates very high reliability.
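For readers unfamiliar with the statistic, the following is a minimal sketch of how Cronbach’s alpha can be computed from a respondents-by-items table of ratings, using the standard formula alpha = (k / (k - 1)) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name `cronbach_alpha` and the ratings in `demo_responses` are hypothetical illustrations; they are not the study’s pilot data, and the study itself does not describe its software procedure beyond citing Cronk (1999).

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items table of numeric ratings."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every respondent must answer every item")

    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings (1 = strongly agree ... 6 = strongly disagree).
# These are made-up numbers for illustration, not the study's pilot data.
demo_responses = [
    [1, 2, 1],
    [2, 2, 3],
    [4, 5, 4],
    [5, 6, 6],
]

print(round(cronbach_alpha(demo_responses), 2))
```

In this sketch, values close to 1 arise when respondents who rate one item high tend to rate the other items high as well, which is the sense of “consistency” described above.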

A total of 81 graduate students (master’s students who majored in math and science education) enrolled in an online math methods course participated in this study. Eighty of the participants were geographically spread out across the United States; one was from Korea. The participants were composed of 68 female and 13 male students. All participants reported that they identified themselves as a teacher or educator who directly worked in the field of math and/or science education. Seventy-two participants were school teachers from pre-K through grade 12. Others worked as math and/or curriculum coordinators and science, technology, engineering, and math (STEM) curriculum consultants.

The program in which the participants were enrolled aims to provide a master’s degree in Math and Science Education to in-service teachers or educators from pre-K through grade 12. Students who are admitted to the program take a total of 36 credit hours to complete the degree.

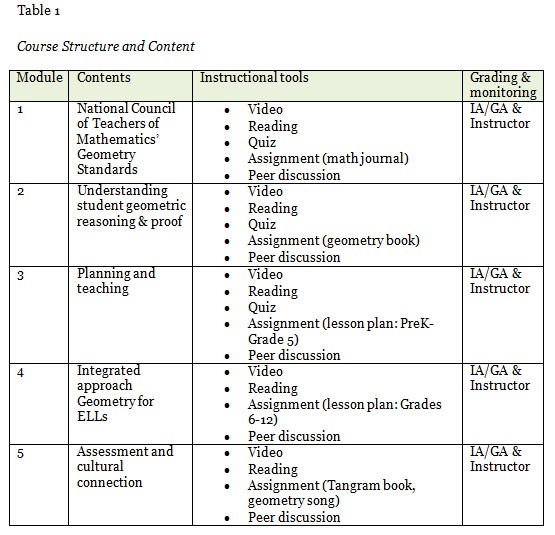

The course (Conceptual Geometry) was offered once a year. Its curriculum focused on how to promote pedagogical content knowledge of geometric reasoning and conceptual understanding of geometry for teachers/educators from pre-K to grade 12. This course is required for all graduate students who are admitted to the program. It is five weeks long, with five modules (one module for each week). The instructor of the course utilized Blackboard (a web-based course management system). Table 1 presents the structure and instructional design of the course.

In order for students to meet course expectations, they viewed video-taped lectures and PowerPoint presentations, completed required readings, took quizzes, completed assignment(s), and participated in theme-threaded forum discussions on a weekly basis. Students were required to submit their assignments on Blackboard by midnight of every Sunday. IA/GAs were required to complete grading by every Wednesday and to monitor student discussions on a daily basis. The course instructor continuously monitored IA/GA work and graded student work if necessary.

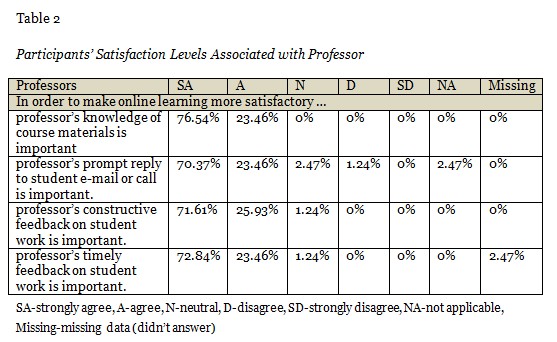

Table 2 shows how participants’ satisfaction levels were associated with a professor/instructor. More than 95% of participants strongly agreed or agreed that their online learning was more satisfactory if their professors had the following characteristics and behaviors: knowledge of the course materials, prompt reply, and constructive and timely feedback on student work. The highest percentage of participants (about 77%) strongly agreed that the professor’s knowledge of course materials is important to make their online learning satisfactory.

One open-ended question asked participants about the qualities they expected of a course professor that would make online learning more satisfactory. Participants’ feedback is categorized into the following four major themes: grading, knowledge, clear guidelines/expectations, and availability/prompt reply. Illustrative examples of participant feedback reflecting these themes are presented below.

Grading

• I look for a professor to provide valuable feedback on assignments.

• I look for constructive feedback due to the need to learn what I can improve and what I am doing well on.

• I look for a professor that is consistent with grading and knows the course material.

• Someone gives assignments that help me grow as a teacher

• When I receive an assignment back, I first look for the comments. I learn best by my mistakes.

• I also appreciate comments on my work I turn in so I know I am meeting the expectations of the professor and the course. Suggestions are great so I can better improve my work in the future as well.

• On time with grading and sticking to deadlines

Knowledge

• The professor has knowledge of the new math CCSS.

• Knowledge of current issues in math education

• I also like to have a professor that is highly knowledgeable in the area of study presented in the course.

• Strong knowledge of the subject being taught

• The professor should demonstrate knowledge about the course content and have the ability to present the content in a streamlined, intentional manner.

Clear Guidelines/Expectations

• Clarity of assignment details, due dates, grading scheme (rubrics)

• I like instructors who understand the uniqueness of attending classes online. I prefer instructors who clearly state the assignment objectives and are readily available to answer questions.

• I look for professors that are very clear and concise in laying out their expectations for the course. I also look for clear and thorough descriptions of assignments. Most of all, I look for professors who have an equally high expectation of themselves as they have of their students.

• Clear when communicating (E-mails don’t result in further questions from the student)

• I look for clear guidelines of the teacher’s expectations, i.e. rubrics, and try to follow those as closely as possible to achieve the highest mark that is indicative of my personal best.

• Clear, concise explanations of expectations

Availability/Prompt Reply

• Timely e-mail responses to questions

• I expect the professor to be available for questions, organized, and prompt with replies.

• Particular qualities I look for in a professor of an online class include the professor being available in a timely manner if I have a question about something.

• Open communication with quick response to students

• To me, the most important quality is that if I need information, or have a question, the professor gets back to me quickly. I don’t get to see my professors face to face, so email is important and with the fast pace of a 5 week class, there is little time to waste. All of my professors so far have gotten back to me within a few hours of any emails I have sent.

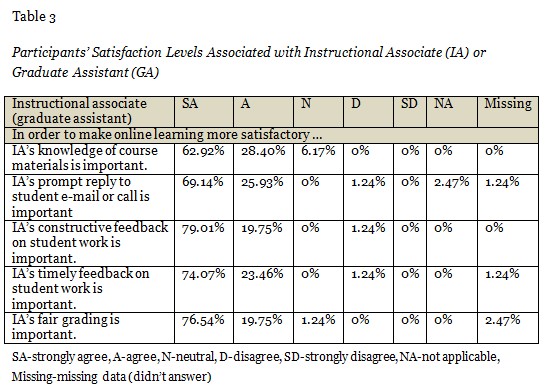

Table 3 presents participants’ perceptions of the qualities of an instructional associate (IA) or graduate assistant (GA) that make online learning efficient. The highest percentage of students strongly agreed that the IA’s constructive feedback on student work is important to make their learning satisfactory. More than 95% of students either strongly agreed or agreed that their online learning was more satisfactory when the IA/GA had the following qualities and behaviors: knowledge of the course materials, prompt reply, and constructive and timely feedback on student work.

One open-ended question asked participants about the qualities they expected in an IA/GA to make online learning more satisfactory. Participants’ feedback is categorized into the following three themes: grading along with feedback, accessibility/prompt response, and knowledge of course. Examples illustrating these themes are presented below.

Grading along with Feedback

• Grading in a timely manner with pertinent feedback

• I look for constructive comments that can be used to improve the quality of my assignments when the IA is responsible for grading.

• Clear feedback on assignments, especially when the score is less than 100%. Always provide reasons for deducting points (even if it’s just 1).

• Clarification of assignment details, due dates, grading scheme (rubrics)

• Timely grading (within 7 days)

Accessibility/Prompt Response

• I think that a quality that the IA should possess is accessibility. This person needs to be able to be reached at all times and expect that he/she will be contacted at any moment.

• Willing to answer questions, readily available

• Someone who responds to you in a timely fashion and is helpful

• I want my IA’s to email me back quickly as well. I’ve noticed more often than not that it is my IA I communicate with the most, so a timely response to my questions is vital to my success in this program.

• Quick, appropriate feedback and answers to questions

• Quick responses & meaningful feedback on assignments

Knowledge

• The same knowledge and quick communication that is expected from the professor

• The IA is knowledgeable of the course content and materials and has a clear understanding of the direction the professor is taking with the course.

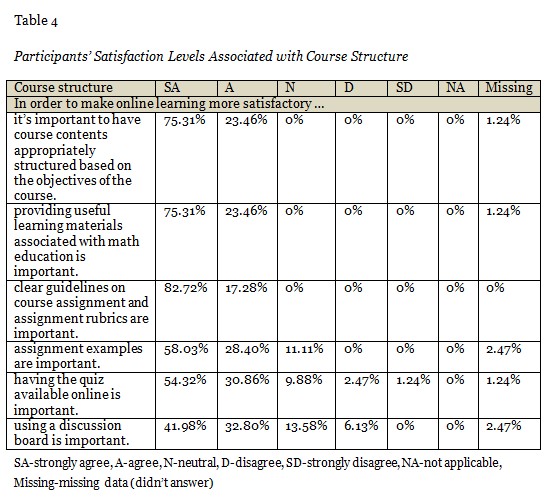

Table 4 presents participants’ perspectives on efficient online learning associated with course structure. The highest percentage of students (82.72%) strongly agreed that clear guidelines for course assignments and assignment rubrics are important to making their learning satisfactory. Students also strongly agreed that course contents should be well aligned with course objectives and should provide useful learning materials for math education.

One open-ended question asked participants what aspects of course structure would make their online learning more efficient and satisfactory. Participants’ feedback is categorized into the following three themes: clear assignment rubrics, useful teaching resources, and module organization. Examples of participant responses that illustrate these themes are presented below.

Clear Assignment Rubrics

• The rubric and assignment expectations have to be clear.

• I like having the rubrics to guide my efforts. Also examples of assignments have been very useful in clarifying what the final product will look like.

• It is important to have clear requirements for assignments and grading.

• The online course is more effective to me if I can see examples of the assignments.

• Clearly outlining assignments and due dates

• Clear expectations, rubrics and examples of work provided by the professor are important so I am able to meet those expectations in my assignments.

Useful Teaching Resources

• …useful material available to benefit the students. I use the information to go back and refer to as a resource.

• A variety of meaningful learning tools and e-math materials allows me to learn more about the course content and be more successful in completing course work.

• I want the e-activities to be beneficial to me in this degree. I want to be able to learn something more than I already knew.

Module (Course) Organization

• Checklist with due dates is very beneficial. Weekly or either for the whole course where we can check each box when it is done.

• Courses should also have a clearly outlined pacing guide and due dates for each week presented at the beginning of the course.

• The syllabus and organization is essential. Each detail makes the course more effective and satisfactory. Helping us look ahead to future assignments is crucial for our success and maintaining our teaching jobs.

• I like to work at my own pace online. I like being able to also see what resources are online to help me through the course.

• Aligning the assignments with the objectives is a great way to make the course more effective.

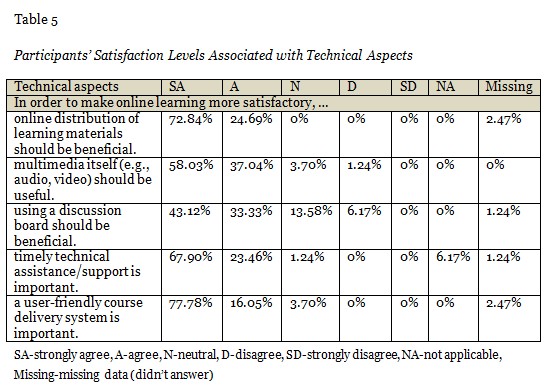

Table 5 presents participants’ perspectives on efficient online learning associated with technical aspects. The highest percentage of students (77.78%) strongly agreed that a user-friendly course delivery system is important. In order to make online learning more effective, students also strongly agreed (72.84%) that course materials should be beneficial.
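As a quick arithmetic check (an inference from the reported figures, not part of the original analysis), the percentages reported for Tables 4 and 5 can be converted back to whole-number counts out of the 81 participants. The helper names `implied_count` and `as_percent` are hypothetical and used only for this sketch.

```python
N_PARTICIPANTS = 81  # sample size reported in the study


def implied_count(percent, n=N_PARTICIPANTS):
    """Whole-number count closest to the given percentage of n."""
    return round(percent / 100 * n)


def as_percent(count, n=N_PARTICIPANTS):
    """Percentage of n represented by count, rounded to two decimals."""
    return round(count / n * 100, 2)


# Percentages of "strongly agree" responses quoted in the text.
reported_percentages = [82.72, 77.78, 72.84]

for percent in reported_percentages:
    count = implied_count(percent)
    # Converting the count back should reproduce the reported percentage.
    print(percent, count, as_percent(count))
```

Under this reading, 82.72%, 77.78%, and 72.84% correspond to 67, 63, and 59 of the 81 participants, respectively.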

One open-ended question asked participants what technical aspects they expected to make their online learning more efficient and satisfactory. Participants’ feedback is categorized into the following three themes: user-friendly, material availability/accessibility, and technical support. Participants’ responses that illustrate these themes are presented below.

User-Friendly

• Online math materials are easy to navigate.

• Easy to follow prompts

• Easy to use technology that is working effectively

• Easy to use, easy to get help

• I think that the course should be easy to navigate.

• User-friendly and very simple to navigate

• Clear direction on how to use and access the material in the course is very important. Also, it is important that links to resources work.

All Materials Available Online/Accessibility

• The online course is great because everything that I needed was online (books, articles, videos, discussion). It really makes the learning less stressful.

• The course is more effective if all materials can be accessed online. Readings should be able to be accessed through the course site.

• Being able to access material without difficulty is most important.

• Working links to materials distributed electronically

• I love that it is accessible anytime. It is great also to be able to see all of the modules to look ahead if needed. It is quite easy to access documents.

• Access 24/7

• Readily accessible materials and a delivery system with few glitches make the course more satisfactory.

• Being able to access the materials easily

Technical Support

• Tech support is imperative!

• The availability of technical support is very important, especially between 6pm-12am and weekends.

It is critically important for universities to understand the emerging online learning environment, particularly the level of student satisfaction which is closely associated with learning outcomes. The paradigm of adult learning has changed from content-centered to learner-centered, which places more emphasis on learners than on course materials/contents (Magnussen, 2008). In this regard, this study focuses on learners and provides exploratory empirical data to contribute to the field of online math education in order to make online learning more meaningful to students.

Participants in this study thought that it was most important that a professor and IA/GA be knowledgeable about course materials and provide up-to-date effective strategies/pedagogical knowledge for teaching math. Expectations differed slightly between the two types of instructional personnel: The highest percentage of students expected a course professor to be knowledgeable about course materials, while they expected an IA/GA to be able to grade fairly with constructive feedback. Several participants stated that they needed constructive and clear feedback on their work as they attempted to improve their work as well as become better teachers of mathematics. Prompt replies from the professor and IA/GA were also related to student satisfaction levels. This finding is similar to that of Russell and her colleagues (2009) in their research conducted with teachers of middle school algebra. They found that instructors’ and content experts’ availability was a key component of students’ effective learning in online math methods courses. Availability of a professor (instructor, content expert, and/or facilitator) is especially important in online math education courses since the course work or assignments often require a clear understanding of the tasks in order to successfully perform and complete them. If a student does not correctly understand the task, it is problematic. The first step of math problem solving is “understanding a question/problem” (NCTM, 2001). Therefore, finding ways to maximize the availability of a course professor or facilitator is essential. For example, setting up online conferences (live chat, webinar) on a regular basis (e.g., weekly) and having virtual office hours during which students can communicate with the course instructor can facilitate easy access to instructors.

In terms of course structure, students considered clear assignment rubrics and guidelines to be important to make online learning satisfactory. Participants expressed strong feelings about receiving explicit course expectations and assignment guidelines/rubrics. Students tend to focus more on learning when online courses are planned with clear expectations and guidelines (Dykman & Davis, 2008; Ku et al., 2011; Moallem, 2003; Salmon, 2002). Clear explanation is a key for successful online learning because it helps to prevent misunderstanding of course materials and assignments. NCTM (2001) strongly recommends that teachers of mathematics precisely present expectations of students as well as mathematical ideas in order to avoid student confusion in performing their tasks.

Participants also thought that a user-friendly system is an important factor in their satisfaction level. Not all students are proficient in using technology (Darrington, 2008), so it is important to provide a user-friendly system to students for efficient online learning. However, it is also necessary to provide adequate training (e.g., how to navigate course materials, how to upload and download materials, how to get technical assistance, etc.) in order to reduce the level of student frustration. This also prevents difficulties with online technology from interfering with learning (Cornelius & Glasgow, 2007).

This study found that students’ perceptions of discussion boards were associated with the level of their satisfaction. Interestingly, students thought that a discussion board was less important for making online learning satisfactory. Many studies have shown the positive impact on learning of online discussions in which students share their own experiences, reflections, and insights from their lives as well as their readings (Rettig, 2013). This study did not indicate whether the participants’ experience with discussion boards in the current online course was negative or whether they were simply not fond of online discussions in general. Future researchers should further investigate the use of discussion boards for virtual discussions in terms of formats, structure, contents, and so forth. Finding effective, interactive, and collaborative ways to participate in discussions is an essential part of making online learning meaningful to students (Bell, Hudson, & Heinan, 2004; Russell et al., 2009).

Communication is “an essential part of mathematics and mathematics education” (NCTM, 2001, p. 60). Communication in math education is critically important since it promotes students’ reasoning and proof abilities along with their collaborative skills as they share their own mathematical ideas and listen to their peers’ perspectives. Further study is necessary to determine how to promote student participation in online discussions and communications with their peers (e.g., what helps students feel more motivated to participate in discussions/communications, what format helps students feel more comfortable sharing their mathematical thinking and insights about teaching, etc.).

Gallie (2005) and Jung et al. (2002) suggest that instructor/professor involvement with online discussions is more effective than discussions held among students only. Depending on who initiates the discussion, the outcomes of learning differ. According to Russell and her colleagues (2009), the accessibility of instructors and/or facilitators is even more important for engaging students in virtual discussion in online math education than self-paced online discussions are.

Assessing students online using tests (quiz, mid-exam, final exam, etc.) has been a challenge in higher education due to the lack of validity (Clarke et al., 2004; Hewson, 2012). Participants in this study indicated that an online quiz was one of the least effective of the design factors associated with student satisfaction levels. This result conflicts with previous studies (e.g., Dermo, 2009; Marriott, 2009) in which participants showed relatively positive and favorable attitudes toward online summative assessments. Thus, it is recommended that researchers investigate both formative and summative assessment in online courses in order to adequately evaluate student learning and at the same time to successfully motivate student interest and increase their level of satisfaction. Online assessment needs to be re-conceptualized in order to promote higher order thinking levels. Work that is authentic and applicable to the real world is more meaningful (Dunlap, Sobel, & Sands, 2007; Fish & Wickersham, 2009). In addition, Hewson (2012) urges further studies to examine student affective domains regarding online assessments to determine the best methods to assess students in a way that they favor and that leads to a low anxiety level.

This study is limited in several respects. First, more studies are necessary to investigate in more depth the human and design factors associated with student satisfaction levels and effective online learning. In particular, it is important to investigate how to make online learning effective and meaningful. For example, one might ask how to make course structure more meaningful and effective to meet the needs of students (e.g., does live instruction work better than videotape-based instruction? Does presentation work better than discussions or readings?). Second, this study does not consider students’ demographic information. Demographic variables should be taken into consideration to sensitively meet the needs of students. Third, rigorous study designs are necessary to investigate cause and effect relationships on individual factors (design and human factors) by applying a true experimental design. Individual factors can also be broken into several aspects to investigate how to make them effective for meaningful learning. For example, design factors involve assessments, discussions, lecture types, live chatting, virtual conferences, and so forth. It is necessary to further investigate how each aspect impacts and/or is associated with student learning.

Alavi, M., Wheeler, B. C., & Valacich, J. S. (1995). Using IT to reengineer business education: An exploratory investigation of collaborative telelearning. MIS Quarterly, 19(3), 293-312.

Allen, I., & Seaman, J. (2005). Growing by degrees: Online education in the United States, 2005. Retrieved from http://www.sloan-c.org/resources/growing_by_degrees.pdf

Ambient Insight Research. (2009). The U.S. market for self-paced eLearning products and services: 2010-2015 Forecast and analysis. Retrieved from http://www.ambientinsight.com/Reports/eLearning.aspx

Bell, P. D., Hudson, S., & Heinan, M. (2004). Effect of teaching/learning methodology on effectiveness of a web based medical terminology course? International Journal of Instructional Technology & Distance Learning, 1(4). Retrieved from http://www.itdl.org/Journal/Apr_04/article06.htm

Bryant, S., Kahle, J. B., & Schafer, B. A. (2005). Distance education: A review of the contemporary literature. Issues in Accounting Education, 20(3), 255–272.

Christensen, C., Horn, M., & Caldera, L. (2011). Disrupting college: How disruptive innovation can deliver quality and affordability to postsecondary education. Retrieved from http://www.americanprogress.org/wp content/uploads/issues/2011/02/pdf/disrupting_college.pdf

Clarke, S., Lindsay, K., McKenna, C., & New, S. (2004). INQUIRE: A case study in evaluating the potential of online MCQ tests in a discursive subject. ALT-J, Research in Learning Technology, 12, 249–260.

Cobb, P. (2001). Supporting the improvement of learning and teaching in social and institutional context. In S. M. Carver & D. Klahr (Eds.), Cognition and instruction: Twenty-five years of progress (pp. 455-478). Mahwah, NJ: Erlbaum.

Cornelius, F., & Glasgow, M. E. S. (2007). The development and infrastructure needs required for success-one college’s model: Online nursing education at Drexel University. TechTrends, 51(6), 32-35.

Cox, J. (1996). Your opinion, please! How to build the best questionnaire in the field of education. Thousand Oaks, CA: Corwin.

Cronk, B. C. (1999). How to use SPSS. Los Angeles, CA: Pyrczak Publishing.

Darrington, A. (2008). Six lessons in e-learning: Strategies and support for teachers new to online environment. Teaching English in the Two-Year College, 35(4), 416-422.

Dunlap, J. C., Sobel, D., & Sands, D. I. (2007). Supporting students’ cognitive processing in online courses: Designing for deep and meaningful student-to-content interactions. TechTrends, 51(4), 20-31.

Dykman, C. A., & Davis, C. K. (2008). Online education forum: Part two-teaching online versus teaching conventionally. Journal of Information Systems Education, 19(2), 157-164.

Eom, S. B., Wen, J. H., & Ashill, N. (2006). The determinants of students’ perceived learning outcomes and satisfaction in university online education: An empirical investigation. Decision Sciences Journal of Innovative Education, 4(2), 215-235.

Fish, W. W., & Wickersham, L. E. (2009). Best practices for online instructors reminders. The Quarterly Review of Distance Education, 10(3), 179-184.

Gallie, K. (2005). Student attrition before and after modifications in distance course delivery. Studies in Learning, Evaluation, Innovation and Development, 2(3), 69-76.

Gay, L. R., Mills, G. E., & Airasian, P. (2009). Educational research: Competencies for analysis and application. Upper Saddle River, NJ: Pearson.

Graham, M., & Scarborough, H. (2001). Enhancing the learning environment for distance education students. Distance Education, 22(2), 232-244.

Hewson, C. (2012). Can online course-based assessment methods be fair and equitable? Relationships between students’ preferences and performance within online and offline assessments. Journal of Computer Assisted Learning, 28, 288-298. doi: 10.1111/j.1365-2729.2011.00473.x

Jung, I., Choi, S., Lim, C., & Leem, J. (2002). Effects of different types of interaction on learning achievement, satisfaction and participation in web-based instruction. Innovations in Education and Teaching International, 39, 153-162. doi:10.1080/14703290252934603.

Ku, H., Akarasriworn, C., Glassmeyer, D. M., Mendoza, B., & Rice, L. A. (2011). Teaching an online graduate mathematics education course for in-service mathematics teachers. Quarterly Review of Distance Education, 12(2), 135-147.

Magnussen, L. (2008). Applying the principles of significant learning in the e-learning environment. Journal of Nursing Education, 47(2), 82-86.

Meyer, K. (2002). Quality in distance education: Focus on on-line learning. ASHE-ERIC Higher Education Report, 29(4). San Francisco: Jossey-Bass.

Moallem, M. (2003). An interactive online course: A collaborative design model. Educational Technology Research and Development, 51(4), 85-103.

Nagel, D. (2010). The future of e-learning is more growth. Campus Technology. Retrieved from http://campustechnology.com/articles/2010/03/03/the-future-of-e-learning-is-more-growth.aspx?sc lang=en

National Council of Teachers of Mathematics. (2001). Principles and standards for school mathematics. Reston, VA: NCTM.

Norton, P., & Hathaway, D. (2008). Exploring two teacher education online learning designs: A classroom of one or many? Journal of Research on Technology in Education, 40(4), 475-495.

Nuangchalerm, P., Prachagool, V., & Sriputta, P. (2011). Online professional experiences in teacher preparation program: A preservice teacher study. Canadian Social Science, 7(5), 116-120. doi:10.3968/J.css.1923669720110705.298

O’Dwyer, L., Carey, R., & Kleiman, G. (2007). A study of the effectiveness of the Louisiana Algebra I online course. Journal of Research on Technology in Education, 39(3), 289-306.

Piccoli, G., Ahmad, R., & Ives, B. (2001). Web-based virtual learning environments: A research framework and a preliminary assessment of effectiveness in basic IT skills training. MIS Quarterly, 25(4), 401–426.

Rettig, M. (2013). Online postings of teacher education candidates completing student teaching: What do they talk about? TechTrends: Linking Research & Practice to Improve Learning, 57(4), 40-45.

Russell, M., Kleiman, G., Carey, R., & Douglas, J. (2009). Comparing self-paced and cohort-based online courses for teachers. Journal of Research on Technology in Education, 41(4), 443-466.

Salmon, G. (2002). E-tivities: The key to active online learning. Sterling, VA: Kogan Page.

Zhu, C. (2012). Student satisfaction, performance, and knowledge construction in online collaborative learning. Educational Technology & Society, 15(1), 127-136.