
Michele T. Cole, Daniel J. Shelley, and Louis B. Swartz

Robert Morris University, United States

This article presents the results of a three-year study of graduate and undergraduate students’ level of satisfaction with online instruction at one university. The study expands on earlier research into student satisfaction with e-learning. Researchers conducted a series of surveys over eight academic terms. Five hundred and fifty-three students participated in the study. Responses were consistent throughout, although there were some differences noted in the level of student satisfaction with their experience. There were no statistically significant differences in the level of satisfaction based on gender, age, or level of study. Overall, students rated their online instruction as moderately satisfactory, with hybrid or partially online courses rated as somewhat more satisfactory than fully online courses. “Convenience” was the most cited reason for satisfaction. “Lack of interaction” was the most cited reason for dissatisfaction. Preferences for hybrid courses surfaced in the responses to an open-ended question asking what made the experience with online or partially online courses satisfactory or unsatisfactory. This study’s findings support the literature to date and reinforce the significance of student satisfaction to student retention.

Keywords: E-learning; instructional design; online education; student retention; student satisfaction

In their ten-year study of the nature and extent of online education in the United States, Allen and Seaman (2013) found that interest on the part of universities and colleges in online education shows no sign of abating. Online education continues to expand at a faster rate than traditional campus-based programs. The authors reported the number of students enrolled in at least one online course to be at an all-time high of 32% of all enrollments in participating institutions, representing an increase of 570,000 students from the previous year. Allen and Seaman also found that 77% of university leaders responding to the survey rated learning outcomes in online education to be the same as, if not better than, those in face-to-face learning. Their results support the no significant difference phenomenon that Russell (1999) found in his comparative study of student learning in online and traditional classroom environments. Even if learning outcomes are equivalent, the question of how satisfied students are with their e-learning experiences persists. This question matters from the standpoint of student retention, which is, of course, relevant to enrollment and to maintaining institutional revenue streams. Also, analysis of student satisfaction may point to improvements in e-learning practices, which in turn could improve outcomes.

The Allen and Seaman (2013) report looked at online education, including the growing presence of massive open online courses (MOOCs), from the institutional perspective, not from the student’s. In their report, the authors noted that the remaining barriers to widespread acceptance of online education were lack of faculty and employer acceptance, lack of student discipline and low retention rates. Of these, student retention in online programs is particularly relevant to the discussion of student satisfaction with their online experience. Reinforcing the instructor’s role in designing satisfying online curricula, Kransow (2013) posited that if students were satisfied with their online experiences, they would be more likely to remain in the program.

Kransow (2013) poses a critical question for instructors working in the online environment: how can online courses be designed to maximize student satisfaction as well as student motivation, performance, and persistence? Drawing on the literature, Kransow emphasizes the importance of building a sense of community in the online environment. Yet building an online community that fosters student satisfaction involves strategies that go beyond facilitating interaction with course components. Building community also requires, among other elements, interaction among the people in the course, that is, between student and instructor and among the students themselves. Sher (2009), in his study of the role such interactions play in student learning in a Web-based environment, found interaction between student and instructor and among students to be significant factors in student satisfaction and learning.

Interaction—between the student and the instructor, among students, and with course content and technology—was the focus of Strachota’s (2003) study of student satisfaction with distance education. In her study, learner-content interaction ranked first as a determinant of student satisfaction, followed by learner-instructor and learner-technology interaction. Interaction between and among students was not found to be significantly correlated with satisfaction. Bollinger (2004) found three constructs to be important in measuring student satisfaction with online courses: interactivity, instructor variables and issues with technology.

Palmer and Holt (2009) found that a student’s comfort level with technology was critical to satisfaction with online courses. Secondary factors included clarity of expectations and the student’s self-assessment of how well they were doing in the online environment. Drennan, Kennedy, and Pisarski (2005) also found positive perceptions of technology to be one of two key attributes of student satisfaction. The second was autonomous and innovative learning styles. Richardson and Swan (2003) focused on the relationship of social presence in online learning to satisfaction with the instructor. They found a positive correlation between students’ perceptions of social presence and their perceptions of learning and satisfaction. For Sahin (2007), the strongest predictor of student satisfaction was personal relevance (linkage of course content with personal experience), followed by instructor support, active learning and, lastly, authentic learning (real-life problem-solving).

Kleinman (2005) looked at improving instructional design to maximize active learning and interaction in online courses. Over a period of ten years, Kleinman studied online communities of learning, concluding that an online environment that fosters active, engaged learning and provides the interactive support students need to understand what is expected leads to a satisfied learning community. Swan (2001), too, found that interactivity was essential to designing online courses that positively affect student satisfaction. Wang (2003) argued that, to truly measure student satisfaction, researchers must first assess the effectiveness of online education.

Online education represents a major shift in how people learn and, in turn, how learners are taught. It follows, Sinclaire (2011) argues, that there is an increasing need to understand what contributes to student satisfaction with online learning. Student satisfaction is one of several variables influencing the success of online learning programs, along with the institutional factors that Abel (2005) listed in his article on best practices (leadership, faculty commitment, student support, and technology). Sener and Humbert (2003) maintained that satisfaction is a vital element in creating a successful online program.

There have been a number of studies of student satisfaction with e-learning (Swan, 2001; Shelley, Swartz, & Cole, 2007, 2008), covering fully online as well as blended learning models (Lim, Morris, & Kupritz, 2007). Arbaugh and associates have also conducted a number of studies on the predictors of student satisfaction with online learning (Arbaugh, 2000; Arbaugh & Benbunan-Fich, 2006; Arbaugh et al., 2009; Arbaugh & Rau, 2007). Results from this study both support and expand on earlier work.

Discussion about the role that MOOCs are destined to play in higher education (Deneen, 2013; Shirky, 2013) serves to heighten educators’ interest in providing quality online courses that maximize student satisfaction. The controversy over granting credit for MOOCs (Huckabee, 2013; Jacobs, 2013; Kolowich, 2013a, 2013b, 2013c; Lewin, 2013; Pappano, 2012) reinforces the relevance of student satisfaction to successful online education.

This study reports on research into student satisfaction with online education conducted over three years. The research has focused largely on business students at one university in Southwestern Pennsylvania. The emphasis on student satisfaction with e-learning and online instruction is increasingly relevant for curriculum development, which in turn is relevant for student retention. Understanding what makes online instruction and e-learning satisfactory helps to inform instructional design.

This study is an extension of previous research on student satisfaction with online education (Cole, Shelley, & Swartz, 2013; Swartz, Cole, & Shelley, 2010; Shelley, Swartz, & Cole, 2007, 2008). Researchers used a multi-item survey instrument to assess how well student expectations were met in selected online courses. Graduate and undergraduate students were asked first whether they were satisfied with their experience with e-learning. Following that, they were asked to explain what made the experience satisfactory or unsatisfactory. Student satisfaction is defined as “the learner’s perceived value of their educational experiences in an educational setting” (Bollinger & Erichsen, 2013, p. 5).

This study focused on two survey questions:

Both survey questions were broken into two separate questions for purposes of analysis, resulting in four research questions:

This paper presents the results of that analysis.

Researchers used a Web-based survey created in Vovici, an online survey software program. Following a pilot study in spring 2010, surveys were sent to students in graduate and undergraduate business courses over a period of three years. Researchers used a mixed-method analysis to evaluate responses to the selected questions. Descriptive statistics were used to summarize demographic data and survey responses. Results were transferred from Vovici to, and combined in, SPSS to analyze the first two research questions. Independent samples t-tests were conducted on the scaled items. Keyword analysis was used for the third and fourth research questions. The survey was anonymous.

Students in each of the business classes were offered extra credit for taking the survey. Credit was awarded when students notified the instructor that they had taken the survey. The same instructor taught each of the 19 courses in the second and third study samples as well as the business courses included in the initial study.

The initial survey instrument was approved by the University’s Institutional Review Board in 2010. Subsequent modifications to the survey were minor and did not require separate approvals in 2011/2012 or 2012/2013. The same script was used seeking participation in each of the surveys. Participation was solicited via an e-mail from the instructor. Each e-mail included the link to the Web-based survey developed in Vovici.

Data from the completed surveys were transferred from Vovici into SPSS. Independent samples t-tests were conducted on the questions asking students to rate their level of satisfaction with online learning. Responses from males and females, from “Generation X” and “Generation Y,” and from graduate and undergraduate students were compared to determine whether there were any statistically significant differences in the level of satisfaction with online and partially online courses. Responses to the question asking what contributed to the respondents’ satisfaction or dissatisfaction with online learning were tabulated in Vovici. To analyze these responses, researchers grouped keywords under themes to form categories. The categories were convenience, interaction, structure, learning style, and platform. “Interaction” included “communication.” “Structure” included “clarity” and “instructor’s role.” “Other” was included to capture responses that did not fall into any of the stated categories.
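The keyword analysis described above can be sketched as a simple lookup from keywords to themes. This is a minimal illustration under stated assumptions, not the researchers’ actual coding procedure: only the five category names, the “Other” fallback, and the sub-themes “communication,” “clarity,” and “instructor’s role” come from the study, while the keyword lists and the helper names `code_response` and `tally` are hypothetical. Whether a comment expressed satisfaction or dissatisfaction is assumed to be judged separately by a human reader.

```python
# Hypothetical keyword lists. Only the category names, the "Other" fallback,
# and the sub-themes "communication", "clarity", and "instructor's role" come
# from the study; every individual keyword below is an illustrative assumption.
CATEGORIES = {
    "convenience": ["convenien", "flexib", "own time", "schedule"],
    "interaction": ["interact", "communicat", "face-to-face", "feedback"],
    "structure": ["structure", "clear", "clarity", "organiz", "instructor"],
    "learning style": ["learning style", "learn better", "self-paced"],
    "platform": ["blackboard", "platform"],
}


def code_response(text: str) -> set[str]:
    """Return every category whose keywords appear in a free-text response.

    A single answer can touch several themes, so a set is returned; a response
    that matches no keyword is assigned to "Other".
    """
    lowered = text.lower()
    matched = {
        category
        for category, keywords in CATEGORIES.items()
        if any(keyword in lowered for keyword in keywords)
    }
    return matched or {"Other"}


def tally(responses: list[str]) -> dict[str, int]:
    """Count how many responses were coded under each category."""
    counts: dict[str, int] = {}
    for response in responses:
        for category in code_response(response):
            counts[category] = counts.get(category, 0) + 1
    return counts
```

A response such as “With a heavy schedule at times, I enjoyed the convenience of the online class” would be coded under convenience. Plain substring matching keeps the sketch short but misses paraphrases (for example, “face to face” without hyphens), so a sketch like this could at most support, not replace, a human coder.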

The sample from the pilot study in spring 2010 included graduate students from the MS in Instructional Technology and the MS in Nonprofit Management programs, undergraduate business majors, and Master of Business Administration (MBA) students. The pilot study indicated no need for changes to the survey design. The second study was conducted over three terms: summer 2010, fall 2010, and spring 2011. This sample was composed of undergraduate students enrolled in Legal Environment of Business (BLAW 1050), taught in the fall 2010 term, and graduate students enrolled in Legal Issues of Executive Management (MBAD 6063), taught in the summer 2010 and spring 2011 terms. The third study was conducted over four terms: fall 2011, spring 2012, fall 2012, and spring 2013. This sample was composed of undergraduates in BLAW 1050, taught in the fall 2011, fall 2012, and spring 2013 terms, and graduate students in MBAD 6063, taught in the spring 2012 and spring 2013 terms. Both the graduate and undergraduate business courses chosen for the study were taught by the same instructor.

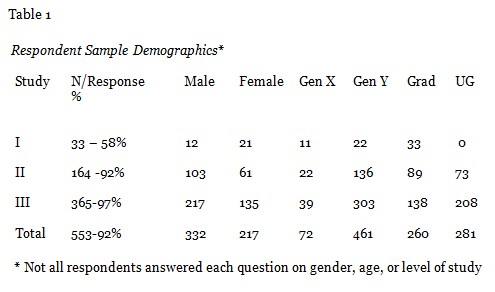

Thirty-three students participated in the spring 2010 survey, a response rate of 58%. One hundred and sixty-four students participated in the second study, a response rate of 92%. Three hundred and fifty-six students participated in the third study, a response rate of 97%. Combined, the total number of participants was 553 of 603 enrolled students, for a response rate of 92%.

Twelve males and 21 females participated in the first survey. One hundred and three males and 61 females responded to the survey in the second study group. Two hundred and seventeen males and 135 females responded to the survey in the third study group, for a total of 332 males (60.5%) and 217 females (39.5%) across the surveys. Four participants in the third sample did not respond to the question on gender, so these percentages are based on the 549 participants who did.
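The participant and gender totals above can be checked with a few lines of arithmetic. The sketch below uses only counts reported in this section; because per-study enrollments are not reported, it computes only the combined response rate (553 of 603). The gender percentages use the participants who answered the gender question as the denominator, not all 553.

```python
# Counts reported in the text for the three studies.
TOTAL_ENROLLED = 603  # only the combined enrollment is reported
participants = {"study 1": 33, "study 2": 164, "study 3": 356}
males = {"study 1": 12, "study 2": 103, "study 3": 217}
females = {"study 1": 21, "study 2": 61, "study 3": 135}

total_participants = sum(participants.values())
response_rate = total_participants / TOTAL_ENROLLED

total_males = sum(males.values())
total_females = sum(females.values())
# Denominator for the gender percentages: only those who answered the question.
answered_gender = total_males + total_females

# Third-study participants who skipped the gender question.
missing_gender = participants["study 3"] - males["study 3"] - females["study 3"]

print(f"participants: {total_participants} ({response_rate:.0%} of enrolled)")
print(f"males: {total_males} ({total_males / answered_gender:.1%})")
print(f"females: {total_females} ({total_females / answered_gender:.1%})")
print(f"did not report gender: {missing_gender}")
```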

Participants were asked to identify themselves as belonging to one of the following age groups:

Eight participants identified themselves as belonging to the Baby Boomer or the Traditional Worker categories. Nine people checked “Other.” Three participants did not respond to the question on age. The remaining respondents self-identified as belonging to “Generation X” or “Generation Y.” Due to the limited sample sizes for “Baby Boomers” and “Traditional Workers,” only responses from participants in the Generation X and Generation Y categories were compared for this study.

In the first survey, 22 respondents self-identified as members of “Generation Y.” Eleven respondents classified themselves as members of “Generation X.” In the second study group, 136 respondents self-identified as “Generation Y.” Twenty-two respondents self-identified as “Generation X.” In the third study group, 303 respondents self-identified as “Generation Y.” Thirty-nine respondents self-identified as “Generation X.” The total number of respondents who self-identified as belonging to “Generation Y” was 461. Seventy-two respondents self-identified as “Generation X.” The total number of respondents belonging to either “Generation X” or “Generation Y” was 533.

Two hundred and sixty graduate students participated in the surveys. Two hundred and eighty-one undergraduate students participated, for a total of 541. The remaining 12 respondents did not clearly identify themselves as either graduate or undergraduate students. Table 1 presents the respondents’ demographic information.

Responses to the two questions on student satisfaction from three surveys (Designing Online Courses, Students’ Perceptions of Academic Integrity, and Enhancing Online Learning with Technology) provided the data for the analysis. Although the survey instruments used in the second and third studies were modified slightly to gather data for the studies on academic integrity and use of technology, each survey asked:

Researchers used a 5-point Likert scale for the first survey question, asking students to rate their level of satisfaction with fully online and/or partially online courses. Zero was equal to “very satisfied”; four was equal to “very dissatisfied.” The second survey question was designed as a follow-up query, asking what contributed to the student’s satisfaction or dissatisfaction with online learning.

To help inform the analysis of responses to the research questions, researchers asked students how many online or partially online courses they had taken. To enable comparisons by gender, age group, and level of study, demographic questions were included in each of the surveys.

Designing Online Courses was administered in the spring 2010 term. The survey was composed of 12 questions. Students’ Perceptions of Academic Integrity was conducted in the summer 2010, fall 2010, and spring 2011 terms. This survey was composed of 13 questions. The third survey, Enhancing Online Learning with Technology, was composed of 12 questions. This survey was administered in the fall 2011, spring 2012, fall 2012, and spring 2013 terms.

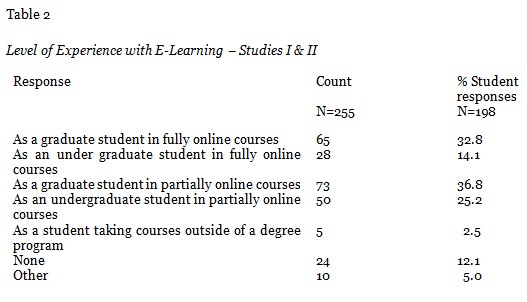

The first question on the surveys sought to capture respondents’ level of experience with e-learning. In the first two studies, students were asked if they had taken or were taking one or more fully online graduate courses, partially online graduate courses, fully online undergraduate courses, and/or partially online undergraduate courses. Responses from both studies were combined for analysis. There were 198 student responses. Since the response categories were not mutually exclusive, a student could select more than one response. Some students had taken both graduate- and undergraduate-level fully online and/or partially online courses. As a result, the total number of responses to the question (255) exceeds the number of respondents (198). Table 2 presents the results.

Elaboration on “other” included four instances of some experience with online courses that did not fit the categories in the question, and two references to having had online assignments. Four were unresponsive to the question.

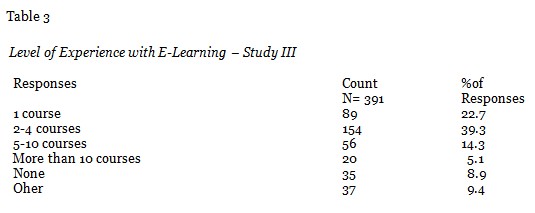

The question asking for the respondent’s level of experience with online or partially online courses was phrased differently in the third study. In the final surveys (fall 2011, spring 2012, fall 2012, and spring 2013), researchers asked how many fully or partially online courses the student had taken. There were 391 responses. Students could choose only one response. Table 3 illustrates the results.

In a two-part survey question, students were asked to rate their level of satisfaction with fully online courses taken and with partially online courses taken. Students could respond to either part of the question or to both. To the first part, level of satisfaction with fully online courses, there were 472 responses, 85% of the total 553 participants. A 5-point Likert scale was used to measure responses ranging from 0 (very satisfied) to 4 (very dissatisfied). One hundred and six students, or 22.5% of those responding, said that they were “very satisfied.” One hundred and seventy-one (36.2%) said that they were “satisfied.” One hundred and twenty-six (26.7%) were “neutral.” Fifty-one (10.8%) said that they were “dissatisfied.” Eighteen (3.8%) respondents were “very dissatisfied” with their experience with fully online courses.

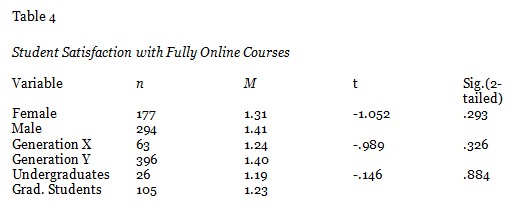

Independent samples t-tests were conducted on this question to determine whether levels of satisfaction with fully online learning differed significantly by gender, age, or level of study. There were no statistically significant differences between males and females, between members of “Generation X” and “Generation Y,” or between graduate and undergraduate students on the question. Descriptively, however, females, members of Generation X, and upper-level undergraduate students rated their experiences with fully online courses somewhat more favorably (that is, with lower mean scores, since 0 denotes “very satisfied”) than did males, members of Generation Y, and graduate students. The mean score for females was 1.31; the mean score for males was 1.41. The mean score for members of Generation X was 1.24; the mean score for members of Generation Y was 1.40. The mean score for upper-level undergraduate students was 1.19; the mean score for graduate students was 1.23. Table 4 presents the results.
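The study does not report the test statistics or say whether equal variances were assumed. As a hedged sketch of what an independent-samples t-test computes, the code below implements Student’s pooled-variance version in plain Python, obtaining the two-tailed p-value by numerically integrating the t density with Simpson’s rule. The function names are hypothetical, and the analysis itself was run in SPSS; in practice a library routine such as `scipy.stats.ttest_ind` would normally be preferred.

```python
import math
import statistics


def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-tailed p-value from the Student t density via Simpson's rule.

    `steps` must be even for Simpson's rule to apply.
    """
    # Log of the normalizing constant of the t density (lgamma avoids overflow).
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def density(x: float) -> float:
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    upper = abs(t)
    h = upper / steps
    area = density(0.0) + density(upper)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * density(i * h)
    area *= h / 3  # integral of the density from 0 to |t|
    return max(0.0, 1.0 - 2.0 * area)


def pooled_t_test(a: list[float], b: list[float]) -> tuple[float, int, float]:
    """Student's independent-samples t-test assuming equal variances.

    Returns (t statistic, degrees of freedom, two-tailed p-value).
    """
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pooled variance weights each group's sample variance by its own df.
    pooled_var = ((n1 - 1) * statistics.variance(a)
                  + (n2 - 1) * statistics.variance(b)) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (statistics.mean(a) - statistics.mean(b)) / se
    return t, df, two_tailed_p(t, df)
```

For example, `pooled_t_test([0, 1, 1, 2, 1], [1, 2, 1, 3, 2])` would compare two small, invented sets of 0 to 4 ratings. With groups as unequal in size as the Generation X and Generation Y respondents, Welch’s unequal-variance t-test would be a reasonable alternative to the pooled version assumed here.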

There were 420 responses, 76% of the total 553 participants, to the second part of the question asking students to rate their level of satisfaction with partially online courses. The same 5-point Likert scale was used to measure both parts. Ninety-nine students, or 23.6% of those responding, said that they were “very satisfied.” One hundred and thirty-six (32.4%) said that they were “satisfied.” One hundred and thirty-seven (32.6%) were “neutral.” Forty-three (10.2%) said that they were “dissatisfied.” Five students (1.2%) said that they were “very dissatisfied” with their experience with partially online courses.
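The percentages reported for both parts of the satisfaction question follow directly from the response counts. The short script below recomputes them; the counts come from the text, and the list ordering assumes the 0 (“very satisfied”) to 4 (“very dissatisfied”) coding described earlier. The names `LABELS` and `distribution` are illustrative.

```python
# Response counts reported in the text, ordered by scale code from
# 0 ("very satisfied") to 4 ("very dissatisfied").
LABELS = ["very satisfied", "satisfied", "neutral", "dissatisfied", "very dissatisfied"]
TOTAL_PARTICIPANTS = 553
fully_online = [106, 171, 126, 51, 18]
partially_online = [99, 136, 137, 43, 5]


def distribution(counts: list[int]) -> dict[str, float]:
    """Percentage of responses at each scale point, rounded to one decimal."""
    total = sum(counts)
    return {label: round(100 * c / total, 1) for label, c in zip(LABELS, counts)}


for name, counts in [("fully online", fully_online), ("partially online", partially_online)]:
    share = sum(counts) / TOTAL_PARTICIPANTS  # share of all participants who answered
    print(f"{name}: {sum(counts)} responses ({share:.0%})", distribution(counts))
```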

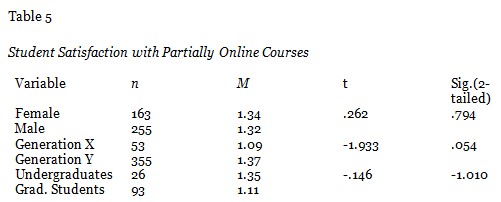

Independent samples t-tests were conducted on this question to determine if there were any significant differences in the levels of satisfaction based on gender, age, or level of study with regard to satisfaction with partially online learning. As with the first research question, there were no statistically significant differences between males and females, between members of “Generation X” and “Generation Y,” or between graduate and undergraduate students with regard to satisfaction with partially online courses. However, unlike satisfaction with fully online courses taken, males were somewhat more satisfied than females, and graduate students were more satisfied than upper-level undergraduates with partially online courses taken. The mean score for males was 1.32; for females, the mean was 1.34. The mean for graduate students was 1.11; for upper-level undergraduates, the mean was 1.35. As was the case with fully online courses, older students, members of Generation X, were more satisfied with their partially online courses than were members of Generation Y. The mean score for “Generation X” was 1.09; for “Generation Y,” the mean was 1.37. Table 5 presents the results.

When samples were combined, students rated their level of satisfaction with partially online courses slightly higher than their satisfaction with the fully online courses they had taken. Because students could rate both types of e-learning, the variables were treated as continuous rather than categorical. The mean score for level of satisfaction with partially online courses was 1.33; for fully online courses, the mean was 1.37, on a 5-point Likert scale ranging from 0 (very satisfied) to 4 (very dissatisfied), so lower scores indicate greater satisfaction.
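Assuming each response was coded 0 through 4 as described, the combined means can be reproduced as count-weighted averages of the response distributions reported for each part of the question:

$$
\bar{x}_{\text{fully online}} = \frac{0(106) + 1(171) + 2(126) + 3(51) + 4(18)}{472} = \frac{648}{472} \approx 1.37
$$

$$
\bar{x}_{\text{partially online}} = \frac{0(99) + 1(136) + 2(137) + 3(43) + 4(5)}{420} = \frac{559}{420} \approx 1.33
$$

Because 0 denotes “very satisfied,” the lower mean for partially online courses corresponds to slightly greater satisfaction.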

The third and fourth research questions were posed in a single open-ended survey question, “What made your experience with the online course/s satisfactory or unsatisfactory?” The survey question did not distinguish partially online courses from fully online courses.

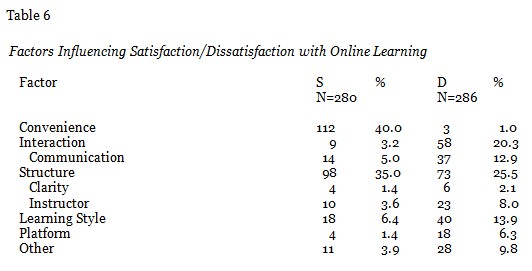

Ninety-one percent of the survey participants (504 of 553) chose to respond to the question asking what factors contributed to their satisfaction or dissatisfaction with online learning. Responses to the question from each of the three surveys were combined for analysis. Keywords were identified and grouped by theme to form five categories: interaction (including communication), convenience, structure (including clarity and instructor’s facility with online instruction), learning style, and platform. “Other” was included to capture comments not easily grouped under one of the above.

Five hundred and sixty-six responses were included in the analysis. Of these, 280 (49.5%) comments expressed reasons for the respondents’ satisfaction with their online experience, and 286 (50.5%) expressed reasons for dissatisfaction with online learning. A single answer could include both satisfaction and dissatisfaction comments; these were tabulated separately. Participants in the first two studies (graduate and undergraduate business students and master’s students in instructional technology and nonprofit management) expressed proportionally more dissatisfaction with their online courses than did participants in the third study, who were graduate and undergraduate business students. In the first study, 30.9% of the respondents expressed reasons for satisfaction with their experience; 69% expressed reasons for dissatisfaction. In the second study, 43.9% expressed reasons for satisfaction; 56% expressed reasons for dissatisfaction. In the third study, 54% expressed reasons for satisfaction, while 46% expressed reasons for dissatisfaction.

Why were students satisfied with their online courses? Convenience ranked highest, followed by online course structure and learning style. For those who were dissatisfied, the most common reason given was lack of interaction, with the instructor as well as with other students. Those who were dissatisfied also listed online course structure and learning style. The online learning platform did figure in the reasons for satisfaction or dissatisfaction, but to a lesser degree.

Convenience was the greatest factor influencing students’ satisfaction with online courses, representing 40% of the 280 comments expressing satisfaction. Course structure, including clarity, represented 36.4% of the comments. The instructor’s facility with online instruction accounted for another 3.6%. Positive interaction and communication, primarily with the instructor, represented 8.2%. Compatibility with the student’s learning style represented 6.4%, and satisfaction with the platform (Blackboard) accounted for 1.4% of the comments. The remaining remarks were classified as “Other,” representing 3.9%. “Other” included satisfaction with course content and with available resources, as well as responses indicating equal satisfaction with onground courses.

Lack of interaction, including lack of communication with the instructor and classmates, was the main source of dissatisfaction with online courses, accounting for 33.2% of the 286 comments expressing dissatisfaction. Dissatisfaction with the online course structure, including clarity, represented 27.6% of the comments. Dissatisfaction with the instructor’s facility with online instruction accounted for another 8%. Incompatibility with the student’s learning style represented almost 14% of the reasons for students’ dissatisfaction. Dissatisfaction with the platform (Blackboard) accounted for 6.3% of the comments. The remaining remarks were classified as “Other,” representing 9.8%. “Other” included dissatisfaction with course content, the online fees, the workload, and technical support. Table 6 illustrates the results.

In several areas, results were consistent with other studies (Sher, 2009; Kuo, Walker, Belland, & Schroder, 2013). Student-instructor interaction and learner-content interaction were among the predictors of student satisfaction that Kuo, Walker, Belland and Schroder identified in their study of student satisfaction with online programs. In this study, student-instructor interaction and learner-content interaction were also important. But there were some differences of degree with regard to issues of instructor’s communication and interaction with students. Jackson, Jones, and Rodriguez (2010) found that timeliness in responding to students, accessibility, clearly stated expectations, and instructor enthusiasm played a significant role in student satisfaction. In this study, issues of timeliness and instructor’s accessibility were also raised but to a lesser extent than clarity and instructor’s ability to effectively use technology in online courses.

As noted earlier, satisfaction with online learning as expressed in the open-ended question, “What made your experience with the online course/s satisfactory or unsatisfactory?” was stronger in the third sample than in the first two. Although the initial sample included non-business students, the second and third samples were composed of business students taught by the same instructor. Possibly, the positive change in the level of satisfaction could be attributed to the students’ and/or the instructor’s greater experience with online course delivery.

Based on responses to the open-ended question in this study, one might conclude that if going to class or taking courses onground were as convenient as taking courses online, the majority of students would choose that mode of learning. Interestingly, in a comparison of students in traditional classroom environments and students in online courses, Callaway (2012) found that students in the classroom setting were satisfied with both the quality and the convenience of traditional instruction, while students in the online learning environment were satisfied with the quality of the courses but not with the convenience of online instruction.

In this study, it was clear that students felt the lack of interaction with the instructor and with their classmates in the online environment. Onground instruction gives students the opportunity to have questions answered, and gives the instructor the opportunity to elaborate on a point at the moment a student is experiencing difficulty. Interaction with other students contributes to the sense that there is a community of learning and provides additional support for the student to expand his or her understanding of the material. The following comments from the three studies are illustrative.

Study I

#1. “It is difficult to engage the student in online courses. I think interacting with other students and the professor is crucial to developing persepective [sic] about the subject material as well as real world applications.”

#3. “… I have gained so much from face-to-face interactions with professors and fellow students. There is so much learning from observing how a professor does various things and from getting to know classmates and learning from what they have to share. While I absolutely love online courses due to the convenience …, I do not think any student’s sole method of taking courses should be online… I would have to say the mix of online and on-ground found in the hybrid class is perfect for me.”

Study II

#17. “I like the partially online format because it provides students with the opportunity to put a face to a name with their professor and ask questions…”

#27. “With a heavy schedule at times, I enjoyed the convenience of the online class. I would have liked to have engaged in face to face conversation about certain topics throughout the course.”

Study III

#6. “I do not like how you can’t directly ask a teacher something. However, it is great that you have your own time to get projects done throughout an online course. Overall I was satisfied with my online course and would do it again when I have the chance.”

#312. “I feel it can be good and bad. I think that it can be helpful for people that have busy hectic schedules that can’t be at class at the same time every week. But, it is hard, for me atleast [sic] to learn when you are not sitting face-to-face with the professor.”

Although not the focus of this study, comments from students did reinforce the notion that hybrid or partially online courses could offer both convenience and interaction. Bollinger and Erichsen (2013) compared hybrid with fully online courses in their study of student satisfaction, finding differences based on learning style. While reliance on “learning styles” as a basis for more effective instructional design does have its critics (see Pashler, McDaniel, Rohrer, & Bjork, 2008), others have found the concept helpful in understanding what contributes to student satisfaction. Learning style also figured in this study as one of the five most cited factors affecting student satisfaction or dissatisfaction with online and partially online learning. Yet in a comparison of online and traditional management information systems courses, Sinclaire (2012) found no relationship between learning style and satisfaction for students in the online courses, although she did find such a relationship in the traditional courses.

Also comparing the two formats, hybrid and fully online, Estelami (2012) found student satisfaction in both to be affected by course content, student-instructor communication, the instructor’s role in the course, and the use of effective learning tools. In this study, results most closely supported Estelami’s findings with regard to course content and instructor-student interaction. Comments on the instructor’s facility with technology might be grouped under Estelami’s third and fourth constructs, the instructor’s role in the course and the use of effective learning tools.

Pinto and Anderson (2013) found that the more students felt a part of the class, and the more interaction there was among students, the more satisfied they reported being with the hybrid format. As in this study, communication was important to students’ reported satisfaction with e-learning.

Retention is key to the success of online programs in higher education, and the relationship between students’ satisfaction with their e-learning experiences and their retention is clear (Lorenzo, 2012). It is this relationship that makes ongoing studies of satisfaction with online education important.

Notwithstanding the broad time span of the studies, the sample was small. As noted earlier, the authors’ studies of student satisfaction in the online learning environment to date have focused largely on undergraduate and graduate business students at one private nonprofit university in southwestern Pennsylvania. Although its online program is growing, the university’s experience with online instruction is relatively recent. The first fully online courses were initiated in 1999. Since that time, the university has added more than 250 online and partially online courses. Current offerings include eight undergraduate and twelve graduate degree programs online, as well as ten online certificate programs.

The strong response rate for each of the surveys could be attributed in part to the “extra credit” offered for taking the survey. The “extra credit” incentive applied to the graduate and undergraduate business courses.

It should also be noted that people are more likely to take the time to articulate dissatisfaction than to voice satisfaction. That tendency may be reflected in the responses to the open-ended question and may explain why the overall mean scores on the Likert scale indicated moderate satisfaction with both fully online and partially online courses.

Many institutions and their faculties are immersed in the debate over “how much is too much” online course delivery. Why? Is it because online education appears to have acquired an unstoppable momentum? MOOCs may be a case in point (Allen & Seaman, 2013). Or is it perhaps that, as universities here and abroad search for ways to open access to the millions who cannot physically attend college, the affordability and ease of working in the online environment seem to offer a promising solution? In spite of skepticism that online learning has proven to be both effective and cost-saving (Bowen, 2013), online education appears to be here to stay.

To date, there have been numerous studies of student satisfaction and student learning. There appears to be consensus that both online and onground instruction are effective (Wagner, Garippo, & Lovaas, 2011). There may be instances where a student’s ability to understand course material is improved in a setting that provides immediate in-person contact with the instructor. But there may also be instances where the student is more comfortable participating in an online course. The argument is that both modes are effective given the right fit between student and course. As Wyatt (2005) noted in his comparison of students’ perceptions of online and traditional classroom learning, some students thrive in the online environment while others languish.

Discussing the disconnect between convenience and quality in the traditional versus the online environment, Callaway (2012) concluded that discovering “the right mix” of traditional instruction and online delivery could address the disparity. With regard to satisfaction with e-learning, one might argue that “the right mix” would include the elements inherent in a hybrid model. As researchers found in this study, positive interaction with the instructor and with fellow students seems to go hand in hand with student satisfaction. Hybrid instruction is one way to foster interaction while preserving convenience and the ability to learn at one’s own pace. Additional research, such as Pinto and Anderson’s (2013) study of student expectations and satisfaction with hybrid learning, would further this discussion.

Abel, R. (2005). Implementing best practices in online learning. EDUCAUSE Quarterly, 28(3), 75-77.

Allen, I.E., & Seaman, J. (2013). Changing course: Ten years of tracking online education in the United States. Babson Survey Research Group and Quahog Research Group. Retrieved from http://www.onlinelearningsurvey.com/reports/changingcourse.pdf

Arbaugh, J.B. (2000). Virtual classroom characteristics and student satisfaction in Internet-based MBA courses. Journal of Management Education, 24(1), 32-54.

Arbaugh, J.B., & Benbunan-Fich, R. (2006). An investigation of epistemological and social dimensions of teaching in online learning environments. Academy of Management Learning & Education, 5, 435-447.

Arbaugh, J.B., Godfrey, M.R., Johnson, M., Pollack, B.L., Neindorf, B., & Wresch, W. (2009). Research in online and blended learning in the business disciplines: Key findings and possible future directions. Internet and Higher Education, 12, 71-87.

Arbaugh, J.B., & Rau, B. L. (2007). A study of disciplinary, structural, and behavioral effects on course outcomes in online MBA courses. Decision Sciences Journal of Innovative Education, 5(1), 65-94.

Bolliger, D.U. (2004). Key factors for determining student satisfaction in online courses. International Journal on E-Learning, 3(1), 61-67. Retrieved from http://www.editlib.org/p/2226

Bolliger, D.U., & Erichsen, E.A. (2013). Student satisfaction with blended and online courses based on personality type. Canadian Journal of Learning & Technology, 39(1), 1-23.

Bowen, W.G. (2013, March 25). Walk deliberately, don’t run, toward online education. The Chronicle of Higher Education. Retrieved from http://chronicle.com/article/Walk-Deleberately-Dont-Run/138109

Callaway, S.K. (2012). Implications of online learning: Measuring student satisfaction and learning for online and traditional students. Insights to a Changing World Journal, 2. Retrieved from www.franklinpublishing.net

Cole, M.T., Shelley, D.J., & Swartz, L.B. (2013). Academic integrity and student satisfaction in an online environment. In H. Yang & S. Wang (Eds.), Cases on online learning communities and beyond: Investigations and applications (Chapter 1, pp. 1-19). Hershey, PA: IGI Global.

Deneen, P.J. (2013, June 3). We’re all to blame for MOOCs. The Chronicle of Higher Education. Retrieved from http://chronicle.com/article/Were-All-to-Blame-for-MOOCs/139519/

Drennan, J., Kennedy, J., & Pisarski, A. (2005). Factors affecting student attitudes toward flexible online learning in management education. The Journal of Educational Research, 98(6), 331-338. doi:10.3200/JOER.98.6.331-338

Estelami, H. (2012). An exploratory study of the drivers of student satisfaction and learning experience in hybrid-online and purely online marketing courses. Marketing Education Review, 22(2), 143-155. doi:10.2753/MER1052-8008220204

Huckabee, C. (2013, April 22). How to improve public online education: Report offers a model. The Chronicle of Higher Education. Retrieved from http://chronicle.com/article/How-to-Improve-Public-Online/138729/

Jackson, L.C., Jones, S.J., & Rodriguez, R.C. (2010). Faculty actions that result in student satisfaction in online courses. Journal of Asynchronous Learning Networks, 14(4), 78-96.

Jacobs, A.J. (2013, April 21). Two cheers for web u! The New York Times, Sunday Review, pp. 1 & 6.

Kleinman, S. (2005). Strategies for encouraging active learning, interaction, and academic integrity in online courses. Communication Teacher, 19(1), 13-18. doi:10.1080/1740462042000339212

Kolowich, S. (2013a, February 7). American Council on Education recommends 5 MOOCs for credit. The Chronicle of Higher Education. Retrieved from http://chronicle.com/article/American-Council-on-Education/137155/

Kolowich, S. (2013b, April 30). Duke U’s undergraduate faculty derails plan for online courses for credit. The Chronicle of Higher Education. Retrieved from http://chronicle.com/article/Duke-Us-Undergraduate/138895/

Kolowich, S. (2013c, July 8). A university’s offer of credit for a MOOC gets no takers. The Chronicle of Higher Education. Retrieved from http://chronicle.com/article/A-Universitys-Offer-of-Credit/140131/

Kranzow, J. (2013). Faculty leadership in online education: Structuring courses to impact student satisfaction and persistence. MERLOT Journal of Online Learning and Teaching, 9(1), 131-139.

Kuo, Y.-C., Walker, A.E., Belland, B.R., & Schroder, K.E. (2013). A predictive study of student satisfaction in online education programs. The International Review of Research in Open and Distance Learning, 14(1), 16-39.

Lewin, T. (2013, April 29). Colleges adapt online courses to ease burden. The New York Times. Retrieved from http://www.nytimes.com/2013/04/30/education/colleges-adapt-online

Lim, D.H., Morris, M.L., & Kupritz, V.W. (2007). Online vs. blended learning: Differences in instructional outcomes and learner satisfaction. Journal of Asynchronous Learning Networks, 11(2).

Lorenzo, G. (2012). A research review about online learning: Are students satisfied? Why do some succeed and others fail? What contributes to higher retention rates and positive learning outcomes? Internet Learning, 1(1), Fall 2012.

Palmer, S.R., & Holt, D.M. (2009). Examining student satisfaction with wholly online learning. Journal of Computer Assisted Learning, 25(2), 101-113. doi:10.1111/j.1365-2729.2008.00294.x

Pappano, L. (2012, November 4). The year of the MOOC. The New York Times, pp. 26-28.

Pashler, H., McDaniel, M., Rohrer, D., & Bjork, R. (2008). Learning styles: Concepts and evidence. Psychological Science in the Public Interest, 9(3), 105-119.

Pinto, M.B., & Anderson, W. (2013). A little knowledge goes a long way: Student expectation and satisfaction with hybrid learning. Journal of Instructional Pedagogies, 10, 65-76.

Recursos Humanos. (2010). Dueling age groups in today’s workforce: From baby boomers to generations X and Y. Universia Knowledge @ Wharton. Retrieved from http://www.wharton.universia.net/

Richardson, J.C., & Swan, K. (2003). Examining social presence in online courses in relation to students’ perceived learning and satisfaction. Journal of Asynchronous Learning Networks, 7(1), 68-88.

Russell, T. (1999). The no significant difference phenomenon. Office of Instructional Telecommunications, North Carolina State University, Raleigh, NC.

Sahin, I. (2007). Predicting student satisfaction in distance education and learning environments. Turkish Online Journal of Distance Education, 8(2), Art. 9.

Sener, J., & Humbert, J. (2003). Student satisfaction with online learning: An expanding universe. Retrieved from wiki.sln.suny.edu

Shelley, D.J., Swartz, L.B., & Cole, M.T. (2007). A comparative analysis of online and traditional undergraduate business law courses. International Journal of Information and Communication Technology Education, 3(1), 10-21.

Shelley, D.J., Swartz, L.B., & Cole, M.T. (2008). Learning business law online vs. onland: A mixed method analysis. International Journal of Information and Communication Technology Education, 4(2), 54-66.

Sher, A. (2009). Assessing the relationship of student-instructor and student-student interaction to student learning and satisfaction in web-based online learning environment. Journal of Interactive Online Learning, 8(2), 102-120.

Shirky, C. (2013, July 8). MOOCs and economic reality. The Chronicle of Higher Education. Retrieved from http://chronicle.com/blogs/conversation/2013/07/08/moocs-and-economic

Sinclaire, J.K. (2011). Student satisfaction with online learning: Lessons from organizational behavior. Research in Higher Education Journal, 11, 1-20.

Sinclaire, J.K. (2012). VARK learning style and student satisfaction with traditional and online courses. International Journal of Education Research, 7(1), 77-89.

Strachota, E.M. (2003). Student satisfaction in online courses: An analysis of the impact of learner-content, learner-instructor, learner-learner and learner-technology interaction. Dissertation Abstracts International, 64(8), 2746.

Swan, K. (2001). Virtual interaction: Design factors affecting student satisfaction and perceived learning in asynchronous online courses. Distance Education, 22(2), 306-331. doi:10.1080/0158791010220208

Swartz, L.B., Cole, M.T., & Shelley, D.J. (2010). Learning business law online vs. onland: Student satisfaction and performance. In L.A. Tomei (Ed.), ICTs for modern educational and instructional advancement: New approaches to teaching (Chapter 8, pp. 82-95). Hershey, PA: IGI Global.

Wagner, S.C., Garippo, S.J., & Lovaas, P. (2011). A longitudinal comparison of online versus traditional instruction. MERLOT Journal of Online Learning and Teaching, 7(1), 68-73.

Wang, Y-S. (2003). Assessment of learner satisfaction with asynchronous electronic learning systems. Information & Management, 41, 75-86. doi:10.1016/S0378-7206(03)00028-4

Wyatt, G. (2005). Satisfaction, academic rigor and interaction: Perceptions of online instruction. Education, 125(3), 460-468.

© Cole, Shelley, Swartz