Volume 17, Number 1

Il-Hyun Jo¹, Yeonjeong Park¹, Meehyun Yoon², Hanall Sung¹

¹ Ewha Womans University, South Korea; ² University of Georgia, USA

The purpose of this study was to identify the relationship between psychological variables and students’ online behavioral patterns collected through a learning management system (LMS). As the psychological variable, time and study environment management (TSEM), one of the sub-constructs of the Motivated Strategies for Learning Questionnaire (MSLQ), was chosen to verify a set of time-related online log variables: login frequency, login regularity, and total login time. Data was collected from 188 college students at a Korean university. Using structural equation modeling, a hypothesized model was tested to assess model fit. The results supported the criterion validity of the online log variables for estimating students’ time management. The structural model, which included TSEM, the online log variables, and the final score, showed a moderate fit and indicated that learners’ time-related online behavior mediates the relationship between their psychological functions and their learning outcomes. Based on these results, the discussion offers recommendations for further study and considers the significance of the findings for extending the Learning Analytics for Prediction and Action (LAPA) model.

Keywords: log variables, time management, online learning, structural equation model

Advances in Internet technology have enabled the storage of large quantities of data, which can be utilized for educational purposes. Accordingly, much of the previous literature has sought to identify patterns in this data (Baker & Yacef, 2009; Elias, 2011; Macfadyen & Dawson, 2010; Romero, Ventura, & García, 2008). When people access the Internet, massive amounts of log data are collected (Brown, 2011; Johnson, Smith, Willis, Levine, & Haywood, 2011). In particular, online learning behaviors are tracked via log files in learning management systems (LMS) and analyzed as major predictors of final learning outcomes (Romero, Espejo, Zafra, Romero, & Ventura, 2013). Such online learning behaviors are considered objective, readily observable data for predicting outcomes.

However, data alone is not sufficient to elicit useful knowledge from the existing patterns of online learning; an in-depth interpretation of the data is also required. Proper intervention based on accurate interpretation is expected to lead students to undertake more meaningful learning. From this perspective, the research reported in this paper conducted a systematic inquiry based on the learning analytics (LA) approach to answer the question: How can we better utilize online behavioral data to enable appropriate teacher or tutor intervention in students’ behaviors, going beyond the analysis of patterns or the prediction of learning outcomes?

Most previous LA studies seemed merely to describe students’ online learning behaviors without linking the observable data to a psychological interpretation (e.g., Blikstein, 2011; Worsley & Blikstein, 2013; Thompson et al., 2013). This becomes problematic when students need effective interventions. Although instructors can observe students’ behavioral patterns via log data recorded in the LMS, it is difficult to design a proper intervention without considering the underlying psychological aspects. The need to interpret learning behaviors and outcomes based on physio-psychology and educational psychology was addressed by Jo and Kim (2013): “how we think,” “how we perceive,” and “how we act” interact with one another. Our internal thoughts, as well as external motivation, determine our behaviors, and our behavioral patterns can be observed and interpreted through a series of actions and operations. From this perspective, online learning behaviors, as captured in observable log files, are consequences of mental factors, including those in the unconscious area of the mind.

Along these lines, this study assumed that online behavioral patterns are not random but are caused by underlying psychological factors, even though these factors are not yet known. More specifically, suitable interventions can be designed and provided only with an understanding of the psychology of student behavior, not from an examination of the behavioral data alone. It is therefore essential to identify the relationship between online behavioral patterns and the psychological factors that have been validated as predictors of learning.

Self-regulation has long been addressed as a psychological factor that predicts learning achievement (Hofer, Yu, & Pintrich, 1989; Zimmerman, 1990). Several studies have shown that the Motivated Strategies for Learning Questionnaire (MSLQ), the most widely used instrument for measuring self-regulation today, is reliable and valid (Kim, Lee, Lee, & Lee, 2011; Pintrich, Smith, Garcia, & McKeachie, 1993). In particular, time and study environment management (TSEM), a major component of the MSLQ, has been shown to predict learning achievement in adult education (Jo & Kim, 2013; Jo, Yoon, & Ha, 2013). Based on previous research, we expect online behavioral indicators to be influenced by learners’ TSEM as an indicator of self-regulation.

Learning analytics (LA) has received significant attention from educators and researchers in higher education since its inception (Campbell, DeBlois, & Oblinger, 2007; Elias, 2011; Swan, 2001). The appeal of LA is twofold: firstly, LA uses big-data mining technology, which enables the prediction of students’ learning outcomes and stimulates their motivation for learning through data visualization; secondly, self-regulating students can use the results of data mining to plan their learning process appropriately and improve their learning outcomes. In particular, predictions based on data mining can help prevent at-risk students from failing. To realize the promise of LA, researchers have addressed a wide range of research agendas, including mining and preprocessing big data, predicting future performance from the data, providing interventions accordingly, and, ultimately, improving teaching and learning. However, we identified a gap in the body of research: no interpretation model for the behavior-psychology continuum exists. Thus, drawing on the Learning Analytics for Prediction and Action (LAPA) model, we considered psychological characteristics in order to understand learners’ behavioral patterns more fully and provide them with suitable interventions.

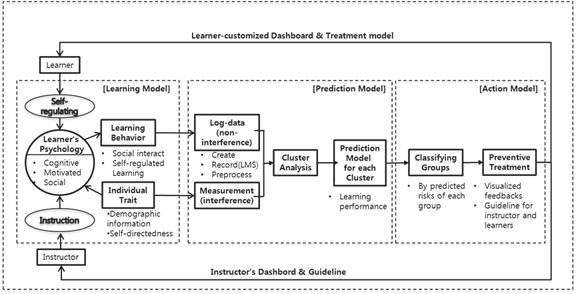

Jo (2012) introduced the LAPA model shown in Figure 1. In this conceptual framework, he argued that learning analytics, as an educational technology approach, makes it possible to provide prompt and personalized educational opportunities to both students and instructors in accordance with their levels and needs. According to Jo (2012), LAPA consists of three segments: the learning model, the prediction model, and the action (intervention) model. The first segment presents the learning process, including six specific components: the learner’s self-regulatory ability, learner psychology, instruction, online learning behavior, learner characteristics, and type of course. In the second segment, students’ learning achievement is predicted and action (intervention) levels are classified by analyzing and measuring log data. Finally, the third segment provides precautionary actions, such as a service-oriented architecture (SOA) dashboard and guidelines for both learners and instructors.

Figure 1. Learning Analytics for Prediction and Action Model (Jo, 2012).

The LAPA model emphasizes learning and pedagogical treatment in addition to the statistical prediction pursued by traditional educational data mining. This unique characteristic of the model is represented by the “Learning Model” to the left of the “Prediction Model” in Figure 1. It shows that the prediction part, a major function of traditional educational data mining, needs to be supplemented and interpreted by the Learning Model, which provides a psychological understanding of how and why people learn. It also implies that a learner’s specific behavior results from interactions among the learner’s individual traits and states, learning content, instruction, and the self-directed learning environment. In this regard, the LAPA model can be seen as a psychological extension of technique-oriented educational data mining. Based on the LAPA model, this study focuses on the “Learning Model,” attempting to verify how well online log variables estimate learners’ self-regulation.

Self-regulation is a psychological function related to motivation and action that learners utilize to achieve their learning goals (Bandura, 1988). Self-regulated learners structure their learning activities by making appropriate and reciprocally related cognitive, affective, and behavioral adjustments (Boekaerts, 1999; Karoly, 1993). Self-regulation is related to how learners utilize materials, along with how effectively they set goals, activate prior knowledge, monitor their learning, and select strategies (Bol & Garner, 2011). As Butler and Winne (1995) expressed, “the most effective learners are self-regulating” (p. 245). They tend to pay more attention to planning their learning and revisiting goals, and they engage in more self-monitoring and other cognitive strategies (Azevedo, Guthrie, & Seibert, 2004; Greene & Azevedo, 2007). Learners who employ effective self-regulation also tend to perform better (Pressley & Ghatala, 1990; Pressley & Harris, 2006; White & Frederiksen, 2005). Poorer self-regulators tend to struggle with distractions, dwell on their mistakes, and are less organized when solving tasks (Zimmerman, 1998), as they spend less time assessing how new information is linked to prior knowledge (Greene & Azevedo, 2009).

Among the many psychological factors involved in self-regulation, we focused on time and study environment management (TSEM). TSEM is the ability to effectively manage learning time (Kearsley, 2000; Phipps & Merisotis, 1999) and the learning environment (Zimmerman & Martinez-Pons, 1986). Specifically, it is related to learners’ abilities to prioritize learning tasks, allocate time to sub-tasks, and revise their plans as necessary (Lynch & Dembo, 2004). Students with high TSEM are proactive in managing not only their study time but also their learning environments and resources (Lynch & Dembo, 2004). For example, students who manage their learning time and environment efficiently work effectively and choose suitable learning tools.

Previous studies have discussed how learners manage their assigned learning time to achieve learning goals (e.g., Lynch & Dembo, 2004; Kwon, 2009; Choi & Choi, 2012). Despite inconsistent views on TSEM as a central factor of self-regulation, Kwon (2009) regarded time management as a salient factor for success in e-learning and found a relationship among learners’ levels of action, time management, and learning outcomes, suggesting that understanding time management is key to approaching e-learning. In addition, Choi and Choi (2012) verified the effects of TSEM on learners’ self-regulated learning and learning achievement in e-learning settings, focusing on juniors in an online course at a university in Korea.

In this study, we used the standardized MSLQ instrument. Not only have its reliability and validity for measuring learners’ psychological learning strategies been established, but the questionnaire is also a potent predictor of learning (Kim, Lee, Lee, & Lee, 2011; Pintrich, Smith, Garcia, & McKeachie, 1993). The questionnaire includes 81 items divided into four categories: motivation, cognitive strategy, metacognition, and resource management strategy. Jo, Yoon, and Ha (2013) indicated that students’ self-regulatory ability, specifically their time management strategies, was a hidden psychological characteristic driving regular login activity, which results in high performance. However, further research is needed to validate the relationship between specific online login variables and time management strategies.

Several studies have analyzed how learners’ self-regulation behaviors are manifested in online learning environments (e.g., Stanca, 2006; Jo & Kim, 2013; Jo, Yoon, & Ha, 2013; Jo, Kim, & Yoon, 2014; Moore, 2003; Mödritscher, Andergassen, & Neumann, 2013). Using panel data, Stanca (2006) found a relationship between students’ regular class attendance and academic performance. In an e-learning environment, Jo and Kim (2013) used log data to show that the regularity of learning, calculated using the standard deviation of learning time, has a significant effect on learners’ performance. Moore (2003) also associated learners’ attendance with their performance, asserting that learners who participate in class regularly achieve better academic outcomes.

Recently, Mödritscher, Andergassen, and Neumann (2013) investigated the correlations between learning results and LMS usage, focusing on the log files related to students’ practice and learning by repetition. That study correlated students’ online behavior log variables, including the amount of learning time, learning days, duration of learning at various times of the day, and coverage of self-assessment exercises, with their final exam results.

Other recent studies that analyzed online behaviors based on the learning analytics model (Jo & Kim, 2013; Jo, Yoon, & Ha, 2013) identified several potent online log variables that could predict students’ time management strategies and final learning outcomes: total login time, login frequency, and login regularity. Jo, Kim, and Yoon (2014) further established these three variables as proxy variables representing time management strategies in an online course. As shown in Figure 2, their study attempted to connect the theoretical background of time management strategy with proxy variables derived from the online log file. That is, time management strategy is a concept related to how self-regulated learners organize and prioritize their tasks (Britton & Tesser, 1991; Moore, 2003), invest sufficient time in tasks, and actively participate in the learning process (Davis, 2000; Orpen, 1994; Woolfolk & Woolfolk, 1986), while sustaining their time and effort based on a well-planned schedule (Barling, Kelloway, & Cheung, 1996).

Figure 2. Three online log variables with their theoretical backgrounds (Jo, Kim, & Yoon, 2014)

Learners with a strong ability to prioritize tasks are better able to invest time and effort in study and are aware of their tasks, which prompts them to check their tasks regularly. To some extent, sufficient time investment and active participation are assumed to influence total login time and login frequency. A relationship between the regularity of the login interval and persistence at tasks with well-planned time usage can be posited if we consider that learners who make a persistent effort based on well-planned time use are likely to spend their time evenly rather than procrastinate and cram.

Although the studies introduced so far highlighted the usefulness and significance of online log variables, they did not establish criterion validity against an established measurement scale of students’ self-regulation, specifically, of time management strategies. Thus, our study focused on how the online log variables are related to psychological factors. Based on previous research, it was hypothesized that the TSEM of the MSLQ, reflecting students’ time management abilities, would affect students’ time-related log behavior patterns: total login time, login frequency, and regularity of the learning interval. More specifically, it was hypothesized that students with high time management ability would show longer total login time, greater login frequency, and more regular logins (that is, smaller variability in the interval between logins).

A total of 188 students at a private university located in Seoul, Korea, were asked to participate in this study. These students took an online course entitled “Management Statistics” in the first semester of 2014. This study incorporated two major data-collection methods: a survey to measure students’ psychological characteristics, and extraction of log-data from an LMS to measure online behavioral patterns.

The survey was conducted at the beginning of the semester when the students participated in a monthly offline meeting. A total of 138 of the 188 students completed the survey. Among the 138 participants, 14 failed to complete all items on the measurement instrument, resulting in a final data set of 124 students for analysis (65.9%).

The survey instrument included questions on participants’ demographic information and the TSEM items of the MSLQ. A Korean translation of the MSLQ developed by Pintrich and De Groot (1990) was used. The MSLQ items were rated on a five-point scale (1 = Not at all, 5 = Strongly agree), with eight items related to TSEM. Several studies using a Korean version of the instrument have shown stable reliability and validity. For example, in the study by Kim, Lee, Lee, and Lee (2011), the Cronbach’s alpha of the resource management scale including TSEM (19 items) was .72. Chung, Kim, and Kang (2010) specifically reported an alpha of .65 for TSEM (eight items). In our study, it was .68.
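As an illustration of how an internal-consistency value such as the alpha of .68 reported above is obtained, the sketch below reverse-scores the three negatively worded TSEM items (MSLQ_TS_03, _06, and _07, marked REVERSED in Table 1) on the five-point scale and then computes Cronbach’s alpha. That the authors reverse-scored these items in exactly this way is an assumption, and the response matrix in the usage lines is made up, not study data.

```python
from statistics import variance

# Zero-based positions of the negatively worded items MSLQ_TS_03, _06 and _07,
# marked (REVERSED) in Table 1. Reverse-scoring them is an assumption here.
REVERSED_ITEMS = {2, 5, 6}
SCALE_MAX = 5  # five-point response scale (1 to 5)


def reverse_score(responses, reversed_items=REVERSED_ITEMS, scale_max=SCALE_MAX):
    """Recode reversed items as (scale_max + 1 - x) so higher always means stronger TSEM."""
    return [
        [scale_max + 1 - x if j in reversed_items else x for j, x in enumerate(row)]
        for row in responses
    ]


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items matrix given as a list of rows."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of the respondents' summed scale scores.
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Made-up responses of three respondents to the eight TSEM items (not study data).
raw = [
    [4, 3, 3, 3, 4, 2, 2, 3],
    [5, 4, 2, 4, 4, 1, 2, 4],
    [3, 2, 4, 2, 3, 3, 4, 2],
]
print(round(cronbach_alpha(reverse_score(raw)), 2))
```

Alpha rises when items covary strongly relative to their individual variances, which is why skipping the reverse-scoring step would typically depress it for a scale with negatively worded items.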

Table 1

Survey Instrument

| Survey structure | Item | Contents of questions |
|---|---|---|
| Part 1 | Demographic information | Name, ID, grade, affiliated college, and major |
| Part 2 | MSLQ_TS_01 | I usually study in a place where I can concentrate on my course work. |
| | MSLQ_TS_02 | I make good use of my study time for this course. |
| | MSLQ_TS_03 | I find it hard to stick to a study schedule. (REVERSED) |
| | MSLQ_TS_04 | I have a regular place set aside for studying. |
| | MSLQ_TS_05 | I attend class regularly. |
| | MSLQ_TS_06 | I often find that I don’t spend very much time on this course because of other activities. (REVERSED) |
| | MSLQ_TS_07 | I rarely find time to review my notes or reading before an exam. (REVERSED) |
| | MSLQ_TS_08 | I make sure I keep up with the weekly reading and assignments for this course. |

The participants ranged from freshmen to seniors enrolled in a variety of majors across the campus. Respondents were enrolled in colleges including the Humanities College (34%), College of Business (18%), Social Science College (15%), College of Health Science (6%), College of Art (6%), College of Education (5%), International College (5%), College of Music (2%), College of Technology (2%), and Graduate School (2%). By grade, seniors made up the largest proportion (42%), followed by juniors (25%), sophomores (18%), and freshmen (15%). Students’ majors included Business Administration, Chinese Literature (10%), English Literature (9%), International Studies (5%), Educational Technology (4%), Advertising (3%), and Statistics (3%). Students majoring in other subjects, such as Psychology and Philosophy, also participated in the survey.

In this study, the online “Management Statistics” courseware gave students access to the virtual learning environment, so the online learning environment and resources were also important instruments for study. Instructors uploaded video course materials every week, and students studied independently through the video-based instruction.

Because the course was fully online, students were required to attend the virtual classroom to view the weekly video lectures, take online quizzes, submit individual tasks, and take the mid-term and final exams to complete the course successfully. Each activity contributed to the final score with the following weights: virtual attendance (5%), individual tasks (10%), quizzes (10%), mid-term exam (30%), and final exam (45%). Every activity took place in the virtual classroom except for the mid-term and final exams. In addition, to complement students’ self-directed learning in the virtual class, an offline meeting was held once a month to give students the opportunity to ask the instructor questions directly. However, the monthly offline meeting was not mandatory, since the course was 100% virtual.
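The weighting scheme above amounts to a linear combination of the five graded components. The sketch below assumes each component is scored on a 0 to 100 scale and that the weights are applied directly; the text gives only the percentages, so the component names and the scoring scale are illustrative assumptions.

```python
# Weight of each graded activity in the final score, as listed in the course description.
WEIGHTS = {
    "virtual_attendance": 0.05,
    "individual_tasks": 0.10,
    "quiz": 0.10,
    "midterm_exam": 0.30,
    "final_exam": 0.45,
}


def final_score(component_scores, weights=WEIGHTS):
    """Weighted final score; each component score is assumed to be on a 0-100 scale."""
    missing = set(weights) - set(component_scores)
    if missing:
        raise ValueError(f"missing components: {sorted(missing)}")
    return sum(weights[name] * component_scores[name] for name in weights)


# Hypothetical student: full attendance, strong coursework, weaker exams.
example = {
    "virtual_attendance": 100,
    "individual_tasks": 90,
    "quiz": 80,
    "midterm_exam": 70,
    "final_exam": 60,
}
print(round(final_score(example), 2))
```

Because the two exams carry 75% of the weight, the virtual activities that generate log data account for only a quarter of the final score under this scheme.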

Since the activities in the virtual class were reflected in students’ final scores and grades, students were encouraged to log on to the virtual classroom frequently and regularly. As a result, students left millions of log-file entries in the Moodle-based LMS over the sixteen-week semester. For this study, students’ weekly login data were extracted from the system. The total login time, login frequency, and regularity of the login interval were extracted using a data mining algorithm and entered into our analysis, because a series of previous studies, introduced in the literature review, had found these variables to be related to students’ time management.

To calculate total login time accurately, each learner’s login time stamps were collected and the durations summed. The login duration for each activity was calculated from the stored page information, that is, the time from the beginning of learning to the point at which the learner finished the task. In this e-learning system, login times could be clearly identified, whereas logout times were difficult to determine because learners often terminate an online session without logging out of the learning window, for example, by shutting down the web browser. Therefore, the duration of the last activity in a session was replaced with the average learning duration for that activity.
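A minimal sketch of the total-login-time calculation with the imputation step described above. The record layout `(learner_id, activity_id, start, end)` is hypothetical, and we assume the substituted value is the average observed duration of the same activity across all learners; the text does not say whether the average was taken per learner or across the class, nor how activities with no observed duration were handled (here they contribute 0).

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical record layout: (learner_id, activity_id, start, end), where end is
# None when the learner left (e.g., closed the browser) without logging out.


def mean_activity_durations(records):
    """Average observed duration, in seconds, of each activity across all learners."""
    observed = defaultdict(list)
    for _learner, activity, start, end in records:
        if end is not None:
            observed[activity].append((end - start).total_seconds())
    return {activity: mean(secs) for activity, secs in observed.items()}


def total_login_time_hours(records):
    """Sum each learner's activity durations in hours, imputing missing end times."""
    averages = mean_activity_durations(records)
    totals = defaultdict(float)
    for learner, activity, start, end in records:
        if end is not None:
            totals[learner] += (end - start).total_seconds()
        else:
            # End time unknown: substitute the activity's average observed duration
            # (0 if no learner has an observed duration for it; an assumption).
            totals[learner] += averages.get(activity, 0.0)
    return {learner: seconds / 3600 for learner, seconds in totals.items()}


# Made-up records: s3's video session has no recorded end time.
t0 = datetime(2014, 3, 3, 10, 0)
records = [
    ("s1", "video_w1", t0, t0 + timedelta(minutes=30)),
    ("s2", "video_w1", t0, t0 + timedelta(minutes=50)),
    ("s3", "video_w1", t0, None),
]
print(total_login_time_hours(records))  # s3 is credited with the 40-minute average
```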

Total login frequency was computed by counting the number of logins to the learning system window recorded in the web log data. To obtain an exact login frequency, multiple logins on the same date were also counted (Kim, Yoon, & Ha, 2013). Login regularity was calculated following the method used by Kim (2011): the intervals between logins were derived from the records of the points in time at which a learner accessed the learning system window, and the regularity of the learning interval was then quantified by the dispersion (standard deviation) of these intervals.
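Login frequency and the login (ir)regularity index can be sketched as below, assuming each learner’s log has been reduced to a list of login time stamps. The study reports login regularity as the standard deviation of the login intervals; whether the method of Kim (2011) used the sample or population standard deviation, and which time unit, is not stated, so the sample standard deviation in hours used here is an assumption, as are the function names.

```python
from collections import Counter
from datetime import datetime
from statistics import stdev


def login_frequency(login_times):
    """Total number of logins; several logins on the same date each count."""
    return len(login_times)


def logins_per_date(login_times):
    """Number of logins on each calendar date (makes same-day repeats visible)."""
    return Counter(t.date() for t in login_times)


def login_irregularity_hours(login_times):
    """Sample SD of the gaps between consecutive logins, in hours.

    Higher values mean less regular access, so this is an irregularity index.
    """
    ordered = sorted(login_times)
    gaps = [(later - earlier).total_seconds() / 3600
            for earlier, later in zip(ordered, ordered[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three logins to measure interval spread")
    return stdev(gaps)


# Made-up logins: one learner logs in daily at 09:00, another skips a day.
even = [datetime(2014, 3, d, 9) for d in (3, 4, 5, 6)]
uneven = [datetime(2014, 3, 3, 9), datetime(2014, 3, 4, 9), datetime(2014, 3, 6, 9)]
print(login_irregularity_hours(even), login_irregularity_hours(uneven))
```

Evenly spaced logins give an index of zero, matching the interpretation in the results that a lower value indicates more regular logins.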

The purpose of this study was to validate how well TSEM, one of the sub-constructs of the MSLQ (the candidate psychological factor), predicts the online behavioral variables (criteria) related to learners’ learning time recorded in the log files. Therefore, criterion validity was tested by specifying the MSLQ items corresponding to TSEM as exogenous observed variables, TSEM as an exogenous latent variable, and the total login time, login frequency, and irregularity of the login interval, extracted from participants’ log activity in the LMS, as endogenous observed variables. Structural equation modeling (SEM) was conducted with AMOS 18 for this purpose.

Table 2 shows the descriptive statistics for the variables used to test the criterion validity of learners’ TSEM. In addition, the normality conditions required for structural equation modeling (absolute skewness < 3 and absolute kurtosis < 10) were confirmed to be met (Kline, 2005).
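The normality screen cited from Kline (2005) can be sketched with moment-based estimators. Statistical packages often report bias-adjusted skewness and kurtosis, so their values can differ slightly from this sketch, and we assume Table 2 reports excess kurtosis (0 for a normal distribution). Applied to the skewness and kurtosis values reported in Table 2, every variable falls within the cutoffs.

```python
from statistics import mean

SKEW_CUTOFF = 3.0   # |skewness| < 3 (Kline, 2005)
KURT_CUTOFF = 10.0  # |kurtosis| < 10 (Kline, 2005)


def _central_moment(xs, order):
    """Population central moment of the given order."""
    m = mean(xs)
    return sum((x - m) ** order for x in xs) / len(xs)


def skewness(xs):
    """Moment-based skewness g1 = m3 / m2**1.5."""
    return _central_moment(xs, 3) / _central_moment(xs, 2) ** 1.5


def excess_kurtosis(xs):
    """Moment-based excess kurtosis g2 = m4 / m2**2 - 3 (0 for a normal distribution)."""
    return _central_moment(xs, 4) / _central_moment(xs, 2) ** 2 - 3


def within_cutoffs(skew, kurt):
    """True if a variable satisfies the skewness and kurtosis screening rule."""
    return abs(skew) < SKEW_CUTOFF and abs(kurt) < KURT_CUTOFF


# (skewness, kurtosis) pairs as reported in Table 2.
TABLE2 = {
    "MSLQ_TS_01": (-0.59, -0.23), "MSLQ_TS_02": (-0.02, 0.04),
    "MSLQ_TS_03": (0.20, -0.48), "MSLQ_TS_04": (-0.33, -0.63),
    "MSLQ_TS_05": (0.01, -0.55), "MSLQ_TS_06": (0.55, 0.27),
    "MSLQ_TS_07": (-0.51, 0.02), "MSLQ_TS_08": (0.07, -0.42),
    "Total login time": (1.01, 1.68), "Login frequency": (1.66, 4.52),
    "Login regularity": (1.19, 1.84),
}

for name, (s, k) in TABLE2.items():
    print(name, "ok" if within_cutoffs(s, k) else "check")
```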

In the self-reported surveys, the mean TSEM score of the participants was 3.26; the responses for each item are shown in Table 2. Regarding online behavioral patterns, participants stayed in the virtual classroom for a total of 42.30 hours (SD = 13.02) per semester and logged on an average of 108.94 times (SD = 44.53). The mean login regularity was 43.52 (SD = 18.28). In this study, login regularity was calculated as the standard deviation of the login intervals, so a lower value indicates more regular logins. Technically, this variable measures the “irregularity of the access interval.”

Table 2

Descriptive Statistics (N=124)

| Latent variable | Observed variable | Mean | SD | Skewness | Kurtosis |
|---|---|---|---|---|---|
| TSEM (MSLQ scale, self-reported) | MSLQ_TS_01 | 3.94 | .88 | -.59 | -.23 |
| | MSLQ_TS_02 | 3.25 | .93 | -.02 | .04 |
| | MSLQ_TS_03 | 3.14 | .97 | .20 | -.48 |
| | MSLQ_TS_04 | 3.29 | 1.08 | -.33 | -.63 |
| | MSLQ_TS_05 | 3.79 | .75 | .01 | -.55 |
| | MSLQ_TS_06 | 2.25 | .84 | .55 | .27 |
| | MSLQ_TS_07 | 3.75 | .88 | -.51 | .02 |
| | MSLQ_TS_08 | 2.69 | .97 | .07 | -.42 |
| Online behavior (log data in the LMS) | Total login time (hours) | 42.30 | 13.02 | 1.01 | 1.68 |
| | Login frequency | 108.94 | 44.53 | 1.66 | 4.52 |
| | Login regularity | 43.52 | 18.28 | 1.19 | 1.84 |

Before testing the criterion validity of TSEM in the MSLQ against time-relevant online behavior, a confirmatory factor analysis was performed. Confirmatory factor analysis assesses how closely the observed correlations are reproduced under the assumption that each item loads on one specific factor. In our case, the eight TSEM items and the three time-relevant online behavior variables were tested to see how well they fit the two latent variables. The standardized factor loadings on the two latent variables were estimated by maximum likelihood. Table 3 provides the standardized regression weights and model fit. The weights, ranging in absolute value from .37 to .93, were all statistically significant (p < .01) and were judged acceptable. Because login regularity actually indicates the irregularity of learning, calculated as the standard deviation of the login interval, its coefficient was negative.

Table 3

Standardized Regression Weights of the Measurement Model

| Latent variable | Observed variable | Standardized regression weight |
|---|---|---|
| TSEM (MSLQ scale, self-reported) | MSLQ_TS_01 | .46** |
| | MSLQ_TS_02 | .46** |
| | MSLQ_TS_03 | .43** |
| | MSLQ_TS_04 | .37** |
| | MSLQ_TS_05 | .56** |
| | MSLQ_TS_06 | .49** |
| | MSLQ_TS_07 | .46** |
| | MSLQ_TS_08 | .42** |
| Online behavior (log data in the LMS) | Total login time | .45** |
| | Login frequency | .93** |
| | Login regularity | -.81** |

Note. Model fit: CMIN/df = 1.466, p = .022, CFI = .924, TLI = .907, RMSEA = .062. *p < .05, **p < .01, ***p < .001.

Structural Model

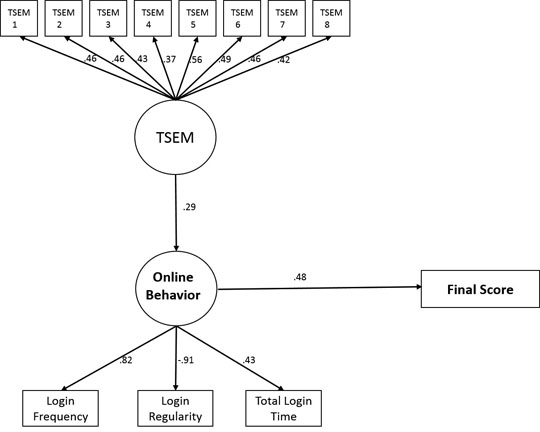

Structural equation modeling (SEM) is a powerful multivariate method for both building and testing models. In our study, SEM was used to investigate the structural relationships among learners’ time management strategy construct in the MSLQ, the variables from the online log files, and performance. That is, we tested all the hypotheses by examining the direct and indirect effects among the variables. Table 4 shows the regression coefficients among the two latent variables and learners’ final scores. Specifically, learners’ TSEM scores had a positive effect on their time-relevant online behavior (β = .29, p < .05), and learners’ time-relevant online behavior had a positive effect on their final scores (β = .48, p < .05). However, TSEM did not have a significant direct effect on learners’ final scores (β = .15, p = .16). To determine whether learners’ time-relevant online behavior mediates the relationship between TSEM and final scores, a Sobel test was performed. The result showed that TSEM had an indirect effect on final scores, mediated by learners’ time-relevant online behavior (Z = 112.18, p < .001). These results imply that the relationship between TSEM and the final score is fully mediated by time-relevant online behavior.
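The Sobel test for mediation can be sketched as follows. This is a minimal illustration, not the authors’ AMOS procedure: the test is normally computed from the unstandardized path coefficients a (TSEM to online behavior) and b (online behavior to final score, controlling for TSEM) and their standard errors, which are not reported in this paper, so the inputs in the usage line are hypothetical.

```python
import math


def sobel_test(a, se_a, b, se_b):
    """Sobel z statistic and two-tailed p-value for the indirect effect a*b.

    a, se_a: path X -> M (e.g., TSEM -> online behavior) and its standard error.
    b, se_b: path M -> Y controlling for X (online behavior -> final score) and its SE.
    Unstandardized estimates are expected.
    """
    se_indirect = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = (a * b) / se_indirect
    p_two_tailed = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_two_tailed


# Hypothetical inputs, not estimates from this study.
z, p = sobel_test(a=0.5, se_a=0.1, b=0.4, se_b=0.1)
print(f"z = {z:.2f}, p = {p:.4f}")
```

The Sobel test assumes the sampling distribution of a*b is approximately normal; bootstrap confidence intervals for the indirect effect are a common alternative when that assumption is doubtful.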

Table 4

Results of Hypotheses Test

| Type of effect | Path | Standardized regression weight | Sobel value |
|---|---|---|---|
| Direct effect | TSEM → Online behavior | β = .29* | - |
| | Online behavior → Final score | β = .48* | - |
| | TSEM → Final score | β = .15 | - |
| Indirect effect | TSEM → Final score | - | Z = 112.18*** |

Note. *p < .05, **p < .01, ***p < .001.

The overall structural model is presented in Figure 3, in which all the observed TSEM variables were connected to the online behavior log variables. Guided by the modification indices in AMOS 18.0, we added correlations among measurement errors and simplified the initial model by removing the nonsignificant path between TSEM and the final score. The fit indices for the modified model are given in Table 5.

Figure 3. Estimated coefficients for the modified measurement model

Table 5

Fit Indices for the Hypothesized and Modified Models (N=124)

| Model | CMIN | df | CMIN/df | p | CFI | TLI | RMSEA (90% CI) |
|---|---|---|---|---|---|---|---|
| Hypothesized model | 79.548 | 54 | 1.473 | .013 | .918 | .900 | .062 (.029-.090) |
| Modified model | 81.623 | 55 | 1.484 | .011 | .915 | .898 | .063 (.031-.090) |
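The CMIN/df and RMSEA point estimates in Table 5 can be reproduced from CMIN, df, and N alone. The sketch below uses the common formula RMSEA = sqrt(max(CMIN - df, 0) / (df (N - 1))); that AMOS applies exactly this form is our assumption, though it reproduces both reported values at three decimals. The 90% interval and the p-values require the noncentral and central chi-square distributions and are not computed here.

```python
import math


def cmin_df(chi_square, df):
    """Normed chi-square (CMIN/df)."""
    return chi_square / df


def rmsea(chi_square, df, n):
    """Point estimate of RMSEA: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# CMIN and df as reported in Table 5 (N = 124).
MODELS = {"Hypothesized": (79.548, 54), "Modified": (81.623, 55)}

for name, (chi2, df) in MODELS.items():
    print(f"{name}: CMIN/df = {cmin_df(chi2, df):.3f}, RMSEA = {rmsea(chi2, df, 124):.3f}")
# Hypothesized: CMIN/df = 1.473, RMSEA = 0.062
# Modified: CMIN/df = 1.484, RMSEA = 0.063
```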

This study provided evidence of criterion validity between TSEM in the MSLQ and the LMS online log variables. The results showed modest correlations between the TSEM latent variable of the MSLQ and the three online behavior log variables: total login time, login frequency, and login regularity. The findings have several implications and offer guidance for future research.

Firstly, the results provided empirical evidence that online log variables derived from the actions students leave in the LMS are affected by psychological factors. This result is consistent with previous studies (Jo, Yoon, & Ha, 2013). By using the MSLQ scale, which has established validity and reliability for measuring students’ self-regulation abilities, the study examined the extent to which online log variables are explained by the psychological concept of TSEM.

Secondly, the results suggest the possibility of automated analysis and prescription based on log data, without the need to administer the MSLQ survey. That is, the findings can be interpreted as empirical evidence that learners’ time management strategies can be estimated, and motivational and cognitive pre-emptive action taken, without administering additional self-report surveys in the online learning environment. Further research should therefore explore other latent variables in the MSLQ, such as motivation or other resource management strategies, in relation to the online behavior log variables.

Thirdly, the structural equation model, including TSEM, the online log variables, and final scores, indicated that learners’ time-related online behavior mediates the relationship between their psychological constructs and final scores. In this study, TSEM did not have a direct effect on learners’ final scores, which is inconsistent with the previous studies mentioned earlier (Jo & Kim, 2013; Jo, Yoon, & Ha, 2013). Interestingly, the online log variables played a mediating role, carrying an indirect effect of TSEM on final scores. Although this result calls for further studies in different contexts, it is meaningful because it reveals the relationship among the learner’s psychological construct, online behavioral patterns, and learning outcome.

Fourthly, our results contribute to extending the LAPA model. As discussed in the literature review, the model is a framework that extends traditional, data-oriented educational data mining into psychological interpretation and pedagogical intervention (Jo, Yoon, & Ha, 2013). It thus enables the identification of underlying mediating processes, the diagnosis of internal or external conditions, and the discovery of teaching solutions and interventions that help educators teach and manage students effectively. When educators apply appropriate interventions that affect internal factors, they will be better able to achieve the ultimate goal of changing human behavior in a desirable way in online learning environments.

The LAPA model explains the logical flow among its learning, prediction, and intervention sections: 1) a more accurate prediction model can lead to enhanced and personalized learning interventions; 2) a robust prediction model can be developed by exploring individual learners' psychological factors; and, fundamentally, 3) these psychological factors can be measured based on learners' behaviors. This study used a scale from the MSLQ instrument. With further criterion validation and extension to sub-factors beyond TSEM, however, it may be possible to replace such scales with behavioral patterns of learning that are automatically collected and measured from online log files.

To better understand how students manage their time when learning online, this study identified the relationship between psychological variables and login data collected within an LMS. Based on the literature review, the study tested the criterion validity of three online log variables (login frequency, login regularity, and total login time) through a comparison with TSEM in the MSLQ.
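As a rough illustration of how the three log variables might be derived from LMS session records, the sketch below computes them for one learner. The operational definitions are assumptions, not the study's: frequency is taken as the number of sessions, regularity is proxied by the standard deviation of the gaps between consecutive logins (lower means more regular), and total login time is the sum of session durations. The `Session` record and the `log_variables` function are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import pstdev


@dataclass
class Session:
    """One LMS session for a learner (hypothetical log schema)."""
    login: datetime
    logout: datetime


def log_variables(sessions):
    """Return (login_frequency, login_interval_sd_hours, total_login_hours).

    Assumed definitions (illustrative only):
      - login frequency: number of sessions
      - login regularity: population SD of hours between consecutive logins
        (lower = more regular)
      - total login time: sum of session durations, in hours
    """
    ordered = sorted(sessions, key=lambda s: s.login)
    frequency = len(ordered)
    gaps = [
        (later.login - earlier.login).total_seconds() / 3600
        for earlier, later in zip(ordered, ordered[1:])
    ]
    # With zero or one session there are no gaps; report 0.0 by convention.
    interval_sd = pstdev(gaps) if gaps else 0.0
    total_hours = sum((s.logout - s.login).total_seconds() for s in ordered) / 3600
    return frequency, interval_sd, total_hours


if __name__ == "__main__":
    # Toy sessions for illustration only: three daily logins at 9:00.
    day = datetime(2015, 3, 2, 9, 0)
    example = [
        Session(day, day + timedelta(hours=1)),
        Session(day + timedelta(days=1), day + timedelta(days=1, hours=1.5)),
        Session(day + timedelta(days=2), day + timedelta(days=2, hours=0.5)),
    ]
    print(log_variables(example))
```

Because the logins in the toy data are exactly 24 hours apart, the interval SD is zero; spacing them 24 and then 48 hours apart would raise it to 12 hours. Other regularity measures are equally plausible, so this choice should be checked against the study's own definition.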

Although the study revealed the underlying psychological factors reflected in learners' online behaviors, its limitations must be taken into account when interpreting the results. Firstly, all participants were female college students. Further research should be conducted to establish the generalizability of the behavioral variables in various contexts with different target groups (e.g., male students). Secondly, the dataset was extracted from only one course, which was delivered entirely online. Future studies should therefore explore various types of online learning (e.g., massive open online courses and blended learning) to establish more robust criterion validity and reliability of the results. Lastly, we addressed only TSEM, one of the 15 subscales in the MSLQ. In future research, other subscales associated with learners' online behaviors should be considered to enhance the understanding of multidimensional online behaviors. For example, extrinsic goal orientation should be examined to understand how learner behavior is reinforced by external motivation.

Despite the limitations mentioned above, this study explained learners' online behaviors by linking them to psychological variables, providing a foundation on which future studies can build. We argue that instructional designers should consider supporting the intermediate processes between behavioral and psychological factors in order to link two segments of the LAPA model: the learning model and the prediction model. We expect that this research will help instructors provide suitable interventions for learners by using analytics to identify the psychological factors affecting students more effectively. It should also be emphasized that suitable treatment of students and learning interventions can be derived from students' behavior patterns based on psychological factors.

This work was supported by the Ministry of Education of the Republic of Korea and the National Research Foundation of Korea (NRF-2015S1A5B5A02010250).

Azevedo, R., Guthrie, J. T., & Seibert, D. (2004). The role of self-regulated learning in fostering students’ conceptual understanding of complex systems with hypermedia. Journal of Educational Computing Research, 30, 87–111.

Bandura, A. (1988). Self-regulation of motivation and action through goal systems. In V. Hamilton, G. H. Bower, & N. H. Frijda (Eds.), Cognitive perspectives on emotion and motivation (pp. 37-61). Dordrecht, Netherlands: Springer.

Baker, R. S. J. D., & Yacef, K. (2009). The state of educational data mining in 2009: A review and future visions. Journal of Educational Data Mining, 1 (1), 3-17.

Barling, J., Kelloway, E. K., & Cheung, D. (1996). Time management and achievement striving interact to predict car sales performance. Journal of Applied Psychology, 81 (6), 821-826.

Blikstein, P. (2011). Using learning analytics to assess students' behavior in open-ended programming tasks. In Proceedings of the 1st International Conference on Learning Analytics and Knowledge (pp. 110-116). ACM.

Boekaerts, M. (1999). Self-regulated learning: Where we are today. International Journal of Educational Research, 31, 445–457.

Bol, L., & Garner, J. K. (2011). Challenges in supporting self-regulation in distance education environments. Journal of Computing in Higher Education, 23, 104–123.

Britton, B. K., & Tesser, A. (1991). Effects of time-management practices on college grades. Journal of Educational Psychology, 83 (3), 405-410.

Brown, M. (2011). Learning analytics: The coming third wave. EDUCAUSE Learning Initiative Brief, 1-4. Retrieved from https://net.educause.edu/ir/library/pdf/ELIB1101.pdf

Butler, D. L., & Winne, P. H. (1995). Feedback and self-regulated learning: A theoretical synthesis. Review of Educational Research, 65 (3), 245-281.

Campbell, J. P., DeBlois, P. B., & Oblinger, D. G. (2007). Academic analytics: A new tool for a new era. EDUCAUSE Review, 42 (4), 40-42.

Choi, J. I., & Choi, J. S. (2012). The effects of learning plans and time management strategies on college students’ self-regulated learning and academic achievement in e-learning. Journal of Educational Studies, 43 (4), 221-244.

Chung, Y. S., Kim, H. Y., & Kang, S. (2010). An exploratory study on the correlations of learning strategies, motivation, and academic achievement in adult learners. The Journal of Educational Research, 8 (2), 23-41.

Davis, M. A. (2000). Time and the nursing home assistant: relations among time management, perceived control over time, and work-related outcomes. Paper presented at the Academy of Management, Toronto, Canada.

Elias, T. (2011). Learning analytics: Definitions, processes and potential. Retrieved from http://learninganalytics.net/LearningAnalyticsDefinitionsProcessesPotential.pdf

Greene, J. A., & Azevedo, R. (2007). Adolescents’ use of self-regulatory processes and their relation to qualitative mental model shifts while using hypermedia. Journal of Educational Computing Research, 36, 125–148.

Greene, J. A., & Azevedo, R. (2009). A macro-level analysis of SRL processes and their relations to the acquisition of a sophisticated mental model of a complex system. Contemporary Educational Psychology, 34, 18–29.

Hofer, B. K., Yu, S. L., & Pintrich, P. R. (1998). Teaching college students to be self-regulated learners. In D. H. Schunk & B. J. Zimmerman (Eds.), Self-regulated learning: From teaching to self-reflective practice (pp. 57-85). New York, NY: Guilford Press.

Jo, I. (2012). On the LAPA (Learning Analytics for Prediction & Action) Model suggested. Future Research Seminar. Paper presented at the Korea Society of Knowledge Management, Seoul, Korea.

Jo, I., & Kim, J. (2013). Investigation of statistically significant period for achievement prediction model in e-learning. Journal of Educational Technology, 29 (2), 285-306.

Jo, I., & Kim, Y. (2013). Impact of learner's time management strategies on achievement in an e-learning environment: A learning analytics approach. Journal of Korean Association for Educational Information and Media, 19 (1), 83-107.

Jo, I., Kim, D., & Yoon, M. (2014). Constructing proxy variable to analyze adult learners' time management strategy in LMS using learning analytics. Paper presented at the conference of Learning Analytics and Knowledge, Indianapolis, IN.

Jo, I., Yoon, M., & Ha, K. (2013). Analysis of relations between learner's time management strategy, regularity of learning interval, and learning performance: A learning analytics approach. Paper presented at the E-learning Korea 2013, Seoul, Korea.

Johnson, L., Smith, R., Willis, H., Levine, A., & Haywood, K. (2011). The 2011 Horizon Report. Austin, TX: The New Media Consortium. Retrieved from http://www.nmc.org/pdf/2011-Horizon-Report.pdf

Karoly, P. (1993). Mechanisms of self-regulation: A systems view. Annual Review of Psychology, 44, 23–52.

Kearsley, G. (2000). Online education: Learning and teaching in cyberspace. Belmont, CA: Wadsworth.

Kim, Y. (2011). Learning time management variables impact on academic achievement in corporate e-learning environment (Master's thesis). Ewha Womans University, Seoul.

Kim, Y., Lee, C. K., Lee, H. U., & Lee, S. N. (2011). The effects of learning strategies on academic achievement. Journal of the Korea Association of Yeolin Education, 19 (3), 177-196.

Kline, R. B. (2005). Principles and practice of structural equation modeling (2nd ed.). New York, NY: Guilford Press.

Kwon, S. (2009). The analysis of differences of learners' participation, procrastination, learning time and achievement by adult learners' adherence of learning time schedule in e-Learning environments. Journal of Learner-Centered Curriculum and Instruction, 9 (3), 61-86.

Lynch, R., & Dembo, M. (2004). The relationship between self-regulation and online learning in a blended learning context. The International Review of Research in Open and Distributed Learning, 5 (2).

Macfadyen, L. P., & Dawson, S. (2010). Mining LMS data to develop an "early warning system" for educators: A proof of concept. Computers & Education, 54 (2), 588-599.

Mödritscher, F., Andergassen, M., & Neumann, G. (2013, September). Dependencies between e-learning usage patterns and learning results. In Proceedings of the 13th International Conference on Knowledge Management and Knowledge Technologies (p. 24). ACM.

Moore (2003). Attendance and performance: How important is it for students to attend class? Journal of College Science Teaching, 32 (6), 367-371.

Orpen, C. (1994). The effect of time-management training on employee attitudes and behaviour: A field experiment. The Journal of Psychology, 128, 393-396.

Phipps, R., & Merisotis, J. (1999). What's the difference? A review of contemporary research on the effectiveness of distance learning in higher education. Washington, DC: The Institute for Higher Education Policy.

Pintrich, P. R., & De Groot, E. V. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82 (1), 33-40.

Pintrich, P. R., Smith, D. A. F., Garcia, T., & McKeachie, W. J. (1993). Reliability and predictive validity of the Motivated Strategies for Learning Questionnaire (MSLQ). Educational and Psychological Measurement, 53, 801–813.

Pressley, M., & Ghatala, E. S. (1990). Self-regulated learning: Monitoring learning from text. Educational Psychologist, 25, 19–33.

Pressley, M., & Harris, K. R. (2006). Cognitive strategies instruction: From basic research to classroom instruction. In P. Alexander & P. Winne (Eds.), Handbook of educational psychology (2nd ed., pp. 264–286). Mahwah, NJ: Lawrence Erlbaum Associates.

Romero, C., Espejo, P. G., Zafra, A., Romero, J. R., & Ventura, S. (2013). Web usage mining for predicting final marks of students that use Moodle courses. Computer Applications in Engineering Education, 21 (1), 135-146.

Romero, C., Ventura, S., & García, E. (2008). Data mining in course management systems: Moodle case study and tutorial. Computers & Education, 51(1), 368-384.

Stanca (2006). The effects of attendance on academic performance: Panel data evidence for introductory microeconomics. Journal of Economic Education, 37 (3), 251-266.

Swan, K. (2001). Virtual interaction: Design factors affecting student satisfaction and perceived learning in asynchronous online courses. Distance Education, 22 (2), 306-331.

Thompson, K., Ashe, D., Carvalho, L., Goodyear, P., Kelly, N., & Parisio, M. (2013). Processing and visualizing data in complex learning environments. American Behavioral Scientist, 57 (10), 1401-1420.

White, B., & Frederiksen, J. (2005). A theoretical framework and approach for fostering metacognitive development. Educational Psychologist, 40, 211–223.

Woolfolk, A. E., & Woolfolk, R. L. (1986). Time management: An experimental investigation. Journal of School Psychology, 24, 267-275.

Worsley, M., & Blikstein, P. (2013, April). Towards the development of multimodal action based assessment. In Proceedings of the Third International Conference on Learning Analytics and Knowledge (pp. 94-101). ACM.

Zimmerman, M. A. (1990). Taking aim on empowerment research: On the distinction between individual and psychological conceptions. American Journal of Community Psychology, 18, 169-171.

Zimmerman, B. J. (1998). Developing self-fulfilling cycles of academic regulation: An analysis of exemplary instructional models. In D. H. Schunk & B. J. Zimmerman (Eds.), Self-regulated learning: From teaching to self-reflective practice (pp. 1–19). New York, NY: Guilford Press.

Zimmerman, B. J., & Martinez-Pons, M. (1986). Development of a structured interview for assessing student use of self-regulated learning strategies. American Educational Research Journal, 23, 614-628.

Evaluation of Online Log Variables that Estimate Learners’ Time Management in a Korean Online Learning Context by Il-Hyun Jo, Yeonjeong Park, Meehyun Yoon, Hanall Sung is licensed under a Creative Commons Attribution 4.0 International License.