
Volume 17, Number 3

Peter Mkhize, Samuel Mtsweni, and Portia Buthelezi

University of South Africa

Academic institutions such as the University of South Africa (Unisa) use information and communication technology (ICT) to conduct their primary daily operations, namely teaching and learning. Unisa is the largest distance learning university in Africa and currently has the highest number of students on the continent. In an attempt to bridge the gap between facilitators and students, Unisa introduced a learning management system known as myUnisa, which facilitators and students use for teaching, learning, and communication. To the best of the researchers' knowledge, the factors that influence its acceptance and usage had not been studied before this study. Users are the main determinants of the success of a technology, as is reflected in the well-established theories and models for evaluating the acceptance of technology and innovation. The objective of this study was to understand the factors that contribute to students' usage of myUnisa. Data were collected with an online questionnaire and analysed quantitatively. Among other findings, the results reveal that complexity does not have a significant impact on students' decision to use myUnisa.

Keywords: open distance learning, learning management system, online learning, ODL, higher education

In the twenty-first century, institutions of higher learning use information and communication technology (ICT) to conduct their daily operations, and this often includes using ICT for teaching and learning. The University of South Africa (Unisa) is the largest open distance learning (ODL) university on the African continent. It has more than 150,000 students registered each academic year, and these students are spread across South Africa as well as other countries in Africa and beyond. The university has adopted an ODL model, supported by myUnisa as an official university learning management system (LMS).

The academic staff members at Unisa predominantly use myUnisa as the instructional delivery tool of choice. In line with the ODL model, the university's admission policy is open, in the sense that it allows admission to tertiary education to students from a wide spectrum of socio-economic environments. Given South Africa's socio-economic, socio-cultural, and political history, it is beneficial to understand the factors that influence the usage of myUnisa, from the students' perspective. In this study the researchers will define the research problem, conduct a literature survey to develop a theoretical framework as the basis of inquiry, and explain the research methodology used to obtain the results, which will lead to the conclusion of the study.

In an institution as large as the University of South Africa, a minor system glitch could have serious consequences for user perception, affecting hundreds of thousands of students. Therefore, it is important for the institution to make every effort to ensure that instructional delivery tools such as myUnisa, as part of the pedagogic system, are as effective as possible. To do this, it needs to carefully assess the effectiveness of myUnisa as the university's official LMS.

Venkatesh and Davis (2000) warn that even the best technology-based systems are useless if they are not accepted by their intended users. In the case of the myUnisa LMS, the intended users are staff members as well as students registered with Unisa. The adoption and usage rate of the LMS is a vital factor in determining the success of myUnisa, which is measured by how it benefits the students and the institution at large (Chang, Chiang, & Hopkinson, 2013). It is important to note that while myUnisa is mandatory for staff members, it is discretionary for students, in the sense that they can use the traditional learning system and still be successful in their studies.

Many factors can affect students' decision to adopt myUnisa as the LMS of choice; for example, South Africa is a developing country that experiences problems such as poor network connectivity and slow system response. In this study, factors that affect adoption and the rate of adoption are used to understand the diffusion rate of myUnisa as an innovative instructional delivery tool. We therefore investigate Unisa students' behavioural intent to use myUnisa, based on relative advantage, compatibility, complexity, and attitude.

The purpose of this study is to evaluate behavioural intent to use myUnisa, based on relative advantage, compatibility, complexity, and attitude, specifically focusing on currently registered students at Unisa, within the School of Computing, in the College of Science, Engineering and Technology.

Which factors influence the use of myUnisa by students in the School of Computing?

LMS research has been driven by the need to investigate the reasons behind the adoption of these systems and the likelihood that their intended users will use them (Straub, 2009). Past research has investigated different LMS platforms and user perceptions of them. These platforms share many similarities, referred to as generic tools, such as collaborative tools, schedulers, quiz or assessment options, forums, and communication options (Black, Beck, Dawson, Jinks, & DiPietro, 2007). LMSs differentiate themselves from one another through what Black et al. (2007) referred to as micro-detailed features, such as the capability to download lectures in audio format or to hold synchronous meetings. Feldstein (2006, as cited in Black et al., 2007) reported that although LMSs are improving their basic functions, they remain generic in their capabilities.

In recent years, social media platforms have also been used as LMSs. In their exploratory study, Wang, Woo, Quek, Yang, and Liu (2012) investigated a Facebook group as an LMS because of its social and pedagogical aspects. They found that students were satisfied with the Facebook group because LMS functions could easily be incorporated into the platform. On the other hand, the Facebook group did not support all the file formats students required, and students perceived the platform as offering less privacy and therefore as unsafe to use as an LMS.

Ssekakubo, Suleman, and Marsden (2011) investigated the causes of failure in the adoption of e-learning management systems in developing countries, using five universities in Africa as their sample. They found that the most likely causes of failure were high ICT illiteracy rates among the student population and usability issues of the learning management systems. These issues are expected to be non-factors in the present study because the population consisted of students registered for a formal qualification within the School of Computing at Unisa. By virtue of studying in the School of Computing, these students should be familiar with ICT and literate in its use.

Straub (2009) suggested that technology adoption is an intricate and evolving process with social aspects in addition to the technical aspects. The social aspects include attitude, behavioural intent, and the perceived value to the user of the LMS based on the technology acceptance model (TAM) by Davis (1989) and the diffusion of innovations theory by Rogers (1995).

Technological innovation has been a pillar of success in many organisations in the knowledge age, irrespective of organisation type. Davis (1989) introduced the technology acceptance model (TAM) to address the lack of a tool for measuring acceptance, helping both information technology vendors and information systems managers to evaluate users' behaviour towards a vendor's product. Davis (1989) argued that, in addition to the theoretical value of better measures for predicting and explaining system use, the TAM has great practical value for organisations. The TAM is underpinned by two theoretical constructs: perceived usefulness and perceived ease of use (Venkatesh and Davis, 2000).

Behavioural intent is the perceived likelihood that a person will participate in a given behaviour, and it is a major determinant of targeted behaviour (Engle et al., 2010). According to Fishbein and Ajzen (2011), the intention to perform a particular behaviour will be a good predictor of the actual behaviour if the behaviour is under the person's control. Hsu and Lin (2008) attested to the fact that intention is an appropriate predictor of an individual's behaviour. It can be used to indicate whether individual students would actually accept myUnisa as an innovative instructional tool.

In this study, the researchers wanted to measure student behavioural intent towards using myUnisa, in relation to the factors that may influence the student intention to use the system. Hsu and Lin (2008) measured the influence of attitude on behavioural intention, which was, in turn, influenced by technology acceptance factors and knowledge sharing factors. Even though myUnisa usage is discretionary, the delivery model tends to compel students to use myUnisa. Currently a large proportion of delivery strategies are based on myUnisa. It would therefore be beneficial for the researchers to understand the myUnisa rate of adoption by evaluating the student behavioural intent, based on their attitude towards using the myUnisa LMS.

Users' attitude towards a system has been said to be a determining factor in whether they will use it (Davis, 1989). In the case of the myUnisa LMS, for students to use the myUnisa online teaching tools, and to use them effectively, they ought to have a positive attitude towards the tools, perceive them as useful, and be willing to try them. This could require a big perceptual adjustment, depending on students' current perception of myUnisa's usefulness. According to the TAM as developed by Davis (1989), the perceived usefulness of a system influences user attitudes towards adopting it. Venkatesh and Davis (2000) highlighted the role of attitude in influencing the adoption of a new system, arguing that attitude could be used to determine the behavioural intention of the user. These two constructs are related to relative advantage, complexity, and compatibility, which Rogers (1995) suggested as measures of the adoption rate in the diffusion of innovation. Because myUnisa is an innovative way of delivering learning material to students, the researchers hypothesised that:

H1: The student attitude towards myUnisa positively affects their behavioural intent to use it.

Diffusion is the process by which an innovation or intervention is communicated among the members of a social system over time (Rogers, 1995, 2003). The decision to accept an innovation, and the rate at which it is adopted, are affected by the adopter's perception of the innovation (Dingfelder & Mandell, 2011). This perception is based on the most influential characteristics of an innovation: relative advantage, compatibility, complexity, trialability, and observability (Rogers, 2002; Bennett & Bennett, 2003; Sanson-Fisher, 2004). This study focuses on relative advantage, compatibility, and complexity, as these have been widely studied and have been noted to have the most consistent significant relationship to innovation adoption (Tornatzky & Klein, 1982, as cited in Dingfelder & Mandell, 2011).

In their study of the factors that influence the adoption of ICT by recent refugees, Kabbar and Crump (2006) suggested that the adoption of ICT could help some sections of society not to feel alienated from the new digital environment, as they realise that the new digital environment is better than the traditional environment. Zhang, Wen, Li, Fu, and Cui (2010) argued that innovation could be realised in terms of economics, social prestige, and satisfaction, which would entice an individual to explore a new experience. Relative advantage is a perceived advantage, and it does not matter whether the user realises the objective advantage or not (Rogers, 2002). The adoption rate as it relates to relative advantage is not investigated in this study as it is useful only where the system use is discretionary, whereas the use of myUnisa is compulsory for students in the School of Computing at Unisa. For the purposes of this study, relative advantage refers to the extent to which the students believe that the LMS would be better than the traditional learning mechanisms, as it is the first online LMS to be implemented by the institution. The students would also adopt innovation if they perceived it to add value to their lives, in terms of economic value and social prestige (Duan, He, Feng, Li, & Fu, 2010). Hence, the following hypothesis is proposed:

H2: The students' perception of myUnisa's relative advantage is positively correlated with their attitudes towards using myUnisa.

According to Rogers (1995), an idea that is perceived as incompatible with the existing values and past experiences of the individual deciding whether to adopt it will not be adopted as readily as one that is compatible with them. Duan et al. (2010) confirmed that compatibility has a significant, positive effect on the adoption of an innovative learning system. Compatibility could be tricky for myUnisa sponsors because it depends on personal characteristics such as life experience, lifestyle, and individuals' current circumstances (Pacharapha & Ractham, 2012; Duan et al., 2010). It is advisable for myUnisa sponsors to study the intended users and to design the system in a way that is compatible with students' values and past experiences.

In turn, Unisa students would be more likely to adopt the innovation if it is perceived as being compatible with their traditional learning experiences and norms. Even though the LMS is marketed as an innovation that will change the face of education and learning, it is important to ensure that the change does not contradict the adopters' values and acceptable norms (Adhikari, 2005). Therefore, the following hypothesis was proposed:

H3: MyUnisa's compatibility with traditional learning practices (i.e., its ability to allow uploading of handwritten or scanned documents) is positively correlated with the student attitude towards using it.

Complexity refers to the degree to which the users or adopters of an innovation find it less challenging and easy to use (Bennett & Bennett, 2003; Rogers, 2002; Sanson-Fisher, 2004). It is related to the ease-of-use concept, which forms a construct of the technology acceptance model (TAM; Davis, 1989), although complexity is oriented more towards explaining the rate of adoption. Complexity is a cause for concern in many areas of study, where research projects have emanated from a desire to solve complex issues.

The complexity of an innovative system could be problematic if students must first put effort into learning how to use the LMS and then learn the skills taught through it. Engelbrecht (2003) affirmed that an innovation that requires learning is adopted slowly. Therefore, in the case of myUnisa, system sponsors would benefit from taking time to ensure that myUnisa is easy to use, even in disciplines that are less technology-inclined, such as those in the College of Humanities. Consequently, the researchers hypothesised as follows:

H4: The complexity of the myUnisa system is positively correlated with the students' attitude towards using myUnisa.

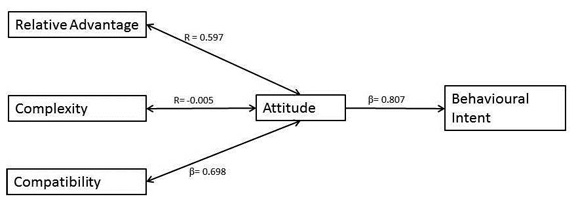

Based on the hypotheses proposed above, the ODL institutional LMS usage model in Figure 1 was compiled as the theoretical framework for this study.

Figure 1. LMS usage model in an ODL institution.

The objective of the study was to evaluate students' behavioural intention to use myUnisa, based on relative advantage, compatibility, complexity, and attitude, with a focus on currently registered Unisa students within the School of Computing, in the College of Science, Engineering and Technology. To address these objectives, the researchers conducted a survey study, an approach chosen because of the nature and objectives of the study (Creswell, 2013). A survey approach allows questionnaires or interviews to be used as the data collection instrument (de Vaus, 2013). The researchers chose a questionnaire because of the advantages stated by Olivier (2009), including the ease of distributing a questionnaire over a wide geographic area, which matters because Unisa students are located in various countries in Africa and on other continents. In addition, questionnaires are cheaper than interviews and reduce the researcher bias that arises when interviews are used to collect data (Fink, 2012; Creswell, 2013).

The researchers developed the questionnaire with a focus on the objectives of the study. The constructs and items were drawn from well-referenced existing literature and had been tested by other researchers (Duan et al., 2010), in order to ensure the quality and validity of the instrument (Denscombe, 2014). The constructs measured were relative advantage, complexity, compatibility, attitude, and behavioural intention. These constructs were adopted from widely used theories that had been tested by other researchers, such as Rogers (2003) and Zhang et al. (2010). These theories included the diffusion of innovations theory by Rogers (1995), which encompasses relative advantage, compatibility, and complexity, and the theory of reasoned action by Fishbein and Ajzen (1975), which encompasses behavioural intention and attitude.

The constructs had different numbers of items, which were used to measure the constructs. These items were drawn from the existing literature. Relative advantage and compatibility had seven items each; complexity had five; attitude and behavioural intention had three each. The researchers made sure that the constructs had a limited number of items, to avoid a long questionnaire which would be difficult for the participants to answer (Creswell, 2013). The questionnaire used a 7-point Likert scale ranging from strongly disagree (1) to strongly agree (7). The researchers chose a 7-point scale because if the scale is large, the researcher needs a smaller sample than when the scale is small (Dawes, 2008).

After the questionnaire was completed, the researchers reviewed it to ensure its quality (Denscombe, 2014); it was reviewed by three researchers who are experts in the field from which the theories were taken. The researchers then needed to decide between a paper-based and an online questionnaire. To eliminate manual data capturing and minimise research costs, they chose an online questionnaire, since online questionnaires are cheaper to distribute to Unisa students, who are spread across a large geographic area (Olivier, 2009). Once the questionnaire was ready for distribution, the researchers conducted a pilot study to ensure the validity of the instrument (Oates, 2005; Fink, 2012; Creswell, 2013; Denscombe, 2014).

The population refers to the individuals or units studied in order to draw conclusions about them (Fink, 2012). The population for this study was students studying at Unisa and registered for a formal qualification. From this population, the researchers needed to draw a sample in order to generalise about the population (Oates, 2005; de Vaus, 2013). A sample is a subset of a population that can be used to generalise results to the entire population (Creswell, 2013). The sample for the study was students registered for a formal qualification within the School of Computing, who were selected because they are required to use myUnisa. The sampling technique was purposive sampling (de Vaus, 2013), which allows researchers to specify the criteria used to select participants, such as registration for a formal qualification (Bhattacherjee, 2012). The next step was to distribute the questionnaire for data collection.

The researchers needed to validate the questionnaire if it was to address the objectives of the study (Fink, 2012; Denscombe, 2014). The first type of validity the researchers focused on was content validity. Three researchers who are experts in the theories used in this study reviewed the instrument. The questionnaire was then distributed to 20 students registered with the School of Computing at Unisa, 18 of whom participated in the pilot study and provided comments. The students were asked to specify challenges, errors, recommendations, and suggestions pertaining to the instrument, so that it could be made easier to complete. Three researchers reviewed these comments and incorporated them into the questionnaire before it was distributed for data collection, after which the researchers reviewed the questionnaire again. The researchers also needed to establish validity more broadly: validity ensures that the instrument measures the constructs it is supposed to, or claims to, measure (Creswell, 2013). Other forms of validity, such as construct validity, are discussed in the analysis section of this study. Before data collection could begin, the researchers needed to decide on the type of participant for the study (Oates, 2005; Fink, 2012).

Data collection is a vital stage of research, whose main purpose is to collect accurate data that enable the researchers to address the objectives of the research (Creswell, 2013). The researchers chose to collect data once, over a fixed period. Data were collected only once because the researchers were not going to conduct any interventions with the participants, and the study was not a case study (Fink, 2012). The questionnaire was hosted online on the Google Docs platform (https://docs.google.com/). An invitation email was sent to 350 students, and 156 students participated in the study. As recommended by Oates (2005), the email included the link to the questionnaire and set out the rights of the students as participants and the purpose of the study.
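The text gives the number of invitations and responses but not the resulting response rate. As a trivial arithmetic check (the variable names are ours), 156 responses out of 350 invitations corresponds to roughly 44.6%:

```python
# Figures reported in the text: 350 students invited, 156 participated.
invited = 350
responded = 156

# Share of invited students who completed the questionnaire, as a percentage.
response_rate = responded / invited * 100
print(f"Response rate: {response_rate:.1f}%")
```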

The researchers set out the rights of the participants in order to adhere to ethical requirements, as stated by Oates (2005) and in line with the ethics rules of the university. Ethical clearance to conduct the study at the university was applied for from the university's top management. Adhering to ethical standards is vital because ethical considerations cannot be set aside in social research (Oates, 2005). After data collection was completed, the data were exported as an Excel file and converted into an SPSS file. The researchers then cleaned and coded the data in preparation for the different types of analysis. Two types of data analysis were performed, namely descriptive statistics and inferential statistics (Creswell, 2013). Descriptive statistical analysis was used to gain a better understanding of the sample. Inferential statistical analysis was performed to address the objectives of the study (Fink, 2012). Confirmatory factor analysis was conducted to establish construct validity and reliability (de Vaus, 2013), including convergent and discriminant validity (Denscombe, 2014). Multiple regression analysis was conducted to analyse the relationships between the constructs in the proposed model (de Vaus, 2013). Further details and results of the analysis are discussed in the following sections.

The demographics of Unisa's students have changed over the past few years. The student body used to be dominated by working individuals who were furthering their studies in order to advance within their workplaces or to move to other organisations. At the time this study was conducted, it emerged that more students who had just left high school were joining the university. Statistics South Africa classifies this group as young adults.

At the time of this research, the majority of the students (72.4%) were employed and only 27.6% were unemployed (Table 1). The majority of the students who were employed were between the ages of 36 and 50 years and had only a professional certificate. It is also important to note that a majority of the students (79.5%) had an Internet connection at home. This can be attributed to the fact that one of the admission criteria for the School of Computing is having an Internet connection.

Table 1

Demographic Profile of the Respondents (N = 156)

| Characteristic | Frequency | Percent (%) |
|---|---|---|
| Age | | |
| 16–20 | 3 | 1.9 |
| 21–35 | 113 | 72.4 |
| 36–50 | 39 | 25.0 |
| 51–65 | 1 | 0.6 |
| Employment | | |
| No | 43 | 27.6 |
| Yes | 113 | 72.4 |
| Mobile access | | |
| No | 32 | 20.5 |
| Yes | 124 | 79.5 |
| Province | | |
| Eastern Cape | 7 | 4.5 |
| Free State | 5 | 3.2 |
| Gauteng | 95 | 60.9 |
| KwaZulu-Natal | 13 | 8.3 |
| Limpopo | 4 | 2.6 |
| Mpumalanga | 7 | 4.5 |
| North West | 3 | 1.9 |
| Northern Cape | 1 | 0.6 |
| Western Cape | 14 | 9.0 |
| International students | 6 | 3.8 |
| Not specified | 1 | 0.6 |
| Settlement type | | |
| Rural area | 10 | 6.4 |
| Suburban area | 96 | 61.5 |
| Township | 50 | 32.1 |
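Because each block of Table 1 is a set of mutually exclusive categories, each block should sum to N = 156, and every percentage should follow from its frequency. The sketch below performs that consistency check; the frequencies are transcribed from Table 1, while the dictionary layout and the `percentages` helper are our own illustration. Every block sums to 156 and the recomputed percentages match the table.

```python
# Frequencies transcribed from Table 1 (N = 156).
N = 156
table1 = {
    "Age": {"16–20": 3, "21–35": 113, "36–50": 39, "51–65": 1},
    "Employment": {"No": 43, "Yes": 113},
    "Mobile access": {"No": 32, "Yes": 124},
    "Province": {
        "Eastern Cape": 7, "Free State": 5, "Gauteng": 95, "KwaZulu-Natal": 13,
        "Limpopo": 4, "Mpumalanga": 7, "North West": 3, "Northern Cape": 1,
        "Western Cape": 14, "International students": 6, "Not specified": 1,
    },
    "Settlement type": {"Rural area": 10, "Suburban area": 96, "Township": 50},
}


def percentages(counts, n=N):
    """Return each category's share of n, rounded to one decimal place as in Table 1."""
    return {category: round(freq / n * 100, 1) for category, freq in counts.items()}


for block, counts in table1.items():
    # Every respondent falls into exactly one category per block.
    assert sum(counts.values()) == N, f"{block} does not sum to {N}"
    print(block, percentages(counts))
```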

To answer the research question above, a series of statistical tests was performed. This section discusses the results and presents the findings. We started by processing the dataset to ensure that the data were ready for analyses that would help achieve the research objectives. Once all the responses were collated, the researchers edited and coded them. It became apparent that there were missing values, specifically in the construct items. Because the missing values were in scale-type items, the researchers used the expectation–maximisation (EM) technique in SPSS to estimate them. EM imputation is appropriate when values are not missing systematically. Little's Missing Completely at Random (MCAR) test yielded a statistically non-significant chi-square value (p = .652), so there was no evidence against the assumption that the values were missing completely at random (Little & Rubin, 2002). This is in line with the operating assumption of the EM technique. Having coded and edited the dataset, we conducted validity and reliability tests.
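The SPSS EM routine itself is not described in the paper. As a rough illustration of what EM imputation does, the following is a minimal sketch of the textbook EM algorithm for a multivariate normal model, in the spirit of Little and Rubin (2002): the E-step replaces each missing entry with its conditional mean given the observed items in the same row, and the M-step re-estimates the mean vector and covariance matrix. It assumes NumPy; `em_impute` is a hypothetical helper name, and SPSS's implementation may differ in starting values, convergence criteria, and other details. Little's MCAR test is not sketched here.

```python
import numpy as np


def em_impute(X, max_iter=200, tol=1e-6):
    """Impute NaNs in X (rows = respondents, columns = items) by EM under a
    multivariate normal model. Returns (completed data, mean, covariance)."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    miss = np.isnan(X)

    # Start from column means and the covariance of the mean-filled data.
    mu = np.nanmean(X, axis=0)
    Xf = np.where(miss, mu, X)
    sigma = np.cov(Xf, rowvar=False, bias=True)

    for _ in range(max_iter):
        C = np.zeros((p, p))  # summed conditional covariances of the missing parts
        for i in np.flatnonzero(miss.any(axis=1)):
            m, o = miss[i], ~miss[i]
            if o.any():
                # E-step: regression coefficients of missing items on observed items.
                coef = np.linalg.solve(sigma[np.ix_(o, o)], sigma[np.ix_(o, m)]).T
                Xf[i, m] = mu[m] + coef @ (X[i, o] - mu[o])
                C[np.ix_(m, m)] += sigma[np.ix_(m, m)] - coef @ sigma[np.ix_(o, m)]
            else:
                # Nothing observed in this row: use the current mean.
                Xf[i, m] = mu[m]
                C[np.ix_(m, m)] += sigma[np.ix_(m, m)]

        # M-step: update the mean and covariance from the expected statistics.
        new_mu = Xf.mean(axis=0)
        d = Xf - new_mu
        new_sigma = (d.T @ d + C) / n

        delta = max(np.abs(new_mu - mu).max(), np.abs(new_sigma - sigma).max())
        mu, sigma = new_mu, new_sigma
        if delta < tol:
            break
    return Xf, mu, sigma
```

Observed values are never altered; only the NaN cells are filled, and the returned covariance is the maximum-likelihood estimate rather than the covariance of the filled-in data.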

In the above paragraph we have explained how we dealt with missing values, which enabled us to perform a variety of analyses that were sensitive to both sample size and missing values. In addition, content validity was tested by using a pilot study and by subjecting the instrument to experts' scrutiny, and we tested construct validity. We performed exploratory factor analysis to extract latent variables from the observed variables, thereby reducing the number of variables used for analysis. In performing exploratory factor analysis, we used the maximum likelihood extraction method and the promax with Kaiser normalization rotation method.

Table 2

KMO Measure and Bartlett's Test

| Test | Statistic | Value |
|---|---|---|
| Kaiser-Meyer-Olkin measure of sampling adequacy | | .929 |
| Bartlett's test of sphericity | Approx. Chi-square | 2657.795 |
| | df | 231 |
| | Sig. | 0.000 |

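The Kaiser-Meyer-Olkin measure of .929, above the .7 threshold, indicates that the sample from which these data were collected was adequate for factor analysis. Bartlett's test of sphericity, which tests whether the correlation matrix is an identity matrix (i.e., whether the items are uncorrelated), was statistically significant (p < .001), indicating that the items were sufficiently correlated for factor analysis. At this point the researchers were confident about sample adequacy and that, following the EM imputation, no missing values remained.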
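Both statistics in Table 2 can be computed directly from the item correlation matrix R. The sketch below uses the standard formulas: Bartlett's statistic is −[(n − 1) − (2p + 5)/6] ln|R| with p(p − 1)/2 degrees of freedom, and the overall KMO is the sum of squared off-diagonal correlations divided by that sum plus the sum of squared partial correlations. It assumes NumPy, and the function names are ours. With p = 22 items, the degrees of freedom are 22 × 21 / 2 = 231, which matches Table 2 and the 22 items listed in Table 3.

```python
import numpy as np


def bartlett_sphericity(R, n):
    """Bartlett's test that the p x p correlation matrix R (from n cases) is an
    identity matrix. Returns (approximate chi-square statistic, degrees of freedom)."""
    p = R.shape[0]
    _, logdet = np.linalg.slogdet(R)  # log|R|, numerically safer than log(det(R))
    chi_square = -((n - 1) - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return chi_square, df


def kmo(R):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy for correlation matrix R."""
    inv = np.linalg.inv(R)
    # Partial correlations: q_ij = -inv_ij / sqrt(inv_ii * inv_jj).
    d = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(d, d)
    off = ~np.eye(R.shape[0], dtype=bool)  # use off-diagonal entries only
    r2 = (R[off] ** 2).sum()
    q2 = (partial[off] ** 2).sum()
    return r2 / (r2 + q2)


print(bartlett_sphericity(np.eye(22), 156)[1])  # degrees of freedom for 22 items
```

The p value can then be obtained from the chi-square survival function, for example `scipy.stats.chi2.sf(chi_square, df)`.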

To confirm that the items grouped under each construct actually belonged together, the researchers performed an exploratory factor analysis to identify distinct factors and the items that load onto them (Table 3).

Table 3

Exploratory Factor Analysis

| Item | Behavioral intent | Relative advantage | Complexity | Compatibility | Attitude |
|---|---|---|---|---|---|
| Beh_Int_1 | 1.053 | | | | |
| Beh_Int_2 | 1.009 | | | | |
| Beh_Int_3 | .831 | | | | |
| Beh_Int_4 | .664 | | | | |
| Beh_Int_5 | .594 | | | | |
| Beh_Int_6 | .546 | | | | |
| Rel_Adv_1 | | .946 | | | |
| Rel_Adv_2 | | .834 | | | |
| Rel_Adv_3 | | .759 | | | |
| Rel_Adv_4 | | .745 | | | |
| Rel_Adv_5 | | .689 | | | |
| Rel_Adv_6 | | .664 | | | |
| Com_Ex_1 | | | .737 | | |
| Com_Ex_2 | | | .671 | | |
| Com_Ex_3 | | | .654 | | |
| Com_Ex_4 | | | .653 | | |
| Com_Ex_5 | | | .537 | | |
| Com_Bil_1 | | | | .854 | |
| Com_Bil_2 | | | | .591 | |
| Com_Bil_3 | | | | .535 | |
| Att_1 | | | | | .755 |
| Att_2 | | | | | .551 |

Note. Extraction method: Maximum Likelihood; Rotation method: Promax with Kaiser Normalization; Rotation converged in 6 iterations

The researchers retained factors with eigenvalues greater than 1 and set the factor-loading threshold at .39; all items with a loading below .39 were discarded. The lowest loading retained for further analysis was .535. Table 3 lists the coded items with their factor loadings: Beh_Int_1 to 6 load on behavioural intent, Rel_Adv_1 to 6 on relative advantage, Com_Ex_1 to 5 on complexity, Com_Bil_1 to 3 on compatibility, and Att_1 and Att_2 on attitude. The cumulative variance explained by the extracted factors is 85%.
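The two retention rules described above can be expressed in a few lines. The sketch below is our illustration, assuming NumPy: `kaiser_n_factors` counts the eigenvalues of a correlation matrix that exceed 1 (the Kaiser criterion), and the loading filter applies the .39 cut-off to the values transcribed from Table 3. Loadings above 1 (e.g., Beh_Int_1 = 1.053) can occur here because promax is an oblique rotation, whose pattern coefficients are regression weights rather than correlations.

```python
import numpy as np

# Loadings transcribed from Table 3 (each item on the factor it loads onto).
loadings = {
    "Beh_Int_1": 1.053, "Beh_Int_2": 1.009, "Beh_Int_3": .831,
    "Beh_Int_4": .664, "Beh_Int_5": .594, "Beh_Int_6": .546,
    "Rel_Adv_1": .946, "Rel_Adv_2": .834, "Rel_Adv_3": .759,
    "Rel_Adv_4": .745, "Rel_Adv_5": .689, "Rel_Adv_6": .664,
    "Com_Ex_1": .737, "Com_Ex_2": .671, "Com_Ex_3": .654,
    "Com_Ex_4": .653, "Com_Ex_5": .537,
    "Com_Bil_1": .854, "Com_Bil_2": .591, "Com_Bil_3": .535,
    "Att_1": .755, "Att_2": .551,
}
CUTOFF = .39  # loading threshold stated in the text


def kaiser_n_factors(R):
    """Number of factors to retain under the Kaiser criterion (eigenvalues of R > 1)."""
    return int((np.linalg.eigvalsh(R) > 1).sum())


# Keep items whose absolute loading reaches the cut-off.
retained = {item: v for item, v in loadings.items() if abs(v) >= CUTOFF}
lowest = min(retained, key=retained.get)
print(len(retained), lowest, retained[lowest])
```

All 22 listed items pass the cut-off, and the smallest retained loading is Com_Bil_3 at .535, as the text states.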

Equally important was testing reliability, to ensure that the instrument would yield similar results if the tests were replicated in a different but similar study. To do this, we computed Cronbach's alpha for each multi-item scale extracted by the exploratory factor analysis and subsequently named. The resulting factors were behavioural intent, relative advantage, complexity, compatibility, and attitude. Table 4 shows that the highest Cronbach's alpha is .954, for behavioural intent, and the lowest is .778, for complexity. According to Salkind (2014), a Cronbach's alpha of .6 is questionable, .7 acceptable, .8 good, and .9 excellent. By these bands, every factor in the instrument was at least acceptably reliable: complexity falls in the acceptable range, compatibility in the good range, and the remaining three factors in the excellent range.

Table 4

Cronbach's Alpha

| Factor | No. of items | Cronbach's alpha |
|---|---|---|
| Behavioral intent | 6 | .954 |
| Relative advantage | 6 | .923 |
| Complexity | 5 | .778 |
| Compatibility | 3 | .88 |
| Attitude | 2 | .927 |
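For reference, Cronbach's alpha for a scale of k items is k/(k − 1) × (1 − Σσ²ᵢ/σ²ₜ), where σ²ᵢ are the item variances and σ²ₜ is the variance of the summed scale score. The sketch below (assuming NumPy; `cronbach_alpha` and `salkind_label` are our own hypothetical helpers) computes it and classifies the Table 4 values using the Salkind (2014) bands quoted in the text, read as lower bounds.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents x k_items) array of scores on one scale."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_var = items.var(axis=0, ddof=1).sum()  # sum of individual item variances
    total_var = items.sum(axis=1).var(ddof=1)   # variance of the summed scale score
    return k / (k - 1) * (1 - item_var / total_var)


def salkind_label(alpha):
    """Label alpha with the bands quoted from Salkind (2014), read as lower bounds."""
    if alpha >= .9:
        return "excellent"
    if alpha >= .8:
        return "good"
    if alpha >= .7:
        return "acceptable"
    if alpha >= .6:
        return "questionable"
    return "below .6"


# Alphas reported in Table 4.
table4 = {"Behavioral intent": .954, "Relative advantage": .923,
          "Complexity": .778, "Compatibility": .88, "Attitude": .927}
for factor, alpha in table4.items():
    print(f"{factor}: {alpha:.3f} ({salkind_label(alpha)})")
```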

In addition to the reliability and validity statistics, we performed a correlation analysis to measure the direction and strength of association among the factors identified in Tables 3 and 4. Table 5 shows the resulting correlation matrix of r values and p values.

Table 5

Correlation Matrix

| Variable | Statistic | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 1. ATTITUDE | r | 1.000 | .698** | -.005 | .597** |
| | p | | .000 | .946 | .000 |
| | n | 156 | 156 | 156 | 156 |
| 2. COMPATIBILITY | r | .698** | 1.000 | -.031 | .647** |
| | p | .000 | | .704 | .000 |
| | n | 156 | 156 | 156 | 156 |
| 3. COMPLEXITY | r | -.005 | -.031 | 1.000 | -.008 |
| | p | .946 | .704 | | .922 |
| | n | 156 | 156 | 156 | 156 |
| 4. REL_ADVANTAGE | r | .597** | .647** | -.008 | 1.000 |
| | p | .000 | .000 | .922 | |
| | n | 156 | 156 | 156 | 156 |

Note. r = correlation coefficient; p = 2-tailed p value; n = number of individuals; ** p < .01 (2-tailed).

The r values depict the strength and direction of the associations among variables. The strongest association with the dependent variable, attitude, is that of compatibility (r = .698). This association is positive and significant (p < .001); therefore, the null hypothesis for H3 is rejected and H3 is supported. At the other end of the scale, the association between attitude and complexity is the weakest: it is slightly negative (r = -.005) and not significant (p = .946), so the null hypothesis for H4 cannot be rejected and H4 is not supported.
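The significance of each r in Table 5 corresponds to a test of the null hypothesis that the population correlation is zero, using t = r * sqrt((n - 2) / (1 - r^2)) with n - 2 degrees of freedom. The sketch below computes that t and, as a labelled simplification, approximates the two-tailed p value with the standard normal distribution instead of the t distribution, which is only reasonable for large samples such as n = 156. Function names are hypothetical; exact p values would require a t-distribution routine from a statistics package.

```python
from math import sqrt
from statistics import NormalDist


def t_for_r(r, n):
    """t statistic for H0: population correlation = 0 (df = n - 2)."""
    return r * sqrt((n - 2) / (1 - r ** 2))


def approx_p_two_tailed(r, n):
    """Two-tailed p value, approximating t(n - 2) by the standard normal.

    An approximation for large samples only, not an exact t-test p value.
    """
    t = t_for_r(r, n)
    return 2 * (1 - NormalDist().cdf(abs(t)))


if __name__ == "__main__":
    n = 156  # respondents per correlation in Table 5
    for label, r in [("compatibility", 0.698), ("relative advantage", 0.597), ("complexity", -0.005)]:
        print(f"{label}: t = {t_for_r(r, n):.2f}, approx p = {approx_p_two_tailed(r, n):.3f}")
```

For complexity the approximation gives roughly .95, close to but not identical with the .946 in Table 5, partly because r is rounded to three decimals and the normal replaces the t distribution.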

In testing H2, the results reveal that relative advantage is moderately associated with attitude (r = .597) and that the association is significant (p < .001). This is sufficient to reject the null hypothesis and support H2. Compatibility, for its part, is strongly associated with attitude (r = .698, p < .001), so here too the null hypothesis is rejected and H3 is supported.

Thus far, H2 and H3 are supported and H4 is rejected. All tested associations with attitude proved to be positive and significant, with the exception of complexity. This could mean that students' attitude towards using myUnisa is not affected by the complexity of the LMS. This observation could be because myUnisa is a mandatory system for students in the School of Computing, or because myUnisa is easy to use, so that its complexity is not a concern for them.
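The decision rule behind these hypothesis outcomes can be made explicit: the null hypothesis of no association is rejected when p falls below the significance level, and the corresponding research hypothesis is then supported. The sketch applies that rule to the correlations with attitude from Table 5 (H2 relative advantage, H3 compatibility, H4 complexity). The .05 level is an assumption, as the text does not state one; the same decisions follow at .01. Names are hypothetical.

```python
ALPHA = 0.05  # assumed significance level; the text does not state one


def decide(p_value, alpha=ALPHA):
    """Reject H0 (no association) when p < alpha; otherwise retain it."""
    if p_value < alpha:
        return "H0 rejected: hypothesis supported"
    return "H0 retained: hypothesis not supported"


# Correlations with attitude and two-tailed p values as reported in Table 5;
# p values printed there as .000 are entered as 0.0.
TABLE_5_WITH_ATTITUDE = {
    "H2 (relative advantage)": (0.597, 0.000),
    "H3 (compatibility)": (0.698, 0.000),
    "H4 (complexity)": (-0.005, 0.946),
}

if __name__ == "__main__":
    for hypothesis, (r, p) in TABLE_5_WITH_ATTITUDE.items():
        print(f"{hypothesis}: r = {r:.3f}, p = {p:.3f} -> {decide(p)}")
```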

To test the impact of the independent variables on attitude, in line with the working model presented in the theoretical framework, we applied simple linear regression to our dataset.

Figure 2. LMS usage model in an ODL institution.

Figure 2 presents a diagram of the simple linear regression results. One of the research objectives was to evaluate the impact of the independent variables on the dependent variables.

In preparation for the regression analysis, the researchers examined the correlations in Table 5, which revealed multicollinearity between compatibility and relative advantage (r = .647); this violates an assumption of multiple linear regression. Although both variables are correlated with the dependent variable, they therefore could not be used simultaneously to evaluate the impact on attitude. Complexity was excluded from the linear regression analysis because its correlation with attitude was not significant, and relative advantage was excluded because of its collinearity with compatibility.
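The collinearity judgement above rests on the pairwise correlation in Table 5. A widely used complementary diagnostic, which the authors do not report, is the variance inflation factor (VIF); when exactly two predictors are used, each predictor's VIF reduces to 1 / (1 - r^2), where r is their mutual correlation, and its tolerance is 1 - r^2. The sketch below is an illustration under that two-predictor assumption, with hypothetical function names. Cut-offs for a problematic VIF vary across sources, so none is hard-coded.

```python
def tolerance(r_between):
    """Tolerance of either predictor when exactly two predictors are used: 1 - r^2."""
    if not -1 < r_between < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return 1 - r_between ** 2


def vif_two_predictors(r_between):
    """Variance inflation factor for each of two predictors: 1 / (1 - r^2)."""
    return 1 / tolerance(r_between)


if __name__ == "__main__":
    # Correlation between compatibility and relative advantage in Table 5.
    r = 0.647
    print(f"tolerance = {tolerance(r):.3f}, VIF = {vif_two_predictors(r):.2f}")
```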

Attitude was regressed on compatibility, with which it is strongly correlated (r = .698). The standardised coefficient (β) was .698, meaning that a one-standard-deviation increase in compatibility is associated with a .698 standard deviation increase in attitude; the unstandardised regression coefficient was .716 (p < .001). These results indicate that compatibility has a positive and significant impact on attitude and could be used as a predictor of attitude.
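With a single predictor, the standardised slope β equals Pearson's r, which is why the β values reported here coincide with the corresponding correlations. The unstandardised coefficient b = β * s_y / s_x differs from β only by the ratio of the standard deviations of the outcome and the predictor, which are not reported in this section. The sketch fits an ordinary least-squares line with one predictor and returns both coefficients; the function name and example scores are hypothetical.

```python
from statistics import mean, stdev


def simple_regression(x, y):
    """Least-squares fit of y = a + b*x with a single predictor.

    Returns (b, a, beta): the unstandardised slope, the intercept, and the
    standardised slope beta = b * sd(x) / sd(y), which equals Pearson's r.
    """
    mean_x, mean_y = mean(x), mean(y)
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    b = s_xy / s_xx
    a = mean_y - b * mean_x
    beta = b * stdev(x) / stdev(y)
    return b, a, beta


if __name__ == "__main__":
    # Hypothetical scores, not the study's data.
    compatibility = [1, 2, 3, 4, 5]
    attitude = [2, 4, 5, 4, 5]
    b, a, beta = simple_regression(compatibility, attitude)
    print(f"b = {b:.3f}, a = {a:.3f}, beta = {beta:.3f}")
```

The same function applies unchanged to the regression of behavioural intent on attitude; only the input lists differ.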

Similarly, there was a strong correlation (r = .807) between students' attitude and their behavioural intent to use myUnisa. The standardised coefficient (β = .807) was also significant (p < .001). These results are represented graphically in the model in Figure 2.

This study was conducted to understand the factors that contribute to the usage of myUnisa as a learning management system at Unisa, and to evaluate the predictive strength of these factors on students' attitude towards using myUnisa. In this study, we developed a theoretical model that provides instructional designers with guidelines that could help them sharpen their design strategy. A key finding is that complexity has no significant impact on attitude towards the use of myUnisa. The researchers, who are members of the myUnisa forum, realised that as long as the LMS is compatible with the way students have always engaged with learning material, and adds value to their learning experience, students would not worry about its complexity.

The University of South Africa is currently the only open distance learning university in South Africa; lessons learnt in this study could therefore inform other universities when they adopt the ODL model for at least some of their programmes. The results could help to dispel the fear among some instructional designers in the institution that the rate of diffusion of the LMS would suffer if the system were complex. However, it is important to note that the sample consisted of students studying computer science and information systems. Further investigation that includes students registered for qualifications in the humanities, accountancy, education, economics, and management is warranted.

It is important to note that this study is meant to stimulate academic debate about the diffusion of innovative LMSs in tertiary institutions in developing countries. We started with Unisa because of its unique student profile.

This study was conducted at the only ODL university on the African continent. At the time of writing, Unisa is the only university in the country that offers distance education programmes exclusively; hence, its student profile differs from that of other universities in South Africa. The main difference is that regular Internet access is compulsory for our students. Therefore, the results of this study cannot be generalised to the whole tertiary education sector in South Africa. Nor can the results be generalised to all ODL students worldwide, because of socio-economic and other differences (Unisa is based in a developing country, whereas most ODL institutions are based in developed countries).

It is important to note that the model represented in Figure 2 does not depict cause and effect between the tested variables. Although the researchers intended to perform path analysis, this was not feasible because the sample did not meet the sample-size requirements of path analysis and structural equation modelling. The model therefore depicts the correlations and regression weights that indicate the predictive strength of the independent variables on the dependent variable. It is also worth repeating that regular access to a computer with Internet connectivity is mandatory for students who are enrolled for a qualification in the School of Computing. The results therefore cannot be generalised to the whole population of Unisa students, because for students in other schools the use of myUnisa is discretionary.

This study sought to evaluate the influence of relative advantage, compatibility, and complexity on students' attitude towards, and behavioural intentions to use, myUnisa as a learning management system of choice during their studies. The results reveal that, under current circumstances, the complexity of myUnisa does not have a significant influence on attitude, in contrast with compatibility and relative advantage. This finding is reflected in the LMS usage model presented in Figure 2, which is meant to provide a guideline for ICT strategic decision makers in the university, as well as for the instructional designers who are involved in planning an instructional model that is effective and supports the strategic objectives of the university. The parties involved should invest money and time in ensuring that the innovative learning model is compatible with the kinds of systems that are familiar to students.

This study confirms the importance of relative advantage and compatibility in improving the diffusion rate of myUnisa in the School of Computing. The results reveal that both relative advantage and compatibility have a significant impact on students' attitude towards the use of the LMS, whereas complexity has an insignificant impact. As mentioned in the previous section, this could be because myUnisa is a mandatory system for students of information systems and computer science, who are already familiar with complex computer programs and are even involved in software development. Instructional designers should therefore take care during the design process to introduce features that are compatible with the social media features that students use on a regular basis. This also implies a need to dedicate resources to promoting the benefits of using the innovative LMS over traditional types of instructional engagement.

Adhikari, A. (2005). Technology inclination of Indian consumers' and organizations' strategy: A research perspective. The ICFAI Journal of Service Marketing, 101(1), 26–33.

Bennett, J., & Bennett, L. (2003). A review of factors that influence the diffusion of innovation when structuring a faculty training program. The Internet and Higher Education, 6(1), 53–63.

Bhattacherjee, A. (2012). Social science research: Principles, methods and practices. Retrieved from http://scholarcommons.usf.edu/oa_textbooks/3

Black, E. W., Beck, D., Dawson, K., Jinks, S., & DiPietro, M. (2007). Considering implementation and use in the adoption of an LMS in online and blended learning environments. TechTrends, 51(2), 35–53.

Chang, N., Chiang, C., & Hopkinson, A. (2013). A study of user acceptance using Google Software as a Service (SaaS). In International conference on computer, networks and communication engineering (ICCNCE 2013). Atlantis Press. doi: 10.2991/icence.2013.18

Creswell, J. W. (2013). Research design: Qualitative, quantitative, and mixed methods approaches. London, UK: SAGE.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340.

Dawes, J. G. (2008). Do data characteristics change according to the number of scale points used? An experiment using 5 point, 7 point and 10 point scales. International Journal of Market Research, 51(1).

Denscombe, M. (2014). The good research guide: For small-scale social research projects. Maidenhead, Berkshire: McGraw-Hill Education (UK).

de Vaus, D. (2013). Surveys in social research. London, UK: Routledge.

Dingfelder, H. E., & Mandell, D. S. (2011). Bridging the research-to-practice gap in autism intervention: An application of diffusion of innovation theory. Journal of Autism and Developmental Disorders, 41(5), 597–609.

Duan, Y., He, Q., Feng, W., Li, D., & Fu, Z. (2010). A study on e-learning take-up intention from an innovation adoption perspective: A case in China. Computers & Education, 55(1), 237–246.

Engelbrecht, E. (2003). E-learning – from hype to reality. Progressio, 25(1), 20.

Engle, R. L., Dimitriadi, N., Gavidia, J. V., Schlaegel, C., Delanoe, S., Alvarado, I., ... Wolff, B. (2010). Entrepreneurial intent: A twelve-country evaluation of Ajzen's model of planned behavior. International Journal of Entrepreneurial Behaviour & Research, 16(1), 35–57.


Fink, A. (2012). How to conduct surveys: A step-by-step guide. Los Angeles, CA: SAGE.

Fishbein, M., & Ajzen, I. (1975). Belief, attitude, intention, and behavior: An introduction to theory and research. Reading, MA: Addison-Wesley.

Fishbein, M., & Ajzen, I. (2011). Predicting and changing behavior: The reasoned action approach. New York, NY: Taylor & Francis.

Hsu, C. L., & Lin, J. C. C. (2008). Acceptance of blog usage: The roles of technology acceptance, social influence and knowledge sharing motivation. Information & Management, 45(1), 65–74.

Kabbar, E. F., & Crump, B. J. (2006). The factors that influence adoption of ICTs by recent refugee immigrants to New Zealand. Informing Science: International Journal of an Emerging Transdiscipline, 9, 111–121.

Little, R. J. A., & Rubin, D. B. (2002). Statistical analysis with missing data. Hoboken, NJ: John Wiley & Sons.

Oates, B. J. (2005). Researching information systems and computing. London, UK: SAGE.

Olivier, M. S. (2009). Information technology research: A practical guide for computer science and informatics (3rd ed.). Pretoria, South Africa: Van Schaik.

Pacharapha, T., & Ractham, V. V. (2012). Knowledge acquisition: The roles of perceived value of knowledge content and source. Journal of Knowledge Management, 16(5), 724–739.

Rogers, E. M. (1995). Diffusion of innovations (4th ed.). New York, NY: Free Press.

Rogers, E. M. (2002). Diffusion of preventive innovations. Addictive Behaviors, 27(6), 989–993.

Salkind, N. J. (2014). Statistics for people who think they hate statistics (5th ed.). London, UK: SAGE.

Sanson-Fisher, R. W. (2004). Diffusion of innovation theory for clinical change. Medical Journal of Australia, 180(6), 55–56.

Ssekakubo, G., Suleman, H., & Marsden, G. (2011). Issues of adoption: Have e-learning management systems fulfilled their potential in developing countries? In Proceedings of the South African Institute of Computer Scientists and Information Technologists conference on knowledge, innovation and leadership in a diverse, multidisciplinary environment (pp. 231–238). New York, NY, USA: ACM.

Straub, E. T. (2009). Understanding technology adoption: Theory and future directions for informal learning. Review of Educational Research, 79(2), 625–649.

Venkatesh, V., & Davis, F. D. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2), 186–204.

Wang, Q., Woo, H. L., Quek, C. L., Yang, Y., & Liu, M. (2012). Using the Facebook group as a learning management system: An exploratory study. British Journal of Educational Technology, 43(3), 428–438.

Zhang, L., Wen, H., Li, D., Fu, Z., & Cui, S. (2010). E-learning adoption intention and its key influence factors based on innovation adoption theory. Mathematical and Computer Modelling, 51(11), 1428–1432.

Diffusion of Innovations Approach to the Evaluation of Learning Management System Usage in an Open Distance Learning Institution by Peter Mkhize, Samuel Mtsweni, and Portia Buthelezi is licensed under a Creative Commons Attribution 4.0 International License.