Patrick R. Lowenthal¹ and Charles B. Hodges²

¹Boise State University, ²Georgia Southern University

Volume 16, Number 5

The concept of the massive open online course (MOOC) is not new, but high-profile initiatives have moved MOOCs to the forefront of higher education news over the past few years. Members of institutions of higher education have mixed feelings about MOOCs, ranging from those who want to offer college credit for the successful completion of MOOCs to those who fear MOOCs are the end of the university as we know it. We set out to investigate the quality of MOOCs by using the Quality Matters quality control framework. In this article, we present the results of our inquiry, with a specific focus on the implications of the results for the day-to-day practice of designing online courses.

Keywords: Massive Open Online Courses, MOOCs, Online Learning, Course Quality, Instructional Design, Quality Matters, Quality Assurance

During the past few years, massive open online courses (MOOCs) have become one of the most talked about trends in higher education (e.g., EDUCAUSE, 2012; Markoff, 2011; Rushkoff, 2013). In fact, 2012 was described as the year of the MOOC (Pappano, 2012). At its simplest, a MOOC is a large, open, online course. In practice, however, MOOCs differ in many ways. For instance, MOOCs vary in size and in their degree of openness. MOOCs can range from a few hundred students (e.g., Downes, 2011) to thousands (e.g., Markoff, 2011). Some MOOCs are completely open, whereas others limit enrollments (Fain, 2013). Most MOOCs are not offered for college credit; however, there is a recent trend toward offering college credit for the successful completion of certain MOOCs (Coursera, 2013; Kolowich, 2013). Finally, while some MOOCs intentionally place instructors, often famous ones, at the center of the learning experience (sometimes called xMOOCs), other MOOCs focus more on the learners and the connections they can make with others (sometimes called cMOOCs; see Kilgore & Lowenthal, 2014). Despite this diversity, one constant across nearly every MOOC appears to be low completion rates: while hundreds or thousands might sign up for a MOOC, fewer than 10% tend to complete it (Jordan, 2014). Yet despite these reports of low completion rates (Pretz, 2014; Rosen, 2012; Yang, Sinha, Adamson, & Rose, 2013), which some argue are not necessarily a bad thing and others point out are a misleading metric to begin with (Carey, 2013; DeBoer, Ho, Stump, & Breslow, 2014; Reich, 2014), the fascination with MOOCs persists. The alluring promise of MOOCs, and what keeps people interested in them, is their ability to offer free or low-cost education to anyone, anytime, anywhere, and on a massive scale (Delbanco, 2013; Jordan, 2014; Yang et al., 2013). Thus, companies like Coursera, edX, and Udacity are striving to make MOOCs a household name and, ultimately, to challenge how and where people learn.

Many people, however, remain skeptical of MOOCs (Carr, 2012; Delbanco, 2013; Mazoue, 2013; Sharma, 2013). Among other things, they question whether meaningful and effective educational experiences are even possible in large-enrollment courses (see Glass & Smith, 1979; Lederman & Barwick, 2007; Mulryan-Kyne, 2010), let alone in completely online courses with massive enrollments (see Leddy, 2013; Mazoue, 2013; Rees, 2013). Therefore, if MOOCs are going to persist and become more than a passing fad, they must be shown to meet some of the same quality standards that traditional online courses are expected to meet. The problem, though, is that very little research has investigated the instructional quality of MOOCs. To address this gap, we decided to investigate the design of MOOCs as judged by an accepted online course quality framework. We began this study with the assumption that MOOCs, just like formal online courses, are not inherently good or bad. Further, there are likely some things (both good and bad) that members of the academy can learn from analyzing MOOCs. In this article, we report the results of using the Quality Matters framework, a widely adopted approach to assessing the quality of online course design (Quality Matters, 2011; Shattuck, 2007), to evaluate the quality of six randomly selected MOOCs. We conclude with a discussion of the implications of this research for practice.

Online courses differ from face-to-face courses in certain ways (Inglis, 2005). For instance, the instructional content and instructional strategies in online courses can be designed and developed before a course is ever offered. Thus, when people talk about the quality of online courses, they often differentiate between how a course is designed and how it is taught. While a bad instructor can arguably find a way to ruin a well-designed online course (e.g., by being non-responsive), a well-designed online course is generally recognized as a hallmark of online course quality. With this in mind, in the following section, we briefly summarize literature about online course quality and MOOCs to establish a way to discuss the quality of MOOCs.

Despite the increase in enrollments in online courses, many people remain skeptical of online learning (Allen, Seaman, Lederman, & Jaschik, 2012; Bidwell, 2013; Jaschik & Lederman, 2014; Parker, Lenhart, & Moore, 2011; Public Agenda, 2013; Samuels, 2013). They question whether online learning is as good as face-to-face instruction (Allen et al., 2012; McDonald, 2002) and, specifically, whether students learn as much in online courses as in face-to-face courses. This skepticism has fueled hundreds of “comparison studies” seeking to compare learning outcomes between face-to-face and online learning (Bernard et al., 2004; Meyer, 2002, 2004; Phipps & Merisotis, 1999). The majority of these studies have found no significant difference (Bernard et al., 2004; Russell, 1999). The no significant difference finding is often interpreted to mean that online courses are no worse, and no better, than more traditional face-to-face courses. Despite volumes of similar research, many people, even those who have previously taught online, still question whether students learn as much online as they do face-to-face (Jaschik & Lederman, 2014).

But for every study suggesting no significant difference, or suggesting that students might learn better online (e.g., Means, Toyama, Murphy, Bakia, & Jones, 2010), another study suggested the opposite (see Jaggars, 2011; Jaggars & Bailey, 2010; Jaggars & Xu, 2010; Xu & Jaggars, 2011). Many researchers have concluded that comparison studies like these are a waste of time because researchers cannot control for extraneous variables that may affect student achievement (Bernard et al., 2004; Lockee, Moore, & Burton, 2001; Meyer, 2004; Phipps & Merisotis, 1999), because instructors teach differently online (Palloff & Pratt, 1999; Salmon, 2000; Wiley, 2002), and because this line of inquiry typically places face-to-face instruction inappropriately as the gold standard (Duffy & Kirkley, 2004; McDonald, 2002).

Others remain skeptical of online learning simply because they believe that education is inherently a face-to-face process (Bejerano, 2008; Edmundson, 2012; Kroll, 2013). They question the ability of people to establish presence online and the quality of teacher-to-student and student-to-student interaction. There is also a related, deep fear that teachers will eventually be replaced by computers (Lytle, 2012). Still others fear that cheating and plagiarism are rampant in online courses (Gabriel, 2011; Wilson & Christopher, 2008).

This skepticism of online learning led to a number of quality assurance programs for online courses. The following are a few of the more popular programs in the United States:

iNACOL is the only quality assurance/standards framework listed above that focuses on both online teaching and online course design. While other rubrics focus on measuring the quality of online teaching, the most popular quality assurance frameworks focus on online course design rather than online teaching.

Quality Matters is one of the most popular and widely used quality assurance frameworks in the United States. It began under a grant from the U.S. Department of Education’s Fund for the Improvement of Postsecondary Education (FIPSE). Quality Matters (QM) is now an international organization focused on improving the quality of online courses at the K-12, higher education, and professional education levels. There are currently more than 800 QM subscribers (Shattuck, Zimmerman, & Adair, 2014). QM is a peer review and faculty development process centered on the following eight general standards:

Each of these general standards has a number of related, more specific sub-standards. While each subscriber could arguably use QM differently, the formal QM process involves having a course that has already been taught reviewed by three peer reviewers (who must include one master reviewer, one subject matter expert, and one external reviewer) to determine whether each standard has been met, and then revising the course to meet any standards that were not met. The process is not perfect, but it is a widely accepted model for designing quality online courses (Shattuck, 2012).

While MOOCs date back to 2008, their widespread popularity is relatively new. Thus, not surprisingly, the majority of articles written about MOOCs come from the popular press. Except for a couple of journal special issues focused on MOOCs (e.g., in the Journal of Online Learning and Teaching and Distance Education), relatively little formal research has been conducted on MOOCs compared to all of the hype surrounding them. Most of the research that has been conducted has focused on enrollments or some element of the learner experience in MOOCs. Very little research has focused specifically on the curriculum or instructional design of MOOCs (Margaryan, Bianco, & Littlejohn, 2015), which is exactly the focus of the present study.

Our experience indicates that there are two basic types of MOOC critics. The first type consists of those who are critical of any type of online learning. These people generally focus on the problems with online learning addressed earlier (e.g., lack of presence, fear of cheating, fear of computers replacing teachers). The second type consists of people who generally support online education but remain critical, or at least skeptical, of MOOCs. These critics tend to question the pedagogical approach of MOOCs and, in turn, question spending an institution’s limited resources on offering MOOCs, as well as any attempt to offer college credit for completing what they perceive as pedagogically inferior online courses. They see MOOCs as a step back in time because MOOCs simply focus on the transmission of content (Anderson, 2013; Conole, 2013). Anderson (2013) pointed out, though, that this type of pedagogy is still the norm in many classrooms (online or not) at all levels of education. Further, there are many different types of MOOCs (Adair, Alman, Budzick, Grisham, Mancini, & Thackaberry, 2014). For instance, Anderson (2013) argued that despite attempts to treat all MOOCs as the same, “different MOOCs [as in any form of educational delivery or organization] employ different pedagogies” (p. 4).

Faced with the hype surrounding MOOCs, the trend toward offering college credit for MOOC completion, and general skepticism of online learning, and of MOOCs in particular, we decided to investigate the quality of MOOCs. It seemed logical to apply a commonly adopted quality control framework like QM to investigate the quality of MOOCs (at least in terms of course design). If colleges and universities are going to offer credit for the completion of some of these courses, then MOOCs should ideally score well on QM reviews.

Many of the high-profile MOOC initiatives involve leading MOOC providers like Coursera, edX, and Udacity. We therefore began our inquiry interested in the quality of so-called xMOOCs from these three companies. To narrow the scope and compare similar MOOCs across providers, we looked only at science, technology, engineering, and mathematics (STEM) MOOCs. We then went to the Coursera, edX, and Udacity websites and randomly selected six STEM-focused MOOCs, two from each provider, for analysis.

The six MOOCs were analyzed using the 2011-2013 edition of the Quality Matters Rubric Standards with Assigned Point Values (Quality Matters, 2011), which essentially involves a form of content analysis by three different reviewers using a standard coding scheme. QM also has a rubric for Continuing and Professional Development that would be appropriate to use on MOOCs (Adair et al., 2014). However, we intentionally chose QM’s higher education rubric rather than the continuing and professional development rubric because of the growing number of initiatives to offer college credit for MOOC completion. In other words, if a MOOC is going to be worth college credit, it should score as well as a traditional for-credit online course.

Three trained Quality Matters peer reviewers (the first author of this article and two contracted QM master reviewers) analyzed each of the MOOCs using the Quality Matters 2011-2013 rubric. Following a standard QM review process, a standard was met if two of the three reviewers marked it as met; a course passed a review if all of the essential standards were met and an overall score of 85% or higher was achieved. The reviewers shared their evaluations and arrived at consensus. Notes generated during the reviews served as a secondary data source.
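The review rule described above combines a majority vote per standard with two pass conditions. The Python below is a minimal sketch of that logic under stated assumptions, not QM’s own tooling: the `Standard` class, the `review` function, and the sample course are hypothetical, and essential standards are assumed to be exactly those worth 3 points, following QM’s convention that essential standards carry the most points.

```python
from dataclasses import dataclass

PASS_THRESHOLD = 0.85  # share of available points needed to pass


@dataclass
class Standard:
    """One rubric sub-standard (hypothetical structure for illustration)."""
    code: str                        # e.g., "2.2"
    points: int                      # assumed: 3 = essential, 2 or 1 otherwise
    votes: tuple[bool, bool, bool]   # met / not met, one judgment per reviewer

    @property
    def met(self) -> bool:
        # Majority rule: met when at least two of the three reviewers say so.
        return sum(self.votes) >= 2

    @property
    def essential(self) -> bool:
        return self.points == 3


def review(standards: list[Standard]) -> tuple[float, bool]:
    """Return (fraction of points earned, whether the course passes)."""
    total = sum(s.points for s in standards)
    earned = sum(s.points for s in standards if s.met)
    score = earned / total
    all_essential_met = all(s.met for s in standards if s.essential)
    return score, all_essential_met and score >= PASS_THRESHOLD


# Hypothetical three-standard course, for illustration only.
course = [
    Standard("1.2", 3, (True, True, False)),   # met (2 of 3)
    Standard("2.2", 3, (False, True, False)),  # not met (1 of 3)
    Standard("6.5", 1, (True, True, True)),    # met (3 of 3)
]
score, passed = review(course)
print(f"{score:.0%}", passed)  # 57% False
```

Because the pass check requires both conditions, a course can clear the 85% threshold and still fail if a single essential standard is unmet.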

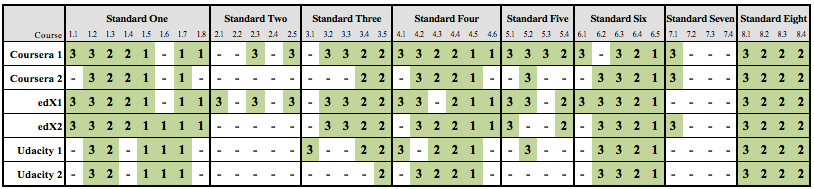

None of the six MOOCs passed the initial Quality Matters review (see Table 1). However, one Coursera MOOC and one edX MOOC scored very well in terms of total points (82% and 83%, respectively). The Udacity courses, on the other hand, both of which were self-paced and offered year-round, performed the worst.

Table 1: Quality Matters Results

| Course | Score (% of total points) | Passed |
|---|---|---|
| Coursera 1 | 82% | No |
| Coursera 2 | 51% | No |
| edX 1 | 83% | No |
| edX 2 | 68% | No |
| Udacity 1 | 43% | No |
| Udacity 2 | 44% | No |

However, the fact that none of the six MOOCs passed a QM review does not mean that they were poorly designed. In fact, all six MOOCs met the following specific review standards (listed below under each overarching standard):

Standard 1: Course Overview and Introduction (includes 8 review standards)

1.2 Students are introduced to the purpose and structure of the course.

1.7 The self-introduction by the instructor is appropriate and available online.

Standard 3: Assessment and Measurement (includes 5 review standards)

3.1 The types of assessments selected measure the stated learning objectives and are consistent with course activities and resources.

3.5 Students have multiple opportunities to measure their own learning progress.

Standard 4: Instructional Materials (includes 6 review standards)

4.3 All resources and materials used in the course are appropriately cited.

4.4 The instructional materials are current.

4.5 The instructional materials present a variety of perspectives on the course content.

Standard 5: Learner Interaction and Engagement (includes 4 review standards)

5.2 Learning activities provide opportunities for interaction that support active learning.

Standard 6: Course Technology (includes 5 review standards)

6.2 Course tools and media support student engagement and guide the student to become an active learner.

6.3 Navigation throughout the online components of the course is logical, consistent, and efficient.

6.4 Students can readily access the technologies required in the course.

6.5 The course technologies are current.

Standard 8: Accessibility (includes 4 review standards)

8.1 The course employs accessible technologies and provides guidance on how to obtain accommodation.

8.2 The course contains equivalent alternatives to auditory and visual content.

8.3 The course design facilitates readability and minimizes distractions.

8.4 The course design accommodates the use of assistive technologies.

At the same time, though, all six MOOCs also failed to meet the following standards:

2.2 The module/unit learning objectives describe outcomes that are measurable and consistent with the course-level objectives.

7.2 Course instructions articulate or link to the institution’s accessibility policies and services.

7.3 Course instructions articulate or link to an explanation of how the institution’s academic support services and resources can help students succeed in the course and how students can access the services.

7.4 Course instructions articulate or link to an explanation of how the institution’s student support services can help students succeed and how students can access the services.

Standard 7 focuses on Learner Support; more specifically, it focuses on supporting learners who are attending institutions of higher education for college credit. It is therefore not surprising that none of these MOOCs performed well on this standard. Despite initiatives to offer college credit for MOOCs, MOOCs for the most part are not designed as for-credit college courses; rather, they are designed as professional development experiences. As such, these “courses” were never designed to support learners over time in the way that college courses are. We contend, though, that many MOOCs could easily be updated to address Standard 7 and thereby (at least in this sample) increase their overall scores and come much closer to passing a QM review. For example, with Standard 7 set aside, two of the six MOOCs (i.e., one-third of our sample) would have an overall score above 85% (see Table 2), leaving only a handful of essential standards to address to pass a QM review (see Figure 1).

Table 2: Quality Matters Results Without Standard 7

| Course | Score without Standard 7 (% of total points) |
|---|---|
| Coursera 1 | 91% |
| Coursera 2 | 48% |
| edX 1 | 92% |
| edX 2 | 52% |
| Udacity 1 | 49% |
| Udacity 2 | 72% |
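The article does not spell out how the scores in Table 2 were recomputed. One plausible reading, sketched below as an assumption, is that Standard 7’s sub-standards are dropped from both the points earned and the points available. The function name `percent_score`, the point values, and the review outcome in the example are hypothetical, chosen only to illustrate the arithmetic.

```python
from typing import Optional


def percent_score(points: dict[str, int], met: set[str],
                  exclude_prefix: Optional[str] = None) -> float:
    """Percentage of available points earned.

    Sub-standards whose code starts with exclude_prefix (e.g., "7." for
    Learner Support) are removed from both the earned and available totals.
    """
    kept = {code: pts for code, pts in points.items()
            if exclude_prefix is None or not code.startswith(exclude_prefix)}
    total = sum(kept.values())
    earned = sum(pts for code, pts in kept.items() if code in met)
    return 100 * earned / total


# Hypothetical point values and review outcome, for illustration only.
points = {"1.2": 3, "2.2": 3, "5.2": 2, "7.2": 2, "7.3": 2}
met = {"1.2", "5.2"}

print(round(percent_score(points, met), 1))        # 41.7 (all standards)
print(round(percent_score(points, met, "7."), 1))  # 62.5 (Standard 7 set aside)
```

Setting a standard aside does not always raise a score: the percentage rises when the course earned a smaller share of that standard’s points than of the remaining points, and falls when it earned a larger share.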

While the QM standards and review process are useful and widely adopted, one must remember that:

A point system is associated with the specific standards, with some standards assigned more value than others. Those assigned with the greatest number of points are considered essential standards that must all be satisfied for a course to meet overall Quality Matters standards. (Quality Matters, 2014, p. 2)

Regarding the MOOCs in this sample, none scored well on Standard 2, which focuses on learning objectives. While learning objectives are important and serve a purpose, some might argue that explicitly sharing them with learners (i.e., making them visible) is not the hallmark of a quality course. In fact, depending on a designer’s position on learning, instructional objectives may serve different purposes or may not be appropriate at all (Ertmer & Newby, 1993). Some of the small amount of research conducted on QM suggests that students and faculty view the importance of learning objectives differently, with students valuing clearly articulated objectives and faculty focusing on measurable objectives that are aligned with assessments (see Ralston-Berg, 2014; You, Hochberg, Ballard, Xiao, & Walters, 2014). The failure to meet Standard 2 is more about transparency than about whether the course was designed around clear learning objectives. For instance, a course could have been designed to meet clear and measurable learning objectives without clearly communicating those objectives (in a traditional format) to the learner. Part of the issue with QM’s heavy focus on clearly stating learning objectives is that it ignores another school of thought in instructional design, which holds that learning objectives are meant primarily to help instructional designers design instruction and should not be shared with learners, at least not in the traditional format that includes a measurable verb, condition, and criteria (see Allen, 2003; Batchelder, 2009; Bean, 2009; Moore, 2007). Further, and relatedly, more research is needed on the difference between standards worth 3 points and those worth 2 points or 1 point.
For instance, all of the learning objectives standards (Standard 2) are considered essential and are therefore worth 3 points each, whereas other important standards, such as Learner Interaction and Engagement (Standard 5), have fewer sub-standards and fewer total points. Yet quality learner activities and learner interaction are arguably even more important components of a high-quality course than clearly stated learning objectives. For example, one could easily write clear and measurable learning objectives that align with watching recorded lectures and taking multiple-choice tests. MOOCs are not known for high-quality learning activities and learner interaction, but the broader point is simply that a course can be designed to meet QM standards and still be relatively boring. Thus, perhaps more differentiation is needed between a quality course and a truly exceptional one.

Figure 1. Individual ratings per standard.

Given all of the hype surrounding MOOCs, recent efforts to offer college credit for completing MOOCs (see Adair et al., 2014), and many people’s skepticism of MOOCs, we decided to investigate the quality of massive open online courses to determine whether they can meet the same quality standards as traditional online courses. We began our inquiry assuming that MOOCs could not be as bad as many of our colleagues feared, but we were unsure how they would compare with traditional for-credit online courses. We decided to use the popular Quality Matters (QM) framework to assess their quality. While none of the MOOCs passed an informal QM review, to our surprise some of them scored very well, and with some minor revisions (which are part of the QM process), two of the MOOCs could pass a QM review and therefore be considered high-quality online courses. This suggests that MOOCs have the potential to be high-quality online courses, at least in terms of course design. In our experience, however, high-quality (designed) online courses do not simply happen on their own. Instead, they result from the intentional application of a systematic process of design and evaluation aimed at improvement over time. It is unclear at this point how much effort the major MOOC providers will spend improving their courses over time. It is also important to note that our findings differ from those of Margaryan et al. (2015), whose analysis of the instructional quality of MOOCs found that while MOOCs were well organized, their instructional design quality was low. Additional research using multiple quality assurance frameworks is therefore needed to further investigate the quality of MOOCs.

Keeping our results in perspective is important. We investigated only six MOOCs, so our results should not be generalized to all MOOCs. A larger sample might reveal that the MOOCs that scored well in our sample were anomalies; conversely, it might reveal that some MOOCs score even better on a QM review. Further, we intentionally did not review any cMOOCs. We were more interested in analyzing the MOOCs being developed by the large MOOC providers, which are the same types of MOOCs for which many are contemplating offering credit. Our inquiry was exploratory and descriptive in nature; we never intended to conduct an exhaustive review of all possible MOOCs. Instead, we were simply interested in addressing a gap in the literature about the overall quality of MOOCs and in seeing how a random selection of MOOCs performed on a process used by colleges and universities throughout the United States. With this in mind, our results suggest a few important considerations, especially in light of continued efforts to offer college credit for completing MOOCs.

First, despite people’s biases and the limited research on MOOCs and course quality (e.g., Margaryan et al., 2015; see Adair et al., 2014, for references to other unpublished research), MOOCs are not all inherently bad or poorly designed. Some MOOCs can be “quality” courses with just a few minor design changes based on the QM standards. Perhaps more importantly, MOOCs are often described as poor learning experiences because of the little-to-no teacher-student interaction, the large class sizes, and the heavy emphasis on lecture and testing that is common in these types of courses. But as mentioned earlier, this type of pedagogy is still the norm in many classrooms (online or not) at all levels of education (Anderson, 2013). Further, as heretical as it may seem, some literature suggests that teacher-student interaction is not always needed. For instance, Anderson’s Equivalency Theorem posits that “deep and meaningful learning is supported as long as one of the three forms of interaction (student-teacher; student-student; student-content) is at a high level” (Miyazoe & Anderson, 2013, Definitions and Concepts section). Based on this theorem, Miyazoe and Anderson argued that high levels of quality learning can take place in MOOCs even when certain types of interaction are limited. We are not arguing for eliminating teacher-student interaction or in favor of large online courses; we are simply pointing out that discounting the quality of courses when they mirror common practices elsewhere in colleges and universities might not be fair.

Second, not all MOOCs are designed to offer the same type of learning experience. For instance, some MOOCs are intentionally designed to be self-paced (e.g., Udacity MOOCs), allowing a learner to start at any time; others adhere to a strict schedule and are facilitated in some manner. Further, even classifying MOOCs as either xMOOCs or cMOOCs is too limiting (Conole, 2013) because there are differences even among so-called xMOOCs and among cMOOCs. These differences are rarely discussed in the larger conversation about MOOCs.

Third, MOOCs (especially those by Coursera, edX, and Udacity) often share a similar instructional approach that relies heavily on professionally produced videos, readings, and quizzes, all with minimal instructor contact. Instructional designers and faculty alike can learn a lot from highly produced MOOCs in terms of effective design and production. At the same time, this approach suggests that the Quality Matters rubric might have limitations if such simply designed courses can pass a review and be deemed “quality” courses. The rubric might focus too much on the basics (e.g., clear learning objectives) and not enough on instructional approaches for active engagement, communication, and collaboration.

Our investigation suggests, among other things, that MOOCs are an opportunity to rethink how we design and teach online courses and should be seen for what they can add to the online learning landscape rather than as a threat or a gimmick. MOOCs can also spark technological innovation; for example, some MOOC providers are pushing the boundaries of how threaded discussions and videos are used in learning management systems (e.g., with up-voted discussion posts or with their video players).

While the results from this study cannot be generalized to all MOOCs, they do provide some needed data about the nature of MOOCs. These data can help advance discussions about MOOCs beyond mere hyperbole. For instance, can MOOCs meet the same design requirements as other for-credit online courses? If not, where do they fall short? Questions such as these are important as institutions grapple with offering credit for completing MOOCs. Finally, by analyzing MOOCs, a non-traditional format of online learning, this investigation may also help inform and further evolve online quality assurance systems like Quality Matters. As Anderson (2013) explained:

Each of us, as responsible open and distance educators, is compelled to examine the affordances and challenges of MOOC development and delivery methods, critically examine their effect on public education and perhaps most importantly insure that our own educational systems are making the most effective use of these very disruptive technologies. (p. 8)

Adair, D., Alman, S. W., Budzick, D., Grishman, L. M., Mancini, M. E., & Thackaberry, S. (2014). Many shades of MOOCs. Internet Learning, 3 (1), 53-72.

Allen, I. E., Seaman, J., Lederman, D., & Jaschik, S. (2012). Conflicted: Faculty and online education. Inside Higher Ed, Babson Survey Research Group, and Quahog Research Group. Retrieved from http://www.insidehighered.com/sites/default/server_files/files/IHE-BSRG-Conflict.pdf

Allen, M. W. (2003). Michael Allen's guide to e-learning: Building interactive, fun, and effective learning programs for any company. Hoboken, NJ: John Wiley & Sons.

Anderson, T. (2013). Promise and/or peril: MOOCs and open and distance education. Commonwealth of Learning. Retrieved from http://www.ethicalforum.be/sites/default/files/MOOCsPromisePeril.pdf

Batchelder, L. (2009, October). Do learners really need learning objectives? Retrieved from http://www.bottomlineperformance.com/do-learners-really-need-learning-objectives/

Bean, C. (2009, July). Our objection to learning objectives. Retrieved from http://www.kineo.com/us/resources/top-tips/learning-strategy-and-design/our-objection-to-learning-objectives

Bejerano, A. R. (2008). Face-to-face or online instruction? Face-to-face is better. Communication Currents, 3 (3). Retrieved from https://www.natcom.org/CommCurrentsArticle.aspx?id=884

Bernard, R. M., Abrami, P. C., Lou, Y., Borokhovski, E., Wade, A., Wozney, L., Wallet, P. A., Fiset, M., & Huang, B. (2004). How does distance education compare with classroom instruction? A meta-analysis of the empirical literature. Review of Educational Research, 74 (3), 379-439.

Bidwell, A. (2013, September). Employers, students remain skeptical of online education. US News. Retrieved from http://www.usnews.com/news/articles/2013/09/20/employers-students-remain-skeptical-of-online-education

California State Faculty Association. (2013, May). Massive virtual fires engulf San Jose State University. Retrieved from http://chronicle.com/article/Document-San-Jose-StateUs/139139/

Carey, D. (2013, December). Pay no attention to supposed low MOOC completion rates. New America: EdCentral. Retrieved from http://www.edcentral.org/pay-attention-supposedly-low-mooc-completion-rates/

Carr, N. (2012, September). The crisis in higher education. MIT Technology Review. Retrieved from http://www.technologyreview.com/featuredstory/429376/the-crisis-in-higher-education/

Conole, G. (2013). MOOCs as disruptive technologies: Strategies for enhancing the learner experience and quality MOOCs. Revista de Educación a Distancia, 39. Retrieved from http://www.um.es/ead/red/39/conole.pdf

Coursera. (2013, February 7). Five courses receive college credit recommendations. Retrieved from http://blog.coursera.org/post/42486198362/five-courses-receive-college-credit-recommendations

DeBoer, J., Ho, A. D., Stump, G. S., & Breslow, L. (2014). Changing “course”: Reconceptualizing educational variables for massive open online courses. Educational Researcher, 43 (2), 74-84.

Delbanco, A. (2013, March). MOOCs of hazard. New Republic. Retrieved from http://www.newrepublic.com/article/112731/moocs-will-online-education-ruin-university-experience

Downes, S. (2011, January). ‘Connectivism’ and connective knowledge. Huffington Post. Retrieved from http://www.huffingtonpost.com/stephen-downes/connectivism-and-connecti_b_804653.html

Duffy, T. M., & Kirkley, J. R. (Eds.). (2004). Learner-centered theory and practice in distance education: Cases from higher education. Mahwah, NJ: Lawrence Erlbaum.

Edmundson, M. (2012, July). The trouble with online education. New York Times. Retrieved from http://www.nytimes.com/2012/07/20/opinion/the-trouble-with-online-education.html

EDUCAUSE. (2012). What campus leaders need to know about MOOCs: An EDUCAUSE executive briefing. Retrieved from http://net.educause.edu/ir/library/pdf/PUB4005.pdf

Ertmer, P. A., & Newby, T. J. (1993). Behaviorism, cognitivism, constructivism: Comparing critical features from an instructional design perspective. Performance Improvement Quarterly, 6 (4), 50-72. doi:10.1111/j.1937-8327.1993.tb00605.x

Fain, P. (2013, January). As California goes? Inside Higher Ed. Retrieved from http://www.insidehighered.com/news/2013/01/16/california-looks-moocs-online-push

Gabriel, T. (2011, April). More pupils are learning online, fueling debate on quality. The New York Times. Retrieved from http://www.nytimes.com/2011/04/06/education/06online.html

Glass, G. V., & Smith, M. L. (1979). Meta-analysis of research on class size and achievement. Educational Evaluation and Policy Analysis, 1 (1), 2-16.

Inglis, A. (2005). Quality improvement, quality assurance, and benchmarking: Comparing two frameworks for managing quality processes in open and distance learning. The International Review of Research in Open and Distributed Learning, 6 (1). Retrieved from http://www.irrodl.org/index.php/irrodl/article/view/221/304

Jaggars, S. S. (2011). Online learning: Does it help low-income and underprepared students? (CCRC Working Paper No. 26, Assessment of Evidence Series). New York, NY: Columbia University, Teachers College, Community College Research Center.

Jaggars, S. S., & Bailey, T. R. (2010). Effectiveness of fully online courses for college students: Response to a Department of Education meta-analysis. Retrieved from http://academiccommons.columbia.edu/download/fedora_content/download/ac:172121/CONTENT/effectiveness-online-response-meta-analysis.pdf

Jaggars, S. S., & Xu, D. (2010). Online learning in the Virginia Community College system. New York, NY: Columbia University, Teachers College, Community College Research Center.

Jaschik, S., & Lederman, D. (2014). The 2014 Inside Higher Ed Survey of Faculty Attitudes on Technology: A study by Gallup and Inside Higher Ed. Inside Higher Ed. Retrieved from http://www.insidehighered.com/download/form.php?width=500&height=550&iframe=true&title=Survey%20of%20Faculty%20Attitudes%20on%20Technology&file=IHE-FacTechSurvey2014%20final.pdf

Jordan, K. (2014). Initial trends in enrolment and completion of massive open online courses. The International Review of Research in Open and Distributed Learning, 15 (1). Retrieved from http://www.irrodl.org/index.php/irrodl/article/viewFile/1651/2813

Kilgore, W., & Lowenthal, P. R. (2015). The Human Element MOOC: An experiment in social presence. In R. D. Wright (Ed.), Student-teacher interaction in online learning environments (pp. 389-407). Hershey, PA: IGI Global.

Kolowich, S. (2013, February). American Council on Education recommends 5 MOOCs for credit. The Chronicle of Higher Education. Retrieved from http://chronicle.com/article/American-Council-on-Education/137155/

Kroll, K. (2013). Where have all the faculty gone? Inside Higher Ed. Retrieved from https://www.insidehighered.com/views/2013/05/09/faculty-who-teach-online-are-invisible-campuses-essay

Leddy, T. (2013, June). Are MOOCs good for students? Boston Review. Retrieved from http://bostonreview.net/us/are-moocs-good-students

Lederman, D., & Barwick, D. W. (2007). Does class size matter? Inside Higher Ed. Retrieved from https://www.insidehighered.com/views/2007/12/06/barwick

Lockee, B. B., Moore, M., & Burton, J. (2001). Old concerns with new distance education research. EDUCAUSE Quarterly, 2, 60-62.

Lytle, R. (2012, July). College professors fearful of online education growth. U.S. News & World Report. Retrieved from http://www.usnews.com/education/online-education/articles/2012/07/06/college-professors-fearful-of-online-education-growth

Margaryan, A., Bianco, M., & Littlejohn, A. (2015). Instructional quality of Massive Open Online Courses (MOOCs). Computers & Education, 80, 77-83.

Markoff, J. (2011). Virtual and artificial, but 58,000 want course. The New York Times. Retrieved from http://www.nytimes.com/2011/08/16/science/16stanford.html

Mazoue, J. (2013, October). Five myths about MOOCs. EDUCAUSE Review Online. Retrieved from http://www.educause.edu/ero/article/five-myths-about-moocs

McDonald, J. (2002). “As good as face-to-face” as good as it gets? Journal of Asynchronous Learning Networks, 6 (2), 10-23.

Means, B., Toyama, Y., Murphy, R., Bakia, M., & Jones, K. (2009). Evaluation of evidence-based practices in online learning: A meta-analysis and review of online learning studies. US Department of Education.

Meyer, K. A. (2002). Quality in distance education: Focus on on-line learning (ASHE-ERIC Higher Education Report). San Francisco, CA: Jossey-Bass.

Meyer, K. A. (2004). Putting the distance learning comparison study in perspective: Its role as personal journey research. Online Journal of Distance Learning Administration, 7 (1). Retrieved from http://www.westga.edu/~distance/ojdla/spring71/meyer71.pdf

Miyazoe, T., & Anderson, T. (2013). Interaction equivalency in an OER, MOOCs and informal learning era. Journal of Interactive Media in Education, 2013 (2). Retrieved from http://jime.open.ac.uk/articles/10.5334/2013-09/

Moore, C. (2007, December). Makeover: Turn objectives into motivators [Web log post]. Retrieved from http://blog.cathy-moore.com/2007/12/makeover-turn-objectives-into-motivators/

Mulryan-Kyne, C. (2010). Teaching large classes at college and university level: Challenges and opportunities. Teaching in Higher Education, 15 (2), 175-185.

Palloff, R. M., & Pratt, K. (1999). Building learning communities in cyberspace. San Francisco, CA: Jossey-Bass.

Pappano, L. (2012, November). The year of the MOOC. The New York Times. Retrieved from http://www.nytimes.com/2012/11/04/education/edlife/massive-open-online-courses-are-multiplying-at-a-rapid-pace.html

Parker, K., Lenhart, A., & Moore, K. (2011). The digital revolution and higher education: College presidents, public differ on value of online learning. Pew Internet & American Life Project. Retrieved from http://www.pewsocialtrends.org/files/2011/08/online-learning.pdf

Phipps, R., & Merisotis, J. (1999). What's the difference? A review of contemporary research on effectiveness of distance learning in higher education. Washington, DC: Institute for Higher Education Policy.

Pretz, K. (2014). Low completion rates for MOOCs. IEEE Roundup. Retrieved from http://theinstitute.ieee.org/ieee-roundup/opinions/ieee-roundup/low-completion-rates-for-moocs

Public Agenda. (2013, September). Not yet sold: What employers and community college students think about online education: A report by Public Agenda. Retrieved from http://www.publicagenda.org/files/NotYetSold_PublicAgenda_2013.pdf

Quality Matters. (2013). Higher Ed Program Rubric. Retrieved from https://www.qualitymatters.org/rubric

Quality Matters. (2014). Introduction to the Quality Matters Program. Retrieved from https://www.qualitymatters.org/sites/default/files/Introduction%20to%20the%20Quality%20Matters%20Program%20HyperlinkedFinal2014.pdf

Ralston-Berg, P. (2014). Surveying student perspectives of quality: Value of QM rubric items. Internet Learning, 3 (1), 117-126.

Rees, J. (2013, July). The MOOC racket. Slate. Retrieved from http://www.slate.com/articles/technology/future_tense/2013/07/moocs_could_be_disastrous_for_students_and_professors.html

Reich, J. (2014). MOOC completion and retention in the context of student intent. EDUCAUSE Review Online. Retrieved from http://www.educause.edu/ero/article/mooc-completion-and-retention-context-student-intent

Rosen, R. J. (2012, July). Overblown-claims-of-failure watch: How not to gauge the success of online courses. The Atlantic. Retrieved from http://www.theatlantic.com/technology/archive/2012/07/overblown-claims-of-failure-watch-how-not-to-gauge-the-success-of-online-courses/260159/

Rushkoff, D. (2013, January). Online courses need human element to educate. CNN. Retrieved from http://www.cnn.com/2013/01/15/opinion/rushkoff-moocs/index.html

Russell, T. L. (1999). The “no significant difference” phenomenon. Raleigh, NC: North Carolina State University.

Salmon, G. (2000). E-moderating: The key to teaching and learning online. London: Kogan Page.

Samuels, B. (2013). Being present. Inside Higher Ed. Retrieved from https://www.insidehighered.com/views/2013/01/24/essay-flaws-distance-education

Sharma, G. (2013, July). A MOOC delusion: Why visions to educate the world are absurd. The Chronicle of Higher Education. Retrieved from http://chronicle.com/blogs/worldwise/a-mooc-delusion-why-visions-to-educate-the-world-are-absurd/32599

Shattuck, K. (2007). Quality Matters: Collaborative program planning at a state level. Online Journal of Distance Learning Administration, 10 (3).

Shattuck, K. (2012, May). What we’re learning from Quality Matters-focused research: Research, practice, continuous improvement. Quality Matters. Retrieved from https://www.qualitymatters.org/what-were-learning-paperfinalmay-18-2012dec2012kspdf/download/What%20we're%20learning%20paper_FINAL_May%2018,%202012_Dec2012ks.pdf

Shattuck, K., Zimmerman, W. A., & Adair, D. (2014). Continuous improvement of the QM rubric and review processes: Scholarship of integration and application. Internet Learning, 3 (1), 25-34.

Wiley, D. (2002). The Disney debacle: Online instruction versus face-to-face instruction. TechTrends, 46 (6), 72.

Wilson, B. G., & Christopher, L. (2008). Hype versus reality on campus: Why e-learning isn’t likely to replace a professor any time soon. In S. Carliner & P. Shank (Eds.), The e-learning handbook: Past promises, present challenges (pp. 55-76). San Francisco, CA: Pfeiffer/Wiley.

Xu, D., & Jaggars, S. S. (2011). Online and hybrid course enrollment and performance in Washington State Community and Technical Colleges (CCRC Working Paper No. 31). Community College Research Center, Columbia University. Retrieved from http://files.eric.ed.gov/fulltext/ED517746.pdf

Yang, D., Sinha, T., Adamson, D., & Rose, C. P. (2013, December). “Turn on, tune in, drop out”: Anticipating student dropouts in massive open online courses. Proceedings from the 2013 NIPS Data-Driven Education Workshop, Lake Tahoe, NV. Retrieved from http://lytics.stanford.edu/datadriveneducation/papers/yangetal.pdf

You, J., Hochberg, S. A., Ballard, P., Xiao, M., & Walters, A. (2014). Measuring online course design: A comparative analysis. Internet Learning, 3 (1), 35-52.

© Lowenthal and Hodges