Volume 17, Number 5

Dorothy R. Queiros and M.R. (Ruth) de Villiers

University of South Africa

Online learning is a means of reaching marginalised and disadvantaged students within South Africa. Nevertheless, these students encounter obstacles in online learning. This research investigates South African students' opinions regarding online learning, culminating in a model of important connections (facets that connect students to their learning and the institution). Most participants had no prior experience with online learning. Their perceptions and the barriers they face may apply to other developing countries as well.

A cross-sequential research design was employed, using a survey among 58 fourth-year students who were studying a traditional paper-based module via open distance learning (ODL). The findings identified three groups of essential connections: first, a strong social presence (through timely feedback, interaction with facilitators, peer-to-peer contact, discussion forums, and collaborative activities); second, technological aspects (technology access, online learning self-efficacy, and computer self-efficacy); and third, tools (web sites, video clips). The study revealed low levels of computer/internet access at home, which is of concern in an ODL milieu that is moving online. Institutions moving to online learning in developing countries should pay close attention to their students' situations and perceptions, and develop a path that accommodates both disadvantaged and techno-savvy students without compromising the quality of education and learning. The article culminates in practical recommendations, based on the main findings, to help guide institutions in developing countries as they move towards online teaching and learning.

Keywords: Access to technology, connections, online learning, self-efficacy, social presence, video clips, web sites

With over 300,000 students, the University of South Africa (Unisa) is Africa's largest open distance learning (ODL) institution. This article reports on research at Unisa that investigated students' opinions and perceptions of online learning and identified the connections most essential to supporting their learning. The participants were students who had not yet experienced online learning, but were taking a course scheduled to go online. In this context, connections are facets that link students to their learning and to the institution. The perceptions and apprehensions of the participants may be relevant to students elsewhere, and should be addressed proactively.

Most current university students were born between 1980 and 1994, and are thus "netgeners" or "millennials" (Glenn, 2000). Many are techno-savvy and are competent social networkers (Mbati, 2012), but many South African students are from disadvantaged backgrounds with poor socio-economic conditions and inferior schooling. This creates a problem, as the associated low literacy levels and restricted access to computers (other than smart phones) hinder the effectiveness of online learning as a teaching medium (Bharuthram & Kies, 2012). Paradoxically, online learning is also viewed as a means of reaching such students. In addition, Bharuthram and Kies found that it is the academically strong students from privileged backgrounds who enjoy online learning and its benefits. As an ODL institution, Unisa serves both types of students. A path must therefore be negotiated that accommodates the disadvantaged student, as well as the techno-savvy, without compromising quality in teaching and learning.

The growth in online learning and its advantages and disadvantages are now discussed. Globally, online learning has become a key channel of instructional delivery in higher education institutions (Blackmon & Major, 2012; Carlson & Jesseman, 2011), driven by increased costs of conventional education, and decreased costs of storing and transmitting information electronically (Çakiroğlu, 2014). Bharuthram and Kies (2012) refer to the positive impact of e-learning in distance education. In emerging economies, online education is increasing (Çakiroğlu, 2014; Todhunter, 2013).

The advantages of online learning include timeliness, accessibility, learner-centricity, currency, cost-effectiveness, ease of tracking, collaboration and interactivity (Pollard & Hillage, 2001). For students, particular advantages are flexibility, easy access to resources, convenience of electronic communication with educators, enhancement of personal computing and internet skills, and participation and social presence (Bharuthram & Kies, 2012; Mbati, 2012).

Disadvantages include start-up costs, the need for human support, its time-consuming nature, lack of social presence and interactivity, and learner demotivation (Bharuthram & Kies, 2012; Pollard & Hillage, 2001). Recurring themes in the literature are dependence on technology (yet inadequate technical support), inadequate expertise in online tools, lack of access and connectivity, and hardware and software problems (Zhang & Walls, 2006). Several researchers note an increase in anxiety as a result of online learning (Bharuthram & Kies, 2012; Geduld, 2013; Mbati, 2012). On first exposure to online learning, barriers include inadequate access to online materials, uncertainty about how to study via this mode, and the discomfort of spending extensive periods at the computer (Lund & Volet, 1998). Bharuthram and Kies (2012), as well as Lund and Volet (1998), caution against placing modules online without understanding the issues involved in electronic teaching and learning. An awareness of how students perceive online learning and the barriers they face is thus essential.

The context of the study was a traditional paper-based year-long module titled "Advanced tourism development and ecotourism," which is presented at fourth level in Unisa's ODL environment. With a view to the module going online in 2015, exploratory research was undertaken to elicit students' opinions on online learning and associated facets.

In this cross-sequential research design, data, mainly quantitative, was collected at three points in time via online surveys among the ODL cohorts of 2011, 2012, and 2013. All these cohorts received printed study material along with supplementary activities.

The data was statistically analysed. Exploratory factor analysis was conducted, using principal axis factoring as the extraction method and promax as the rotation method, to determine whether the items in each sub-section form a meaningful factor. Due to the small sample size, the non-parametric Mann-Whitney U test (two groups) or Kruskal-Wallis test (three or more groups) was used to test for statistically significant differences. Cramér's V, a measure of statistical association, was used to test for statistically significant associations.

The research was conducted before year-end examinations. Participation was voluntary and anonymous, with students providing informed consent before proceeding. Ethical clearance was obtained from the Research Ethics Committee of Unisa's College of Economic and Management Sciences.

The aim was to:

The sample, whose composition and distribution are shown in Table 1, comprised 58 participants. Although the three sub-samples were fairly small, the response rates (45%, 53%, and 59%) are reasonable. Because of the three-cohort approach, the participants in the three sub-studies were different groups of students. The reduction in student numbers in 2013 corresponds with stricter entrance requirements.

Table 1

Three-Cohort Sample Composition

| Cohort | Learner population | Number of respondents | Response rate (%) | Contribution to combined sample (%) |
|---|---|---|---|---|
| 2011 | 51 | 23 | 45 | 40 |
| 2012 | 47 | 25 | 53 | 43 |
| 2013 | 17 | 10 | 59 | 17 |
| Totals | 115 | 58 | 50 (overall) | 100 |
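The percentages in Table 1 can be re-derived from the counts alone: response rate = respondents / cohort population, and contribution = respondents / 58. The short Python sketch below does this as a consistency check; the dictionary layout and the function names are illustrative choices, not part of the study's analysis.

```python
# Cohort population and number of respondents, as reported in Table 1.
cohorts = {2011: (51, 23), 2012: (47, 25), 2013: (17, 10)}


def table1_percentages(data):
    """Return {year: (response rate %, share of combined sample %)}, rounded to whole numbers."""
    total_respondents = sum(resp for _, resp in data.values())
    return {
        year: (round(100 * resp / pop), round(100 * resp / total_respondents))
        for year, (pop, resp) in data.items()
    }


def overall_response_rate(data):
    """Overall response rate (%) across all cohorts combined."""
    population = sum(pop for pop, _ in data.values())
    respondents = sum(resp for _, resp in data.values())
    return round(100 * respondents / population)


print(table1_percentages(cohorts))     # {2011: (45, 40), 2012: (53, 43), 2013: (59, 17)}
print(overall_response_rate(cohorts))  # 50
```

The output reproduces every percentage in Table 1, including the 45% response rate for 2011 (23 / 51).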

In general, students in ODL institutions are older than students at contact-teaching universities. Table 2 shows the ages of participants in this study. Most were born between 1980 and 1995, falling predominantly into the net generation/millennial group mentioned previously.

Table 2

Age Distribution

| Age | Number | % |
|---|---|---|
| 20-25 | 18 | 31 |
| 26-30 | 12 | 21 |
| 31+ | 18 | 31 |
| Age not stated | 10 | 17 |
| Total | 58 | 100 |

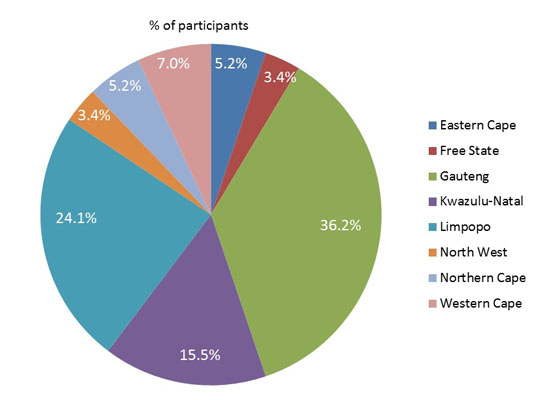

Regarding gender, 59% were male and 41% female. Figure 1 indicates that the largest share of students came from Gauteng province (36.2%), followed by Limpopo (24.1%). Only one lived outside South Africa.

Figure 1. Regional profile of participants.

The questionnaire items covered opinions on online learning (the focus of this article) as well as student perceptions of the study material (addressed in prior research by Queiros, de Villiers, van Zyl, Conradie, and van Zyl, 2015). Most questionnaire items used a 5-point Likert scale: strongly disagree (SD), disagree (D), unsure (U), agree (A), strongly agree (SA). Others had customised options, such as "I use the internet: hardly ever, once a month, once a week," etc.

The research was conducted among students taking an offline module, which was scheduled to go online imminently. Most had never experienced online learning.

To ground the results, key theoretical and conceptual issues from the literature relating to each item are presented in context rather than in a dedicated literature review.

The non-parametric Kruskal-Wallis test found no statistically significant differences between the three cohorts on any of the variables under study, except for Items 7 (p = .009) and 18 (p = .046). The three cohorts can therefore be viewed as a single composite sample. In certain cases, data is presented for each of the three years.

Freeman (1997) reported that students appreciated access to web sites and video clips as learning resources. Mayes, Luebeck, Ku, Akarasriworn, and Korkmaz (2011) support short video clips for introducing and concluding sections and for providing expertise. These should be closely related to the curriculum (Ljubojevic, Vaskovic, Stankovic, & Vaskovic, 2014). However, Carlson and Jesseman (2011) found that videos did not appear to increase learning.

Web sites are frequently used to support formal programmes (McKimm, Jollie, & Cantillon, 2003) and are viewed as vital to learners (Lund & Volet, 1998). Some pages are hyperlinked to other sites, thus making additional information available through independent, active learning (McKimm et al., 2003). Chang and Tung (2008) found that the critical facets were users' technological self-efficacy, the perceived usefulness of the web site, and ease of access.

Findings. In the module under study, students received a CD/DVD of short video clips to demonstrate theory in real-world contexts or to present expert opinions. They were also referred to web sites for active engagement, for example, using currency converters, exploring maps, or doing quizzes. Table 3 presents participants' responses to the relevant items.

Table 3

Items Relating to Video Clips and Web Sites

| Item (responses, %) | SD | D | U | A | SA | A + SA |
|---|---|---|---|---|---|---|
| 1. The video clips helped me understand the application of the information better. | 5.0 | 5.0 | 34.0 | 39.0 | 17.0 | 56.0 |
| 2. Video clips helped the material come alive for me. | 3.4 | 6.8 | 40.7 | 32.1 | 17.0 | 49.1 |
| 3. Video clips helped me remember information better. | 6.8 | 5.1 | 40.7 | 25.4 | 22.0 | 47.4 |
| 4. The references to web sites enhanced my learning. | 1.7 | 8.5 | 15.2 | 47.5 | 27.6 | 75.1 |

The results of the exploratory factor analysis confirmed the existence of a uni-dimensional factor (only one eigenvalue > 1; variance explained 63.27%). Cronbach's alpha, the internal consistency of the items on the value of video clips and web sites, measured .814, which is > 0.7, indicating that these items form a reliable scale.
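The "only one eigenvalue > 1" rule (the Kaiser criterion) and the accompanying "variance explained" figure can be illustrated with a small sketch. It assumes these figures refer to the initial eigenvalues of the items' Pearson correlation matrix, which many statistics packages report before extraction; the principal axis factoring and promax rotation used in the study are not reproduced here. The Jacobi eigenvalue routine, the function names, and the example scores are hypothetical, and the scores are not the study's data.

```python
import math


def correlation_matrix(items):
    """Pearson correlations between item columns (each list holds one item's scores)."""
    def corr(x, y):
        n = len(x)
        mx, my = sum(x) / n, sum(y) / n
        sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
        sxx = sum((a - mx) ** 2 for a in x)
        syy = sum((b - my) ** 2 for b in y)
        return sxy / math.sqrt(sxx * syy)
    return [[corr(x, y) for y in items] for x in items]


def jacobi_eigenvalues(a, tol=1e-12, max_sweeps=100):
    """Eigenvalues of a symmetric matrix via cyclic Jacobi rotations, largest first."""
    a = [list(map(float, row)) for row in a]
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the (p, q) element becomes zero.
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                # A <- A P (columns p and q), then A <- P^T A (rows p and q).
                for k in range(n):
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)


def kaiser_summary(corr):
    """(eigenvalues, number of eigenvalues > 1, % of total variance on the first one)."""
    eig = jacobi_eigenvalues(corr)
    retained = sum(1 for v in eig if v > 1)
    # The trace of a correlation matrix equals the number of items.
    return eig, retained, 100 * eig[0] / len(corr)


# Hypothetical 5-point Likert scores (NOT the study's data): four items, six respondents.
items = [
    [4, 3, 5, 2, 4, 3],
    [4, 3, 5, 1, 4, 2],
    [5, 3, 4, 2, 4, 3],
    [3, 2, 5, 2, 5, 3],
]
eigenvalues, n_retained, pct_first = kaiser_summary(correlation_matrix(items))
print(n_retained, round(pct_first, 2))
```

A single eigenvalue above 1 supports treating the items as one factor, and the first eigenvalue divided by the number of items gives the share of variance it carries.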

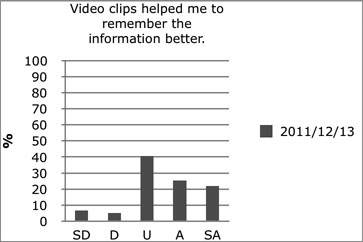

Merging "agree" and "strongly agree" in the final column of Table 3 reveals tentative positivity about video clips (47-56%), while "unsure" accounted for 34%, 40.7%, and 40.7% of responses. This notable uncertainty regarding video clips, evident in Figure 2, was surprising to the authors. The only item regarding web sites as learning tools (Item 4) was rated positively by 75.1%, suggesting that participants found web sites more helpful to their learning than videos. This is an interesting finding, as web sites require students to seek information independently, whereas videos offer a more passive experience.

In open-ended responses, students were asked to explain which learner engagement tools supplementing the traditional paper-based module were their favourites and why. Responses include:

In summary, the single item regarding web sites enhancing learning received very favourable ratings, and was supplemented by positive qualitative comments.

Figure 2. Responses on uncertainty regarding the value of video clips (Table 3, Item 3).

Chickering and Gamson (1987) asserted that course quality is influenced by student-lecturer contact, cooperation and reciprocity between students, and prompt feedback. Todhunter (2013) and Ilgaz and Gülbahar (2015) also emphasise the first two as essential in online education. Such interaction can improve openness (Todhunter, 2013); contribute to a sense of community in student-student contact and lecturer-student communication (Mayes et al., 2011; Carlson & Jesseman, 2011); support learning goal attainment (Phelan, 2012); encourage positive attitudes towards online learning (Leong, 2011); enhance engagement, student satisfaction and motivation (Geri, 2012; Mbati, 2012); and improve retention (Leong, 2011). In online contexts, this is termed social presence (Bharuthram & Kies, 2012; Cook, 2012) or learning communities, for mutual support and exchange of ideas (Phelan, 2012).

From a constructivist perspective, highly interactive settings are required to facilitate supportive and corrective feedback, and online collaborative learning (Çakiroğlu, 2014; Muuro, Wagacha, Oboko & Kihoro, 2014). Before presenting the results, the literature on the most relevant sub-components of social presence is reviewed.

Discussion forums. Social presence can be implemented via online discussion forums (ODFs) (Muuro et al., 2014). Effective, well-designed ODFs foster learner-centred instruction, support collaboration, and implement constructivism via active engagement (Palloff & Pratt, 2009; Samuels-Peretz, 2014). Because they are asynchronous, ODFs provide more thoughtful, critical, informative, and extensive interaction than synchronous communication (McGinley, Osgood, & Kenney, 2012). Students can reflect on and craft contributions before posting, thus reducing vulnerability (Carlson & Jesseman, 2011), while lurkers (those who merely observe) can correct their own misconceptions by observing interactions (Carlson & Jesseman, 2011; Fung, 2004).

Not all students participate in ODFs (Çakiroğlu, 2014). In a South African study, Bharuthram and Kies (2012) suggest that students less proficient in English dislike forums for fear of being misunderstood. Asunka's (2008) Ghanaian study reported a lack of enthusiasm for online collaborative activities, and reluctance to initiate threads. Remaining anonymous may encourage more interaction (Freeman, 1997).

Collaborative work. Collaborative tasks can increase social interaction in ODL (Geri, 2012; Mayes et al., 2011); encourage social construction of knowledge, active idea sharing, clarification of ideas, peer feedback, reasoning, and problem-solving; and reduce isolation (Stacey, 1999). In contrast, students can find group work complex, demanding, and time-consuming in distance learning. They may struggle to work collaboratively, preferring student-lecturer interaction (Asunka, 2008; Çakiroğlu, 2014).

Strong teaching presence. A strong teaching presence is essential in online learning (Tsai, 2012) and a lack thereof is a stumbling block to learners (Mayes et al., 2011). Facilitator availability and accessibility help to reduce anxiety, motivate distance learners to participate, and improve the learning experience and computer self-efficacy levels (Blackmon & Major, 2012; Hauser, Paul & Bradley, 2012; Zhang, Peng, & Hung, 2009).

Timely feedback. With regard to personal contact with lecturers, several studies identify lack of timely feedback as an impediment that causes anxiety and reduces enthusiasm and engagement (Çakiroğlu, 2014; Mbati, 2012; Zhang et al., 2009). Online feedback from teachers can enhance learning (Tsai, 2012; Wang, Shannon, & Ross, 2013), but must be timely (Çakiroğlu, 2014; Mbati, 2012; Phelan, 2012).

Findings. As stated, the module was presented offline in the years under study. Although Unisa's learning management system, myUnisa, has a built-in discussion forum, the students in this module rarely used it. Email and electronic uploading of deliverables were available, but not synchronous chat facilities or formal scaffolding for collaborative work. Responses on these aspects (Table 4) are therefore based on learners' opinions and desires.

Table 4

Items Relating to Social Presence

| Item (responses, %) | SD | D | U | A | SA | A + SA |
|---|---|---|---|---|---|---|
| Social presence (fellow students) | | | | | | |
| 5. I would like opportunities to establish personal contact with other students. | 5.1 | 10.1 | 10.2 | 42.4 | 32.2 | 74.6 |
| 6. I am keen to participate in online discussion forums. | 1.7 | 11.9 | 11.8 | 47.5 | 27.1 | 74.6 |
| 7. I would prefer not to contribute to online discussion forums and would rather just observe. | 1.7 | 17.2 | 13.8 | 48.3 | 19.0 | 67.3 |
| 8. It would help if I did activities with other students. | 6.8 | 17.0 | 16.9 | 35.6 | 23.7 | 59.3 |
| 9. I am keen to participate in online collaborative activities (e.g., doing a joint project). | 8.5 | 8.5 | 20.3 | 37.3 | 25.4 | 62.7 |
| 10. I would like activities that enable me to incorporate fellow students' contributions. | 6.8 | 13.6 | 16.9 | 42.4 | 20.3 | 62.7 |
| 11. I would like activities that enable me to build on and elaborate on fellow students' contributions. | 8.3 | 10.6 | 23.6 | 37.2 | 20.3 | 57.5 |
| Social presence (lecturer) | | | | | | |
| 12. Interacting with my lecturer will help motivate me to study. | 1.7 | 3.4 | 11.8 | 35.6 | 47.5 | 83.1 |
| 13. I would like opportunities to interact with my lecturer online. | 1.7 | 0 | 1.6 | 45.8 | 50.9 | 96.7 |
| 14. I would like activities that enable me to obtain corrective feedback from the lecturer. | 0 | 1.7 | 8.4 | 44.1 | 45.8 | 89.9 |

The results of the EFA indicated two factors for social presence: interaction with fellow students/peers and interaction with the lecturer/facilitator (variance explained 48.26% and 9.92% respectively). Cronbach's alpha (reliability) for the two factors measured .902 (interaction with students) and .688 (interaction with lecturer). Although the latter is just below 0.7, it is considered acceptable given the exploratory nature of the research (Hair, Black, Babin & Anderson, 2010).
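Cronbach's alpha, as reported for both factors, follows the standard formula alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. A minimal Python sketch of that formula is shown below; the function name and the example responses are hypothetical and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; `items` is a list of columns, one per item, same respondents in each."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's scale score
    sum_item_var = sum(variance(col) for col in items)  # sample variances throughout
    return (k / (k - 1)) * (1 - sum_item_var / variance(totals))


# Hypothetical Likert responses (NOT the study's data): three items, five respondents.
example = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
]
alpha = cronbach_alpha(example)
print(round(alpha, 3), "meets 0.7" if alpha >= 0.7 else "below 0.7")
```

Because the same variance estimator is used for items and totals, the ratio is unaffected by whether sample or population variances are chosen.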

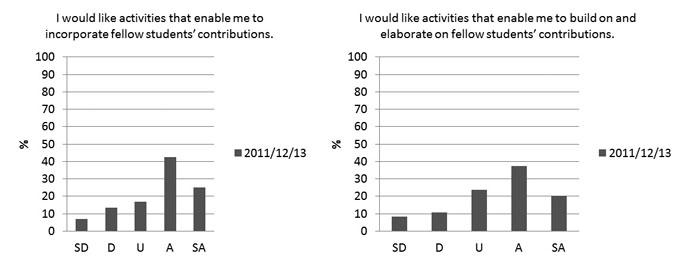

Merged "A" and "SA" ratings were positive for peer-to-peer communication (75% for both Items 5 and 6) and for joint online activities (59%, 63%, 63%, and 58% for Items 8-11), and extremely positive for online contact with the lecturer (83%, 97%, and 90% for Items 12-14). Lecturer interaction thus received the highest ratings, followed by peer contact, then collaborative student activities (around 60%). The reticence regarding joint activities and contributions to group work is probably due to lack of prior exposure in an online context. Participants were slightly more positive about incorporating others' contributions (63%) than building on them (58%) (Figure 3). This could indicate lack of confidence and inexperience, with learners more willing to use others' contributions than to be active contributors. Moreover, there was a strong inclination (67%) against active contribution to ODFs, with participants tending rather to lurk. This was the case in all three years, and indicates inexperience in interaction on such platforms, which requires attention.

The non-parametric Kruskal-Wallis test indicated a statistically significant difference at the 5% level between the three age groups (20-25, 26-30, 31+) regarding interaction with other students (asymptotic p = .048 < .05). Furthermore, the mean ranks indicate that the oldest participants tended to agree more (mean rank = 30.89) with the items on social presence between students than the two younger groups (mean ranks of 20.25 and 21.29), that is, on the value of peer-to-peer communication and collaboration with fellow students.

Figure 3. Responses indicate that students are keener to incorporate others' contributions than to build on them themselves.
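The mean ranks reported for the age groups come from ranking all participants' scores together and averaging the ranks within each group. The sketch below computes the tie-corrected Kruskal-Wallis H and those mean ranks. It assumes each participant contributes a single numeric score (for example, a hypothetical average over the peer-interaction items), and its p-value helper covers only the three-group case, where the chi-square approximation with 2 degrees of freedom reduces to exp(-H/2). All names and data are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """Ranks 1..N; tied values share the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        i = j + 1
    return ranks


def kruskal_wallis(groups):
    """Tie-corrected Kruskal-Wallis H and the mean rank of each group."""
    pooled = [v for g in groups for v in g]
    ranks = average_ranks(pooled)
    n = len(pooled)
    mean_ranks, start, weighted = [], 0, 0.0
    for g in groups:
        r = ranks[start:start + len(g)]
        start += len(g)
        mean_ranks.append(sum(r) / len(g))
        weighted += sum(r) ** 2 / len(g)
    h = 12 / (n * (n + 1)) * weighted - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1 - ties / (n ** 3 - n)), mean_ranks


def p_value_df2(h):
    """Upper-tail chi-square probability with 2 df (three groups): exp(-H / 2)."""
    return math.exp(-h / 2)


# Hypothetical mean agreement scores on the peer-interaction items, by age group.
age_20_25 = [3.2, 3.8, 4.0, 2.9, 3.5]
age_26_30 = [3.6, 3.1, 4.1, 3.3]
age_31_plus = [4.4, 4.0, 4.7, 3.9, 4.5]
h, mean_ranks = kruskal_wallis([age_20_25, age_26_30, age_31_plus])
print(round(h, 2), [round(r, 2) for r in mean_ranks], round(p_value_df2(h), 3))
```

A higher mean rank for a group means its members tend to sit higher in the pooled ordering, which is how the oldest group's 30.89 is read against 20.25 and 21.29.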

"The instructional value of any technology is only as good as the quality of its implementation and the skill and comfort levels of its users" (Mayes et al., 2011). Wang et al. (2013) define two dimensions within technological self-efficacy, both of which were addressed in this study: general computer self-efficacy and online learning self-efficacy. General computer self-efficacy is the confidence that one can perform well across a variety of computing tasks. It can be tested by measuring computer use and/or frequency of use (Hauser et al., 2012). Online learning self-efficacy relates to the skills required to use online learning tools such as discussion forums, email, and internet searches (Wang et al., 2013). Universities should consider how students perceive online study and what barriers they face (Lund & Volet, 1998). The more technologically proficient students are, the more they prefer, and the better they cope with, online learning (Lund & Volet, 1998). Wang et al. (2013) concur, reporting that students with previous online experience have more effective learning strategies and higher motivation. This in turn results in increased technology self-efficacy, course satisfaction, and higher marks.

Many novice learners come to the online environment without computing skills (Wang et al., 2013). Technological backgrounds are often highly inconsistent, causing anxiety, confusion, and loss of control (Mayes et al., 2011). Students must master digital literacy (Butcher, 2014), first by learning to use email, discussion forums, and internet searches (Bates & Khasawneh, 2004; Mbati, 2012).

South African studies by Bharuthram and Kies (2012) on online learning, and by Geduld (2013) on open distance learning, report that the main barriers students faced were limited access to libraries, computers, and the internet; high computing costs; lack of English proficiency; and poor writing skills. Lack of access to technology can leave students feeling marginalised, cause anxiety, and create a digital divide between them and students with access (Bharuthram & Kies, 2012). Monk (2001) cautions that the digital divide can break communication between student and institution, exacerbating social exclusion.

In Africa, only one in ten households has internet connectivity (van Rij, 2015). By contrast, mobile-broadband penetration in Africa grew from 2% in 2010 to almost 20% in 2014 (ITU, 2014). However, online students require more than mobile telephony to study. In Geduld's (2013) research on South African distance education students at North-West University, 67% had no internet access at home, mainly for financial reasons. Asunka's (2008) study in Ghana revealed that only 5 out of 22 students had computer and internet access at home. The findings of this study were somewhat better, though still inadequate for distance learners. Furthermore, in the Tanzanian context, Mtebe and Raisamo (2014) investigated barriers from the instructor's perspective: lack of access to computers and internet (68%), low internet bandwidth (73%), and lack of skills to use or create online educational resources (63%).

Findings. The set of responses regarding technology is presented in Table 5 and discussed below.

Table 5

Access to Technology and the Skills Required for Online Learning

| Item (responses, %) | SD | D | U | A | SA | A + SA |
|---|---|---|---|---|---|---|
| 15. I am able to use a computer and the internet with ease. | 1.7 | 1.7 | 3.4 | 22 | 71.2 | 91.2 |
| 16. When I use technological tools to help me learn, I feel engrossed in what I am doing. | 3.6 | 5.4 | 7.1 | 35.7 | 48.2 | 83.9 |

| Question 17. What do you consider to be your level of computer/technological skills? | Beginner | Competent | Proficient | Advanced |
|---|---|---|---|---|
| Responses (%) | 1.7 | 25.4 | 49.2 | 23.7 |

| Item 18. I access the internet at: | No access | Library | Internet café | Office | Home |
|---|---|---|---|---|---|
| Responses (%) | 1.7 | 15.3 | 32.2 | 57.6 | 45.8 |

| Question 19. What type of technology access do you have? | No access to a computer | Access to computer part of the time (with no internet) | Personal computer but not internet | Access to computer part of the time (with internet) | Personal computer with internet |
|---|---|---|---|---|---|
| Responses (%) | 3.4 | 5.1 | 20.3 | 25.4 | 45.8 |

| Item 20. I use the internet: | Hardly ever | Once a month | Once a week | Once a day | Several times a day |
|---|---|---|---|---|---|
| Responses (%) | 3.4 | 5.1 | 15.2 | 13.6 | 62.7 |

| Item 21. I use the internet for: | My studies | Emails | Other |
|---|---|---|---|
| Responses (%) | 84.8 | 84.8 | 59.3 |

The exploratory factor analysis yielded a Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy of .496 for the first six items in Table 5, which is below 0.5, showing that this data is unsuitable for factor analysis.
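The overall KMO compares the squared correlations between items with their squared partial correlations: KMO = sum of r_ij^2 / (sum of r_ij^2 + sum of p_ij^2) over all pairs i != j, where the partial correlations come from the inverse of the correlation matrix. Values below 0.5 are conventionally taken to mean that factor analysis is inadvisable. A pure-Python sketch under those definitions follows; the function names and the example matrix are hypothetical.

```python
import math


def invert(matrix):
    """Invert a non-singular square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pv = aug[col][col]
        aug[col] = [v / pv for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def kmo(corr):
    """Overall KMO: sum r_ij^2 / (sum r_ij^2 + sum p_ij^2), summed over i != j."""
    inv = invert(corr)
    n = len(corr)
    r2 = p2 = 0.0
    for i in range(n):
        for j in range(n):
            if i != j:
                r2 += corr[i][j] ** 2
                # Partial correlation of items i and j, controlling for all other items.
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                p2 += partial ** 2
    return r2 / (r2 + p2)


# Hypothetical correlation matrix (NOT the study's data): three items, all r = 0.5.
corr = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
value = kmo(corr)
print(round(value, 3), "adequate" if value >= 0.5 else "below 0.5")  # 0.692 adequate
```

With a value of .496, the study's items fall just short of the conventional 0.5 threshold, which is why no factor structure was reported for them.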

Computer Proficiency. Regarding skills (15), 91% of participants (A + SA) could use a computer and the internet with ease and 84% (A + SA) felt engrossed (16); 73% considered themselves proficient or advanced in computer use (17). These results are very positive.

The researchers also investigated differences between age groups in their responses to the above items using the non-parametric Kruskal-Wallis test. For the level of technological skills (17), the asymptotic significance value is .059, which is < 0.10 and hence significant at the 10% level, indicating a tendency for younger participants (mean rank = 30.14) to rate their computing skills more highly than older participants.

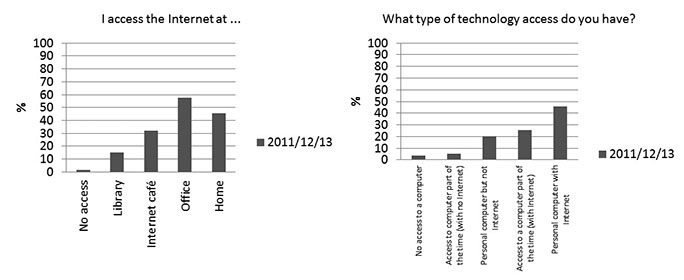

Access. The results regarding access to technology are less satisfactory (Figure 4). For Item 18, only a small proportion (1.7%) have no internet access, while the majority gain access at the office (58%), followed by home (46%). This is consistent with Question 19, where 46% have access to a personal computer with internet at home. Of all participants, 20% have a personal computer but no internet, and 25% have access to a computer (with internet) part of the time. A home-access level of 46% would be inadequate for future online learning. Furthermore, students cannot depend on spending workplace time (when they have other responsibilities) on their studies.

When testing for a difference between genders in the type of technological access (19), the difference was statistically significant at the 10% level (p = .07), with males having a higher mean score, indicating that males tend to have access to more technology options than females.

Figure 4. Responses to location and type of technological access.
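For two groups such as males and females, the methods section specifies the Mann-Whitney U test. The sketch below computes U and a two-sided p-value from the tie-corrected normal approximation, without continuity correction; statistics packages differ on these details, so their p-values may not match it exactly. It assumes the five access categories of Question 19 are coded 1-5 in the order listed in Table 5; that coding, the function names, and the sample data are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """Ranks 1..N; tied values share the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        i = j + 1
    return ranks


def mann_whitney(x, y):
    """Return (U for sample x, z, two-sided p) using the tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    n = n1 + n2
    u1 = sum(average_ranks(pooled)[:n1]) - n1 * (n1 + 1) / 2
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1))))
    z = (u1 - n1 * n2 / 2) / sigma  # no continuity correction
    return u1, z, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical Question 19 codes (1 = no access ... 5 = personal computer with internet).
male = [5, 5, 4, 5, 3, 4, 5, 2]
female = [4, 3, 5, 2, 3, 4, 1]
u, z, p = mann_whitney(male, female)
print(u, round(z, 2), round(p, 3))
```

A positive z here means the first sample (males) tends to rank higher, matching the direction described in the text; a p-value between .05 and .10 would be "significant at the 10% level".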

For Item 20, 63% used the internet several times a day. Given that participants in Item 18 most often gained access at the office, it can be assumed that a fair amount of internet usage takes place at work.

Item 21, regarding the purposes for which the internet was used, showed extensive use of the internet for studies and emails (85% in both cases). This is surprising, since it exceeds the level of internet access reported in Items 18 and 19. The difference appears equivalent to the 32% who use internet cafés.

For Item 21, the Pearson chi-square test could not be used, because more than 20% of cells had expected counts less than 5. Cramér's V, which measures the strength of association between two variables, nevertheless shows a relationship between age group and use of the internet for studies (21), with the youngest participants using it most.
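Both the "20% of cells" rule and Cramér's V rest on the expected counts under independence, E_ij = row total * column total / n. The sketch below computes the share of cells with expected counts below 5 and Cramér's V = sqrt(chi2 / (n * (min(rows, cols) - 1))). The function names and the example table are hypothetical and are not the study's data. V ranges from 0 to 1 and carries no sign, so the direction of a relationship (such as which age group uses the internet most) has to be read from the table itself.

```python
import math


def expected_counts(table):
    """Expected cell counts under independence: row total * column total / grand total."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    return [[rt * ct / n for ct in col_totals] for rt in row_totals]


def share_small_expected(table, threshold=5):
    """Fraction of cells whose expected count falls below `threshold`."""
    cells = [e for row in expected_counts(table) for e in row]
    return sum(e < threshold for e in cells) / len(cells)


def cramers_v(table):
    """Cramér's V = sqrt(chi2 / (n * (min(rows, cols) - 1))); ranges from 0 to 1."""
    expected = expected_counts(table)
    n = sum(sum(row) for row in table)
    chi2 = sum((o - e) ** 2 / e
               for obs_row, exp_row in zip(table, expected)
               for o, e in zip(obs_row, exp_row))
    k = min(len(table), len(table[0]))
    return math.sqrt(chi2 / (n * (k - 1)))


# Hypothetical counts (NOT the study's data):
# rows = age groups, columns = uses the internet for studies (yes, no).
table = [[17, 1], [10, 2], [12, 6]]
print(share_small_expected(table) > 0.2, round(cramers_v(table), 3))
```

In this made-up table every "no" cell has an expected count below 5, so half the cells fail the rule, the same situation that ruled out the chi-square test for Item 21.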

In their study on online learning at Murdoch University in Perth, Lund and Volet (1998) found that when students could attend lectures, face-to-face contact was their major reason not to study online (68%), while being "unsure of [their] ability to study successfully in this mode" was chosen by 59%. For external students who could choose between traditional distance mode and online, "restricted access or no access to a computer off campus" and being "unsure of [their] ability to study successfully in this mode" were the main reasons (66% and 44% respectively) not to study online. Conducting research among faculty in the US, Seaman (2009) found that over 70% viewed online learning as inferior to face-to-face instruction. In contrast, another US report stated that over 75% of academic leaders at public institutions found online instruction to be equal to or better than face-to-face instruction (Allen & Seaman, 2010). In Asunka's (2008) study in Ghana, no students had previous online experience, but most were keen to try. However, after the module, 44% indicated they had not found online learning very useful. In the developing world, online learning is often perceived as inferior to classroom learning (Asunka, 2008).

Findings.

Table 6

Online Learning Experiences and Opinions

| Question 22. What is your experience with online learning? | Have never taken an online course | Have taken a partially online course | Have taken a fully online course |
|---|---|---|---|
| Responses (%) | 74.6 | 10.2 | 15.3 |

| Question 23. In your opinion, when comparing an online module with a paper-based distance education module... | I will learn better in a paper-based module than in an online module. | I will learn equally well in an online module as in a paper-based module. | I will learn better in an online module than in a paper-based module. | I don't know which method of learning is better. |
|---|---|---|---|---|
| Responses (%) | 28.8 | 15.3 | 15.3 | 40.7 |

For Item 22, a high proportion (74.6%) had never taken an online course, indicating the need for orientation and training on first exposure. This inexperience could also explain the tentative reaction to video clips and collaborative activities, and heavy reliance on lecturers (discussed previously).

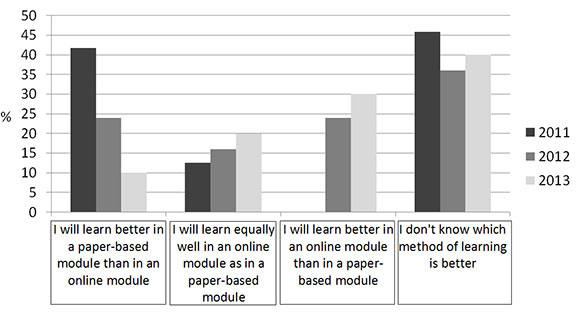

For Question 23 in Table 6, it is useful to examine the longitudinal data shown in Figure 5. Participants in 2011 rated paper-based learning above online learning, 2012 participants rated the two almost equally, and 2013 participants viewed online learning as better than paper-based learning. There was a high proportion of "unsure" responses in all three years, which is of concern given the growth of online learning. However, the rise in favourable ratings of online learning from 2011 to 2013 suggests that students are becoming accustomed to the concept.

Figure 5. Longitudinal data suggests improvement in attitudes to online learning.

The 2011 participants were less confident overall. Table 7 also presents longitudinal data, namely pertinent extracts from Tables 5 and 6 for Items 16 and 23 for the three years in question. The students in 2012 and 2013 scored higher than the 2011 students in terms of being engrossed when learning via technological tools (16), and were more in favour of online learning. The 2011 students tended more towards paper-based than online learning (23) and felt less proficient (17) than their 2012 and 2013 counterparts. Furthermore, longitudinal data for Item 7 ("I would prefer not to contribute to online discussion forums and would rather just observe") indicates that the 2011 students were highly reticent regarding active involvement in ODFs; 83% of them agreed or strongly agreed that they would prefer not to contribute but would rather just lurk.

Table 7

Longitudinal Data and Reticence of 2011 Participants

| Question/item | 2011 (%) | 2012 (%) | 2013 (%) |
|---|---|---|---|
| 16. When I use technological tools to help me learn, I feel engrossed in what I am doing. | 66.7 | 92 | 80 |
| 23. In your opinion, when comparing an online module with a paper-based distance education module, students taking an online module will: | | | |
| learn better in an online module than in a paper-based module. | 0 | 24 | 30 |
| learn better in a paper-based module than in an online module. | 41.7 | 24 | 10 |

The previous decade in ODL has been likened to a battlefield painting in which one looks "…to see who has died, which among the wounded can be given help, while those who walk away wonder if the world has really changed" (Tait, 2003, p. 1). This dramatic image highlights two groups of students, neither doing particularly well: those who could not cope and dropped out, and those still struggling (Geduld, 2013). Should struggling be the status quo? Despite constraints in the South African context, online learning has great potential to provide access to quality higher education and can be an excellent solution (Asunka, 2008). However, the concerns of students and the obstacles they experience must be thoroughly addressed. As Brookfield (1995) argues, "The most fundamental meta-criterion for judging whether or not good teaching is happening is the extent to which teachers deliberately... try to get inside students' heads and see... learning from their point of view" (p. 35).

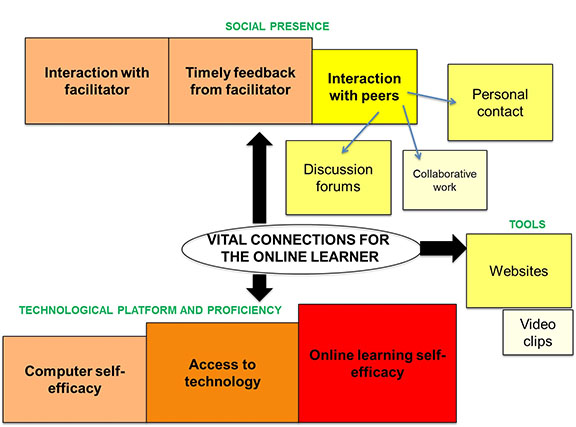

In an attempt to do this, the authors used the statistics emerging from participants' perceptions and opinions to determine the connections that link students to their learning and the institution, and the relative importance of each. The model in Figure 6 is the authors' representation of these connections. The size and shading of the blocks indicate the ranked importance of each major connection, with larger, darker blocks indicating greater importance.

In support of this model, the authors make recommendations and reinforce them with references to previous research. The groups of connections are discussed in descending order of importance to the learner, and the discussion should be read alongside Figure 6.

Since South African studies cited herein, such as Bharuthram and Kies (2012) and Geduld (2013), as well as Asunka's (2008) study in Ghana and Mtebe and Raisamo's (2014) study in Tanzania, concur with our results, the authors propose that the following recommendations are relevant to other developing countries too. The interpretation that follows may also transfer to disadvantaged students in developed countries.

Figure 6. Model of vital connections for the online learner.

The first group of connections, technological aspects, shows that "online learning self-efficacy," "access to technology," and "computer self-efficacy" are vital connections (the first two being the most important) and areas in which learners seek support. ODL institutions need to be clear about the purpose technology serves: online learning should be designed around learners' needs and obstacles, not merely as a cost-saving mechanism. Carlson and Jesseman (2011) highlight the tension between rapidly offering online options and planning carefully to ensure that those options deliver optimal learning experiences. In developing countries, course designers and ODL institutions should note that the newest technology is not necessarily optimal; they should rather consider a critically discerning hybrid of appropriate, user-friendly, and accessible technology combined with media such as print and radio.

To facilitate these three connections, relevant support is essential. Although integrating e-learning into curricula may be beneficial, some students find it challenging and require additional support (Bharuthram & Kies, 2012). Students and lecturers alike should be trained in technology usage and the skills needed for online learning, with particular attention to first exposures. Training reduces anxiety and increases technological self-efficacy (Bates & Khasawneh, 2004), which in turn increases motivation to study online (Wang et al., 2013). This positive feedback loop identified by Wang et al. offers hope to struggling students: the more online courses students take, the more they internalise effective learning strategies and the more motivated they become. This leads to greater technological self-efficacy and satisfaction, which in turn improve final grades. Several authors suggest introductions to online courses that address the technical strategies required for each module, for example, contributing to discussion forums, sending and receiving emails, downloading and uploading documents, conducting internet searches, and using online databases. These introductions can be delivered via tutorial letters, videos, and activities. Scaffolding learners' self-regulatory skills is preferable to throwing them in at the deep end (McMahon & Oliver, 2001). Facilitators can also design assessments and tasks that encourage technology usage (Wang et al., 2013), such as mandatory blogs, discussion forum postings, and/or collaborative online work.

Mayes et al. (2011) advocate user-based surveys at an early stage to ascertain learners' unique characteristics and circumstances, including prior online experience and learning preferences. A site could also be provided where students report problems and work through difficulties together; students regularly assist each other by sharing how they resolved similar issues (Mayes et al., 2011; Stacey, 1999).

The second group of connections to feature strongly comprises "interaction with facilitator" and "timely feedback from facilitator." A strong teacher presence is therefore vital in online learning, perhaps more so in developing countries. As the driver of the learning experience, the facilitator should ensure acquisition of technological skills, stimulate constructivist learning, nurture the online learning community, encourage and facilitate discourse, and provide prompt feedback and assessment (Butcher, 2014; Mbati, 2012). While learners hold some responsibility for creating social presence, most of it resides with the facilitator (Blackmom & Major, 2012), so facilitators should be trained for this role. McMahon and Oliver (2001) describe teacher-free online learning environments as impoverished in learning support.

"Interaction with peers," through "personal contact" and "discussion forums," carries equal weight in the model, which alerts facilitators to the importance of fostering the right type of interaction. Many learners who are inexperienced in online learning and interaction will require orientation and training. Cook (2012) advises considering the group profile and creating a social presence that builds and supports learning while establishing a sense of belonging. Technological tools and activities can engage learners with content, peers, and the facilitator. Mayes et al. (2011) suggest building social tools into the module, for example, a space where learners can introduce themselves and post their photos, and a "lounge" where they can chat about non-academic issues. Students could also critique the community-building strategies and suggest improvements.

Reluctance to participate in collaborative work and discussion forums emerged in this study, as it did in other studies from developing countries, such as Asunka (2008) and Bharuthram and Kies (2012), and therefore requires attention. Freeman (1997) proposes systems in which students can remain anonymous and new online learners are guided in participation techniques. Interaction could start with simple, scaffolded tasks and progress to more complex ones. Focused questions requiring short answers are likely to elicit more responses, and discussions should be guided by a facilitator (Fung, 2004; Mayes et al., 2011).

For African students, learning in support groups is a cultural practice; without such groups, students tend to experience isolation and frustration (Geduld, 2013). Lecturers could create umbrella discussion forums under which students' local face-to-face study groups can operate.

The third group of connections relates to learning tools. A wide range of technological tools exists, of which only the two most relevant to this module were investigated, namely web sites and video clips; participants were strongly positive about the former. Other possibilities include chat rooms, blogs, wikis, instant messaging, PowerPoint presentations, podcasts, live lectures, current events, assessments with feedback, synchronous activities to enhance teaching and learning, and m-learning (mobile learning) apps for tablets and smartphones (Cook, 2012; Mayes et al., 2011; Mbati, 2012). Tools should be selected with discernment to avoid overloading the learner.

This exploratory study used a cross-sequential survey design to investigate opinions on various facets of online learning, mainly among participants who had no experience of it. In developing countries, awareness of students' opinions, concerns, and the barriers they face is vital as online learning grows and extends to a diverse range of learners: those with less access and fewer advantages, and those with more.

The authors identified the facets participants most needed and consolidated them as ranked connections. This culminated in a model that visually presents the interrelationships and relative importance of technological aspects, interaction with the facilitator, interaction with peers, and learning tools.

It is likely that these connections apply to other learners too, particularly in developing countries. Institutions going online in these countries should note students' situations and perceptions, and negotiate a path that accommodates both disadvantaged and advantaged students without compromising quality. Tools and delivery methods should be carefully selected, paying close attention to the needs of the different types of students being served. This awareness can lead to strategies that better prepare both lecturers and students for online learning and reduce barriers to technology and access.

Allen, I.E., & Seaman, J. (2010). Class differences: Online education in the United States, 2010. Babson Survey Research Group. Retrieved from http://files.eric.ed.gov/fulltext/ED529952.pdf

Asunka, S. (2008). Online learning in higher education in Sub-Saharan Africa: Ghanaian university students' experiences and perceptions. The International Review of Research in Open and Distance Learning, 9(3), 1-13.

Bates, R., & Khasawneh, S. (2004). A path analytic study of the determinants of college students' motivation to use online teaching technologies. Academy of Human Resource Development International Conference (AHRD), 1075-1082. Retrieved from ERIC Reproduction Service (ED492503).

Bharuthram, S., & Kies, C. (2012). Introducing e-learning in a South African higher education institution: Challenges arising from an intervention and possible responses. British Journal of Educational Technology, 44(3), 410-420.

Blackmom, S.J., & Major, C. (2012). Student experiences in online courses: A qualitative research synthesis. The Quarterly Review of Distance Education, 13(2), 77-85.

Brookfield, S.D. (1995). Becoming a critically reflective teacher. San Francisco, CA: Jossey-Bass.

Butcher, N. (2014). Technologies in higher education: Mapping the terrain. Moscow: UNESCO Institute for Information Technologies in Education. Retrieved from http://iite.unesco.org/pics/publications/en/files/3214737.pdf

Çakiroğlu, Ü. (2014). Evaluating students' perspectives about virtual classrooms with regard to seven principles of good practice. South African Journal of Education, 34(2), 1-19.

Carlson, J., & Jesseman, D. (2011). Have we asked them yet? Graduate student preferences for web-enhanced learning. The Quarterly Review of Distance Education, 12(2), 125-134.

Chang, S., & Tung, F. (2008). An empirical investigation of students' behavioural intentions to use the online learning course websites. British Journal of Educational Technology, 39(1), 71-83.

Chickering, A.W., & Gamson, Z.F. (1987). Seven principles for good practice in undergraduate education. AAHE Bulletin, 39, 2-6. Retrieved from http://files.eric.ed.gov/fulltext/ED282491.pdf (accessed 9/4/2015)

Cook, V. (2012). Learning everywhere, all the time. The Delta Kappa Gamma Bulletin, Professional Development, Spring 2012.

Freeman, M. (1997). Flexibility in access, interaction and assessment: The case for web-based teaching programs. Australian Journal of Educational Technology, 13(1), 23-39.

Fung, Y.Y.H. (2004). Collaborative online learning: Interaction patterns and limiting factors. Open Learning: The Journal of Open, Distance and e-Learning, 19(2), 135-149.

Geduld, B. (2013). Students' experiences of demands and challenges in open distance education: A South African case. Progressio, 35(2), 102-125.

Geri, N. (2012). The resonance factor: Probing the impact of video on student retention in distance learning. Interdisciplinary Journal of E-Learning and Learning Objects, 8, 1-13.

Glenn, J.M. (2000). Teaching the net generation. Business Education Forum, 54(3), 6-14.

Hair, J.F., Black, W.C., Babin, B.J., & Anderson, R.E. (2010). Multivariate data analysis: A global perspective (7th ed.). Upper Saddle River, NJ: Pearson.

Hauser, R., Paul, R., & Bradley, J. (2012). Computer self-efficacy, anxiety, and learning in online versus face-to-face medium. Journal of Information Technology Education: Research, 11, 141-154.

Ilgaz, H., & Gülbahar, Y. (2015). A snapshot of online learners: E-readiness, e-satisfaction and expectations. International Review of Research in Open and Distributed Learning, 16(2), 171-187.

ITU (International Telecommunication Union). (2014). The World in 2014: ICT Facts and Figures. Geneva: ICT Data and Statistics Division. Retrieved from https://www.itu.int/en/ITU-D/Statistics/Documents/facts/ICTFactsFigures2014-e.pdf

Leong, P. (2011). Role of social presence and cognitive absorption in online learning environments. Distance Education, 32(1), 5-28.

Ljubojevic, M., Vaskovic, V., Stankovic, S., & Vaskovic, J. (2014). Using supplementary video in multimedia instruction as a teaching tool to increase efficiency of learning and quality of experience. The International Review of Research in Open and Distance Learning, 15(3), 275-291.

Lund, C., & Volet, S. (1998). Barriers to studying online for the first time: Students' perceptions. Proceedings of Planning for Progress, Partnership and Profit, EdTech Conference, July 1998. Perth, Western Australia.

Mayes, R., Luebeck, J., Ku, H., Akarasriworn, C., & Korkmaz, O. (2011). Themes and strategies for transformative online instruction: A review of literature and practice. The Quarterly Review of Distance Education, 12(3), 151-166.

Mbati, L.A. (2012). Online learning for social constructivism: Creating a conducive environment. Progressio, 34(2), 99-119.

McGinley, V., Osgood, J., & Kenney, J. (2012). Exploring graduate students' perceptual differences of face-to-face and online learning. The Quarterly Review of Distance Education, 13(3), 177-182.

McKimm, J., Jollie, C., & Cantillon, P. (2003). Web-based learning. British Medical Journal, 326(7394), 870-873.

McMahon, M., & Oliver, R. (2001). Promoting self-regulated learning in an online environment. Ed-Media 2001 World Conference on Educational Multimedia, Hypermedia & Telecommunications, 1299-1305. Charlottesville, VA: Association for the Advancement of Computing in Education.

Monk, D. (2001). Open/distance learning in the United Kingdom. Why do people do it here (and elsewhere)? Perspectives in Education, 19(3), 53-66.

Mtebe, J.S., & Raisamo, R. (2014). Investigating perceived barriers to the use of open educational resources in higher education in Tanzania. The International Review of Research in Open and Distance Learning, 15(2), 43-65.

Muuro, M.E., Wagacha, W.P., Oboko, R., & Kihoro, J. (2014). Students' perceived challenges in an online collaborative learning environment: A case of higher learning institutions in Nairobi, Kenya. The International Review of Research in Open and Distance Learning, 15(6), 132-161.

Palloff, R.M., & Pratt, K. (2009). Assessing the online learner: Resources and strategies for faculty. San Francisco, CA: John Wiley & Sons.

Phelan, L. (2012). Interrogating students' perceptions of their online learning experiences with Brookfield's critical incident questionnaire. Distance Education, 33(1), 31-44.

Pollard, E., & Hillage, J. (2001). Exploring e-learning. Report 376. Brighton: Institute for Employment Studies.

Queiros, D.R., de Villiers, M.R., van Zyl, C., Conradie, N., & van Zyl, L. (2015). Rich environments for active Open Distance Learning: Looks good in theory but is it really what learners want? Progressio, 37(2), 79-100.

Samuels-Peretz, D. (2014). Ghosts, stars, and learning online: Analysis of interaction patterns in student online discussions. The International Review of Research in Open and Distance Learning, 15(3), 50-71.

Seaman, J. (2009). Online learning as a strategic asset: Vol II. The paradox of faculty voices: Views and experiences with online learning. Washington DC: Association of Public and Land Grant Universities Sloan National Commission on Online Learning.

Stacey, E. (1999). Collaborative learning in an online environment. International Journal of E-Learning and Distance Education, 14(2), 14-33.

Tait, A. (2003). Reflections on student support in open and distance learning. The International Review of Research in Open and Distance Learning, 4(1), 1-10.

Todhunter, B. (2013). LOL – limitations of online learning – are we selling the open and distance education message short? Distance Education, 34(2), 232-252.

Tsai, C. (2012). The role of teacher's initiation in online pedagogy. Education + Training, 54(6), 456-471.

Van Rij, V. (2015). Annex to discussion paper by Victor van Rij. UNESCO Institute for Information Technologies in Education. Retrieved from http://iite.unesco.org/files/news/639201/Annex_to_Discussion_Paper_Foresight_on_HE_and_ICT.pdf

Wang, C., Shannon, D.M., & Ross, M.E. (2013). Students' characteristics, self-regulated learning, technology self-efficacy, and course outcomes in online learning. Distance Education, 34(3), 302-323.

Zhang, J., & Walls, R. (2006). Instructor's self-perceived pedagogical principle implementation in the online environment. The Quarterly Review of Distance Education, 7(4), 413-426.

Zhang, K., Peng, S., & Hung, J. (2009). Online collaborative learning in a project-based learning environment in Taiwan: A case study on undergraduate students' perspectives. Educational Media International, 46(20), 1-8.

Online Learning in a South African Higher Education Institution: Determining the Right Connections for the Student by Dorothy R. Queiros and M.R. (Ruth) de Villiers is licensed under a Creative Commons Attribution 4.0 International License.