Volume 17, Number 6

Eleonora Milano Falcão Vieira1, Marialice de Moraes2, and Jaqueline Rossato3

1,2Federal University of Santa Catarina, 3Federal University of Maranhão

The constant technological development in education and the potential of the resources offered by Information and Communication Technologies (ICTs) are challenges faced by teaching institutions in Brazil, especially by those which, by the very nature of their services, intend to provide distance education courses. In this scenario, technology is used as a tool to support and take part in teaching activities, as is the case of Virtual Learning Objects (VLOs), which offer an opportunity to contribute to the teaching and learning process. Considering this, the present work analyzes the VLOs used in the distance education courses of Economic Sciences and of Accounting at the Universidade Federal de Santa Catarina (Federal University of Santa Catarina), under the quality criteria indicated by the Learning Object Review Instrument (LORI) methodology proposed by Nesbit, Belfer, and Vargo (2002), Nesbit, Belfer, and Leacock (2004), and Leacock and Nesbit (2007), in order to learn how to make better use of efforts and resources.

Keywords: distance education, virtual learning objects, economic sciences, accounting

As a widely used teaching modality at higher education institutions, Distance Education (DE) leads to an increasing use of Information and Communication Technologies (ICTs), particularly the new possibilities offered by the Internet. Thus, when choosing DE, these institutions must invest in the preparation of specialized staff, as well as in the specific training of teachers and students.

Together with this increased use of ICTs, there is a growing offer of educational software, which creates the need to evaluate its use (Gama, 2007). For DE initiatives, as well as for the use of diverse technological tools in face-to-face disciplines, the sharing and reuse of information made possible by Virtual Learning Objects (VLOs) are interesting opportunities for returns on investment and for learning. Previous studies on the use of VLOs suggest positive results in the acquisition of new knowledge, in the improvement of learning, and in the building of a sense of belonging (Alvarez & Dal Sasso, 2011).

"The challenge of creating and using learning objects involves pedagogical issues in planning, in their use by specialized staff, and in their proper description, in order to make possible their use and recovery. The reuse, an essential characteristic of these objects, demands a continuous process of description, since such objects should not be taken as a static element. Considering the diversity and dynamics that involve learning objects, the adoption and controlled and uniform use of the description of the metadata are essential." (Rodrigues, Taga, & Vieira, 2011, p. 188)

Aware of the need to make more effective use of the virtual learning environment (VLE) based on the Moodle platform, the managers of the distance education courses of Accounting and of Economic Sciences at the Universidade Federal de Santa Catarina (Federal University of Santa Catarina) are investing in the production of Virtual Learning Objects (VLOs). The main justification for using learning objects is the need to offer different ways of presenting online content, with a focus on facilitating learning.

However, in spite of the investments in the production of VLOs, their use by teachers and students is still negligible. This indicates that those involved still need to appropriate these new media, starting with their effective use by teachers as a way to present learning contents in the disciplines, and including the follow-up and evaluation of their use by students and an understanding of their significance for teachers.

Based on this situation, the current work presents the results of an analysis of the Virtual Learning Objects used in three grades in the above-mentioned courses. Under the criteria of the Learning Object Review Instrument (LORI) methodology of Nesbit, Belfer and Leacock (2002) and Leacock and Nesbit (2007), 12 teachers participated in an evaluation of the objects used in their disciplines.

According to the IEEE Learning Technology Standards Committee (IEEE/LTSC), Learning Objects (LOs) can be defined as "any entity, digital or not, which can be used and reused during the learning process that resorts to technology. Such objects may include hypermedia contents, instructional contents, other learning objects, and supporting software" (IEEE/LTSC, 2004).

There are many learning objects, and each one is formatted to meet a specific need. The LOs can be classified according to their pedagogical function as i) instructional objects; ii) collaborative objects; iii) objects for practice; and iv) evaluation objects (Gonzáles, 2005).

The LOs can be created in any medium or format, and can be as simple as an animated film or a slide presentation, or as complex as a simulation (Macêdo, Castro Filho, Macêdo, Siqueira, Oliveira, Sales, & Freire, 2007). Such objects can be made available only to a restricted group of users through the learning environment of a specific course, or shared by different users in institutional repositories, either national or international.

In the case of Brazil, the Ministry of Education created in June, 2008, the Banco Internacional de Objetos Educacionais (International Bank of Educational Objects), offering free access to videos, animated films, games, texts, audio, and educational software, including contents produced for all teaching levels, from elementary school through higher education.

In relation to the methods for evaluating learning objects, some were developed by Brazilian authors, who are interested in mechanisms for evaluating educational software (Gama, 2007), and others by international authors, who devote their studies to the evaluation of learning objects.

Among the main works investigated, the following stand out: i) the TICESE technique; ii) Bloom's taxonomy; iii) the methodology by Thomas Reeves; iv) the methodology by Martins; v) the evaluation model by Campos; vi) the MERLOT evaluation model; and vii) the LORI evaluation tool.

The Técnicas de Inspeção de Conformidade Ergonômica de Software Educacional (TICESE; Inspection Techniques of Ergonomic Conformity of Educational Software) technique focuses on offering evaluators a tool to help them evaluate the ergonomic aspects of a piece of software, with the aim of aligning these with the pedagogical aspects of educational software (Gama, 2007).

As its name suggests, the TICESE technique is concerned with inspecting the ergonomic conformity of educational software; ergonomics, in turn, aims at developing the scientific bases for adapting work conditions to the capacities and realities of the worker (Gamez, 1998).

Bloom's taxonomy (1956) is a proposal for systematized evaluation, interpreted as a tool suited to the pedagogical aspect of the evaluation (Gama, 2007). According to that methodology, the pedagogical domains can be divided into three non-exclusive areas: i) the cognitive area, which covers knowledge and understanding and includes application, synthesis, and evaluation; ii) the affective area, related to feelings and posture, for example attitudes, responsibility, respect, emotion, and values; and iii) the psychomotor area, related to actions. As for the cognitive factors, knowledge refers to more specific knowledge, with an emphasis on memory processes, while understanding refers to a kind of comprehension that does not depend on the complexity of the material at issue. Thus, the core idea of the taxonomy is what educators want the learner to know.

The methodology by Thomas Reeves (1999) is based on two sets of evaluation criteria: pedagogical criteria and criteria related to the interface with the user. Reeves suggests that these criteria be evaluated on a non-dimensioned graphic scale represented by a double arrow, whose right and left ends stand for the concepts of "positive" and "negative," respectively (Bertoldi, 1999).

The 14 pedagogical criteria which comprise that methodology are: epistemology (objectivist - constructivist), pedagogical philosophy (instructivist - constructivist), underlying psychology (behavioral - cognitive), objectivity (precisely focused - non-focused), instructional sequencing (reductionist - constructivist), experiential validity (abstract - concrete), the role of the instructor (provider of the material - facilitating agent), error appreciation (learning without error - learning with experience), motivation (extrinsic - intrinsic), structuring (high - low), accommodation of individual differences (non-existing - multifaceted), student control (non-existing - unrestricted), user activity (mathemagenic - generative), and cooperative learning (not supported - supported).

The 10 criteria related to the interface with the user are: usability, navigation, and cognitive load (difficult - easy), mapping (none - powerful), screen design (violated principles - respected principles), knowledge space compatibility (incompatible - compatible), presentation of information (confusing - clear), integration (non-coordinated - coordinated), aesthetics (unpleasant - pleasant), and general functionality (non-functional - highly functional).

The methodology by Martins (2004) came out of his Master's research, which aimed at analyzing the interactions between the learner, the web interface, and the didactic material, as well as at verifying to what extent such interaction favors learning and at evaluating whether learners are able to reach their goals in a pleasant learning experience (Gama, 2007; Martins, 2004).

For the evaluation, a form based on usability heuristics (Nielsen, 2004) was developed, allowing the ergonomic properties of the system to be analyzed in detail, such as usability, which is linked to two aspects: pedagogy and design.

The evaluation model by Campos (1994) is presented as a manual for the evaluation of educational software. In that model, the objectives determine the general properties that the evaluated product must have, as well as the factors responsible for the quality of the product, which are broken down into sub-factors and verified through criteria that measure the quality attributed to the software.

As for the Multimedia Educational Resource for Learning and Online Teaching (MERLOT) evaluation model, evaluation is based on three dimensions: content quality; usability, which checks how easy the object is to use; and potential effectiveness, which covers the pedagogical part of the process. According to MERLOT, potential effectiveness is the most difficult dimension to evaluate (Gama, 2007).

Another methodology employed to evaluate virtual learning objects is the Learning Object Review Instrument (LORI). A number of international studies, for example in Canada and in the United States, have already used the LORI evaluation tool, which was developed to assess the quality of learning objects made available on the Internet (Nesbit et al., 2002; Krauss & Ally, 2005).

LORI is the evaluation tool used in the present study to analyze the Virtual Learning Objects used in the distance courses of Accounting and of Economic Sciences at the Universidade Federal de Santa Catarina, since it sets out explicit quality criteria (Nesbit, Belfer & Leacock, 2004) and has been used and tested worldwide.

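In order to achieve the goals of this research, the VLOs were evaluated following the LORI methodology (Nesbit, Belfer & Vargo, 2002; Nesbit, Belfer & Leacock, 2004; Leacock & Nesbit, 2007). Using this methodology, the reviewer (appraiser) can evaluate and comment on the VLOs while taking into account nine criteria: content quality, alignment of goals, feedback and adaptation, motivation, presentation of the design, interaction and usability, accessibility, reuse, and adaptation to the norms.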

In total, 12 reviewers from the courses of Accounting and of Economic Sciences participated in the research, and seven objects were analyzed: slides, handouts (written material), Moodle, HTML 5, video, slides with audio explanations, and inventory control for accounting. It is worth stressing that the distance courses at issue offer approximately 74 different kinds of virtual learning objects.

Based on a qualitative approach, the present work has an exploratory and explanatory character, and refers to evaluation methodologies that are little studied in the Brazilian distance education scene. Given the significance that these tools, supported by information technologies, have for the learning of distance education students, studies that address this theme are needed.

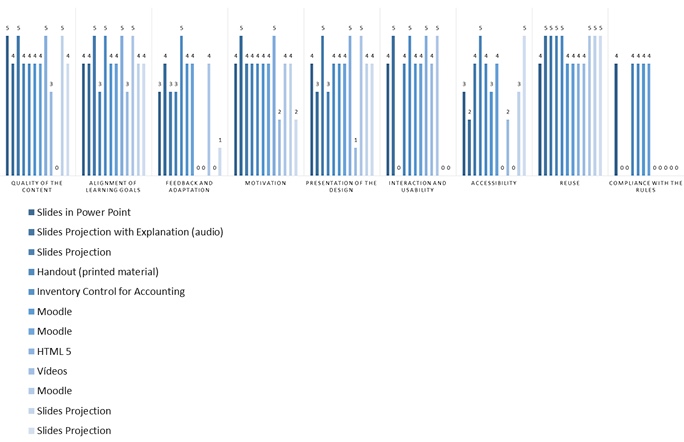

Once the information had been collected from the reviewers, the data were organized and categorized in Excel spreadsheets, and two figures (Figure 1 and Figure 2) were prepared, which bring together all evaluated objects in order to facilitate visualization and analysis. In Figure 1, the focus of the analysis is on the virtual objects indicated by the reviewers, while in Figure 2 the focus is on the evaluated criteria.

The learning objects were evaluated on a scale of five levels, where 1 is the lowest level and 5 the highest. When an item was considered irrelevant for the object, or the teacher/reviewer did not feel qualified to judge a criterion, he/she could mark "X = not applicable," as presented in Figures 1 and 2, below.

The data were analyzed and categorized into two groups of analysis: 1) the analysis of the virtual objects indicated by the reviewers, and 2) the analysis of the criteria suggested by the LORI methodology and evaluated by the reviewers.
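The two groups of analysis and the "X = not applicable" option can be sketched in a few lines of Python. This is only a minimal illustration: the ratings, the reviewer labels, and the function names `parse_mark` and `mean_by` are hypothetical and are not the data or the spreadsheet procedure used in the study. It assumes that marks of "X" are simply left out of the averages.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical ratings, NOT the study's data:
# (reviewer, object, criterion, mark), where mark is "1".."5" or "X".
RATINGS = [
    ("R1", "slides", "content quality", "4"),
    ("R1", "slides", "feedback and adaptation", "2"),
    ("R2", "slides", "content quality", "5"),
    ("R2", "Moodle", "content quality", "X"),
    ("R2", "Moodle", "feedback and adaptation", "1"),
    ("R3", "video", "content quality", "3"),
]


def parse_mark(mark):
    """Return the mark as an int in 1..5, or None for 'X' (not applicable)."""
    if mark.strip().upper() == "X":
        return None
    value = int(mark)
    if not 1 <= value <= 5:
        raise ValueError(f"mark out of range: {mark!r}")
    return value


def mean_by(ratings, key_index):
    """Average the applicable marks, grouped by the chosen tuple field."""
    groups = defaultdict(list)
    for row in ratings:
        value = parse_mark(row[3])
        if value is not None:  # assumption: "X" marks are excluded, not counted as zero
            groups[row[key_index]].append(value)
    return {key: mean(values) for key, values in groups.items()}


by_object = mean_by(RATINGS, 1)     # group 1: perspective of the objects
by_criterion = mean_by(RATINGS, 2)  # group 2: perspective of the criteria
```

Excluding "X" marks rather than treating them as zero keeps a reviewer's abstention from lowering an object's average; an object or criterion that received only "X" marks would simply not appear in the result.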

In relation to the 74 virtual learning objects offered for the planning and practice of distance education in Accounting and in Economic Sciences and indicated by the reviewers, it is noteworthy that only seven were evaluated, showing a low use of resources in the development of the discipline, be it by the students or by the course itself. Such results suggest the need for future studies which include the students' perspective to analyze the efficiency of these objects in helping learning.

One should note that HyperText Markup Language (HTML), the language used in the creation of Internet pages, with its characteristic structure and formatting commands, is the virtual object which received the highest marks in five criteria: content quality, alignment of goals, motivation, presentation of the design, and interaction and usability. In addition, it received a mark of four in the criterion of reuse. This raises the question of whether that object is a good option to be better explored by teachers in distance education.

Figure 1. Evaluation of virtual objects - perspective of the analyzed objects.

Figure 1 shows that "slides in PowerPoint" and "Moodle" were the objects most often cited by the reviewers, and that they received the lowest marks in the criteria of "feedback and adaptation," "interaction and usability," and "accessibility." The data proved coherent, since the lowest marks of both objects fell on the same LORI criteria, which are inextricably linked to one another.

Interaction and usability are closely related to feedback and adaptation, since the learning object must provide clear instructions between interface and user, thus allowing students to understand the instructions quickly and return their attention to the content (Kearsley, 1988). There is also a close relation to accessibility, since a good design should offer a number of means of access to and interaction with the educational content.

According to the LORI evaluation methodology, there is a clear distinction between two kinds of interaction which occur when a student uses a learning object: interaction with the interface and interaction with the content. Interaction with the interface refers to usability, the term used to describe how easy or how difficult it is for students to move around a learning object - to navigate through the options that the object offers and to participate in the activities offered by that object (Leacock & Nesbit, 2007). In order to be well evaluated, the object must allow intuitive, predictable, and agile navigation. In turn, interaction with the content can be understood by means of feedback and adaptation. When an object is essentially expository and gives little or no feedback, it gets low marks, as was the case of "PowerPoint" and "Moodle."

Figure 2, where the focus of the analysis is on the analyzed criteria, shows that the main criterion for choosing virtual objects is the "reuse of the material," that is, the ability to use the same VLO in different learning contexts with students from different backgrounds.

The development of more flexible learning objects promotes one of the main goals of learning objects - reducing duplication of efforts and costs among the involved institutions (Leacock & Nesbit, 2007). The LORI methodology values learning objects that are effective for a wide range of students, while acknowledging that no learning object alone will be effective for all students in all contexts. However, the search for maximum reuse cannot occur at the expense of utility, since the "learning objects have to be produced in a way that they are large enough to make educational sense, but small enough to be reused in a flexible way" (Campbell, 2003).

In addition, the present study shows that the selected VLOs demand little or no "adaptation to the norms," allowing greater flexibility in the development of the content, of the material, and of the work methodology. However, this directly affects the ability to search for and reuse learning objects, since they escape standardization.

According to the authors of the LORI methodology (Leacock & Nesbit, 2007), a coherent and effective use of metadata standards is essential in order to overcome technical barriers to the reuse of learning objects (Duval & Hodgins, 2006; McClelland, 2003). The quality of the metadata description and the level of access to the learning objects are key factors in helping users to "evolve from searching to finding" (Duval & Hodgins, 2006). As the number of learning objects keeps growing, the significance of functional, shareable, and consistent metadata also grows. With the consistent use of standardized metadata schemes, the interoperability of learning object repositories should increase significantly (Robson, 2004).
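The role of consistent metadata in moving "from searching to finding" can be illustrated with a small Python sketch. The record below is a deliberately simplified, hypothetical structure and not the IEEE Learning Object Metadata schema; the field names, the identifiers, and the `find` function are assumptions made for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class LearningObjectRecord:
    """Simplified, hypothetical metadata record (not the full IEEE LOM schema)."""
    identifier: str
    title: str
    keywords: set = field(default_factory=set)
    media_format: str = ""


def normalize(term):
    """Apply one uniform convention to every keyword: trimmed and lowercase."""
    return term.strip().lower()


def find(records, keyword):
    """Return the identifiers of the records described with the given keyword."""
    wanted = normalize(keyword)
    return [
        record.identifier
        for record in records
        if wanted in {normalize(k) for k in record.keywords}
    ]


# Hypothetical repository entries with inconsistently written keywords.
REPOSITORY = [
    LearningObjectRecord("lo-001", "Inventory control", {"Accounting", "inventory"}, "text/html"),
    LearningObjectRecord("lo-002", "Supply and demand", {"economics"}, "video/mp4"),
    LearningObjectRecord("lo-003", "Balance sheet slides", {"accounting "}, "application/pdf"),
]

matches = find(REPOSITORY, "accounting")
```

In this sketch both accounting objects are found only because the keywords are normalized before comparison; with an exact-match comparison on the keywords as written, neither "Accounting" nor "accounting " would match, which is the kind of barrier that uniform metadata description is meant to remove.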

Figure 2. Evaluation of virtual objects - perspective of the analyzed criteria.

The evaluation by one of the reviewers who used Moodle (one of the most accessed objects, together with slides in PowerPoint) draws attention because it does not take the content quality criterion into consideration, marking that item as "not applicable." Since the quality of the content is arguably the most salient aspect of learning, and certainly the most relevant for experts in the field (Leacock & Nesbit, 2007), this finding is at least curious and should be explored in detail in future studies.

It is interesting to note that in this research, the quality of the content, the alignment of goals, and motivation were not the criteria that received the highest marks in the evaluation. In fact, these criteria should be the top considerations when using learning objects, in consonance with the remaining elements, for example accessibility, interaction and usability, and feedback and adaptation.

Evaluating the VLOs used in the distance courses of Accounting and of Economic Sciences at the Universidade Federal de Santa Catarina is a necessary step to improve teaching and learning in distance education. It is well known that the methods to assure quality in learning resources are constantly changing and adapting to distinct contexts, and that quality evaluation criteria have the potential to lead to improvements in teaching practice, especially in distance education courses.

Without clear benchmarks, it is difficult for new developers to know how to assure high quality to their objects (Leacock & Nesbit, 2007). Clear guidelines to evaluate quality will help users and developers in this emergent field.

The main results of this study show the challenge of improving the usability of the VLOs, while reinforcing the view (Rosenberg, 2002) that one of the most promising technological improvements lies in the creation of e-learning solutions based on learning objects.

The analysis of the virtual learning objects based on the indications by the reviewers showed that the choice of virtual objects gives priority to the criterion of "reuse of the material," that is, the ability to use the same VLO in different learning contexts with students from different backgrounds. In addition, the most valued objects are those which demand little or no "adaptation to the norms," a characteristic which allows greater flexibility in the development of the content, of the material, and of the work methodology.

The development of more flexible learning objects reduces the duplication of efforts and costs among institutions, making it an essential task in planning and developing distance education courses. However, low conformity to the norms has a direct influence on the search for and reuse of learning objects, since they escape standardization.

Studies that adopted the LORI methodology demonstrated that evaluation is useful within a collaborative model, and that when it is used in an educational setting, it is perceived by the participants as helpful in improving teachers' design and development skills (Leacock & Nesbit, 2007). For that and other reasons, it is to be expected that the present discussion will offer inputs for teachers and managers in distance education, while encouraging new studies related to this theme.

Alvarez, A. G., & Dal Sasso, G. T. M. (2011). Objetos virtuais de aprendizagem: contribuições para o processo de aprendizagem em saúde e enfermagem [Virtual learning objects: contributions to the learning process in health and nursing]. Acta Paul. Enferm, 24(5), 707-711.

Bertoldi, S. (1999). Avaliação de Software Educacional: Impressões e Reflexões [Educational Software Evaluation: Impressions and Reflections]. Dissertação. Universidade Federal de Santa Catarina, 31.

Campbell, L. M. (2003). Engaging with the learning object economy. In A. Littlejohn (Ed.) Reusing online resources: A sustainable approach to e-learning. London: Kogan-Page, 35-45.

Campos, G. H. B. (1994). Metodologia para avaliação da qualidade de software educacional: Diretrizes para desenvolvedores e usuários [Methodology for evaluating the quality of educational software: Guidelines for developers and users]. Tese de Doutorado. COPPE/UFRJ, Rio de Janeiro.

Duval, E., & Hodgins, W. (2006). Standardized uniqueness: Oxymoron or vision for the future? Computer, 39(3), 96-98.

Gama, C. L. G. (2007). Método de Construção de Objetos de Aprendizagem com Aplicação de Métodos Numéricos [Method of Constructing Learning Objects with Application of Numerical Methods]. Tese. Programa de Pós-Graduação em Métodos Numéricos em Engenharia. Universidade Federal do Paraná, 210.

Gamez, L. (1998). Manual do avaliador [Evaluator's Manual]. Minho e Florianópolis, Dissertação (Mestrado) Universidade do Minho e Universidade Federal de Santa Catarina, 102.

Gonzáles, L. A. A. (2005). Conjuntos Difusos de Objetos de Aprendizaje. Retrieved from http://www.inf.uach.cl/lalvarez/documentos/Conjuntos%20Difusos%20de%20LO.pdf

IEEE Learning Technology Standards Committee (IEEE/LTSC). (2004). IEEE Standard for Learning Object Metadata. Retrieved from http://ltsc.ieee.org/wg12/

Kearsley, G. (1988). Online help systems: Design and implementation. Norwood, NJ: Ablex Publishing.

Krauss, F., & Ally, M. (2005). A study of the design and evaluation of a learning object and implications for content development. Interdisciplinary Journal of Knowledge and Learning Objects, 1. Retrieved from http://ijklo.org/Volume1/v1p001-022Krauss.pdf

Leacock, T. L., & Nesbit, J. C. (2007). A framework for evaluating the quality of multimedia learning resources. Educational Technology & Society, 10(2), 44-59.

Macêdo, L. N., Castro Filho, J. A., Macêdo, A. A. M., Siqueira, D. M. B., Oliveira, E. M., Sales, G. L., & Freire, R. S. (2007). Desenvolvendo o pensamento proporcional com o uso de um objeto de aprendizagem [Developing proportional thinking with the use of a learning object]. In C. L. Prata, & A. C. Nascimento (Org.). Objetos de aprendizagem: uma proposta de recurso pedagógico. Brasília: MEC, SEED.

Martins, M. L. (2004). O Papel da Usabilidade no Ensino a Distância Mediado por Computador [The Role of Usability in Computer-Mediated Distance Learning]. Dissertação. Centro Federal de Educação Tecnológica de Minas Gerais, 107.

McClelland, M. (2003). Metadata standards for educational resources. Computer, 36(11), 107-109.

Nesbit, J., Belfer, K., & Vargo, J. A. (2002). Convergent participation model for evaluation of learning objects. Canadian Journal of Learning and Technology, 28(3). Retrieved from http://www.cjlt.ca/content/vol28.3/nesbit_etal.htm

Nesbit, J. C., Belfer, K., & Leacock, T. L. (2004). LORI 1.5: Learning object review instrument. Retrieved from http://www.transplantedgoose.net/gradstudies/educ892/LORI1.5.pdf

Nielsen, J. (2004). Ten usability heuristics. Retrieved from http://www.useit.com/papers/heuristic/heuristic_list.html

Robson, R. (2004). Context and the role of standards in increasing the value of learning objects. In R. McGreal (Ed.). Online education using learning objects. New York: RoutledgeFalmer.

Rodrigues, R. S., Taga, V., & Vieira, E. M. F. (2011). Repositórios educacionais: estudos preliminares para a Universidade Aberta do Brasil. Perspectivas em Ciência da Informação, 16(3), 181-207.

Rosenberg, M. J. (2002). E-learning: estratégia para a transmissão do conhecimento na era digital. São Paulo: Makron Books.

Evaluation of Virtual Objects: Contributions for the Learning Process by Eleonora Milano Falcão Vieira, Marialice de Moraes, and Jaqueline Rossato is licensed under a Creative Commons Attribution 4.0 International License.