Volume 17, Number 6

Andrés Chiappe1, Ricardo Pinto2, and Vivian Arias3

1,3Universidad de La Sabana, 2Universidad Piloto de Colombia

Open Assessment of Learning (OAoL) is an emerging educational concept derived from the incorporation of Information and Communication Technologies (ICT) into education and is related to the Open Education Movement. In order to improve understanding of OAoL, a literature review was conducted as a meta-synthesis of 100 studies on ICT-based assessment published from 1995 to 2015, selected from well-established peer-reviewed databases. The purpose of this study was to identify the common topics between ICT-based assessment and OAoL, which is considered an Open Educational Practice.

The review showed that extensive use of the Internet makes it easy to achieve some special features of OAoL, such as collaboration or sharing, which are considered negative or inconvenient in traditional assessment but at the same time become elements that promote innovation on that topic. It was also found that there is still great resistance to change of the kind OAoL proposes when structural elements of traditional assessment are questioned or challenged.

Keywords: learning assessment, collaboration, open educational practice, educational technology, peer assessment

Assessment of learning has been considered for a long time a fundamental component of teaching and learning processes (Brailovsky, 2001; Brown, Bull, & Pendlebury, 2013; Dochy, Segers, & Dierick, 2002) and at the same time, it has received some of the harshest criticism from different educational stakeholders (Beaumont, O'Doherty, & Shannon, 2011; Wiliam, 2011).

The specialized literature on student assessment is abundant and has shown over the years a variety of conceptualizations and applications, but usually with the same meeting point: assessment as the measuring of learning goals or outcomes, generally associated with knowledge acquisition, standardized test-based or grade-oriented (Astin & Lising, 2012). It should be noted that, despite some historical exceptions, such as John Dewey's "learning by doing" approach in the early 1900s, in which a formative or alternative assessment was promoted (now becoming an emerging practice in some developed countries), the "classic" way to assess learning still remains in many educational contexts around the world (Kamens & McNeely, 2010).

Moreover, the impact of massive use of the Internet has been manifested through remarkable changes in the way we communicate and relate to others (Joinson, 2003). Education is one of the areas that have been affected by this, but not in the same way or with the same intensity as, for example, trading, entertainment, or communication. Although over the last three decades education has shown some interesting ICT-based changes in its dynamics (e.g., the emergence of e-learning, the use of mobile devices, and more recently some open learning experiences known as massive open online courses or MOOCs), these examples are not yet very significant in the overall panorama of education (Livingstone, 2012; Yildiz et al., 2014).

As a result, the use of the Internet is creating incipient changes in teaching practice and in the way in which educational content is produced or distributed (Gillespie, 2014; Pera, 2013). However, assessment persists in repeating the same mechanical models that hinder the transition from a summative to a formative type of assessment: only the means and tools change, becoming technological resources, while the nature of learning assessment remains the same (Redecker & Johannessen, 2013; Voogt, Knezek, Cox, Knezek, & ten Brummelhuis, 2013).

Moreover, as a revitalization of early 20th century Open Education, a growing trend called the "Open Education Movement" is emerging and gaining ground through extensive use of ICT. This movement promotes a way of thinking about education that transcends its origins, which were linked to distance education, and currently configures a set of open educational practices that enhance teaching and learning by incorporating "openness" attributes such as sharing, remote collaboration, adaptation, free access, and reuse of information and spaces for educational interaction (Downes, 2013; Knox, 2013).

Regarding the above, it becomes interesting to think about a new or at least a fresh concept for student assessment called Open Assessment of Learning (OAoL), understood as an open educational practice and defined as follows:

The process of learning verification and feedback that takes place collaboratively, mediated by free access tools in which teachers produce or adapt assessment resources and students adapt and reshape these resources for the purpose of generating for themselves an assessment that meets their personal needs, learning styles and context. (Chiappe, 2012, p. 10).

Therefore, as OAoL appears to be a promising or at least provocative concept that aims to promote innovative changes in educational practices, it becomes relevant to ask this question: Conceiving OAoL as an ICT-based educational practice, what are the common topics between ICT-based assessment and OAoL that would allow it to be better understood as an open educational practice?

This question led to a literature review on ICT-based student assessment, which is the context in which OAoL is developing and will be the scenario of its implementation as an open educational practice.

The review of literature is a very particular type of study, characterized as a reflexive process of social inquiry that is based on the analysis of written scientific research (Ahmed, 2010; Denscombe, 2014). This study used meta-synthesis as a method that differs from the meta-analysis and the systematic literature review and focuses on the study of the results of other qualitative research in order to generate substantive theory through interpretation of such results (Thorne, Jensen, Kearney, Noblit, & Sandelowski, 2004; Walsh & Downe, 2005; Whittemore & Knafl, 2005; Zimmer, 2006). The analytical and selective review of the literature for the purpose of this study was developed as a Content Analysis process, which was based on the observation of evidence of key subjects or topics that would favor or limit the implementation of OAoL. In support of this method, Sandelowski, Docherty, and Emden (1997, p. 369) write, "in contrast to quantitative metaanalysis, qualitative metasynthesis is not about averaging or reducing findings to a common metric, but rather enlarging the interpretive possibilities of findings and constructing larger narratives or general theories."

The review was implemented using three steps based on the basic review cycle of Machi and McEvoy (2012), as follows:

Step 1: Establish the purpose of the review (define research interest). As previously mentioned, the main objective of the review was to identify in peer reviewed research papers, some common topics among ICT-based assessment and OAoL. This would allow the researchers to progress towards the understanding of the particularities of OAoL as an open educational practice.

Step 2: Specify inclusion and exclusion criteria and explore a concept in multiple sources (searching and filtering). The first inclusion criterion had to do with ensuring the quality, diversity, and breadth of information sources. For this reason, the initial search was conducted in well-recognized peer-reviewed academic sources that allow access to papers in English, Spanish, and Portuguese. Thus, the search was conducted through ISI Web of Knowledge, Scopus, Scielo, and DOAJ, which made it possible to reflect on European (66%), North American (15%), and Latin American (4%) thought on the subject.

The second inclusion criterion had to do with search relevance. To that end, the search descriptors or keywords included: "open assessment," "assessment + ICT," "assessment in virtual learning environments," and "assessment + elearning." All descriptors were applied in English, Spanish, and Portuguese.

After the first search in databases, we excluded letters, errata, editorials, notes, short surveys, books, and book chapters. Only reviews, research articles, and conference papers on Social Sciences were selected for further analysis.

A final inclusion criterion had to do with date filtering. Because we were looking for research related to ICT-based learning assessment, only papers published between 1995 and 2015 (note that the first paper was found in 1998) were finally selected.
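The document-type, subject-area, and date criteria described above can be read as a simple filter over database records. The following is a minimal sketch under stated assumptions: the `Record` fields, the `meets_criteria` helper, and the sample records are hypothetical and are not taken from the study, which does not describe how the filtering was implemented.

```python
from dataclasses import dataclass

# Criteria as stated in the text; the names and data layout are assumptions.
ALLOWED_TYPES = {"review", "research article", "conference paper"}
FIRST_YEAR, LAST_YEAR = 1995, 2015


@dataclass
class Record:
    title: str
    doc_type: str
    subject_area: str
    year: int


def meets_criteria(rec: Record) -> bool:
    """Return True when a record passes the type, subject, and date filters."""
    return (
        rec.doc_type.lower() in ALLOWED_TYPES
        and rec.subject_area.lower() == "social sciences"
        and FIRST_YEAR <= rec.year <= LAST_YEAR
    )


# Hypothetical search results, for illustration only.
records = [
    Record("Paper A", "Research article", "Social Sciences", 2009),
    Record("Paper B", "Editorial", "Social Sciences", 2011),
    Record("Paper C", "Review", "Social Sciences", 1993),
    Record("Paper D", "Conference paper", "Engineering", 2012),
    Record("Paper E", "Review", "Social Sciences", 2015),
]

selected = [rec for rec in records if meets_criteria(rec)]
selected_titles = [rec.title for rec in selected]
print(selected_titles)
```

Keeping the criteria in named constants makes each exclusion decision traceable to one of the rules stated in the text.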

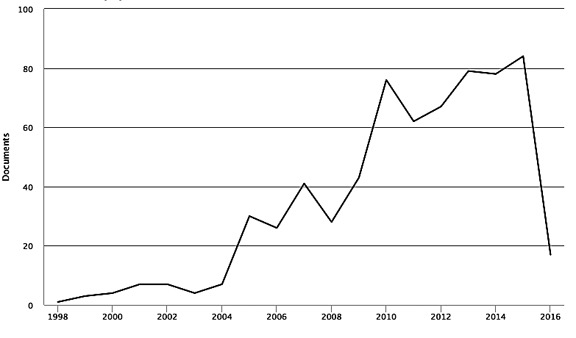

As a result of this first process, a set of documents with 664 items was created. Figure 1 shows date distribution of this first set of documents.

Figure 1. First selection of documents by year.

The last filtering was carried out through an abstracting process that reduced the document set to a final group of 100 items, ready for further in-depth reading, as shown in Table 1.

Table 1

Final Set of 100 Items for In-Depth Reading

| Journal ISSN | Journal impact factor | Journal SJR quartile | # items by journal |
| --- | --- | --- | --- |
| 0360-1315 | 2.578 | Q1 | 21 |
| 1088-1980 | 1.531 | Q1 | 11 |
| 1302-6488 | 0.142 | Q4 | 10 |
| 0266-4909 | 2.048 | Q1 | 9 |
| 1360-2357 | 0.409 | Q2 | 9 |
| 1436-4522 | 0.019 | Q1 | 8 |
| 0308-5961 | 0.632 | Q2 | 6 |
| 1868-8799 | 0.122 | Q4 | 5 |
| 1447-9494 | 0.110 | Q4 | 5 |
| 0007-1013 | 1.510 | Q1 | 4 |
| 1134-3478 | 0.719 | Q1 | 3 |
| 1303-6521 | 0.486 | Q2 | 2 |
| 1449-5554 | 1.001 | Q1 | 2 |
| 1475-939X | 1.049 | Q1 | 2 |
| 0034-8082 | 0.246 | Q3 | 1 |
| 1042-1629 | 1.609 | Q1 | 1 |
| 1479-4403 | 0.251 | Q3 | 1 |
| Total | | | 100 |

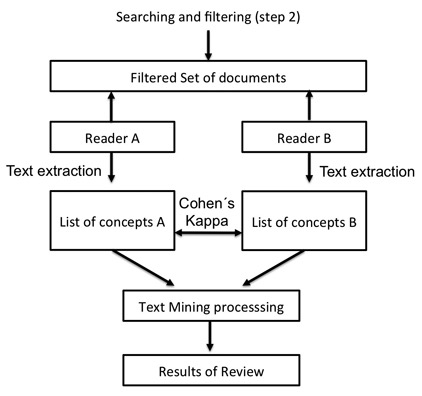

Step 3: In-depth reading and data processing. Based on the proposal of Guba and Lincoln (2000) on the relevance of conducting an "audit research" in which contributors examine both the processes and consistency in research products, an independent and parallel two-observer reading was conducted on the same set of documents.

As shown in Figure 2, data processing starts with an in-depth independent two-reader reading and extraction of relevant subjects or concepts in the form of small chunks of text arranged in separate lists. In order to strengthen the objectivity and reduce the level of bias in the analysis, a Cohen's Kappa coefficient (K) was applied to the separate lists that contain text segments analyzed by each reader. The result of this process was K = 0.74, which corresponds to a high level of consistency.
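For readers unfamiliar with the coefficient, Cohen's Kappa compares the agreement observed between two readers with the agreement expected by chance, K = (p_o − p_e) / (1 − p_e). The following is a minimal sketch of that calculation; the function name and the sample codes assigned by the two readers are hypothetical and do not reproduce the study's data or its reported value of 0.74.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: sum over labels of the product of marginal proportions.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes assigned by two readers to the same ten text segments.
reader_1 = ["feedback", "peer", "peer", "rubric", "feedback",
            "peer", "rubric", "feedback", "peer", "rubric"]
reader_2 = ["feedback", "peer", "rubric", "rubric", "feedback",
            "peer", "rubric", "peer", "peer", "rubric"]

kappa = cohens_kappa(reader_1, reader_2)
print(round(kappa, 2))
```

In this illustration the readers agree on 8 of 10 segments (p_o = 0.80) while chance agreement is p_e = 0.34, so the coefficient is lower than the raw agreement rate, which is the reason for reporting Kappa rather than simple percent agreement.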

Then, those two lists containing the text segments and their associated concepts or key ideas were unified into a single document, which was processed and compared through a text mining tool, as mentioned by Botta-Ferret and Cabrera-Gato (2007), which made it possible to identify information patterns within the data. These patterns were later converted into sets of key issues, which ultimately became sets of results of the review.

Figure 2. Detail of in-depth reading and data processing.

The literature review process on ICT-based student assessment shows that intellectual production and research in this area have been extensive and varied since the mid-90s. As said before, 100 peer-reviewed research papers were analyzed. The review showed a large number of subjects potentially identifiable with the conceptualization proposed for OAoL. In this regard, and for descriptive purposes, the issues were grouped into six topics, which are described below.

Much of the literature on assessment with ICT, corresponding to 63% of the analyzed documents, reveals one of its main features, which is also the target of its deepest criticism: the teacher's dominance and the learner's passivity when engaged in assessment and identification of their own learning. For decades, international agencies such as the United Nations Educational, Scientific and Cultural Organization (UNESCO) and the Organization for Economic Co-operation and Development (OECD) have suggested to governments and institutions new approaches to teaching and learning in order to foster quality education as a means to contribute to the eradication of poverty, taking into account factors of coverage, timeliness, easiness, and relevance. In other words, the aim is to democratize education (Semenov, 2005) and shift the paradigm from educational methods centered on teaching to student-centered learning in which assessment acquires a new dimension (Mainali & Heck, 2015; Weimer, 2002). This new dimension refers mainly to a change from the student's passive role to an empowerment that involves active participation in assessment design and implementation that will ultimately lead to the creation of customized assessment experiences.

Also, 53% of such authors agree with these views and support a transformative discourse based on the use of ICT, especially focused towards its potential to transform personal access to information, to spaces of social interaction and the strengthening of digital skills and autonomy. In fact, 65% of such papers refer to ICT-based assessment as a diametrically opposed option to one that is focused and controlled by the teacher, even if teachers have acquired competence on teaching with ICT (Angeli & Valanides, 2009; Bottino, 2004; Cobcroft, Towers, Smith, & Bruns, 2006; Richards, 2005; Tinio, 2003; Voogt, 2003; Wang, 2008). Perspectives include that of technological pedagogical content knowledge (TPACK), the articulation of pedagogy, technology, and learning needs; the use of emerging technologies; the changes in the role of teacher; and challenges facing developing countries.

However, daily assessment practices, at least for the most part, are not echoing these ideas and, on the contrary, reinforce traditional patterns of teaching and assessment (Mora & Romero, 2014; Zu-wang, 2007). In such traditional practices the learner is considered a passive recipient who lacks the judgment and experience needed to take charge of their learning and, of course, their assessment (Montgomery, 2002; Sitthiworachart & Joy, 2008). Scenarios like this establish a clear context of reluctance to embrace a potentially disruptive concept such as Open Assessment of Learning.

This has negative implications for short-term participatory assessment processes that are very characteristic of OAoL. A teacher-centered assessment limits the implementation of collaborative or participative assessment and therefore restricts the possibilities of using systems or methods that provide learning assessment as a product of teacher-student consensus. As Dos Santos (2013, p. 125) stated when referring to education (which also applies to the assessment based on this perspective):

It is not just a question of a teacher acting as a promoter of someone else's learning, but is also about someone who is also learning, mediated by the group that questions, comments and reacts to the different stimuli we bring.

In this situation, by not engaging in OAoL, both the teacher and the student suffer by letting opportunities to learn together or from each other pass them by.

Another recurring issue that appears in the literature presents critical reflections regarding assessment as a process that identifies the levels of knowledge and skills acquisition. Forty percent of the analyzed texts consistently show a call for a transition from a summative to a formative perspective of assessment and a focus on the use of ICT to evaluate both people and programs (Rodriguez, 2005).

This concept of assessment certainly is not new and has been widely studied for a long time, by authors who have addressed this from the pedagogical discussion, the use of mobile devices, the importance of motivation in formative assessment and learning analytics (Black & Wiliam, 2009; Hwang & Chang, 2011; Nicol & Macfarlane-Dick, 2006; Tempelaar, Heck, Cuypers, van der Kooij, & van de Vrie, 2013; Yorke, 2003).

This is certainly not only a favourable condition for the development of Open Assessment of Learning but also one of the features that allow us to think that this kind of open educational practice facilitates the transformation of strictly summative assessment into a formative one. This involves treating assessment as a learning opportunity, not just to identify gained learning but also to open the possibility of conceiving other realities, in which, for example, assessment processes do not need observers, or in which practices that are not allowed in traditional assessments, such as collaboration and consultation, are well received and become desirable for strengthening the learning of those being assessed.

In this sense, it is possible to consider assessment as a process of self-regulation, as expressed by Valdivia (2009). This recognizes the importance and formative potential of timely socialization between teachers and students as well as the participatory development of assessment tools and the co-creation of customized assessment experiences. In addition, the application of the attributes of "openness" leads to participation that is less guided by the teacher, which helps generate digital communities of learning that require the strengthening of student autonomy (Willis, 2011). Students must make decisions based on their own criteria and not on direct, predetermined guidance from the teacher, which in turn requires greater self-regulation by the student.

Open Assessment of Learning has an underlying transformative nature in the way it incorporates ICT. It is worth mentioning that the Internet is just a technological tool and, as such, the interaction dynamics that are built on it (collaboration, self-assessment) will not have the desired effect if the learning experiences have not previously been designed with this in mind. In this regard, OAoL proposes an enabling environment for harnessing the transformative potential of the Internet.

In this sense, the literature does not place any special emphasis on this condition but identifies the role of the Internet in assessment processes as a supporting component, mediator, or assessment strengthener. Such a view of assessment suggests the image of old wine in a new bottle: doing the same thing with more sophisticated or refined instruments or, at best, thinking about new possibilities for better feedback and a clearer identification of learning. The vast majority of papers that refer to the inclusion of the Internet in assessment (60% of the texts analyzed) fall within the above category. Subsequently, new terms like e-assessment are coined to indicate the mediation of ICT in the learning assessment process and to relate this matter to open and distance education systems, the importance of Internet-based tools to address special educational needs, the digital divide, and expectations of assessment in 21st century education (Chaudhary & Dey, 2013; Drigas & Ioannidou, 2013; Franklin, Stam, & Clayton, 2013; Lim & Oakley, 2013; Redecker & Johannessen, 2013; Rivasy, De La Serna, & Martínez-Figueira, 2014).

Given the complex challenge of further developing the transformative potential of ICT, it is reasonable to assume that it is easier to take an instrumental approach to it, which is perhaps one of the main reasons for this emphasis in the specialized literature. However, 73% of the authors that addressed this issue also identified or proposed two elements that might eventually lead to changes in assessment practices via the use of the Internet and that can be included as part of OAoL: digital rubrics and e-portfolios.

It should be noted that although these tools are not really new or innovative in the context of the ICT-based assessment, they become complementary to what has been mentioned in the previous section regarding the strengthening of active participation of students in their own assessment process. In fact, under the category of digital rubrics, assessment processes using web systems known as "e-rubrics" are used in naturally complex interdisciplinary and collaborative learning environments. Some examples of this were implemented to assess various tasks to be performed in forums (Bartolomé, Martínez-Figueira, & Tellado-González, 2014; Torres-Gordillo & Perera-Rodríguez, 2010), peer review processes (Serrano & Cebrian, 2011), or in co-peer reviews and self-assessment (Gallego-Arrufat & Raposo-Rivas, 2014).

As suggested by Panadero and Jonsson (2013), the use of digital rubrics provides transparent assessment, which also helps to reduce the anxiety that the assessment process causes in students. Moreover, the use of rubrics can also facilitate feedback and improve self-efficacy and self-regulation of learning, all of which can indirectly facilitate the improvement of student achievement.

In addition to digital rubrics, Cebrián de la Serna (2011) indicates that e-portfolios provide support to both formative assessment and constructivist approaches to teaching and learning (and in this case also in assessment), in which three key elements that contribute to this process are present: reflective practice, collaborative learning, and peer-teacher feedback (de la Cruz Flores & Abreu Hernández, 2014).

Feedback is another major element identified in ICT-based student assessment, which is emphasized as an enabling factor that should be considered beyond just quantification or measurement of learning. The literature review on this subject is extensive and details the educational importance for the students of receiving timely and relevant information about their learning, the perspective of automated systems, the possibilities of tailored and timely feedback and its importance to maintain adequate levels of student motivation as well as the relevance of peer feedback (Debuse, Lawley, & Shibl, 2007; Gipps, 2005; Irons, 2007; Jordan & Mitchell, 2009; Li, Liu, & Steckelberg, 2010; Van den Berg, Admiraal, & Pilot, 2006; Webb, 2005).

According to Simpson (2013), feedback should consider the emotional aspects of the student because this increases motivation, develops new and better thinking and learning skills, and reduces student dropout rates. Here arises the question of whether or not the teacher or tutor will have the skills and knowledge to achieve such monitoring and feedback in a highly ICT-mediated context. Some authors state that it is possible, but requires a great deal of teacher commitment, time, and training (Hatziapostolou & Paraskakis, 2010).

From this perspective, an approach to assessment as an open practice seeks to improve the participation of learners and their centrality in the feedback process as key actors (as peer learners), encouraging commitment to learning and increasing the validity and reliability of assessment activities through the implementation of actions and ongoing formative feedback through Internet tools (Gikandi, Morrow, & Davis, 2011).

OAoL, due to its collaborative and participatory features, provides feedback from different directions and unexpected content, which might introduce different and valuable information to feedback that may even exceed the teacher's single point of view about feedback content.

The literature review draws attention to a particularly limiting condition that affects OAoL. Twenty-six percent of analyzed papers agree that current education is ruled by assessment traditions that are very difficult to change and that are part of what is considered status quo. Reviewed papers show that this phenomenon is not focused on a particular geographic region. Although there are exceptions, traditional assessment is a globally widespread reality.

In addition to these cultural limitations, there are institutional and governmental rules and regulations that increasingly reaffirm this tradition (Chetwynd & Dobbyn, 2011). Standardized tests and international benchmarks, such as the Programme for International Student Assessment (PISA), eventually become governmental standards or reference points, which in turn trickle down to educational institutions and into classroom practices. Any alternative to the established norm automatically enters unknown territory and is therefore seen as "risky and dangerous," which is one of the main ideas that hinder change in traditional assessment (Boud, Cohen, & Sampson, 1999; Heller, Watson, Komar, Min, & Perunovic, 2007; Maslovaty & Kuzi, 2002; Stoiber & Vanderwood, 2008).

In addition to the above, the literature shows an emerging attempt to shift the traditional concept of assessment, moving from a selective and quantitative conceptualization of assessment to a more flexible and participatory approach. However, this change is not yet reflected in concrete and coherent actions at the institutional level, which is identified as an unfavorable factor for implementing innovative conceptions of ICT-based assessment such as OAoL.

Ninety percent of the texts analyzed in this literature review put particular emphasis on the relevance of applying different forms of assessment (self- and co-assessment or peer assessment) in addition to assessment conducted by teachers (Sitzmann, Ely, Brown, & Bauer, 2010). This is particularly relevant for OAoL due to its technological context. Literature shows that the more technological mediation, the greater the presence of peer and self-assessment, which becomes a very interesting subject for instructional and assessment designers of blended, elearning, or even MOOC learning experiences (Barry, 2012; Budimac, Putnik, Ivanović, Bothe, & Schuetzler, 2011; Chang, Tseng, & Lou, 2012; De Grez, Valcke, & Roozen, 2012; Gielen, Dochy, & Onghena, 2011; Lai & Ng, 2011; Shih, 2011).

Regarding the above, peer and self-assessment are valuable components of OAoL because they introduce learners to the necessary ethical values of responsibility and honesty throughout the whole assessment exercise, allowing it to be conceived in a way that is farther from grading and closer to the strengthening of learning. This creates a context of mutual trust in which the teacher's role in preventing "cheating" is no longer needed, so teachers can focus their energy on supporting and promoting students' learning via both teacher and peer feedback.

In that sense, Castillo-Merino and Serradell-López (2014) propose consultative assessment processes so that there are collective constructions that contribute not just to the strengthening of motivation, which is of great importance, but also to extending the exercise to the metacognitive generation driven by the rewards of assessment in collaboration. This can be carried out in the collaborative construction of rubrics and assessment tools in which teacher and students work in consensus to take into account, for example, students' learning styles and their preferences for the type and conditions of assessment.

It could be said that the boundaries that have traditionally been applied to a "class" for students are disappearing because of the integration of the Internet into academic dynamics of educational institutions. Current conception of a "class" for many of today's students includes an increasingly ICT-based complex network of interactions (Hartman, Dziuban, & Brophy-Ellison, 2007). If this is true, within such complexity traditional assessment offers a poor opportunity for interaction. Perhaps we should consider new protocols or new assessment methods leading to spaces of greater reflection and interaction.

In addition to the above, and recognizing that OAoL is a process based on the use of ICT, the results show that the technology should not be as important as the application of the attributes of "openness," which would not have the same scope outside their digital ecology. In that vein, it should be recognized that technology is a tool that can generate transformation or simply become a distraction from innovation, doing things the same way but with new mediations. That is why it makes sense to consider that it is how one assesses with technology that would generate transformation in such educational practice. In this context, the application of the attributes of "openness" starts to generate new possibilities to think differently about assessment and then to assess differently.

OAoL, then, becomes an alternative to traditional forms of assessment. Without a doubt, the implementation of this open educational practice would significantly increase participation and empowerment of the students in their learning and assessment. In this regard, it is noteworthy that transformation of the traditional assessment culture will be an arduous task; a process that will require a significant investment of energy, time, and resources.

Thus, it is expected that OAoL experiences will produce an emerging transformation in assessment and, although unintentionally, a transformation in teaching practices. This is interesting as a potential innovation strategy that seeks to break the resistance to change of teachers who are engaged in traditional educational practices.

Moreover, the transformation of assessment into a more open process, conducted under the application of some of the attributes of "openness," makes assessment more formative and less teacher-centered and shifts the focus away from the mere measurement of learning outcomes. Thus, assessment becomes a moment to learn, not just the space in which the learner is accountable for their learning.

Besides the above, it is important to note that OAoL should not be seen as a panacea in terms of student assessment. While it may be considered a new practice, it is prudent to consider it as complementary to other forms or types of assessment. It should be understood that any learning assessment deals with people with distinct expectations, interests, learning styles, backgrounds, educational contexts, and availability in terms of time and space. As a result, using multiple mechanisms to identify and enhance learning should not be discounted. It should also be recognized that the presence of OAoL does not imply the complete elimination of traditional assessment, which can begin to transform itself, preserving its valuable components and complementing them with open strategies, better resources, and more flexible time constraints, and above all, following the discourse that the student is the central axis of any real learning process.

Finally, "openness" puts us in a place where different elements traditionally considered inappropriate or unacceptable become valid or even desirable in the educational scenario. This is why it is necessary to change the way of thinking about assessment, which creates major challenges, both institutional and personal. In that sense, an educational institution that considers implementing open assessment practices will be in permanent tension with an educational system that is not used to them and that works within a normative framework that is inconsistent with the nature of OAoL. Moreover, OAoL presents personal challenges for both teachers and students: teachers must be willing to cede the control they have traditionally had over assessment, and students must be able to abandon the idea that assessment is equal to grading or even promotion and instead recognize that it is really a space to keep learning about themselves and from others.

Derived from the findings of this study, some interesting topics for future research appeared. One of them has to do with a possible relationship between the commitment generated by OAoL empowerment and decreasing drop-out rates or strengthening students' academic success.

One of the limitations of this study that merits further research efforts has to do with the contribution of OAoL to the management of the permanent tension between the need for educational innovation and the responsibility that educational institutions face to ensure their quality. In fact, one of the immediate problems of OAoL is its incompatibility with some of the assessment methods (accepted by education systems) that educational institutions have traditionally used to ensure the quality of their graduates. In this sense, OAoL could be implemented progressively and as a complement to traditional assessment methods through multiple-case research projects. This would generate sufficient institutional experiences to validate the relevance of OAoL as a fully reliable assessment alternative.

Ahmed, J. U. (2010). Documentary research method: New dimensions. Industrial Journal of Management & Social Sciences, 4(1), 1–14.

Angeli, C., & Valanides, N. (2009). Epistemological and methodological issues for the conceptualization, development, and assessment of ICT–TPCK: Advances in technological pedagogical content knowledge (TPCK). Computers & Education, 52(1), 154–168.

Astin, A. W., & Lising, A. (2012). Assessment for excellence: The philosophy and practice of assessment and evaluation in higher education. Lanham, Maryland: Rowman & Littlefield Publishers. Retrieved from https://goo.gl/Wl4UTk

Barry, S. (2012). A video recording and viewing protocol for student group presentations: Assisting self-assessment through a Wiki environment. Computers & Education, 59(3), 855–860.

Bartolomé, A., Martínez-Figueira, E., & Tellado-González, F. (2014). La evaluación del aprendizaje en red mediante blogs y rúbricas: ¿complementos o suplementos? [Networked assessment of learning through blogs and rubrics: complements or supplements?]. REDU. Revista de Docencia Universitaria, 12(1), 159–176.

Beaumont, C., O'Doherty, M., & Shannon, L. (2011). Reconceptualising assessment feedback: A key to improving student learning? Studies in Higher Education, 36(6), 671–687.

Black, P., & Wiliam, D. (2009). Developing the theory of formative assessment. Educational Assessment, Evaluation and Accountability (formerly: Journal of Personnel Evaluation in Education), 21(1), 5–31.

Botta-Ferret, E., & Cabrera-Gato, J. E. (2007). Minería de textos: una herramienta útil para mejorar la gestión del bibliotecario en el entorno digital [Text mining: a useful tool to improve librarian's management in the digital environment]. Acimed, 16(4), 1–12.

Bottino, R. M. (2004). The evolution of ICT-based learning environments: Which perspectives for the school of the future? British Journal of Educational Technology, 35(5), 553–567.

Boud, D., Cohen, R., & Sampson, J. (1999). Peer learning and assessment. Assessment & Evaluation in Higher Education, 24(4), 413–426.

Brailovsky, C. A. (2001). Educación médica, evaluación de las competencias. In Aportes para un cambio curricular en La Argentina (pp. 103–122). Buenos Aires: University Press. Retrieved from http://www.aspefam.org.pe/intranet/CEDOSA/Brailosky.pdf

Brown, G. A., Bull, J., & Pendlebury, M. (2013). Assessing student learning in higher education. New York: Routledge. Retrieved from https://goo.gl/JVggO7

Budimac, Z., Putnik, Z., Ivanović, M., Bothe, K., & Schuetzler, K. (2011). On the assessment and self-assessment in a students teamwork based course on software engineering. Computer Applications in Engineering Education, 19(1), 1–9.

Castillo-Merino, D., & Serradell-López, E. (2014). An analysis of the determinants of students' performance in e-learning. Computers in Human Behavior, 30, 476–484.

Cebrián de la Serna, M. (2011). Los ePortafolios en la supervisión del Practicum: Modelos pedagógicos y soportes tecnológicos. Retrieved from http://digibug.ugr.es/handle/10481/15361

Chang, C.-C., Tseng, K.-H., & Lou, S.-J. (2012). A comparative analysis of the consistency and difference among teacher-assessment, student self-assessment and peer-assessment in a Web-based portfolio assessment environment for high school students. Computers & Education, 58(1), 303–320.

Chaudhary, S. V. S., & Dey, N. (2013). Assessment in open and distance learning system (ODL): A challenge. Open Praxis, 5(3), 207–216.

Chetwynd, F., & Dobbyn, C. (2011). Assessment, feedback and marking guides in distance education. Open Learning, 26(1), 67–78.

Chiappe, A. (2012). Prácticas educativas abiertas como factor de innovación educativa con TIC. Boletín REDIPE, 818(1), 6–12.

Cobcroft, R. S., Towers, S. J., Smith, J. E., & Bruns, A. (2006). Mobile learning in review: Opportunities and challenges for learners, teachers, and institutions. In Proceedings of Online Learning and Teaching (OLT) Conference 2006 (pp. 21–30). Brisbane: Queensland University of Technology. Retrieved from http://eprints.qut.edu.au/5399

Debuse, J., Lawley, M., & Shibl, R. (2007). The Implementation of an Automated Assessment Feedback and Quality Assurance System for ICT Courses. Journal of Information Systems Education, 18(4), 491–502.

De Grez, L., Valcke, M., & Roozen, I. (2012). How effective are self-and peer assessment of oral presentation skills compared with teachers' assessments? Active Learning in Higher Education, 13(2), 129–142.

de la Cruz Flores, G., & Abreu Hernández, L. F. (2014). Rúbricas y autorregulación: pautas para promover una cultura de la autonomía en la formación profesional. REDU. Revista de Docencia Universitaria, 12(1), 31–48.

Denscombe, M. (2014). The good research guide: for small-scale social research projects. McGraw-Hill Education (UK). Retrieved from https://goo.gl/s8es0l

Dochy, F., Segers, M., & Dierick, S. (2002). Nuevas vías de aprendizaje y enseñanza y sus consecuencias: una nueva era de evaluación. Revista de Docencia Universitaria, 2(2). Retrieved from http://revistas.um.es/redu/article/view/20051/19411

Dos Santos, A. (2013). Educación abierta: historia, práctica y el contexto de los recursos educacionales abiertos. In B. Santana, C. Rossini, & N. De Luca (Eds.), Recursos educacionales abiertos. Prácticas colaborativas y políticas públicas (pp. 71–88). Sao Paulo: EDUFBA - Casa da Cultura Digital.

Downes, S. (2013). The role of open educational resources in personal learning. In R. McGreal, W. Kinuthia, & S. Marshall (Eds.), Open educational resources: Innovation, research and practice (pp. 207–221). Vancouver: Commonwealth of Learning and Athabasca University.

Drigas, A. S., & Ioannidou, R.-E. (2013). ICTs in special education: A review. In Information Systems, E-learning, and Knowledge Management Research (pp. 357–364). Springer. Retrieved from http://link.springer.com/chapter/10.1007/978-3-642-35879-1_43

Franklin, M., Stam, P., & Clayton, T. (2013). ICT impact assessment by linking data across sources and countries. Yearbook on Productivity 2008, 155.

Gallego-Arrufat, M.-J., & Raposo-Rivas, M. (2014). Compromiso del estudiante y percepción del proceso evaluador basado en rúbricas. REDU. Revista de Docencia Universitaria, 12(1), 197–215.

Gielen, S., Dochy, F., & Onghena, P. (2011). An inventory of peer assessment diversity. Assessment & Evaluation in Higher Education, 36(2), 137–155.

Gikandi, J. W., Morrow, D., & Davis, N. E. (2011). Online formative assessment in higher education: A review of the literature. Computers & Education, 57(4), 2333–2351.

Gillespie, H. (2014). Unlocking learning and teaching with ICT: Identifying and overcoming barriers. London: Routledge. Retrieved from https://goo.gl/oDUTJq

Gipps, C. V. (2005). What is the role for ICT-based assessment in universities? Studies in Higher Education, 30(2), 171–180.

Guba, E., & Lincoln, Y. (2000). Paradigmas en competencia en la investigación cualitativa [Paradigms in qualitative research competence]. In C. Denman & J. Haro (Eds.), Por los rincones. Antología de métodos cualitativos en la investigación social [In the corners. Anthology of qualitative methods in social research] (pp. 113–145). Sonora, México: Colegio de Sonora. Retrieved from https://goo.gl/8GTeQx

Hartman, J., Dziuban, C., & Brophy-Ellison, J. (2007). Faculty 2.0. Educause Review, 5, 62–76.

Hatziapostolou, T., & Paraskakis, I. (2010). Enhancing the Impact of Formative Feedback on Student Learning through an Online Feedback System. Electronic Journal of E-Learning, 8(2), 111–122.

Heller, D., Watson, D., Komar, J., Min, J.-A., & Perunovic, W. Q. E. (2007). Contextualized personality: Traditional and new assessment procedures. Journal of Personality, 75(6), 1229–1254.

Hwang, G.-J., & Chang, H.-F. (2011). A formative assessment-based mobile learning approach to improving the learning attitudes and achievements of students. Computers & Education, 56(4), 1023–1031.

Irons, A. (2007). Enhancing learning through formative assessment and feedback. London: Routledge. Retrieved from https://goo.gl/jnPR3D

Joinson, A. N. (2003). Understanding the psychology of internet behaviour. Virtual worlds, real lives. Revista Iberoamericana de Educación a Distancia, 6(2), 190.

Jordan, S., & Mitchell, T. (2009). e-Assessment for learning? The potential of short-answer free-text questions with tailored feedback. British Journal of Educational Technology, 40(2), 371–385.

Kamens, D. H., & McNeely, C. L. (2010). Globalization and the growth of international educational testing and national assessment. Comparative Education Review, 54(1), 5–25.

Knox, J. (2013). The limitations of access alone: Moving towards open processes in education technology. Open Praxis, 5(1), 21–29.

Lai, Y. C., & Ng, E. M. (2011). Using wikis to develop student teachers' learning, teaching, and assessment capabilities. The Internet and Higher Education, 14(1), 15–26.

Li, L., Liu, X., & Steckelberg, A. L. (2010). Assessor or assessee: How student learning improves by giving and receiving peer feedback. British Journal of Educational Technology, 41(3), 525–536.

Lim, C. P., & Oakley, G. (2013). Information and communication technologies (ICT) in primary education. In Creating Holistic Technology-Enhanced Learning Experiences (pp. 1–18). Rotterdam: Springer. Retrieved from http://link.springer.com/chapter/10.1007/978-94-6209-086-6_1

Livingstone, S. (2012). Critical reflections on the benefits of ICT in education. Oxford Review of Education, 38(1), 9–24.

Machi, L. A., & McEvoy, B. T. (2012). The literature review: Six steps to success. Thousand Oaks: Corwin Press. Retrieved from https://goo.gl/XoDa0P

Mainali, B. R., & Heck, A. (in press). Comparison of traditional instruction on reflection and rotation in a Nepalese high school with an ICT-rich, student-centered, investigative approach. International Journal of Science and Mathematics Education, 1–21.

Maslovaty, N., & Kuzi, E. (2002). Promoting motivational goals through alternative or traditional assessment. Studies in Educational Evaluation, 28(3), 199–222.

Montgomery, K. (2002). Authentic tasks and rubrics: Going beyond traditional assessments in college teaching. College Teaching, 50(1), 34–40.

Mora, G. M., & Romero, I. M. M. (2014). Innovación educativa y TIC: Uso del Ciberhabla para fomentar el aprendizaje. Revista Iberoamericana de Producción Académica Y Gestión Educativa. Retrieved from http://pag.org.mx/index.php/PAG/article/view/79

Nicol, D. J., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218.

Panadero, E., & Jonsson, A. (2013). The use of scoring rubrics for formative assessment purposes revisited: A review. Educational Research Review, 9, 129–144.

Pera, A. (2013). The social aspects of technology-enhanced learning situations. Geopolitics, History, and International Relations, (2), 118–123.

Redecker, C., & Johannessen, Ø. (2013). Changing assessment—Towards a new assessment paradigm using ICT. European Journal of Education, 48(1), 79–96.

Richards, C. (2005). The design of effective ICT-supported learning activities: Exemplary models, changing requirements, and new possibilities. Language Learning & Technology, 9(1), 60–79.

Rivasy, M. R., De La Serna, M. C., & Martínez-Figueira, E. (2014). Electronic rubrics to assess competences in ICT subjects. European Educational Research Journal, 13(5), 584–594.

Rodriguez, M. J. (2005). Aplicación de las TIC a la evaluación de alumnos universitarios [Application of ICT to university student's assessment]. Teoría de La Educación: Educación Y Cultura En La Sociedad de La Información, 6(2), 1–6.

Sandelowski, M., Docherty, S., & Emden, C. (1997). Focus on qualitative methods qualitative metasynthesis: Issues and techniques. Research in Nursing and Health, 20, 365–372.

Semenov, A. (2005). Las tecnologías de la información y la comunicación en la enseñanza: Manual para docentes o Cómo crear nuevos entornos de aprendizaje abierto por medio de las TIC [Information and communication technologies in teaching: A handbook for teachers or how to create new open learning environments through ICT]. Paris: Unesco. Retrieved from http://unesdoc.unesco.org/images/0013/001390/139028s.pdf

Serrano, J., & Cebrian, M. (2011). Study of the impact on student learning using the eRubric tool and peer assessment. Education in a Technological World: Communicating Current and Emerging Research and Technological Efforts, 421–427.

Shih, R.-C. (2011). Can Web 2.0 technology assist college students in learning English writing? Integrating Facebook and peer assessment with blended learning. Australasian Journal of Educational Technology, 27(5), 829–845.

Simpson, O. (2013). Supporting students in online open and distance learning. London, UK: RoutledgeFalmer. Retrieved from https://goo.gl/va52aX

Sitthiworachart, J., & Joy, M. (2008). Computer support of effective peer assessment in an undergraduate programming class. Journal of Computer Assisted Learning, 24(3), 217–231. doi: http://doi.org/10.1111/j.1365-2729.2007.00255.x

Sitzmann, T., Ely, K., Brown, K. G., & Bauer, K. N. (2010). Self-assessment of knowledge: A cognitive learning or affective measure? Academy of Management Learning & Education, 9(2), 169–191.

Stoiber, K. C., & Vanderwood, M. L. (2008). Traditional assessment, consultation, and intervention practices: Urban school psychologists' use, importance, and competence ratings. Journal of Educational and Psychological Consultation, 18(3), 264–292.

Tempelaar, D. T., Heck, A., Cuypers, H., van der Kooij, H., & van de Vrie, E. (2013). Formative assessment and learning analytics. In Proceedings of the Third International Conference on Learning Analytics and Knowledge (pp. 205–209). ACM. Retrieved from http://dl.acm.org/citation.cfm?id=2460337

Thorne, S., Jensen, L., Kearney, M. H., Noblit, G., & Sandelowski, M. (2004). Qualitative metasynthesis: Reflections on methodological orientation and ideological agenda. Qualitative Health Research, 14(10), 1342–1365.

Tinio, V. L. (2003). ICT in education. United Nations Development Programme-Asia Pacific Development Information Programme. Retrieved from http://2002.bilisimsurasi.org.tr/egitim/eprimer-edu.pdf

Torres-Gordillo, J.-J., & Perera-Rodríguez, V.-H. (2010). La rúbrica como instrumento pedagógico para la tutorización y evaluación de los aprendizajes en el foro online en educación superior [The rubric as a pedagogical tool for tutoring and assessment of learning in higher education's online forums]. Pixel-Bit: Revista de Medios Y Educación, (36), 141–149.

Valdivia, I. M. á. (2009). Evaluar para contribuir a la autorregulación del aprendizaje. Electronic Journal of Research in Educational Psychology, 7(19), 1007–1030.

Van den Berg, I., Admiraal, W., & Pilot, A. (2006). Designing student peer assessment in higher education: Analysis of written and oral peer feedback. Teaching in Higher Education, 11(2), 135–147.

Voogt, J. (2003). Consequences of ICT for aims, contents, processes, and environments of learning. In Curriculum landscapes and trends (pp. 217–236). Springer. Retrieved from http://link.springer.com/chapter/10.1007/978-94-017-1205-7_13

Voogt, J., Knezek, G., Cox, M., Knezek, D., & ten Brummelhuis, A. (2013). Under which conditions does ICT have a positive effect on teaching and learning? A call to action. Journal of Computer Assisted Learning, 29(1), 4–14.

Walsh, D., & Downe, S. (2005). Meta-synthesis method for qualitative research: A literature review. Journal of Advanced Nursing, 50(2), 204–211.

Wang, Q. (2008). A generic model for guiding the integration of ICT into teaching and learning. Innovations in Education and Teaching International, 45(4), 411–419.

Webb, M. E. (2005). Affordances of ICT in science learning: implications for an integrated pedagogy. International Journal of Science Education, 27(6), 705–735.

Weimer, M. (2002). Learner-centered teaching: Five key changes to practice. San Francisco: John Wiley & Sons. Retrieved from https://goo.gl/WRoZWO

Whittemore, R., & Knafl, K. (2005). The integrative review: updated methodology. Journal of Advanced Nursing, 52(5), 546–553.

Wiliam, D. (2011). What is assessment for learning? Studies in Educational Evaluation, 37(1), 3–14.

Willis, J. (2011). Affiliation, autonomy and assessment for learning. Assessment in Education: Principles, Policy & Practice, 18(4), 399–415.

Yildiz, M., Khaddage, F., Shonfeld, M., Lattemann, C., Reed, H., Keengwe, S., & Shepherd, G. (2014). MOOCs to SIMMs: M-learning around the world. In Society for Information Technology & Teacher Education International Conference (Vol. 2014, pp. 1145–1151). Retrieved from http://www.editlib.org/p/147340/proceeding_147340.pdf

Yorke, M. (2003). Formative assessment in higher education: Moves towards theory and the enhancement of pedagogic practice. Higher Education, 45(4), 477–501.

Zimmer, L. (2006). Qualitative meta-synthesis: A question of dialoguing with texts. Journal of Advanced Nursing, 53(3), 311–318.

Zu-wang, Y. (2007). Reviews about teaching and the cultivation of creative talent. Research in Higher Education of Engineering, 6, 128–130.

Open Assessment of Learning: A Meta-Synthesis by Andres Chiappe, Ricardo Pinto, Vivian Arias is licensed under a Creative Commons Attribution 4.0 International License.