Volume 18, Number 2

Robert Bodily, Rob Nyland, and David Wiley

Brigham Young University

The RISE (Resource Inspection, Selection, and Enhancement) Framework is a framework supporting the continuous improvement of open educational resources (OER). The framework is an automated process that identifies learning resources that should be evaluated and either eliminated or improved. This is particularly useful in OER contexts where the copyright permissions of resources allow for remixing, editing, and improving content. The RISE Framework presents a scatterplot with resource usage on the x-axis and grade on the assessments associated with that resource on the y-axis. This scatterplot is broken down into four different quadrants (the mean of each variable being the origin) to find resources that are candidates for improvement. Resources that reside deep within their respective quadrant (farthest from the origin) should be further analyzed for continuous course improvement. We present a case study applying our framework with an Introduction to Business course. Aggregate resource use data was collected from Google Analytics and aggregate assessment data was collected from an online assessment system. Using the RISE Framework, we successfully identified resources, time periods, and modules in the course that should be further evaluated for improvement.

Keywords: OER, open educational resources, course evaluation, learning analytics, continuous improvement, The RISE Framework

Adoption of Open Educational Resources (OER) is increasing throughout the field of education. According to the Hewlett Foundation (n.d.),

OER are teaching, learning, and research resources that reside in the public domain or have been released under an intellectual property license that permits their free use and re-purposing by others. Open educational resources include full courses, course materials, modules, textbooks, streaming videos, tests, software, and any other tools, materials, or techniques used to support access to knowledge (para. 2).

Because of the intellectual property licenses of OER, those who adopt them are allowed to exercise the 5R permissions - retain, reuse, revise, remix, and redistribute. This means that faculty can modify OER to meet the specific needs of students. Additionally, faculty can modify their content after a course concludes based on student performance and other feedback. While OER are licensed in a manner that permits their revision and improvement, this permission provides no information about what should be changed or improved.

The process known as continuous improvement relies on student data regarding assessment performance and content use to create a feedback loop to improve curriculum. Using data from the student learning process is the domain of learning analytics - an emerging field defined as "the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs" (Siemens, 2010, para. 6). While a small portion of learning analytics research has focused on using analytics to evaluate courses to improve design (Pardo, Ellis, & Calvo, 2015; Rienties, Toetenel, & Bryan, 2015), no studies focus on continuous improvement of content using learning analytics. This is likely because textbooks and other digital courseware traditionally used in courses are subject to copyright restrictions that make it illegal for faculty to continuously improve or change them. Consequently, the continuous improvement process can provide faculty with information about what needs revising, but not the permission to do so.

The purpose of this paper is to explicitly connect strengths of OER (reusable and editable content) with strengths of learning analytics (unobtrusively collected course evaluation data) to enable the continuous improvement of educational content. First, we review the literature surrounding OER use, learning analytics and design, and continuous course improvement. Then, we propose the RISE (Resource Inspection, Selection, and Enhancement) Framework for using learning analytics to identify resources that need continuous improvement. Finally, we present a case study using content usage and assessment data collected from an OER course to apply our framework in a real-world scenario.

As the adoption of OER has increased, so have efforts to conduct research on the impact of OER. Bliss, Robinson, Hilton, and Wiley (2013) outlined a framework categorizing the four major areas that OER research should address: cost, outcomes, use, and perceptions (COUP). This study focuses on the use aspect of this framework. While the majority of use studies have focused on (1) faculty adoption of OER or (2) ways that users exercise the 5R permissions (retain, reuse, revise, remix, redistribute), we focus on the way in which students use OER.

In looking at the studies that have examined student use of OER, we have separated them into the following categories for our analysis: self-report use data, digital download data, and digital access data tied to outcomes.

These research studies have tried to understand a variety of facets of student use of OER, including frequency and motivation for use. Bliss et al. (2013) surveyed 490 students from eight community colleges that had recently adopted OER in place of a traditional textbook as part of Project Kaleidoscope. Overall, there was no difference in students' responses to how often they used their textbook and how often they used their Project Kaleidoscope texts, with a majority of students using their textbooks more than 2-3 times per week.

Looking exclusively at students using OER, Lindshield and Adhikari (2013) conducted a survey with face-to-face and online students asking them about their resource use. They found that online students used the resources more often - two-thirds of online students used the resources twice a week or more while only one-third of on-campus students reported doing the same. Like Bliss et al. (2013), they also found that the majority of students were using their textbook 2-3 times or more per week.

Internationally, Olufunke and Adegun (2014) looked at student use of OER at an African university. While students reported a high level of use (2.21 out of 3), it is unclear if the researchers properly defined OER for their research subjects - the question prompt read "I get relevant learning materials online." Students may have been reporting on their online learning materials usage generally rather than specific OER use. In another usage study, at a Chinese university, Hu, Li, Li, and Huang (2015) reported that 79% of students had used some form of OER. Sixty percent of them reported using OER to assist their own personal learning, citing OER videos as the most frequently used.

Taking a qualitative approach to OER use, Petrides, Jimes, Middleton-Detzner, Walling, and Weiss (2011) held focus groups with students who had used a digital open textbook in their class. While their research did not specifically ask about frequency of use, students reported that the availability of the resources had a positive impact on their study habits.

One of the most interesting use studies we found was an evaluation of open science textbooks from the Open Textbook Project. Here, Price (2012) examined how teachers and secondary students used open textbooks in their class. Overall, she found that teachers using Open Textbook Project textbooks reported using a greater portion of the textbook compared with teachers using traditional textbooks (63% vs. 50%). In order to further investigate student use of open textbooks, the author examined student markings (e.g., highlighting or notes) in their textbooks. While she found no markings in the traditional textbooks (because such marking is prohibited by school policies), she found that students using open textbooks did mark up their textbook - at points mostly prescribed by the instructor.

While self-report data on student use of OER is useful in helping us gain a preliminary understanding of how students use OER, this is not necessarily a scalable method of collecting OER use data and may suffer from self-report bias, or biases introduced due to the nature of human memory or some people's desire to appear to fit an ideal. To obtain a more comprehensive understanding of how students use OER, we next discuss the studies which collected digital access or download data on how frequently students interacted with their textbooks.

Other studies have tried to get better measures of student OER use by looking at access to online learning materials. An example of this is Feldstein et al. (2012) who conducted a 1-year pilot study with 991 students in nine core courses at Virginia State University. These courses adopted Flat World Knowledge OER textbooks in place of traditional textbooks. In the study, the authors tracked student resource downloads during fall and spring semesters. They found that more students viewed OER textbooks online in OER sections of the course when compared with those that purchased textbooks in a traditional section of the course (90% vs. 47%). They also found that PDF was the preferred method of resource download, ePub and MOBI file downloads increased over time, and self-report file preference was consistent with actual download usage.

Allen et al. (2015) conducted a study at UC Davis that used ChemWiki, an online chemistry OER, as the primary text in place of a commercial textbook. The treatment group consisted of 478 students that used ChemWiki and the control group consisted of 448 students that used a traditional textbook. A weekly time-on-task survey was sent to students to determine how much they were using their respective resource. They found that there was a moderate correlation between self-report resource use (time spent) and actual resource use (number of pageviews in the ChemWiki resource). In addition, while the ChemWiki group spent 24 minutes more with the resource each week than the traditional textbook group, all students spent the same amount of time studying per week.

These use studies are measuring use in an unobtrusive way and help us better understand how much students use OER. However, they are not connecting student resource use to student outcomes. This connection between resource use and achievement scores is essential to enable continuous course improvement. The studies discussed in the next section describe the results from linking outcomes to resource use.

Lovett, Meyer, and Thille (2008) described a multiyear study of the Open Learning Initiative (OLI), an OER project from Carnegie Mellon University. While the majority of their study was focused on comparing outcomes of face-to-face sections of an Intro to Statistics class with online sections exclusively using OLI, a part of their study examines OER use. They ran a correlation between the time spent on certain activities in the course and assessments that aligned to them. Overall, they found a moderate (r = .3) correlation between time spent on an activity and performance on an assessment.

In a similar study from OLI, Steif and Dollár (2009) wanted to see if learning gains in a mechanical engineering course were related to use of the OLI course materials. To measure this, they looked at the correlation between the number of cognitive tutors (a type of online activity) completed and performance gains for a module. The correlation was positive, but relatively low (r = .274).

In a final study linking performance and OER usage, Gil Vázquez, Candelas Herías, Jara Bravo, García Gómez, and Torres Medina (2013) looked at statistics from an OpenCourseWare (OCW) that they developed for a computer networks class. The content consisted of a blog, multimedia videos, electronic documents, and interactive simulations. In the first part of the study, the researchers surveyed and interviewed students in the class to get their opinion on the resources, which was positive overall. Additionally, the researchers examined the link between OER use and student performance. They found that there was a strong, but not statistically significant, relationship between OER use and achieving a high grade (r = .74, p = .068).

Thus far we have focused on studies relating to student use of OER. With respect to using learning analytics to inform course redesign, little literature exists in connection with OER. We next examine pertinent literature outside of OER to understand how learning analytics has been used in the course redesign process.

Although learning analytics can provide instructors and designers with the information they need to evaluate and redesign their courses, there are only a few studies examining how learning analytics can support the course redesign process. First, we will review a few articles that examined course design implications suggested through learning analytics. Second, we will review articles discussing frameworks or case studies of course redesign using learning analytics.

Course design. Rienties, Toetenel, and Bryan (2015) examined the relationship between course design, online resource use, and student achievement using 32 courses at the Open University. Their cluster analysis yielded "four distinctive learning design patterns: constructivist, assessment-driven, balanced-variety, and social constructivist" (p. 318). These course design patterns had an impact on student engagement and student achievement. Based on these findings, it is important to be mindful of what kind of design is used if the goal is to foster increased student engagement and achievement.

Pardo, Ellis, and Calvo (2015) argued that learning analytics data does not provide enough context to inform learning design by itself. They used the Student Approaches to Learning framework to conduct a mixed-methods study investigating the benefit of using quantitative and qualitative data to evaluate learning design. In an engineering class of 300 students, they found that their quantitative and qualitative approach improved the course evaluation and affected how they interpreted the quantitative data. This study illustrates the need for both quantitative and qualitative data to ensure the most accurate learning design evaluation. Because of this information, we have included a quantitative and a qualitative component in our content improvement framework (discussed in the RISE Framework section).

Course redesign. Lockyer, Heathcote, and Dawson (2013) primarily look at the intersection of learning analytics and learning design. Learning design is a field interested in documenting specific instructional sequences so educators can copy and change sequences according to local needs. Towards the end of their article, they mention how learning analytics can benefit course design: "Revisiting the learning analytics collected during the course can support teachers when they are planning to run the course again or when the learning design is being applied to a different cohort or context" (p. 1454). This article is useful in providing a framework to bridge the gap between learning analytics and learning design. This framework focused on course sequence, but did not discuss implications for content revisions. More research is needed to facilitate continuous course improvement in text content using learning analytics.

In another research study, Morse (2014) sent a survey to 71 program chairs at universities asking them what kinds of data would be beneficial to help them redesign their courses. Using this data, Morse developed a framework for the kinds of data that would be useful in redesigning courses. This framework includes knowledge support requirements, data requirements, interface requirements, and functional requirements. These requirements may be useful in helping instructors evaluate and improve several aspects of their course, but again, they do not address how to make content changes in a course.

Continuous improvement is a term indicating a feedback loop between learning analytics data and instructional design stakeholders so they can make data-driven decisions about course design. Wiley (2009) discusses the benefits of using analytics for continuous improvement in an OER setting - in this case the Open High School of Utah (OHSU):

Because the Open Curriculum is licensed in such a way that we can revise materials directly, OHSU is able to engage in a highly data-driven curriculum improvement process. As students go through the online courses, their clicks and learning paths can be tracked and recorded. This information can be combined with item response theory and learning outcome analysis data to set priorities for curriculum or assessment revision empirically. This data-driven process of curriculum improvement should allow the Open Curriculum to reach a very high level of quality very quickly (p. 38).

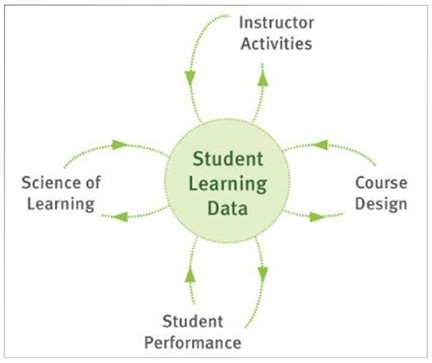

This continuous improvement process is also discussed by the Open Learning Initiative (OLI). Strader and Thille (2012) said "A key attribute of the OLI environment is that while students are working through the course, we are collecting analytics data and using those data to drive multiple feedback loops" (p. 204). These feedback loops are illustrated in Figure 1. They continue,

Analysis of these interaction-level data allow us to observe how students are using the material in the course and assess the impact of their use patterns on learning outcomes. We are then able to take advantage of that analysis to iteratively refine and improve the course for the next group of students (p. 204).

Figure 1. Feedback loops used in the Open Learning Initiative. Figure is licensed under a CC-BY License, by Strader and Thille (2012).

While analytics help us identify what resources to modify in the continuous improvement process, we also need to have legal permissions to modify and improve the content. Wiley and Green (2012) discuss this benefit of using OER and learning analytics together: "OER provide instructors with free and legal permissions to engage in continuous quality-improvement processes such as incremental adaptation and revision, empowering instructors to take ownership and control over their courses and textbooks in a manner not previously possible" (p. 83). The unique nature of OER allows instructors and designers to improve curriculum in a way that might not be possible with a commercial, traditionally copyrighted learning resource.

While the aforementioned authors have discussed the possibilities of using analytics to engage in continuous improvement of OER, we were unable to locate any publications showing results from this process. In addition, we were unable to find a framework to help with the continuous improvement of content, meaning online text resources or textbooks in general. Synthesizing the results from our literature review, we have determined that a framework is needed to assist stakeholders in identifying which pieces of content need additional attention to facilitate continuous course improvement. This is particularly interesting in an OER context where instructors have the legal permissions necessary to change and improve content. In the remainder of this paper we will provide a framework for evaluating OER using learning analytics to facilitate their continuous improvement. We will then provide an example of how to apply this framework using data from an OER-based online course.

In order to continuously improve open educational resources, an automated process and framework are needed to make course content improvement practical, inexpensive, and efficient. One way to identify resources programmatically is to use a metric combining resource use and student grade on the corresponding outcome to determine whether a resource is similar to or different from other resources. Resources that differ significantly from others can be flagged for examination by instructional designers to determine why they were more or less effective than other resources. To achieve this, we propose the Resource Inspection, Selection, and Enhancement (RISE) Framework as a simple framework for using learning analytics to identify open educational resources that are good candidates for improvement efforts.

The framework assumes that both OER content and assessment items have been explicitly aligned with learning outcomes, allowing designers or evaluators to connect OER to the specific assessments whose success they are designed to facilitate. In other words, learning outcome alignment of both content and assessment is critical to enabling the proposed framework. Our framework is flexible regarding the number of resources aligned with a single outcome and the number of items assessing a single outcome.

The framework is composed of a 2 x 2 matrix. Student grade on assessment is on the y-axis. The x-axis is more flexible, and can include resource usage metrics such as pageviews, time spent, or content page ratings. Each resource can be classified as either high or low on each axis by splitting resources into categories based on the median value. By locating each resource within this matrix, we can examine the relationship between resource usage and student performance on related assessments. In Figure 2, we have identified possible reasons that may cause a resource to be categorized in a particular quadrant using resource use (x-axis) and grades (y-axis).

| | Low Use | High Use |
| --- | --- | --- |
| High Grades | High student prior knowledge, inherently easy learning outcome, highly effective content, poorly written assessment | Effective resources, effective assessment, strong outcome alignment |
| Low Grades | Low motivation or high life distraction of students, too much material, technical or other difficulties accessing resources | Poorly designed resources, poorly written assessments, poor outcome alignment, difficult learning outcome |

Figure 2. A partial list of reasons OER might receive a particular classification within the RISE framework.
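The quadrant assignment described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `classify_quadrants`, the toy resource names, and the toy values are all hypothetical. The split defaults to the median, as described in the framework; passing `split=mean` instead reproduces the mean-centered "origin" used in the case study.

```python
from statistics import mean, median

def classify_quadrants(resources, split=median):
    """Label each resource High/Low on use and grade relative to a cut point.

    `resources` maps a resource name to a (use, grade) pair. `split` is the
    function that picks the cut point on each axis (median by default; mean
    is a drop-in alternative). Values equal to the cut point count as Low.
    """
    use_cut = split([use for use, _ in resources.values()])
    grade_cut = split([grade for _, grade in resources.values()])
    labels = {}
    for name, (use, grade) in resources.items():
        use_label = "High Use" if use > use_cut else "Low Use"
        grade_label = "High Grades" if grade > grade_cut else "Low Grades"
        labels[name] = (use_label, grade_label)
    return labels

# Hypothetical toy data: (pageviews per student, mean grade on aligned items)
toy_resources = {
    "Reading A": (1.6, 0.82),
    "Reading B": (1.7, 0.60),
    "Video C": (0.5, 0.55),
    "Self-Check D": (0.6, 0.88),
    "Reading E": (1.0, 0.70),
}
quadrants = classify_quadrants(toy_resources)
for name, label in quadrants.items():
    print(name, label)
```

Treating ties as Low is an arbitrary choice made for this sketch; the framework itself does not specify how to handle a resource that sits exactly on a cut point.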

By utilizing this framework, designers can identify resources in their courses that are good candidates for additional improvement efforts. For instance, if a resource is in the High Use, High Grades quadrant, it may act as a model for other resources in the class. If a resource falls into the Low Use, Low Grades quadrant, it may warrant further evaluation by the designers to understand why students are ignoring it or why it is not contributing to student success. The goal of the framework is not to make specific design recommendations, but to provide a means of identifying resources that should be evaluated and improved. To further explain the framework, we next conduct a case study implementing the framework in an Introduction to Business class.

Participants (n = 1002) consisted of students enrolled in several sections of a Fall 2015 Introduction to Business course taught using a popular OER courseware platform. This course had an OER online text, videos, simulations, formative assessments, practice assessments, and summative assessments. The assessment system tracked student assessment scores for each question and quiz. Resource use data was collected using Google Analytics.

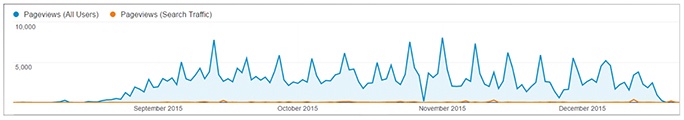

Resource use was measured using pageviews of the online resources normalized by the number of students that attempted assessment items for that outcome. We opted to use pageviews because content ratings were not available for these resources and time spent could not be accurately tracked, as students may have left a page open for days at a time without actually using the resource. This metric, pageviews per student, was the best way to analyze resource use data because not all students attempted or were assigned to complete all outcomes. Because we tracked page use using Google Analytics, resource use was aggregated at the class level rather than the student level. One potential problem with using Google Analytics for resource use in an OER context was that because resource pages are public, page use was not restricted to students in the course. We address this specific limitation below. Figure 3 shows all user pageviews compared with pageviews generated by search engine traffic (likely non-student views). Given the extremely limited number of pageviews generated by search engine traffic, we believe the pageview measure is an accurate representation of student resource use in the course.

Figure 3. Web traffic is shown from within the course and from external sources.

Assessment scores were collected for all students for all questions across all assessments. Then, each question was grouped with all of the other questions aligned to the same outcome. There were between 4 and 20 questions per outcome. These scores were averaged across all students to obtain one average score for each outcome. Finally, we connected pageview data to average assessment scores using the outcome alignments.
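The data preparation described in this section (pageviews normalized by the number of students attempting an outcome, and question scores averaged per outcome) might look roughly like the following sketch. The record layout, the function names, and the sample values are assumptions made for illustration; in particular, averaging over all question responses for an outcome is only one plausible reading of how the scores were aggregated.

```python
from collections import defaultdict

def summarize_outcomes(responses):
    """Return {outcome: (n_students_attempting, mean_score)}.

    `responses` is an iterable of (student_id, outcome_id, score) rows,
    one row per answered question, with scores between 0 and 1.
    """
    students = defaultdict(set)
    scores = defaultdict(list)
    for student_id, outcome, score in responses:
        students[outcome].add(student_id)
        scores[outcome].append(score)
    return {
        outcome: (len(students[outcome]), sum(vals) / len(vals))
        for outcome, vals in scores.items()
    }

def build_resource_table(pageviews, page_outcome, responses):
    """Return {page: (pageviews_per_student, mean_grade)} via outcome alignment."""
    outcomes = summarize_outcomes(responses)
    table = {}
    for page, views in pageviews.items():
        n_students, mean_grade = outcomes[page_outcome[page]]
        table[page] = (views / n_students, mean_grade)
    return table

# Hypothetical sample data (not from the study)
sample_responses = [
    ("s1", "outcome-1", 1.0), ("s1", "outcome-1", 0.0),
    ("s2", "outcome-1", 1.0), ("s2", "outcome-1", 1.0),
    ("s1", "outcome-2", 0.0), ("s1", "outcome-2", 1.0),
]
sample_pageviews = {"Reading X": 3, "Video Y": 2}      # class-level totals
sample_alignment = {"Reading X": "outcome-1", "Video Y": "outcome-2"}
resource_table = build_resource_table(
    sample_pageviews, sample_alignment, sample_responses
)
print(resource_table)
```

Because the pageview totals are aggregated at the class level, the sketch divides by the count of distinct students who attempted the aligned outcome rather than by per-student view counts.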

Once the metrics for page use and grades were collected, we applied the RISE framework by inspecting and selecting certain resources using a three-phase process. First, we conducted an outer quadrant analysis. After plotting all of the resources by their pageviews and grades, we identified the resources that were furthest away from the "origin" (where the average number of pageviews intersects the average grade). After resource identification and selection, a designer could evaluate the resources qualitatively to make the resources more useful for the students in the course. This evaluation process is not discussed in this paper but will be included in future work. Second, we examined all of the resources according to their page type (reading, video, simulation, etc.). Our goal in this phase was to look at what types of resources were more useful in helping students achieve course outcomes. Third, we identified extreme outliers for all of the resources and identified possible reasons for the anomalies.

The process of applying the RISE Framework is described below, and includes an outer quadrant analysis, analyses within each quadrant, analysis by page type, and extreme outlier analysis.

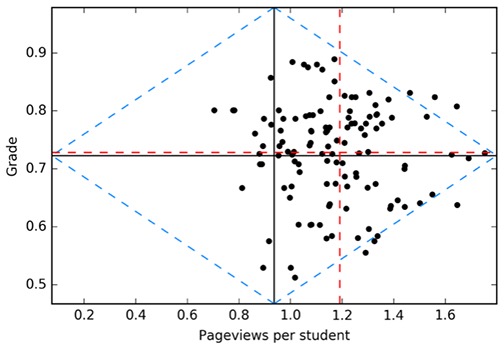

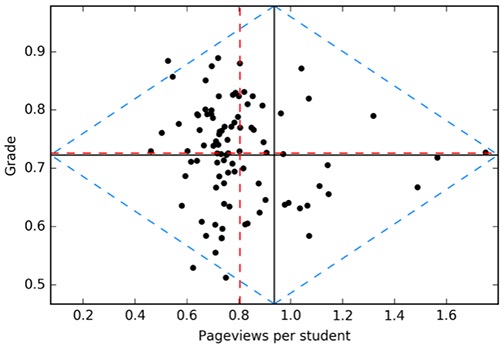

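After removing extreme outliers (discussed below), the distribution of resource use versus grades can be seen in Figure 4. This scatterplot is divided into four quadrants: quadrant 1 (high grades, high pageviews), quadrant 2 (high grades, low pageviews), quadrant 3 (low grades, low pageviews), and quadrant 4 (low grades, high pageviews).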

Figure 4. Scatterplot of all resources. The dashed blue line indicates an additive z-score greater than three, which is our cutoff point for resource identification.

We will now look more in depth at the examples in each of these quadrants that fall outside our cutoff value. To identify the resources in the furthest regions of the quadrants, we used an additive z-score approach. For each resource, we calculated the z-score (standardized distance from the mean in standard deviation units) of both its use and its associated grade, took the absolute value of each, and added the two together. The resulting combined z-score is an indicator of how far from the mean each data point lies in multivariate space. We use this metric to identify which resources should be further examined for continuous course improvement.
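As a rough illustration of the additive z-score, the sketch below computes the sum of the absolute z-scores for use and grade and flags resources above a cutoff of three (the value shown in Figure 4). The function names and the toy data are hypothetical, and the use of the population standard deviation is an assumption, since the text does not say which estimator was used.

```python
from statistics import mean, pstdev

def additive_z_scores(resources):
    """Map each resource name to |z_use| + |z_grade|.

    `resources` maps name -> (use, grade). Population standard deviation is
    an assumption; each axis must have nonzero spread.
    """
    uses = [use for use, _ in resources.values()]
    grades = [grade for _, grade in resources.values()]
    mean_use, sd_use = mean(uses), pstdev(uses)
    mean_grade, sd_grade = mean(grades), pstdev(grades)
    return {
        name: abs((use - mean_use) / sd_use) + abs((grade - mean_grade) / sd_grade)
        for name, (use, grade) in resources.items()
    }

def flag_resources(resources, cutoff=3.0):
    """Return (name, score) pairs above `cutoff`, largest score first."""
    scores = additive_z_scores(resources)
    flagged = [(name, score) for name, score in scores.items() if score > cutoff]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)

# Hypothetical toy data: (pageviews per student, mean grade)
example = {
    "Page A": (1.0, 0.5),
    "Page B": (1.0, 0.5),
    "Page C": (1.0, 0.5),
    "Page D": (1.0, 0.5),
    "Page E": (5.0, 0.9),
}
flagged = flag_resources(example)
print(flagged)
```

In this toy set only "Page E" is flagged: it sits about two standard deviations from the mean on each axis, while the other pages sit about half a standard deviation away on each.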

There are other measures indicating distance from the mean in multivariate space that could have been used - Mahalanobis distance for example. To see what differences exist between this approach and Mahalanobis distance, we ran an analysis using Mahalanobis distance as the distance calculation instead of our additive z-score approach. Our analysis yielded similar results, so we decided to keep the additive z-score approach because adding together z-scores for multiple variables is much easier to explain and calculate than the Mahalanobis distance.
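For comparison, a Mahalanobis distance for the two-variable case (use and grade) can be written without any external libraries by inverting the 2 x 2 covariance matrix by hand. This is a sketch under stated assumptions, not the analysis the authors ran: the function name, the sample-covariance estimator (n - 1 denominator), and the toy data are all illustrative choices.

```python
from math import sqrt
from statistics import mean

def mahalanobis_2d(resources):
    """Map each resource name to its Mahalanobis distance from the centroid.

    `resources` maps name -> (use, grade). Uses the sample covariance matrix.
    """
    names = list(resources)
    xs = [resources[name][0] for name in names]
    ys = [resources[name][1] for name in names]
    n = len(names)
    mx, my = mean(xs), mean(ys)
    # Entries of the 2x2 sample covariance matrix
    sxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    syy = sum((y - my) ** 2 for y in ys) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    det = sxx * syy - sxy ** 2
    if det == 0:
        raise ValueError("covariance matrix is singular")
    distances = {}
    for name, x, y in zip(names, xs, ys):
        dx, dy = x - mx, y - my
        # d^2 = [dx dy] * inverse(cov) * [dx dy]^T, expanded for the 2x2 case
        d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
        distances[name] = sqrt(max(d2, 0.0))  # guard against tiny negative rounding
    return distances

# Hypothetical toy data with uncorrelated use and grade
toy_points = {
    "Page A": (1.0, 0.5),
    "Page B": (3.0, 0.5),
    "Page C": (2.0, 0.4),
    "Page D": (2.0, 0.6),
}
distances = mahalanobis_2d(toy_points)
print(distances)
```

Unlike the additive z-score, this distance accounts for correlation between use and grade. When the two variables are uncorrelated, as in the toy data, both measures rank resources by standardized distance from the mean, which is consistent with the similar results reported above.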

Quadrant 1 analysis. The resources in quadrant 1 are those for which both use and performance on the related outcomes were high. As stated earlier, possible reasons for this could be that the resource was helpful in achieving outcomes or that the alignment between the resource and the outcome assessment was particularly good. The top resources for this quadrant are shown in Table 1. Getting Down to Business, What Is Business?, and Factors of Production were excluded from further analysis because they belong to the first module in the course, where a beginning-of-course effect inflates page use. This effect was limited to the first module, so we did not need to control for time in the course for other resource pages. The remaining three resources in this quadrant merit further analysis.

Table 1

Top Resources of Quadrant 1: High Grades, High Pageviews

| Page title | Grade | PV/student | Z-Score* |
| --- | --- | --- | --- |
| Reading: The Four Ps of Marketing | 0.81 | 1.64 | 3.65 |
| Reading: Sole Proprietorship and Partnerships | 0.82 | 1.56 | 3.52 |
| Reading: Fredrick Taylor's Scientific Management | 0.83 | 1.46 | 3.25 |
| Reading: Getting Down to Business | 0.73 | 1.75 | 3.11 |
| Outcome: What Is Business? | 0.73 | 1.75 | 3.1 |
| Reading: Factors of Production: Inputs and Outputs | 0.79 | 1.53 | 3.01 |

*Note. This column is the absolute value of the z-score for grade and PV/student added together.

Quadrant 2 analysis. The resources in quadrant 2 are those for which use was lower than average but performance on outcomes related to the page was high. This may indicate that the students had a high level of previous knowledge of the outcome, the assessment was too easy, or the content was easy to understand. The top pages for this quadrant are shown in Table 2. All four of these resources were located in the same course module, so it is likely that they appear in this quadrant because the outcome is too easy. Students apparently did not think it was worth reviewing the content for this outcome, and skipping it did not hurt their grades, as they still scored well on the outcome.

Table 2

Top Resources of Quadrant 2: High Grades, Low Pageviews

| Page title | Grade | PV/student | Z-Score* |
| --- | --- | --- | --- |
| Outcome: Recruiting Employees | 0.88 | 0.53 | 3.4 |
| Self-Check: Recruiting Employees | 0.88 | 0.57 | 3.24 |
| Self-Check: Employee Performance | 0.86 | 0.54 | 3.04 |
| Outcome: Employee Performance | 0.86 | 0.55 | 3.01 |

*Note. This column is the absolute value of the z-score for grade and PV/student added together.

Quadrant 3 analysis. The resources in quadrant 3 are those for which use and performance on outcomes were lower than average. This may indicate that the students were unmotivated for some reason or that the outcomes were difficult and the resources were not sufficiently helpful in supporting student understanding. The top resources in this quadrant are shown in Table 3. To understand why these resources are located within this quadrant, we looked at what module the resources came from as well as how far into the semester they were given. All of the pages in quadrant three were from the same module given about one-third of the way through the semester. In addition, the resources were from two outcomes located right next to each other in the module. Further analysis should be conducted to understand the low use and low grades on these outcomes.

Table 3

Top Resources of Quadrant 3: Low Grades, Low Pageviews

| Page title | Grade | PV/student | Z-Score* |
| --- | --- | --- | --- |
| Video: Legal Rights Under Implied Warranties | 0.53 | 0.57 | 3.6 |
| Self-Check: Warranties | 0.53 | 0.58 | 3.58 |
| Outcome: Warranties | 0.53 | 0.62 | 3.42 |
| Self-Check: Mergers and Acquisitions | 0.51 | 0.74 | 3.17 |
| Outcome: Mergers and Acquisitions | 0.51 | 0.75 | 3.14 |

*Note. This column is the absolute value of the z-score for grade and PV/student added together.

Quadrant 4 analysis. The resources in quadrant 4 are those for which use was higher than average, but performance on outcomes related to the resources was lower than average. This may indicate that the resources or their assessments were poorly aligned to the outcome, the content was not helpful in preparing for the assessments, or that the assessments were too difficult. The top resources in this quadrant are shown in Table 4. Two of these resources, External Forces and What Is International Business?, were discarded from further analysis because they were both located within the first two modules of the course, which explains the high resource use. The remaining five resources merit further analysis to understand the low grade on the outcome and the higher than average resource use.

Table 4

Top Resources of Quadrant 4: Low Grades, High Pageviews

| Page title | Grade | PV/student | Z-Score* |
| --- | --- | --- | --- |
| Reading: The Organization Chart and Reporting Structure | 0.64 | 1.89 | 4.56 |
| Reading: Organizing | 0.64 | 1.65 | 3.65 |
| Reading: Financial Ratio Analysis | 0.56 | 1.29 | 3.3 |
| Reading: Product Liability | 0.58 | 1.33 | 3.2 |
| Reading: External Forces | 0.58 | 1.34 | 3.14 |
| Reading: What Is International Business? | 0.66 | 1.55 | 3.08 |
| Reading: The Hawthorne Studies | 0.64 | 1.50 | 3.08 |

*Note. This column is the sum of the absolute values of the z-scores for grade and PV/student.

Comments on the individual quadrant analyses. From our outer quadrant analysis we were able to identify multiple resources as well as multiple time periods or modules in the course that are worth further analysis and evaluation by an instructional designer. We do not go through the resource evaluation in this paper. Instead, the RISE Framework is meant to help identify resources that should be evaluated.
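The quadrant assignment and the combined z-score reported in the quadrant tables can be sketched in a few lines of Python. This is a minimal illustration rather than the code used in the study: the function name `rise_scores`, the use of the population standard deviation, and the numbering of quadrants 1 and 2 (taken from the usual Cartesian convention, which agrees with quadrants 3 and 4 as described above) are assumptions, and the sample values are hypothetical.

```python
from statistics import mean, pstdev


def rise_scores(resources):
    """Rank resources by distance from the origin of the RISE scatterplot.

    `resources` is a list of (title, grade, pageviews_per_student) tuples.
    Returns (title, quadrant, combined_z) tuples, largest combined_z first.
    """
    grades = [grade for _, grade, _ in resources]
    usage = [pv for _, _, pv in resources]
    g_mean, g_sd = mean(grades), pstdev(grades)  # population SD is an assumption
    u_mean, u_sd = mean(usage), pstdev(usage)

    scored = []
    for title, grade, pv in resources:
        z_grade = (grade - g_mean) / g_sd
        z_use = (pv - u_mean) / u_sd
        if z_grade >= 0 and z_use >= 0:
            quadrant = 1  # high grade, high use (assumed numbering)
        elif z_grade >= 0:
            quadrant = 2  # high grade, low use (assumed numbering)
        elif z_use < 0:
            quadrant = 3  # low grade, low use
        else:
            quadrant = 4  # low grade, high use
        scored.append((title, quadrant, abs(z_grade) + abs(z_use)))
    return sorted(scored, key=lambda row: row[2], reverse=True)


# Hypothetical sample data, not taken from the case study course.
sample = [
    ("Reading: A", 0.90, 1.50),
    ("Video: B", 0.85, 0.60),
    ("Self-Check: C", 0.50, 0.55),
    ("Reading: D", 0.55, 1.80),
]
for title, quadrant, score in rise_scores(sample):
    print(f"{title}: quadrant {quadrant}, combined z = {score:.2f}")
```

Sorting by the combined score places the resources deepest within their quadrants at the top of the list, which is the ordering a designer would review first.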

In the next phase of our evaluation case study, we divided the resources according to their page type for further evaluation. In the course, there were five types of resources: reading, self-check, outcome description, video, and simulation. We calculated the mean performance and usage for each of these resource types and overlaid them on the means of the resources as a whole. By looking at performance versus page use for each of these page types, we hope to gain insights into how the type of page contributes to overall student performance. Such results may help designers identify the most effective modalities for helping students understand concepts.
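The per-type averages described above amount to a simple group-and-average step. The sketch below assumes that the page type can be read from the prefix of the page title (as in "Reading: Organizing"); the helper names `page_type` and `type_means` are hypothetical. The sample rows are taken from Tables 3 and 4, so they represent only outlying resources and do not reproduce the course-wide means.

```python
from collections import defaultdict
from statistics import mean


def page_type(title):
    """Infer the page type from a 'Type: Name' title (assumed naming convention)."""
    return title.split(":", 1)[0].strip().lower()


def type_means(resources):
    """Map each page type to (mean grade, mean pageviews per student)."""
    groups = defaultdict(list)
    for title, grade, pv in resources:
        groups[page_type(title)].append((grade, pv))
    return {
        kind: (mean([g for g, _ in rows]), mean([p for _, p in rows]))
        for kind, rows in groups.items()
    }


# A few rows from Tables 3 and 4 (outlying resources only).
rows = [
    ("Video: Legal Rights Under Implied Warranties", 0.53, 0.57),
    ("Self-Check: Warranties", 0.53, 0.58),
    ("Self-Check: Mergers and Acquisitions", 0.51, 0.74),
    ("Reading: Organizing", 0.64, 1.65),
    ("Reading: Financial Ratio Analysis", 0.56, 1.29),
]
overall = (mean([g for _, g, _ in rows]), mean([p for _, _, p in rows]))
for kind, (grade, pv) in type_means(rows).items():
    print(f"{kind}: grade {grade:.2f} vs {overall[0]:.2f}, "
          f"PV/student {pv:.2f} vs {overall[1]:.2f}")
```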

Reading pages. Reading pages primarily consist of longer sections of text that cover the course outcomes. Reading pages may also contain embedded videos. The scatterplot in Figure 5 shows that reading pages had higher use than other page types; however, despite higher use, reading pages had the same average grade as the rest of the page types.

Figure 5. Scatterplot for Reading Pages.

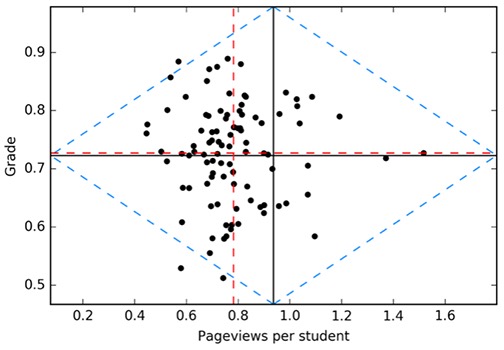

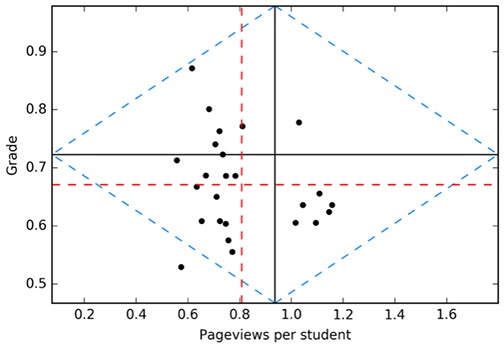

Self-check pages. In a self-check page, students take a formative assessment that covers the content of the previous section. The students are given objectively scored assessment items with immediate feedback upon submission. The students can take the assessment items as many times as they like and then move on to the next section when they feel ready. Figure 6 illustrates that use was lower for self-check pages when compared to all of the resources, while performance on outcomes was approximately the same.

Figure 6. Self-check pages.

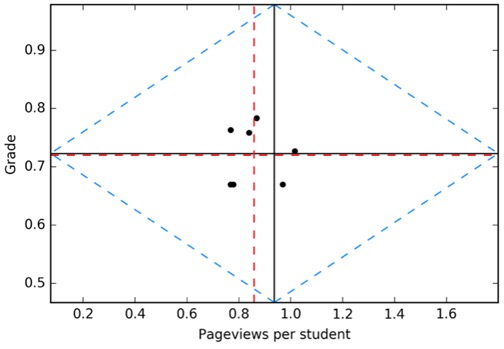

Outcome pages. Outcome pages come at the beginning of a section and describe what the student will learn to do within that section. The outcome page also includes a list of the learning activities that will take place within that section. Figure 7 shows that student grades were approximately the same for outcome pages; however, use was slightly lower when compared to the resources as a whole.

Figure 7. Outcome pages.

Video pages. Similar to reading pages, the purpose of video pages is to help students understand the outcomes covered in a section. While the video page may contain text, the primary purpose of this text is to give context to the video before the student watches it. Figure 8 shows that the use of video pages was lower than the resources as a whole and that performance on outcomes related to those pages was markedly lower. One hypothesized explanation is that videos were only created for concepts that were historically more challenging for students. Thus, we cannot determine the true effectiveness of video pages without comparing to historical use and grade data. This is an interesting question that should be examined in future research.

Figure 8. Video Pages.

Simulation pages. Simulation pages contain instructions and a link to an externally hosted simulation. These simulations allow students to learn the course outcomes in a game-like environment. The most popular simulation used in the course is the Chair the Fed game (http://www.frbsf.org/education/teacher-resources/chair-federal-reserve-economy-simulation-game). In this game, students set national monetary policy as the chair of the Federal Reserve. They can see the effect that setting the fed funds rate has on unemployment and inflation. Figure 9 shows that performance on outcomes was similar to other resources as a whole. Surprisingly, use of simulation pages was slightly lower than other pages. It could be that students felt the simulation was an optional activity and would not help them succeed in the course.

Figure 9. Simulation Pages.

In the final phase of our analysis, we examined one outlier that was removed from the original data set because of an extreme pageviews value. This resource is described in Table 5.

Table 5

The Extreme Pageview Outlier in the Case Study Course

| Page title | Grade | PV/student | Z-Score* |
| --- | --- | --- | --- |
| Reading: Stages of an Economy | 0.83 | 2.82 | 7.79 |

*Note. This column is the sum of the absolute values of the z-scores for grade and PV/student.

The extreme outlier resource, Stages of an Economy, had more pageviews than any other resource in the class. Upon further analysis in Google Analytics, we found that this page had 1,500 views from search engine traffic and only 1,626 views from direct traffic. Direct traffic comes from people clicking on a link (such as the link within the course). When the search engine traffic is removed, the pageviews per student value for this page drops to a number similar to the other resources in the course.
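The size of this correction can be estimated from the figures reported above. The calculation below is only a rough sketch: it assumes that the 2.82 pageviews per student in Table 5 was computed from the sum of the search engine and direct views and that no other traffic sources contributed, neither of which is stated explicitly. The function name `direct_only_pv` is hypothetical.

```python
def direct_only_pv(pv_per_student, search_views, direct_views):
    """Scale pageviews per student down to the share that came from direct traffic."""
    total_views = search_views + direct_views
    return pv_per_student * direct_views / total_views


# Figures from Table 5 and the Google Analytics breakdown above.
adjusted = direct_only_pv(2.82, search_views=1500, direct_views=1626)
print(round(adjusted, 2))  # about 1.47 under the stated assumptions
```

Under these assumptions the adjusted value falls within the range of the high-use reading pages listed in Table 4.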

Reading pages were used more than any other page type in the case study course. However, it was unclear whether this was because students were revisiting reading pages or because they were choosing not to visit the other pages in the course. To investigate this, we used the Unique Pageviews column from Google Analytics instead of the Pageviews column and reran our analyses. There were no meaningful differences between the analysis using Unique Pageviews and Pageviews. Because Unique Pageviews counts repeated views of a page within a session only once, this suggests that revisits do not explain the difference and that some students are instead choosing to skip Outcome and Self-Check pages in order to get to the reading pages more quickly.

This finding has some interesting design implications. For example, if students are going to skip outcome and self-check pages in order to view reading pages, and assuming outcome and self-check pages are useful, it might be worthwhile to include outcome and self-check information on the same page as the reading. Perhaps students would be more likely to answer formative self-check items or preview the outcome if these were included on the same page as the reading.

The last step in the RISE Framework is enhancement. Throughout the article we have discussed the ways in which we have attempted to automate the resource inspection and selection stages of the framework. Enhancement is the ultimate purpose of the framework; however, this stage cannot be automated. For this reason, we will discuss what the enhancement stage would look like as implemented in the framework.

Using the automated resource inspection and selection support, an instructional designer would receive a list of resources in each quadrant that differed significantly from other resources in the course. The designer then works their way through this list critically examining the associated assessments (e.g., using confirmatory factor analysis and item response theory analysis to check item loadings on outcomes and item function) and the associated resources (e.g., checking for mismatches between the type of learning outcome and the instructional strategies employed, differences in the language used to describe key concepts in the resources and assessments, issues with reading level, or examples that students may find difficult to understand). If time were not limited, faculty and designers could engage in this level of deep review of all resources used to support learning in the class; however, because time is scarce, there is a strong need for a system that can automatically surface resources most in need of improvement.

As mentioned previously, these improvements are uniquely enabled when the resources are open, as the designer has the legal ability to modify and adapt course content. Once changes are made to the content, the course can be taught again and the process of tracking continues for another semester. The designer can then compare the resource use and outcomes from the previous semester to determine if the course changes improved student use of resources and student achievement on the outcome.
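The semester-to-semester comparison described above can be as simple as differencing each resource's grade and usage values. The sketch below is illustrative only: the function name `semester_deltas`, the dictionary layout, and all values are hypothetical, and it restricts the comparison to resources that appear in both semesters.

```python
def semester_deltas(before, after):
    """Return {title: (grade change, PV/student change)} for shared resources.

    `before` and `after` map a page title to (grade, pageviews_per_student).
    """
    return {
        title: (
            after[title][0] - before[title][0],
            after[title][1] - before[title][1],
        )
        for title in before.keys() & after.keys()
    }


# Hypothetical values for one revised resource and one new resource.
previous = {"Reading: Example Page": (0.55, 1.30)}
current = {"Reading: Example Page": (0.65, 1.10), "Video: New Page": (0.70, 0.90)}
for title, (d_grade, d_pv) in semester_deltas(previous, current).items():
    print(f"{title}: grade {d_grade:+.2f}, PV/student {d_pv:+.2f}")
```

Because cohorts differ between semesters, differences of this kind indicate where to look rather than establish that a revision caused the change.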

The major limitation of our study is that we only had access to aggregate course-level data, when student-level resource use and assessment data would have been more desirable. While this limits a few of the analyses that we can conduct, there is still benefit for continuous course improvement in looking at aggregate resource use and assessment data to improve content. In this study, we successfully identified a number of resources that merit further evaluation. In future studies we will track resource use at the student level, which will allow our analyses to be much more sensitive.

To further validate this framework, more case studies should be conducted. These studies can make additions to our basic framework and apply it in new test cases (e.g., a case study with content ratings). Our goal in this paper was to provide a sample framework that was automatable and easy to use. Future research, including studies we intend to conduct, can provide a more nuanced version of the framework for supporting continuous improvement.

In addition, future research should add sophistication to our simplified construct of resource use. Rather than only looking at number of pageviews per student, distinct patterns of resource use (like skipping Outcome and Self-check pages) should be identified and examined individually in relation to performance on assessments. Additional metrics such as time spent or content ratings should also be examined to determine the benefits and drawbacks to using these metrics when compared with resource use.

Longitudinal studies investigating the effects of course content redesign are another logical step that would build on this paper. We are planning on conducting a deeper design analysis of the pages identified by our framework and then running our analysis again on a new dataset to examine the effect of our design changes on student use and grades.

Because the continuous improvement process can consume a significant amount of time and many institutions' incentive systems provide greater rewards for other activities (like writing grants or publishing articles), most faculty and instructional designers never engage in the process systematically. The framework described above provides a fully automatable method for identifying the OER that could potentially benefit from continuous improvement efforts. Both the quadrant analysis and the resource type analysis can immediately provide faculty and instructional designers with the information they need to focus in quickly on the most problematic areas of a course. This framework (and we hope to see others like it emerge) can eliminate the need for significant investments of time and data science skill in the first step of the continuous improvement process: identifying what needs improving. We hope the availability of the framework will dramatically increase continuous improvement of OER-based courses as they continue to multiply in number.

Allen, G., Guzman-Alvarez, A., Smith, A., Gamage, A., Molinaro, M., & Larsen, D. S. (2015). Evaluating the effectiveness of the open-access ChemWiki resource as a replacement for traditional general chemistry textbooks. Chemistry Education Research and Practice, 16(4), 939-948. doi: 10.1039/C5RP00084J

Bliss, T. J., Robinson, T., Hilton, J., & Wiley, D. (2013). An OER COUP: College teacher and student perceptions of open educational resources. Journal of Interactive Media in Education, 2013(1). doi: http://doi.org/10.5334/2013-04

Feldstein, A., Martin, M., Hudson, A., Warren, K., Hilton III, J., & Wiley, D. (2012). Open textbooks and increased student access and outcomes. European Journal of Open, Distance and E-learning, 15(2). Retrieved from http://eric.ed.gov/?id=EJ992490

Gil Vázquez, P., Candelas Herías, F. A., Jara Bravo, C. A., García Gómez, G. J., & Torres Medina, F. (2013). Web-based OERs in computer networks. Retrieved from http://hdl.handle.net/10045/38069

Hewlett Foundation. (n.d.). Open educational resources [Blog Post]. Retrieved from http://www.hewlett.org/programs/education/open-educational-resources

Hu, E., Li, Y., Li, J., & Huang, W. (2015). Open educational resources (OER) usage and barriers: A study from Zhejiang University, China. Educational Technology Research & Development, 63(6), 957-974. doi: 10.1007/s11423-015-9398-1

Lindshield, B. L., & Adhikari, K. (2013). Online and campus college students like using an open educational resource instead of a traditional textbook. Journal of Online Learning and Teaching, 9(1), 26. Retrieved from http://jolt.merlot.org/vol9no1/lindshield_0313.htm

Lockyer, L., Heathcote, E., & Dawson, S. (2013). Informing pedagogical action: Aligning learning analytics with learning design. American Behavioral Scientist, 57(10), 1439-1459. doi: 10.1177/0002764213479367

Lovett, M., Meyer, O., & Thille, C. (2008). The open learning initiative: Measuring the effectiveness of the OLI statistics course in accelerating student learning. Journal of Interactive Media in Education, 2008(1). doi: http://doi.org/10.5334/2008-14

Morse, R. K. (2014, November). Towards requirements for supporting course redesign with learning analytics. In Proceedings of the 42nd Annual ACM SIGUCCS Conference on User Services, 89-92. doi: 10.1145/2661172.2661199

Olufunke, A. C., & Adegun, O. A. (2014). Utilization of open educational resources (OER) and quality assurance in universities in Nigeria. European Scientific Journal, 10(7), 535-544. Retrieved from http://eujournal.org/index.php/esj/article/view/3001

Pardo, A., Ellis, R. A., & Calvo, R. A. (2015). Combining observational and experiential data to inform the redesign of learning activities. In Proceedings of the Fifth International Conference on Learning Analytics and Knowledge - LAK '15, 305-309. doi: 10.1145/2723576.2723625

Petrides, L., Jimes, C., Middleton-Detzner, C., Walling, J., & Weiss, S. (2011). Open textbook adoption and use: Implications for teachers and learners. Open Learning, 26(1), 39-49. doi: 10.1080/02680513.2011.538563

Price, J. L. (2012). Textbook bling: An evaluation of textbook quality and usability in open educational resources versus traditionally published textbooks (Master's Thesis). Retrieved from http://scholarsarchive.byu.edu/etd/3327/

Rienties, B., Toetenel, L., & Bryan, A. (2015). "Scaling up" learning design: Impact of learning design activities on LMS behavior and performance. In Proceedings of the Fifth International Conference on Learning Analytics and Knowledge - LAK '15, 315-319. doi: 10.1145/2723576.2723600

Siemens, G. (2010, July 22). 1st International Conference on Learning Analytics and Knowledge 2011 [Blog Post]. Retrieved from https://tekri.athabascau.ca/analytics/

Steif, P. S., & Dollár, A. (2009). Study of usage patterns and learning gains in a web-based interactive static course. Journal of Engineering Education, 98, 321-333. doi: 10.1002/j.2168-9830.2009.tb01030.x

Strader, R., & Thille, C. (2012). The open learning initiative: Enacting instruction online. Game Changers: Education and Information Technologies, 201-213. Retrieved from https://net.educause.edu/ir/library/pdf/pub720315.pdf

Wiley, D. (2009). Openness, disaggregation, and the future of schools. TechTrends, 53(4), 37. doi: 10.1007/s11528-009-0304-8

Wiley, D., & Green, C. (2012). Why openness in education. Game Changers: Education and Information Technologies, 81-89. Retrieved from https://library.educause.edu/resources/2012/5/chapter-6-why-openness-in-education

The RISE Framework: Using Learning Analytics to Automatically Identify Open Educational Resources for Continuous Improvement by Robert Bodily, Rob Nyland, and David Wiley is licensed under a Creative Commons Attribution 4.0 International License.