Volume 19, Number 1

Kennedy Hadullo1, Robert Oboko2, and Elijah Omwenga3

1Technical University of Mombasa, 2,3University of Nairobi

The use of learning management systems (LMSs) to support e-learning in higher education institutions has increased substantially, particularly in developing countries, with mixed results. Evidence from the literature shows that the provision of e-learning faces several quality issues relating to course design, content support, social support, administrative support, course assessment, learner characteristics, instructor characteristics, and institutional factors. Developing countries still lag behind in the e-learning revolution in higher education. Accordingly, further investigation into e-learning use in Kenya is required in order to fill this research gap and extend the existing literature by highlighting the major quality determinants in the application of e-learning for teaching and learning in developing countries. Using a case study of Jomo Kenyatta University of Agriculture and Technology (JKUAT), the study establishes the status of e-learning system quality in Kenya based on these determinants and concludes with a discussion and recommendation of the constructs and indicators required to support quality teaching and learning practices.

Keywords: e-learning, learning management system, LMS, course design, content support, social support, administrative support, learner characteristics, instructor characteristics, course assessment, institutional factors

According to the Organization for Economic Co-operation and Development (OECD), many countries are currently overseeing a massive expansion of higher education through the use of information and communication technologies (ICTs). However, improving quality is one of the most significant challenges for higher education institutions (HEIs), particularly in developing countries. This results from enrollment expansion characterized by a range of weak inputs, such as weak academic preparation of incoming students, lack of financial resources, inadequate teaching staff, poor remuneration of staff, and inadequate staff qualifications (Johanson, Richard, & Shafiq, 2011; United States Agency for International Development [USAID], 2014; Aung & Khaing, 2016).

Recent studies show that ICT integration in education through e-learning is facing numerous challenges associated with quality. For example, studies in Kenya confirmed that there are quality issues linked to inadequate ICT and e-learning infrastructure, financial constraints, expensive and inadequate Internet bandwidth, lack of operational e-learning policies, lack of technical skills in e-learning and e-content development among teaching staff, inadequate course support, lack of interest and commitment among the teaching staff, and the longer time required to develop e-learning courses (Tarus, Gichoya, & Muumbo, 2015; Makokha & Mutisya, 2016).

A related study (Chawinga, 2016) in Malawi on increasing access to university education through e-learning observed that the greatest obstacles to e-learning use were: Lack of academic support (77.6%); Delayed end of semester examination results (75.5%); Class too large (74.3%); Delayed feedback from instructors (72.6%); Failure to find relevant information for studies (67%); Poor learning materials/manuals (33.1%); and Lost assignments and grades (19.5%).

In light of all these challenges, it is clear that developing countries still lag behind in the ICT revolution in higher education. Accordingly, further investigation into e-learning use in Kenya is required in order to fill this research gap and extend the existing literature by highlighting the major quality determinants in the application of e-learning for teaching and learning. The study proposes to identify the factors that determine the quality of e-learning systems based on empirical literature, the Quality Matters Rubric Standards (QMRS), and the Criteria for Evaluating the Quality of Online Courses by Wright (QMRS, 2014; Wright, 2014). The expected result of this study is the identification of the key constructs and indicators that determine quality, and the use of these factors to establish the status of e-learning system quality at JKUAT.

Addressing quality in an e-learning system is crucial, and many of the scholars referred to above argue that it plays a role in increasing the success rate of e-learning system implementation and use. The majority of e-learning initiatives in developing countries are struggling to provide quality (Ssekakubo, Suleman, & Marsden, 2011; Tarus, Gichoya, & Muumbo, 2015; Makokha & Mutisya, 2016; Chawinga, 2016; Kashorda & Waema, 2014). This prompted the researcher to review the existing literature, obtain the quality determinants of e-learning, and use the determinants to establish the quality status of the JKUAT e-learning system based on the perceptions and views of JKUAT students, instructors, and administrators.

The following objectives were formulated for the research:

Kenya had 33 public and 17 private universities by the year 2015, according to the Commission for University Education (CUE, 2015). Most of these institutions had started offering a few courses in e-learning which were mainly Learning Management System (LMS) supported asynchronous and blended in nature (Ssekakubo et al., 2011). However, most of the universities which have adopted e-learning have not invested sufficiently in the necessary infrastructure and training in course development that can breed success (Kashorda & Waema, 2014).

Some studies have found that the main challenges affecting e-learning include, but are not limited to, inadequate ICT and e-learning infrastructure, financial constraints, lack of affordable and adequate Internet bandwidth, lack of operational e-learning policies, and lack of technical skills in e-learning and e-content development among the teaching staff (Ssekakubo et al., 2011; Tarus, Gichoya, & Muumbo, 2015; Makokha & Mutisya, 2016; Muuro et al., 2014).

In a study on the structural relationships of environments, individuals, and learning outcomes in e-learning, Lim, Park, and Kang (2016) observed that content quality and system quality were significant in terms of eliciting intrinsic and extrinsic motivation. Furthermore, academic self-efficacy and computer self-efficacy were affected by content quality and system quality, respectively. Both the Quality Matters Rubric Standards (QMRS, 2014) and the Criteria for Evaluating the Quality of Online Courses (Wright, 2014) introduced the indicators for measuring the quality of e-learning in the context of course design and development and course assessment.

These findings indicate that well-designed courses, content, and assessments, as well as adequate infrastructure, lead to quality and increase the learning motivation that is essential for successful e-learning use. Additionally, once the content has been designed and developed, it must be supported with announcements and reminders, multimedia applications such as audio and animations, learning activities that are realistic or authentic, and constructive feedback from instructors (QMRS Higher Education Rubrics, 2014; Wright, 2014; Makokha & Mutisya, 2016; Tarus, Gichoya, & Muumbo, 2015).

Other studies found that both social and administrative support enhance quality. On the social side, informational support, instrumental support, affirmation support, and emotional support were all found to be influential (Weng & Chung, 2015; Munich, 2014; Muuro et al., 2014; Queiros & de Villiers, 2016). Similarly, registration support, orientation, and a dedicated call center were given as some of the key indicators that determine success or failure (Tarus, Gichoya, & Muumbo, 2015; Makokha & Mutisya, 2016).

In related studies, Arinto (2016) and Queiros and de Villiers (2016) agreed, in principle, that three facets need to be in place in order to better prepare both the lecturer and the student for online learning: strong social presence (through timely feedback, interaction with facilitators, peer-to-peer contact, discussion forums, and collaborative activities); technological aspects (technology access, online learning self-efficacy, and computer self-efficacy); and learning tools (websites and video clips).

Other factors observed to hinder e-learning include low internet bandwidth, insufficient financial support, inadequate training programs, lack of technical support, lack of ICT infrastructure, and ambiguous policies and objectives. The key issues identified by the majority of participants were the lack of training programs and inadequate ICT infrastructure (Azawei et al., 2016).

User characteristics, for both learners and instructors, also proved to be critical in an e-learning setup. For learners, computer and internet experience, passion about e-learning, and motivation from instructors all contribute to the quality of an e-learning system; for instructors, the contributing factors are self-efficacy, training, motivation, and incentives (Baloyi, 2014; Muuro et al., 2014; Queiros & de Villiers, 2016; Azawei et al., 2016; Makokha & Mutisya, 2016; Mayoka & Kyeyune, 2012; Kisanga, 2016).

From the literature review on the status of e-learning in Kenya and other developing countries, it can be deduced that there are eight factors that influence the quality of e-learning in developing countries: course design, content support, social support, administrative support, course assessment, learner characteristics, instructor characteristics, and institutional factors. These factors are summarized in Table 1.

Table 1

Key Determinants of Quality

| No. | Constructs | Indicators | Authors |

| 1 | Course design | Course information, course structure, course layout. | QMRS Higher Education Rubrics (2014); Wright (2014); Makokha & Mutisya (2016); Tarus, Gichoya, & Muumbo (2015). |

| 2 | Content support | Announcements & reminders, use of multimedia, constructive feedback, authentic learning activities. | QMRS Higher Education Rubrics (2014); Wright (2014); Makokha & Mutisya (2016); Tarus, Gichoya, & Muumbo (2015). |

| 3 | Social support | Informational support, instrumental support, affirmation support, emotional support. | Weng & Chung (2015); Munich (2014); Muuro et al. (2014); Queiros & de Villiers (2016). |

| 4 | Administrative support | Registration support, orientation, call center. | Tarus, Gichoya, & Muumbo (2015); Makokha & Mutisya (2016). |

| 5 | Assessment | Assessment policies, assignments management, timely feedback, grades management. | Chawinga (2016); Arinto (2016); Makokha & Mutisya (2016); Wright (2014). |

| 6 | Institutional factors | Policies, funding, infrastructure, culture. | Kashorda & Waema (2014); Ssekakubo et al. (2011); Tarus, Gichoya, & Muumbo (2015); Bagarukayo & Kalema (2015); Aung & Khaing (2016). |

| 7 | Learner characteristics | Computer and internet experience, passion about e-learning, motivation from instructors, good access to university e-learning system. | Baloyi (2014); Muuro et al. (2014); Queiros & de Villiers (2016). |

| 8 | Instructor characteristics | Self-efficacy, training, motivation, incentives, experience. | Azawei et al. (2016); Makokha & Mutisya (2016); Mayoka & Kyeyune (2012); Kisanga (2016). |

Research design can be described as a general plan of what needs to be done to answer the research questions (Saunders, Lewis, & Thornhill, 2012). Research designs can be divided into three groups: descriptive, causal, and exploratory. Descriptive research is usually concerned with describing a population with respect to important variables. Causal research is used to establish cause-and-effect relationships between variables. The choice of the most appropriate design depends largely on the objectives of the research.

Descriptive research was used in this study because it can describe the factors that affect e-learning system quality. Descriptive research can be classified into cross-sectional studies (CS) and longitudinal studies (LS). CS measure units from a sample of the population at only one point in time, while LS repeatedly measure the same sample units of a population over a period of time. CS was used because it has been shown to be an effective method for capturing participants' views and perspectives in a study (Gay, Mills, & Airasian, 2009).

The study was done at JKUAT SODEL between December 2nd and December 20th, 2016. SODEL has three intakes in any given academic year: January, May, and September. Intakes admit candidates to certificate, diploma, bachelor's, and master's programmes. Although SODEL currently has an e-learning population of about 700 students in total, the study targeted a population of around 350 consisting of postgraduate students (315), instructors (34), and the e-learning director (1). The sample size was determined using Krejcie and Morgan's sample size table (Krejcie & Morgan, 1970). With an expected 95% confidence level, the table yielded a sample size of 200.
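The sample size table cited above is generated from a closed-form formula, so the lookup can be reproduced directly. The sketch below is illustrative only: the function name is hypothetical, and it assumes the table's usual defaults (a chi-square value of 3.841 for one degree of freedom at 95% confidence, a population proportion of 0.5, and a 5% margin of error). Under those assumptions, a population of 350 gives a minimum of about 183, so a sample of 200 sits above that minimum.

```python
def krejcie_morgan_sample_size(population, chi_square=3.841,
                               proportion=0.5, margin=0.05):
    """Minimum sample size for a finite population.

    Defaults are assumptions: chi-square for 1 degree of freedom at
    95% confidence, maximum-variability proportion, 5% margin of error.
    """
    spread = proportion * (1 - proportion)
    numerator = chi_square * population * spread
    denominator = margin ** 2 * (population - 1) + chi_square * spread
    return round(numerator / denominator)


if __name__ == "__main__":
    # Target population reported in the study.
    print(krejcie_morgan_sample_size(350))  # prints 183
```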

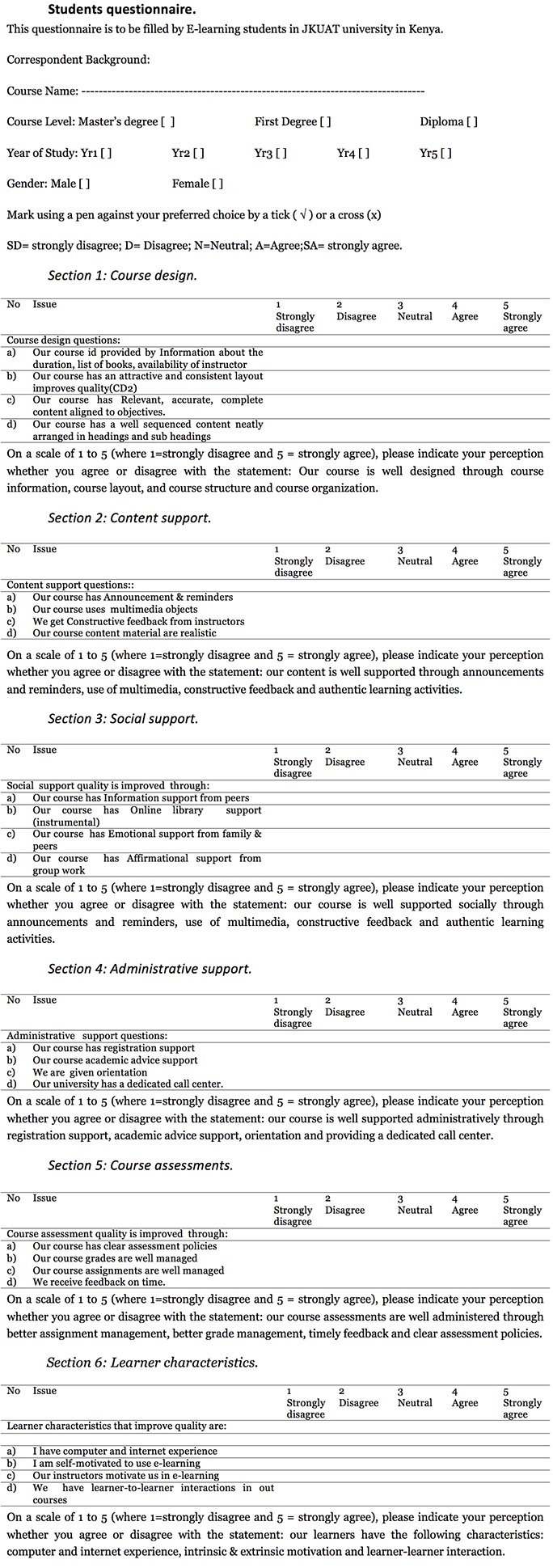

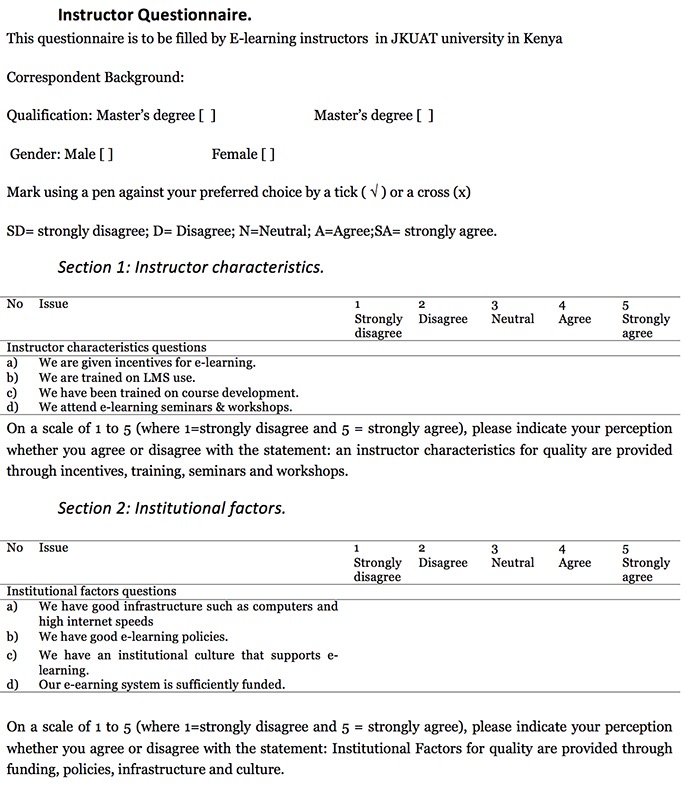

Survey methods can be broadly categorized as mail surveys, telephone surveys, and personal interviews (Neuman, 2014). The study adopted a mixed-methods survey (qualitative and quantitative), using questionnaires and interviews to gather data. The questionnaires used a 5-point Likert scale consisting of strongly disagree, disagree, neutral, agree, and strongly agree. Out of the 200 respondents, 180 were students and the remaining 20 were to be selected from instructors and administrators. The questionnaires and the interview themes used in the study are found in the appendices (Appendix A).

The questionnaires were hand-delivered to the students, instructors, and administrators during the end-of-semester examinations with the help of research assistants. The researcher collected them after a period of two weeks. All the interviews were conducted face-to-face with the help of the research assistants and were audio recorded, with each session lasting about 45 minutes.

Qualitative data was prepared through content analysis by categorizing the transcribed data according to the study objectives, constructs, and indicators. The analysis process applied both inductive and deductive reasoning to obtain correct interpretations from the data. Coding of data was done using SPSS version 23.0. The coding was based on the eight constructs and 31 indicators.

Once all the data had been coded, the instrument was assessed to check whether it exhibited adequate reliability and validity. Reliability, in the sense of internal consistency, was measured using Cronbach's alpha (α ≥ 0.7). Construct validity (CV) was assessed using factor loading (FL ≥ 0.4), Average Variance Extracted (AVE ≥ 0.5), and composite reliability (CR ≥ 0.7) (Fornell & Larcker, 1981; Hair et al., 2010).
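The three statistics named above have standard textbook definitions, and the following sketch shows how each could be computed. It is not the SPSS procedure used in the study; the function names and the scores and loadings in the usage section are hypothetical. It assumes standardized factor loadings, so that each indicator's error variance is one minus its squared loading.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items.

    item_scores holds one list per item, each with one score per
    respondent, in the same respondent order.
    """
    k = len(item_scores)
    totals = [sum(scores) for scores in zip(*item_scores)]
    item_variance_sum = sum(variance(scores) for scores in item_scores)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: squared sum of loadings over itself plus the error variances."""
    squared_sum = sum(loadings) ** 2
    error_sum = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_sum)


if __name__ == "__main__":
    # Hypothetical 5-point Likert scores: three items, five respondents.
    items = [[4, 5, 3, 4, 2], [4, 4, 3, 5, 2], [5, 5, 2, 4, 1]]
    # Hypothetical standardized loadings for one construct.
    loadings = [0.7, 0.8, 0.9]
    print("alpha >= 0.7:", cronbach_alpha(items) >= 0.7)
    print("AVE >= 0.5:", average_variance_extracted(loadings) >= 0.5)
    print("CR >= 0.7:", composite_reliability(loadings) >= 0.7)
```

A construct would pass the checks described above when every loading is at least 0.4 and all three comparisons hold.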

After preparing and assessing the data collection instruments, analysis was done using descriptive statistics, frequencies, and factor analysis in order to identify the factors that determine the quality of e-learning. The frequency results were used to rate the students', instructors', and administrators' views about the status of e-learning quality at JKUAT.

The total number of responses from questionnaires and interviews was 200. This consisted of 180 postgraduate students in Leadership and Governance, Procurement and Logistics Management, Business Administration, Project Management, Human Strategic Management, Entrepreneurship, and IT. The remaining 20 included the instructors (19) and one administrator (the e-learning director). Table 2 summarizes the distribution of the sample by programmes offered at JKUAT.

Table 2

Sample Distribution by Programme

| Programme | Enrolment | Sample size | Percent |

| MSc in Leadership and Governance | 50 | 25 | 14% |

| MSc in Procurement and Logistics Management | 45 | 22 | 12% |

| MSc in Business Administration | 60 | 30 | 17% |

| MSc in Project Management | 50 | 25 | 14% |

| MSc in Human Strategic Management | 40 | 21 | 12% |

| MSc in Entrepreneurship | 40 | 21 | 12% |

| MSc in IT | 30 | 17 | 9% |

| Instructors | 34 | 19 | 11% |

| E-learning deputy director | 1 | 1 | 1% |

| Total | 350 | 200 | 100% |

Reliability and validity. The reliability test on the 31 indicators in the questionnaire gave a Cronbach's alpha of 0.829. Since this alpha (α) value was higher than 0.7, the items in the questionnaire had good internal consistency and were therefore reliable. The individual alphas for the constructs were also greater than 0.7. In the convergent validity test, all eight constructs exceeded the recommended thresholds of >0.4 for Factor Loading (FL), >0.5 for Average Variance Extracted (AVE), and >0.7 for Composite Reliability (CR). All the constructs and indicators therefore have convergent validity. The measurements are summarized in Table 3.

Table 3

Reliability and Convergent Validity

| Constructs | Indicators | Factor loading | Cronbach's alpha | AVE | CR | Convergent validity |

| Course development | CD1 | 0.790 | 0.856 | 0.728 | 0.818 | OK |

| | CD2 | 0.831 | | | | |

| | CD3 | 0.529 | | | | |

| | CD4 | 0.66 | | | | |

| Content support | CS1 | 0.725 | 0.822 | 0.719 | 0.831 | OK |

| | CS2 | 0.706 | | | | |

| | CS3 | 0.672 | | | | |

| | CS4 | 0.701 | | | | |

| Social support | SS1 | 0.551 | 0.848 | 0.734 | 0.899 | OK |

| | SS2 | 0.665 | | | | |

| | SS3 | 0.833 | | | | |

| | SS4 | 0.765 | | | | |

| Administrative support | AS1 | 0.988 | 0.812 | 0.837 | 0.910 | OK |

| | AS2 | 0.7071 | | | | |

| | AS3 | 0.922 | | | | |

| | AS4 | 0.606 | | | | |

| Institutional factors | IF1 | 0.592 | 0.783 | 0.885 | 0.889 | OK |

| | IF2 | 0.657 | | | | |

| | IF3 | 0.824 | | | | |

| | IF4 | 0.799 | | | | |

| Course assessment | CA1 | 0.876 | 0.775 | 0.762 | 0.786 | OK |

| | CA2 | 0.833 | | | | |

| | CA3 | 0.723 | | | | |

| Learner characteristics | LC1 | 0.742 | 0.804 | 0.778 | 0.766 | OK |

| | LC2 | 0.702 | | | | |

| | LC3 | 0.815 | | | | |

| | LC4 | 0.648 | | | | |

| Instructor characteristics | IC1 | 0.692 | 0.811 | 0.823 | 0.843 | OK |

| | IC2 | 0.844 | | | | |

| | IC3 | 0.763 | | | | |

| | IC4 | 0.715 | | | | |

The status of e-learning system quality as expressed by the respondents at JKUAT was obtained through frequencies from descriptive statistics based on the quality constructs and indicators. These results are shown in Tables 4-11.

Course design.

Table 4

Course Design Factors That Determine E-learning Quality

| Course design | Strongly disagree N (%) | Disagree N (%) | Undecided N (%) | Agree N (%) | Strongly agree N (%) |

| Course information | 5(3%) | 20(12%) | 15(9%) | 92(58%) | 29(18%) |

| Course structure | 9(6%) | 88(54%) | 14(9%) | 18(11%) | 32(20%) |

| Course layout | 25(16%) | 30(17%) | 20(12%) | 77(48%) | 9(6%) |

| Course organization | 32(20%) | 58(36%) | 23(14%) | 28(17%) | 18(11%) |

Number of respondents: (N = 180)

The results in Table 4 show that over 54% of the students were happy with the course information provided and the course layout of the LMS. However, 60% did not like the course structure, while 56% did not like the course organization.

One student commented: "(a)lthough our content has no issues with spelling, grammar and accuracy, they rarely include more relevant examples to help us understand the subject. We always have to look for more materials to helps us understand better."
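The headline percentages quoted for Tables 4-11 combine the two agreement categories and the two disagreement categories of the five-point scale. A minimal sketch of that aggregation follows, using the course information row of Table 4. The function name is hypothetical, and dividing by the row total (the five counts in this row sum to 161) rather than by a fixed N is an assumption made for the example.

```python
def collapse_likert(counts):
    """Collapse five Likert counts into three percentage shares.

    counts is ordered as (strongly disagree, disagree, undecided,
    agree, strongly agree); shares are taken over the row total.
    """
    strongly_disagree, disagree, undecided, agree, strongly_agree = counts
    total = sum(counts)
    return {
        "disagree": round(100 * (strongly_disagree + disagree) / total, 1),
        "undecided": round(100 * undecided / total, 1),
        "agree": round(100 * (agree + strongly_agree) / total, 1),
    }


if __name__ == "__main__":
    course_information = (5, 20, 15, 92, 29)  # counts from Table 4
    print(collapse_likert(course_information))
    # {'disagree': 15.5, 'undecided': 9.3, 'agree': 75.2}
```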

Content support.

Table 5

Content Support Factors That Determine E-learning Quality

| Content support | Strongly disagree N (%) | Disagree N (%) | Undecided N (%) | Agree N (%) | Strongly agree N (%) |

| Announcements provided | 18(11%) | 26(16%) | 14(9%) | 61(38%) | 42(26%) |

| Reminders provided | 11(7%) | 81(25%) | 26(16%) | 56(35%) | 27(17%) |

| Multimedia has been used | 40(21%) | 51(32%) | 19(12%) | 37(23%) | 14(9%) |

| There is constructive feedback | 37(23%) | 53(33%) | 23(14%) | 31(19%) | 17(11%) |

Number of respondents: (N = 180)

The results in Table 5 show that over 50% were happy about the provision of announcements and reminders through emails on their courses. However, over 53% complained about lack of constructive feedback and inadequate use of multimedia. One respondent commented that "(t)he notes that our lectures upload are merely pdfs with without an inclusion of audio, video or animations. We normally download these pdfs and read them offline. Our lectures rarely pick our phones or reply our emails."

Social support.

Table 6

Social Support Factors That Determine E-learning Quality

| Social support | Strongly disagree N (%) | Disagree N (%) | Undecided N (%) | Agree N (%) | Strongly agree N (%) |

| Information support from peers | 39(24%) | 53(33%) | 13(8%) | 27(17%) | 29(18%) |

| Online library support (instrumental) | 35(22%) | 23(14%) | 26(16%) | 45(28%) | 32(20%) |

| Emotional support from family & peers | 47(29%) | 52(32%) | 22(14%) | 26(16%) | 14(9%) |

| Affirmational support by working in groups | 34(21%) | 60(37%) | 18(11%) | 36(22%) | 13(8%) |

Number of respondents: (N = 180)

The results in Table 6 show that only instrumental or library support scored over 45%, implying that the students relied heavily on the online library for social support. The rest scored below 40%: information support (35%), emotional support (25%), and affirmation support (30%). Most of the students stressed that it was difficult to interact socially, as both the LMS course forum and chat were rarely used by the students or the instructors. One student commented, “we have just formed a What’s app group this week when we came for our semester examinations. Most of us are meeting for the first time. We hope for better interaction next semester through What’s app group.”

Administrative support.

Table 7

Administrative Support Components That Determine E-learning Quality

| Administrative support | Strongly disagree N (%) | Disagree N (%) | Undecided N (%) | Agree N (%) | Strongly agree N (%) |

| Course registration | 9(5%) | 32(20%) | 21(13%) | 70(44%) | 29(18%) |

| Academic advice | 18(11%) | 27(17%) | 23(14%) | 60(37%) | 33(21%) |

| Campus orientation | 11(7%) | 26(16%) | 29(18%) | 55(34%) | 40(25%) |

| Phone call support | 38(24%) | 50(31%) | 24(15%) | 31(19%) | 18(11%) |

Number of respondents: (N = 180)

The results on Table 7 show that close to 60% of the students commended the support they got during on-campus orientation, course registration, and academic advice they received when joining the course. However, 56% complained about the difficulties experienced when trying to make phone calls to the e-learning department at JKUAT. This was evident from the following response from a respondent:

(I)magine I had to travel all the way from Busia Town to Nairobi City (a distance of 358km) to come and confirm my fee balance after I was told I could not sit for examinations yet I had cleared all my fees. I was told to come personally as I could not be assisted through phone calls.

Course assessment.

Table 8

Course Assessment Components That Determine E-learning Quality

| Course assessment | Strongly disagree N (%) | Disagree N (%) | Undecided N (%) | Agree N (%) | Strongly agree N (%) |

| Lost grades | 21(13%) | 27(17%) | 26(16%) | 49(31%) | 37(23%) |

| Assignment management | 15(9%) | 32(20%) | 24(15%) | 58(36%) | 32(20%) |

| Assessment & feedback | 22(14%) | 40(25%) | 23(14%) | 42(26%) | 34(21%) |

| Assessments & content | 42(26%) | 20(12%) | 17(11%) | 50(31%) | 32(20%) |

Number of respondents: (N = 180)

The results in Table 8 show that 51% of the students agree that the content taught is enough to undertake assessments, while 47% report having no problems with the lack of feedback on assessments such as CATs and assignments. Only 30% of the students supported claims that grade loss or misplacement was a problem at JKUAT, while 56% were satisfied with assignment management.

Learner characteristics.

Table 9

Learner Characteristics Components That Determine e-Learning Quality

| Learner characteristics | Strongly disagree N (%) | Disagree N (%) | Undecided N (%) | Agree N (%) | Strongly agree N (%) |

| I enjoy using e-learning | 29(18%) | 47(29%) | 19(12%) | 48(30%) | 18(11%) |

| Instructors motivate us | 34(21%) | 44(27%) | 13(8%) | 52(32%) | 18(11%) |

| I have internet & computer experience | 26(16%) | 34(21%) | 17(11%) | 45(28%) | 39(24%) |

| We have been trained on E-learning | 37(23%) | 50(31%) | 21(13%) | 40(25%) | 13(9%) |

Number of respondents: (N = 180)

The results in Table 9 show that 41% of the students enjoy e-learning while 47% do not. The majority (52%) also reported having useful internet and computer experience, while over 50% lamented the lack of LMS training as well as the lack of motivation from instructors.

Instructor characteristics.

Table 10

Instructor Characteristics That Determine E-learning Quality

| Instructor characteristics | Strongly disagree N (%) | Disagree N (%) | Undecided N (%) | Agree N (%) | Strongly agree N (%) |

| We are trained on LMS | 4(20%) | 7(31%) | 3(19%) | 3(15%) | 3(15%) |

| We are trained in course development | 5(25%) | 6(30%) | 2(10%) | 5(25%) | 3(10%) |

| We are given incentives | 7(35%) | 8(40%) | - | 3(15%) | 2(10%) |

| We attend workshops/seminars | 4(20%) | 6(30%) | 3(15%) | 4(20%) | 3(15%) |

Number of respondents: (N = 20)

The results in Table 10 show that over 50% of the instructors are not satisfied with training on the LMS and on course development. Over 50% were also dissatisfied with provisions for attending workshops or seminars on e-learning, as well as with incentives at work. One instructor made this comment:

If the university can include e-learning course development as part of the workload that is considered for payment by the university then we would all be willing to sacrifice out time for it. Otherwise nobody wants to work for free.

Institutional factors.

Table 11

Institutional Factors That Determine E-Learning Quality

| Institutional factors | Strongly disagree N (%) | Disagree N (%) | Undecided N (%) | Agree N (%) | Strongly agree N (%) |

| Funding | 5(25%) | 6(30%) | 1(5%) | 5(26%) | 3(13%) |

| Infrastructure | 4(20%) | 5(25%) | 3(15%) | 4(20%) | 4(20%) |

| Culture | 6(30%) | 7(35%) | 1(5%) | 4(20%) | 2(10%) |

| Policies | 5(25%) | 5(25%) | - | 5(25%) | 6(25%) |

Number of respondents: (N = 20)

The results in Table 11 show that 55% of the respondents state that the university lacks the funding, infrastructure, and policies to manage e-learning. Another 65% add that the culture of the university does not support e-learning.

This study set out to identify the factors that determine the quality of e-learning systems in developing countries and to use those factors to determine the status of e-learning system quality at JKUAT using empirical data. From the literature review, it was established that there are eight factors that determine the quality of e-learning systems: course design, content support, social support, administrative support, course assessment, learner characteristics, instructor characteristics, and institutional factors. The status of e-learning system quality at JKUAT was determined based on these factors, with the following findings.

The findings revealed that JKUAT e-learning courses had a good layout and adequate course information. This conforms to the findings of Wright (2014), who established that an institution providing e-learning must provide a good LMS interface and adequate course information. However, the students were not satisfied with the structure and organization of the courses. The students also reported inadequate content and a lack of relevant examples, which forced them to always search for alternative materials. Lim, Park, and Kang (2016) point out that rich and relevant content should always be incorporated in e-learning courses so as to boost academic self-efficacy.

The students gave a good report concerning course announcements and reminders on their courses. This conforms to Wright's (2014) guidelines on content support, which state that reminders and announcements help online students keep up to date with course issues. However, the students lamented the inadequate use of multimedia and the infrequent feedback received from instructors. Multimedia is needed in e-learning courses because it improves learning by keeping the learners engaged and motivated (Muuro et al., 2014). Furthermore, there is a need to improve content support through timely feedback and interaction with facilitators via emails, discussion forums, and collaborative activities, which are key to learner support (Queiros & de Villiers, 2016).

According to this study, the only source of social support for JKUAT e-learning students is the online library. Information support, affirmational support, and emotional support were reportedly not effective, as the students stated that both the LMS forum and chat were not active. Social support, which is commonly categorized into four types (informational, instrumental, affirmational, and emotional support), is an important motivator that affects online students (Munich, 2014). This support comes primarily from sources such as peers, forums, chat, and e-learning group work (Weng & Chung, 2015; Queiros & de Villiers, 2016). It is therefore imperative that JKUAT embraces LMS chats and forums in its courses in order to boost social support.

The results of this study show that the level of administrative support, namely on-campus orientation, course registration, and academic advice, is satisfactory. The only problem the students complained about concerned communication by telephone. They asserted that communication through telephone calls was a challenge, as most calls went unanswered. This made it very difficult to get information regarding the course, such as examination information or fee issues. This calls for a dedicated call center for addressing students' matters, as recommended by Makokha and Mutisya (2016).

The results revealed that most students agreed that the content taught was enough to undertake assessments. However, nearly half of the students felt that they deserved to get assignment and CAT papers back, while the rest thought it did not matter as long as they passed the assessment. A small minority also complained about lost CAT grades and exam grades, which forced them to re-submit some papers. There were also some concerns about delayed examination results and the excessive number of assignments, although these came from a smaller group of students. Regarding examinations, JKUAT needs to conform to the findings of Chawinga (2016), who observed that universities should safeguard students' grades and also release end-of-semester examination results on time to avoid inconveniencing the learners.

The results show that nearly half of the students have a passion for e-learning and also possess useful internet and computer experience. However, close to half of the students lamented the lack of LMS training as well as the lack of motivation from instructors. Jung (2017) observed that learner motivation (intrinsic and extrinsic) is crucial to the learners' success in an online coursework environment. JKUAT needs to provide training, as imparting e-learning skills through training is necessary in order to improve quality (Arinto, 2016; Azawei et al., 2016).

The results show that instructors are not satisfied with training on LMS use and course development. They also expressed concerns about low motivation from the university and the lack of, or limited access to, e-learning seminars and workshops where they can learn more about e-learning. JKUAT needs to provide training, motivation, and incentives in order to enhance instructor participation in e-learning (Makokha & Mutisya, 2016; Mayoka & Kyeyune, 2012; Kisanga, 2016).

The study further reveals that lack of funding has handicapped infrastructure implementation, such as equipping labs with computers and maintaining the network that hosts the LMS. Poor network connectivity and Internet bandwidth have also hampered quality use. Tarus, Gichoya, and Muumbo (2015) observed that these technological components play a critical role in making e-learning accessible to users and should be adequate. There are also reports of the lack of a policy for developing, using, and securing e-learning, inadequate training in LMS use and course development, and low motivation among instructors and administrators. These results are consistent with Kashorda and Waema's (2014) and Bagarukayo and Kalema's (2015) studies, which advocated for funding, policy, and infrastructure as key pillars for e-learning success.

The present study aimed to shed light on the major challenges that hinder quality application of e-learning in developing countries. A case was chosen from Kenya because e-learning has recently been implemented in nearly all public universities. The findings confirmed that eight quality issues influence e-learning in Kenya and in other developing countries: course design, content support, social support, administrative support, course assessment, learner characteristics, instructor characteristics, and institutional factors.

With the competitive expansion of e-learning in developing countries, HEIs that provide e-learning must improve the quality of their e-learning systems based on these factors in order to achieve successful adoption, implementation, and use of e-learning systems. The present study may contribute to a better understanding of e-learning systems in Kenya and other developing countries by offering criteria for enhancing quality, and may serve as a useful benchmark for e-learning providers and policy makers.

In addition, it can be used to identify weak areas in e-learning system operations from the users' point of view and to suggest effective strategies for improving quality. The authors also believe that the context of Kenya is typical of many situations facing HEIs in developing countries, and that the results can therefore be applied to other developing countries.

Al-Azawei, A., Parslow, P., & Lundqvist, K. (2016). Barriers and opportunities of e-learning implementation in Iraq: A case of public universities. The International Review of Research in Open and Distributed Learning, 17(5). doi: 10.19173/irrodl.v17i5.2501

Arinto, P. B. (2016). Issues and challenges in open and distance e-learning: Perspectives from the Philippines. The International Review of Research in Open and Distributed Learning, 17(2). doi: 10.19173/irrodl.v17i2.1913

Aung, T. N., & Khaing, S. S. (2016). Challenges of implementing e-learning in developing countries: A review. In T. Zin, J. W. Lin, J. S. Pan, P. Tin, & M. Yokota (Eds.), Genetic and evolutionary computing (pp. 405-411). Cham: Springer.

Baloyi, G. (2014). Learner support in open distance and e-learning for adult students using technologies. Journal of Communication, 5(2), 127-133. doi: 10.1080/0976691x.2014.11884832

Bagarukayo, E., & Kalema, B. (2015). Evaluation of e-learning usage in South African universities: A critical review. The International Journal of Education and Development using Information and Communication Technology, 11(2), 168-183.

Chawinga, W. D. (2016). Increasing access to higher education through open and distance learning: Empirical findings from Mzuzu University, Malawi. The International Review of Research in Open and Distributed Learning, 17(4).

Commission for University Education. (2015). Accredited universities in Kenya. Retrieved from http://www.cue.or.ke/images/phocadownload/Accredited_Universities_Kenya_Nov2015.pdf

DeLone, W. H., & McLean, E. R. (2003). The DeLone and McLean model of information systems success: A ten-year update. Journal of Management Information Systems, 19(4), 9-30. doi: 10.1080/07421222.2003.11045748

Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39-50.

Gay, L. R., Mills, G. E., & Airasian, P. (2009). Educational research: Competencies for analysis and application. Upper Saddle River, NJ: Pearson.

Hair, J. F. J., Black, W. C., Babin, B. J., Anderson, R. E., & Tatham, R. L. (2010). Multivariate data analysis. Englewood Cliffs, NJ: Prentice Hall.

Johanson, Richard & Shafiq, M. (2011). Tertiary education in developing countries: Issues and challenges. Millennium Challenge Corporation (MCC) Education, Health and Community Development Group. Draft - January 2011.

Jung, N. (2017). Korean learning motivation and demotivation of university students in Singapore. Foreign Languages Education, 24(3), 237-260. doi: 10.15334/fle.2017.24.3.237

Kashorda, M., & Waema, T. (2014). E-Readiness survey of Kenyan Universities (2013) report. Nairobi: Kenya Education Network.

Kisanga, D. (2016). Determinants of teachers' attitudes towards e-learning in Tanzanian higher learning institutions. The International Review of Research in Open and Distributed Learning, 17(5). doi: 10.19173/irrodl.v17i5.2720

Krejcie, R. V., & Morgan, D. W. (1970). Determining sample size for research activities. Educational and Psychological Measurement, 30(3). Retrieved from http://www.fns.usda.gov/fdd/processing/info/SalesVerificationTable.doc

Lim, K., Park, S., & Kang, M. (2016). Structural relationships of environments, individuals, and learning outcomes in Korean online university settings. The International Review of Research in Open and Distributed Learning, 17(4). doi: 10.19173/irrodl.v17i4.2500

Makokha, G., & Mutisya, D. (2016). Status of e-learning in public universities in Kenya. The International Review of Research in Open and Distributed Learning, 17(3). doi: 10.19173/irrodl.v17i3.2235

Mayoka, K., & Kyeyune, R. (2012). An analysis of eLearning Information System adoption in Ugandan Universities: Case of Makerere University Business School. Information Technology Research Journal, 2(1), 1-7.

Mtebe, J., & Raisamo, R. (2014). A model for assessing learning management system success in higher education in sub-Saharan countries. The Electronic Journal of Information Systems in Developing Countries, 61(1), 1-17. doi: 10.1002/j.1681-4835.2014.tb00436.x

Munich, K. (2014). Social support for online learning: Perspectives of nursing students. International Journal of E-Learning & Distance Education, 29(2), 1-12. Retrieved from http://ijede.ca/index.php/jde/article/view/891/1565

Muuro, M., Wagacha, W., Kihoro, J., & Oboko, R. (2014). Students' perceived challenges in an online collaborative learning environment: A case of higher learning institutions in Nairobi, Kenya. The International Review of Research in Open and Distributed Learning, 15(6). doi: 10.19173/irrodl.v15i6.1768

Neuman, W. L. (2014). Social research methods: Qualitative and quantitative approaches. Essex: Pearson.

Quality Matters Rubric Standards. (2014). Non-annotated Standards from the QM Higher Education Rubric (5th ed.). Retrieved from https://www.qualitymatters.org/qa-resources/rubric-standards/higher-ed-rubric

Queiros, D., & De Villiers, M. (2016). Online learning in a South African higher education institution: Determining the right connections for the student. The International Review of Research in Open and Distributed Learning, 17(5). doi: 10.19173/irrodl.v17i5.2552

Raspopovic, M., Jankulovic, A., Runic, J., & Lucic, V. (2014). Success factors for e-learning in a developing country: A case study of Serbia. The International Review of Research in Open and Distributed Learning, 15(3). doi: 10.19173/irrodl.v15i3.1586

Saunders, M., Lewis, P., & Thornhill, A. (2012). Research methods for business students (6th ed.). Pearson Education Limited.

Ssekakubo, G., Suleman, H., & Marsden, G. (2011). Issues of adoption: Have e-learning management systems fulfilled their potential in developing countries? In SAICSIT '11: Proceedings of the South African Institute of Computer Scientists and Information Technologists Conference on Knowledge, Innovation and Leadership in a Diverse, Multidisciplinary Environment (pp. 231-238). doi: 10.1145/2072221.2072248

Tarus, J., Gichoya, D., & Muumbo, A. (2015). Challenges of implementing e-learning in Kenya: A case of Kenyan public universities. The International Review of Research in Open and Distributed Learning, 16(1). doi: 10.19173/irrodl.v16i1.1816

United States Agency for International Development. (2014). African higher education: Opportunities for transformative change for sustainable development. Retrieved from http://www.aplu.org/library/african-higher-education-opportunities-for-transformative-change-for-sustainable-development/file

Wright, C. R. (2014). Criteria for evaluating the quality of online courses. Retrieved from https://elearning.typepad.com/thelearnedman/ID/evaluatingcourses.pdf

Status of e-learning Quality in Kenya: Case of Jomo Kenyatta University of Agriculture and Technology Postgraduate Students by Kennedy Hadullo, Robert Oboko, and Elijah Omwenga is licensed under a Creative Commons Attribution 4.0 International License.