Volume 20, Number 1

Firas Moosvi, Stefan A. Reinsberg, and Georg W. Rieger

Department of Physics & Astronomy, University of British Columbia, Canada

In this article, we examine whether an inquiry-based, hands-on physics lab can be delivered effectively as a distance lab. In science and engineering, hands-on distance labs are rare and open-ended project labs in physics have not been reported in the literature. Our introductory physics lab at a large Canadian research university features hands-on experiments that can be performed at home with common materials and online support, as well as a capstone project that serves as the main assessment of the lab. After transitioning the lab from face-to-face instruction to a distance format, we compared the capstone project scores of the two lab formats by conducting an analysis of variance, which showed no significant differences in the overall scores. However, our study revealed two areas that need improvements in instruction, namely data analysis and formulating a clear goal or research question. Focus group interviews showed that students in the distance lab did not perceive the capstone project as authentic science and that they would have preferred a campus lab format. Overall, our results suggest that the distance project lab discussed here might be an acceptable substitute for a campus lab and might also be suitable for other distance courses in science.

Keywords: distance education, distance labs, introductory physics, smartphone physics, hands-on experiments, capstone project, project labs

There is broad consensus among educators that laboratory experience is an important part of science education (National Science Teachers Association, 2019; American Association of Physics Teachers, 1998); therefore, it stands to reason that distance education programs need to offer science labs that are suitable for distance learners. However, experiments performed in traditional teaching labs are not easy to transfer to the online environment because they often use scientific instruments and other specialized equipment. One obvious solution to this problem is to hold the laboratory portion of an online course on campus, either as weekly or bi-weekly labs or as an intense one-week experience (also known as a power lab), in which students perform all of the labs in a course (Cancilla & Albon, 2008; Lyall & Patti, 2010). However, weekly labs on campus are only practical for students living nearby, and this blended format is typically offered as an alternative to on-campus courses for students looking for more flexibility in their schedule. Power labs are more suitable for distance courses with a significant fraction of students living far from campus, but they may be impractical for many distance students due to travel and lodging costs as well as scheduling (Lyall & Patti, 2010; Brewer, Cinel, Harrison, & Mohr, 2013).

For over two decades now, course designers and researchers have implemented lab formats that distance students can complete at home (Ma & Nickerson, 2006; Brinson, 2015). Labs based on hands-on experiments at home, virtual lab experiments (Pyatt & Sims, 2012; Waldrop, 2013; Rowe, Koban, Davidoff, & Thompson, 2017), computer simulations, video-based experiments (Waldrop, 2013), and remote-controlled experiments (Kennepohl, 2009) have been part of online science courses for some time now, and there is growing evidence that students learn at least as much in these formats as in traditional hands-on, face-to-face teaching labs (Brinson, 2015). Nevertheless, there is still a need for more research that describes and evaluates different formats of distance labs (Kennepohl, 2009).

According to Brinson (2015), there were only 56 comparison studies published from 2005 to 2014 that reported learning outcomes of distance labs and/or compared distance labs to face-to-face teaching labs. The vast majority of the reviewed studies compared quiz or exam grades, while surprisingly few based their comparisons on lab reports or practical lab exams. Moreover, some of the comparison studies had a limited number of participants (Casanova, Civelli, Kimbrough, Heath, & Reeves, 2006, chemistry; Lyall & Patti, 2010, chemistry) and/or examined non-traditional student populations that differed from the student populations that typically access on-campus (face-to-face) labs (e.g., Reuter, 2009, soil science; Rowe et al., 2017, chemistry). Most of the physics examples in Brinson's review were based on remote experiments or virtual labs, and not on hands-on distance labs, so they are not relevant to our concerns. We use the term remote experiment for an experiment that students conduct with equipment that is at a different location and accessible only via the internet, whereas a hands-on distance lab is a distance education lab in which the students perform hands-on experiments at home. A virtual lab refers to a computer simulation of an experiment.

As Brinson (2015) did not include hands-on distance labs in his review (to avoid confusion with traditional hands-on face-to-face labs), we will briefly discuss nine relevant studies of such labs. Most of the hands-on distance labs we found in our search of the literature relied on lab kits that students were required to purchase or borrow from their institutions. Examples of physics labs or labs in courses with significant physics content are described by Abbott (1998), Turner and Parisi (2008), Al-Shamali and Connors (2010), and Mawn, Carrico, Charuk, Stote, and Lawrence (2011). In other sciences, examples of distance labs with lab kits are presented by Reuter (2009) for soil science, Lyall and Patti (2010), Brewer et al. (2013), and Rowe et al. (2017) for chemistry, and Hallyburton and Lundsford (2013) for biology. Lab kits have the advantage of being well tested and safe, which is a major concern in chemistry (Lyall & Patti, 2010; Brewer et al., 2013). However, they add logistical issues and cost (Al-Shamali & Connors, 2010; Turner & Parisi, 2008).

Hands-on distance labs are usually chosen to provide students with an authentic lab or real-world experience (Cancilla & Albon, 2008; Lyall & Patti, 2010; Brewer et al., 2013). In cases when students can take a course either face-to-face on campus or as a distance course, the distance lab must provide an experience similar to that of a hands-on lab on campus (Lyall & Patti, 2010). In addition, Brewer et al. (2013) list acceptance of their distance courses for transfer credit by other institutions as one of their main considerations. However, resistance to non-traditional labs is still prevalent. For example, Brinson (2015) reported that in the United States the National Science Teachers Association and the American Chemical Society explicitly discouraged replacing traditional hands-on, face-to-face labs with non-traditional labs.

Traditional face-to-face labs are often seen as an opportunity for students to practice inquiry skills and scientific process skills (NSTA, 2019), which may be another reason for choosing a hands-on format. However, in reality, this is rarely the focus of the lab (see discussion by Holmes & Wieman, 2018). For virtual labs, Brinson (2015) reports only four published examples that measure inquiry. Three of these studies (Klahr, Triona, & Williams, 2007; Morgil, Gungor-Seyhan, Ural-Alsan, & Temel, 2008; Tatli & Ayas, 2012) reported learning outcomes for virtual labs that were equal to or better than those for corresponding hands-on labs. The fourth study (Lang, 2012) found that students did not perceive that the research design skills learning outcome was achieved in either lab format. For hands-on distance labs, Mawn et al. (2011) have demonstrated that students can engage meaningfully in inquiry tasks and develop scientific process skills.

Our hands-on lab did not use a lab kit; rather, it was similar to the "kitchen chemistry" approach (Casanova et al., 2006; Lyall & Patti, 2010) in its use of common household items. The kitchen chemistry approach is scalable to large enrolment courses and adds (almost) no cost for students or the institution. We mainly chose this approach because it supported our main pedagogical goal: enabling students to design, execute, and analyze an experimental project on their own. The decision to use simple equipment is informed by our previous experience with lab formats in which microcomputers, sensors, and other relatively sophisticated equipment were used to perform fairly precise measurements that confirm known constants or theories. We found that our students spent too much time learning how to use the equipment and troubleshooting it. They were often focused on measuring "precise" or "correct" data, but had difficulties in interpreting their data and relating it to the theories behind the experiments. Our new lab did not aim to measure known quantities; instead, it focused entirely on experimental design and data skills. Deciding how to conduct an experiment is an important part of experimental research, so we avoided predefined experimental procedures as much as possible. Our goals and design choices were influenced by the work of Reif and St. John (1979), who emphasized thinking skills and communications skills in their physics lab. Their lab design included flexible lab times and the possibility to do experiments at home to combat time constraints. Our lab also featured a capstone project in which students designed and performed an experiment to answer a research question of their choice, because we wanted our students to engage in authentic scientific tasks and see themselves as scientists.

Our approach aligned with the focus areas identified by the American Association of Physics Teachers for the undergraduate physics lab curriculum: constructing knowledge, modeling, designing experiments, developing technical and practical laboratory skills, analyzing and visualizing data, and communicating physics (American Association of Physics Teachers, 2014). Ideally, labs should feature authentic cognitive tasks and activities, similar to those an expert in the field would engage in (Wieman, 2015). Our lab emphasized three of the focus areas: designing experiments, analyzing and visualizing data, and communicating physics. Our choice was influenced by the work of Etkina, Murthy, and Zou (2006), who critiqued many physics labs for failing to give students a chance to formulate their own research questions or design their own experiments. There were, however, drawbacks to our approach and potential pitfalls, such as time spent on finding equipment and lack of close supervision, as we elaborate in our discussion.

The aim of our study was to determine whether students learn as much in an inquiry-based hands-on distance lab as in a similar face-to-face lab on campus. While our study focused on a physics lab, we believe that our lab format could be relevant to distance education or blended learning in other STEM fields, particularly in engineering. In the next sections, we describe the campus lab and distance lab formats, present a comparison of student performance in each, and conclude with a discussion of student perceptions of the distance lab.

The lab was part of an introductory algebra-based physics course at a large Canadian research university. It was delivered in a face-to-face format on campus until 2014 and included some experimental tasks at home, as well as the capstone project that was also performed at home (Rieger, Sitwell, Carolan, & Roll, 2014). This format was switched to a distance format in 2015 and all lab activities were completed at home, while the lectures and tutorials (recitals) of this blended course took place on campus, as before. The campus lab and the distance lab formats had an identical lab design and the same activities. For the first eight weeks of the term, students underwent a skill-building phase in which they built up their "scientific toolbox": planning and performing experiments, analyzing and graphing data, as well as estimating uncertainty and using basic statistics (average, mean, standard deviation, standard error). Since there was no extra credit associated with the lab, these weekly tasks were designed to take less than two hours to finish. Most experiments during the skill-building phase used simple equipment to reduce cognitive load and help students focus on understanding and analyzing experimental data, but a few experiments used automatic data acquisition. The objective was to demonstrate that even experiments performed with precise instruments and computers introduce uncertainty. For data analysis and graphing, students used their own spreadsheet programs (Excel, OpenOffice, Numbers).
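The basic statistics named above (mean, standard deviation, standard error) can be illustrated with a short calculation. The following is a minimal sketch in Python rather than in the spreadsheet software the students actually used; the function name `summarize` and the pendulum timings are hypothetical and are not data from the course.

```python
import math
import statistics


def summarize(measurements):
    """Return (mean, sample standard deviation, standard error of the mean)."""
    n = len(measurements)
    if n < 2:
        raise ValueError("need at least two measurements")
    mean = statistics.mean(measurements)
    sd = statistics.stdev(measurements)  # sample SD, n - 1 in the denominator
    se = sd / math.sqrt(n)               # standard error of the mean
    return mean, sd, se


# Hypothetical repeated measurements of a pendulum period in seconds
# (illustrative values only, not study data).
periods = [1.42, 1.38, 1.45, 1.40, 1.41, 1.39, 1.44, 1.43]
mean, sd, se = summarize(periods)
print(f"T = {mean:.3f} s +/- {se:.3f} s (SD = {sd:.3f} s, n = {len(periods)})")
```

Reporting the mean together with its standard error, rather than the standard deviation alone, reflects how the uncertainty of the mean shrinks as more repeated measurements are taken.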

During the last four weeks of the term, students worked on their capstone project. For their capstone projects, students were asked to formulate a research question, plan and perform an experiment to answer their question, analyze their data with the tools they learned in the course, and present their results in the form of a written report. Students were not required to choose a physics topic, but they were instructed to propose a topic that required measuring experimental data with easily accessible equipment. For reasons of safety and ethics, all project proposals had to be approved by a teaching assistant or the course instructor.

Table 1 presents an overview and a brief description of all lab activities, including the capstone project. Some of the listed skills and major learning goals are related to inquiry tasks that students either performed on worksheets (campus lab) or online by filling in textboxes and answering multiple-choice questions (distance lab). The tasks also included review questions that connected to the activities of previous weeks. Differences in materials used in the campus lab and the distance lab are indicated in Table 1 by CL and DL, respectively.

Table 1

Overview of the Lab Activities

| Week | Topic | Skills and major learning goals | Experiments and materials |

| 1 | Introduction to Uncertainty | Identify sources of experimental uncertainty; design an experiment and collect data; calculate the mean value. | Average speed of paper planes. Materials: letter-sized paper, stopwatch, tape measure (or ruler and tiled floor). |

| 2 | Histograms | Present data in the form of a histogram; analyze and interpret histograms; create histograms from students' own data and from other data sources. | Use of spreadsheet software: calculate mean values and check for minimum and maximum values in a large dataset. Materials: students' own computers and spreadsheet software, large dataset. |

| 3 | Quantifying Histograms | Compare distributions; calculate mean, standard deviation, and standard error; use standard error to express uncertainty; evaluate agreement between experiments. | Simple pendulum: vary mass or length of string, take multiple measurements to reduce uncertainty, determine whether varying length or mass influences the period of a pendulum. Materials: string, objects with known mass (or kitchen scale to measure mass), tape measure or ruler, students' own computers and spreadsheet software. |

| 4 | The Pendulum | Acquire data automatically; plan and design an experiment to reduce uncertainties with repeated measurements; answer a research question based on data analysis and uncertainties using mean and standard error. | Simple pendulum: vary mass, length of string, or amplitude, acquire data with a motion sensor/microcomputer (CL) or accelerometer in cell phone (DL), determine whether varying length or mass influences the period of a pendulum. Materials: computer with motion sensor (CL), smartphone with accelerometer app (DL), string, objects with known mass (or scale to measure mass), tape measure or ruler, videos (provided online) on use of accelerometer app and experimental set up (DL), students' own computers and spreadsheet software. |

| 5 | Static Friction | Determine the static friction force from the measured mass and the maximum applied force (CL) or the maximum inclination angle (DL); learn to draw and interpret scatterplots with spreadsheet software. | Dependency of static friction on mass. Materials: microcomputer/force probe (CL) or smartphone, bookshelf or similar smooth surface that can be tilted (DL), food storage container with known amount of water or other known masses (e.g., coins), students' own computers and spreadsheet software. |

| 6 | Trendlines | Create a scatter plot from a given dataset. Fit trendlines to the data and interpret fitting coefficients and R² values; decide on best fitting model for the given data. | Use of spreadsheet software to explore best-fitting mathematical representation for a given dataset. Materials: students' own computers and spreadsheet software, given dataset. Instructional video (provided) (DL). |

| 7 | Error Bars | Measure the average velocity of an object falling with significant air resistance; learn to draw and interpret graphs with error bars (with spreadsheet software). | Materials: large coffee filters (CL) or cupcake holders (DL), microcomputer/motion sensor (CL) or tape measure and stopwatch (DL), students' own computers and spreadsheet software. Instructional video (provided) (DL). |

| 8 | Summary and Review | Choose appropriate representations: Histograms vs. graphs; use of mean values vs. trendlines; state the corresponding uncertainties: standard error vs. uncertainties in fitting coefficients and R² values. Determine fractional energy loss for a bouncing ball and choose the appropriate representation for your result. | Appropriate representations in different experiments. Materials: contrasting cases provided online, ball, ruler or tape measure, students' own computers and spreadsheet software. |

| 9-12 | Capstone Project | Propose a research question; give and receive peer feedback; plan and conduct your experiment; analyze your data; write a brief report. | Students' choice. Project must be a research question that can be answered with an experiment. Project must be approved by a teaching assistant. Materials: common household materials or easily accessible equipment, students' own computers and spreadsheet software. |

The lab experiments were originally designed for the campus lab in which the skill-building phase during the first eight weeks was delivered face-to-face on campus. In class, students were engaged in worksheet-based learning tasks and clicker questions for predictions and follow-up, in addition to doing hands-on experiments. For the distance lab, all of these inquiry tasks were moved online and most peer activities were replaced by multiple-choice questions with feedback. All inquiry tasks were identical in both lab formats and were typically focused on experimental design ("What materials are you planning to use?" or "How many data points are you planning to take?"), data interpretation, and data analysis. Three of the experiments performed in the campus lab relied on computer-based data acquisition with motion sensors or force probes. In the distance lab, these were modified for use at home: In Week 4, students used their smartphones as a pendulum bob and acquired data with the built-in accelerometers to determine the period of a pendulum as a function of different parameters. For the friction experiment in Week 5, students in the distance lab used a smartphone app to measure the inclination angle at which an object started to slide down a tilted bookshelf instead of using a force probe (connected to a microcomputer) to measure the maximum force that can be applied to an object before it starts to move. In Week 7, students used stopwatches/smartphone timers and rulers instead of motion sensors to capture the falling motion of cupcake holders with air resistance. We emphasize here that even though these experiments involved computer or smartphone-based data acquisition, one of the key learning goals (as in all other weeks) was to quantify the experimental uncertainty in a meaningful way. Students used their own computers and software for data analysis and graphing tasks in both lab formats. 
In the distance labs, we provided technical support for these programs and for the more sophisticated smartphone experiments with a number of instructional videos, e.g., how to add trend lines and error bars in Excel, or how to set up a smartphone to record accelerometer data. In addition, students could get help from teaching assistants for all lab-related questions in an online discussion forum hosted on piazza.com. In the campus lab, teaching assistants were on hand to provide technical help and answer questions.
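The physics behind the Week 5 substitution of a tilt angle for a force probe can be summarized in a short derivation. This is the standard textbook result for an object at rest on an incline, given here as a sketch and not quoted from the lab materials; the symbols $m$ (mass of the object), $g$ (gravitational acceleration), $\theta_{\max}$ (inclination angle at which sliding begins), $N$ (normal force), $f_{s,\max}$ (maximum static friction force), and $\mu_s$ (coefficient of static friction) are notation assumed for this illustration.

```latex
\begin{align}
  f_{s,\max} &= m g \sin\theta_{\max}, \\
  N          &= m g \cos\theta_{\max}, \\
  \mu_s      &= \frac{f_{s,\max}}{N} = \tan\theta_{\max}.
\end{align}
```

Under these assumptions, the maximum static friction force follows from the measured mass and the measured angle, which plays the role that the maximum applied force played in the campus version of the experiment.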

The most significant difference between the two formats was how the students learned in the lab. In the campus lab, students worked in pairs and were also encouraged to perform their capstone project in pairs. In the distance lab, students typically worked on their own at home during the first eight weeks. For the capstone project they had the option to work with a partner and approximately half of the capstone projects in the distance labs were done in pairs.

The participants in this study took either the fall offering of our introductory physics course in 2014, which included the face-to-face campus lab (control group), or the fall offering in 2015, which included the distance lab (treatment group). Each group consisted of approximately 750-800 students in three lecture sections that used the same teaching materials. The demographics of the course were very similar in the two years: 60% of our students were in science, 15% were in arts, and 25% were in other faculties. Approximately two-thirds of students in the course identified as female and one-third as male. The vast majority (79%) of our students were first-year students.

The main research question of our study was:

Can students learn as much in an inquiry-based hands-on distance lab as in a nearly identical face-to-face lab on campus?

As explained in the previous sections, the two lab formats had an identical design, the same learning goals, and the same learning tasks. The only differences were in the lab equipment of three experiments to accommodate the delivery format. Therefore, our hypothesis was that our students learned as much in an inquiry-based hands-on distance lab as in a similar face-to-face lab on campus. To test this hypothesis, we compared the learning outcomes in the two lab formats. Since the main assessment method in both labs was the capstone project, we collected data on student performance on the capstone project in the distance lab (treatment) and in the face-to-face lab (control). Accordingly, our main research question can be formulated more specifically:

1a. Is the mean project score for the distance lab projects better or worse than the score for the campus lab projects, using the same grading rubric?

1b. Which of the seven categories in rubric scores (see below) separate the campus lab projects from the distance projects?

We also wanted to know how students perceived the distance lab and conducted focus groups in the spring of 2016. Our research questions were:

2. Did students enjoy the freedom of performing all experiments at home on their own time?

3. Did they perceive working on the capstone project as doing authentic research or "doing science"?

For this study, which was approved by our institution's Behavioural Research Ethics Board, we used a sample of 57 project reports from the 2014 fall term (face-to-face labs) and 176 project reports from the 2015 fall term (distance labs). The sample data are summarized in Table 2.

Table 2

Overview of the Study Data

| Year | Lecture format | Lab format | Project performed | Number of projects | Number of students | Course grade average | Fails |

| 2014 | F2F | F2F | In pairs | 57 | 114 | 72.1% | 10 |

| 2015 | F2F | DIST | Individually/Pairs | 153/176 | 152/342 | 74.1%/74.7% | 5/19 |

To ensure interrater reliability from year to year, all projects, including those from 2014, were marked by eight graduate teaching assistants at the end of the 2015 fall term. The project reports were anonymized and section-specific information was removed to ensure that raters could not tell whether a project was completed individually or in pairs, and whether they rated a project from the 2014 campus lab or the 2015 distance lab. In addition, teaching assistants did not rate projects of students they potentially interacted with in the campus labs.

The rating was performed using a grading tool that was specifically developed for this course. The tool is a list of 24 rubric questions (see Table 3) that raters answer by choosing either "Yes (1/1)," "Partially (0.5/1)," or "No (0/1)." For a subset of questions (Questions 10-17), the option N/A was also available in cases where these questions do not apply or are not important for a project. Any N/A rating reduced the total achievable score by one so that those questions were not taken into account for the overall grade. All questions were weighted equally and the sum of the points was converted to a percentage to yield the students' overall project grade. The last four questions of the grading tool (21-24) were meant to distinguish projects that were exceptional.
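The scoring rule described above can be expressed as a short calculation. The following is a minimal sketch, assuming ratings are stored as one label per rubric question; the function name `project_percentage`, the label strings, and the example ratings are hypothetical and do not reproduce the actual grading tool.

```python
# Points awarded for each rating option in the grading tool.
RATING_POINTS = {"yes": 1.0, "partially": 0.5, "no": 0.0}

# N/A was only available for Questions 10-17.
NA_ALLOWED = set(range(10, 18))


def project_percentage(ratings):
    """Convert rubric ratings to a percentage grade.

    ratings: dict mapping question number -> "yes", "partially", "no", or "na".
    Each N/A rating reduces the total achievable score by one point.
    """
    earned = 0.0
    achievable = 0
    for question, rating in ratings.items():
        if rating == "na":
            if question not in NA_ALLOWED:
                raise ValueError(f"N/A is not available for question {question}")
            continue  # question is left out of the achievable total
        earned += RATING_POINTS[rating]
        achievable += 1
    if achievable == 0:
        raise ValueError("no rated questions")
    return 100.0 * earned / achievable


# Hypothetical project: Question 10 does not apply, Question 16 is partially
# met, and none of the "outstanding" questions (21-24) are met.
example = {q: "yes" for q in range(1, 25)}
example[10] = "na"
example[16] = "partially"
for q in range(21, 25):
    example[q] = "no"

print(f"{project_percentage(example):.1f}%")
```

Dividing by the number of rated questions rather than by 24 is what allows percentages from projects with different numbers of N/A ratings to be compared.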

Table 3

Capstone Project Assessment Rubric

| Category | Rubric questions |

| C1 Clearly stated research question or clearly formulated goal | |

| C2 Clear description of the experiment | |

| C3 Sufficient data and quality of data | |

| C4 Analysis of data and appropriate graphs | |

| C5 Estimating uncertainty | |

| C6 Clear conclusions | |

| C7 Outstanding Projects | |

Because the grading tool was developed at the end of the 2015 fall term, students in 2014 and 2015 did not see the detailed marking questions, but were given the same general evaluation categories. In particular, students were told that their project should have the following components:

A sample project was also provided to illustrate the general format and the key components a project report should have. In addition, students handed in a draft of their project reports for peer feedback a week before the final submission.

The consistency of the grading was determined by measuring interrater agreement. All teaching assistants were given the same subset of 15 project reports. These reports were mixed in with the other reports that each teaching assistant was assigned to grade (which were different for each teaching assistant). The teaching assistants were blinded as to whether project reports were used for the interrater agreement calibration or as part of their standard student assessments. Comparing the detailed rating of all calibration project reports, we found an average interrater agreement of 76%, i.e., the same rating (either 1.0, 0.5, or 0.0) was given on average 7.6 out of 10 times. We found major discrepancies in only a few cases (1.8%), in which a few rubric questions that mainly received ratings of one also received ratings of zero and vice versa. The interrater agreement for the 24 individual rubric questions ranged from 60% to 96%. The lowest agreement (60%) was obtained for Question 16: "Are standard deviations or standard errors used in a meaningful way?" This was concerning because estimating uncertainty was one of the major learning goals of the lab. Clearly, better instructions for teaching assistants with examples of how to interpret this question (in particular, the word "meaningful") are required in future studies. Three more questions, all in the "outstanding projects" category (Questions 21, 22, and 24), had relatively low agreement (less than 70%). For these rubric questions, we relied on the raters' judgement and did not provide more specific instruction. Consequently, some teaching assistants interpreted these questions more generously than others. Given the involvement of eight raters, interrater agreement was satisfactory overall, but in the future, we would supply more detailed instructions and explanations for four of the rating questions. This could be addressed by providing annotated examples of project reports that demonstrate the difference between good and outstanding projects, as well as examples of meaningful versus incorrect use of standard deviations and standard errors.
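One common way to quantify agreement of this kind is the share of rater pairs that give an identical rating to the same item. The following is a minimal sketch of that calculation; it is an assumption that agreement was computed pairwise, since the text does not state the exact formula, and the function name `pairwise_agreement` and the example ratings are hypothetical.

```python
from itertools import combinations


def pairwise_agreement(ratings_by_rater):
    """Fraction of (rater pair, item) comparisons with identical ratings.

    ratings_by_rater: list of equal-length lists, one list per rater, where
    position k in every list refers to the same item (report and question).
    """
    agree = 0
    total = 0
    for a, b in combinations(ratings_by_rater, 2):
        if len(a) != len(b):
            raise ValueError("all raters must rate the same items")
        for x, y in zip(a, b):
            total += 1
            if x == y:
                agree += 1
    if total == 0:
        raise ValueError("need at least two raters and one item")
    return agree / total


# Hypothetical ratings (1.0, 0.5, or 0.0) from three raters on four items.
rater_1 = [1.0, 0.5, 1.0, 0.0]
rater_2 = [1.0, 1.0, 1.0, 0.0]
rater_3 = [1.0, 0.5, 0.0, 0.0]

agreement = pairwise_agreement([rater_1, rater_2, rater_3])
print(f"pairwise agreement: {agreement:.0%}")
```

Raw percent agreement of this kind does not correct for agreement expected by chance, which is one reason chance-corrected statistics are sometimes reported alongside it.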

In addition to performance, we were also interested in how students perceived the lab. We believe that designing an experiment and selecting equipment are authentic tasks that are part of experimental science. Did students see themselves as scientists when they did their capstone projects and performed their experiments at home? Did they enjoy the freedom of performing all experiments at home on their own time? To find out, we ran focus groups in the spring of 2016, just after the examination period of the spring term had ended. All 800 students from the 2015 fall term were invited to participate in focus groups, and 12 students volunteered and gave us their opinion of the distance lab. The focus groups were conducted on campus by two of us (S.A.R. and G.W.R.) with 2-4 participants each and lasted for approximately 30 minutes. We did not ask the students for any identifying information, except for their faculty and year of study. The participants received a $10 gift card from our institution's bookstore for their input. The open-ended questions that guided the focus group interviews are listed in Table 4.

Table 4

Focus Group Interview Questions

| Q1 | What is your faculty (Science/Arts/etc.) and year of study? |

| Q2 | Describe in general terms a few things you have learned in the labs. |

| Q3 | Did you learn something in the labs that may be useful in other courses or outside of school? |

| Q4 | Did you enjoy doing the labs at home? |

| Q5 | Where do you see advantages and disadvantages of doing labs at home? |

| Q6 | Do you think that you were doing science in your final project? Please elaborate. |

| Q7 | Were you comfortable doing the labs at home or would you have preferred doing the labs in a teaching lab? |

| Q8 | Is there anything else you want to tell us about your experience with the physics labs? |

In consultation with the Applied Statistics and Data Science Group at our institution, we used ANOVA models to address our research questions related to students' performance in the two lab formats:

1a. Is the mean project score for the distance lab projects better or worse than the score for the campus lab projects, using the grading rubric?

1b. Which of the seven categories in rubric scores separate the campus lab projects from the distance projects?

To answer the first question, we used a two-factor ANOVA model to test the null hypothesis, i.e., that there is no significant difference between campus projects and distance projects. As our sample sizes were unequal, with a different number of campus projects and distance projects, we used a partial sum of squares. The dependent variable is the project percentage grade and the independent variables are the project type (distance or campus) and TA (we had eight teaching assistants grading the projects). We used a linear model to explain the project percentage grade from two main effects, project type and TA, as well as their interactions, TA × type. After ensuring that there were no significant interactions we used a model that contained only the main effects (TA and type), but not the interactions.
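The two linear models described above can be written out explicitly. The following is a sketch in standard ANOVA notation, not a formula taken from the analysis itself; the symbols $y_{ijk}$ (percentage grade of project $k$ of type $i$ graded by teaching assistant $j$), $\mu$ (overall mean), $\alpha_i$ (project type effect), $\beta_j$ (TA effect), $(\alpha\beta)_{ij}$ (interaction), and $\varepsilon_{ijk}$ (residual) are notation assumed for this illustration.

```latex
\begin{align}
  \text{Full model:}    \quad y_{ijk} &= \mu + \alpha_i + \beta_j + (\alpha\beta)_{ij} + \varepsilon_{ijk}, \\
  \text{Reduced model:} \quad y_{ijk} &= \mu + \alpha_i + \beta_j + \varepsilon_{ijk}, \\
  H_0:                  \quad \alpha_{\text{campus}} &= \alpha_{\text{distance}},
\end{align}
```

with $i \in \{\text{campus}, \text{distance}\}$ and $j = 1, \dots, 8$. In this notation, the full model is used first to check the interaction term, and the null hypothesis about project type is then tested in the reduced model.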

To answer our second research question, we built seven ANOVA models, one for each category. We followed the same procedure as for our first research question, but with the percentage score of the category as dependent variable. For both research questions, we worked with percentages rather than raw scores to account for the presence of N/As as a possible response to some rubric questions.

Although we have listed the data for projects completed individually in the distance lab in Table 2, for the comparison of the two lab formats (distance versus face-to-face) we only used data from projects that were performed by pairs of students. We discovered a small but significant effect in the 2015 data of whether capstone projects were completed individually or in pairs, with slightly better performance of the pairs; therefore, we excluded projects that were performed individually to eliminate this confounding variable.

We also excluded the fifteen projects used for measuring the interrater agreement. We carried out the analysis using the R statistical software environment.

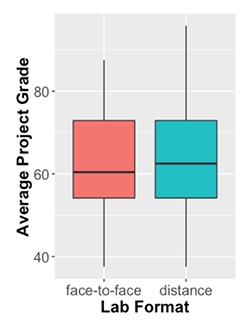

Average grades for the distance lab projects and for the campus lab projects were almost identical. Figure 1 presents the results of our analysis.

Figure 1. Average project grades in the two lab formats.

Our ANOVA showed no significant differences in the overall project marks between the campus lab projects and distance lab projects.

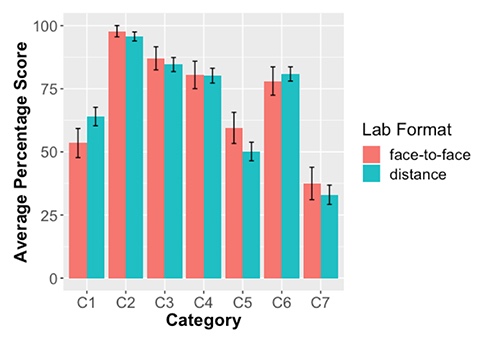

The data presented in Figure 2 address research question 1b, whether any rubric categories separate the campus lab projects from the distance lab projects. Our analysis reveals significant differences in two of the seven categories, C1 (Clearly stated research question or clearly formulated goal) and C5 (Estimating uncertainty).

Figure 2. Average percentage scores in the seven categories of the project grading rubric (see Table 3). The error bars show 95% confidence intervals.

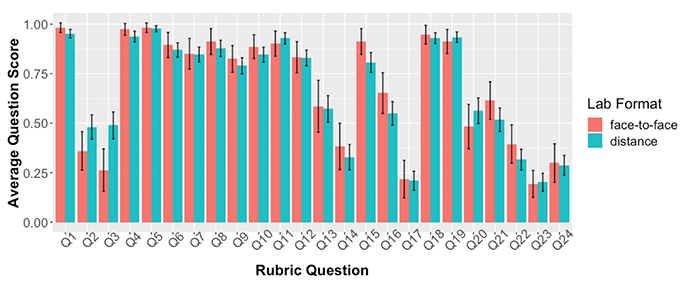

For completeness, the average scores for all 24 rubric questions for the 2014 students (campus labs) and the 2015 students (distance labs) are displayed in Figure 3.

Figure 3. Average scores obtained with the project grading tool. The error bars show 95% confidence intervals.
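As a rough illustration of what the error bars in Figures 2 and 3 represent, the sketch below computes a 95% confidence interval for a mean score. The article does not state how the intervals were calculated, so the normal approximation used here is an assumption, as are the function name and the example scores; for small samples, a t-distribution quantile would give a wider interval.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def confidence_interval(scores, level=0.95):
    """Normal-approximation confidence interval for the mean of scores."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    half_width = z * stdev(scores) / sqrt(len(scores))
    centre = mean(scores)
    return centre - half_width, centre + half_width


# Hypothetical percentage scores for one rubric category.
low, high = confidence_interval([70, 80, 90])
print(f"mean 80.0, 95% CI [{low:.1f}, {high:.1f}]")
```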

The focus groups generated valuable insights into students' perceptions of the distance labs and answered our second and third research questions. Several students commented that the lab did not seem to be connected to the lecture. Students in the focus groups largely perceived the distance labs as "easy," but also as a nuisance: finding suitable equipment was sometimes difficult and several students mentioned being in a rush close to the due dates. Most students in the focus groups would therefore have preferred doing the experiments in a teaching lab where equipment and support were immediately available.

Most students in the focus groups said that most of their learning took place during the capstone project, but they did not perceive the project as "doing science." For them, it was hard to come up with a "sophisticated" research topic and their basic projects felt "a bit childish" to them. Several students mentioned their perception that "real science" is done with sophisticated equipment that yields precise data. However, two of the 12 students we interviewed enjoyed doing the labs at home. These students mentioned the freedom to design their experiments and the flexibility to do the labs on their own time as benefits of the distance lab format. One student said that experiments at home felt "more real" and another student said that she felt that she was doing science when analyzing the data of her project. All of the students we interviewed appreciated learning to analyze and present their data with their own spreadsheet software.

Our results allow for a fairly detailed comparison of student performance in our labs. While overall student performance was similar in the two lab formats, there were significant differences in two of the grading categories. We attribute the difference in C1 (Clearly stated research question or clearly formulated goal) to clearer instructions in the online documents provided for the distance lab. It is conceivable that students missed the importance of this part in the face-to-face introduction of the capstone project and perhaps did not pay close enough attention to the handouts. However, student performance in this category was not satisfactory in either lab format. We suggest that a lab report template with textboxes for the research question, hypothesis, goals, and motivation could address this problem.

The relatively low scores in C5 (Estimating uncertainty) are more concerning. They demonstrate that many students in both lab formats had difficulties applying the data analysis skills they were supposed to acquire in the first part of the labs. The observed difference in this category is not highly significant, but it seems that the campus lab format was somewhat more effective in teaching these more advanced skills. Although students who took the campus labs performed less well overall (Table 2), they performed better than distance lab students in this part of the assessment. Still, the results in this category were unsatisfactory in both lab formats. We suggest that a scaffolding step is needed in which students can practice their data analysis skills before carrying out their capstone project. This could take the form of a sample project in which the data are provided and students are asked to perform the analysis and interpret the results.

All other categories show satisfactory outcomes with no significant differences between the two lab formats.

Before we discuss the results of our focus groups, we want to emphasize that we only interviewed students from the 2015 distance lab. A comparison of students' perceptions of two lab formats is therefore impossible.

The students in our focus groups gave mainly practical reasons, related to time and finding equipment, for why they did not generally enjoy the freedom of performing all experiments at home. Likewise, they gave the lack of sophisticated equipment at home as the main reason why they did not think they were performing an authentic scientific experiment for their capstone project. However, a look at the literature tells us that this perception cannot easily be remedied with better equipment.

In their work on student experiences in apprentice-style undergraduate research projects, Hunter, Laursen, and Seymour (2007) identified a number of factors that contributed to students' perceptions of becoming scientists and members of a community of practice. These were: working on an original research project, working closely with faculty and getting feedback from experts, presenting in group meetings and/or at conferences, learning how to operate sophisticated equipment, and making (small) contributions to the knowledge and progress in the field. The students involved in their study clearly perceived their research projects as authentic science. The absence of these factors helps explain why our capstone project did not offer an authentic scientific experience. Our lab (and probably most other introductory labs) would not be able to offer participation in original research, close collaboration with faculty, or regular group meetings. Moreover, although the experiments in our lab and the capstone project did not have any time constraints other than due dates, they took place during a busy term. In contrast, one of the undergraduate research students in Hunter et al.'s (2007) study described how they were able to take some time and "concentrate on one thing and figure it out" (p. 50). Given that the capstone project in our campus lab had the same constraints as in our distance lab, we assume that the students' perceptions of the capstone project in the campus lab were probably quite similar.

Nevertheless, we want to emphasize that engaging students in open-ended experiments and inquiry tasks is generally a good idea. In a recent paper, Holmes and Wieman (2018) present a summary of focus group interviews in which students described their experiences in traditional labs, project labs, and undergraduate research projects. Their study suggests that open-ended project labs like our capstone project engage learners in much more expert thinking tasks than traditional structured labs, but not as much as undergraduate research projects. The authors report that students view opportunities for making decisions as highly important.

We end this section with a discussion of the limitations of our study. The first limitation is that the two groups of participants were not from the same year. Although the same instructors ran the lab in both years, different teaching assistants were involved, which could have influenced the results. There were also minor differences in the performance of the two groups (Table 2). The second limitation is that we only assessed the capstone project reports. We did not assess what students learned during the skill-building phase, for example by administering a midterm lab test, which could have provided further insight into the differences between the two lab formats. The third limitation is that we did not run focus groups after the 2014 campus labs, which precludes a direct comparison of student perceptions. Although it is conceivable that students have similar views about the capstone project in both lab formats, there is no reason to assume that student perceptions of the skill-building phase are similar in the two lab formats.

In this article, we presented an introductory hands-on project lab that could, after further improvements, be a suitable format for distance learning in physics and engineering and a viable alternative to labs conducted on campus. It is currently run at a scale of 800 students with support and evaluation from teaching assistants. It could potentially be scaled up further and used in MOOCs if the capstone project were peer-evaluated, although peer evaluation has its own challenges.

The use of a new grading tool with 24 questions in seven grading categories provided us with a fine-grained assessment of student learning during the capstone project. Results show that overall students' learning outcomes were comparable between the campus and distance formats, but further improvements in the teaching of data analysis are necessary, in particular in the distance lab format. Focus groups revealed that students often did not perceive the capstone project as "doing science." It also appears that the flexibility afforded by the distance lab did not lead to increased student motivation. Students in our focus groups perceived the distance lab as "easy," but indicated a preference for doing experiments in a teaching lab where equipment and support are immediately available.

Should instructors consider adopting our distance lab format? The time constraints imposed by a busy term schedule cannot be changed for campus students and will always make it hard to carry out a capstone project. This challenge was present in both of our lab formats. We therefore suggest delivering our project lab as a stand-alone lab course that students could choose during a less busy term, for example the summer term. The distance lab format is particularly suitable for the summer term since it offers high flexibility in location and allows students to take this course even if they do not stay on campus for the summer. As we have shown in our study, the distance lab format does not compromise learning and leads to the same overall performance as an identical lab delivered face-to-face on campus.

Abbott, H. (1998). Action@Distance: Using web and take-home labs. The Physics Teacher, 36(7), 399-402. doi: 10.1119/1.879904

Al-Shamali, F., & Connors, M. (2010). Low-cost physics home laboratory. In D. Kennepohl & L. Shaw (Eds.), Accessible elements: Teaching science online and at a distance (pp. 83-108). Edmonton, AB: AU Press.

American Association of Physics Teachers (1998). Goals of the introductory physics laboratory. American Journal of Physics, 66, 483-485. doi: 10.1119/1.19042

American Association of Physics Teachers (2014). AAPT recommendations for the undergraduate physics laboratory curriculum. Retrieved from American Association of Physics Teachers website: https://www.aapt.org/Resources/upload/LabGuidlinesDocument_EBendorsed_nov10.pdf

Brewer, S. E., Cinel, B., Harrison, M., & Mohr, C. L. (2013). First year chemistry laboratory courses for distance learners: Development and transfer credit acceptance. International Review of Research in Open and Distributed Learning, 14(3), 488-507. doi: 10.19173/irrodl.v14i3.1446

Brinson, J. R. (2015). Learning outcome achievement in non-traditional (virtual and remote) versus traditional (hands-on) laboratories: A review of the empirical research. Computers & Education, 87, 218-237. doi: 10.1016/j.compedu.2015.07.003

Cancilla, D. A., & Albon, S. P. (2008). Reflections from the Moving the Laboratory Online workshops: Emerging themes. Journal of Asynchronous Learning Networks, 12(3-4), 53-59. Retrieved from https://eric.ed.gov/?id=EJ837503

Casanova, R. S., Civelli, J. L., Kimbrough, D. R., Heath, B. P., & Reeves, J. H. (2006). Distance learning: A viable alternative to the conventional lecture-lab format in general chemistry. Journal of Chemical Education, 83(3), 501-507. doi: 10.1021/ed083p501

Etkina, E., Murthy, S., & Zou, X. (2006). Using introductory labs to engage students in experimental design. American Journal of Physics, 74, 979-986. doi: 10.1119/1.2238885

Hallyburton, C. L., & Lunsford, E. (2013). Challenges and opportunities for learning biology in distance-based settings. Bioscene: Journal of College Biology Teaching, 39(1), 27-33. Retrieved from https://eric.ed.gov/?id=EJ1020526

Holmes, N. G., & Wieman, C. E. (2018). Introductory physics labs: We can do better. Physics Today, 71(1), 38. doi: 10.1063/PT.3.3816

Hunter, A. B., Laursen, S. L., & Seymour, E. (2007). Becoming a scientist: The role of undergraduate research in students' cognitive, personal, and professional development. Science Education, 91, 36-74. doi: 10.1002/sce.20173

Kennepohl, D. (2009). Science online and at a distance. American Journal of Distance Education, 23(3), 122-124. doi: 10.1080/08923640903080703

Klahr, D., Triona, L. M., & Williams, C. (2007). Hands on what? The relative effectiveness of physical versus virtual materials in an engineering design project by middle school children. Journal of Research in Science Teaching, 44(1), 183-203. doi: 10.1002/tea.20152

Lang, J. (2012). Comparative study of hands-on and remote physics labs for first year university level physics students. Transformative Dialogues: Teaching and Learning Journal, 6(1), 1-25. Retrieved from https://www.kpu.ca/sites/default/files/Teaching%20and%20Learning/TD.6.1.2_Lang_Comparative_Study_of_Physics_Labs.pdf

Lyall, R., & Patti, A. F. (2010). Taking the chemistry experience home—Home experiments or "Kitchen Chemistry". In D. Kennepohl, & L. Shaw (Eds.), Accessible elements: Teaching science online and at a distance (pp. 83-108). Edmonton, AB: AU Press.

Ma, J., & Nickerson, J. V. (2006). Hands-on, simulated, and remote laboratories: A comparative literature review. ACM Computing Surveys, 38(3), 1-24. doi: 10.1145/1132960.1132961

Mawn, M. V., Carrico, P., Charuk, K., Stote, K. S., & Lawrence, B. (2011). Hands-on and online: scientific explorations through distance learning. Open Learning: The Journal of Open, Distance and e-Learning, 26(2), 135-146. doi: 10.1080/02680513.2011.567464

Morgil, I., Gungor-Seyhan, H., Ural-Alsan, E., & Temel, S. (2008). The effect of web-based project applications on students' attitudes towards chemistry. Turkish Online Journal of Distance Education, 9(2), 220-237. Retrieved from https://files.eric.ed.gov/fulltext/ED501087.pdf

National Science Teachers Association (2019). NSTA Position Statement: The Integral Role of Laboratory Investigations in Science Instruction. Retrieved from https://www.nsta.org/about/positions/laboratory.aspx

Pyatt, K., & Sims, R. (2012). Virtual and physical experimentation in inquiry-based science labs: Attitudes, performance and access. Journal of Science Education and Technology, 21(1), 133-147. doi: 10.1007/s10956-011-9291-6

Rieger, G. W., Sitwell, M., Carolan, J., & Roll, I. (2014). A "flipped" approach to large-scale first-year physics labs. Physics in Canada, 70(2), 126-128. Retrieved from http://www.cwsei.ubc.ca/SEI_research/files/Physics/Rieger-etal_FlippedLabs_PiC2014.pdf

Reif, F., & St. John, M. (1979). Teaching physicists' thinking skills in the laboratory. American Journal of Physics, 47, 950-957. doi: 10.1119/1.11618

Reuter, R. (2009). Online versus in the classroom: Student success in a hands-on lab class. Journal of Distance Education, 23(3), 1-17. doi: 10.1080/08923640903080620

Rowe, R. J., Koban, L., Davidoff, A. J., & Thompson, K. H. (2017). Efficacy of online laboratory science courses. Journal of Formative Design in Learning, 2(1), 56-67. doi: 10.1007/s41686-017-0014-0

Tatli, Z., & Ayas, A. (2012). Virtual chemistry laboratory: effect of constructivist learning environment. Turkish Online Journal of Distance Education, 13(1), 183-199. Retrieved January 14, 2019 from https://eric.ed.gov/?id=EJ976940

Turner, J., & Parisi, A. (2008). A take-home physics experiment kit for on-campus and off-campus students. Teaching Science, 54(2), 20-23. Retrieved from https://eprints.usq.edu.au/4377/

Waldrop, M. M. (2013). Education online: The virtual lab. Nature, 499(7458), 268-270. Retrieved from http://www.nature.com/news/education-online-the-virtuallab-1.13383

Wieman, C. (2015). Comparative cognitive task analyses of experimental science and instructional laboratory courses. The Physics Teacher, 53, 349. doi: 10.1119/1.4928349

Can a Hands-On Physics Project Lab be Delivered Effectively as a Distance Lab? by Firas Moosvi, Stefan A. Reinsberg, and Georg W. Rieger is licensed under a Creative Commons Attribution 4.0 International License.