Volume 22, Number 2

Florence Martin, PhD1, Doris U. Bolliger, EdD2, and Claudia Flowers, PhD3

1,3University of North Carolina Charlotte, 2Old Dominion University, Virginia

Course design is critical to online student engagement and retention. This study focused on the development and validation of an online course design elements (OCDE) instrument with 38 Likert-type scale items in five subscales: (a) overview, (b) content presentation, (c) interaction and communication, (d) assessment and evaluation, and (e) learner support. The validation process included implementation with 222 online instructors and instructional designers in higher education. Three models were evaluated: a one-factor model, a five-factor model, and a higher-order model. The five-factor and higher-order models aligned with the development of the OCDE. Mean frequency-of-use ratings for OCDE items exceeded 4.0 on a 5-point scale, except for two items on collaboration and self-assessment. The overall OCDE score was related to self-reported level of expertise but not to years of experience. The findings have implications for the use of this instrument with online instructors and instructional designers in the design of online courses.

Keywords: online course design, design elements, instrument validation, confirmatory factor analysis, structural equation model

Enrollment on higher education campuses has decreased; however, the number of online courses and online enrollments have continued to increase (Allen & Seaman, 2017). Despite this growth, online student dropout and lack of engagement in distance education remain concerns. Dropout can be prevented through well-designed online courses (Dietz-Uhler et al., 2007), which makes high-quality course design critical to the success of online courses. Several researchers have examined online course design. Jaggars and Xu (2016) examined the relationships among online course design features, namely course organization and presentation, learning objectives and assessment, and interpersonal interaction and technology. They found that design features influenced student performance and that interaction affected student grades. Swan (2001) found that clarity of design, interaction with instructors, and active discussion influenced students’ perceived learning and satisfaction. Laurillard et al. (2013) recommended effective pedagogy to foster individual and social processes and outcomes, promote active engagement, and support learning with a needs assessment.

Some higher education institutions have developed or adopted rubrics not only to provide guidance for instructors’ course design efforts but also to evaluate the design of online courses. Baldwin et al. (2018) reviewed six rubrics commonly used to evaluate the design of online courses. They identified 22 design standards that were included in several of the rubrics. We reviewed the research on five categories of design standards, namely (a) overview, (b) content presentation, (c) interaction and communication, (d) assessment and evaluation, and (e) learner support, and examined their impact on online learning.

Figure 1

Online Course Design Elements Framework

At the beginning of a course, it is critical to provide an overview, or information that helps learners get started in the online course (Bozarth et al., 2004; Jones, 2013). An overview or getting started module can include elements such as (a) a course orientation, (b) instructor contact information and instructor expectations, (c) course goals and objectives, and (d) course policies. An orientation must be intentionally designed to introduce learners to the course and to guide them through its various components. Bozarth et al. (2004) examined challenges faced by novice students in an online course and recommended creating an orientation to address these challenges. For example, they recommended clarifying the time commitment required in online courses. Jones (2013) found that students felt better prepared for online learning after completing an orientation. At the beginning of the course, it is also essential to set expectations regarding the quality of communication and participation in the online course (Stavredes & Herder, 2014).

Price et al. (2016) suggested that providing instructor contact information, with different ways to contact the instructor, is important in the online course; contact could be via e-mail, phone, or synchronous online communication tools. Instructor information can be presented as text or in an instructor’s introduction video so that students can get to know the instructor better (Martin et al., 2018). It is also important for the instructor to state standard response times, specifically for questions sent via e-mail or posted in discussion forums, as well as turnaround times for feedback on submitted assignments. Most instructional design models and rubrics have emphasized the importance of measurable course goals and objectives (Chen, 2007; Czerkawski & Lyman, 2016). In online courses, it is also important to place these goals and objectives where online students can easily locate them. Finally, it is critical to state course policies for behavior expectations. Policies pertaining to netiquette, academic integrity, and late work need to be provided to all learners. In addition, Waterhouse and Rogers (2004) listed specific policies for privacy, e-mail, discussions, software standards, assignments, and technical help.

Online learning offers advantages in that content can be presented in various modalities. Some of the elements of content presentation include (a) providing a variety of instructional materials, (b) chunking content into manageable segments, (c) providing clear instructions, (d) aligning course content and activities with objectives, and (e) adapting content for learners with disabilities. Digital material can include (a) textbook readings, (b) instructor-created recorded video lectures, (c) content from experts in the form of audio or video, (d) Web resources, (e) animations or interactive games and simulations, and (f) scholarly articles (Stavredes & Herder, 2014). Learning management systems provide the functionality for embedding these varied instructional elements into the online course (Vai & Sosulski, 2016).

Ko and Rossen (2017) emphasized the importance of chunking content into manageable segments such as modules or units. Young (2006) found that students preferred courses that were structured and well organized. Instructions must be clearly written with sufficient detail. A critical aspect of content presentation includes instructional alignment—aligning instructional elements to both objectives and assessments. Czerkawski and Lyman (2016) emphasized the importance of aligning course content and activities in order to achieve objectives. In addition, it is imperative to include accommodations for learners with disabilities, such as providing (a) transcripts or closed captioning, (b) alternative text to accompany images, and (c) header information for tables. Dell et al. (2015) highlighted the importance of including information about the accessibility of all course technologies in the course.

Interaction and communication are critical in online courses. Some of the strategies to enhance interaction and communication include (a) providing opportunities for student-to-student interaction, (b) using activities to build community, (c) including collaborative activities to support active learning, and (d) using technology in ways that promote learner engagement and facilitate learning. Moore (1989) proposed an interaction framework that listed student-student interaction as essential for online courses, in addition to student-content and student-instructor interaction. Moore stated that adult learners might be self-motivated to interact with peers, whereas younger learners might need some stimulation and motivation. Luo et al. (2017) highlighted that interaction assists in building a sense of community. These authors describe a sense of community “as values students obtain from interactions” with the online community (p. 154). Hence, it is essential to intentionally design activities that build and maintain community in online courses. Strategies to build community include humanizing online courses by using videos and designing collaborative assignments that provide learners with opportunities to interact with peers (Liu et al., 2007). Shackelford and Maxwell (2012) found that using introductions, collaborative group projects, and whole-class discussions, as well as sharing personal experiences and resources, predicted a sense of community. Online collaboration supports active learning because it involves lateral thinking, social empathy, and extensive ideation (Rennstich, 2019). Salmon (2013) described the importance of designing e-tivities for online participation and providing learners with scaffolding to achieve learning outcomes. A variety of technology systems and tools have been used to promote online learner engagement, including e-mail, learning management systems, wikis, blogs, videos, social media, and mobile technologies (Anderson, 2017; Fathema et al., 2015; Pimmer et al., 2016).

Assessment and evaluation are essential in an online course to measure students’ learning outcomes and determine overall course effectiveness. Some of the strategies for well-designed assessment and evaluation include (a) aligning assessments with learning objectives, (b) providing several assessments throughout the course, (c) including grading rubrics for each assessment, (d) providing self-assessment opportunities for learners, and (e) giving students opportunities to provide feedback for course improvement. Dick (1996) emphasized the importance of aligned assessments in the instructional design process. Instructional design models recommend that assessments be aligned with learning objectives and instructional events. In addition, Quality Matters (2020) considered alignment between objectives, materials, activities, technologies, and assessments in online courses as essential because it helps students to understand the purpose of activities and assessments in relation to the objectives and instructional material.

Researchers have pointed out the importance of administering a variety of assessments throughout the course so that students can gauge their learning progress (Gaytan & McEwen, 2007). Martin et al. (2019) reported that award-winning online instructors recommend using rubrics for all types of assessments. Rubrics not only save time in the grading process, but they can assist instructors in providing effective feedback and supporting student learning (Stevens & Levi, 2013). Self-assessments help learners identify their progress towards the course outcomes.

Evaluation is an important element in course improvement. Kumar et al. (2019) found that expert instructors used mid- and end-of-semester surveys and student evaluations, as well as data from learning management systems and institutional course evaluations, to improve courses. These practices illustrate the importance of providing learners with opportunities to give feedback to instructors.

Support is essential for online learners to be successful. Some of the strategies for providing support to the online learner include providing (a) intuitive and consistent course navigation, (b) media that can be easily accessed and viewed, (c) details for minimum technology requirements, and (d) resources for accessing technology and institutional support services. Support can be offered at the course, program, and college or institution level. At the course level, it is essential to provide learner support for easy and consistent navigation (Graf et al., 2010); otherwise, students can become easily frustrated and dissatisfied. Because online learners come from different backgrounds and have access to different resources, they may use various devices and platforms to access courses. Therefore, it is important to specify technology requirements and to design the course with media and files that can be easily viewed and accessed with mobile devices (Han & Shin, 2016; Ssekakubo et al., 2013). Additionally, it is important for the institution to provide a variety of support services (e.g., academic, technical).

Individuals with many years of experience in designing online courses tend to have a high level of expertise. Award-winning faculty who had designed and taught online courses were interviewed to identify important course design elements. These faculty members mentioned that they followed a systematic process. They chunked course content, aligned course elements using a backwards design approach, provided opportunities for learner interaction, and addressed the needs of diverse learners (Martin et al., 2019). Expert designers have “a rich and well-organized knowledge base in instructional design” (Le Maistre, 1998, p. 33). In general, compared to novice designers, they are more knowledgeable regarding design principles and are able to access a variety of resources (Perez et al., 1995).

The purpose of this study was to develop the Online Course Design Elements (OCDE) instrument and establish its reliability and construct validity. Baldwin et al. (2018) reviewed several of the few rubrics that focus on online course design; however, most of these instruments have not been validated. Some of these rubrics were created by universities or at the state level.

Building on design elements from across the six rubrics examined in Baldwin et al. (2018), the OCDE captured the most common design elements from these rubrics. This study addressed the gap by developing a valid and reliable instrument that instructors and designers of online courses may use at no cost when developing or maintaining online courses. In addition to developing the instrument, we examined whether years of experience or level of expertise was related to instructors’ and instructional designers’ use of design elements.

More specifically, the objectives of this study were to (a) develop an instrument to identify design elements frequently used in online courses, (b) validate the instrument by verifying its factor structure, and (c) examine the relationships of the latent variables to years of experience and self-reported level of expertise. Although the instrument was validated in higher education, researchers and practitioners can also adapt it for other instructional contexts, including K-12 and corporate settings.

This research was carried out in two phases. The first phase focused on the development of the OCDE instrument, and the second phase focused on validating the instrument. During the first phase, the research team developed the instrument, which was then reviewed by a panel of experts in designing online courses and surveys. In the second phase, the reliability and validity of the instrument were examined statistically through a confirmatory factor analysis (CFA) and a multiple indicator multiple cause (MIMIC) model. CFA was used to test the conceptual measurement model implied in the design of the OCDE. The MIMIC model was used to examine the relationships of the OCDE to participants’ years of experience and self-reported levels of expertise.

Development of the OCDE instrument was based on Baldwin et al. (2018) and their analysis of six online course rubrics: (a) Blackboard Exemplary Course Program Rubric (Blackboard, 2012); (b) Course Design Rubric (California Community Colleges Online Education Initiative, 2016); (c) QOLT Evaluation Instrument (California State University, 2015); (d) Quality Online Course Initiative (QOCI) Rubric (Illinois Online Network, 2015); (e) OSCQR Course Design Review (Online Learning Consortium, 2016); and (f) Specific Review Standards from the QM Higher Education Rubric (Quality Matters, 2020). Five of these rubrics are publicly available online, while one rubric is only available on a subscription basis or with permission. Baldwin et al. (2018) identified 22 standard online design components used in four of the six rubrics they analyzed.

After seeking the authors’ permission (Baldwin et al., 2018) to build on the results of their study, the 22 elements were used as the foundation of the OCDE instrument. We added critical elements to the instrument based on existing research (Jones, 2013; Luo et al., 2017; Stavredes & Herder, 2014). These included (a) a course orientation, (b) a variety of instructional materials, (c) student-to-instructor interaction, and (d) consistent course structure. The instrument that was reviewed by experts for face validity had 37 items in five categories. All items prompted respondents to indicate how frequently they used the design elements on a Likert scale ranging from 1 (Never) to 5 (Always).

Four experts were provided with a digital copy of the instrument and instructions to evaluate the clarity and fit of all items, make changes, and add or delete relevant items. Once their review was completed, the experts returned the instrument with feedback by e-mail to the lead researcher. Experts were selected based on their expertise and experience in online or blended teaching in higher education and their expertise in survey research methodology. Two experts were research methodologists with expertise in teaching online, and two experts were online learning experts. The researchers discussed the expert feedback, and several items were revised accordingly. Some of the changes recommended by the experts were to (a) provide examples for the items in parentheses, (b) add an item regarding major course goals, (c) delete additional items on course objectives, and (d) modify the wording of some items. The final version of the instrument included 38 items with Likert scale responses (Table 1).

Table 1

Design Categories and Number of Items

| Category | Items in Baldwin et al. (2018) | Items in current study |
|---|---|---|
| Overview | 5 | 11 |
| Content presentation | 3 | 6 |
| Interaction and communication | 4 | 7 |
| Assessment and evaluation | 6 | 7 |
| Learner support | 4 | 7 |
| Total | 22 | 38 |

Data were collected in the Spring 2020 semester with the use of an online Qualtrics survey that was housed on a protected server. All subscribers to e-mail distribution lists of two professional associations received an invitation to participate in the study. Members of these organizations work with information or instructional technologies in industry or higher education as instructors, instructional designers, or in different areas of instructional support. Therefore, these individuals have varied experience in designing and supporting online courses. Additionally, invitations to participate in the study were posted to groups of these organizations on one social networking site. In order to increase the response rate, one reminder was sent or posted after two weeks. All responses were voluntary and anonymous, and no incentives were provided to participants.

A total of 222 respondents completed the survey including 101 online instructors and 121 instructional designers who were involved with online course design. Most of the respondents identified as female (n = 158; 71%). The average age of respondents was 48 years (SD = 10.74) and the average years of experience was 10.54 (SD = 6.93). Nearly half of respondents (n = 107; 48%) rated their level of expertise as expert, 29% identified as proficient, 15% as competent, and 5% identified as advanced beginner. Only one individual was a novice.

Descriptive statistics were reported at both the item level and the category level. After data collection, three models were evaluated: (a) Model 1, a one-factor model; (b) Model 2, a five-factor model; and (c) Model 3, a five-factor higher-order model. The five-factor and higher-order models align with the development of the OCDE. Model 1 specified a unidimensional construct, which would support the use of a total score instead of subscale scores. This model was examined to determine whether the covariance among items was due to a single common factor. Model 2 specified a correlated five-factor model with 11 items loading on the overview factor (items 1-11), six items loading on the content presentation factor (items 13-18), and seven items loading on each of the remaining factors: interaction and communication (items 20-26), assessment and evaluation (items 28-34), and learner support (items 36-42). Model 3 specified the same factor structure as Model 2 but added a second-order OCDE factor. Correlated error variances were added to modify a model only if the re-specification agreed with theory. To determine the best model, both statistical criteria and information about the parameter estimates were used. Because the models are not nested and statistical tests of model differences (e.g., DIFFTEST or Akaike’s Information Criterion) were not available with weighted least squares mean and variance adjusted (WLSMV) estimation, no statistical tests of differences were conducted.

All models were tested with Mplus 7.11 (Muthén & Muthén, 2012) using the WLSMV estimator, which was designed for ordinal data (Li, 2016), and a polychoric correlation matrix.
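The article does not reproduce the authors’ Mplus input. As an illustration only, the sketch below assembles Mplus-style MODEL statements for the three competing models from the item-to-factor mapping described above. The factor names (OVER, CONT, INTER, ASSESS, SUPP, OCDE) and item names (Q1 to Q42) are hypothetical labels, and the VARIABLE and ANALYSIS commands (declaring the items as categorical and requesting WLSMV) are omitted. By default Mplus fixes the first loading of each factor to 1 and correlates first-order factors, which matches the identification and Model 2 specification described in the text.

```python
import textwrap

# Hypothetical item-to-factor mapping following Table 2.
# Open-ended items Q12, Q19, Q27, and Q35 are excluded.
FACTORS = {
    "OVER": list(range(1, 12)),     # overview, items 1-11
    "CONT": list(range(13, 19)),    # content presentation, items 13-18
    "INTER": list(range(20, 27)),   # interaction and communication, items 20-26
    "ASSESS": list(range(28, 35)),  # assessment and evaluation, items 28-34
    "SUPP": list(range(36, 43)),    # learner support, items 36-42
}

# Correlated error pairs used in Models 2-mod and 3-mod (see the Table 3 note).
CORRELATED_ERRORS = [(8, 9), (9, 10), (21, 22), (36, 37)]


def model_statements(model: str, modified: bool = False) -> str:
    """Return MODEL statements for 'one' (Model 1), 'five' (Model 2), or 'higher' (Model 3)."""
    if model == "one":
        all_items = [i for items in FACTORS.values() for i in items]
        statements = ["OCDE BY " + " ".join(f"Q{i}" for i in all_items) + ";"]
    elif model in ("five", "higher"):
        # One BY statement per first-order factor.
        statements = [f"{name} BY " + " ".join(f"Q{i}" for i in items) + ";"
                      for name, items in FACTORS.items()]
        if model == "higher":
            # Second-order OCDE factor measured by the five first-order factors.
            statements.append("OCDE BY " + " ".join(FACTORS) + ";")
    else:
        raise ValueError(f"unknown model: {model!r}")
    if modified:
        # WITH between two indicators frees their residual covariance.
        statements.extend(f"Q{a} WITH Q{b};" for a, b in CORRELATED_ERRORS)
    # Wrap long statements at 80 characters as a precaution about input line length.
    lines = []
    for statement in statements:
        lines.extend(textwrap.wrap(statement, width=80, break_long_words=False))
    return "\n".join(lines)


print(model_statements("higher", modified=True))
```

Keeping the mapping in one dictionary means that the one-factor, five-factor, and higher-order specifications are all generated from the same item assignments, so the three models differ only in their structural statements.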

The pattern coefficient for the first indicator of each latent variable was fixed to 1.00. Indices of model-data fit considered were the chi-square test, root mean square error of approximation (RMSEA), standardized root mean square residual (SRMR), and comparative fit index (CFI). For RMSEA, Browne and Cudeck (1992) suggested that values greater than .10 might indicate a lack of fit. CFI values greater than .90, which indicate that the proposed model improves on the fit of the baseline (independence) model by more than 90%, served as an indicator of adequate fit (Kline, 2016). Perfect model fit is indicated by SRMR = 0, and values greater than .10 may indicate poor fit (Kline, 2016). All models were overidentified, indicating that the data contained more than enough information to estimate the model parameters.
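The RMSEA point estimate and the CFI are both functions of chi-square statistics. The sketch below uses the standard textbook formulas, which are not given in the article. Applied to the χ² and df values in Table 3 with N = 222, the RMSEA function reproduces the reported RMSEA values to three decimals when N (rather than N - 1) is used in the denominator. The CFI function requires the baseline model’s χ² and df, which the article does not report, so it is not applied to the study’s data here.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * n)).

    Uses N in the denominator; some texts use N - 1 instead.
    """
    return math.sqrt(max(chi2 - df, 0.0) / (df * n))


def cfi(chi2_model: float, df_model: int, chi2_base: float, df_base: int) -> float:
    """Comparative fit index: relative improvement over the baseline (independence) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, d_model)
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base


# Chi-square and df values from Table 3; N = 222 respondents.
TABLE_3 = {
    "Model 1": (1667.37, 665),
    "Model 2": (1150.18, 655),
    "Model 3": (1135.84, 660),
    "Model 2-mod": (1012.11, 651),
    "Model 3-mod": (1002.85, 656),
}

for name, (chi2, df) in TABLE_3.items():
    print(f"{name}: RMSEA = {rmsea(chi2, df, 222):.3f}")
```

Because the χ² values here come from WLSMV estimation, they are adjusted statistics, and the formulas are applied to them as reported.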

After the best-fitting model was determined, a MIMIC model was estimated to test the a priori hypothesis that years of experience and level of expertise would have positive relationships with the latent variables of the OCDE.
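A MIMIC model joins a measurement part, in which latent OCDE factors are measured by the items, with a structural part, in which observed covariates (here, years of experience and self-reported expertise) predict the latent variable. The sketch below only simulates data from a simplified single-factor version of that structure, using NumPy and hypothetical parameter values. It does not estimate the MIMIC model, which requires SEM software. Regressing a simple composite score on the covariates recovers each covariate effect scaled by the average loading. This illustrates what the latent-variable paths represent.

```python
import numpy as np


def simulate_mimic(n, loadings, gammas, rng):
    """Simulate data from a single-factor MIMIC model.

    Structural part:  eta = x @ gammas + zeta            (covariates -> latent factor)
    Measurement part: y_j = loadings[j] * eta + eps_j    (latent factor -> indicators)
    """
    gammas = np.asarray(gammas, dtype=float)
    loadings = np.asarray(loadings, dtype=float)
    x = rng.normal(size=(n, gammas.size))      # standardized observed covariates
    zeta = rng.normal(size=n)                  # latent disturbance
    eta = x @ gammas + zeta                    # latent variable
    eps = rng.normal(size=(n, loadings.size))  # measurement errors
    y = np.outer(eta, loadings) + eps          # continuous indicators
    return x, y


def composite_slopes(x, y):
    """OLS slopes of the mean indicator score on the covariates (intercept dropped)."""
    design = np.column_stack([np.ones(len(x)), x])
    coef, *_ = np.linalg.lstsq(design, y.mean(axis=1), rcond=None)
    return coef[1:]


rng = np.random.default_rng(2020)
# Hypothetical values: covariate 0 ("experience") has no effect,
# covariate 1 ("expertise") has a positive effect on the latent factor.
x, y = simulate_mimic(5000, loadings=[0.8] * 7, gammas=[0.0, 0.4], rng=rng)
print(composite_slopes(x, y))  # expected near mean loading * gamma, i.e., about [0, 0.32]
```

In the simulation the composite slope equals the average loading times γ in expectation. A full MIMIC model estimates γ directly on the latent scale instead of on a composite.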

In this section we review the data screening process, the descriptive statistics from the OCDE implementation, the validation of OCDE, and examination of the relationship between OCDE and variables of years of experience and level of expertise.

Initially, 238 individuals responded to the survey invitation; however, 16 cases had one-third or more of their data missing, and these 16 cases were deleted from the data set. Missing values for any variable did not exceed 1.4% (i.e., three respondents). Little’s (1988) Missing Completely at Random (MCAR) test was not statistically significant, χ² = 113.76, df = 142, p = .961, suggesting that values could be treated as missing completely at random. These missing values were estimated using the expectation-maximization (EM) algorithm. All values were within range, and no univariate or multivariate outliers were detected. Because the data were ordinal, WLSMV estimation was used to estimate all model parameters. WLSMV is specifically designed for ordinal data (e.g., Likert-type data) and makes no distributional assumptions about the observed variables (Li, 2016). Variance inflation factors for all items were below 5.0, suggesting that multicollinearity was not problematic.
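Two of the screening steps above are simple enough to sketch: deleting cases with one-third or more missing data, and checking variance inflation factors against the 5.0 cutoff. The minimal NumPy version below assumes missing responses are coded as NaN. It does not implement Little’s MCAR test or EM imputation; the VIF function expects a complete data matrix, such as one produced after imputation. It relies on the identity that the VIF of item j equals the j-th diagonal element of the inverse item correlation matrix, which is equivalent to 1 / (1 - R²) from regressing item j on all other items.

```python
import numpy as np


def drop_sparse_cases(data, max_missing=1 / 3):
    """Keep rows whose share of missing (NaN) values is below max_missing.

    Rows with one-third or more of their values missing are removed.
    """
    data = np.asarray(data, dtype=float)
    missing_share = np.isnan(data).mean(axis=1)
    return data[missing_share < max_missing]


def variance_inflation_factors(data):
    """VIF for each column of a complete data matrix (no NaN values).

    VIF_j = [R^-1]_jj, where R is the item correlation matrix; this equals
    1 / (1 - R_j^2) from regressing item j on all other items.
    """
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    return np.diag(np.linalg.inv(corr))


# Usage: flag items whose VIF reaches the 5.0 cutoff used in the text.
rng = np.random.default_rng(0)
complete = rng.normal(size=(300, 5))
vifs = variance_inflation_factors(complete)
print([j for j, v in enumerate(vifs) if v >= 5.0])  # indices of problematic items
```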

The means and standard deviations for all items are reported in Table 2. All means exceeded 4.0 (on a 5-point scale; 1 = Never, 2 = Rarely, 3 = Sometimes, 4 = Often, and 5 = Always) except for two items (items 24 and 33). Reliability coefficients, as estimated using Cronbach’s alpha, were (a) .91 for all 38 items; (b) .82 for overview; (c) .66 for content presentation; (d) .83 for interaction and communication; (e) .73 for assessment and evaluation; and (f) .76 for learner support. Reliability coefficients greater than .70 are generally acceptable, values greater than .80 are adequate, and values greater than .90 are good (Kline, 2016; Nunnally & Bernstein, 1994). Given these reliability coefficients, making inferences about individual respondents’ performance on the subdomains is not recommended. The correlation coefficients among the items ranged from .05 to .62. The correlation matrix for the items is available upon request.
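For k items, Cronbach’s alpha is α = k / (k - 1) × (1 - Σσ²ᵢ / σ²ₓ), where σ²ᵢ are the item variances and σ²ₓ is the variance of the total score. The minimal NumPy sketch below computes alpha from a respondents-by-items matrix with no missing values, treating the Likert responses as numeric scores. It then labels the coefficients reported above using the cutoffs cited in the paragraph.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a respondents-by-items score matrix with no missing values."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1)      # sample variance of each item
    total_variance = items.sum(axis=1).var(ddof=1)  # sample variance of the total score
    return k / (k - 1) * (1.0 - item_variances.sum() / total_variance)


def describe_reliability(alpha):
    """Label alpha with the cutoffs cited in the text (Kline, 2016; Nunnally & Bernstein, 1994)."""
    if alpha > 0.90:
        return "good"
    if alpha > 0.80:
        return "adequate"
    if alpha > 0.70:
        return "acceptable"
    return "below .70"


# Reliability coefficients reported in the text.
reported = {
    "All 38 items": .91,
    "Overview": .82,
    "Content presentation": .66,
    "Interaction and communication": .83,
    "Assessment and evaluation": .73,
    "Learner support": .76,
}
for scale, a in reported.items():
    print(f"{scale}: {a:.2f} ({describe_reliability(a)})")
```

Under these cutoffs, content presentation (.66) is the only subscale that falls below the .70 threshold.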

Table 2

Means and Standard Deviations for Survey Items

| Category and item | M | SD |
|---|---|---|
| Overview (Cronbach’s alpha = .82) | | |
| 1. A student orientation (e.g., video overview of course elements) | 4.22 | 1.125 |
| 2. Major course goals | 4.82 | 0.579 |
| 3. Expectations regarding the quality of students’ communication (e.g., netiquette) | 4.50 | 0.886 |
| 4. Expectations regarding student participation (e.g., timing, frequency) | 4.64 | 0.822 |
| 5. Expectations about the quality of students’ assignments (e.g., good examples) | 4.22 | 0.994 |
| 6. The instructor’s contact information | 4.86 | 0.573 |
| 7. The instructor’s availability for office hours | 4.50 | 1.084 |
| 8. A biography of the instructor | 4.23 | 1.140 |
| 9. The instructor’s response time to e-mails and/or phone calls | 4.31 | 1.145 |
| 10. The instructor’s turn-around time on feedback to submitted assignments | 4.15 | 1.207 |
| 11. Policies about general expectations of students (e.g., late assignments, academic honesty) | 4.77 | 0.697 |
| Mean for overview category | 4.47 | 0.582 |
| Content presentation (Cronbach’s alpha = .66) | | |
| 13. A variety of instructional materials (e.g., textbook readings, video recorded lectures, web resources) | 4.68 | 0.524 |
| 14. Accommodations for learners with disabilities (e.g., transcripts, closed captioning) | 4.22 | 1.096 |
| 15. Course information that is chunked into modules or units | 4.84 | 0.527 |
| 16. Clearly written instructions | 4.81 | 0.485 |
| 17. Course activities that promote achievement of objectives | 4.77 | 0.589 |
| 18. Course objectives that are clearly defined (e.g., measurable) | 4.73 | 0.650 |
| Mean for content presentation category | 4.67 | 0.413 |
| Interaction and communication (Cronbach’s alpha = .83) | | |
| 20. Opportunities for students to interact with the instructor | 4.55 | 0.728 |
| 21. Required student-to-student interaction (e.g., graded activities) | 4.15 | 0.981 |
| 22. Frequently occurring student-to-student interactions (e.g., weekly) | 4.04 | 0.988 |
| 23. Activities that are used to build community (e.g., icebreaker activities, introduction activities) | 4.08 | 1.024 |
| 24. Collaborative activities that support student learning (e.g., small group assignments) | 3.73 | 1.025 |
| 25. Technology that is used to promote learner engagement (e.g., synchronous tools, discussion forums) | 4.53 | 0.747 |
| 26. Technologies that facilitate active learning (e.g., student-created artifacts) | 4.42 | 0.856 |
| Mean for interaction and communication category | 4.21 | 0.640 |
| Assessment and evaluation (Cronbach’s alpha = .73) | | |
| 28. Assessments that align with learning objectives | 4.82 | 0.527 |
| 29. Formative assessments to provide feedback on learner progress (e.g., discussions, practice activities) | 4.61 | 0.675 |
| 30. Summative assessments to measure student learning (e.g., final exam, final project) | 4.64 | 0.690 |
| 31. Assessments occurring throughout the course | 4.65 | 0.647 |
| 32. Rubrics for graded assignments | 4.45 | 0.853 |
| 33. Self-assessment options for learners (e.g., self-check quizzes) | 3.55 | 1.048 |
| 34. Opportunity for learners to give feedback on course improvement | 4.37 | 0.867 |
| Mean for assessment and evaluation category | 4.44 | 0.480 |
| Learner support (Cronbach’s alpha = .76) | | |
| 36. Easy course navigation (e.g., menus) | 4.77 | 0.589 |
| 37. Consistent course structure (e.g., design, look) | 4.78 | 0.555 |
| 38. Easily viewable media (e.g., streamed videos, optimized graphics) | 4.63 | 0.675 |
| 39. Media files accessible on different platforms and devices (e.g., tablets, smartphones) | 4.27 | 0.920 |
| 40. Minimum technology requirements (e.g., operating systems) | 4.25 | 1.148 |
| 41. Resources for accessing technology (e.g., guides, tutorials) | 4.27 | 0.906 |
| 42. Links to institutional support services (e.g., help desk, library, tutors) | 4.59 | 0.811 |
| Mean for learner support category | 4.51 | 0.545 |

*Note.* Q12, Q19, Q27, and Q35 were open-ended questions and were not included in Table 2.

The results of the CFA are shown in Table 3. In all of the analyses, the chi-square goodness-of-fit statistics were statistically significant, suggesting that none of the models fit perfectly. The other goodness-of-fit statistics suggested a reasonable fit for all models except Model 1. For Model 1, the Comparative Fit Index (CFI = .825) and Tucker-Lewis Index (TLI = .815) fell below .90, the Standardized Root Mean Square Residual (SRMR = .129) exceeded .10, and the Root Mean Square Error of Approximation (RMSEA = .082) was the highest of all models, suggesting that a one-factor model is not supported by the data. Although Models 2 and 3 had reasonable fit, examination of the residual correlation matrix suggested some local misfit, so both models were modified to improve fit.

The modifications to Models 2 and 3 allowed four pairs of error variances to correlate, and each pair involved items on the same factor. For the overview factor, the error variance for item 9 (instructor’s response time to e-mails and/or phone calls) was allowed to correlate with item 10 (instructor’s turnaround time for feedback on submitted assignments), and the error variance for item 8 (a biography of the instructor) was allowed to correlate with item 9 (instructor’s response time to e-mails and/or phone calls). In the interaction and communication factor, the error variances of item 21 (required student-to-student interaction, such as graded activities) and item 22 (frequently occurring student-to-student interactions, such as weekly) were correlated. In the learner support factor, the error variance for item 36 (easy course navigation, such as menus) was correlated with that of item 37 (consistent course structure, such as design and look). The goodness-of-fit statistics are reported in Table 3. For both modified models, the chi-square goodness-of-fit statistics were statistically significant, but the other fit statistics suggested an acceptable fit of the observed covariance to the model-implied covariance.

Table 3

Goodness-of-Fit Statistics

| Model | χ² | df | CFI | TLI | RMSEA | RMSEA 90% CI | SRMR |
|---|---|---|---|---|---|---|---|
| Model 1 | 1667.37 | 665 | .825 | .815 | .082 | [.077, .087] | .129 |
| Model 2 | 1150.18 | 655 | .914 | .907 | .058 | [.053, .064] | .106 |
| Model 3 | 1135.84 | 660 | .917 | .911 | .057 | [.051, .063] | .106 |
| Modified models | | | | | | | |
| Model 2-mod | 1012.11 | 651 | .940 | .930 | .050 | [.044, .056] | .097 |
| Model 3-mod | 1002.85 | 656 | .939 | .935 | .049 | [.043, .055] | .098 |

*Note.* Model 1 = one factor; Model 2 = five factors; Model 3 = higher-order factor; Model 2-mod = five factors with correlated error variances (items 8 with 9, 9 with 10, 21 with 22, and 36 with 37); Model 3-mod = higher-order factor with correlated error variances (items 8 with 9, 9 with 10, 21 with 22, and 36 with 37).

The correlations among the five factors (reported in Table 4) ranged from .48 to .85, suggesting shared variance among the factors. Given the size of the correlation coefficients and the large degree of overlap among the factors, the modified Model 3 appears to be the best model and is discussed in greater detail.
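One reason strongly correlated first-order factors favor a higher-order model is that the second-order factor can account for those correlations. Assume standardized second-order loadings γ and uncorrelated first-order disturbances. Under those assumptions, the model implies corr(Fᵢ, Fⱼ) = γᵢγⱼ. The sketch below computes that implied matrix and runs a rough consistency check. The second-order paths reported for the modified Model 3 (.67 to .93) bound every implied correlation between .67² ≈ .45 and .93² ≈ .86, and the Table 4 correlations (.48 to .85) fall within that band. The check concerns only the range of the correlations and does not test fit.

```python
import numpy as np


def implied_factor_correlations(second_order_loadings):
    """Correlations among first-order factors implied by a standardized higher-order model.

    Assumes standardized second-order loadings and uncorrelated first-order
    disturbances, so corr(F_i, F_j) = gamma_i * gamma_j for i != j.
    """
    g = np.asarray(second_order_loadings, dtype=float)
    r = np.outer(g, g)
    np.fill_diagonal(r, 1.0)
    return r


# Lower-triangle factor correlations from Table 4.
observed = [.81, .68, .68, .85, .65, .65, .60, .68, .48, .60]

# Bounds implied by second-order paths between .67 and .93.
low, high = .67 ** 2, .93 ** 2
print(f"implied range: {low:.3f} to {high:.3f}")
print(all(low <= r <= high for r in observed))

# Usage with hypothetical loadings for three factors.
print(implied_factor_correlations([.9, .8, .7]))
```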

Table 4

Correlation Coefficients Among the Five Factors of the OCDE

| Factor | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1. Overview | | | | |
| 2. Content presentation | .81 | | | |
| 3. Interaction and communication | .68 | .68 | | |
| 4. Assessment and evaluation | .85 | .65 | .65 | |
| 5. Learner support | .60 | .68 | .48 | .60 |

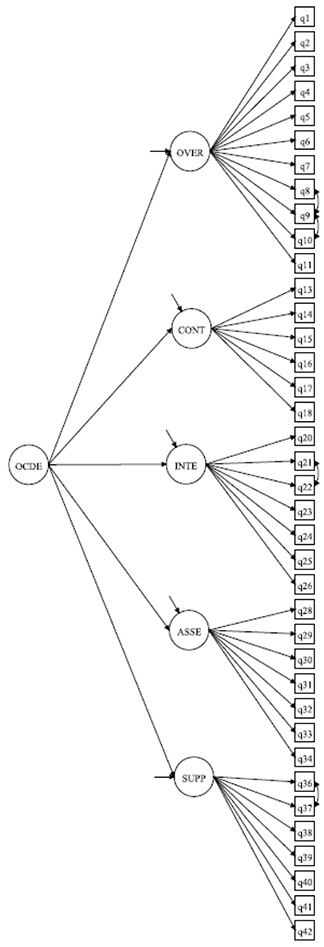

The unstandardized and standardized pattern coefficients for the modified Model 3 are reported in Table 5. All freely estimated coefficients were statistically significant (p < .001). Nineteen of the 38 standardized item coefficients fell below .70. Because the squared standardized coefficient is the proportion of an item's variance explained by its factor (.70² = .49), more than half of the variance in each of these items is unaccounted for by the model. The path coefficients between the first-order factors and the second-order factor ranged from .67 to .93 and were statistically significant. The recommended model is shown in Figure 2. Note that the covariances among the factors are not included in the figure.
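The variance claim follows from squaring the standardized coefficients in Table 5. For an item that loads on a single factor, the squared standardized loading is the share of that item's variance explained by the factor. The sketch below copies the first-order standardized coefficients from Table 5, flags those below .70, and prints the corresponding explained variance. The dictionary layout and the helper name `explained_variance` are illustrative only.

```python
# First-order standardized coefficients copied from Table 5 (modified Model 3).
STANDARDIZED = {
    "Overview": {"Q1": .47, "Q2": .83, "Q3": .75, "Q4": .78, "Q5": .61, "Q6": .91,
                 "Q7": .63, "Q8": .58, "Q9": .69, "Q10": .64, "Q11": .84},
    "Content presentation": {"Q13": .51, "Q14": .45, "Q15": .67, "Q16": .75,
                             "Q17": .94, "Q18": .83},
    "Interaction and communication": {"Q20": .79, "Q21": .75, "Q22": .60, "Q23": .82,
                                      "Q24": .59, "Q25": .75, "Q26": .67},
    "Assessment and evaluation": {"Q28": .95, "Q29": .68, "Q30": .71, "Q31": .81,
                                  "Q32": .68, "Q33": .38, "Q34": .50},
    "Learner support": {"Q36": .85, "Q37": .66, "Q38": .82, "Q39": .65,
                        "Q40": .73, "Q41": .66, "Q42": .85},
}

THRESHOLD = 0.70


def explained_variance(loading: float) -> float:
    """Share of an item's variance explained by its single factor."""
    return loading ** 2


# Collect every item whose standardized coefficient is below the threshold.
below = [
    (factor, item, loading)
    for factor, items in STANDARDIZED.items()
    for item, loading in items.items()
    if loading < THRESHOLD
]
total = sum(len(items) for items in STANDARDIZED.values())

print(f"{len(below)} of {total} items have standardized coefficients below {THRESHOLD:.2f}")
for factor, item, loading in below:
    print(f"  {factor} {item}: {loading:.2f} -> R^2 = {explained_variance(loading):.2f}")
```

Because a coefficient of exactly .70 explains 49% of item variance, every flagged item leaves more than half of its variance unexplained. The weakest item, Q33 (.38), is explained at only about 14%.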

Table 5

Unstandardized and Standardized Pattern Coefficients and Standard Errors (SE) for the Higher-Order Model

| Factor | Item/factor | Unstandardized coefficient | SE | Standardized coefficient |
|---|---|---|---|---|
| Overview | Q1 | 1.00 | .00 | .47 |
| | Q2 | 1.75 | .28 | .83 |
| | Q3 | 1.60 | .21 | .75 |
| | Q4 | 1.65 | .22 | .78 |
| | Q5 | 1.29 | .23 | .61 |
| | Q6 | 1.93 | .29 | .91 |
| | Q7 | 1.34 | .24 | .63 |
| | Q8 | 1.23 | .20 | .58 |
| | Q9 | 1.45 | .24 | .69 |
| | Q10 | 1.35 | .22 | .64 |
| | Q11 | 1.78 | .27 | .84 |
| Content presentation | Q13 | 1.00 | .00 | .51 |
| | Q14 | .88 | .16 | .45 |
| | Q15 | 1.32 | .18 | .67 |
| | Q16 | 1.47 | .21 | .75 |
| | Q17 | 1.84 | .27 | .94 |
| | Q18 | 1.62 | .23 | .83 |
| Interaction and communication | Q20 | 1.00 | .00 | .79 |
| | Q21 | .95 | .07 | .75 |
| | Q22 | .75 | .07 | .60 |
| | Q23 | 1.03 | .08 | .82 |
| | Q24 | .75 | .09 | .59 |
| | Q25 | .95 | .08 | .75 |
| | Q26 | .84 | .08 | .67 |
| Assessment and evaluation | Q28 | 1.00 | .00 | .95 |
| | Q29 | .72 | .06 | .68 |
| | Q30 | .75 | .06 | .71 |
| | Q31 | .86 | .06 | .81 |
| | Q32 | .72 | .05 | .68 |
| | Q33 | .40 | .06 | .38 |
| | Q34 | .53 | .07 | .50 |
| Learner support | Q36 | 1.00 | .00 | .85 |
| | Q37 | .78 | .09 | .66 |
| | Q38 | .97 | .09 | .82 |
| | Q39 | .77 | .08 | .65 |
| | Q40 | .86 | .08 | .73 |
| | Q41 | .78 | .09 | .66 |
| | Q42 | 1.00 | .10 | .85 |
| OCDE higher-order | OVER | 1.00 | .00 | .90 |
| | CONT | 1.12 | .20 | .93 |
| | INTE | 1.35 | .20 | .73 |
| | ASSE | 2.06 | .29 | .93 |
| | SUPP | 1.34 | .19 | .67 |

*Note.* OVER = overview, CONT = content presentation, INTE = interaction and communication, ASSE = assessment and evaluation, and SUPP = learner support. Coefficients of 1.00 with SE = .00 are fixed reference (marker) loadings.

Figure 2

Best Fitting Higher-Order Model

A multiple indicators, multiple causes (MIMIC) model was estimated to examine the relationship between the OCDE higher-order latent factor and two predictors: years of experience and level of expertise. Level of expertise was a statistically significant predictor of OCDE (unstandardized coefficient = .09, SE = .04, standardized coefficient = .23), whereas years of experience was not (unstandardized coefficient < .01, SE < .01, standardized coefficient < .01). This suggests that for a one-unit increase in self-reported level of expertise, there was about a .23 standard deviation increase in the OCDE score.
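The significance of an individual SEM path is typically judged with a Wald test, z = estimate / SE, referred to the standard normal distribution. The sketch below applies this test to the rounded expertise coefficient reported above (.09, SE = .04). Because the published values are rounded, the result only approximates the z and p that the estimation software computed from unrounded estimates. The function names are illustrative.

```python
import math


def wald_z(estimate: float, se: float) -> float:
    """Ratio of a parameter estimate to its standard error."""
    return estimate / se


def two_tailed_p(z: float) -> float:
    """P(|Z| >= |z|) for a standard normal Z, via the complementary error function."""
    return math.erfc(abs(z) / math.sqrt(2))


# Level of expertise -> OCDE: rounded unstandardized estimate and SE from the text.
z = wald_z(0.09, 0.04)
p = two_tailed_p(z)
print(f"z = {z:.2f}, two-tailed p = {p:.3f}")
```

With these rounded inputs, z = 2.25 and the two-tailed p is about .024. This is consistent with a coefficient that is significant at the .05 level but not at the .001 level used for the loadings in Table 5.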

In this section, we discuss instructors’ and instructional designers’ frequency of use of the design elements, validation of the OCDE instrument, and the significance of expertise but not experience in course design.

In this implementation with 222 respondents, the mean frequency-of-use rating exceeded 4.0 (often) for all but two OCDE items. With 36 of the 38 items rated above 4.0, most design elements in the OCDE appear to be frequently used in online courses. The two items rated below 4.0 were collaborative activities that support student learning (M = 3.73) and self-assessment options for learners (M = 3.55). This suggests that collaborative activities and self-assessment options may be included less often in online courses than the other elements in the OCDE instrument, even though research and the existing literature indicate that these elements are important for student learning. Martin and Bolliger (2018) pointed out the importance of collaboration using online communication tools to engage learners in online learning environments. Capdeferro and Romero (2012) recognized that online learners were frustrated with collaborative learning experiences and provided a list of recommendations for distance education stakeholders to improve learners’ experiences in computer-supported collaborative learning environments. Castle and McGuire (2010) discussed student self-assessment in online, blended, and face-to-face courses. Instructors may need additional guidance or professional development on how to include these two elements in the design and development of high-quality online courses.

Evidence from Models 2 and 3 in this study supports inferences drawn from OCDE scores. The total score demonstrates good reliability and factor structure. However, some subscale reliability coefficients were low, particularly for content presentation (.66). High correlations were also found among the subscales, especially between overview and content presentation (.81) and between overview and assessment and evaluation (.85). The OCDE instrument is therefore recommended for use as a whole, and caution is advised when using the individual subscales of overview, content presentation, interaction and communication, assessment and evaluation, and learner support.
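This section does not state which reliability coefficient was computed. Assuming it is Cronbach's coefficient alpha, a common choice for Likert-type subscales, the sketch below shows how such a value is obtained from item and total-score variances: α = k/(k − 1) · (1 − Σσᵢ²/σ²_total), where k is the number of items. The helper `cronbach_alpha` is illustrative, and the ratings matrix is hypothetical rather than study data.

```python
from statistics import variance


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Coefficient alpha for a respondents-by-items score matrix."""
    k = len(rows[0])  # number of items in the subscale
    if k < 2:
        raise ValueError("alpha needs at least two items")
    columns = list(zip(*rows))  # one tuple of scores per item
    sum_item_var = sum(variance(col) for col in columns)  # sample variances
    total_var = variance([sum(row) for row in rows])  # variance of summed scores
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


# Hypothetical 1-5 frequency ratings (4 respondents x 3 items); not study data.
ratings = [
    [5, 4, 5],
    [4, 4, 4],
    [3, 2, 3],
    [2, 2, 1],
]
print(f"alpha = {cronbach_alpha(ratings):.3f}")
```

Alpha is high for this toy matrix (about .954) because the three hypothetical items rise and fall together across respondents. When items covary more weakly relative to their individual variances, alpha drops toward values like the .66 reported for content presentation.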

The overall OCDE score was related to self-reported level of expertise but not to years of experience. Participants rated their perceived level of expertise as expert, proficient, competent, advanced beginner, or novice, and this level of expertise in online course design was a statistically significant predictor of the OCDE score. Perez et al. (1995) stated that, compared with novice designers, expert designers use more design principles and access a variety of knowledge sources. A previous study (Martin et al., 2019) suggested that experts are not only those with a wealth of experience from years of teaching online but also those who have expertise and fluency.

While expertise can be developed with experience over time, this is not the only way to acquire it. Research on online learning strategies that focuses on instructors’ years of experience might help us understand whether online teaching experience gained over time makes one an expert instructor. Shanteau (1992) recommended identifying experts through peer recommendations rather than by their years of teaching experience. Some of the characteristics of expert online instructors identified by Kumar et al. (2019) include (a) possessing a wide range of strategies, (b) knowing how to adapt materials for an online format, (c) choosing content and activities carefully, (d) monitoring activities continuously, and (e) tweaking and evaluating a course.

This study has some limitations. The elements included in this study are not an exhaustive list for the design and development of high-quality online courses, although the OCDE was developed from a summary of six instruments, prior research, and expert review. The reliability coefficients of some subscales were below .80, suggesting that the research-based instrument is better suited to providing aggregated information to practitioners than to supporting decisions about individual subscales. Also, because the data are self-reported, social desirability may have influenced some participants’ responses. In addition, the OCDE was implemented with a relatively small sample of instructors and instructional designers, most of whom were based in the United States.

The goal of the study was to develop and validate an instrument to address critical elements in online course design. Results show that the OCDE, with its five constructs (overview, content presentation, interaction and communication, assessment and evaluation, and learner support), is a valid and reliable instrument. When the relationships of the latent variables to years of experience and self-reported level of expertise were examined, results indicated that the level of expertise in online course design was a statistically significant predictor of the OCDE score. Although the OCDE instrument was implemented in higher education, practitioners and researchers may adapt and use the instrument for design and research in other settings.

Researchers should continue to examine design elements that are not included in the OCDE and to implement the instrument in different settings, such as K-12 schools, community colleges, and other instructional contexts. This study may be replicated with a larger sample or with participants who teach or support faculty in a variety of disciplines. Using the instrument in other countries, particularly where online teaching and learning is still a novelty or is not as established as in the United States, would be worthwhile.

The OCDE can support professional development in online teaching and course design for instructors and instructional designers, particularly novices and beginners. Instructional designers can offer training for instructors using the OCDE as a checklist. Instructors who are interested in teaching online may also use the instrument to guide their course design.

We would like to thank the members of the review panel who generously volunteered their time and expertise to provide us with valuable feedback that led to the improvement of the OCDE instrument. Members of the expert panel were: Drs. Lynn Ahlgrim-Delzell, Drew Polly, Xiaoxia Newton, and Enoch Park at the University of North Carolina-Charlotte.

Allen, I. E., & Seaman, J. (2017). Digital learning compass: Distance education enrollment report 2017. https://onlinelearningsurvey.com/reports/digtiallearningcompassenrollment2017.pdf

Anderson, K. (2017). Have we reached an inflection point in online collaboration? From e-mail to social networks, online collaboration has evolved fast—as have users. Research Information, 92, 24.

Baldwin, S., Ching, Y-H., & Hsu, Y-C. (2018). Online course design in higher education: A review of national and statewide evaluation instruments. TechTrends, 62(1), 46-57. https://doi.org/10.1007/s11528-017-0215-z

Blackboard. (2012). Blackboard exemplary course program rubric. https://www.blackboard.com/resources/are-your-courses-exemplary

Bozarth, J., Chapman, D. D., & LaMonica, L. (2004). Preparing for distance learning: Designing an online student orientation course. Journal of Educational Technology & Society, 7(1), 87-106. https://www.jstor.org/stable/jeductechsoci.7.1.87

Browne, M. W., & Cudeck, R. (1992). Alternative ways of assessing model fit. Sociological Methods & Research, 21(2), 230-258. https://doi.org/10.1177/0049124192021002005

California Community Colleges Online Education Initiative. (2016). Course design rubric. http://cvc.edu/wp-content/uploads/2016/11/OEI_CourseDesignRubric Nov2016-3.pdf

California State University. (2015). QOLT evaluation rubric. https://cal.sdsu.edu/_resources/docs/QOLT%20Instrument.pdf

Capdeferro, N., & Romero, M. (2012). Are online learners frustrated with collaborative learning experiences? International Review of Research in Open and Distributed Learning, 13(2), 26-44. https://doi.org/10.19173/irrodl.v13i2.1127

Castle, S. R., & McGuire, C. J. (2010). An analysis of student self-assessment of online, blended, and face-to-face learning environments: Implications for sustainable education delivery. International Education Studies, 3(3), 36-40. https://doi.org/10.5539/ies.v3n3p36

Chen, S.-J. (2007). Instructional design strategies for intensive online courses: An objectivist-constructivist blended approach. Journal of Interactive Online Learning, 6(1), 72-86. http://www.ncolr.org/jiol/issues/pdf/6.1.6.pdf

Czerkawski, B. C., & Lyman, E. W. III. (2016). An instructional design framework for fostering student engagement in online learning environments. TechTrends, 60(6), 532-539. https://doi.org/10.1007/s11528-016-0110-z

Dell, C. A., Dell, T. F., & Blackwell, T. L. (2015). Applying universal design for learning in online courses: Pedagogical and practical considerations. Journal of Educators Online, 12(2), 166-192. https://doi.org/10.9743/jeo.2015.2.1

Dick, W. (1996). The Dick and Carey model: Will it survive the decade? Educational Technology Research and Development, 44(3), 55-63. https://doi.org/10.1007/BF02300425

Dietz-Uhler, B., Fisher, A., & Han, A. (2007). Designing online courses to promote student retention. Journal of Educational Technology Systems, 36(1), 105-112. https://doi.org/10.2190/ET.36.1.g

Fathema, N., Shannon, D., & Ross, M. (2015). Expanding the technology acceptance model (TAM) to examine faculty use of learning management systems (LMSs) in higher education institutions. Journal of Online Learning & Teaching, 11(2), 210-232. https://jolt.merlot.org/Vol11no2/Fathema_0615.pdf

Gaytan, J., & McEwen, B. C. (2007). Effective online instructional and assessment strategies. American Journal of Distance Education, 21(3), 117-132. https://doi.org/10.1080/08923640701341653

Graf, S., Liu, T.-C., & Kinshuk. (2010). Analysis of learners’ navigational behaviour and their learning styles in an online course. Journal of Computer Assisted Learning, 26(2), 116-131. https://doi.org/10.1111/j.1365-2729.2009.00336.x

Han, I., & Shin, W. S. (2016). The use of a mobile learning management system and academic achievement of online students. Computers & Education, 102, 79-89. https://doi.org/10.1016/j.compedu.2016.07.003

Illinois Online Network. (2015). Quality online course initiative (QOCI) rubric. University of Illinois. https://www.uis.edu/ion/resources/qoci/

Jaggars, S. S., & Xu, D. (2016). How do online course design features influence student performance? Computers & Education, 95, 270-284. https://doi.org/10.1016/j.compedu.2016.01.014

Jones, K. R. (2013). Developing and implementing a mandatory online student orientation. Journal of Asynchronous Learning Networks, 17(1), 43-45. https://doi.org/10.24059/olj.v17i1.312

Kline, R. B. (2016). Principles and practice of structural equation modeling (4th ed.). Guilford Press.

Ko, S., & Rossen, S. (2017). Teaching online: A practical guide (4th ed.). Routledge.

Kumar, S., Martin, F., Budhrani, K., & Ritzhaupt, A. (2019). Award-winning faculty online teaching practices: Elements of award-winning courses. Online Learning, 23(4), 160-180. https://doi.org/10.24059/olj.v23i4.2077

Laurillard, D., Charlton, P., Craft, B., Dimakopoulos, D., Ljubojevic, D., Magoulas, G., Masterman, E., Pujadas, R., Whitley, E. A., & Whittlestone, K. (2013). A constructionist learning environment for teachers to model learning designs. Journal of Computer Assisted Learning, 29(1), 15-30. https://doi.org/10.1111/j.1365-2729.2011.00458.x

Le Maistre, C. (1998). What is an expert instructional designer? Evidence of expert performance during formative evaluation. Educational Technology Research and Development, 46(3), 21-36. https://doi.org/10.1007/BF02299759

Li, C.-H. (2016). Confirmatory factor analysis with ordinal data: Comparing robust maximum likelihood and diagonally weighted least squares. Behavior Research Methods, 48(3), 936-949. https://doi.org/10.3758/s13428-015-0619-7

Little, R. J. A. (1988). A test of missing completely at random for multivariate data with missing values. Journal of the American Statistical Association, 83(404), 1198-1202.

Liu, X., Magjuka, R. J., Bonk, C. J., & Lee, S.-H. (2007). Does sense of community matter? An examination of participants’ perceptions of building learning communities in online courses. Quarterly Review of Distance Education, 8(1), 9-24.

Luo, N., Zhang, M., & Qi, D. (2017). Effects of different interactions on students’ sense of community in e-learning environment. Computers & Education, 115, 153-160. https://doi.org/10.1016/j.compedu.2017.08.006

Martin, F., & Bolliger, D. U. (2018). Engagement matters: Student perceptions on the importance of engagement strategies in the online learning environment. Online Learning, 22(1), 205-222. https://doi.org/10.24059/olj.v22i1.1092

Martin, F., Wang, C., & Sadaf, A. (2018). Student perception of helpfulness of facilitation strategies that enhance instructor presence, connectedness, engagement and learning in online courses. The Internet and Higher Education, 37, 52-65. https://doi.org/10.1016/j.iheduc.2018.01.003

Martin, F., Ritzhaupt, A., Kumar, S., & Budhrani, K. (2019). Award-winning faculty online teaching practices: Course design, assessment and evaluation, and facilitation. The Internet and Higher Education, 42, 34-43. https://doi.org/10.1016/j.iheduc.2019.04.001

Moore, M. G. (1989). Three types of interaction [Editorial]. American Journal of Distance Education, 3(2), 1-7. https://doi.org/10.1080/08923648909526659

Muthén, L. K., & Muthén, B. O. (2012). Mplus (Version 7.11) [Computer software]. Muthén & Muthén. https://www.statmodel.com/

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory (3rd ed.). McGraw-Hill.

Online Learning Consortium. (2016). OSCQR course design review. https://s3.amazonaws.com/scorecard-private-uploads/OSCQR+version+3.1.pdf

Perez, R. S., Johnson, J. F., & Emery, C. D. (1995). Instructional design expertise: A cognitive model of design. Instructional Science, 23(5-6), 321-349. https://doi.org/10.1007/BF00896877

Pimmer, C., Mateescu, M., & Grohbiel, U. (2016). Mobile and ubiquitous learning in higher education settings. A systematic review of empirical studies. Computers in Human Behavior, 63, 490-501. https://doi.org/10.1016/j.chb.2016.05.057

Price, J. M., Whitlatch, J., Maier, C. J., Burdi, M., & Peacock, J. (2016). Improving online teaching by using established best classroom teaching practices. Journal of Continuing Education in Nursing, 47(5), 222-227. https://doi.org/10.3928/00220124-20160419-08

Quality Matters. (2020). Specific review standards from the QM Higher Education Rubric (6th ed.). https://www.qualitymatters.org/sites/default/files/PDFs/StandardsfromtheQMHigherEducationRubric.pdf

Rennstich, J. K. (2019). Creative online collaboration: A special challenge for co-creation. Education and Information Technologies, 24(2), 1835-1836. https://doi.org/10.1007/s10639-019-09875-6

Salmon, G. (2013). E-tivities: The key to active online learning. Routledge.

Shackelford, J. L., & Maxwell, M. (2012). Sense of community in graduate online education: Contribution of learner to learner interaction. The International Review of Research in Open and Distributed Learning, 13(4), 228-249. https://doi.org/10.19173/irrodl.v13i4.1339

Shanteau, J. (1992). Competence in experts: The role of task characteristics. Organizational Behavior and Human Decision Processes, 53(2), 252-266. https://doi.org/10.1016/0749-5978(92)90064-E

Ssekakubo, G., Suleman, H., & Marsden, G. (2013). Designing mobile LMS interfaces: Learners’ expectations and experiences. Interactive Technology and Smart Education, 10(2), 147-167. https://doi.org/10.1108/ITSE-12-2012-0031

Stavredes, T., & Herder, T. (2014). A guide to online course design: Strategies for student success. Jossey-Bass.

Stevens, D. D., & Levi, A. J. (2013). Introduction to rubrics: An assessment tool to save grading time, convey effective feedback, and promote student learning (2nd ed.). Stylus.

Swan, K. (2001). Virtual interaction: Design factors affecting student satisfaction and perceived learning in asynchronous online courses. Distance Education, 22(2), 306-331. https://doi.org/10.1080/0158791010220208

Vai, M., & Sosulski, K. (2016). Essentials of online course design: A standards-based guide (2nd ed.). Routledge.

Waterhouse, S., & Rogers, R. O. (2004). The importance of policies in e-learning instruction. EDUCAUSE Quarterly, 27(3), 28-39.

Young, S. (2006). Student views of effective online teaching in higher education. American Journal of Distance Education, 20(2), 65-77. https://doi.org/10.1207/s15389286ajde2002_2

Please indicate the frequency with which you include the following design elements in your online courses.

(Scale: 1=Never, 2=Rarely, 3=Sometimes, 4=Often, 5=Always)

Design Matters: Development and Validation of the Online Course Design Elements (OCDE) Instrument by Florence Martin, PhD, Doris U. Bolliger, EdD, and Claudia Flowers, PhD is licensed under a Creative Commons Attribution 4.0 International License.