Volume 22, Number 2

Mingxiao Lu¹, Tianyi Cui², Zhenyu Huang³, Hong Zhao¹, Tao Li¹, and Kai Wang¹

¹College of Computer Science, Nankai University, China; ²Business School, Nankai University, China; ³College of Business Administration, Central Michigan University, USA

Massive open online courses (MOOCs) have attracted much interest from educational researchers and practitioners around the world. Empirical studies of MOOCs have increased in recent years, and most of them used questionnaire surveys and quantitative methods to collect and analyze data. This study explored the research topics and paradigms of questionnaire-based quantitative research on MOOCs by reviewing 126 articles indexed in the Science Citation Index (SCI) and Social Sciences Citation Index (SSCI) databases from January 2015 to August 2020. This comprehensive overview showed that: (a) the top three MOOC research topics were the factors influencing learners’ performance, dropout rates and continuance intention to use MOOCs, and assessing MOOCs; (b) for these three topics, many studies designed questionnaires by adding new factors to, or adjusting, extant theoretical models or survey instruments; and (c) most researchers used descriptive statistics to analyze data, followed by structural equation modeling and by reliability and validity analysis. This study elaborated on the relationship between research topics and the key factors in research models by building factors-goals (F-G) graphs. Finally, we proposed directions and recommendations for future research on MOOCs.

Keywords: MOOC, factors-goals graph (F-G graph), questionnaire-based survey, quantitative analysis, research topics

Massive open online courses (MOOCs), an innovative technology-enhanced learning model, have offered educational opportunities to a vast number of learners and have attracted much interest from educational researchers and practitioners around the world (Zhou, 2016). When COVID-19 broke out in early 2020, media reported that schools in many countries had to close to stop the spread of the pandemic, and MOOCs became a top choice for students studying online from home. Some stakeholders have suggested that MOOCs may have a groundbreaking impact on higher education, potentially making traditional physical universities obsolete (Shirky, 2013). While acknowledging the potential of MOOCs, some educators have expressed concerns about the information-transmission pedagogical models widely applied in MOOCs (Albert et al., 2015; Babori et al., 2019; Veletsianos & Shepherdson, 2016; Zhu et al., 2018). Despite this polarized debate, the number of MOOCs offered and of students enrolled has continued to grow, attracting researchers’ interest. A substantial number of studies and reports have investigated various aspects and effective practices of MOOCs in recent years, some of them empirical.

Questionnaire-based surveys can directly and quickly obtain information about the attitudes, behaviors, characteristics, and opinions of MOOC participants, all of which can serve as first-hand data for empirical research. Most questionnaire-based research has used measurement scales, with the collected answers analyzed quantitatively. Researchers considered various factors and drew on classical models and theories when they designed their questionnaires, and this body of prior work needs to be analyzed and summarized. This paper explored the research topics and paradigms of questionnaire-based quantitative research on MOOCs. Its main contribution is a graphical summary of the classical models and theories used, together with an analysis of the key factors frequently considered under the major research topics.

Over the years, MOOCs have yielded many research publications and numerous review articles, including systematic and critical reviews. Zhu et al. (2018) reviewed 146 empirical studies of MOOCs published from 2014 to 2016 and summarized their typical research topics and methods, as well as their geographical distribution; however, they presented these topics and methods only through a few statistical results in the form of numbers, bar charts, and pie charts. Rasheed et al. (2019) adopted a systematic mapping methodology to provide a fine-grained overview of the MOOC research domain, identifying the quantity and types of research, available results, and publication trends in educational aspects of MOOCs from 2009 to 2018. Their findings showed that most MOOC studies focused on learners’ completion, dropout rates, and retention. Babori et al. (2019) examined the content of MOOC research in 65 peer-reviewed papers published in five major educational technology research journals between 2012 and 2018. Their analysis revealed that these articles were mainly concerned with MOOCs’ objectives, the prerequisites for participating in MOOCs, and types of learning scenarios, and that empirical studies adopted a variety of conceptual frameworks focused mainly on learning strategies. Montes-Rodriguez et al. (2019) examined the prevalence and characteristics of case studies on MOOCs in 92 articles selected from the Web of Science and Scopus. They found that even when searching solely for case studies, quantitative approaches to data collection and analysis were more prevalent in research on MOOCs.

The reviews cited above showed that MOOC research trends and topics were evolving rapidly. Whereas most early MOOC studies were theoretical and conceptual, more empirical studies and topics have emerged in recent years. According to Fang et al. (2019) and Zhu et al. (2018), most empirical research on MOOCs has used quantitative methods to gather and analyze data. As a methodology, quantitative analysis is generally linked to positivist paradigms that analyze the quantitative characteristics, relations, and changes of social phenomena. A key step in quantitative analysis is establishing a mathematical model to calculate various indicators and values of the research object from statistical data. Effective collection of quantitative data is therefore the basis of this methodology. In research on MOOCs, surveys, especially questionnaire-based surveys, have been the most frequently adopted method of data collection (Sanchez-Gordon & Lujan-Mora, 2017; Zhu et al., 2018).

Few studies have reviewed the questionnaire-based quantitative research about MOOCs and summarized the theories such research has been based on. A comprehensive picture of the methodologies adopted in these studies is needed in order to investigate the characteristics of research on MOOCs, including topic areas, theoretical models, and research methods. We reviewed questionnaire-based quantitative studies about MOOCs published from January 2015 to August 2020, in order to increase awareness of methodological issues and theoretical models in the MOOC research field. The following three research questions guided our review:

Using the keywords MOOC, MOOCs, massive open online course, and massive open online courses, we searched the Web of Science database for articles as our source data. The attributes recorded for each selected article included authors, title, year of publication, journal name, research focus, research model, analysis methodology, and article URL. We classified research methodologies as qualitative, quantitative, or mixed methods (i.e., combining quantitative and qualitative approaches), and focused on articles using quantitative or mixed methods. We filtered the articles according to six ordered selection criteria, shown in Table 1. Each criterion was mandatory: an article was excluded if it failed to meet any one of them. Filtering involved reading the title and abstract of each article and labeling it as relevant or irrelevant. When relevance was not evident from the title and abstract, we examined the article in detail, reading its methodology and results sections. A total of 126 articles about MOOCs were selected and verified: 89 quantitative studies and 37 mixed-methods studies.

Table 1

Criteria for Selecting MOOC Articles

| Criterion | Operational definition |
|---|---|
| 1 | The article was retrieved from the SCI or SSCI database. |
| 2 | The article was published in English. |
| 3 | The article was published between January 2015 and August 2020. |
| 4 | The terms MOOC(s) or massive open online course(s) were used to screen titles, abstracts, and keywords. |
| 5 | The study mainly investigated the educational aspects of MOOCs. |
| 6 | The article reported on an empirical study using questionnaire-based survey data and quantitative analysis. |
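Because every criterion in Table 1 is mandatory, the screening step amounts to a conjunction of predicates: an article is kept only if all six hold. The Python sketch below illustrates that logic under stated assumptions; the `Article` record, its field names, and the keyword test are hypothetical stand-ins, since the actual screening in this study was done by reading titles, abstracts, and, where needed, full texts.

```python
from dataclasses import dataclass


@dataclass
class Article:
    """Hypothetical screening record; the fields are illustrative only."""
    database: str                     # e.g., "SCI" or "SSCI"
    language: str
    year: int
    month: int
    title_abstract_keywords: str
    educational_focus: bool           # criterion 5, judged by a human reader
    questionnaire_quantitative: bool  # criterion 6, judged by a human reader


def published_in_window(a: Article) -> bool:
    # Criterion 3: January 2015 through August 2020, inclusive.
    return (2015, 1) <= (a.year, a.month) <= (2020, 8)


def mentions_mooc(a: Article) -> bool:
    # Criterion 4: a substring match also covers the plural forms.
    text = a.title_abstract_keywords.lower()
    return "mooc" in text or "massive open online course" in text


# The six criteria, in the order listed in Table 1.
CRITERIA = [
    lambda a: a.database in {"SCI", "SSCI"},
    lambda a: a.language == "English",
    published_in_window,
    mentions_mooc,
    lambda a: a.educational_focus,
    lambda a: a.questionnaire_quantitative,
]


def is_selected(a: Article) -> bool:
    # Hard criteria: all() stops at the first failed criterion.
    return all(criterion(a) for criterion in CRITERIA)


if __name__ == "__main__":
    kept = Article("SSCI", "English", 2019, 3, "Predicting MOOC dropout", True, True)
    too_early = Article("SSCI", "English", 2014, 12, "Predicting MOOC dropout", True, True)
    print(is_selected(kept), is_selected(too_early))  # True False
```

Evaluating the predicates in table order mirrors the "ordered" screening: cheap metadata checks come first, and the judgment-based criteria 5 and 6 are reached only for articles that survive them.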

To address our first research question, we used thematic content analysis to examine the key research topics in studies of MOOCs. First, researchers read the MOOC research articles and identified the specific research focus of each paper; the topics were then grouped into four categories, namely dropout rates and continuance intention to use MOOCs, learners’ performance, assessing MOOCs, and others. To answer research question two, on the research models typically employed, we presented the models systematically by means of factors-goals (F-G) graphs. These graphs, a graphic device designed for this study, show the correlation between research goals and influencing factors and are intended to provide a reference framework for building hypothesis models. F-G graphs also provided a statistical baseline for accuracy, consistency, and representativeness to improve data quality. Finally, to answer the third research question, we counted how often each data analysis method was used in the quantitative studies.

To examine the general topics and focuses of quantitative MOOC studies, we divided the key topics of the 126 papers into four categories: (a) dropout rates and continuance intention to use MOOCs (n = 36; 28.57%); (b) factors influencing learners’ performance (n = 45; 35.71%); (c) assessing MOOCs (n = 29; 23.02%); and (d) others (n = 16; 12.70%).
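Since the four categories are mutually exclusive, the counts sum to 126 and the shares to 100%. The short Python check below recomputes the reported percentages from these counts, rounded to two decimals as in the text.

```python
# Article counts per topic category, as reported above.
TOPIC_COUNTS = {
    "dropout rates and continuance intention": 36,
    "factors influencing learners' performance": 45,
    "assessing MOOCs": 29,
    "others": 16,
}


def percentage_shares(counts: dict[str, int]) -> dict[str, float]:
    """Share of each category as a percentage of all articles."""
    total = sum(counts.values())
    return {topic: round(100 * n / total, 2) for topic, n in counts.items()}


shares = percentage_shares(TOPIC_COUNTS)
assert sum(TOPIC_COUNTS.values()) == 126  # categories partition the sample
for topic, pct in shares.items():
    print(f"{topic}: {pct:.2f}%")
```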

Although MOOCs have been successful in attracting and accommodating large numbers of learners, they may be less successful in keeping learners through to course completion. Some studies showed that only a small number of participants completed an entire course, while others quit partway through after experiencing a few MOOC lessons (Shao, 2018; Yang et al., 2017). High dropout rates have been widely regarded as a serious issue for MOOCs (Bozkurt et al., 2017).

Most of the extant literature considered completion rate as a metric for evaluating the success or failure of a MOOC. It is vital to investigate the reasons why learners persist and complete their courses or drop out, so a large number of researchers have explored this issue through quantitative methods based on questionnaires. Both subjective and objective factors influenced MOOC participants’ retention and completion. The main subjective factors included learners’ preferences (Li et al., 2018), experience (Li et al., 2018; Zhao et al., 2020; Zhou, 2017), expectancy (Botero et al., 2018; Luik et al., 2019; Zhou, 2017), and psychological motivation (Botero et al., 2018; Yang & Su, 2017; Zhou, 2016). Objective factors included course quality (Hone & El Said, 2016; Yang et al., 2017), network externalities (Li et al., 2018), social motivation (Jung & Lee, 2018; Khan et al., 2018; Wu & Chen, 2017), and MOOC systems (Wu & Chen, 2017).

Dropout rate is not the only metric of a MOOC’s success. Learners have various motivations for taking online courses (Carlos et al., 2017), which can affect their attitude toward, and intention to continue, learning in MOOCs. The performance of learners in a MOOC can serve as an essential reference for improving MOOC design and quality. Learners’ performance in MOOCs has been measured by course engagement, social interactions, sociability, and learning gains. Many studies have focused on the factors that influence learners’ performance (Carlos et al., 2017; Kahan et al., 2017; Soffer & Cohen, 2015; Zhang, 2016). Based on the articles reviewed in this study, we grouped the major factors affecting learners’ performance into four categories: motivation, self-regulated learning (SRL), attitudinal learning, and learning strategies.

Learners with different motivations for participating in a MOOC targeted different learning goals and strategies (de Barba et al., 2016; Watted & Barak, 2018). General participants were oriented toward acquiring knowledge and academic advancement, while university-affiliated students were also concerned with a need to obtain certificates. SRL is a learning strategy that influences MOOC learners’ academic performance. Independent learning in MOOCs calls for completing course content, making full use of platform resources, and allocating study time reasonably (Jansen et al., 2017; Kizilcec et al., 2017; Maldonado-Mahauad et al., 2018). The scale items of attitudinal learning conform to the following four-dimensional theoretical structure: cognitive learning, affective learning, behavioral learning, and social learning (Watson et al., 2016). Finally, learning strategy has been defined as a complex plan for a learning process that learners have purposefully and consciously formulated to improve their learning effectiveness and performance in MOOCs (Kizilcec et al., 2017; Maldonado-Mahauad et al., 2018).

Some articles investigated the overall assessment of MOOCs, specifically evaluation of the teaching model, course structure and content design, the MOOC platform technology, and the benefits from participating in MOOCs. We divided the studies we examined into two categories: assessment from the perspective of learners, and assessment from teachers’ points of view. Some student-oriented research used learners’ perceived benefits to determine which course design better helped learners meet their goals (Jung et al., 2019; Lowenthal et al., 2018). Teacher-focused evaluation paid close attention to teaching skills and challenges in MOOCs, as well as opportunities for future development (Donitsa-Schmidt & Topaz, 2018; Gan, 2018).

Questionnaire-based quantitative research generally includes the following steps: (a) propose the research questions to be solved; (b) select an appropriate theoretical model or develop a new model, drawing on classical theories and the hypothetical relationship between factors; (c) design questionnaire items, usually in the form of a Likert scale, to measure the factors and variables in the research model; (d) collect the questionnaires from research subjects; and (e) analyze the collected data to verify the hypothesis model.
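Steps (c) through (e) usually meet at a scoring stage in which each respondent’s Likert answers are combined into one score per factor before hypotheses are tested. The Python sketch below assumes a 5-point scale and simple item averaging, which is one common choice but not necessarily what every reviewed study did; the item codes `PU1` to `CI2` and the two constructs are hypothetical.

```python
from statistics import fmean

# Hypothetical mapping from research-model factors to questionnaire items.
CONSTRUCTS = {
    "perceived_usefulness": ["PU1", "PU2", "PU3"],
    "continuance_intention": ["CI1", "CI2"],
}


def construct_scores(response: dict[str, int], scale_max: int = 5) -> dict[str, float]:
    """Average one respondent's Likert items (1..scale_max) for each factor."""
    scores = {}
    for construct, items in CONSTRUCTS.items():
        values = [response[item] for item in items]
        if any(not 1 <= v <= scale_max for v in values):
            raise ValueError(f"Likert value out of range for {construct}")
        scores[construct] = fmean(values)
    return scores


respondent = {"PU1": 4, "PU2": 5, "PU3": 3, "CI1": 4, "CI2": 5}
print(construct_scores(respondent))
# {'perceived_usefulness': 4.0, 'continuance_intention': 4.5}
```

Averaging keeps scores on the original 1 to 5 metric, which makes them easy to read; latent-variable methods such as SEM instead work from the individual items.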

Building a hypothesis model is the foundation of quantitative research. To examine the kinds of models MOOC researchers have relied on, we constructed three F-G graphs depicting the correlation between the top three categories of research topics and the research models summarized from the 126 articles. Tables A1, A2, and A3 in Appendix A provide background details from 32 typical articles on the top three topics, including article titles, research topics, the theoretical models and factors involved in the questionnaires, and analysis methods. These data formed the foundation for drawing the F-G graphs.

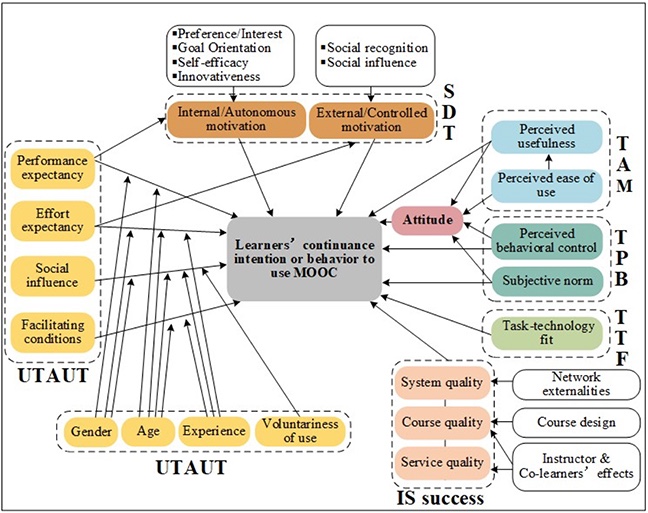

An F-G graph was built to show the correlation between the research models and the research goal of investigating factors that affect learners’ intention to continue using MOOCs. As shown in Figure 1, the F-G graph integrates the factors of the research models most frequently used in the articles we examined. Hypothesized relationships between factors are shown as straight arrows, each pointing from an explanatory variable to a dependent variable. The factors in rounded rectangles are those that directly or indirectly affected learners’ intention to continue using MOOCs.
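Read as a data structure, an F-G graph is a directed graph whose edges run from explanatory to dependent variables, so the factors that "directly or indirectly" affect a research goal are exactly the nodes from which the goal can be reached. The Python sketch below finds them with a breadth-first search over reversed edges; the edge list is a hypothetical TAM-style fragment for illustration, not a transcription of Figure 1.

```python
from collections import deque

# Hypothetical F-G graph fragment: (explanatory variable, dependent variable).
EDGES = [
    ("perceived ease of use", "perceived usefulness"),
    ("perceived ease of use", "attitude"),
    ("perceived usefulness", "attitude"),
    ("perceived usefulness", "continuance intention"),
    ("attitude", "continuance intention"),
    ("course quality", "satisfaction"),  # not linked to the goal here
]


def influencing_factors(edges, goal):
    """Map each factor that reaches `goal` to its shortest path length:
    1 means a direct effect, larger values mean an indirect effect."""
    predecessors = {}
    for src, dst in edges:
        predecessors.setdefault(dst, []).append(src)
    dist = {goal: 0}
    queue = deque([goal])
    while queue:  # breadth-first search over reversed edges
        node = queue.popleft()
        for pred in predecessors.get(node, []):
            if pred not in dist:
                dist[pred] = dist[node] + 1
                queue.append(pred)
    del dist[goal]
    return dist


print(influencing_factors(EDGES, "continuance intention"))
# {'perceived usefulness': 1, 'attitude': 1, 'perceived ease of use': 2}
```

Storing the graph as an edge list keeps it close to how hypotheses are usually written (H1: A affects B), and the same search works unchanged for the goals in the other F-G graphs.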

Figure 1

F-G Graph: Factors of Research Models for Dropout Rates or Continuance Intention to Use MOOCs

In the 126 articles we examined, most researchers designed questionnaire items by extending classical theoretical models, including: (a) the technology acceptance model (TAM; n = 12); (b) the self-determination theory (SDT; n = 7); (c) the task-technology fit (TTF; n = 4); (d) the theory of planned behavior (TPB; n = 4); (e) the unified theory of acceptance and use of technology (UTAUT; n = 3); and (f) the information system (IS) success model (n = 2). In Figure 1, the key factors from each model are enclosed within black dotted boxes. In addition, some studies enhanced these models by adding new elements or adjustments to further explain learners’ continuance intention to use MOOCs. In Figure 1, these new factors, often considered by the reviewed articles, are listed in black solid boxes. The specific explanations of these theoretical models are summarized in Table 2 and addressed in detail following the table.

Table 2

Classical Models That Address Dropout Rates or Continuance Intention to Use MOOCs

| Model | Hypothesis |
|---|---|
| TAM | Perceived usefulness and perceived ease of use determine the individual’s attitude toward a MOOC as well as the behavioral intention to use it (Davis, 1989). |
| SDT | A motivation theory that investigates how and why a particular human behavior occurs; it distinguishes between autonomous and controlled motivation according to the degree of self-determination (Deci et al., 1999). |
| TPB | Explains three determinants of an individual’s behavioral intention: perceived behavioral control, subjective norms, and attitude toward the behavior (Ajzen, 1985). |
| TTF | Task characteristics and technology characteristics can affect the task-technology fit, which determines users’ performance and utilization (Goodhue et al., 2000). |
| UTAUT | Integrates eight classical models or theories: TAM, TPB, the theory of reasoned action (TRA), the motivational model (MM), a model combining the technology acceptance model and the theory of planned behavior (C-TAM-TPB), the model of PC utilization (MPCU), innovation diffusion theory (IDT), and social cognitive theory (SCT) (Venkatesh et al., 2003). |
| IS success | Users’ satisfaction with an information system depends on six variables: system quality, information quality, perceived usefulness, net benefits to individuals, net benefits to organizations, and net benefits to society (Seddon, 1997). |

In our analysis of factors in the TAM, attitude was considered a direct and positive factor that determined an individual’s intention and behavior. The TAM assumed that two main factors, perceived usefulness and perceived ease of use, determined an individual’s attitude toward a new MOOC technology as well as the behavioral intention to use it (Joo et al., 2018; Shao, 2018; Tao et al., 2019; Wu & Chen, 2017). To some extent, perceived usefulness also had a direct impact on the learner’s behavior.

The TPB was used to explain how an individual decides, of his or her own free will, whether to continue learning in a MOOC, as affected by three factors: attitude, subjective norms, and perceived behavioral control (Khan et al., 2018; Shao, 2018; Sun et al., 2019; Zhou, 2016). The latter two were hypothesized to directly influence one’s attitude toward online learning. Subjective norms refer to the individual’s perception of social pressure to perform or not perform the behavior. Perceived behavioral control, defined as the individual’s perceived ease or difficulty of performing the behavior, had a direct impact on learning behavior.

Motivation significantly affected learners’ psychological and behavioral engagement, which is important for reducing MOOC dropout rates. The SDT, a well-established motivation theory widely adopted to investigate participants’ persistence in MOOCs, holds that behavior may be driven not only by autonomous motivation but also by controlled motivation. Studies found that meeting students’ needs for autonomy, competence, and relatedness can increase their intrinsic motivation and lead to active engagement in MOOCs (Castano-Munoz et al., 2017; Hone & El Said, 2016; Khan et al., 2018; Sun et al., 2019). Beyond the SDT, researchers put forward new factors that affect learners’ motivation and persistence in MOOCs, such as individual preference or interest, goal orientation, self-efficacy, and innovativeness (Jung & Lee, 2018; Tsai et al., 2018; Zhang et al., 2016). External motivational factors were also investigated, including social recognition, social influence, and environmental stimuli (Luik et al., 2019; Wu & Chen, 2017; Zhao et al., 2020; Zhou, 2017).

The UTAUT, which integrates eight classical models, was applied as a basic framework for designing questionnaire items (Botero et al., 2018; Zhao et al., 2020). The UTAUT proposes hypotheses about the impact of four factors on behavioral intention and use: (a) performance expectancy, (b) effort expectancy, (c) social influence, and (d) facilitating conditions. It also treats learners’ gender, age, experience, and voluntariness of use as moderators of the effects of these four factors.

The TTF was used to evaluate how information technology affects learners’ performance and utilization in MOOCs, and to judge the match between learning tasks and the characteristics of MOOC technology (Khan et al., 2018; Wu & Chen, 2017).

In the analysis of factors in the IS success model, system quality, course quality, and service quality were significant antecedents of learners’ continuance intention to use MOOCs (Yang et al., 2017). Some new factors that influenced MOOC quality were also considered. Network externalities affected users’ persistence through the mediation of system quality (Li et al., 2018). MOOC course quality was mainly determined by course design, including course content and course structure (Hone & El Said, 2016). Instructor and co-learner effects, such as interaction, support, and feedback, influenced both course quality and service quality (Hone & El Said, 2016).

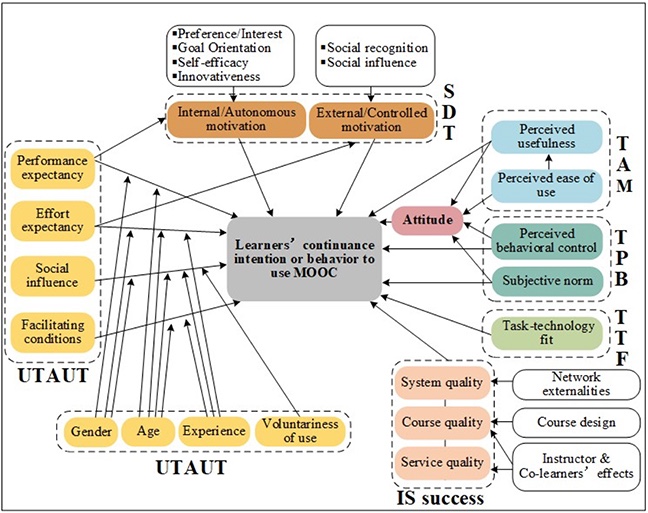

A challenge for this study was to build an F-G graph to summarize various factors about learners’ performance in MOOCs in terms of aspects of the research models that were examined. After reviewing the articles, we divided these factors into four categories: motivation, SRL, attitudinal learning, and learning strategies, clearly shown in different colors in Figure 2. The factors in the rounded rectangles had direct or indirect impacts on learners’ performance in MOOCs. The direction of an arrow points from an explanatory variable to a dependent variable.

Figure 2

F-G Graph: Factors Affecting Learners’ Performance in MOOCs

Many studies about learners’ performance have integrated existing survey instruments to design questionnaire items, such as the motivated strategies for learning questionnaire (MSLQ; n = 12), the online self-regulated learning questionnaire (OSLQ; n = 7), the meta-cognitive awareness inventory (MAI; n = 5), and the learning strategies questionnaire (LS; n = 5). The key factors in these instruments that have been considered in MOOC environments are enclosed in dashed boxes in Figure 2. In addition, Table 3 summarizes how factors related to MOOC learners’ motivation and learning strategies are addressed in these four instruments. A checkmark indicates that the questionnaire considers the corresponding factor.

Table 3

Factors Related to MOOC Learners’ Motivation and Learning Strategies in MSLQ, OSLQ, MAI, and LS

| Scale | Factor | Instrument | |||

| MSLQ | OSLQ | MAI | LS | ||

| Motivation | Intrinsic goal orientation | ✓ | |||

| Extrinsic goal orientation | ✓ | ||||

| Task value | ✓ | ||||

| Control beliefs | ✓ | ✓ | |||

| Self-efficacy | ✓ | ||||

| Meta-cognitive strategies | Goal setting | ✓ | ✓ | ||

| Strategic planning | ✓ | ||||

| Task strategies | ✓ | ✓ | ✓ | ||

| Elaboration | ✓ | ||||

| Critical thinking | ✓ | ||||

| Meta-cognitive self-regulation | ✓ | ||||

| Self-evaluation | ✓ | ✓ | ✓ | ||

| Resource management strategies | Time management | ✓ | ✓ | ||

| Environment structuring | ✓ | ✓ | |||

| Help seeking | ✓ | ✓ | ✓ | ||

| Strategy regulation | ✓ | ||||

| Effort regulation | ✓ | ||||

The MSLQ, a self-report questionnaire, has been used to measure types of academic motivation and learning strategies in educational contexts (Pintrich et al., 1991); in the reviewed articles, it was used to study how motivation and learning strategies affect MOOC learners’ performance (Carlos et al., 2017; Hung et al., 2019; Jansen et al., 2017; Watted & Barak, 2018). The motivation section assessed learners’ goals (including intrinsic and extrinsic goals), value beliefs, and expectations for a course. The learning strategies section covered cognitive and meta-cognitive strategies, as well as resource management strategies.

The OSLQ was adopted to measure learners’ SRL ability and strategies, including goal setting, environment structuring, task strategies, time management, help seeking, and self-evaluation (Kizilcec et al., 2017; Lee et al., 2020; Martinez-Lopez et al., 2017).

The MAI was constructed to measure meta-cognitive awareness in two categories: knowledge of cognition and regulation of cognition (Schraw & Dennison, 1994).

The LS questionnaire has been used to measure three learning strategies—cognitive learning, behavioral learning, and self-regulatory learning—as associated with learning gain in MOOCs (Warr & Downing, 2000). The factors within these strategies included elaboration, help-seeking, motivation control, and comprehension monitoring (self-evaluation), among others.

In the analysis of new factors not included in the classical questionnaires, attitudinal learning was investigated in order to study the relationship between learners’ inherent positive attitudes and their belief in being able to complete learning tasks well (Watson et al., 2018; Watson et al., 2016). Learners’ emotional state and self-perceived achievement when attending a MOOC have been shown to affect their attitudinal learning. Behavioral learning was mainly predicted by learners’ engagement with activities (Ding & Zhao, 2020). Some new factors affecting learners’ motivation were also explored, such as individual benefits, including career, personal, and educational benefits. Social influence, similar to situational interest, was also studied; it included conditions or stimuli in the social environment, such as peers’ recommendations and teachers’ support (de Barba et al., 2016; Durksen et al., 2016; Gallagher & Savage, 2016). MOOC instructors can refer to the influencing factors listed in Figure 2 when designing learner-centered experiences in the MOOC space (Blum-Smith et al., 2021).

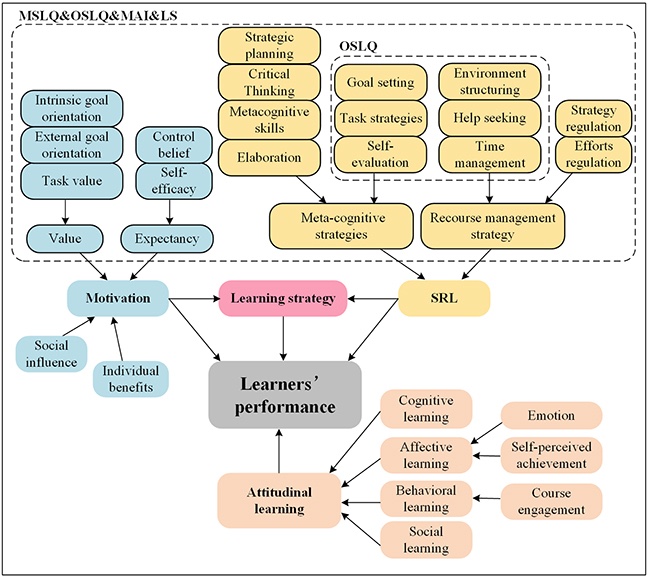

In the reviewed articles, some researchers investigated students’ and teachers’ overall evaluation of MOOCs before or after participating in their courses. Figure 3 is an F-G graph that illustrates our summary of research models for assessment of MOOCs. This analysis spanned four dimensions, namely, (a) learners’ evaluation, (b) learners’ perceived benefits from learning, (c) teachers’ evaluation, and (d) teachers’ perceived benefits from teaching. The factors in the rounded rectangles directly or indirectly affect the assessment of MOOCs by learners and teachers. The direction of an arrow points from an explanatory variable to a dependent variable.

Figure 3

F-G Graph: Factors for Assessment of MOOCs

Regarding evaluation by learners and teachers, in order to obtain feedback that contributed to improving MOOCs, most researchers collected opinions and suggestions from students and teachers about course design, including course content, course structure, and available resources (Gan, 2018), as well as teaching skills and methods (Gan, 2018; Kormos & Nijakowska, 2017; Lowenthal et al., 2018). Regarding teaching methods, students’ main concerns were feedback from and interaction with instructors and co-learners (Marta-Lazo et al., 2019). In addition, students’ views on criteria for evaluating academic performance were crucial to assessment of MOOCs (Robinson, 2016; Ruiz-Palmero et al., 2019; Sari et al., 2020; Teresa Garcia-Alvarez et al., 2018). Teachers were concerned about their course management skills, teaching challenges, and personal development (Donitsa-Schmidt & Topaz, 2018; Robinson, 2016).

Students evaluated MOOCs based on what they perceived as benefits, including academic achievement, expected certificates or rewards, improved learning efficiency, and the effort invested in acquiring new knowledge or practical skills (Jung et al., 2019; Ruiz-Palmero et al., 2019; Teresa Garcia-Alvarez et al., 2018). Through participating in MOOCs, learners gained tangible and intangible benefits that generally justified their expectations, which usually coincided with individuals’ plans to change their career, education, or life trajectory (Sablina et al., 2018).

Teachers’ perceived benefits from providing courses as MOOCs were the key factors when they evaluated MOOCs. Benefits consisted mainly of enriching their instructional practice and experience, professional development, and potential for lifelong learning (Donitsa-Schmidt & Topaz, 2018). A teaching-quality control system was proposed as a way to provide teachers with motivation for continuous teaching with MOOCs, and to promote teachers’ self-confidence and self-efficacy (Gan, 2018).

After collecting questionnaire data, researchers chose analysis methods according to their different research needs. Based on our summary of the analysis methods used in the 126 reviewed studies, 61 articles (48.41%) used descriptive analysis, 53 studies (42.06%) used a structural equation model (SEM), 48 articles (38.10%) performed reliability analysis, and 41 studies (32.54%) adopted validity analysis. Most articles used several quantitative analysis methods at the same time. Researchers used various statistical analysis software to assist the processes of data analysis, most often IBM SPSS (n = 34) and AMOS (n = 14).
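Unlike the topic categories, these method counts overlap, because a single article often combined several methods. The Python sketch below recomputes the reported percentages from the counts in this paragraph and shows that together they exceed 100%; the 203 recorded uses correspond to about 1.61 of these four methods per article on average.

```python
# Counts of articles using each analysis method (an article may use several).
METHOD_COUNTS = {
    "descriptive analysis": 61,
    "structural equation model (SEM)": 53,
    "reliability analysis": 48,
    "validity analysis": 41,
}
N_ARTICLES = 126

percentages = {m: round(100 * n / N_ARTICLES, 2) for m, n in METHOD_COUNTS.items()}
total_uses = sum(METHOD_COUNTS.values())
mean_methods_per_article = total_uses / N_ARTICLES

for method, pct in percentages.items():
    print(f"{method}: {pct:.2f}%")
# A sum above 100% is only possible because the categories overlap.
print(f"sum of shares: {sum(percentages.values()):.2f}%")
print(f"mean of these methods per article: {mean_methods_per_article:.2f}")
```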

In the research we investigated, descriptive statistics often dealt with demographic data, including participants’ gender, age, educational background, and experience with MOOCs (Botero et al., 2018; de Barba et al., 2016; Farhan et al., 2019; Hone & El Said, 2016). Descriptive statistics of data characteristics included frequency analysis, central tendency analysis, dispersion analysis, distribution analysis, and some basic statistical graphics.
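The descriptive measures named above map directly onto Python standard-library functions. The sketch below computes a frequency table, central tendency, and dispersion for a small hypothetical sample of respondents; the data are invented for illustration and do not come from any reviewed study.

```python
from collections import Counter
from statistics import fmean, median, stdev

# Hypothetical demographic answers from eight respondents.
genders = ["F", "M", "F", "F", "M", "F", "M", "F"]
ages = [19, 22, 21, 35, 28, 24, 41, 20]

frequency = Counter(genders)                                      # frequency analysis
central_tendency = {"mean": fmean(ages), "median": median(ages)}  # central tendency
dispersion = {"sample_sd": stdev(ages), "range": max(ages) - min(ages)}  # dispersion

print(dict(frequency))      # {'F': 5, 'M': 3}
print(central_tendency)     # {'mean': 26.25, 'median': 23.0}
print(dispersion["range"])  # 22
```

`stdev` is the sample standard deviation (n − 1 denominator), which is the usual choice when respondents are treated as a sample of a larger learner population.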

Reliability analysis assesses the degree of consistency of the results obtained when a questionnaire repeatedly measures the same object. It is best to verify the reliability of the items before using a questionnaire instrument to collect data. In the articles we reviewed, Cronbach’s α was the most commonly used reliability coefficient (Kovanovic et al., 2017; Sun et al., 2019; Tsai et al., 2018; Zhao et al., 2020). Questionnaire data were generally considered reliable when Cronbach’s α was greater than 0.7.
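For a scale of k items, Cronbach’s α = k/(k − 1) × (1 − Σ var(item i) / var(total score)), where the total score is each respondent’s sum over the k items. A minimal Python sketch of that formula follows; the four-respondent data set is hypothetical, and in practice the reviewed studies relied on packages such as IBM SPSS rather than hand-written code.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha; responses[r][i] is respondent r's score on item i."""
    k = len(responses[0])  # number of items in the scale
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    item_columns = list(zip(*responses))  # one tuple of scores per item
    # Sample variances are used for both terms; the n - 1 factors cancel.
    sum_item_variances = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum_item_variances / total_variance)


# Hypothetical 5-point Likert answers: 4 respondents x 3 items.
data = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 3],
]
alpha = cronbach_alpha(data)
print(f"alpha = {alpha:.3f}")  # alpha = 0.947
print("acceptable (> 0.7)" if alpha > 0.7 else "below 0.7")
```

The 0.7 threshold is the rule of thumb cited in the text; a very small sample like this one is only for checking the arithmetic.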

Validity analysis determines the degree to which the measurement results of a questionnaire accurately reflect what is intended to be measured. Validity analysis covers content validity and structural validity. In the studies we examined, researchers usually invited experienced experts to check the content validity of their questionnaires (Jo, 2018; Zhou, 2017). Structural validity was assessed mainly with two methods: exploratory factor analysis (EFA) and confirmatory factor analysis (CFA). EFA was commonly used in item analysis during scale development to explore the model structure, while CFA was used in the reliability and validity analysis of mature questionnaires to verify the structure of a model (Jansen et al., 2017; Luik et al., 2019; Shahzad et al., 2020).

SEM is often recommended when attitude-related variables are included in the hypothesized model. SEM is a multivariate statistical method for analyzing the relationships among variables; in its classic covariance-based form, it works from the covariance matrix of the observed variables. The SEM variants used most frequently in the studies we examined were partial least squares SEM (PLS-SEM) (Hone & El Said, 2016; Shao, 2018; Yang et al., 2017; Zhao et al., 2020) and SEM with maximum likelihood estimation (MLE-SEM) (de Barba et al., 2016; Teo & Dai, 2019; Zhou, 2016). When the collected data did not meet distributional assumptions such as normality, researchers most often used PLS-SEM.
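One way to operationalize the choice between the two estimators is to screen each indicator’s skewness and kurtosis before fitting the model. The Python sketch below does this with the standard moment-based formulas. The helper names are hypothetical, and the thresholds (|skewness| > 2, |excess kurtosis| > 7) are an often-cited rule of thumb assumed here, not a criterion reported by the reviewed studies; the sketch only suggests an estimator and does not fit an SEM.

```python
import statistics


def central_moments(x):
    """Return the 2nd, 3rd, and 4th central moments (divisor n) of a sample."""
    mean = statistics.fmean(x)
    n = len(x)
    m2 = sum((v - mean) ** 2 for v in x) / n
    if m2 == 0:
        raise ValueError("indicator has zero variance")
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    return m2, m3, m4


def skewness(x):
    m2, m3, _ = central_moments(x)
    return m3 / m2 ** 1.5


def excess_kurtosis(x):
    m2, _, m4 = central_moments(x)
    return m4 / m2 ** 2 - 3


def suggest_sem_estimator(indicators, skew_cut=2.0, kurt_cut=7.0):
    """Hypothetical screening rule: suggest PLS-SEM if any indicator is markedly
    non-normal (|skew| > skew_cut or |excess kurtosis| > kurt_cut), else MLE-SEM."""
    for values in indicators:
        if abs(skewness(values)) > skew_cut or abs(excess_kurtosis(values)) > kurt_cut:
            return "PLS-SEM"
    return "MLE-SEM"


# Hypothetical indicator columns
symmetric = [1, 2, 3, 4, 5, 3, 2, 4]
skewed = [0] * 20 + [10]
print(suggest_sem_estimator([symmetric]))          # MLE-SEM
print(suggest_sem_estimator([symmetric, skewed]))  # PLS-SEM
```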

Through a systematic review and analysis of 126 questionnaire-based quantitative research articles on MOOCs published between January 2015 and August 2020, this study explored the research paradigms in this field, including research topics, models, and data analysis methods.

Our findings show that MOOC research remains an important and growing field for educational researchers. Empirical studies of MOOCs explored multiple issues, most of them through quantitative investigation. This paper grouped the key topics of the reviewed articles into three categories: (a) the determinants of learners’ dropout rate or continuance intention, (b) the factors related to learners’ performance, and (c) participants’ assessment of MOOCs. Most research focused on MOOC participants or learners; only a few researchers concentrated on MOOC instructors, curriculum design, or platform development. Promising directions for researchers include more in-depth exploration of the characteristics and profiles of MOOC participants and instructors, the potential for personalization in MOOCs, and improvements in MOOC quality.

As shown in this study, most questionnaire-based quantitative studies of MOOCs had a solid theoretical foundation, a standardized research process, and effective research methods. By understanding the research paradigms summarized and expanded in this study, researchers will be better able to carry out empirical research and to experiment with methods that are not yet commonly used. This paper provides three F-G graphs that separately map each research topic to the factors in the models or hypotheses on which the reviewed studies were based. By referring to the F-G graphs, MOOC researchers can design more appropriate questionnaire items and collect higher-quality data to better support data science research.
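An F-G graph is essentially a bipartite mapping between factors and research goals. The Python sketch below shows one possible way to store such a mapping and query it, for example to find factors shared across topics. The edge list is a small hypothetical excerpt built from factors named in Tables A1 to A3 (e.g., self-efficacy in Jung & Lee, 2018 and Lee et al., 2020); it is not a reproduction of the paper’s F-G graphs, and the helper names are illustrative.

```python
from collections import defaultdict

# Hypothetical excerpt of (factor, goal) edges drawn from factors listed in
# Tables A1-A3; NOT the paper's actual F-G graphs.
EDGES = [
    ("perceived usefulness", "continuance intention"),
    ("perceived ease of use", "continuance intention"),
    ("self-efficacy", "continuance intention"),
    ("self-efficacy", "learner performance"),
    ("task value", "learner performance"),
    ("course content", "MOOC assessment"),
]


def build_fg_graph(edges):
    """Return a goal -> set-of-factors adjacency mapping."""
    graph = defaultdict(set)
    for factor, goal in edges:
        graph[goal].add(factor)
    return dict(graph)


def shared_factors(graph):
    """Return the factors linked to more than one goal."""
    goals_per_factor = defaultdict(set)
    for goal, factors in graph.items():
        for factor in factors:
            goals_per_factor[factor].add(goal)
    return {f for f, goals in goals_per_factor.items() if len(goals) > 1}


graph = build_fg_graph(EDGES)
print(sorted(graph["learner performance"]))  # ['self-efficacy', 'task value']
print(shared_factors(graph))                 # {'self-efficacy'}
```

A question such as "which factors should a questionnaire on learner performance cover?" then becomes a simple lookup in `graph`.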

The studies we reviewed also revealed several limitations of current MOOC research, including small sample sizes, a lack of diversity among survey participants, and the limitations inherent in traditional statistical analysis. In light of these limitations, we suggest three new directions for future research on MOOCs.

First, we recommend expanding the scope of data collection and building large data sets. In some of the studies selected for this paper, survey sample sizes were relatively small; as a result, some findings were not persuasive, or the factors investigated showed no significant effect on the research subjects. A better approach may be to broaden the scope and targets of data collection and to establish a large-scale MOOC database, perhaps even a worldwide one. This would make the data sources more objective, more representative, and more convincing (Ang et al., 2020).
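To see why small samples make non-significant findings hard to interpret, it helps to estimate how many respondents are needed to detect an effect of a given size. The Python sketch below uses the standard Fisher z approximation for a two-sided test of a single Pearson correlation. Treating the effect of interest as one bivariate correlation is a simplifying assumption (an SEM study needs its own power analysis), and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def required_n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate sample size needed to detect a population correlation r
    with a two-sided test, using the Fisher z transformation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    return math.ceil(((z_alpha + z_beta) / math.atanh(r)) ** 2 + 3)


for r in (0.1, 0.2, 0.3):
    print(r, required_n_for_correlation(r))
# prints 783, 194, and 85 for r = 0.1, 0.2, and 0.3
```

Under these assumptions, samples of a few dozen respondents (such as some in Table A3) can reliably detect only fairly strong relationships.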

Second, we suggest standardizing multi-source heterogeneous data about MOOCs. Heterogeneity is an essential feature of big data: survey data from different studies are collected with different scales and standards. Standardized multi-source data can provide a solid foundation and further insights for subsequent data analysis.
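One simple form of standardization, assumed here for illustration, is to map responses collected on different Likert scales (for example, 5-point and 7-point) onto a common 0 to 1 range before pooling them. The record fields and the `rescale` helper below are hypothetical.

```python
def rescale(score, scale_min, scale_max):
    """Linearly map a response from [scale_min, scale_max] onto [0, 1]."""
    if scale_max <= scale_min:
        raise ValueError("scale_max must be greater than scale_min")
    if not scale_min <= score <= scale_max:
        raise ValueError("score lies outside the declared scale")
    return (score - scale_min) / (scale_max - scale_min)


# Hypothetical records from two surveys that used different Likert scales
records = [
    {"study": "A", "construct": "perceived usefulness", "score": 4, "min": 1, "max": 5},
    {"study": "B", "construct": "perceived usefulness", "score": 6, "min": 1, "max": 7},
]

# Keep the original fields and add a harmonized 0-1 score
harmonized = [
    {**rec, "score_01": rescale(rec["score"], rec["min"], rec["max"])}
    for rec in records
]
for rec in harmonized:
    print(rec["study"], round(rec["score_01"], 3))  # A 0.75, then B 0.833
```

Linear rescaling only aligns the numeric ranges; it does not make differently worded items or instruments equivalent, which still calls for checks such as measurement invariance testing.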

Finally, we recommend applying data mining and deep learning methods. In the articles we reviewed, data analysis was mostly limited to traditional statistical approaches. Data mining and deep learning focus on discovering correlations among samples and on generalizing from a standardized data set to the wider population (Peral et al., 2017). Moreover, researchers can apply these methods to analyze the objective behaviors and subjective perceptions of MOOC learners and instructors, build user feature profiles, and propose personalized optimization schemes (Cagiltay et al., 2020; Geng et al., 2020).
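As one illustration of the data mining techniques mentioned above, the Python sketch below groups learners into profiles with plain k-means (Lloyd’s algorithm). k-means is a standard clustering method chosen here for illustration, not a method taken from the cited studies. The two features per learner (weekly hours on the platform and mean satisfaction on a 5-point scale) and their values are hypothetical.

```python
import math


def kmeans(points, k, max_iter=100):
    """Plain k-means (Lloyd's algorithm) with deterministic farthest-first initialization."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Next centroid: the point farthest from its nearest existing centroid.
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    labels = None
    for _ in range(max_iter):
        # Assign every point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points
        ]
        if new_labels == labels:
            break  # assignments unchanged: converged
        labels = new_labels
        # Move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


# Hypothetical learners: (weekly hours on platform, mean satisfaction 1-5)
learners = [(1.0, 2.0), (1.5, 2.5), (0.5, 1.5), (6.0, 4.5), (5.5, 4.0), (7.0, 5.0)]
labels, centroids = kmeans(learners, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

In practice, features measured on different scales should be standardized first, and k is usually chosen with a criterion such as the silhouette score.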

Ajzen, I. (1985). From intentions to actions: A theory of planned behavior. In J. Kuhl & J. Beckmann (Eds.), Action control: From cognition to behavior (pp. 11-39). Springer.

Albert, S., Mercedes, G. S., & Terry, A. (2015). Meta-analysis of the research about MOOC during 2013-2014. Educación XX1, 18(2). https://doi.org/10.5944/educxx1.14808

Ang, K. L. M., Ge, F. L., & Seng, K. P. (2020). Big educational data & analytics: Survey, architecture and challenges. IEEE Access, 8, 116392-116414. https://doi.org/10.1109/ACCESS.2020.2994561

Babori, A., Abdelkarim, Z., & Fassi, H. F. (2019). Research on MOOCs in major referred journals: The role and place of content. International Review of Research in Open and Distributed Learning, 20(3). https://doi.org/10.19173/irrodl.v20i4.4385

Blum-Smith, S., Yurkofsky, M. M., & Brennan, K. (2021). Stepping back and stepping in: Facilitating learner-centered experiences in MOOCs. Computers & Education, 160, 104042. https://doi.org/10.1016/j.compedu.2020.104042

Botero, G. G., Questier, F., Cincinnato, S., He, T., & Zhu, C. (2018). Acceptance and usage of mobile assisted language learning by higher education students. Journal of Computing in Higher Education, 5, 1-26. https://doi.org/10.1007/s12528-018-9177-1

Bozkurt, A., Akgun-Ozbek, E., & Zawacki-Richter, O. (2017). Trends and patterns in massive open online courses: Review and content analysis of research on MOOCs (2008-2015). International Review of Research in Open and Distributed Learning, 18(5), 118-147. https://doi.org/10.19173/irrodl.v18i5.3080

Cagiltay, N. E., Cagiltay, K., & Celik, B. (2020). An analysis of course characteristics, learner characteristics, and certification rates in MITx MOOCs. International Review of Research in Open and Distributed Learning, 21(3), 121-139. https://doi.org/10.19173/irrodl.v21i3.4698

Carlos, A. H., Iria, E. A., Mar, P. S., Carlos, D. K., & Carmen, F. P. (2017). Understanding learners’ motivation and learning strategies in MOOCs. International Review of Research in Open and Distributed Learning, 18(3), 119-137. https://doi.org/10.19173/irrodl.v18i3.2996

Castano-Munoz, J., Kreijns, K., Kalz, M., & Punie, Y. (2017). Does digital competence and occupational setting influence MOOC participation? Evidence from a cross-course survey. Journal of Computing in Higher Education, 29(1), 28-46. https://doi.org/10.1007/s12528-016-9123-z

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319-339. https://doi.org/10.2307/249008

de Barba, P. G., Kennedy, G. E., & Ainley, M. D. (2016). The role of students’ motivation and participation in predicting performance in a MOOC. Journal of Computer Assisted Learning, 32(3), 218-231. https://doi.org/10.1111/jcal.12130

Deci, E. L., Koestner, R., & Ryan, R. M. (1999). A meta-analytic review of experiments examining the effects of extrinsic rewards on intrinsic motivation. Psychological Bulletin, 125(6), 627-668. https://doi.org/10.1037/0033-2909.125.6.627

Ding, Y., & Zhao, T. (2020). Emotions, engagement, and self-perceived achievement in a small private online course. Journal of Computer Assisted Learning, 36(4), 449-457. https://doi.org/10.1111/jcal.12410

Donitsa-Schmidt, S., & Topaz, B. (2018). Massive open online courses as a knowledge base for teachers. Journal of Education for Teaching, 44(5), 608-620. https://doi.org/10.1080/02607476.2018.1516350

Durksen, T. L., Chu, M. W., Ahmad, Z. F., Radil, A. I., & Daniels, L. M. (2016). Motivation in a MOOC: A probabilistic analysis of online learners’ basic psychological needs. Social Psychology of Education, 19(2), 241-260. https://doi.org/10.1007/s11218-015-9331-9

Fang, J. W., Hwang, G. J., & Chang, C. Y. (2019). Advancement and the foci of investigation of MOOCs and open online courses for language learning: A review of journal publications from 2009 to 2018. Interactive Learning Environments, 28, 1-19. https://doi.org/10.1080/10494820.2019.1703011

Farhan, W., Razmak, J., Demers, S., & Laflamme, S. (2019). E-learning systems versus instructional communication tools: Developing and testing a new e-learning user interface from the perspectives of teachers and students. Technology in Society, 59, 101192. https://doi.org/10.1016/j.techsoc.2019.101192

Fryer, L. K., & Bovee, H. N. (2018). Staying motivated to e-learn: Person- and variable-centred perspectives on the longitudinal risks and support. Computers & Education, 120, 227-240. https://doi.org/10.1016/j.compedu.2018.01.006

Gallagher, S. E., & Savage, T. (2016). Comparing learner community behavior in multiple presentations of a massive open online course. Journal of Computing in Higher Education, 28(3), 358-369. https://doi.org/10.1007/s12528-016-9124-y

Gan, T. (2018). Construction of security system of flipped classroom based on MOOC in teaching quality control. Educational Sciences: Theory & Practice, 18(6), 2707-2717. https://doi.org/10.12738/estp.2018.6.170

Geng, S., Niu, B., Feng, Y. Y., & Huang, M. J. (2020). Understanding the focal points and sentiment of learners in MOOC reviews: A machine learning and SC-LIWC-based approach. British Journal of Educational Technology, 51(5), 1785-1803. https://doi.org/10.1111/bjet.12999

Goodhue, D. L., Klein, B. D., & March, S. T. (2000). User evaluations of IS as surrogates for objective performance. Information & Management, 38(2), 87-101. https://doi.org/10.1016/S0378-7206(00)00057-4

Hone, K. S., & El Said, G. R. (2016). Exploring the factors affecting MOOC retention: A survey study. Computers & Education, 98, 157-168. https://doi.org/10.1016/j.compedu.2016.03.016

Hung, C. Y., Sun, J. C. Y., & Liu, J. Y. (2019). Effects of flipped classrooms integrated with MOOCs and game-based learning on the learning motivation and outcomes of students from different backgrounds. Interactive Learning Environments, 27(8), 1028-1046. https://doi.org/10.1080/10494820.2018.1481103

Jansen, R. S., van Leeuwen, A., Janssen, J., Kester, L., & Kalz, M. (2017). Validation of the self-regulated online learning questionnaire. Journal of Computing in Higher Education, 29(1), 6-27. https://doi.org/10.1007/s12528-016-9125-x

Jo, D. H. (2018). Exploring the determinants of MOOCs continuance intention. KSII Transactions on Internet and Information Systems, 12(8), 3992-4005. https://doi.org/10.3837/tiis.2018.08.024

Joo, Y. J., So, H. J., & Kim, N. H. (2018). Examination of relationships among students’ self-determination, technology acceptance, satisfaction, and continuance intention to use k-MOOCs. Computers & Education, 122, 260-272. https://doi.org/10.1016/j.compedu.2018.01.003

Jung, E., Kim, D., Yoon, M., Park, S. H., & Oakley, B. (2019). The influence of instructional design on learner control, sense of achievement, and perceived effectiveness in a supersize MOOC course. Computers & Education, 128, 377-388. https://doi.org/10.1016/j.compedu.2018.10.001

Jung, Y., & Lee, J. (2018). Learning engagement and persistence in massive open online courses (MOOCs). Computers & Education, 122, 9-22. https://doi.org/10.1016/j.compedu.2018.02.013

Kahan, T., Soffer, T., & Nachmias, R. (2017). Types of participant behavior in a massive open online course. International Review of Research in Open and Distributed Learning, 18(6). https://doi.org/10.19173/irrodl.v18i6.3087

Khan, I. U., Hameed, Z., Yu, Y., Islam, T., & Khan, S. U. (2018). Predicting the acceptance of MOOCs in a developing country: Application of task-technology fit model, social motivation, and self-determination theory. Telematics and Informatics, 35(4), 964-978. https://doi.org/10.1016/j.tele.2017.09.009

Kizilcec, R. F., Perez-Sanagustin, M., & Maldonado, J. J. (2017). Self-regulated learning strategies predict learner behavior and goal attainment in massive open online courses. Computers & Education, 104, 18-33. https://doi.org/10.1016/j.compedu.2016.10.001

Kormos, J., & Nijakowska, J. (2017). Inclusive practices in teaching students with dyslexia: Second language teachers’ concerns, attitudes and self-efficacy beliefs on a massive open online learning course. Teaching & Teacher Education, 68, 30-41. https://doi.org/10.1016/j.tate.2017.08.005

Kovanovic, V., Joksimovic, S., Poquet, O., Hennis, T., & Gasevic, D. (2017). Exploring communities of inquiry in massive open online courses. Computers & Education, 119, 44-58. https://doi.org/10.1016/j.compedu.2017.11.010

Lee, D., Watson, S. L., & Watson, W. R. (2020). The relationships between self-efficacy, task value, and self-regulated learning strategies in massive open online courses. International Review of Research in Open and Distributed Learning, 21(1), 23-39. https://doi.org/10.19173/irrodl.v20i5.4389

Li, B., Wang, X., & Tan, S. C. (2018). What makes MOOC users persist in completing MOOCs? A perspective from network externalities and human factors. Computers in Human Behavior, 85, 385-395. https://doi.org/10.1016/j.chb.2018.04.028

Lowenthal, P., Snelson, C., & Perkins, R. (2018). Teaching massive, open, online, courses (MOOCs): Tales from the front line. International Review of Research in Open and Distributed Learning, 19(3), 1-19. https://doi.org/10.19173/irrodl.v19i3.3505

Luik, P., Suviste, R., Lepp, M., Palts, T., Tonisson, E., Sade, M., & Papli, K. (2019). What motivates enrolment in programming MOOCs? British Journal of Educational Technology, 50(1), 153-165. https://doi.org/10.1111/bjet.12600

Maldonado-Mahauad, J., Perez-Sanagustin, M., Kizilcec, R. F., Morales, N., & Munoz-Gama, J. (2018). Mining theory-based patterns from big data: Identifying self-regulated learning strategies in massive open online courses. Computers in Human Behavior, 80, 179-196. https://doi.org/10.1016/j.chb.2017.11.011

Marta-Lazo, C., Frau-Meigs, D., & Osuna-Acedo, S. (2019). A collaborative digital pedagogy experience in the tMOOC “step by step.” Australasian Journal of Educational Technology, 35(5), 111-127. https://doi.org/10.14742/ajet.4215

Martin Nunez, J. L., Tovar Caro, E., & Hilera Gonzalez, J. R. (2017). From higher education to open education: Challenges in the transformation of an online traditional course. IEEE Transactions on Education, 60(2), 134-142. https://doi.org/10.1109/TE.2016.2607693

Martinez-Lopez, R., Yot, C., Tuovila, I., & Perera-Rodriguez, V. H. (2017). Online self-regulated learning questionnaire in a Russian MOOC. Computers in Human Behavior, 75, 966-974. https://doi.org/10.1016/j.chb.2017.06.015

Meinert, E., Alturkistani, A., Brindley, D., Carter, A., Wells, G., & Car, J. (2018). Protocol for a mixed-methods evaluation of a massive open online course on real world evidence. BMJ Open, 8(8), e025188. https://doi.org/10.1136/bmjopen-2018-025188

Montes-Rodriguez, R., Martinez-Rodriguez, J. B., & Ocana-Fernandez, A. (2019). Case study as a research method for analyzing MOOCs: Presence and characteristics of those case studies in the main scientific databases. International Review of Research in Open and Distributed Learning, 20(3), 59-79. https://doi.org/10.19173/irrodl.v20i4.4299

Peral, J., Maté, A., & Marco, M. (2017). Application of data mining techniques to identify relevant key performance indicators. Computer Standards & Interfaces, 50, 55-64. https://doi.org/10.1016/j.csi.2016.09.009

Pintrich, P. R., Smith, D. A. F., Duncan, T., & McKeachie, W. J. (1991). A manual for the use of the motivated strategies for learning questionnaire (MSLQ). University of Michigan.

Rasheed, R. A., Kamsin, A., Abdullah, N. A., Zakari, A., & Haruna, K. (2019). A systematic mapping study of the empirical MOOC literature. IEEE Access, 7, 124809-124827. https://doi.org/10.1109/ACCESS.2019.2938561

Robinson, R. (2016). Delivering a medical school elective with massive open online course (MOOC) technology. PeerJ, 4(1), e2343. https://doi.org/10.7717/peerj.2343

Ruiz-Palmero, J., Lopez-Alvarez, D., Sanchez-Rivas, E., & Sanchez-Rodriguez, J. (2019). An analysis of the profiles and the opinion of students enrolled on xMOOCs at the University of Malaga. Sustainability, 11(24). https://doi.org/10.3390/su11246910

Sablina, S., Kapliy, N., Trusevich, A., & Kostikova, S. (2018). How MOOC-takers estimate learning success: Retrospective reflection of perceived benefits. International Review of Research in Open and Distributed Learning, 19(5). https://doi.org/10.19173/irrodl.v19i5.3768

Sanchez-Gordon, S., & Lujan-Mora, S. (2017). Research challenges in accessible MOOCs: A systematic literature review 2008-2016. Universal Access in the Information Society, 17(4), 775-789. https://doi.org/10.1007/s10209-017-0531-2

Sari, A. R., Bonk, C. J., & Zhu, M. (2020). MOOC instructor designs and challenges: What can be learned from existing MOOCs in Indonesia and Malaysia? Asia Pacific Education Review, 21(1), 143-166. https://doi.org/10.1007/s12564-019-09618-9

Schraw, G., & Dennison, R. S. (1994). Assessing metacognitive awareness. Contemporary Educational Psychology, 19(4), 460-475. https://doi.org/10.1006/ceps.1994.1033

Seddon, P. (1997). A respecification and extension of the DeLone and McLean model of IS success. Information Systems Research, 8(3), 240-253. https://doi.org/10.1287/isre.8.3.240

Shahzad, F., Xiu, G., Khan, I., Shahbaz, M., & Abbas, A. (2020). The moderating role of intrinsic motivation in cloud computing adoption in online education in a developing country: A structural equation model. Asia Pacific Education Review, 21(1), 121-141. https://doi.org/10.1007/s12564-019-09611-2

Shao, Z. (2018). Examining the impact mechanism of social psychological motivations on individuals’ continuance intention of MOOCs. Internet Research, 28(1), 232-250. https://doi.org/10.1108/IntR-11-2016-0335

Shirky, C. (2013, July 8). MOOCs and economic reality. Chronicle of Higher Education, 59(42), B2.

Sneddon, J., Barlow, G., Bradley, S., Brink, A., Chandy, S. J., & Nathwani, D. (2018). Development and impact of a massive open online course (MOOC) for antimicrobial stewardship. Journal of Antimicrobial Chemotherapy, 73(4), 1091-1097. https://doi.org/10.1093/jac/dkx493

Soffer, T., & Cohen, A. (2015). Implementation of Tel Aviv University MOOCs in academic curriculum: A pilot study. International Review of Research in Open and Distributed Learning, 16(1), 80-97. https://doi.org/10.19173/irrodl.v16i1.2031

Sun, Y., Ni, L., Zhao, Y., Shen, X.-L., & Wang, N. (2019). Understanding students’ engagement in MOOCs: An integration of self-determination theory and theory of relationship quality. British Journal of Educational Technology, 50(6), 3156-3174. https://doi.org/10.1111/bjet.12724

Tao, D., Fu, P., Wang, Y., Zhang, T., & Qu, X. (2019). Key characteristics in designing massive open online courses (MOOCs) for user acceptance: An application of the extended technology acceptance model. Interactive Learning Environments, 44, 1-14. https://doi.org/10.1080/10494820.2019.1695214

Teo, T., & Dai, H. M. (2019). The role of time in the acceptance of MOOCs among Chinese university students. Interactive Learning Environments, 1-14. https://doi.org/10.1080/10494820.2019.1674889

Teresa Garcia-Alvarez, M., Novo-Corti, I., & Varela-Candamio, L. (2018). The effects of social networks on the assessment of virtual learning environments: A study for social sciences degrees. Telematics and Informatics, 35(4), 1005-1017. https://doi.org/10.1016/j.tele.2017.09.013

Tsai, Y. H., Lin, C. H., Hong, J. C., & Tai, K. H. (2018). The effects of metacognition on online learning interest and continuance to learn with MOOCs. Computers & Education, 121, 18-29. https://doi.org/10.1016/j.compedu.2018.02.011

Veletsianos, G., & Shepherdson, P. (2016). A systematic analysis and synthesis of the empirical MOOC literature published in 2013-2015. International Review of Research in Open and Distributed Learning, 17(2). https://doi.org/10.19173/irrodl.v17i2.2448

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425-478. https://doi.org/10.2307/30036540

Wang, Y., & Baker, R. (2018). Grit and intention: Why do learners complete MOOCs? International Review of Research in Open and Distributed Learning, 19(3), 20-42. https://doi.org/10.19173/irrodl.v19i3.3393

Warr, P., & Downing, J. (2000). Learning strategies, learning anxiety and knowledge acquisition. British Journal of Psychology, 91(3), 311-333. https://doi.org/10.1348/000712600161853

Watson, S. L., Watson, W. R., & Tay, L. (2018). The development and validation of the attitudinal learning inventory (ALI): A measure of attitudinal learning and instruction. Educational Technology Research and Development, 66(6), 1601-1617. https://doi.org/10.1007/s11423-018-9625-7

Watson, W. R., Kim, W., & Watson, S. L. (2016). Learning outcomes of a MOOC designed for attitudinal change: A case study of an animal behavior and welfare MOOC. Computers & Education, 96, 83-93. https://doi.org/10.1016/j.compedu.2016.01.013

Watted, A., & Barak, M. (2018). Motivating factors of MOOC completers: Comparing between university-affiliated students and general participants. The Internet and Higher Education, 37, 11-20. https://doi.org/10.1016/j.iheduc.2017.12.001

Wu, B., & Chen, X. (2017). Continuance intention to use MOOCs: Integrating the technology acceptance model (TAM) and task technology fit (TTF) model. Computers in Human Behavior, 67, 221-232. https://doi.org/10.1016/j.chb.2016.10.028

Yang, H. H., & Su, C. H. (2017). Learner behaviour in a MOOC practice-oriented course: An empirical study integrating TAM and TPB. International Review of Research in Open and Distributed Learning, 18(5), 35-63. https://doi.org/10.19173/irrodl.v18i5.2991

Yang, M., Shao, Z., Liu, Q., & Liu, C. (2017). Understanding the quality factors that influence the continuance intention of students toward participation in MOOCs. Educational Technology Research and Development, 65(5), 1195-1214. https://doi.org/10.1007/s11423-017-9513-6

Zhang, J. (2016). Can MOOCs be interesting to students? An experimental investigation from regulatory focus perspective. Computers & Education, 95, 340-351. https://doi.org/10.1016/j.compedu.2016.02.003

Zhang, M., Yin, S., Luo, M., & Yan, W. (2016). Learner control, user characteristics, platform difference, and their role in adoption intention for MOOC learning in China. Australasian Journal of Educational Technology, 33(1), 114-133. https://doi.org/10.14742/ajet.2722

Zhao, Y., Wang, A., & Sun, Y. (2020). Technological environment, virtual experience, and MOOC continuance: A stimulus-organism-response perspective. Computers & Education, 144, 103721. https://doi.org/10.1016/j.compedu.2019.103721

Zhou, J. (2017). Exploring the factors affecting learners’ continuance intention of MOOCs for online collaborative learning: An extended ECM perspective. Australasian Journal of Educational Technology, 33(5), 123-135. https://doi.org/10.14742/ajet.2914

Zhou, M. (2016). Chinese university students’ acceptance of MOOCs: A self-determination perspective. Computers & Education, 92-93, 194-203. https://doi.org/10.1016/j.compedu.2015.10.012

Zhu, M., Sari, A., & Lee, M. M. (2018). A systematic review of research methods and topics of the empirical MOOC literature (2014-2016). The Internet and Higher Education, 37. https://doi.org/10.1016/j.iheduc.2018.01.002

Table A1

12 Typical Articles About Dropout Rate or Continuance Intention to Use MOOCs

| Article | Questionnaire model and items design | Quantitative analysis | Sample size |
| --- | --- | --- | --- |
| Yang & Su, 2017 | (1) TPB: Perceived behavioral control, attitudes, subjective norms, behavioral intention, actual behavior; (2) TAM: Perceived ease of use (PEU), perceived usefulness (PU) | PLS-SEM; reliability analysis; validity analysis | 272 |
| Wu & Chen, 2017 | (1) TAM: PU, PEU, attitude toward using MOOCs, continuance intention to use (CIU); (2) TTF: Individual technology fit, task-technology fit; (3) Social motivations: Social recognition, social influence; (4) Features of MOOCs: Openness, reputation | PLS-SEM | 252 |
| Khan et al., 2018 | (1) TTF: Task characteristics, technology characteristics; (2) Social motivation: Social recognition, social influence; (3) SDT: Perceived relatedness, autonomy, competence | Multivariate assumptions; Kolmogorov-Smirnov test | 414 |
| Zhu et al., 2018 | (1) TPB: Attitudes, perceived behavioral control, subjective norms, behavioral intention; (2) SDT: Controlled motivation, autonomous motivation | CFA; MLE-SEM | 475 |
| Yang et al., 2017 | (1) IS: System quality, course quality, service quality; (2) TAM: PU, PEU, CIU | PLS-SEM | 294 |
| Botero et al., 2018 | UTAUT: Performance expectancy, effort expectancy, social influence, facilitating conditions, behavioral intention, attitudes towards behavior | SEM; descriptive statistics | 587 |
| Zhang et al., 2016 | (1) TAM: PU, PEU, CIU; (2) Perceived learner control, personal innovativeness in information technology, e-learning self-efficacy | PLS-SEM; validity analysis: AVE; Cronbach’s alpha | 214 |
| Jung & Lee, 2018 | (1) TAM: PU, PEU, academic self-efficacy; (2) Teaching presence: Instructional design and organization; (3) Learning engagement: Behavioral engagement, emotional engagement, cognitive engagement, learning persistence | SEM; reliability analysis: CR; validity analysis: CFA, AVE | 306 |
| Wang & Baker, 2018 | (1) Motivation: Goal orientation, self-efficacy, grit, need for cognition; (2) Three subscales of the Patterns of Adaptive Learning Survey: Academic efficacy, mastery-goal orientation, performance-goal orientation | t-tests; false discovery rate; Bonferroni correction | 10,348 |
| Luik et al., 2019 | (1) Social influence; (2) Expectations on suitability: Personal suitability of distance learning, suitability for family and work; (3) Interest and expectations on course, importance and perceived ability, usefulness related to certification, usefulness related to own children | EFA; CFA; Kaiser-Meyer-Olkin measure; correlation: Bartlett’s test | 1,229 |
| Hone & El Said, 2016 | (1) Instructor effects: Instructor-learner interaction, instructor support, instructor feedback; (2) Co-learner effects: Learner-learner interaction; (3) Design and implementation effects: Course content, course structure, information delivery technology, perceived effectiveness | PLS-SEM; descriptive analysis; chi-square analysis; EFA | 379 |
| Li et al., 2018 | (1) Network externalities: Network size, perceived complementarity, network benefit; (2) User preference, user experience, motivation to achieve, persistence in completing MOOCs | PLS-SEM; reliability and validity analysis; Harman’s single-factor test | 346 |

Table A2

10 Typical Articles About Learners’ Performance in MOOCs

| Factor | Article | Questionnaire model and items design | Methodology | Sample size |
| --- | --- | --- | --- | --- |
| SRL | Lee et al., 2020 | OSLQ: Self-regulated learning strategies; MSLQ: Self-efficacy, task value | Multiple regression; Pearson’s correlation analysis | 184 |
|  | Martinez-Lopez et al., 2017 | OSLQ, MAI, and LS: Goal setting, environment structuring, task strategies, time management, help-seeking, self-evaluation | Modified kappa coefficient; content validity index (CVI); SEM | 45 |
|  | Jansen et al., 2017 | MSLQ, OSLQ, MAI, and LS: (1) Preparatory phase: Task definition, goal setting, strategic planning; (2) Performance phase: Environmental structuring, time management, help-seeking, comprehension monitoring, task strategies, motivation control, effort regulation; (3) Appraisal phase: Strategy regulation | EFA; CFA; descriptive statistical analyses | 162 |
|  | Kizilcec et al., 2017 | MSLQ, OSLQ, MAI, and LS: Goal setting strategies, strategic planning, elaboration, help-seeking | Descriptive statistics; Spearman correlation coefficients; fitted logistic regression | 4,831 |
| Motivation and learning strategy | Carlos et al., 2017 | Motivation: (1) Value component: Intrinsic goal orientation, task value; (2) Expectancy component: Self-efficacy for learning and performance. LS: (1) Cognitive and metacognitive strategies: Critical thinking; (2) Resource management strategies: Time, study environment | Descriptive statistics | 6,335 |
|  | de Barba et al., 2016 | (1) Motivation: Individual interest, mastery-approach goals, value beliefs; (2) Situational interest: Entering situational interest, maintaining situational interest | Cronbach’s alpha; descriptive statistics; MLE-SEM; bootstrap; chi-square | 862 |
|  | Fryer & Bovee, 2018 | (1) Prior competence, prior computer use, teacher support, smartphone use; (2) Ability beliefs, effort beliefs, task value | CFI (comparative fit index); RMSEA (root mean square error of approximation); MANOVA; latent profile analysis (LPA) | 642 |
|  | Watted & Barak, 2018 | (1) Career benefits: Certificate; (2) Personal benefits: Improving knowledge; (3) Educational benefits: Research and professional advancement | Kruskal-Wallis analysis | 377 |
| Attitudinal learning | Ding & Zhao, 2020 | (1) Emotion, self-perceived achievement; (2) Video engagement, assignment engagement | Reliability analysis: Cronbach’s alpha | 378 |
|  | Watson et al., 2018 | Cognitive learning, affective learning, behavioral learning, social learning | Descriptive statistics; CFA | 1,009 |

Table A3

10 Typical Articles About Assessment of MOOCs

| Respondent | Article | Questionnaire | Methodology | Sample size |
| --- | --- | --- | --- | --- |
| Learners | Martin Nunez et al., 2017 | Available resources, course forums, adequacy of evaluations | Statistical analysis; MANOVA | 112 |
|  | Jung et al., 2019 | (1) Course content, course structure, assessment method, learner-content interaction; (2) Learner control, sense of progress, perceived effectiveness | Hierarchical linear regression | 1,364 |
|  | Robinson, 2016 | Teaching effectiveness, course objectives, overall rating, personal learning objectives, recommendation to others | t-tests; Cronbach’s α; post-hoc power analysis | 21 |
|  | Donitsa-Schmidt & Topaz, 2018 | (1) Flexibility and convenience; (2) Learning opportunity, professional development | Descriptive analysis | 84 |
|  | Meinert et al., 2018 | Motivation, learning methods, course content | Kruskal-Wallis analysis; logistic regression; descriptive analysis | 16 |
| Teachers | Gan, 2018 | Teaching quality control security system: Teaching team, teaching content, teaching skills, teaching resources, course arrangement, policy support | Descriptive analysis | 20 |
|  | Sari et al., 2020 | (1) Course design: Preparation, attraction, participation, assessment, feedback; (2) Teaching challenges | Descriptive analysis | 65 |
|  | Lowenthal et al., 2018 | (1) Teaching motivation: Interest and passion, publicity and marketing, benefits and incentives; (2) Teaching experience; (3) Perception of MOOC educational value | Descriptive analysis | 186 |
|  | Kormos & Nijakowska, 2017 | Participants’ attitudes, self-confidence, concerns about teaching practices, self-efficacy beliefs | Principal component analysis; regression factor scores; MANOVA; GLM | 752 |
|  | Sneddon et al., 2018 | Course development, course evaluation, course delivery | Descriptive analysis | 219 |

A Systematic Review of Questionnaire-Based Quantitative Research on MOOCs by Mingxiao Lu, Tianyi Cui, Zhenyu Huang, Hong Zhao, Tao Li, and Kai Wang is licensed under a Creative Commons Attribution 4.0 International License.