Volume 22, Number 3

Florence Martin1, Ting Sun1, Murat Turk2, and Albert D. Ritzhaupt3

1University of North Carolina at Charlotte, 2University of Oklahoma, 3University of Florida

Synchronous online learning (SOL) provides an opportunity for instructors to connect in real-time with their students though separated by geographical distance. This meta-analysis examines the overall effect of SOL on cognitive and affective educational outcomes, while using asynchronous online learning or face-to-face learning as control groups. The effects are also examined for several moderating methodological, pedagogical, and demographical factors. Following a systematic identification and screening procedure, we identified 19 publications with 27 independent effect sizes published between 2000 and 2019. Overall, there was a statistically significant small effect in favor of synchronous online learning versus asynchronous online learning for cognitive outcomes. However, the other models were not statistically significant in this meta-analysis. The effect size data were normally distributed and significantly moderated by course duration, instructional method, student equivalence, learner level, and discipline. Implications for educational practice and research are included.

Keywords: synchronous, online learning, meta-analysis, face-to-face, asynchronous, affective, cognitive, outcome

With the increase in the number of online courses (Seaman et al., 2018), research on online learning has grown (Martin et al., 2020). Primary research has made way for systematic reviews and meta-analyses conducted on various online learning models. While there are several meta-analyses of online learning, most focus on asynchronous online learning. There is still a need for a meta-analysis to examine the effects of synchronous online learning (SOL).

SOL occurs when students and the instructor are together in “real time” but not at the “same place.” SOL is a specific type of online learning gaining importance due to the convenience it offers to both students and instructors while enhancing interactivity. Instructors and students are realizing the necessity of immediate interaction in their online experience, which is often referred to as “same time, some place learning.” Adding synchronous components to online courses can enrich meaningful interaction between student-instructor and student-student (Martin et al., 2012). As shown in Figure 1, SOL is considered a subset of online learning, and online learning a subset of distance education.

Figure 1

Synchronous Online Learning Conceptual Diagram

Synchronous online environments allow students and instructors to communicate using audio, video, text chat, interactive whiteboard, application sharing, instant polling, etc. as if face-to-face in a classroom. Participants can talk, view each other through a webcam, use emoticons, and work together in breakout rooms. Zoom, Blackboard Collaborate, Elluminate, Adobe Connect, and Webex are some of the synchronous online technologies prevalent in higher education. Synchronous technologies can be incorporated into online courses for community-building or social learning and are better suited to discussing less complex issues, getting acquainted, or planning tasks (Hrastinski, 2008). Synchronous online technologies are less flexible in terms of time, but can be accessed from anywhere. They render immediate feedback and allow multi-modality communication (Martin & Parker, 2014).

Fostering and sustaining different types of interactions among participants is particularly important in online learning environments since interaction plays a key role in influencing, if not determining, the quality and success of online education (Zimmerman, 2012). Given that online learners are much more likely both to feel isolated and alienated and to decide to drop out due to physical distance from peers and instructors, keeping online students interacting and engaging with others is also a significant factor in retention, which is known to be still lower in online education than in traditional face-to-face classrooms in higher education (Boston et al., 2010). In SOL, interaction is usually achieved through audio and/or video conferencing sessions, with synchronous chat features where each participant has a chance to receive and respond to messages or inputs in real-time, whereas asynchronous interaction is usually fostered and maintained via discussion boards where participants have time to reflect on the course content and their peers’ ideas since they are not supposed to work at the same time and there is no pressure to respond immediately (Banna et al., 2015; Bernard et al., 2009; Giesbers et al., 2014; Revere & Kovach, 2011). Interactions in the synchronous mode of online communication are usually found to be more useful and effective in fostering social-emotional relations, sense of community and belongingness, learner engagement, and immediate feedback and information exchanges among participants (Chou, 2002; Giesbers et al., 2014; Mabrito, 2006), and these interactions take place learner to learner, learner to instructor, and learner to content (Moore, 1993).

A number of empirical studies have compared SOL with the asynchronous online and face-to-face modes of learning, and a variety of significant findings have been reported in terms of specific learning outcomes, such as online interactions, sense of cooperation, sense of belonging, student emotions, cognitive presence, and critical and reflective thinking skills. We review these findings in the following sections.

Online learner interaction is one of those variables or outcomes empirically investigated when comparing synchronous and asynchronous learning environments. For example, using a content analysis method, Chou (2002) examined and compared online learners’ interaction transcripts from synchronous and asynchronous discussions. In synchronous discussions, learners engaged in more social-emotional exchanges, using more two-way communication, whereas the interactions in asynchronous modes of learning were much more focused on the learning tasks, using primarily one-way communication with less interactive exchanges (Chou, 2002). Using a case study research design, Mabrito (2006) similarly explored differences in the patterns and nature of learner interactions between synchronous and asynchronous modes of communication by analyzing online learners’ transcripts of discussions.

More recently, Peterson et al. (2018) found that asynchronous online cooperation yielded less sense of belonging and more negative emotions among learners, while the synchronous mode of communication positively influenced student sense of belonging, emotions, and cooperation in online groups. In a similar study, Molnar and Kearney (2017), as a result of their analysis of asynchronous and synchronous modes of online discussion, concluded that although both modes contributed to students’ cognitive presence, students participating in synchronous Web discussions engaged in more cognitive presence than their peers in the asynchronous discussions. These studies clearly indicate that the synchronous mode of online communication can also positively influence cognitive processes and skills of online learners.

Several studies have also empirically compared SOL with traditional face-to-face learning in terms of outcomes. Kunin et al. (2014) compared postgraduate dental residents’ perceptions regarding the perceived effectiveness of synchronous and asynchronous modes of online learning to traditional face-to-face learning and found that participants perceived the face-to-face mode as being most conducive to their ability to learn, while also favoring the asynchronous over the synchronous mode after experiencing both. On the other hand, Garratt (2014) investigated whether a synchronous mode of instruction could be used effectively to teach a set of psychomotor skills to a cohort of paramedic students in comparison to face-to-face instruction of the same skills. Garratt (2014) found no significant difference in the skills performance results of the two groups, indicating that the synchronous mode of learning could be as effective as traditional face-to-face instruction to teach even complex psychomotor skills, although it should be noted that the very limited sample size was a serious limitation to the study. Haney et al. (2012) used synchronous and face-to-face modes of instruction to teach wound closure skills to two groups of paramedics. On tests of both knowledge and skills, the students who received the same instruction through videoconferencing performed at least as well as those who received traditional face-to-face instruction, while traditional face-to-face instruction was still perceived to be the more effective method of teaching (Haney et al., 2012).

In support of the equal or almost equal effectiveness of the synchronous mode of online learning in comparison to face-to-face learning, Siler and Vanlehn (2009) found that synchronous one-to-one tutoring worked at least as effectively as face-to-face tutoring in terms of students’ gains in learning physics and several motivational outcomes, although the face-to-face tutoring was found to be more time-efficient and conducive to emotional exchanges, while also allowing more interaction. More recently, Francescucci and Rohani (2019) compared synchronous and face-to-face learning in terms of exam grades and perceived student engagement and found that students who received the synchronous online version of an introductory marketing course academically performed as successfully as their peers who took the face-to-face version of the same course. These studies cumulatively indicate that although the traditional face-to-face mode of learning is, as expected, perceived to be a more effective method of learning and instruction overall, the synchronous or asynchronous mode of online learning has the potential to help achieve desirable outcomes as effectively and successfully as conventional modes of learning and instruction.

Reviews of research have been conducted on distance education and exclusively on online learning. There have been a number of meta-analyses on distance education, specifically comparing face-to-face to online learning (Allen, 2004; Cook et al., 2008; Jahng et al., 2007; Shachar & Neumann, 2010; Todd et al., 2017; Zhao et al., 2005). However, we did not find a meta-analysis specifically examining SOL, comparing it to asynchronous online learning or to face-to-face learning, though we found a few studies examining SOL as a moderator variable (Bernard et al., 2004; Means et al., 2013; Williams, 2006). In the Bernard et al. (2004) review that examined 232 studies, synchronous and asynchronous were examined as a moderator variable. They found asynchronous distance education to have a small significant positive effect (g+ = 0.05) on student achievement, and synchronous distance education to have a small significant negative effect (g+ = -0.10). However, in this case, the studies were focused on all aspects of distance education and not specifically on online learning. Means et al. (2013) examined synchronicity as a moderator variable and found that it was not a significant moderator of online learning effectiveness. Williams (2006) examined 25 studies in allied health sciences and found that synchronous learning had a 0.24 average weighted effect size while asynchronous learning had a negative effect size of -0.06. There have been mixed findings when examining synchronous learning as a moderator. Also, when referring to synchronous learning, these studies did not specifically focus on SOL but on all synchronous distance education.

There is one systematic review on SOL in which Martin et al. (2017) reviewed 157 articles published from 1995 to 2014. The majority of the studies were conducted in the United States and in higher education. English/Foreign Language and Education were the top two content areas. Qualitative research methods were used in 57% of the studies and perception/attitude were examined in 61%. While questionnaires were used in 61% of the studies reviewed, 50% of the studies also used session transcripts to collect data. While this study provides a descriptive analysis of data on studies using SOL, it does not compare SOL to other delivery methods.

While there are a few meta-analyses focusing on the broader comparison of online learning versus face-to-face or blended learning, there is a gap in the research comparing SOL with either face-to-face or asynchronous online learning. There is only one systematic review conducted on SOL (Martin et al., 2017) and a few moderator analyses on synchronous distance education (Bernard et al., 2004; Means et al., 2013; Williams, 2006). However, there is no meta-analysis focusing on SOL, though it is a critical aspect of online learning.

A meta-analysis can advance the field of SOL by providing information to contextualize what we know about online learning and technology and how it is applied (Oliver, 2014). Systematic reviews help develop a common understanding among researchers about the state of their field and improve future research to close gaps and eliminate inconsistencies. We hope to provide a quantitative synthesis of research literature on SOL from 2000 to 2019 and examine SOL’s effectiveness in achieving educational outcomes.

This study followed the meta-analysis process as described by Wilson (2014). There were five steps:

Researchers have used different terminologies to describe SOL. It is referred to as synchronous virtual classrooms (Martin & Parker, 2014), Web conferencing, e-conferencing, or virtual conferencing (Rockinson-Szapkiw & Walker, 2009), and also commonly known as a webinar. In this study, we used seven terms to identify research on SOL. The search keywords were “Synchronous and Online Learning”, “Web conferenc*”, “Virtual Classroom”, “Synchronous and Elearning”, “Econferenc*”, “Virtual conferenc*”, and “Webinar”.

To ensure we identified relevant literature, we did a broad search of journal articles and doctoral dissertations published between 2000 and 2019. We chose the year 2000 as the starting point as this is when synchronous online tools became popular in online courses. An electronic search was conducted in six databases, which included Academic Search Complete, Communication & Mass Media Complete, Education Research Complete, ERIC, Library, Information Science & Technology Abstracts with Full Text, and PsycINFO.

To determine which articles to include in our study, we used the definition from Martin et al. (2017) which states that in SOL there is: (a) a permanent separation (of place) of the learner and instructor during planned learning events where (b) instruction occurred in real-time such that (c) students were able to communicate with other students and the instructor through text, audio, and/or video-based communication of two-way media. We then arrived at several criteria for inclusion/exclusion which are shown in Table 1.

Table 1

Inclusion/Exclusion Criteria

| Criteria | Inclusion | Exclusion |

| Technology | Any use of synchronous online technology | Other technology that is not synchronous online |

| Publication date | 2000 to 2019 | Prior to 2000 and after 2019 |

| Publication type | Scholarly articles of original research from peer reviewed journals and dissertations | Book chapters, technical reports, or proceedings |

| Language | Publication was written in English | Languages other than English |

| Research design | Experimental or quasi-experimental between-subjects design comparing synchronous online with asynchronous online or with face-to-face learning | Non-experimental designs or within-subject designs |

| Results of research | Adequate data for calculating effect sizes | Not enough statistics provided |

| Educational outcomes | Clear educational outcomes (cognitive and affective) | No clear educational outcomes |

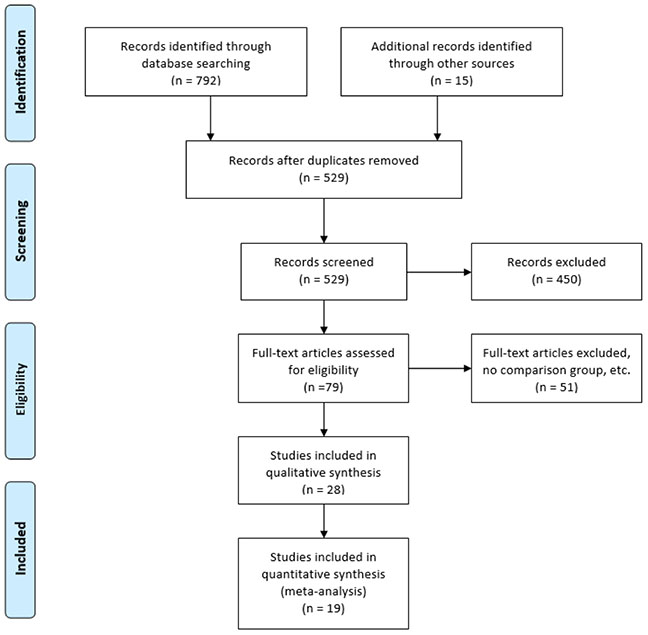

We used the PRISMA flow model (Figure 2) to guide the process of identification, screening, eligibility, and inclusion of studies. The PRISMA guidelines were proposed by the Ottawa Methods Centre for reporting items for systematic reviews and meta-analyses (Moher et al., 2009). Our initial search identified n = 807 manuscripts, which was reduced to n = 529 after removing duplicate entries. To ensure consistent screening procedures, we hosted a discussion session with two team members and screened a random sample of five manuscripts for calibration purposes. After screening the titles and abstracts, full-text screening was conducted in two rounds with n = 28 manuscripts. After systematically applying our inclusion and exclusion criteria, n = 19 manuscripts qualified for final inclusion in the study. They were subjected to our coding and data extraction procedures.

Figure 2

PRISMA Flowchart for SOL Review

The research team developed and used a Google form to code the variables described in Table 2. The form was divided into six sections: (a) study identification, (b) outcome features, (c) methodological features, (d) pedagogical features, (e) synchronous technology features, and (f) demographics. The initial coding was performed by two team members who met frequently with other team members to discuss coding-related questions. The two team members initially coded the same five articles, with an inter-rater agreement of 88.46%.

Table 2

Description of the Coded Elements for Each Research Study

| Element | Description |

| Article information | Full reference including author(s), year of publication, article title, journal name, and type of publication (journal article, dissertation, or other). |

| Outcome features | Coded as cognitive, affective, and behavioral. Cognitive outcomes included measures such as learning, achievement, critical thinking skills, comprehension, and similar outcomes. Affective outcomes included learner satisfaction, emotions, attitudes, motivation, and related measures. Behavioral outcomes focused on interactions. |

| Outcome measures | Outcome measures were coded for each type of outcome variable. Options on the cognitive outcome measures included standardized test, researcher-made test, teacher-made test, teacher/researcher-made test, and unknown. |

| Control conditions and type | The number of control conditions was coded. This included one control with one synch, one control with more than one synch, one synch and more than one control, and more than one synch and more than one control. The control type was also coded as either asynchronous or face-to-face. |

| Course duration and synchronous session duration | The different options for course duration included: less than 15 weeks, 15 weeks, more than 15 weeks, and unknown. Synchronous session duration included: less than 30 minutes, 30 minutes to 2 hours, more than 2 hours, and unknown. |

| Instructor and student equivalence | Instructor equivalence was coded as same instructor, different instructor, and unknown, while student equivalence was coded as random assignment, non-random assignment with statistical control, non-random assignment without statistical control, and unknown. |

| Time and material equivalence | Time equivalence was coded as yes, no and unknown, and material equivalence was coded as same curriculum materials, different curriculum materials, and unknown. |

| Interaction features | Learner-learner, learner-instructor, and learner-content interactions were coded as opportunity to interact, no opportunity to interact, and unknown. |

| Instructional teaching method | This was coded as lecture, interactive lesson, unknown, and other. |

| Synchronous technology | The synchronous technology type and the synchronous features used were coded. |

| Demographics | Types of synchronous learners (K-12, undergraduate, graduate, military, industry/business, professionals), discipline, gender and age of participants, and country were coded. |

| Effect sizes | Statistical information (M, SD, n) to calculate effect sizes. |

Cognitive and affective educational outcomes were the dependent variables used in this study. Cognitive outcomes include measures such as learning, achievement, critical thinking skills, comprehension, and similar outcomes. The affective outcomes included learner satisfaction, emotions, attitudes, motivation, and related measures. Though it was our intention to also code for behavioral educational outcomes, only two studies reported on behavioral outcome and hence this was not part of this meta-analysis.

Several variables important in SOL were coded and examined as moderators. Though we coded for a number of variables, there was not sufficient information to examine all as moderators. Thus, only seven were chosen: two pedagogical (course duration and type of instructional method), one methodological (student equivalence), three demographic (learner level, discipline, country), and one publication type variable (publication source).

Moderators included: (a) course duration (i.e., less than one semester or one semester and more); (b) type of instructional method (i.e., lecture or interactive lesson); (c) student equivalence (i.e., non-random assignment or random assignment); (d) learner level (i.e., undergraduate or graduate/professional); (e) discipline (i.e., education or others); (f) country (i.e., United States of America or others); and (g) publication source (i.e., journal article or dissertation).

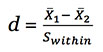

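Data were analyzed using Comprehensive Meta-Analysis, version 3 (CMA 3.0; Borenstein et al., 2014). The effect size used in the current meta-analysis was Hedges’ g. First, the standardized mean difference (Cohen’s d) was calculated by dividing the raw mean difference between the synchronous treatment condition and the control condition (asynchronous or face-to-face) by the pooled standard deviation of the two conditions, using the following formulas. Notation was borrowed from Borenstein et al. (2009); subscript 1 denotes the synchronous condition and subscript 2 the control condition, with sample means $\bar{X}$, standard deviations $S$, and sample sizes $n$.

$$d = \frac{\bar{X}_1 - \bar{X}_2}{S_{within}} \tag{1}$$

$$S_{within} = \sqrt{\frac{(n_1 - 1)S_1^2 + (n_2 - 1)S_2^2}{n_1 + n_2 - 2}} \tag{2}$$

Then d was transformed into Hedges’ g for bias correction using the following formula (Borenstein et al., 2009), where $df = n_1 + n_2 - 2$.

$$g = J \times d, \qquad J = 1 - \frac{3}{4\,df - 1} \tag{3}$$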
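The effect size calculation described above can be illustrated with a minimal Python sketch. It assumes the standard two-group formulas from Borenstein et al. (2009) for the pooled standard deviation, Cohen’s d, and the small-sample correction factor J. The function name `hedges_g` and the input values are hypothetical and are not taken from any primary study.

```python
import math

def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Return (d, g, var_g) for two independent groups.

    Group 1 is the synchronous condition, group 2 the control.
    """
    df = n1 + n2 - 2
    # Pooled within-group standard deviation
    s_within = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    # Cohen's d: raw mean difference divided by the pooled SD
    d = (m1 - m2) / s_within
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    # Small-sample bias correction factor J
    j = 1 - 3 / (4 * df - 1)
    g = j * d
    var_g = j**2 * var_d
    return d, g, var_g

# Hypothetical summary statistics, for illustration only
d, g, var_g = hedges_g(m1=82.0, sd1=10.0, n1=30, m2=78.0, sd2=10.0, n2=30)
print(f"d = {d:.3f}, g = {g:.3f}, SE(g) = {math.sqrt(var_g):.3f}")
```

Because J is always slightly below 1, g is always slightly smaller in magnitude than d, and the difference shrinks as the sample sizes grow.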

The primary studies reported three types of effect size statistics. Most studies reported means, standard deviations, and sample sizes for the synchronous treatment condition and the control condition (i.e., asynchronous or face-to-face). One study reported the raw mean difference and its significance (i.e., Cleveland-Innes & Ally, 2004) and one study reported Cohen’s d (i.e., Francescucci & Rohani, 2019). The original data had 86 cases of effect size statistics in the 19 primary studies. Before conducting the meta-analysis, we had to deal with statistics that may have yielded dependent effect sizes within studies. For example, Peterson et al. (2018) reported multiple effect size statistics calculated from different affective measures. Ignoring the dependence would threaten the validity of the meta-analytic results because it may result in a spuriously smaller standard error of the summary effect size and a higher risk of committing a Type I error (Ahn et al., 2012). The literature suggests procedures for handling dependence such as averaging or weighted averaging (Borenstein et al., 2009) or robust variance estimation (RVE; Hedges et al., 2010). Although RVE performs better than the averaging procedure in estimating unbiased standard errors (Moeyaert et al., 2017), it requires a large sample (i.e., number of primary studies) for accuracy (Tanner-Smith & Tipton, 2014). Therefore, we used the weighted averaging procedure to deal with the dependence issue. This resulted in 27 effect sizes in the 19 primary studies after we averaged effect size statistics of the same measure type (i.e., affective or cognitive) for each control group (i.e., asynchronous or face-to-face) within studies.
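One way to picture the within-study averaging step is the composite-effect approach described by Borenstein et al. (2009). The sketch below is an illustration under stated assumptions rather than the exact procedure used here: it takes the simple mean of a study’s dependent effect sizes and computes the variance of that mean under an assumed common correlation `r` between outcomes. The value of `r`, the function name, and the inputs are all hypothetical.

```python
import math
from itertools import combinations

def composite_effect(effects, variances, r=1.0):
    """Average dependent effect sizes from one study.

    r is an ASSUMED correlation between outcomes; r = 1.0 is the
    conservative choice (largest variance for the composite).
    """
    m = len(effects)
    mean = sum(effects) / m
    # Covariance terms: 2 * r * sqrt(V_i * V_j) for every pair i < j
    cov_sum = sum(2 * r * math.sqrt(vi * vj)
                  for vi, vj in combinations(variances, 2))
    var = (sum(variances) + cov_sum) / m**2
    return mean, var

# Two hypothetical affective outcomes from a single study
mean_g, var_g = composite_effect([0.2, 0.4], [0.04, 0.04], r=1.0)
print(f"composite g = {mean_g:.2f}, variance = {var_g:.3f}")
```

Treating the outcomes as independent (r = 0) would halve the variance in this example, which is the spuriously small standard error that the averaging step is meant to avoid.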

We employed a random-effects model for several reasons. First, the fixed-effect model assumes that all studies share one common effect size in the population (Borenstein et al., 2009), which permits only conditional inferences about the studies included in a meta-analysis (Field, 2001). Second, we hypothesized that the true effects were heterogeneous and that the proposed moderators may explain the heterogeneity. Therefore, employing the random-effects model and assuming that the true effect sizes vary across studies was more appropriate and plausible. There were four conditions in the current meta-analysis: (a) synchronous versus asynchronous with cognitive outcomes, (b) synchronous versus asynchronous with affective outcomes, (c) synchronous versus face-to-face with cognitive outcomes, and (d) synchronous versus face-to-face with affective outcomes.

First, we estimated the overall effect size for each condition. The overall average effect size, standard error, confidence intervals, Z and its related p-value, and heterogeneity statistics (Q and its p-value, T², and I²) were computed. The overall average effect size provides an estimate of the effects of SOL on educational outcomes. Its standard error and confidence intervals provide evidence of the estimation accuracy. The Z and its p-value show whether the effect size estimate is statistically significant. Heterogeneity statistics provide evidence of the variation of the true effect sizes across studies. We also conducted moderator analyses on the four conditions to determine if the heterogeneity (if any) in effect sizes could be accounted for by pedagogical, methodological, demographical, and publication variables. All the moderators are categorical variables, and analyses were conducted with the mixed-effects analysis (MEA) as implemented in CMA 3.0.
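The quantities listed above can be illustrated with a short Python sketch of a random-effects summary. It assumes the DerSimonian-Laird (method-of-moments) estimator of the between-study variance, which is one common choice and may differ in detail from the CMA 3.0 implementation. The function name and the input values are hypothetical.

```python
import math

def random_effects(effects, variances):
    """Random-effects summary using the DerSimonian-Laird estimator (assumed)."""
    k = len(effects)
    w = [1 / v for v in variances]                     # fixed-effect weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)                      # between-study variance T^2
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    w_star = [1 / (v + tau2) for v in variances]       # random-effects weights
    g = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    z = g / se
    p = math.erfc(abs(z) / math.sqrt(2))               # two-tailed p-value
    return {"g": g, "se": se, "ci": (g - 1.96 * se, g + 1.96 * se),
            "z": z, "p": p, "q": q, "df": df, "tau2": tau2, "i2": i2}

# Three hypothetical studies with equal sampling variances
res = random_effects([0.1, 0.5, 0.9], [0.04, 0.04, 0.04])
print({name: (round(val, 3) if isinstance(val, float) else val)
       for name, val in res.items()})
```

When Q does not exceed its degrees of freedom, T² is truncated at zero and the random-effects weights reduce to the fixed-effect weights.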

Finally, it was important to address publication bias, which occurs when the published research is not representative of the population of work in the domain. In this meta-analysis, both journal articles and dissertations were included, which means some grey literature was accounted for, but there was still a risk of publication bias. Several strategies were used to assess publication bias. Funnel plots showing the relationship between the standard errors of the included studies and their effect sizes (Borenstein, 2009) illustrate the spread of the studies. In addition, the classic fail-safe N (Rosenthal, 1979), which represents the number of missing studies needed to bring the p-value to a non-significant level, was included. Finally, we used Orwin’s fail-safe N (Orwin, 1983), which computes the number of missing studies needed to bring the summary effect below a specified value other than zero.
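The two fail-safe N statistics can be sketched in a few lines of Python. The sketch assumes the textbook forms of the formulas: Rosenthal’s N based on the sum of study Z-values with a one-tailed critical value of 1.645, and Orwin’s N based on the mean effect, a criterion effect, and the assumed mean effect of the missing studies. The critical value, the criterion of 0.10, and the function names are illustrative assumptions, not values reported in this study.

```python
def rosenthal_failsafe_n(z_values, z_crit=1.645):
    """Number of null studies needed to make the combined Z non-significant."""
    k = len(z_values)
    return sum(z_values) ** 2 / z_crit**2 - k

def orwin_failsafe_n(k, mean_effect, criterion, missing_mean=0.0):
    """Number of missing studies needed to pull the mean effect down to `criterion`."""
    return k * (mean_effect - criterion) / (criterion - missing_mean)

# Illustration: 7 studies with a mean effect of 0.367 and an ASSUMED
# "trivial" criterion of 0.10, with missing studies averaging zero.
n_orwin = orwin_failsafe_n(k=7, mean_effect=0.367, criterion=0.10)
print(round(n_orwin, 2))
```

A larger fail-safe N indicates that more unpublished null results would be needed to overturn the finding, so the summary effect is less sensitive to publication bias.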

Table 3 shows the publication details of the 19 journal articles and dissertations included in this study. The studies were published in a wide array of journals in several different disciplines, and dissertations were completed at institutions of higher education across the United States. Among the studies, three were published in each of 2008, 2010, and 2014, while there were two in each of 2012, 2015, 2016, and 2018, and one study in each of 2004 and 2006.

Table 3

Journal Articles and Dissertation Details

| Journal articles (n = 12) | Dissertations (n = 7) |

| Australian Journal of Teacher Education; Journal on Excellence in College Teaching; Implementation Science; Canadian Journal of University Continuing Education; Journal of Marketing Education; Online Learning Journal; The Modern Language Journal; International Journal of Distance Education Technologies; Journal of Applied Business and Economics; The Online Journal of Distance Education and e-Learning; American Journal of Pharmaceutical Education; Decision Sciences Journal of Innovative Education | Northcentral University; The Ohio State University; Capella University (3); The Texas Woman’s University; Texas A&M University |

Descriptive information about the 19 primary studies is presented in Table 4. The final sample consisted of k = 27 independent effect sizes (across the four models) and n = 4,409 participants. A total of n = 1,114 students received SOL and the number of students who received asynchronous online learning and face-to-face learning were n = 1,079 and n = 2,216, respectively. Approximately half the studies were conducted with undergraduate students (n = 10, 52.6%), and the rest were conducted with graduates or professionals (n = 9, 47.4%). With respect to disciplines, the most frequently studied was education (n = 5, 26.3%), followed by business (n = 4, 21.1%) and medicine or nursing (n = 3, 15.8%). A majority of the studies were conducted in the United States (78.9%), and four others were conducted in Australia (i.e., Dyment & Downing, 2018), Canada (i.e., Cleveland-Innes & Ally, 2004), Japan (i.e., Shintani & Aubrey, 2016), and China (Taiwan) (i.e., Chen & Shaw, 2006). There were 12 journal articles (63.2%) and seven dissertations (36.8%).

Table 4

Descriptive Data for the Primary Studies

| Authors | Publication source | Outcome | Control type | Course duration (relative to 15 weeks) | Student equivalence | Type of instructional method | Learner level | Discipline | Country/Region |

| Buxton (2014) | Journal | Affective | Asynch | Less | Non-random | Lecture | Professional | Histology | USA |

| Chen & Shaw (2006) | Journal | Cognitive/Affective | Asynch/F2F | Less | Random | Lecture | Undergraduate | Computer science | Taiwan |

| Cleveland-Innes & Ally (2004) | Journal | Affective | Asynch | Unknown | Random | Interactive lesson | Professional | Business | Canada |

| Dyment & Downing (2018) | Journal | Affective | Asynch/F2F | Less | Non-random | Interactive lesson | Graduate | Education | Australia |

| Francescucci & Rohani (2019) | Journal | Cognitive/Affective | Asynch/F2F | More | Random | Interactive lesson | Undergraduate | Business | USA |

| Gable (2012) | Dissertation | Affective | Asynch | Less | Non-random | Interactive lesson | Graduate | Education | USA |

| Gilkey et al. (2014) | Journal | Affective | F2F | More | Random | Interactive lesson | Professional | Medicine | USA |

| Kizzier (2010) | Journal | Affective | Asynch/F2F | Unknown | Unknown | Interactive lesson | Undergraduate | Business | USA |

| Kyger (2008) | Dissertation | Affective | Asynch | Less | Unknown | Interactive lesson | Undergraduate | Computer science | USA |

| Leiss (2010) | Dissertation | Affective | Asynch | Less | Non-random | Interactive lesson | Undergraduate | Health | USA |

| Moallem (2015) | Journal | Cognitive/Affective | Asynch | Equal | Non-random | Interactive lesson | Graduate | Education | USA |

| Nelson (2010) | Dissertation | Cognitive | F2F | Equal | Random | Lecture | Undergraduate | Medicine | USA |

| Peterson et al. (2018) | Journal | Affective | Asynch | Equal | Random | Unknown | Undergraduate | Education | USA |

| Rowe (2019) | Dissertation | Cognitive | Asynch/F2F | More | Non-random | Lecture | Graduate | Math | USA |

| Scharf (2015) | Dissertation | Cognitive | Asynch/F2F | More | Non-random | Lecture | Graduate | Others | USA |

| Shintani & Aubrey (2016) | Journal | Cognitive | Asynch | Less | Random | Interactive lesson | Undergraduate | Science | Japan |

| Spalla (2012) | Dissertation | Affective | F2F | Equal | Random | Interactive lesson | Undergraduate | Medicine | USA |

| Stover & Miura (2015) | Journal | Affective | Asynch | Less | Non-random | Interactive lesson | Graduate | Education | USA |

| Strang (2012) | Journal | Cognitive | Asynch | Less | Non-random | Interactive lesson | Undergraduate | Business | USA |

Note. Asynch = asynchronous; F2F = face-to-face.

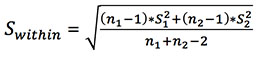

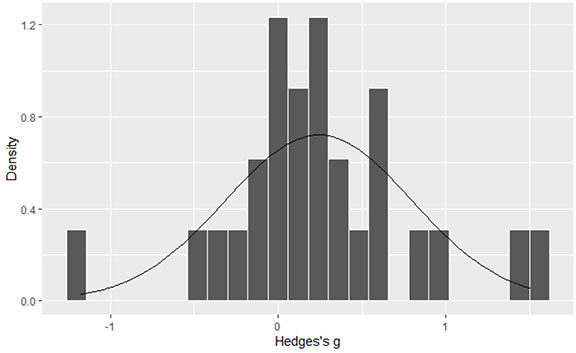

Meta-analyses assume a normal distribution of observed effect sizes for accurate estimation (Borenstein et al., 2009). The distribution of Hedges’ g is plotted in Figure 3, which suggests that effect sizes were approximately normally distributed. Given the within-study dependent effect sizes, we conducted meta-analyses of the four conditions separately (i.e., synchronous vs. asynchronous with cognitive outcomes, synchronous vs. asynchronous with affective outcomes, synchronous vs. face-to-face with cognitive outcomes, synchronous vs. face-to-face with affective outcomes). The overall effect size statistics for each of the four conditions are presented in Table 5. The effect size was statistically significant in only one model (synchronous vs. asynchronous with cognitive outcomes), the only model whose confidence interval did not include zero.

Figure 3

Histogram of Effect Size Estimates

Table 5

Overall Effect Size Estimates for the Four Conditions

| Condition | n | k | g | SE | 95% CI | Z | p | Q-value | df |
|---|---|---|---|---|---|---|---|---|---|
| Synch vs. Asynch - Cognitive | 1260 | 7 | 0.367 | 0.159 | [0.055, 0.679] | 2.308 | .021 | 28.630*** | 6 |
| Synch vs. Asynch - Affective | 862 | 11 | 0.320 | 0.164 | [-0.001, 0.641] | 1.953 | .051 | 50.193*** | 10 |
| Synch vs. F2F - Cognitive | 1833 | 4 | -0.198 | 0.281 | [-0.749, 0.352] | -0.706 | .480 | 29.824*** | 3 |
| Synch vs. F2F - Affective | 1080 | 5 | 0.195 | 0.038 | [-0.195, 0.568] | 0.957 | .338 | 22.520*** | 4 |

Note. n = the number of participants; k = the number of studies; CI = confidence interval; synch = synchronous; asynch = asynchronous; F2F = face-to-face. The g, SE, and 95% CI columns report the effect size and its 95% CI; the Q-value and df columns report heterogeneity. ***p < .001.
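The columns of Table 5 are linked by simple arithmetic, which the following minimal Python sketch illustrates. It assumes a normal approximation with a critical value of 1.96 and a two-sided test; the function name `summary_stats` is hypothetical and is not part of the software used in this study. Applied to the first row of the table (g = 0.367, SE = 0.159), it should reproduce the reported confidence interval, Z, and p to rounding.

```python
import math


def summary_stats(g: float, se: float, z_crit: float = 1.96):
    """Return (ci_low, ci_high, z, p) for an effect size g and its standard error.

    Assumes a normal approximation; z_crit = 1.96 gives a 95% confidence interval.
    """
    ci_low = g - z_crit * se
    ci_high = g + z_crit * se
    z = g / se
    # Two-sided p-value under the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return ci_low, ci_high, z, p


# Synch vs. Asynch - Cognitive row of Table 5.
low, high, z, p = summary_stats(0.367, 0.159)
print(f"95% CI [{low:.3f}, {high:.3f}], Z = {z:.3f}, p = {p:.3f}")
```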

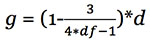

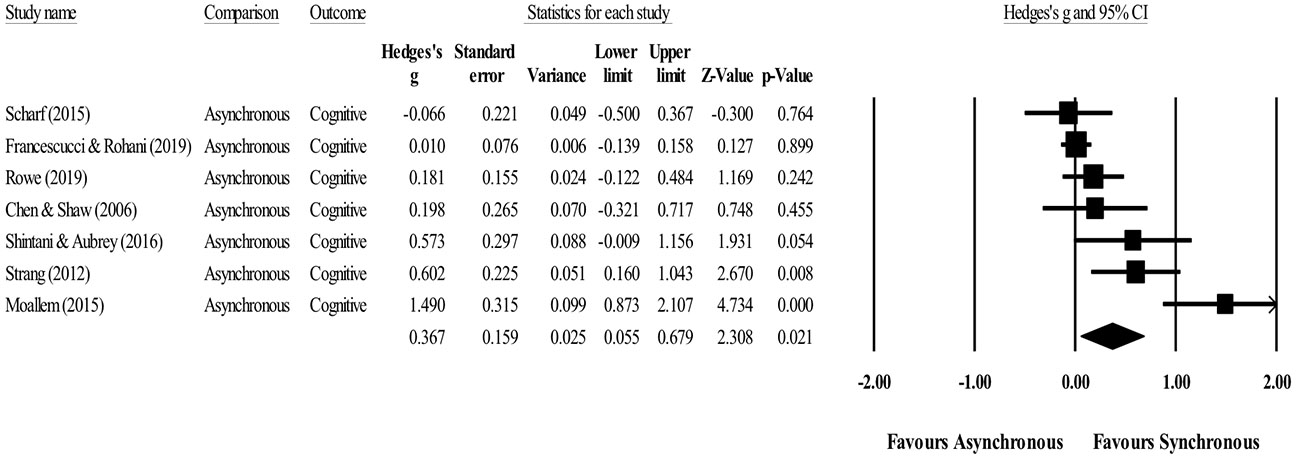

Seven studies comparing SOL with asynchronous online learning in terms of cognitive outcomes are shown in Figure 4. The last line indicates the statistics for the summary effect. The weighted average under a random-effects model revealed a statistically significant effect size (g = 0.37, p = .02), with a 95% confidence interval of 0.055 to 0.679, indicating that SOL significantly and positively impacted students’ cognitive outcomes. The significant Q-value suggests that the true effect sizes were heterogeneous across studies (Q-value = 28.63, p < .001), with 79% of the observed variance reflecting true heterogeneity (I² = 79.04%).
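The summary effect described above is a weighted average under a random-effects model. The sketch below shows one common way to compute such an average, the DerSimonian-Laird estimator. This is an assumption for illustration: the text does not state which between-study variance estimator the software applied, and the per-study effect sizes and variances in the example are hypothetical values, not data from the included studies.

```python
def random_effects_summary(effects, variances):
    """Pool effect sizes with the DerSimonian-Laird random-effects estimator.

    Returns (pooled_g, standard_error, q, tau_squared). Requires at least two studies.
    """
    k = len(effects)
    if k < 2:
        raise ValueError("at least two studies are required")

    # Fixed-effect (inverse-variance) weights and mean, needed to compute Q.
    w = [1.0 / v for v in variances]
    fixed_mean = sum(wi * gi for wi, gi in zip(w, effects)) / sum(w)
    q = sum(wi * (gi - fixed_mean) ** 2 for wi, gi in zip(w, effects))

    # Method-of-moments estimate of the between-study variance, truncated at zero.
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau_squared = max(0.0, (q - (k - 1)) / c)

    # Random-effects weights add tau^2 to each study's sampling variance.
    w_star = [1.0 / (v + tau_squared) for v in variances]
    pooled_g = sum(wi * gi for wi, gi in zip(w_star, effects)) / sum(w_star)
    standard_error = (1.0 / sum(w_star)) ** 0.5
    return pooled_g, standard_error, q, tau_squared


# Hypothetical per-study Hedges's g values and sampling variances.
example_effects = [0.1, 0.9, 0.3, 0.7]
example_variances = [0.02, 0.03, 0.025, 0.04]
pooled, se, q, tau2 = random_effects_summary(example_effects, example_variances)
print(f"g = {pooled:.3f}, SE = {se:.3f}, Q = {q:.3f}, tau^2 = {tau2:.3f}")
```

When Q does not exceed its degrees of freedom, the between-study variance is set to zero and the result coincides with the fixed-effect estimate.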

Figure 4

Forest Plot of Cognitive Outcomes (Synchronous vs. Asynchronous)

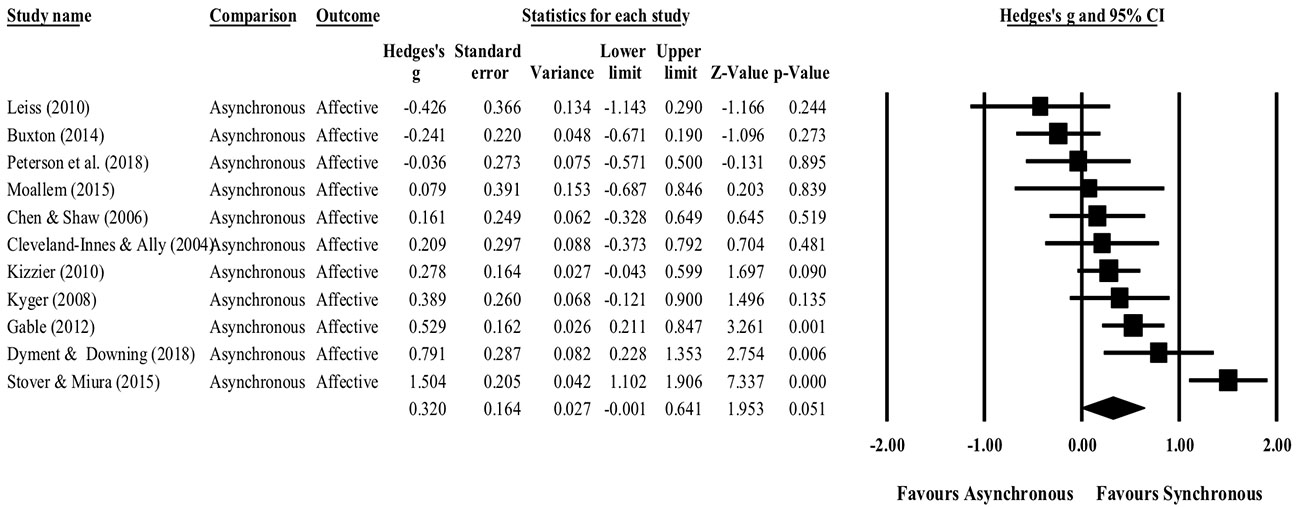

The eleven studies that compared SOL to asynchronous online learning with affective outcomes are shown in Figure 5. The weighted average under a random-effects model revealed that SOL did not have a statistically significant effect on affective outcomes (g = 0.32, p = .051), with a 95% confidence interval of -0.001 to 0.641. The Q-value for the test of homogeneity was statistically significant, indicating that the true effect sizes varied across studies (Q-value = 50.19, p < .001) and that most of the variation in the observed effect sizes was due to between-studies variation (I² = 80.08%).

Figure 5

Forest Plot of Affective Outcomes (Synchronous vs. Asynchronous)

Four studies comparing SOL with face-to-face learning in terms of cognitive outcomes are shown in Figure 6. Results revealed a statistically nonsignificant negative effect size (g = -0.20, 95% CI [-0.749, 0.352], p = .48), indicating that students’ cognitive outcomes under SOL did not differ statistically significantly from those under traditional face-to-face learning. The Q-value was statistically significant, indicating that the true effect sizes varied across studies (Q-value = 29.82, p < .001) and that a substantial share of the observed variation reflected true heterogeneity (I² = 89.94%).

Figure 6

Forest Plot of Cognitive Outcomes (Synchronous vs. Face-to-Face)

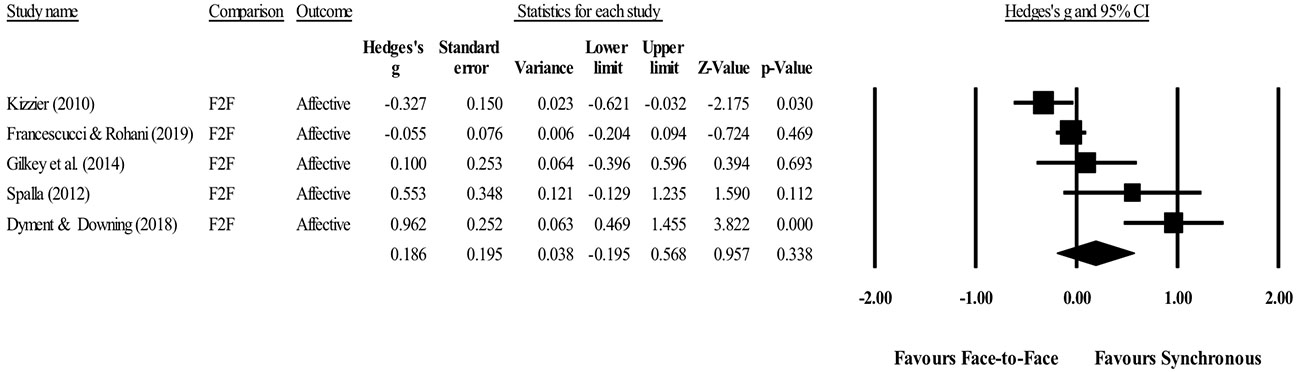

A final subset included five studies comparing SOL with face-to-face learning in affective outcomes and is illustrated in Figure 7. Results revealed a statistically nonsignificant and small effect size (g = 0.20, 95% CI [-0.195, 0.568], p = .34), indicating that SOL did not significantly improve students’ affective outcomes compared with the face-to-face learning mode. Heterogeneity statistics suggested that the true effect sizes varied across studies (Q-value = 22.52, p < .001) and that a large proportion of the observed variance was between-study variation (I² = 82.24%).
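The I² values reported for the four models follow directly from each model's Q-value and degrees of freedom, using the standard definition I² = 100 × (Q − df) / Q, truncated at zero. The short Python sketch below applies that definition to the Q-values and df in Table 5; the function name `i_squared` is hypothetical.

```python
def i_squared(q: float, df: int) -> float:
    """Percentage of observed variance attributable to true heterogeneity."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0


# (Q-value, df) pairs taken from Table 5.
table5_heterogeneity = {
    "Synch vs. Asynch - Cognitive": (28.630, 6),
    "Synch vs. Asynch - Affective": (50.193, 10),
    "Synch vs. F2F - Cognitive": (29.824, 3),
    "Synch vs. F2F - Affective": (22.520, 4),
}

for condition, (q, df) in table5_heterogeneity.items():
    print(f"{condition}: I^2 = {i_squared(q, df):.2f}%")
```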

Figure 7

Forest Plot of Affective Outcomes (Synchronous vs. Face-to-Face)

Since effect sizes were found to be heterogeneous across studies, moderator analyses were conducted to examine what factors may account for the heterogeneity of each condition. Seven moderating variables were chosen, falling into four categories: pedagogical, methodological, demographic, and publication variables. The results from the moderator analyses can be found in the Appendix in Tables A through D.

Type of instructional method and course duration were examined as potential pedagogical variables moderating effect size estimates.

Instructional Method. The results of moderator analyses for the condition of synchronous vs. asynchronous with cognitive and affective outcomes are presented in Table A and Table B, respectively. For cognitive outcomes, the type of instructional method did not moderate effect size estimates. Although studies employing interactive lessons had a significant effect size estimate (g = 0.626, p = .048) and studies employing lectures had a nonsignificant effect size (g = 0.118, p = .302), there was no statistically significant difference between the two categories (Q-value = 0.115, p = .735). For affective outcomes, we found a moderating effect of the type of instructional method on effect size results. Interactive lessons had an effect size estimate statistically significantly larger than lectures (Q-value = 10.756, p = .001) and than studies in which the method was unknown (Q-value = 4.045, p = .044). Results of pedagogical moderator analyses for the condition of synchronous vs. face-to-face with cognitive outcomes and affective outcomes are presented in Table C and Table D, respectively. Because all studies employed lectures (k = 4) for the condition of synchronous vs. face-to-face with cognitive outcomes and all studies employed interactive lessons (k = 5) for the condition of synchronous vs. face-to-face with affective outcomes, the type of instructional method could not be examined as a moderator in these two conditions.

Course Duration. In the condition of synchronous vs. asynchronous with cognitive outcomes, course duration moderated effect size results. Studies with a course duration of less than one semester yielded statistically significantly larger effect sizes than those with a course duration of one semester or longer (Q-value = 5.364, p = .021). In the condition of synchronous vs. asynchronous with affective outcomes, although effect sizes were all nonsignificant across the three categories of course duration, there was a statistically significant difference between durations of less than one semester and those of one semester or longer, with the former yielding a larger effect size than the latter (Q-value = 4.191, p = .041). However, course duration did not moderate effect size in the condition of synchronous vs. face-to-face with cognitive outcomes (Q-value = 0.050, p = .824). In the condition of synchronous vs. face-to-face with affective outcomes, effect sizes varied as a function of course duration, with shorter durations (i.e., less than one semester) having larger effect size estimates than durations of one semester or longer (Q-value = 14.019, p < .001).
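The p-values that accompany the between-group Q-values in these moderator analyses can be checked against the chi-square distribution. The sketch below assumes that each reported comparison contrasts two categories and therefore has 1 degree of freedom; that assumption is ours, since the degrees of freedom are reported in the Appendix tables rather than in the text. With 1 degree of freedom, the chi-square tail probability reduces to a complementary error function, so no statistics library is needed. The function name is hypothetical.

```python
import math


def p_from_q_one_df(q: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom.

    A chi-square(1) variable is a squared standard normal, so
    P(X > q) = P(|Z| > sqrt(q)) = erfc(sqrt(q / 2)).
    """
    if q < 0:
        raise ValueError("Q must be non-negative")
    return math.erfc(math.sqrt(q / 2.0))


# Q-values reported for course duration (assumed 1 df each).
for q in (5.364, 4.191, 0.050, 14.019):
    print(f"Q = {q:.3f}, p = {p_from_q_one_df(q):.3f}")
```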

Student Equivalence. Student equivalence was examined as a potential methodological variable moderating the effect size estimates. This variable indicates whether studies employed random or non-random assignment to distribute students to the treatment and control conditions. There were three studies employing random assignment and four studies employing non-random assignment when comparing the synchronous with the asynchronous condition in cognitive outcomes. Although both categories yielded nonsignificant effect size estimates, non-random assignment had a statistically significantly larger effect size than random assignment (Q-value = 5.837, p = .016). In the condition of synchronous vs. asynchronous with affective outcomes, most studies employed non-random assignment (k = 6). Results revealed that student equivalence had a moderating effect on effect sizes, with studies employing non-random assignment producing effect sizes statistically significantly larger than those employing random assignment (Q-value = 5.291, p = .021). Half of the studies (k = 2) employed random assignment when the control type was face-to-face and the outcomes were cognitive. In this condition, student equivalence did not moderate the effect size estimates (Q-value = 0.136, p = .713). In the condition of synchronous vs. face-to-face with affective outcomes, there were three studies employing random assignment and only one study employing non-random assignment. An additional study did not report information on student assignment. Results revealed that student equivalence moderated the effect size estimates, with the study employing non-random assignment having a statistically significantly larger effect size than studies in the other two categories: studies employing random assignment (Q-value = 14.019, p < .001) and the study without assignment information (Q-value = 19.331, p < .001).

Learner level, discipline, and country were examined as potential demographic variables to moderate effect sizes. We also hypothesized that effect sizes would vary as a function of publication source since studies with significant results or larger effect sizes tend to be published (Rothstein et al., 2005).

Learner Level. Results revealed that learner level did not moderate effect size in the two conditions with cognitive outcomes. However, effect sizes varied as a function of learner levels when outcomes were affective. Although none of the effect sizes was significant, the effect size for graduate/professional was statistically significantly larger than the undergraduate comparison for both conditions (Q-value = 7.732, p = .005 for asynchronous, and Q-value = 10.570, p = .001 for face-to-face).

Discipline. On the condition of synchronous versus asynchronous with cognitive outcomes, results revealed that effect sizes varied as a function of discipline, with the discipline of education having a statistically significantly larger effect size estimate than other disciplines (Q-value = 18.738, p < .001). There were also statistically significant differences in effect size estimates across disciplines on the condition of synchronous vs. asynchronous with affective outcomes, with the discipline of education again having a statistically significantly larger effect size estimate than other disciplines (Q-value = 16.773, p < .001). Likewise, on the condition of synchronous vs. face-to-face with affective outcomes, the discipline of education had a statistically significantly larger effect size estimate than other disciplines (Q-value = 15.904, p < .001). However, discipline did not moderate the effect size results on the condition of synchronous vs. face-to-face with cognitive outcomes.

Country. There were more studies conducted in the United States than in other countries. We failed to consistently find a moderating effect of country on effect size estimates across the four conditions, indicating that effect sizes of studies conducted in the United States were not statistically significantly different from those conducted in other countries.

Publication Source. We found that publication source was not a significant moderator of effect sizes either, suggesting that there was no statistical difference between effect size estimates obtained from journal articles and those obtained from dissertations.

Publication bias occurs when the studies included in a systematic review are not representative of all studies in a population (Rothstein et al., 2005). We took the following steps to address publication bias:

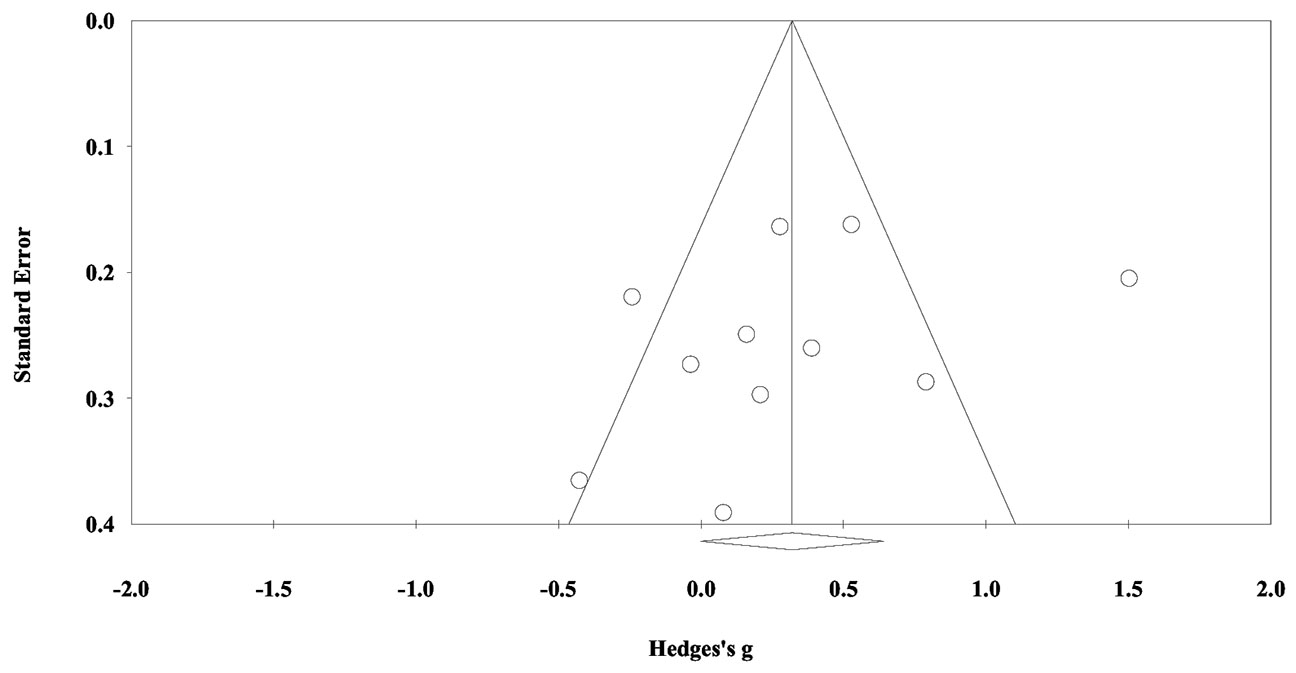

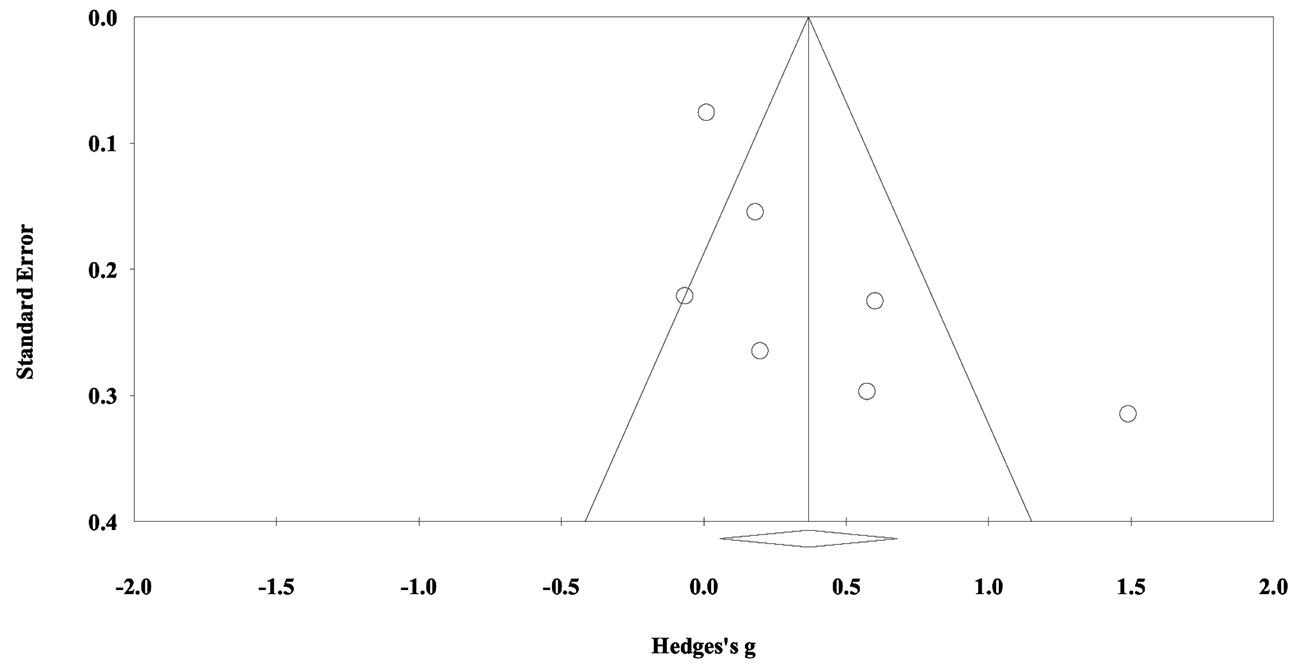

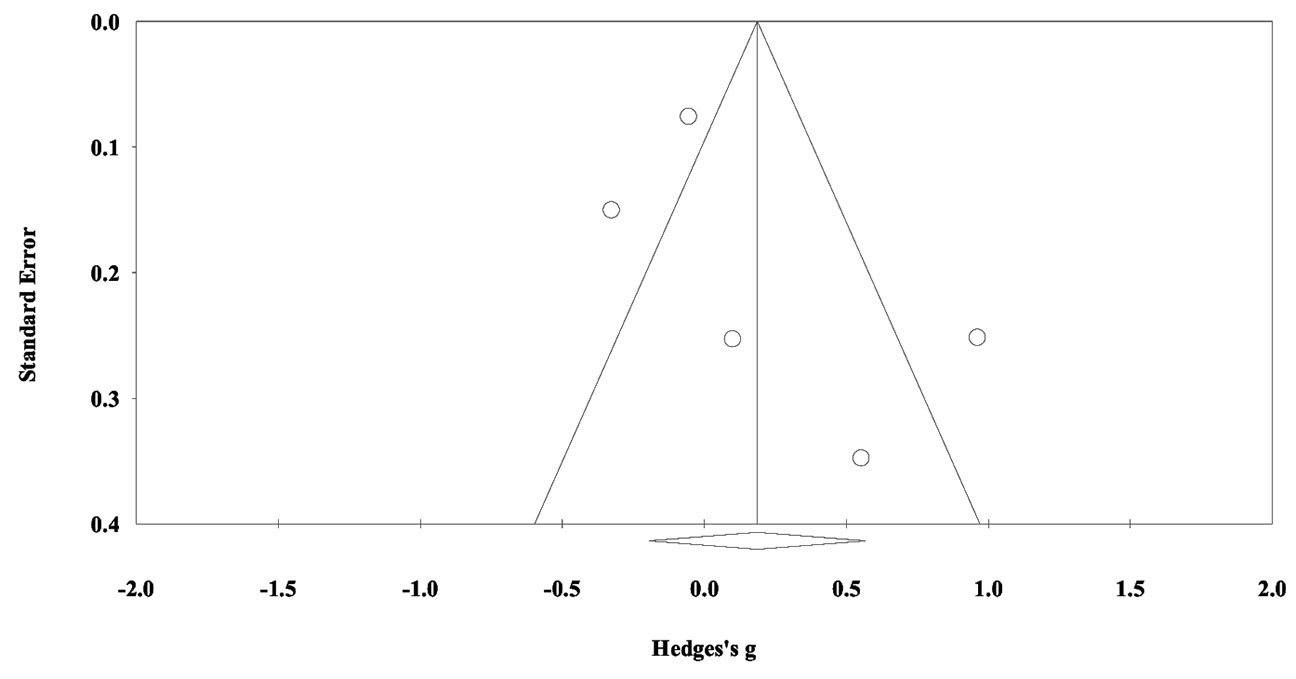

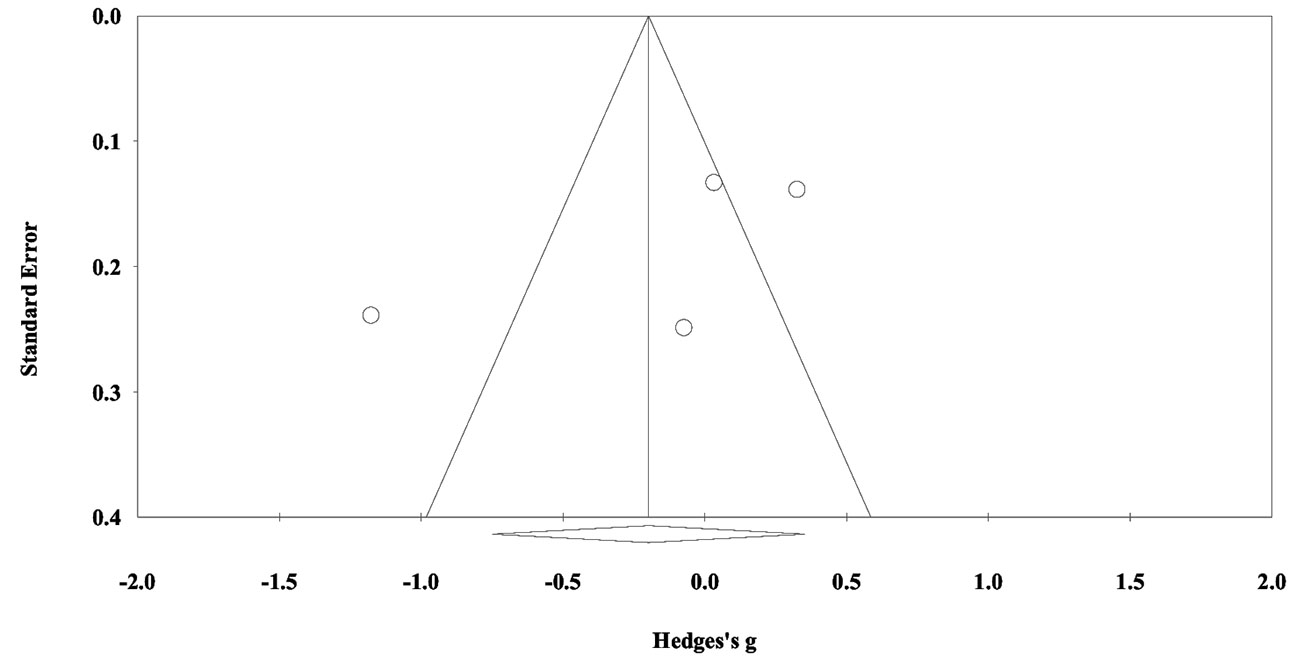

The funnel plots are shown in Figures 8 through 11.

The funnel plots show the effect size (i.e., Hedges’s g) on the x-axis and standard error on the y-axis to assess the likelihood of the presence of publication bias. The lack of a symmetrical distribution of effect sizes around the mean suggests the presence of publication bias in all four models, with a few notable outliers (Borenstein et al., 2009). The classic fail-safe N and Orwin’s fail-safe N are shown in Table 6 for each condition. Using a classic fail-safe N larger than 5k + 10 (Rosenthal, 1995) as the criterion, none of the four models met the criterion, so we expected publication bias to be a problem in all four. Taken together, these criteria show evidence that our study was subject to the problem of publication bias, and thus, additional studies could substantially change the results of our models.

Table 6

Classic Fail-Safe N and Orwin’s Fail-Safe N for Each Model

| Model condition | Classic fail-safe N | Orwin’s fail-safe N |
|---|---|---|
| Synch vs. Asynch - Cognitive | 25 | 99 |
| Synch vs. Asynch - Affective | 54 | 424 |
| Synch vs. F2F - Cognitive | 0 | 1 |
| Synch vs. F2F - Affective | 0 | 4 |

Note. synch = synchronous; asynch = asynchronous; F2F = face-to-face.
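The 5k + 10 criterion can be applied to Table 6 mechanically. The following Python sketch pairs each model's number of studies (k, from Table 5) with its classic fail-safe N (from Table 6) and checks whether the fail-safe N exceeds the threshold. The function and variable names are hypothetical and serve only as an illustration of the check.

```python
def rosenthal_threshold(k: int) -> int:
    """Tolerance level 5k + 10 that the classic fail-safe N should exceed."""
    return 5 * k + 10


# (k from Table 5, classic fail-safe N from Table 6) for each model.
models = {
    "Synch vs. Asynch - Cognitive": (7, 25),
    "Synch vs. Asynch - Affective": (11, 54),
    "Synch vs. F2F - Cognitive": (4, 0),
    "Synch vs. F2F - Affective": (5, 0),
}

for name, (k, fail_safe_n) in models.items():
    threshold = rosenthal_threshold(k)
    meets_criterion = fail_safe_n > threshold
    print(f"{name}: fail-safe N = {fail_safe_n}, threshold = {threshold}, "
          f"meets criterion = {meets_criterion}")
```

None of the four models reaches its threshold (45, 65, 30, and 35, respectively), which is consistent with the conclusion that publication bias may affect all four.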

Figure 8

Funnel Plot for the Random Effect Model (Asynchronous vs. Synchronous) for the Affective Domain

Note. k = 11; The diamond represents the average effect size (Hedges’s g).

Figure 9

Funnel Plot for the Random Effect Model (Asynchronous vs. Synchronous) for the Cognitive Domain

Note. k = 7; The diamond represents the average effect size (Hedges’s g).

Figure 10

Funnel Plot for the Random Effect Model (Face-to-face vs. Synchronous) for the Affective Domain

Note. k = 5; The diamond represents the average effect size (Hedges’s g).

Figure 11

Funnel Plot for the Random Effect Model (Face-to-face vs. Synchronous) for the Cognitive Domain

Note. k = 4; The diamond represents the average effect size (Hedges’s g).

Prior to discussing our results, we present our delimitations and limitations so readers can interpret the findings in light of these considerations. While we planned to examine three learning outcomes, there were not sufficient studies focusing on behavioral outcomes; hence, that outcome was not examined. Also, the number of studies in each model was small because we ran four separate model comparisons. We did not combine the face-to-face and asynchronous control groups because each has different characteristics and, within a study, the two comparisons share the same samples, which would violate the independence of observations. While some meta-analyses report combined effects for affective and cognitive outcomes, we believe these two constructs are too different to report in a single model. When we framed the study, we coded for several variables; however, we found that authors did not report several of these details in their methods. For example, while we wanted to examine types of interaction, this was not reported in most studies. The findings of the moderator analyses should be interpreted with caution because the number of studies, especially those comparing synchronous online to face-to-face learning, was very small. Also notable, when a study reported multiple effect sizes, we averaged them into a single effect size, which ignores any subject variability. We opted to do this because correlations among outcomes are not usually reported, and we assumed a correlation value of 1.0. Finally, the common problem of publication bias was detected in all four models; thus, additional studies could produce substantially different results.
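To make the consequence of the assumed correlation concrete, the sketch below averages several dependent effect sizes from one study and computes the variance of that average using the general formula for the mean of correlated estimates, Var = (1/m²) Σᵢ Σⱼ rᵢⱼ √(VᵢVⱼ). It is an illustration under stated assumptions rather than the exact procedure used here: a single common correlation r is applied to every pair of outcomes, and the input values are hypothetical. With r = 1.0, the variance of the average is as large as it can be, so the assumption is conservative.

```python
import math


def average_dependent_effects(effects, variances, r=1.0):
    """Average dependent effect sizes and return (mean_effect, variance_of_mean).

    r is the correlation assumed between every pair of outcomes.
    """
    m = len(effects)
    mean_effect = sum(effects) / m
    total = 0.0
    for i in range(m):
        for j in range(m):
            rho = 1.0 if i == j else r  # each outcome correlates perfectly with itself
            total += rho * math.sqrt(variances[i] * variances[j])
    return mean_effect, total / m ** 2


# Hypothetical study reporting two outcomes of the same type.
g_values = [0.40, 0.60]
v_values = [0.04, 0.09]
print(average_dependent_effects(g_values, v_values, r=1.0))
print(average_dependent_effects(g_values, v_values, r=0.0))
```

Comparing the two calls shows that treating the outcomes as independent (r = 0) yields a smaller variance, and therefore more weight in the meta-analysis, than assuming r = 1.0.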

Among the four models examined, the meta-analysis found a significant difference in only one: SOL had a positive effect on students’ cognitive outcomes relative to asynchronous online learning. The effect size was small (g = 0.37) under a random-effects model. This summary effect supports primary research on SOL that found synchronous interactions to focus on discussing the learning tasks (Chou, 2002) and to reach the highest phase of cognitive presence more frequently than asynchronous interactions (Molnar & Kearney, 2017). However, given the small number of studies and the presence of publication bias, this is a tentative finding. The other three models did not show a significant difference between the groups for either cognitive or affective outcomes, with the confidence intervals including zero.

Two types of instructional methods were examined as moderator variables. When SOL used interactive lessons instead of lecturing, it had a significantly positive medium effect on students’ affective outcomes when compared to asynchronous online learning. This suggests that students might not be as engaged when a synchronous online lesson is not interactive and the instructor chooses to lecture instead. Students scheduling time to participate in synchronous sessions would prefer to have an interactive session (Martin et al., 2012) rather than listen to a lecture that could have been recorded and delivered asynchronously.

Course duration was coded as either less than a typical 15-week semester or one semester or longer. When the course was shorter than a semester, effect sizes favoring SOL over asynchronous online learning were significantly larger for both cognitive and affective outcomes. In addition, effect sizes favoring SOL over face-to-face courses were significantly larger for affective outcomes. This suggests that when a course runs for a semester or longer, SOL is not as effective for either cognitive or affective outcomes.

When non-random assignment was used instead of random assignment, effect sizes favoring synchronous over asynchronous online learning were significantly larger for both cognitive and affective outcomes. The same was true for SOL compared with face-to-face courses for affective outcomes. This could have resulted from learners self-selecting into the delivery method they preferred rather than being randomly assigned.

Learner level moderated the effect of SOL on affective outcomes when compared with asynchronous or face-to-face learning. The effect is significantly larger for graduate students and professionals than for undergraduates. This signifies that for graduate and professional students’ affective outcomes, SOL may be a more effective delivery method.

Among education students, in contrast to other disciplines, there was a significantly positive effect size when comparing synchronous and asynchronous for both affective and cognitive outcomes, and when comparing synchronous to face-to-face for affective outcomes.

There were no differences between the groups based on country or publication source. As a reminder, most studies were conducted in the United States, and most were published as journal articles.

Overall, the findings of this study differ from those of Bernard et al. (2004), who found that synchronous distance education had a negative effect, and Means et al. (2013), who did not find synchronicity to be a significant moderator. In contrast to the early days of online learning, when synchronous distance education included other forms of synchronicity, this study found one model in which synchronous online learning had a small significant effect compared to the asynchronous online condition. This is similar to Williams (2006), who found a positive effect size when comparing synchronous distance education with asynchronous online learning.

SOL had a significant small effect over asynchronous online learning for cognitive outcomes. This suggests that including synchronous sessions in online courses is worthwhile. In addition, interactive lessons had a significantly larger effect than lectures. This finding has implications for centers for teaching and learning, and for faculty developers who provide training on the use of synchronous tools and offer workshops. Workshops focusing on synchronous online technology should emphasize designing interactive lessons so that students get the greatest benefit. For campuses without synchronous online tools, this study has implications for administrators, who may consider purchasing a synchronous online tool and including it in the learning management system. Also, for instructors who are teaching online or considering online teaching, this suggests that including synchronous online meetings in their courses would be helpful.

We were able to identify and use only 19 studies in this meta-analysis. There is a need for more high-quality studies on this topic. Because the number of studies was small, the moderator analyses had small cell sizes. There is also a need for more studies to focus on behavioral outcomes in addition to cognitive and affective outcomes. Another challenge we encountered during coding was insufficient information reported in the methodology to describe synchronous online sessions. It is important for authors to give as much detail as possible about both the pedagogy and methodology. For example, we were unable to identify the various synchronous functionalities used in the intervention or whether all types of interaction (learner-learner, learner-instructor, and learner-content) occurred. We acknowledge this might be due to journal word count limits, but the important consideration is that pedagogical and methodological dimensions are equally relevant to report in a manuscript.

*indicates articles that were included in the meta-analysis.

Ahn, S., Ames, A. J., & Myers, N. D. (2012). A review of meta-analyses in education: Methodological strengths and weaknesses. Review of Educational Research, 82(4), 436-476. https://doi.org/10.3102/0034654312458162

Allen, M., Mabry, E., Mattrey, M., Bourhis, J., Titsworth, S., & Burrell, N. (2004). Evaluating the effectiveness of distance learning: A comparison using meta‐analysis. Journal of Communication, 54(3), 402-420. https://doi.org/10.1111/j.1460-2466.2004.tb02636.x

Banna, J., Grace Lin, M. F., Stewart, M., & Fialkowski, M. K. (2015). Interaction matters: Strategies to promote engaged learning in an online introductory nutrition course. MERLOT Journal of Online Learning and Teaching, 11(2), 249-261. https://jolt.merlot.org/Vol11no2/Banna_0615.pdf

Bernard, R. M., Abrami, P. C., Borokhovski, E., Wade, C. A., Tamim, R. M., Surkes, M. A., & Bethel, E. C. (2009). A meta-analysis of three types of interaction treatments in distance education. Review of Educational Research, 79(3), 1243-1289. https://doi.org/10.3102/0034654309333844

Bernard, R. M., Abrami, P. C., Lou, Y., Borokhovski, E., Wade, A., Wozney, L., Wallet, P. A., Fiset, M., & Huang, B. (2004). How does distance education compare with classroom instruction? A meta-analysis of the empirical literature. Review of Educational Research, 74(3), 379-439. https://doi.org/10.3102/00346543074003379

Borenstein, M., Hedges, L. V., Higgins, J. P., & Rothstein, H. R. (2009). Introduction to meta-analysis. John Wiley & Sons.

Borenstein, M., Hedges, L., Higgins, J., & Rothstein, H. (2014). Comprehensive meta-analysis (Version 3) [Computer software]. Biostat. https://www.meta-analysis.com/

Boston, W., Diaz, S. R., Gibson, A. M., Ice, P., Richardson, J., & Swan, K. (2010). An exploration of the relationship between indicators of the Community of Inquiry framework and retention in online programs. Journal of Asynchronous Learning Networks, 14(1), 3-19. https://doi.org/10.24059/olj.v13i3.1657

*Buxton, E. C. (2014). Pharmacists’ perception of synchronous versus asynchronous distance learning for continuing education programs. American Journal of Pharmaceutical Education, 78(1). https://doi.org/10.5688/ajpe7818

*Chen, C. C., & Shaw, R. S. (2006). Online synchronous vs. asynchronous software training through the behavioral modeling approach: A longitudinal field experiment. International Journal of Distance Education Technologies (IJDET), 4(4), 88-102. https://doi.org/10.4018/978-1-59904-964-9.ch004

Chou, C. C. (2002). A comparative content analysis of student interaction in synchronous and asynchronous learning networks. In R. H. Sprague, Jr. (Ed.), Proceedings of the 35th Annual Hawaii International Conference on System Sciences (pp. 1795-1803). IEEE. https://doi.org/10.1109/HICSS.2002.994093

*Cleveland-Innes, M., & Ally, M. (2004). Affective learning outcomes in workplace training: A test of synchronous vs. asynchronous online learning environments. Canadian Journal of University Continuing Education, 30(1), 15-35. https://doi.org/10.21225/d5259v

Cook, D. A., Levinson, A. J., Garside, S., Dupras, D. M., Erwin, P. J., & Montori, V. M. (2008). Internet-based learning in the health professions: A meta-analysis. JAMA, 300(10), 1181-1196. https://doi.org/10.1001/jama.300.10.1181

*Dyment, J. E., & Downing, J. (2018). Online initial teacher education students’ perceptions of using web conferences to support professional conversations. Australian Journal of Teacher Education (Online), 43(4), 68. https://doi.org/10.14221/ajte.2018v43n4.5

Field, A. P. (2001). Meta-analysis of correlation coefficients: A Monte Carlo comparison of fixed- and random-effects methods. Psychological Methods, 6, 161-180. https://doi.org/10.1037/1082-989x.6.2.161

*Francescucci, A., & Rohani, L. (2019). Exclusively synchronous online (VIRI) learning: The impact on student performance and engagement outcomes. Journal of Marketing Education, 41(1), 60-69. https://doi.org/10.1177/0273475318818864

*Gable, K. (2012). Creating a village: The impact of the opportunity to participate in synchronous web conferencing on adult learner sense of community [Unpublished doctoral dissertation]. Capella University.

Garratt, M. (2014). Face-to-face versus remote synchronous instruction for the teaching of single-interrupted suturing to a group of undergraduate paramedic students: A randomised controlled trial. Innovative Practice in Higher Education, 2(1). http://journals.staffs.ac.uk/index.php/ipihe/article/view/60/121

Giesbers, B., Rienties, B., Tempelaar, D., & Gijselaers, W. (2014). A dynamic analysis of the interplay between asynchronous and synchronous communication in online learning: The impact of motivation. Journal of Computer Assisted Learning, 30(1), 30-50. https://doi.org/10.1111/jcal.12020

*Gilkey, M. B., Moss, J. L., Roberts, A. J., Dayton, A. M., Grimshaw, A. H., & Brewer, N. T. (2014). Comparing in-person and webinar delivery of an immunization quality improvement program: A process evaluation of the adolescent AFIX trial. Implementation Science, 9(1), Article 21. https://doi.org/10.1186/1748-5908-9-21

Haney, M., Silvestri, S., Van Dillen, C., Ralls, G., Cohen, E., & Papa, L. (2012). A comparison of tele-education versus conventional lectures in wound care knowledge and skill acquisition. Journal of Telemedicine and Telecare, 18(2), 79-81. https://doi.org/10.1258/jtt.2011.110811

Hedges, L. V., Tipton, E., & Johnson, M. C. (2010). Robust variance estimation in meta-regression with dependent effect size estimates. Research Synthesis Methods, 1(1), 39-65. https://doi.org/10.1002/jrsm.5

Hrastinski, S. (2008). Asynchronous and synchronous e-learning. EDUCAUSE Quarterly, 31(4), 51-55. https://er.educause.edu/articles/2008/11/asynchronous-and-synchronous-elearning

Jahng, N., Krug, D., & Zhang, Z. (2007). Student achievement in online distance education compared to face-to-face education. European Journal of Open, Distance and E-Learning, 10(1). https://core.ac.uk/download/pdf/24065525.pdf

*Kizzier, D. L. M. (2010). Empirical comparison of the effectiveness of six meeting venues on bottom line and organizational constructs. The Journal of Applied Business and Economics, 10(4), 76-103. http://www.m.www.na-businesspress.com/JABE/Jabe104/KizzierWeb.pdf

Kunin, M., Julliard, K. N., & Rodriguez, T. E. (2014). Comparing face-to-face, synchronous, and asynchronous learning: Postgraduate dental resident preferences. Journal of Dental Education, 78(6), 856-866. https://doi.org/10.1002/j.0022-0337.2014.78.6.tb05739.x

*Kyger, J. W. (2008). A study of synchronous and asynchronous learning environments in an online course and their effect on retention rates [Unpublished doctoral dissertation]. Texas A&M University-Kingsville.

*Leiss, D. P. (2010). Does synchronous communication technology influence classroom community? A study on the use of a live Web conferencing system within an online classroom [Unpublished doctoral dissertation]. Capella University.

Mabrito, M. (2006). A study of synchronous versus asynchronous collaboration in an online business writing class. American Journal of Distance Education, 20(2), 93-107. https://doi.org/10.1207/s15389286ajde2002_4

Martin, F., Ahlgrim-Delzell, L., & Budhrani, K. (2017). Systematic review of two decades (1995 to 2014) of research on synchronous online learning. American Journal of Distance Education, 31(1), 3-19. https://doi.org/10.1080/08923647.2017.1264807

Martin, F., & Parker, M. A. (2014). Use of synchronous virtual classrooms: Why, who and how? MERLOT Journal of Online Learning and Teaching, 10(2), 192-210. https://jolt.merlot.org/vol10no2/martin_0614.pdf

Martin, F., Parker, M. A., & Deale, D. F. (2012). Examining interactivity in synchronous virtual classrooms. The International Review of Research in Open and Distributed Learning, 13(3), 228-261. https://doi.org/10.19173/irrodl.v13i3.1174

Martin, F., Sun, T., & Westine, C. D. (2020). A systematic review of research on online teaching and learning from 2009 to 2018. Computers & Education, 159, 104009. https://doi.org/10.1016/j.compedu.2020.104009

Means, B., Toyama, Y., Murphy, R., & Bakia, M. (2013). The effectiveness of online and blended learning: A meta-analysis of the empirical literature. Teachers College Record, 115(3), 1-47. https://psycnet.apa.org/record/2013-11078-005

*Moallem, M. (2015). The impact of synchronous and asynchronous communication tools on learner self-regulation, social presence, immediacy, intimacy and satisfaction in collaborative online learning. The Online Journal of Distance Education and e-Learning, 3(3), 55-77. https://www.tojdel.net/journals/tojdel/articles/v03i03/v03i03-08.pdf

Moeyaert, M., Ugille, M., Beretvas, S. N., Ferron, J., Bunuan, R., & den Noortgate, W. V. (2017). Methods for dealing with multiple outcomes in meta-analysis: A comparison between averaging effect sizes, robust variance estimation and multilevel meta-analysis. International Journal of Social Research Methodology, 20(6), 559-572. https://doi.org/10.1080/13645579.2016.1252189

Moher, D., Liberati, A., Tetzlaff, J., & Altman, D. G. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Annals of Internal Medicine, 151(4), 264-269. https://doi.org/10.7326/0003-4819-151-4-200908180-00135

Molnar, A. L., & Kearney, R. C. (2017). A comparison of cognitive presence in asynchronous and synchronous discussions in an online dental hygiene course. Journal of Dental Hygiene, 91(3), 14-21. https://jdh.adha.org/content/91/3/14.short

Moore, M. J. (1993). Three types of interaction. In K. Harry, M. John, & D. Keegan (Eds.), Distance education: New perspectives (pp. 19-24). Routledge. https://doi.org/10.4324/9781315003429

*Nelson, L. (2010). Learning outcomes of webinar versus classroom instruction among baccalaureate nursing students: A randomized controlled trial [Unpublished doctoral dissertation]. Texas Woman’s University.

Oliver, M. (2014). Fostering relevant research on educational communications and technology. In J. M. Spector, M. D. Merrill, J. Elen, & M. J. Bishop (Eds.), Handbook of research on educational communications and technology (pp. 909-918). Springer.

Orwin, R. G. (1983). A fail-safe N for effect size in meta-analysis. Journal of Educational Statistics, 8(2), 157-159. https://doi.org/10.2307/1164923

*Peterson, A. T., Beymer, P. N., & Putnam, R. T. (2018). Synchronous and asynchronous discussions: Effects on cooperation, belonging, and affect. Online Learning, 22(4), 7-25. https://doi.org/10.24059/olj.v22i4.1517

Revere, L., & Kovach, J. V. (2011). Online technologies for engaged learning: A meaningful synthesis for educators. Quarterly Review of Distance Education, 12(2), 113-124.

Rockinson-Szapkiw, A. J., & Walker, V. L. (2009). Web 2.0 technologies: Facilitating interaction in an online human services counseling skills course. Journal of Technology in Human Services, 27(3), 175-193. https://doi.org/10.1080/15228830903093031

Rosenthal, R. (1979). The “file drawer problem” and tolerance for null results. Psychological Bulletin, 86, 638-641. https://doi.org/10.1037/0033-2909.86.3.638

Rosenthal, R. (1995). Writing meta-analytic reviews. Psychological Bulletin, 118(2), 183-192. https://doi.org/10.1037/0033-2909.118.2.183

Rothstein, H. R., Sutton, A. J., & Borenstein, M. (2005). Publication bias in meta-analysis: Prevention, assessment and adjustments. John Wiley & Sons, Ltd. https://doi.org/10.1002/0470870168

*Rowe, J. A. (2019). Synchronous and asynchronous learning: How online supplemental instruction influences academic performance and predicts persistence [Unpublished doctoral dissertation]. Capella University.

*Scharf, M. T. (2015). Comparing student cumulative course grades, attrition, and satisfaction in traditional and virtual classroom environments [Unpublished doctoral dissertation]. Northcentral University.

Seaman, J. E., Allen, I. E., & Seaman, J. (2018). Grade increase: Tracking distance education in the United States. Babson Survey Research Group. http://onlinelearningsurvey.com/reports/gradeincrease.pdf

Shachar, M., & Neumann, Y. (2010). Twenty years of research on the academic performance differences between traditional and distance learning: Summative meta-analysis and trend examination. MERLOT Journal of Online Learning and Teaching, 6(2), 318-334. https://jolt.merlot.org/vol6no2/shachar_0610.pdf

*Shintani, N., & Aubrey, S. (2016). The effectiveness of synchronous and asynchronous written corrective feedback on grammatical accuracy in a computer‐mediated environment. The Modern Language Journal, 100(1), 296-319. https://doi.org/10.1111/modl.12317

Siler, S. A., & VanLehn, K. (2009). Learning, interactional, and motivational outcomes in one-to-one synchronous computer-mediated versus face-to-face tutoring. International Journal of Artificial Intelligence in Education, 19, 73-102. http://www.public.asu.edu/~kvanlehn/Stringent/PDF/Siler_VanLehn_2009_ijaied.pdf

*Spalla, T. L. (2012). Building the ARC in nursing education: Cross-cultural experiential learning enabled by the technology of video or web conferencing [Unpublished doctoral dissertation]. The Ohio State University.

*Stover, S., & Miura, Y. (2015). The effects of Web conferencing on the community of inquiry in online classes. Journal on Excellence in College Teaching, 26(3), 121-143.

*Strang, K. D. (2012). Skype synchronous interaction effectiveness in a quantitative management science course. Decision Sciences Journal of Innovative Education, 10(1), 3-23. https://doi.org/10.1111/j.1540-4609.2011.00333.x

Tanner-Smith, E., & Tipton, E. (2014). Robust variance estimation with dependent effect sizes: Practical considerations and a software tutorial in Stata and SPSS. Research Synthesis Methods, 5(1), 13-30. https://doi.org/10.1002/jrsm.1091

Todd, E. M., Watts, L. L., Mulhearn, T. J., Torrence, B. S., Turner, M. R., Connelly, S., & Mumford, M. D. (2017). A meta-analytic comparison of face-to-face and online delivery in ethics instruction: The case for a hybrid approach. Science and Engineering Ethics, 23(6), 1719-1754. https://doi.org/10.1007/s11948-017-9869-3

Williams, S. L. (2006). The effectiveness of distance education in allied health science programs: A meta-analysis of outcomes. The American Journal of Distance Education, 20(3), 127-141. https://doi.org/10.1207/s15389286ajde2003_2

Wilson, L. C. (2014, September 30). Introduction to meta-analysis: A guide for the novice. Observer. https://www.psychologicalscience.org/observer/introduction-to-meta-analysis-a-guide-for-the-novice

Zhao, Y., Lei, J., Yan, B., Lai, C., & Tan, H. S. (2005). What makes the difference? A practical analysis of research on the effectiveness of distance education. Teachers College Record, 107(8), 1836-1884. https://doi.org/10.1111/j.1467-9620.2005.00544.x

Zimmerman, T. D. (2012). Exploring learner to content interaction as a success factor in online courses. The International Review of Research in Open and Distance Learning, 13(4), 152-165. https://doi.org/10.19173/irrodl.v13i4.1302

Table A

Moderator Analyses (Synchronous vs. Asynchronous Cognitive Outcomes)

| Effect | n | k | g | SE | 95% CI | Z | p | Q-value | df | p (heterogeneity) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Overall | 1260 | 7 | 0.367 | 0.159 | [0.055, 0.679] | 2.308 | .021 | 28.630 | 6 | <.001 |
| Course duration | | | | | | | | | | |
| Less than one semester | 193 | 3 | 0.468 | 0.149 | [0.176, 0.759] | 3.147 | .002 | | | |
| One semester and longer | 1067 | 4 | 0.323 | 0.225 | [-0.118, 0.765] | 1.435 | .151 | | | |
| Total between | | | | | | | | 5.364 | 1 | .021 |
| Instructional method | | | | | | | | | | |
| Interactive lesson | 876 | 4 | 0.626 | 0.317 | [0.006, 1.247] | 1.978 | .048 | | | |
| Lecture | 384 | 3 | 0.118 | 0.114 | [-0.106, 0.342] | 1.032 | .302 | | | |
| Total between | | | | | | | | 0.115 | 1 | .735 |
| Student equivalent | | | | | | | | | | |
| Random assignment | 810 | 3 | 0.163 | 0.156 | [-0.143, 0.469] | 1.043 | .297 | | | |
| Non-random assignment | 450 | 4 | 0.509 | 0.278 | [-0.035, 1.053] | 1.835 | .067 | | | |
| Total between | | | | | | | | 5.837 | 1 | .016 |
| Learner level | | | | | | | | | | |
| Undergraduate | 891 | 4 | 0.295 | 0.175 | [-0.049, 0.638] | 1.682 | .093 | | | |
| Graduate/Professional | 369 | 3 | 0.494 | 0.381 | [-0.254, 1.241] | 1.294 | .196 | | | |
| Total between | | | | | | | | 1.950 | 1 | .163 |
| Discipline | | | | | | | | | | |
| Education | 51 | 1 | 1.490 | 0.315 | [0.873, 2.107] | 4.734 | <.001 | | | |
| Other disciplines | 1209 | 6 | 0.189 | 0.105 | [-0.017, 0.396] | 1.799 | .072 | | | |
| Total between | | | | | | | | 18.738 | 1 | <.001 |
| Country | | | | | | | | | | |
| USA | 1148 | 5 | 0.375 | 0.200 | [-0.016, 0.766] | 1.878 | .060 | | | |
| Other countries | 112 | 2 | 0.364 | 0.198 | [-0.023, 0.752] | 1.843 | .065 | | | |
| Total between | | | | | | | | 1.273 | 1 | .259 |
| Publication source | | | | | | | | | | |
| Journal article | 942 | 5 | 0.533 | 0.249 | [0.045, 1.021] | 2.136 | .032 | | | |
| Dissertation | 318 | 2 | 0.100 | 0.127 | [-0.149, 0.348] | 0.786 | .432 | | | |
| Total between | | | | | | | | 0.211 | 1 | .646 |

Table B

Moderator Analyses (Synchronous vs. Asynchronous Affective Outcomes)

| Effect | n | k | g | SE | 95% CI | Z | p | Q-value | df | p (heterogeneity) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Overall | 862 | 11 | 0.320 | 0.164 | [-0.001, 0.641] | 1.953 | .051 | 50.193 | 10 | <.001 |
| Course duration | | | | | | | | | | |
| Less than one semester | 587 | 7 | 0.408 | 0.245 | [-0.071, 0.887] | 1.668 | .095 | | | |
| One semester and longer | 77 | 2 | 0.002 | 0.224 | [-0.437, 0.441] | 0.009 | .993 | | | |
| Unknown | 198 | 2 | 0.262 | 0.143 | [-0.019, 0.543] | 1.826 | .068 | | | |
| Total between | | | | | | | | 5.224 | 2 | .073 |
| Instructional method | | | | | | | | | | |
| Interactive lesson | 662 | 8 | 0.460 | 0.193 | [0.082, 0.839] | 2.382 | .017 | | | |
| Lecture | 148 | 2 | -0.057 | 0.200 | [-0.449, 0.335] | -0.287 | .774 | | | |
| Unknown | 52 | 1 | -0.036 | 0.273 | [-0.571, 0.500] | -0.131 | .895 | | | |
| Total between | | | | | | | | 13.348 | 2 | .001 |
| Student equivalent | | | | | | | | | | |
| Random assignment | 162 | 3 | 0.110 | 0.157 | [-0.197, 0.416] | 0.700 | .484 | | | |
| Non-random assignment | 487 | 6 | 0.532 | 0.096 | [0.344, 0.720] | 5.547 | <.001 | | | |
| Unknown | 213 | 2 | 0.310 | 0.139 | [0.038, 0.581] | 2.233 | .131 | | | |
| Total between | | | | | | | | 5.733 | 2 | .057 |
| Learner level | | | | | | | | | | |
| Undergraduate | 360 | 5 | 0.161 | 0.113 | [-0.060, 0.382] | 1.431 | .152 | | | |
| Graduate/Professional | 502 | 6 | 0.496 | 0.273 | [-0.039, 1.030] | 1.818 | .069 | | | |
| Total between | | | | | | | | 7.732 | 1 | .005 |
| Discipline | | | | | | | | | | |
| Education | 539 | 5 | 0.604 | 0.282 | [0.051, 1.156] | 2.141 | .032 | | | |
| Other disciplines | 323 | 6 | 0.104 | 0.119 | [-0.129, 0.336] | 0.875 | .382 | | | |
| Total between | | | | | | | | 16.773 | 1 | <.001 |
| Country | | | | | | | | | | |
| USA | 701 | 7 | 0.285 | 0.260 | [-0.225, 0.795] | 1.096 | .273 | | | |
| Other countries | 161 | 4 | 0.328 | 0.122 | [0.089, 0.567] | 1.691 | .007 | | | |
| Total between | | | | | | | | 0.520 | 1 | .471 |
| Publication source | | | | | | | | | | |
| Journal article | 595 | 8 | 0.354 | 0.219 | [-0.075, 0.783] | 1.616 | .106 | | | |
| Dissertation | 267 | 3 | 0.250 | 0.247 | [-0.234, 0.734] | 1.031 | .311 | | | |
| Total between | | | | | | | | 0.015 | 1 | .904 |

Table C

Moderator Analyses (Synchronous vs. Face-to-Face Cognitive Outcomes)

| Effect | n | k | g | SE | 95% CI | Z | p | Q-value | df | p (heterogeneity) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Overall | 1833 | 4 | -0.198 | 0.281 | [-0.749, 0.352] | -0.706 | .480 | 29.824 | 3 | <.001 |
| Course duration | | | | | | | | | | |
| Less than one semester | 56 | 1 | -0.073 | 0.249 | [-0.561, 0.415] | -0.292 | .770 | | | |
| One semester and longer | 1777 | 3 | -0.244 | 0.362 | [-0.953, 0.465] | -0.674 | .501 | | | |
| Total between | | | | | | | | 0.050 | 1 | .824 |
| Instructional method | | | | | | | | | | |
| Lecture | 1833 | 4 | -0.198 | 0.281 | [-0.749, 0.352] | -0.706 | .480 | | | |
| Total between | | | | | | | | 0 | 0 | 1 |
| Student equivalent | | | | | | | | | | |
| Non-random assignment | 1553 | 2 | -0.412 | 0.751 | [-1.885, 1.060] | -0.549 | .583 | | | |
| Random assignment | 280 | 2 | 0.010 | 0.117 | [-0.220, 0.240] | 0.083 | .934 | | | |
| Total between | | | | | | | | 0.136 | 1 | .713 |
| Learner level | | | | | | | | | | |
| Undergraduate | 280 | 2 | 0.010 | 0.118 | [-0.221, 0.241] | 0.085 | .993 | | | |
| Graduate/Professional | 1553 | 2 | -0.418 | 0.757 | [-1.901, 1.066] | -0.552 | .581 | | | |
| Total between | | | | | | | | 0.125 | 1 | .723 |
| Discipline | | | | | | | | | | |
| Other disciplines | 1833 | 4 | -0.201 | 0.282 | [-0.754, 0.353] | -0.711 | .477 | | | |
| Total between | | | | | | | | 0 | 0 | 1 |
| Country | | | | | | | | | | |
| USA | 1777 | 3 | -0.247 | 0.363 | [-0.959, 0.465] | -0.679 | .497 | | | |
| Other countries | 56 | 1 | -0.074 | 0.252 | [-0.568, 0.420] | -0.292 | .770 | | | |
| Total between | | | | | | | | 0.052 | 1 | .819 |
| Publication source | | | | | | | | | | |
| Journal article | 56 | 1 | -0.074 | 0.252 | [-0.568, 0.420] | -0.292 | .770 | | | |
| Dissertation | 1777 | 3 | -0.247 | 0.363 | [-0.959, 0.465] | -0.679 | .497 | | | |
| Total between | | | | | | | | 0.052 | 1 | .819 |

Table D

Moderator Analyses (Synchronous vs. Face-to-Face Affective Outcomes)

| Effect | n | k | g | SE | 95% CI | Z | p | Q-value | df | p (heterogeneity) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Overall | 1080 | 5 | 0.195 | 0.038 | [-0.195, 0.568] | 0.957 | .338 | 22.520 | 4 | <.001 |
| Course duration | | | | | | | | | | |
| Less than one semester | 74 | 1 | 0.962 | 0.252 | [0.469, 1.455] | 3.822 | <.001 | | | |
| One semester and longer | 792 | 3 | 0.065 | 0.143 | [-0.216, 0.345] | 0.451 | .652 | | | |
| Unknown | 151 | 1 | -0.327 | 0.150 | [-0.621, -0.032] | -2.175 | .030 | | | |
| Total between | | | | | | | | 3.149 | 2 | .207 |
| Instructional method | | | | | | | | | | |
| Interactive lesson | 1080 | 5 | 0.186 | 0.195 | [-0.195, 0.568] | 0.957 | .338 | | | |
| Total between | | | | | | | | 0 | 0 | 1 |
| Student equivalent | | | | | | | | | | |
| Non-random assignment | 74 | 1 | 0.962 | 0.252 | [0.469, 1.455] | 3.822 | <.001 | | | |
| Random assignment | 792 | 3 | 0.065 | 0.143 | [-0.216, 0.345] | 0.451 | .652 | | | |
| Unknown | 214 | 1 | -0.327 | 0.150 | [-0.621, -0.032] | -2.175 | .030 | | | |
| Total between | | | | | | | | 3.149 | 2 | .207 |
| Learner level | | | | | | | | | | |
| Undergraduate | 945 | 3 | -0.061 | 0.162 | [-0.378, 0.257] | -0.375 | .708 | | | |
| Graduate/Professional | 135 | 2 | 0.531 | 0.431 | [-0.314, 1.376] | 1.232 | .218 | | | |
| Total between | | | | | | | | 10.570 | 1 | .001 |
| Discipline | | | | | | | | | | |
| Education | 74 | 1 | 0.962 | 0.252 | [0.469, 1.455] | 3.822 | <.001 | | | |
| Other disciplines | 1006 | 4 | 0.044 | 0.017 | [-0.297, 0.209] | -0.338 | .735 | | | |
| Total between | | | | | | | | 15.904 | 1 | <.001 |
| Country | | | | | | | | | | |
| USA | 1006 | 3 | 0.065 | 0.143 | [-0.216, 0.345] | 0.451 | .652 | | | |
| Other countries | 74 | 2 | 0.302 | 0.644 | [-0.961, 1.565] | 0.468 | .639 | | | |
| Total between | | | | | | | | 0.039 | 1 | .843 |
| Publication source | | | | | | | | | | |
| Journal article | 1047 | 4 | 0.125 | 0.210 | [-0.288, 0.537] | 0.592 | .554 | | | |
| Dissertation | 33 | 1 | 0.553 | 0.247 | [-0.129, 1.235] | 1.590 | .112 | | | |
| Total between | | | | | | | | 2.712 | 1 | .100 |

A Meta-Analysis on the Effects of Synchronous Online Learning on Cognitive and Affective Educational Outcomes by Florence Martin, Ting Sun, Murat Turk, and Albert D. Ritzhaupt is licensed under a Creative Commons Attribution 4.0 International License.