Volume 23, Number 1

Ting-Chia Hsu¹, Hal Abelson², and Jessica Van Brummelen²*

¹Department of Technology Application and Human Resource Development, National Taiwan Normal University; ²Department of Electrical Engineering and Computer Science, Massachusetts Institute of Technology, USA

The purpose of this study was to design an artificial intelligence (AI) application curriculum for secondary schools. The learning objective of the curriculum was to enable students to learn to apply conversational AI on a block-based programming platform. The curriculum was implemented in formal classes at a secondary school for six weeks. The study compared the learning performance of students in one class, who were taught with the cycle of experiential learning, with that of students in another class, who were taught with conventional instruction, termed the cycle of doing projects. Two factors, learning approach and gender, were taken into account. The results showed that females' learning effectiveness was significantly better than that of males, regardless of whether they used experiential learning or the conventional project approach. Most of the males tended to be distracted from the conversational AI curriculum and misbehaved during the conversational AI activities. In particular, in Voice User Interface (VUI) performance under the conventional learning approach, the females significantly outperformed the males. The results of a two-way ANCOVA revealed a significant interaction between gender and learning approach on computational thinking concepts. Females taught with the conventional approach of doing projects scored highest on computational thinking concepts of all the groups.

Keywords: gender studies, conversational AI application, experiential learning, block-based programming

In the technology era, students face many hurdles in learning to program, from understanding complex terminology, syntax, and error messages to learning about functions, iterations, and new algorithms; some students, even at the university level, find programming difficult (Piwek & Savage, 2020). Consequently, many researchers have investigated innovative and useful approaches to teaching and learning computer programming. For example, researchers have proposed an experiential learning cycle, derived from project-based learning, for learning computer science (Pucher & Lehner, 2011). Such methods involve concrete experience, the application of acquired knowledge, the contextualization of projects in the real world, and hands-on implementation, all of which are highly relevant to developing computer programs (Efstratia, 2014; Sendall et al., 2019).

With the fast-paced, continual development of computer science, including major advances in artificial intelligence (AI) and machine learning, and with the rapid development of hardware, AI applications have become common in daily life (Hsu et al., 2021). One rapidly growing subfield is conversational AI, the ability of machines to converse with humans, which includes voice-based technologies such as Amazon's Alexa. The goal of the current study was therefore to investigate the effectiveness of the cycle of experiential learning and the cycle of doing projects in a conversational AI curriculum. Specifically, this research compared these two teaching approaches, the cycle of experiential learning and the conventional cycle of doing projects, using a visual programming interface for conversational AI applications in MIT App Inventor (Van Brummelen, 2019). The conversational AI curriculum we developed allowed young students to connect audio interaction with the Internet of Things (IoT) or with simulated interaction in the block-based programming environment. This innovative, applied AI curriculum was designed to be implemented in junior high schools.

For novices and young students, there is evidence that visual programming, also termed block-based programming, is more effective for teaching programming than conventional command-line programming with complex syntax (Cetin, 2016). In this study, the term visual programming tools refers to block-based programming tools such as MIT App Inventor or Scratch. Compared with conventional text-based programming, such visual programming tools have helped novices focus fully on learning to solve problems and on understanding the logic and framework of the overall program, rather than on specific semantics or syntax (Grover & Pea, 2013; Hsu et al., 2018; Lye & Koh, 2014).

In conventional programming, programs are written with strict syntax, which can be difficult for the general population to learn, especially non-native English speakers, since a program cannot run successfully if it has even minor spelling errors. In contrast, when students build programs with block-based programming, such syntax errors cannot occur. Block-based programming emphasizes recognition over recall: code blocks are readily available in the visual interface. Furthermore, the blocks are categorized according to their function or logic. Students need only concentrate on choosing appropriate blocks to complete the work they want to do or to create the effect they desire, rather than memorizing the syntax or particular keywords of a programming language. Moreover, the shape and color of the blocks scaffold students by indicating which blocks can be linked together and how code can (or cannot) be assembled. Through this process of visual code development, students learn how programs are composed and that different blocks have different functions or properties. With block-based programming, students usually need only to drag and connect blocks, which reduces cognitive load and allows them to focus on the logic and structures involved in programming rather than on the syntax of writing programs (Kelleher & Pausch, 2005). Block-based programming also provides students with media-rich learning environments, allowing them to connect with various personal interests (Brennan & Resnick, 2012). Chiu (2020) found that learners were very positive about creating applications (apps) through visual programming and project development, and recommended that novice programmers create apps with block-based programming. Finally, when students used a visual programming tool to write a program, they tended to focus on solving problems.
Researchers have indicated that visual programming tools have a positive impact on programming self-efficacy and decrease student frustration (Yukselturk & Altiok, 2017).

It is especially important to reduce learning frustration for those who are underrepresented in computer science, as they face additional challenges when they first enter the field. Furthermore, it is important to increase their participation in computer science, as underrepresented groups provide unique perspectives and diverse, innovative solutions. In this paper, we investigated the effectiveness of different learning techniques by gender, since historically, females have been underrepresented in computer science, and the relative number of females entering the field has significantly decreased over the past 30 years (Weston et al., 2019). By determining and using the most effective pedagogical techniques for computer science by gender, more females may enter the field, and the gender gap may close.

A previous study has shown that gender impacted the ease of use and intention to use block-based programming (Cheng, 2019). Nonetheless, very little is known about the effect of gender on learning computational thinking skills in primary and secondary education (Kalelioǧlu, 2015). Due to the shortage of females participating in science, technology, engineering and mathematics (STEM) domains in comparison with the number of males, many countries have recently encouraged females to participate in those domains. However, researchers have indicated that the participation rate of females is still lower than that of males in the computer science domain (Cheryan et al., 2017). The difference in male and female interest in computer science likely originates from females having less experience learning computer science during childhood (Adya & Kaiser, 2005).

Information processing theory research has also indicated that males and females differ in how the brain perceives and processes information (Meyers-Levy, 1986). Males tended to rely on the right hemisphere to process and select incoming information, and thus often attended to visual information or contextual signals while ignoring processing details (Meyers-Levy, 1989). Conversely, females tended to prefer using the left hemisphere to accept and analyze incoming information in detail, often resulting in higher stress levels. Moreover, females tended to relate, collaborate, and share information with others (Putrevu, 2001). Because the genders differ in information processing, they tend to filter and accept different types of input from the same information (Martin et al., 2002). Accordingly, it is worth exploring the effect of gender on new curricula such as the conversational AI curriculum with MIT App Inventor.

A previous study showed no significant difference between genders in students' performance when programming with code.org, although females' average reflective ability was slightly higher than that of males (Kalelioǧlu, 2015). Another study also found no significant difference between genders in LEGO construction and related programming, although females paid attention to the task instructions whereas males rarely did (Lindh & Holgersson, 2007). However, other studies have found significant gender differences in learning to program and acquiring computational thinking skills (Korkmaz & Altun, 2013; Özyurt & Özyurt, 2015).

According to the cognitivist view of information processing theory, females tend to perceive information in detail and to concentrate on sharing and correlating information when processing it, whereas males tend to attend to the context of the information (Putrevu, 2001). From the perspectives of selective information input and gender schema in information processing theory, males and females have demonstrated slight differences in how they select and process information.

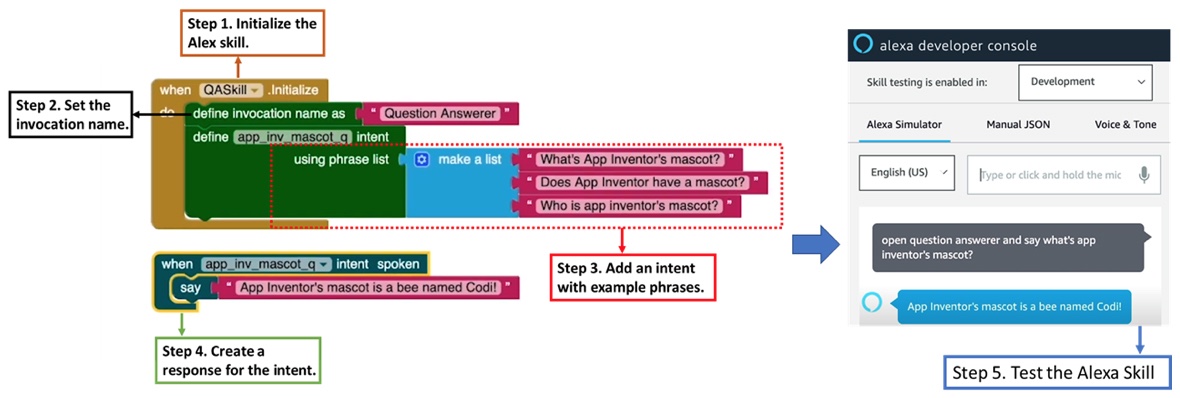

Many countries have encouraged females to engage in STEM disciplines, and females' experiences during K-12 education affect their choices to continue with those subjects in the future. In addition, AI education in K-12 has become more popular (Long & Magerko, 2020; Touretzky et al., 2019). Given this growing popularity and the gender gap in STEM, it is important to explore the effects of gender on AI education. Specifically, we aimed to explore these effects using the conversational AI curriculum developed by Van Brummelen (2019). AI literacy has become increasingly important, particularly with the prevalence of voice-based AI technologies such as Alexa, Google Home, and Siri. Voice technology is helpful for people who are unable to use conventional input devices, as they can talk directly to a computer or smartphone instead of typing or using a mouse. Figure 1 shows an example of a conversational AI application.

Figure 1

Example of a Conversational VoiceBot in the Alexa Simulator (Amazon, 2021)

The conversational AI system providing the voice user interface (VUI), sometimes also called a voicebot, is an intelligent assistant in people's daily lives that interacts with them through voice conversations. Conversational AI is the skeuomorphism of VUI. The innovation of this study was to implement the conversational AI curriculum in a formal secondary school classroom setting. The two approaches used to teach this curriculum were the cycle of doing projects and the cycle of experiential learning. It was expected that the junior high school students would gain hands-on experience with programming and with applying AI through the conversational AI curriculum.

The curriculum taught students to develop mobile applications and Amazon Alexa skills (the programs that run on voice-first Alexa devices) using MIT App Inventor (Van Brummelen, 2019). MIT App Inventor is a block-based programming tool that encourages the practice of computational thinking, including logical reasoning and problem-solving processes. Our study evaluated whether different learning approaches (conventional instruction using the cycle of doing projects vs. the cycle of experiential learning) and gender affected students' learning effectiveness in conversational AI, their VUI performance, and their scores on the computational thinking concept scale. The following research questions guided our investigation.

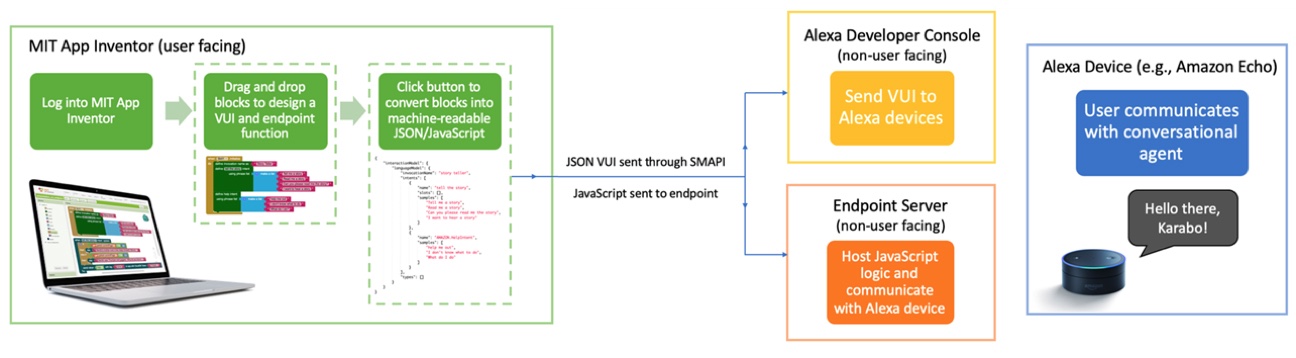

The conversational AI in this study involved using audio to control Amazon Alexa. To make an Alexa skill, each student learned to write the conversation program with block-based programming. First, the student logged onto MIT App Inventor and initialized the Alexa skill by dragging a block from the block menu, shown as step 1 in Figure 2. Second, the student dragged and dropped blocks to program the Alexa skill, shown as steps 2 to 4 in Figure 2. The student then clicked a button to send the skill to Amazon. This action converted the blocks into text-based code readable by Alexa devices or the Amazon website, as shown in the right-hand screenshot in Figure 2.
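As a rough illustration of the block-to-text conversion described above, the sketch below turns a simplified, hypothetical representation of a block program (an invocation name plus intents with sample utterances) into JSON shaped like the top level of an Alexa Skills Kit interaction model. The input format, the invocation name, and the intent names are assumptions made for illustration; the source does not specify the exact text that MIT App Inventor generates.

```python
import json

# Hypothetical, simplified stand-in for what the blocks in Figure 2 express.
# This is NOT MIT App Inventor's internal representation.
BLOCK_PROGRAM = {
    "invocation_name": "my helper",
    "intents": {
        "GreetIntent": ["hello", "say hi"],
        "WeatherIntent": ["what is the weather", "is it raining"],
    },
}


def to_interaction_model(program: dict) -> dict:
    """Map the simplified block program onto the top-level shape of an
    Alexa Skills Kit interaction model (invocation name plus intents)."""
    intents = [
        {"name": name, "slots": [], "samples": list(samples)}
        for name, samples in program["intents"].items()
    ]
    return {
        "interactionModel": {
            "languageModel": {
                "invocationName": program["invocation_name"],
                "intents": intents,
            }
        }
    }


if __name__ == "__main__":
    # Print the kind of text-based definition a skill is built from.
    print(json.dumps(to_interaction_model(BLOCK_PROGRAM), indent=2))
```

The point of the sketch is the direction of the translation: students manipulate blocks, and a generator emits structured text that Amazon's services can consume, so students never edit that text by hand.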

Figure 2

Example Program in the Block-Based Programming Conversational AI Interface

This conversational AI tool in the block-based programming environment was developed for K-12 students to create their own conversational agents (Van Brummelen, 2019). Students chatted with Alexa or the Alexa simulator website after they wrote the conversational AI program. Amazon has embedded natural language processing in the Alexa system and simulator. The combination of Amazon Alexa and MIT App Inventor was chosen as a friendly learning tool and resource for primary and secondary school students to experience and apply conversational AI, even though they were not computer science undergraduates.

The system framework behind the block-based programming platform is depicted in Figure 3. The system ensured a low barrier to entry for primary and secondary school students; without it, creating Alexa skills would be difficult even for a student majoring in computer science. For example, connecting an AWS Lambda function to the voice user interface by hand is complicated. The block-based interface design in Figure 3 abstracted this step away and simplified the development of students' own conversational agents.
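To illustrate what the block-based interface hides, the following is a minimal sketch of an AWS Lambda handler for an Alexa skill written in Python. It assumes the documented Alexa Skills Kit request and response JSON format (`request.type`, `request.intent.name`, `response.outputSpeech`); the intent names and replies are hypothetical, and this is not the code that MIT App Inventor generates or deploys.

```python
# Hypothetical intent -> spoken reply table (illustrative only).
REPLIES = {
    "GreetIntent": "Hello! Nice to meet you.",
    "WeatherIntent": "I cannot check the weather yet.",
}


def build_response(text: str, end_session: bool = True) -> dict:
    """Wrap plain text in the JSON envelope an Alexa skill returns."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event: dict, context=None) -> dict:
    """Entry point that AWS Lambda calls for each incoming Alexa request."""
    request = event["request"]
    if request["type"] == "LaunchRequest":
        # The user opened the skill without asking for anything specific,
        # so keep the session open for a follow-up utterance.
        return build_response("Welcome! What would you like to do?", end_session=False)
    if request["type"] == "IntentRequest":
        intent_name = request["intent"]["name"]
        return build_response(REPLIES.get(intent_name, "Sorry, I did not understand."))
    # Other request types (e.g. SessionEndedRequest) need no speech.
    return {"version": "1.0", "response": {}}
```

Even this small handler requires students to understand request routing and a nested JSON response format, plus the separate work of deploying the function and linking it to the skill, which is the complexity the block interface abstracts.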

Figure 3

System Framework of the Conversational AI Programming Tool in MIT App Inventor

From “Tools to Create and Democratize Conversational Artificial Intelligence,” by J. Van Brummelen, 2019, master’s thesis, MIT, Cambridge, p. 52 (https://hdl.handle.net/1721.1/122704).

Conversational AI is directly related to Brennan and Resnick's (2013) computational thinking (CT) skill framework. In our study, students engaged with: (a) CT concepts including events, conditionals, data, sequences, loops, parallelism, and operators; (b) CT practices such as being incremental and iterative, testing and debugging, reusing and remixing, and abstracting and modularizing; and (c) CT perspectives such as expressing, connecting, and questioning. In addition to the computational thinking naturally embedded in the conversational AI curriculum, students also learned AI-specific concepts, practices, and perspectives including, but not limited to, (a) classification (e.g., determining intent); (b) prediction (e.g., predicting the best next letter); (c) generation (e.g., generating a text block); (d) training, testing, and validating (e.g., varying training length); and (e) project evaluation (e.g., questioning project ethics).
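Two of the AI-specific concepts listed above, classification (determining intent) and prediction (predicting the best next letter), can be illustrated with deliberately simple models. The sketch below uses word overlap for intent classification and letter-pair (bigram) counts for next-letter prediction; the sample utterances, intent names, and function names are hypothetical teaching examples, not the curriculum's materials or the natural language models inside Alexa.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional

# (a) Classification: hypothetical sample utterances for each intent.
SAMPLES: Dict[str, list] = {
    "GreetIntent": ["hello there", "hi alexa", "good morning"],
    "WeatherIntent": ["what is the weather", "will it rain today"],
}


def classify_intent(utterance: str) -> str:
    """Return the intent whose best-matching sample shares the most words
    with the utterance (ties go to the first intent listed)."""
    words = set(utterance.lower().split())

    def overlap(intent: str) -> int:
        return max(len(words & set(sample.split())) for sample in SAMPLES[intent])

    return max(SAMPLES, key=overlap)


# (b) Prediction: count which letter follows which in a training text.
def train_bigrams(text: str) -> Dict[str, Counter]:
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(text, text[1:]):
        counts[current][following] += 1
    return counts


def predict_next_letter(counts: Dict[str, Counter], letter: str) -> Optional[str]:
    """Most frequent letter seen after `letter`, or None if never seen."""
    if letter not in counts:
        return None
    return counts[letter].most_common(1)[0][0]


if __name__ == "__main__":
    print(classify_intent("Will it rain tomorrow"))
    print(predict_next_letter(train_bigrams("banana"), "a"))
```

Both models "learn" only from the examples they are given, which makes the training-data idea in item (d) concrete: adding or removing sample utterances changes which intent wins.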

It was hypothesized that an appropriate instructional approach would help students learn to build conversational AI in computer education. Therefore, this empirical study evaluated two different learning approaches, one in each of two classes.

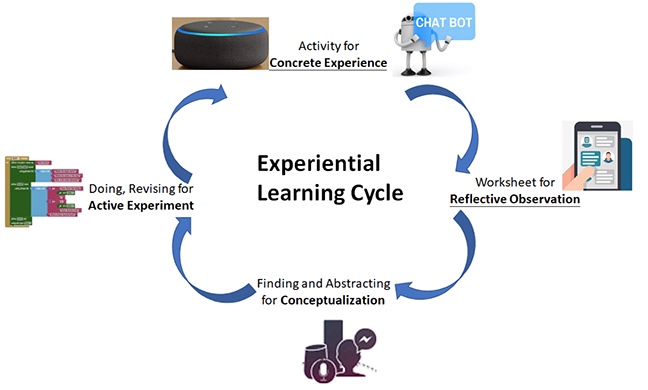

One class, labelled the experimental group, used the cycle of experiential learning; its instructional design is shown in Figure 4. The students already had concrete experience using conversational AI. For example, they used the phrase “Hey Google” to give their mobile phones spoken rather than typed commands and received spoken and data responses from the smartphone. The students filled out a worksheet about what they observed and found after using conversational AI in their daily lives, and at this stage they also thought about new tasks. The teacher encouraged the students to have conversations with the computer, and the students recorded on the worksheet what they said and how the system reacted. The students also practiced problem decomposition in this stage. In the abstract conceptualization stage, they practiced pattern recognition and abstraction for problem solving. At this stage, students used their Amazon accounts to log into MIT App Inventor, but they did not yet write their own programs. The teacher provided them with different blocks and asked them to conceptualize which block could be used for which task. Finally, in the active experimentation stage, the students implemented their own programs and tested the results. If they encountered problems, they debugged and revised the program, asking the teacher questions when needed.

Figure 4

Experiential Learning Cycle Integrated into the Experimental Group’s Learning Process

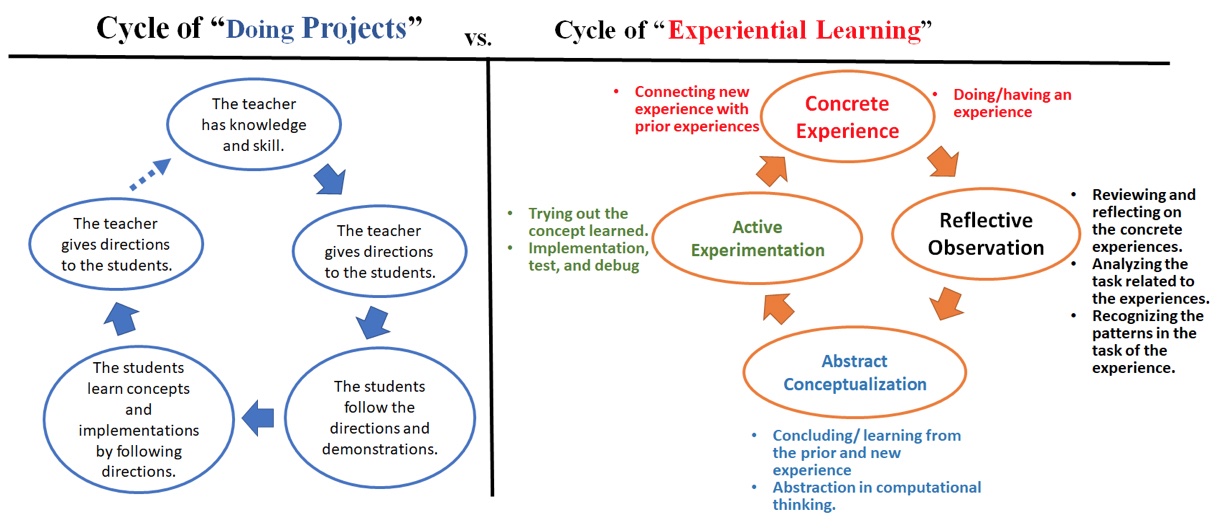

The conventional instruction approach, referred to as the cycle of doing projects, was used in the other class and is depicted on the left-hand side of Figure 5. The teacher guided the process step by step. Students followed the teacher's directions, and when they implemented the conversational AI project, they imitated the teacher's code demonstrations. Figure 5 compares the cycle of doing projects used for conventional instruction in this study with the cycle of experiential learning.

Figure 5

The Cycle of Doing Projects (Control Group) Compared With the Cycle of Experiential Learning (Experimental Group)

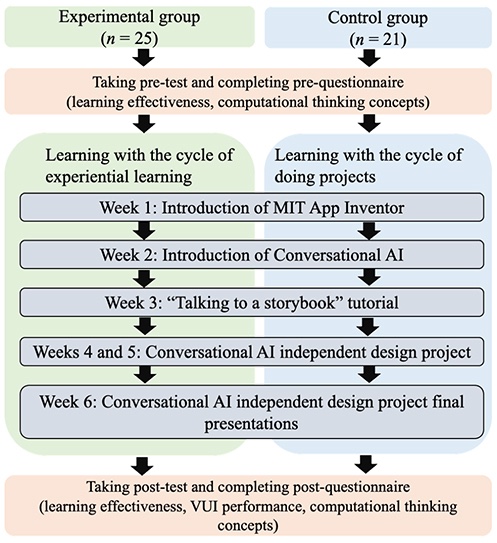

A total of 46 seventh-grade students participated in the conversational AI curriculum. As shown in Table 1, 25 were assigned to the experimental group (cycle of experiential learning), and 21 were assigned to the control group (conventional cycle of doing projects).

Table 1

Gender and Number of Participants in Learning Approach Groups

| Learning approach | Gender | n |
|---|---|---|
| Cycle of experiential learning (experimental group) | Female | 11 |
| | Male | 14 |
| Cycle of doing projects (control group) | Female | 7 |
| | Male | 14 |

The purpose of this research study was to determine whether the students could understand conversational artificial intelligence (the ability of a computer to have conversations with humans) and develop programming projects through formal classes in secondary school via two different learning approaches. Participation in the study was completely voluntary, and the students' parents completed consent forms. The students were able to decline to answer any or all of the questions. If a student declined to answer any of the questions, he or she would no longer be participating in the study. The students could decline participation at any time. The data collected in this study were reported in a manner that protected individuals' identities.

The conversational AI curriculum took a total of six weeks. The students in the two classes learned computational thinking and AI skills from the curriculum by developing their own conversational AI projects over the six weeks. The learning objectives of the conversational AI curriculum were to learn how conversational agents decide what to say, to become comfortable developing conversational AI projects, and to better understand conversational agents. Accordingly, the students were encouraged to develop positive, socially useful, and meaningful projects in the course.

The pre-test of prior knowledge included 15 multiple-choice questions, with a perfect score of 100. The post-test for measuring the learning effectiveness also comprised 15 multiple-choice questions, with a perfect score of 100.

Figure 6

The Experimental Flow Chart

VUI performance and computational thinking concepts were measured with five-point Likert scales ranging from strongly disagree to strongly agree. The VUI performance scale had five questions (Van Brummelen, 2019), namely, (a) I have interacted with conversational agents, (b) I understand how conversational agents decide what to say, (c) I feel comfortable making apps that interact with conversational agents, (d) I can think of ways that conversational agents can solve problems in my everyday life, and (e) my understanding of conversational agents improved through the curriculum. The Cronbach's α of the VUI performance scale was 0.883. The computational thinking concept scale had five questions (Sáez-López et al., 2016), outlined below.
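Cronbach's α, the internal-consistency coefficient reported for both scales, can be computed from a respondents × items matrix of Likert scores with the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The sketch below is illustrative only; the function name and toy data are hypothetical, and the study's raw responses are not available here.

```python
from statistics import pvariance
from typing import List, Sequence


def cronbach_alpha(responses: List[Sequence[float]]) -> float:
    """Cronbach's alpha for a list of respondents, each a sequence of
    item scores (e.g. five Likert items scored 1-5)."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items")
    items = list(zip(*responses))              # column-wise scores per item
    item_var_sum = sum(pvariance(col) for col in items)
    totals = [sum(row) for row in responses]   # each respondent's total score
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_var_sum / total_var)


if __name__ == "__main__":
    toy = [[4, 5, 4, 4, 5], [3, 3, 2, 3, 3], [5, 5, 5, 4, 5], [2, 3, 2, 2, 3]]
    print(round(cronbach_alpha(toy), 3))
```

Population variances are used for both the items and the totals; using sample variances for both gives the same α, because the n/(n − 1) factor cancels in the ratio.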

After learning block-based programming, I:

The reliability of the original combined scale was 0.789. The Cronbach’s α value of the retest reliability of the computational thinking concept scale was 0.921.

The students’ behaviors were video-recorded in the class. After the quantitative analysis, the recordings were used to infer and understand why the students learned well or not.

A two-way ANCOVA was employed to compare the learning effectiveness of the conversational AI curriculum across learning approaches (i.e., the cycle of doing projects and the cycle of experiential learning) and genders (males and females). The covariate was the pre-test, which measured the students' prior knowledge before the conversational AI curriculum. The independent variables were gender and learning approach. The dependent variable was the post-test, which measured the students' learning effectiveness after they completed the curriculum. Levene's test was not significant (F = 1.424, P = .249 > .050), and the interactions between the pre-test and each factor were not significant (Table 2), suggesting that a common regression coefficient was appropriate for the two-way ANCOVA.

Table 2 shows the two-way ANCOVA results. There was a significant interaction between the two independent factors, learning approach and gender, on the students' learning results (F = 12.493**, P = .001 < .010). The effect size (partial η²) was 0.247, indicating a medium effect.

Table 2

Two-Way ANCOVA Tests of Between-Subjects Effects

| Source | SS | MS | F | P | Partial η² |
|---|---|---|---|---|---|
| Learning approach × Pre-test | 362.82 | 362.82 | 0.929 | .341 | |
| Gender × Pre-test | 898.18 | 898.18 | 2.300 | .138 | |
| Learning approach | 117.24 | 117.24 | 0.300 | .587 | |
| Gender | 83.65 | 83.65 | 0.214 | .646 | |
| Learning approach × Gender | 4879.23 | 4879.23 | 12.493** | .001 | 0.247 |

Note. ** p < .01.

A simple main-effect analysis split by gender was then conducted; the results are presented in Table 3. Within each gender, Levene's test was not significant for males (F = 0.086, P = .772 > .050) or females (F = 2.137, P = .163 > .050). However, the pre-test interacted significantly with learning approach for both males (F = 4.803*, P = .038 < .050) and females (F = 8.012*, P = .013 < .050), which violates the homogeneity-of-regression-slopes assumption of ANCOVA. Therefore, the Johnson-Neyman process was conducted to identify the ranges of pre-test scores over which the two learning approaches differed significantly.
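The Johnson-Neyman process can be sketched as follows, assuming the standard two-group formulation with a single covariate: fit a separate regression of post-test on pre-test in each group, pool the residual variance (df = n1 + n2 − 4), and solve for the pre-test values at which the predicted group difference d(x) = Δa + Δb·x is exactly at the critical t value, i.e. d(x)² = t² · Var(d(x)). This is a minimal illustration, not necessarily the software or exact formulation the authors used; the function names are hypothetical, and `t_crit` must be supplied by the caller because the Python standard library has no t-distribution quantile function.

```python
import math
from typing import List, Sequence, Tuple


def _simple_regression(x: Sequence[float], y: Sequence[float]):
    """OLS fit y = a + b*x; returns (a, b, mean_x, Sxx, residual SS, n)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    return a, b, mx, sxx, ss_res, n


def jn_terms(x1, y1, x2, y2) -> Tuple[float, float, float, float, float]:
    """Difference line d(x) = da + db*x and its variance pieces
    V(x) = va + 2*c*x + vb*x**2, using a pooled residual variance."""
    a1, b1, m1, sxx1, r1, n1 = _simple_regression(x1, y1)
    a2, b2, m2, sxx2, r2, n2 = _simple_regression(x2, y2)
    s2 = (r1 + r2) / (n1 + n2 - 4)                     # pooled error variance
    va = s2 * (1 / n1 + m1 ** 2 / sxx1 + 1 / n2 + m2 ** 2 / sxx2)
    vb = s2 * (1 / sxx1 + 1 / sxx2)
    c = -s2 * (m1 / sxx1 + m2 / sxx2)                  # summed Cov(intercept, slope)
    return a1 - a2, b1 - b2, va, vb, c


def johnson_neyman(x1, y1, x2, y2, t_crit: float) -> List[float]:
    """Covariate values solving d(x)**2 = t_crit**2 * V(x), sorted."""
    da, db, va, vb, c = jn_terms(x1, y1, x2, y2)
    t2 = t_crit ** 2
    qa = db ** 2 - t2 * vb
    qb = 2 * (da * db - t2 * c)
    qc = da ** 2 - t2 * va
    disc = qb ** 2 - 4 * qa * qc
    if qa == 0 or disc < 0:
        return []                                      # no real boundary
    root = math.sqrt(disc)
    return sorted([(-qb - root) / (2 * qa), (-qb + root) / (2 * qa)])
```

When two boundaries are returned and the leading coefficient `qa` is positive, the group difference is significant for covariate values below the smaller boundary or above the larger one, and non-significant in between, which is the form in which the results for females are reported below. A boundary may also fall outside the observed range of pre-test scores.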

Table 3

Simple Main-Effect Analysis Based on the Division of Gender

| Gender | Learning approach | n | Mean | SD | Adjusted mean | SE |
|---|---|---|---|---|---|---|
| Female | Cycle of experiential learning | 11 | 71.52 | 19.34 | 71.48 | 6.76 |
| | Cycle of doing projects | 7 | 67.62 | 25.94 | 67.84 | 8.50 |
| Male | Cycle of experiential learning | 14 | 42.86 | 21.04 | 42.15 | 6.47 |
| | Cycle of doing projects | 14 | 59.05 | 23.37 | 59.68 | 6.37 |

Note. * p < .05.

For males, when the pre-test score was below 57.646, the males using the cycle of doing projects outperformed those using the cycle of experiential learning, as shown in Figure 7. Conversely, males with high prior knowledge performed better with the cycle of experiential learning than with the cycle of doing projects.

Figure 7

Results of Johnson-Neyman Process for Males Using Different Learning Approaches

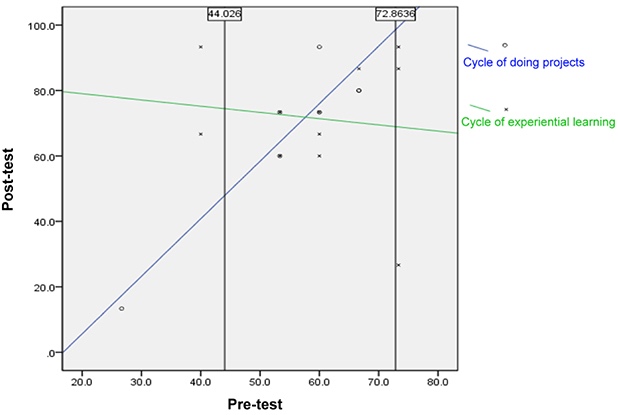

For females, when the pre-test score was below 44.026, the females using the cycle of experiential learning outperformed those using the cycle of doing projects. Conversely, when the pre-test score was above 72.864, the females using the cycle of doing projects performed better than those using the cycle of experiential learning, as shown in Figure 8.

Figure 8

Results of Johnson-Neyman Process for Females Using Different Learning Approaches

A simple main-effect analysis split by learning approach was also conducted; see the results in Table 4. Within each learning approach, Levene's test was not significant for the cycle of experiential learning (F = 0.116, P = .737 > .050) or for the cycle of doing projects (F = 4.101, P = .057 > .050). The pre-test did not interact with gender for the cycle of experiential learning (F = 1.596, P = .220 > .050). However, the pre-test interacted significantly with gender for the cycle of doing projects (F = 12.146**, P = .003 < .010). Therefore, the Johnson-Neyman process was conducted for this group.

Table 4

Simple Main-Effect Analysis Based on the Division of Learning Approaches

| Learning approach | Gender | n | Mean | SD | Adjusted mean | SE |
|---|---|---|---|---|---|---|
| Cycle of experiential learning | Female | 11 | 71.52 | 19.34 | 71.48 | 6.76 |
| | Male | 14 | 42.86 | 21.04 | 42.15 | 6.47 |
| Cycle of doing projects | Female | 7 | 67.62 | 25.94 | 67.84 | 8.50 |
| | Male | 14 | 59.05 | 23.37 | 59.68 | 6.37 |

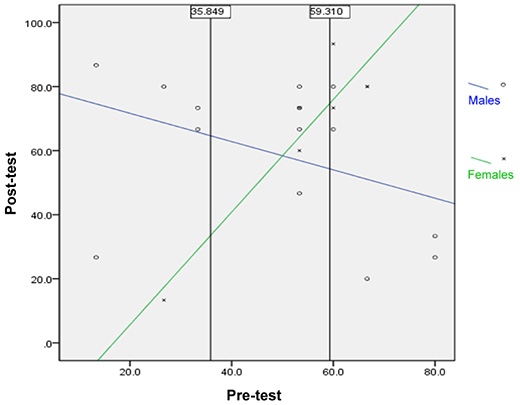

For the group using the cycle of doing projects, when the pre-test score was below 35.849, the males outperformed the females. Conversely, when the pre-test score was above 59.310, the females performed better than the males, as shown in Figure 9.

Figure 9

Results of Johnson-Neyman Process for Males and Females Using the Cycle of Doing Projects

Consequently, instructors are advised to consider students' prior knowledge when choosing learning approaches for secondary school students learning conversational AI. Overall, the cycle of experiential learning was as effective as the cycle of doing projects for this curriculum (there was no significant main effect of learning approach); however, there was a significant interaction between gender and learning approach. Classroom observations showed that most of the males tended to be distracted when they first studied the AI curriculum.

The VUI performance questionnaire contained five items. A two-way ANOVA was used to analyze the average score across the five items, which indicated whether students understood conversational artificial intelligence and had developed programming projects through the formal class in the secondary school with the two learning approaches. The dependent variable was the survey score after the instructional experiment. The two independent variables were gender and learning approach. Levene's test of homogeneity of variance was not significant (F(3,42) = 1.303, P = .286 > .05).

Table 5 shows the two-way ANOVA results for VUI performance. There was a significant interaction between learning approach and gender (F = 4.581*, P = .038 < .05, partial η² = 0.098). There was also a significant main effect of gender (F = 6.543*, P = .014 < .05, partial η² = 0.135) on students' perspectives of the conversational AI curriculum, whereas learning approach had no significant effect (F = 0.330, P = .569 > .05).
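The partial η² values in Table 5 can be recovered from each F ratio and its degrees of freedom with η²p = (F · df_effect) / (F · df_effect + df_error). The sketch below assumes df_effect = 1 for each two-level effect and df_error = 46 − 4 = 42 for the 2 × 2 between-subjects design, matching the F(3,42) reported for Levene's test; under these assumptions it reproduces the reported values. The function name is illustrative.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# Assumed df_error = 46 - 4 = 42 for the 2 x 2 ANOVA on VUI performance.
print(round(partial_eta_squared(6.543, 1, 42), 3))  # gender: 0.135
print(round(partial_eta_squared(4.581, 1, 42), 3))  # approach x gender: 0.098
```

This identity is a quick consistency check when only F and the degrees of freedom are available, since it avoids needing the sums of squares.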

Table 5

Tests of Between-Subject Effects Measure in the Two-Way ANOVA for VUI Performance

| Source | Type III SS | MS | F | P | Partial η² |
|---|---|---|---|---|---|
| Learning approach | 0.262 | 0.262 | 0.330 | .569 | |
| Gender | 5.204 | 5.204 | 6.543* | .014 | 0.135 |
| Learning approach × Gender | 3.644 | 3.644 | 4.581* | .038 | 0.098 |

Note. * p < .05.

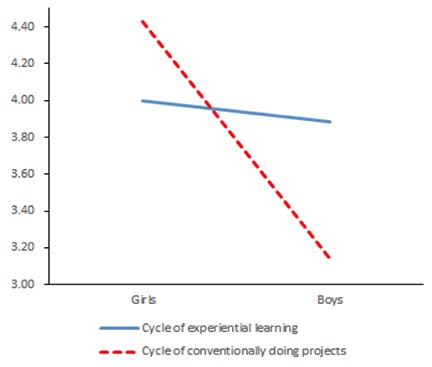

Because there was an interaction between learning approach and gender on students' VUI performance, a simple main-effect analysis was conducted. As Table 6 shows, the VUI performance of females learning with the cycle of experiential learning (mean = 4.00, SD = 0.63) and with the cycle of doing projects (mean = 4.43, SD = 0.63) was similar (t = 1.416, P = .176 > .05). Likewise, there was no significant difference (t = 1.924, P = .065 > .050) between males with the cycle of experiential learning (mean = 3.88, SD = 1.03) and those with the cycle of doing projects (mean = 3.14, SD = 1.01). In the cycle of doing projects, females' VUI performance (mean = 4.43, SD = 0.63) was significantly higher than males' (mean = 3.14, SD = 1.01; t = 2.923**, P = .009 < .01), with an effect size of 1.53. In the cycle of experiential learning, there was no significant difference (t = 0.322, P = .750 > .050) between females (mean = 4.00, SD = 0.63) and males (mean = 3.88, SD = 1.03). Overall, the females' VUI performance exceeded that of the males.
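The reported effect size of 1.53 is consistent with Cohen's d computed with the root mean square of the two standard deviations as the standardizer, d = (M₁ − M₂) / √((SD₁² + SD₂²) / 2). This formula choice is an inference from the reported numbers rather than something the source states; it also reproduces the effect sizes of 0.81 and 1.34 reported later for computational thinking. The function name is illustrative.

```python
import math


def cohens_d(mean1: float, sd1: float, mean2: float, sd2: float) -> float:
    """Cohen's d with the root mean square of the two SDs as standardizer."""
    return (mean1 - mean2) / math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)


# Females vs. males in the cycle of doing projects (VUI performance, Table 6).
print(round(cohens_d(4.43, 0.63, 3.14, 1.01), 2))  # 1.53
```

This standardizer ignores the unequal group sizes (7 vs. 14); a pooled SD weighted by n − 1 would give a smaller value, about 1.42, for the same comparison.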

Table 6

Descriptive Statistics Results After the Simple Main-Effect Analysis in VUI Performance

| Learning approach | Gender | n | Mean | SD | Adjusted mean | SE |
|---|---|---|---|---|---|---|
| Cycle of experiential learning | Female | 11 | 4.00 | 0.63 | 4.00 | 0.27 |
| | Male | 14 | 3.88 | 1.03 | 3.89 | 0.24 |
| Cycle of doing projects | Female | 7 | 4.43 | 0.63 | 4.43 | 0.34 |
| | Male | 14 | 3.14 | 1.01 | 3.14 | 0.24 |

Figure 10 shows the interaction between learning approach and gender on the students’ VUI performance. In the cycle of doing projects, the VUI performance of females was significantly better than that of males.

Figure 10

Interaction Between Learning Approach and Gender Regarding Students’ VUI Performance

A two-way ANCOVA was employed to compare the computational thinking of students by instructional approach and gender. The covariate was the initial measurement of computational thinking before the learning activity took place. The independent variables were gender (i.e., male and female) and learning approach (i.e., the cycle of experiential learning and the cycle of doing projects). The dependent variable was the post-measurement on the computational thinking scale. Levene's test was not significant (F(3,42) = 0.636, P = .596 > .050), and the pre-test did not interact significantly with either factor (Table 7), suggesting that a common regression coefficient was appropriate for the two-way ANCOVA.

Table 7 shows the two-way ANCOVA results for the computational thinking scale. The covariate (i.e., the pre-measurement of computational thinking) did not interact significantly with learning approach, gender, or their interaction, so the interaction between learning approach and gender on students' computational thinking concepts could be examined directly. This interaction was significant (F = 7.047*, P = .011 < .050). The effect size (partial η²) of the interaction was 0.147, indicating a small-to-medium effect because it exceeded 0.10, the value taken to represent a small effect (Cohen, 1988).

Table 7

Two-Way ANCOVA Tests of Between-Subjects Effects on Computational Thinking Concepts

| Source | SS | MS | F | p | Partial η² |
|---|---|---|---|---|---|
| Learning approach × Pre-test | 0.12 | 0.12 | 0.200 | .658 | |
| Gender × Pre-test | 2.02 | 2.02 | 3.438 | .071 | |
| Learning approach × Gender × Pre-test | 0.53 | 0.53 | 0.896 | .350 | |
| Learning approach | 0.00 | 0.00 | 0.000 | .992 | |
| Gender | 0.33 | 0.33 | 0.537 | .468 | |
| Learning approach × Gender | 4.30 | 4.30 | 7.047* | .011 | 0.147 |

Note. * p < .05.

Because the interaction between learning approach and gender was significant, a simple main-effect analysis was conducted. Table 8 shows that males taught with the cycle of experiential learning (mean = 3.86; SD = 0.91) had significantly better computational thinking (t = 2.140*; p = .042 < .050) than males taught with the cycle of doing projects (mean = 3.19; SD = 0.74), with an effect size of 0.81. Under the conventional instruction of the cycle of doing projects, females (mean = 4.20; SD = 0.77) showed significantly better computational thinking (t = 3.066**; p = .006 < .010) than males (mean = 3.19; SD = 0.74), with an effect size of 1.34. There was no significant difference (t = 1.791, p = .095 > .050) in computational thinking between females taught with the cycle of experiential learning (mean = 3.51; SD = 0.85) and females taught with the cycle of doing projects (mean = 4.20; SD = 0.77).
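The simple-effect statistics above can be approximated from the reported summary statistics alone. The paper does not state which effect-size formula was used; as an assumption, the sketch below divides the mean difference by the root mean square of the two SDs, which reproduces the reported 0.81 and 1.34 (a pooled SD weighted by degrees of freedom gives roughly 1.35 for the unequal 7 vs. 14 comparison). The pooled-variance Student's t reproduces t ≈ 2.14 with df = 26 for the male comparison. Helper names are hypothetical, and this is a check under stated assumptions, not the authors' procedure.

```python
import math


def cohens_d_avg_sd(m1, sd1, m2, sd2):
    """Cohen's d using the root mean square of the two SDs as the standardiser."""
    return (m1 - m2) / math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t (pooled variance) and its df from summaries."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, n1 + n2 - 2


# Males: experiential learning (3.86, 0.91, n = 14) vs. doing projects (3.19, 0.74, n = 14)
d_males = cohens_d_avg_sd(3.86, 0.91, 3.19, 0.74)
t_males, df_males = pooled_t(3.86, 0.91, 14, 3.19, 0.74, 14)
# Doing projects: females (4.20, 0.77) vs. males (3.19, 0.74)
d_projects = cohens_d_avg_sd(4.20, 0.77, 3.19, 0.74)
print(round(d_males, 2), round(t_males, 2), df_males, round(d_projects, 2))
# prints: 0.81 2.14 26 1.34
```

Summary statistics are rounded to two decimals, so values reconstructed this way can differ slightly from those computed on the raw scores.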

Table 8

Descriptive Data after the Simple Main-Effect Analysis for Computational Thinking Concepts

| Learning approach | Gender | n | Mean | SD | Adjusted mean | SE |
|---|---|---|---|---|---|---|
| Cycle of experiential learning | Female | 11 | 3.51 | 0.85 | 3.44 | 0.24 |
| | Male | 14 | 3.86 | 0.91 | 3.90 | 0.21 |
| Cycle of doing projects | Female | 7 | 4.20 | 0.77 | 4.08 | 0.30 |
| | Male | 14 | 3.19 | 0.74 | 3.26 | 0.21 |
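As an illustrative reading of the adjusted means in Table 8 (a descriptive difference of differences, not an additional test reported in this study), the interaction can be written as a contrast, where $\bar{y}^{*}$ denotes an adjusted mean, F and M denote female and male, and exp and proj denote the cycle of experiential learning and the cycle of doing projects:

$$
\Delta = \left(\bar{y}^{*}_{F,\mathrm{proj}} - \bar{y}^{*}_{M,\mathrm{proj}}\right) - \left(\bar{y}^{*}_{F,\mathrm{exp}} - \bar{y}^{*}_{M,\mathrm{exp}}\right) = (4.08 - 3.26) - (3.44 - 3.90) = 0.82 - (-0.46) = 1.28
$$

The gender gap favors males under the cycle of experiential learning and females under the cycle of doing projects; this reversal in sign is the crossover pattern that the significant learning approach × gender term in Table 7 reflects.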

Based on the results of this empirical study, when teachers instruct secondary school students in a conversational AI curriculum, it is recommended that low-achieving males and high-achieving females adopt the cycle of doing projects, and that high-achieving males and low-achieving females use the cycle of experiential learning, so as to meet their individual needs and differences.

This empirical study of applying the conventional cycle of doing projects to a conversational AI curriculum found that females performed better than males in terms of computational thinking concepts. According to information processing theory in cognitivism, males and females do not focus to the same degree when receiving and processing information. The theory holds that males require strong contextual links when processing information, so we suggest that instructors provide additional scaffolding. In particular, it would be helpful to emphasize context for males so as to prevent them from being distracted, as was observed in this study.

According to information processing theory, females focus on sharing information and on developing correlations among the pieces of information they are aware of. Compared with males, females are accustomed to taking in detailed information and understanding detailed processes. Future research should therefore further explore the effects of various learning approaches on K-12 students of different genders learning AI.

Limitations of this study included the small sample size of the instructional experiments and the small number of countries in which students have had experience learning the new conversational AI functions of MIT App Inventor. Given the increasing reliance on IoT, future research applying the conversational AI tool used in this study to K-12 education is encouraged.

The dataset is available by contacting the corresponding author.

The ethics rules and regulations of the Declaration of Helsinki were followed during the experiment. All the participants were volunteers and were told that they could quit the study at any time.

The researchers have no conflict of interest.

This study was supported in part by the Ministry of Science and Technology under contract number MOST 108-2511-H-003 -056 -MY3 and by the Hong Kong Jockey Club Charities Trust.

Adya, M., & Kaiser, K. M. (2005). Early determinants of women in the IT workforce: A model of girls’ career choices. Information Technology and People, 18(3), 230-259. https://doi.org/10.1108/09593840510615860

Amazon. (2021). Alexa Developer Console. Retrieved October 20, 2021, from https://developer.amazon.com/alexa/console/ask

Brennan, K., & Resnick, M. (2012). New frameworks for studying and assessing the development of computational thinking. Proceedings of the 2012 Annual Meeting of the American Educational Research Association (pp. 1-25). Vancouver, Canada.

Cetin, I. (2016). Preservice teachers’ introduction to computing: Exploring utilization of Scratch. Journal of Educational Computing Research, 54(7), 997-1021. https://doi.org/10.1177/0735633116642774

Cheng, G. (2019). Exploring factors influencing the acceptance of visual programming environment among boys and girls in primary schools. Computers in Human Behavior, 92, 361-372. https://doi.org/10.1016/j.chb.2018.11.043

Cheryan, S., Ziegler, S. A., Montoya, A. K., & Jiang, L. (2017). Why are some STEM fields more gender balanced than others? Psychological Bulletin, 143(1), 1-35.

Chiu, C. F. (2020). Facilitating K-12 teachers in creating apps by visual programming and project-based learning. International Journal of Emerging Technologies in Learning, 15(1), 103-118.

Efstratia, D. (2014). Experiential education through project based learning. Procedia - Social and Behavioral Sciences, 152, 1256-1260. https://doi.org/10.1016/j.sbspro.2014.09.362

Grover, S., & Pea, R. (2013). Computational thinking in K-12: A review of the state of the field. Educational Researcher, 42(1), 38-43. https://doi.org/10.3102/0013189X12463051

Hsu, T.-C., Abelson, H., Lao, N., Tseng, Y.-H., & Lin, Y.-T. (2021). Behavioral-pattern exploration and development of an instructional tool for young children to learn AI. Computers and Education: Artificial Intelligence, 2, 100012. https://doi.org/10.1016/j.caeai.2021.100012

Hsu, T.-C., Chang, S.-C., & Hung, Y.-T. (2018). How to learn and how to teach computational thinking: Suggestions based on a review of the literature. Computers & Education, 126, 296-310. https://doi.org/10.1016/j.compedu.2018.07.004

Kalelioǧlu, F. (2015). A new way of teaching programming skills to K-12 students: Code.org. Computers in Human Behavior, 52, 200-210. https://doi.org/10.1016/j.chb.2015.05.047

Kelleher, C., & Pausch, R. (2005). Lowering the barriers to programming: A taxonomy of programming environments and languages for novice programmers. ACM Computing Surveys, 37(2), 83-137. https://doi.org/10.1145/1089733.1089734

Korkmaz, Ö., & Altun, H. (2013). Engineering and CEIT student’s attitude towards learning computer programming. International Journal of Social Science Studies, 6(2), 1169-1185.

Lindh, J., & Holgersson, T. (2007). Does LEGO training stimulate pupils’ ability to solve logical problems? Computers & Education, 49(4), 1097-1111. https://doi.org/10.1016/j.compedu.2005.12.008

Long, D., & Magerko, B. (2020, April). What is AI literacy? Competencies and design considerations. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-16). Association for Computing Machinery, New York, NY, USA.

Lye, S. Y., & Koh, J. H. L. (2014). Review on teaching and learning of computational thinking through programming: What is next for K-12? Computers in Human Behavior, 41, 51-61. https://doi.org/10.1016/j.chb.2014.09.012

Martin, C. L., Ruble, D. N., & Szkrybalo, J. (2002). Cognitive theories of early gender development. Psychological Bulletin, 128(6), 903-933.

Meyers-Levy, J. (1986). Gender differences in information processing: A selectivity interpretation. Northwestern University.

Meyers-Levy, J. (1989). The influence of a brand name’s association set size and word frequency on brand memory. Journal of Consumer Research, 16(2), 197-207. https://doi.org/10.1086/209208

Özyurt, Ö., & Özyurt, H. (2015). A study for determining computer programming students’ attitudes towards programming and their programming self-efficacy. Journal of Theory and Practice in Education, 11(1), 51-67.

Piwek, P., & Savage, S. (2020). Challenges with learning to program and problem solve: An analysis of student online discussions. The 51st ACM Technical Symposium on Computer Science Education, SIGCSE '20 (pp. 494-499). Association for Computing Machinery, Portland, OR, USA. https://doi.org/10.1145/3328778.3366838

Pucher, R., & Lehner, M. (2011). Project based learning in computer science - A review of more than 500 projects. Procedia - Social and Behavioral Sciences, 29, 1561-1566. https://doi.org/10.1016/j.sbspro.2011.11.398

Putrevu, S. (2001). Exploring the origins and information processing differences between men and women: Implications for advertisers. Academy of Marketing Science Review, 10(1), 1-14.

Sáez-López, J. M., Román-González, M., & Vázquez-Cano, E. (2016). Visual programming languages integrated across the curriculum in elementary school: A two year case study using “Scratch” in five schools. Computers & Education, 97, 129-141. https://doi.org/10.1016/j.compedu.2016.03.003

Sendall, P., Stuetzle, C. S., Kissel, Z. A., & Hameed, T. (2019). Experiential learning in the technology disciplines. Proceedings of the 2019 EDSIG Conference (Vol. 5, n4968). Information Systems and Computing Academic Professionals.

Touretzky, D., Gardner-McCune, C., Martin, F., & Seehorn, D. (2019, July). Envisioning AI for K-12: What should every child know about AI? Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 33, pp. 9795-9799). https://doi.org/10.1609/aaai.v33i01.33019795

Van Brummelen, J. (2019). Tools to create and democratize conversational artificial intelligence [Master’s thesis, Massachusetts Institute of Technology]. https://hdl.handle.net/1721.1/122704

Weston, T. J., Dubow, W. M., & Kaminsky, A. (2019). Predicting women's persistence in computer science- and technology-related majors from high school to college. ACM Transactions on Computing Education, 20(1). https://doi.org/10.1145/3343195

Yukselturk, E., & Altiok, S. (2017). An investigation of the effects of programming with Scratch on the preservice IT teachers’ self-efficacy perceptions and attitudes towards computer programming. British Journal of Educational Technology, 48(3), 789-801. https://doi.org/10.1111/bjet.12453

The Effects on Secondary School Students of Applying Experiential Learning to the Conversational AI Learning Curriculum by Ting-Chia Hsu, Hal Abelson, and Jessica Van Brummelen is licensed under a Creative Commons Attribution 4.0 International License.