Volume 23, Number 3

Diana Mindrila and Li Cao

University of West Georgia

This study used a combined person- and variable-centered approach to identify self-regulated online learning latent profiles and to examine their relationships with predicted and earned course grades. College students (N = 177) at a Southeastern U.S. university responded to the Online Self-Regulated Learning Questionnaire. Exploratory structural equation modeling revealed four self-regulation factors: goal setting, environment management, peer help-seeking, and task strategies. Latent profile analysis yielded four latent profiles: Below Average Self-Regulation (BASR), Average Self-Regulation (ASR), Above Average Self-Regulation (AASR), and Low Peer Help-Seeking (LPHS). Compared with the AASR group, students who anticipated a higher course grade were less likely to engage in peer help-seeking and task strategies and more likely to adopt the LPHS self-regulation profile. Relative to LPHS, membership in all other groups predicted significantly lower course grades. Students in the AASR and LPHS profiles predicted their performance most accurately, with non-significant differences between their predicted and final course grades.

Keywords: online self-regulated learning, latent profile analysis, person-centered approach, variable-centered approach, higher education

With advances in technology, as well as the COVID-19 pandemic, online learning has become an essential component of the learning opportunities available to most students (Aristovnik et al., 2020; Kaplan, 2017) and will likely become mainstream by 2025 (Palvia et al., 2018). Online learning, defined as learning that takes place on the Internet (Moore et al., 2011), implies learners’ physical separation from their instructors and the institution. It requires students to engage more actively in regulating their learning as they decide where, when, and how to study (Gerjets et al., 2008; Wang et al., 2013). Therefore, students’ self-regulating abilities are essential for their academic performance in online courses (Ally, 2004; Barnard et al., 2009; Sitzmann et al., 2009; Winters et al., 2008; Zimmerman, 2008).

Self-regulated learning (SRL) is essential in all learning environments, whether face-to-face, blended, or online (Greene, 2018; Zimmerman, 2008); it allows for the achievement of learning goals and serves as a valuable educational outcome in and of itself (Chen, 2012; Greene, 2018). However, students who do not employ effective SRL techniques encounter more difficulties in online classes than in other environments (Azevedo, 2005). Understanding how individual students develop and deploy self-regulation strategies is essential to promote online SRL (OSRL) and to provide differentiated support according to students’ characteristics and needs (Guo & Reinecke, 2014; Hood et al., 2015; Kocdar et al., 2018; Schwam et al., 2021; Wong et al., 2019; Yeh et al., 2010; Zhang et al., 2015; Zheng, 2016). Although research has acknowledged differences in students’ SRL skills and strategies (Greene & Azevedo, 2007), it has remained unclear how to account for these differences (Barnard-Brak et al., 2010). The current study addressed this gap by classifying OSRL characteristics based on how individual students endorsed and employed self-regulated learning strategies in an online course. Identifying profiles based on attributes of SRL among online students, and determining how such profiles relate to academic performance, was intended to advance SRL theory and to offer guidance for supporting OSRL through a more nuanced, personalized approach.

Most research studies on SRL have followed a variable-centered approach, examining relationships among variables and summarizing trends for an entire sample without investigating how such relationships may vary across subgroups (Howard & Hoffman, 2017). In contrast, person-oriented approaches have examined each person, identifying subgroups of individuals with similar profiles (Bergman & Anderson, 2010). Such profiles reflect individual differences in motivation, strategy use, communication, and relation to others (Hampson & Colman, 1995; Woolfolk et al., 2006). Identifying OSRL profiles helps reveal distinct OSRL strategies and provides valuable information for promoting self-regulatory strategies based on individual student needs (Woolfolk, 2001). Combining the variable-centered and the person-centered methods allows researchers to investigate generalities across entire samples as well as the profiles of distinct subgroups (Marsh et al., 2009; Raufelder et al., 2013). In the current study, we used the combined approach to:

The term self-regulation has been used to refer to self-generated thoughts, feelings, and actions that learners activate and maintain to attain personal goals (Zimmerman, 1998; Zimmerman & Kitsantas, 2014). Self-regulated learners often displayed specific motivational beliefs or attitudes, cognitive strategies, and metacognitive abilities. They engaged in a cyclical process of planning, performing, evaluating, and reflecting; functioned as active agents in their learning (Schunk & Zimmerman, 2008; Winne, 1997, 2018); and obtained improved academic performance (Greene, 2018; Pintrich, 2004; Pintrich & DeGroot, 1990). Research has shown that SRL processes enabled learning in online environments (Azevedo & Hadwin, 2005; Lehmann et al., 2014; Winters et al., 2008) and that SRL strategies led to increased academic achievement in online learning (e.g., Broadbent & Poon, 2015; Cuesta, 2010). “Individuals who are self-regulated in their learning appear to achieve more positive academic outcomes than individuals who do not exhibit self-regulated learning behaviors” (Barnard-Brak et al., 2010, p. 61).

Over the past four decades, SRL has been examined from various theoretical perspectives (Boekaerts, 1996, 1999; Boekaerts & Corno, 2005; Efklides, 2011; Paris & Paris, 2001; Pintrich & Zusho, 2002; Schunk & Greene, 2018; Zimmerman & Schunk, 1989). A common feature of these perspectives is their description of SRL as an active, cyclical process of three phases: (a) a preparatory phase of task analysis and goal setting; (b) a performance phase of strategy use and monitoring; and (c) an appraisal phase of reflection on and evaluation of learning outcomes (Panadero, 2017; Peel, 2019; Puustinen & Pulkkinen, 2001). This broader view has highlighted SRL as a multi-dimensional process, driven by the goal-directed learning of individual learners and mediated by contextual influences. Adopting this view, researchers have made continuous efforts to identify the personal and environmental factors that may influence SRL (Greene, 2018; Peel, 2019; Winne, 2018).

Understanding how students developed and deployed an optimal combination of SRL knowledge and skills for enhanced learning and achievement has gained attention in recent research on OSRL. This research has often focused on identifying motivation factors (Puzziferro, 2008; Wang et al., 2013) and the most effective strategies to help students learn and achieve academic goals (Cleary & Callan, 2018; Greene, 2018; Hirt et al., 2021; Lynch & Dembo, 2004; Reimann & Bannert, 2018; Schunk & Greene, 2018). Such studies have been criticized for relying predominantly on self-reported measures of student satisfaction, feelings, or perceived value of the educational experience (Deimann & Bastiaens, 2010; Wang et al., 2013) and for lacking accurate indicators of SRL effectiveness (Broadbent & Fuller-Tysiewicz, 2018; Reimann & Bannert, 2018; Schunk & Greene, 2018; Zimmerman, 1989, 1990). These studies did not compare survey results with academic performance as measured by the course grade; further, they did not investigate the impact of students’ predictions of their course grades on their self-regulation strategies. The current study addressed this limitation by examining the relationships between predicted course grades (will), the selection and use of self-regulatory techniques (skill), and earned course grades (outcome).

Most researchers have used variable-centered approaches to examine SRL. Such methods focused on associations between variables and predictors’ contributions to a response variable (Lausen & Hoff, 2006); they did not help explain how individuals selectively used combinations of self-regulatory techniques and how the selected strategies integrated into self-regulation profiles (Schwinger et al., 2012). Variable-centered approaches have relied upon “the assumption that relationships observed at this group level are representative of the whole sample; an assumption that will be false in cases where distinct subgroups exist” (Broadbent & Fuller-Tysiewicz, 2018, p. 1437).

Studies that used variable-centered approaches neglected individual differences and the existence of distinct subgroups. Such studies did not explain how individual students selected and implemented motivational and learning strategies, and how specific regulation profiles were formulated based on each learner’s individualized strategy use (Broadbent & Fuller-Tysiewicz, 2018; Schwinger et al., 2012). In contrast, person-centered approaches to SRL adopted an idiographic perspective (Molenaar, 2004; Molenaar & Campbell, 2009). This perspective viewed individuals as organized entities who functioned and developed distinctively from other individuals (Bergman & Magnusson, 1997; Bergman et al., 2003). Person-centered approaches defined SRL as a dynamic, multi-dimensional process influenced by student characteristics, abilities, and personal experiences. Characterizing SRL as a multi-dimensional and individualized process provided a unique perspective and examined how “learners personally activate and sustain cognitions, affects, and behaviors that are systematically oriented toward the attainment of personal goals” (Zimmerman & Schunk, 2011, p. 1).

Variable-centered and person-centered approaches operated on disparate but complementary assumptions; therefore, a combined method has emerged. The combined method offered the potential to minimize the weaknesses and maximize the advantages of each method (Bámaca-Colbert & Gayles, 2010; Raufelder et al., 2013) and provided an alternative way to explore individual differences in educational research. Building on different cases, Bergman (1998) and von Eye (2010) proposed the following sequence of combined analyses: (a) start with variable-centered procedures to identify operating factors (Feyerabend, 1975; von Eye & Bogat, 2006); (b) use exploratory, person-centered analyses to distinguish possibly existing subpopulations (von Eye & Bogat, 2006); (c) use confirmatory person-centered analyses of data from independent samples to test theoretical assumptions; and finally, (d) use variable-centered methods to link theories and results from the various research approaches (Feyerabend, 1975; Molenaar & Campbell, 2009).

Following the recommended sequence (Bergman, 1998; von Eye, 2010), the current study used a combined variable-centered and person-centered approach and focused on the link between individualized use of SRL strategies and academic achievement (e.g., Schwinger et al., 2009, 2012; Wang et al., 2013). First, we employed the variable-centered method to operationalize the construct of SRL and its latent dimensions. We used survey variables to identify OSRL factors and estimate OSRL factor scores. Second, we used the person-centered approach to analyze and compare online learning self-regulation patterns and identify latent profiles. As Bergman and Magnusson (1997) suggested, variables included in the analyses were considered “only as components of the pattern under analysis and interpreted in relation to all the other variables considered simultaneously; the relevant aspect is the profile of scores” (p. 293). Third, we used the variable-centered procedures to investigate the relationship between self-regulation profiles and academic performance.

The course grade served as an outcome variable for evaluating the overall effectiveness of online learning (Lim et al., 2006). Previous studies did not include critical predictors of academic performance, such as help-seeking, metacognition, effort regulation, elaboration, time management, and critical thinking (Schwinger et al., 2009, 2012). Examining how SRL profiles related to students’ predicted and actual course grades addressed this limitation.

Including a broader range of OSRL techniques allowed us to determine whether high-performing students used all SRL strategies more than did other students or whether they employed only the most effective ones (Broadbent & Fuller-Tysiewicz, 2018; Hirt et al., 2021). The person-centered approach enabled us to (a) identify several distinct self-regulation profiles with unique characteristics, (b) determine whether the academic performance anticipated at the beginning of the course impacted the self-regulation profile employed by online learners, and (c) determine which of the identified profiles predicted increased academic performance. Establishing SRL profiles helped us better understand how motivations and strategies interacted in OSRL. Specifically, we investigated four hypotheses:

Distinct latent factors of online learning self-regulation underlie the data (Barnard et al., 2009). We examined a broad range of motivational and SRL skills to identify the OSRL strategies most frequently employed by the students in our sample.

Distinct latent profiles of self-regulation exist based on participants’ endorsement of OSRL factors (Barnard et al., 2008). Such profiles can help educators facilitate the use of, and proficiency in, the most effective self-regulation techniques (Bruso & Stefaniak, 2016; Panadero, 2017).

Depending on the expected course grade, students adopt specific OSRL strategies as described by the online learning self-regulation latent profiles (Barnard et al., 2008). Including student-predicted course grades is a novel approach to explore how OSRL profiles differ based on the expected level of performance.

Students’ final course grades vary across OSRL latent profiles. This hypothesis assumes that academic outcomes are significantly related to OSRL strategies (Greene, 2018). Unlike previous research, our study employed more complex modeling techniques that estimated the impact of variables measuring expected academic performance on the classification process. Based on previous research, our goal was to identify self-regulation strategies and describe how such strategies may vary due to context, procedural factors, and individual differences (Hampson & Colman, 1995; Händel et al., 2020; Schwam et al., 2021).

Participants in the study (N = 177) were students enrolled in fully online graduate-level education courses at a southeastern U.S. university. Most participants were female (n = 161, 91%); only 9% (n = 16) identified as male. Participants identified themselves as White (n = 133, 75.1%), African American (n = 33, 18.6%), Hispanic (n = 6, 3.4%), or another ethnicity (n = 5, 2.9%).

Data for the study consisted of (a) demographic information, (b) predicted course grade, (c) final course grade, and (d) responses to an online learning self-regulation questionnaire. At the beginning of the semester, we collected demographic information and asked participants to predict their overall end-of-course grade as a percentage (e.g., 84%). The course syllabus defined the letter grade scale as A = 90% or above, B = 80% to 89%, C = 70% to 79%, and F = 69% or below. The final course grade was the sum of student performance scores on (a) six end-of-unit tests developed by the instructor based on the textbook (Alexander, 2016), (b) two theory-to-practice assignments, and (c) two online discussions.

We adapted the Online Self-Regulated Learning Questionnaire (OSLQ; Barnard et al., 2009) to examine student OSRL. The OSLQ was designed to measure students’ ability to self-regulate their learning in online and traditional face-to-face learning environments. Our modified OSLQ included 24 items measuring six dimensions of self-regulation: (a) goal setting, (b) environment structuring, (c) task strategies, (d) time management, (e) help-seeking, and (f) self-evaluation. Items were rated on a Likert scale ranging from 1 (strongly disagree) to 7 (strongly agree), with higher scores indicating greater OSRL. According to Nunnally (1978), a Cronbach’s α coefficient of .70 or higher is acceptable in social science research such as this study. In the current study, Cronbach’s α values ranged from .786 to .899, indicating high levels of internal consistency.
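As an illustration of the reliability coefficient cited above, the following minimal Python sketch applies the standard formula α = k/(k − 1) · (1 − Σσ²ᵢ / σ²_total), where k is the number of items, σ²ᵢ is the variance of item i, and σ²_total is the variance of respondents' summed scores. The function name `cronbach_alpha` and the toy data are our own assumptions for illustration; they are not the study's responses or the authors' software.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of k lists, one per item, each holding the scores of the
    same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sum of the individual item variances (sample variance, n - 1 denominator).
    item_var_sum = sum(variance(item) for item in items)
    # Variance of each respondent's total score across the k items.
    totals = [sum(scores) for scores in zip(*items)]
    return (k / (k - 1)) * (1 - item_var_sum / variance(totals))


# Toy data (not study data): three identical items on a 1-7 Likert scale.
parallel = [[2, 4, 5, 7], [2, 4, 5, 7], [2, 4, 5, 7]]
# Toy data: two items whose scores are uncorrelated.
unrelated = [[1, 2, 1, 2], [1, 1, 2, 2]]

print(cronbach_alpha(parallel))   # 1.0 up to floating-point rounding
print(cronbach_alpha(unrelated))  # 0.0 up to floating-point rounding
```

Perfectly parallel items give α = 1 and uncorrelated items give α = 0; real scales, such as the four factors retained here, fall between these extremes.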

Data analyses consisted of (a) descriptive analysis, (b) exploratory factor analysis, (c) latent profile analysis with a covariate and a distal outcome, and (d) comparisons of demographic and achievement variables across latent profiles. We calculated item means and standard deviations to examine the distribution of survey responses. Then, we used exploratory factor analysis (EFA) within the exploratory structural equation modeling (ESEM) framework to identify the latent variables underlying the data (Marsh et al., 2014). The ESEM approach was beneficial because it allowed the estimation and rotation of common factors and yielded a realistic representation of the data by estimating cross-loadings and assessing model fit (Marsh et al., 2014; Morin & Maiano, 2011; Morin et al., 2013). We employed ESEM to overcome the limitations of confirmatory procedures. With confirmatory approaches, the strict requirement of zero cross-loadings may lead to distorted factors, overestimated factor correlations, and distorted structural coefficients (Asparouhov & Muthén, 2009). Especially in the early stages of theory development, items are rarely pure indicators of the corresponding constructs. When nonzero cross-loadings are constrained to zero, the associations between factors can be inflated and the loadings of the misspecified items biased. Simulation studies have shown that researchers should estimate even small cross-loadings, such as .10; otherwise, parameter estimates could be inflated or biased (Asparouhov et al., 2015).

We used Mplus 8.2 statistical software with the mean- and variance-adjusted weighted least squares (WLSMV) estimator and Geomin rotation. The WLSMV method does not rely on the assumption of multivariate normality and provides more accurate results with smaller samples and ordinal data than do other estimation procedures (Finney & DiStefano, 2006). The indices used to assess model fit were the (a) χ2 statistic and its p value; (b) χ2/df ratio; (c) root mean square error of approximation (RMSEA) and its 95% confidence interval (CI); (d) comparative fit index (CFI); (e) Tucker-Lewis index (TLI); and (f) weighted root mean square residual (WRMR). We sequentially removed cross-loading items until the model reached a simple structure. After obtaining an optimal solution, we computed factor scores for each participant. These scores estimate the location of every person on the identified factors (DiStefano et al., 2009).
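The study does not report the rule it used to decide which items were cross-loading. A minimal sketch of one common convention, assuming a salient-loading cutoff of |λ| ≥ 0.30 (our assumption, not the authors'), flags items that load saliently on more than one factor and nominates the item with the largest secondary loading for removal. In practice, the model would then be re-estimated and the check repeated until simple structure is reached; that re-estimation loop is not simulated here. The function names and loading values are hypothetical.

```python
SALIENT = 0.30  # ASSUMED cutoff for a salient loading; the study does not report one


def cross_loading_items(loadings, cutoff=SALIENT):
    """Items with salient loadings on more than one factor.

    loadings: dict mapping an item label to its rotated standardized
    loadings, one value per factor.
    Returns {item: second-largest absolute loading} for flagged items.
    """
    flagged = {}
    for item, row in loadings.items():
        magnitudes = sorted((abs(lam) for lam in row), reverse=True)
        # A salient second loading means at least two salient loadings.
        if len(magnitudes) > 1 and magnitudes[1] >= cutoff:
            flagged[item] = magnitudes[1]
    return flagged


def next_item_to_drop(loadings, cutoff=SALIENT):
    """The worst cross-loading item, or None once simple structure is reached."""
    flagged = cross_loading_items(loadings, cutoff)
    return max(flagged, key=flagged.get) if flagged else None


# Hypothetical four-factor loadings for illustration only.
example = {
    "item_a": [0.85, 0.05, 0.02, 0.10],
    "item_b": [0.45, 0.38, 0.01, 0.04],
    "item_c": [0.40, 0.05, 0.33, 0.02],
    "item_d": [0.02, 0.80, 0.07, 0.03],
}
print(cross_loading_items(example))  # flags item_b and item_c
print(next_item_to_drop(example))    # item_b has the largest secondary loading
```

Removing one item at a time, rather than all flagged items at once, matters because the remaining loadings change after each re-estimation.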

We then conducted latent profile analysis (LPA) to estimate a latent categorical variable (C) from a set of continuous observed indicators (Collins & Lanza, 2009). The LPA model specified the individual factor scores as observed indicators of the latent categorical variable of online learning self-regulation, the predicted course grade as a covariate of C, and the final course grade as a distal outcome of C. To estimate this model, we followed Asparouhov and Muthén’s (2014) three-step approach, which corrects for classification error by (a) first estimating the LPA model, (b) creating a nominal most likely profile variable N, and (c) estimating the mixture model with the covariate and distal outcome, treating N as an indicator of C whose measurement error is fixed at the misclassification rates estimated in the first step.
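Step (b) of the three-step approach can be sketched as follows, assuming step-one posterior probabilities P(C = j | data) are available for each person. The matrix computed here, P(N = k | C = j), follows the classification-error formula described by Asparouhov and Muthén (2014); its logits, with the last profile as reference, are the quantities held fixed in step (c). The function names, the toy posteriors, and the small floor applied to zero probabilities are our assumptions; this is not Mplus's internal code.

```python
import math

EPS = 1e-12  # ASSUMED floor so that log() stays finite for zero probabilities


def modal_assignment(posteriors):
    """Step (b): index of each person's most likely profile (the variable N)."""
    return [max(range(len(row)), key=row.__getitem__) for row in posteriors]


def classification_error_matrix(posteriors):
    """Estimate P(N = k | C = j) from step-one posterior probabilities.

    Row j is the latent profile C = j; column k is the assigned profile N = k.
    Entry (j, k) sums P(C_i = j | data) over people assigned to k and divides
    by the total posterior weight of profile j.
    """
    n_profiles = len(posteriors[0])
    assigned = modal_assignment(posteriors)
    weight = [sum(row[j] for row in posteriors) for j in range(n_profiles)]
    mass = [[0.0] * n_profiles for _ in range(n_profiles)]
    for row, k in zip(posteriors, assigned):
        for j in range(n_profiles):
            mass[j][k] += row[j]
    return [[mass[j][k] / weight[j] for k in range(n_profiles)]
            for j in range(n_profiles)]


def step3_logits(error_matrix):
    """Logits log(p_jk / p_jK), last profile K as reference; fixed in step (c)."""
    last = len(error_matrix) - 1
    return [[math.log(max(p, EPS) / max(row[last], EPS)) for p in row]
            for row in error_matrix]


# Toy posteriors for four people and two profiles (not study data).
posteriors = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.1, 0.9]]
E = classification_error_matrix(posteriors)
print(E)
print(step3_logits(E))
```

Fixing these logits, instead of treating N as error-free, is what keeps the covariate and distal-outcome estimates from being attenuated by misclassification.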

We estimated models with two (Model 2), three (Model 3), four (Model 4), and five (Model 5) latent profiles and selected the optimal model based on the interpretability of the latent profiles and on statistical criteria, namely goodness-of-fit indices and estimates of classification precision. Model fit was assessed with the Bayesian Information Criterion (BIC) and the Akaike Information Criterion (AIC); lower AIC and BIC values indicate a better balance between fit and parsimony (DiStefano, 2012; Muthén, 2004; Vermunt & Magidson, 2002).
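For reference, these criteria have simple closed forms: AIC = −2LL + 2p, BIC = −2LL + p ln n, and the sample-size-adjusted BIC replaces n with (n + 2)/24, where LL is the maximized log-likelihood, p is the number of free parameters, and n is the sample size. The sketch below implements these formulas. The study reports neither LL nor p; as an assumption, we infer p from Table 3 by solving BIC − AIC = p(ln n − 2) and back-calculate LL from AIC, which reproduces Model 2's reported BIC and sample-adjusted BIC to within rounding. The function names are ours.

```python
import math


def information_criteria(loglik, n_params, n):
    """Return (AIC, BIC, sample-size-adjusted BIC) for a fitted model."""
    neg2ll = -2.0 * loglik
    aic = neg2ll + 2 * n_params
    bic = neg2ll + n_params * math.log(n)
    # The adjusted BIC replaces n with (n + 2) / 24 in the penalty term.
    sabic = neg2ll + n_params * math.log((n + 2) / 24)
    return aic, bic, sabic


def infer_n_params(aic, bic, n):
    """Solve BIC - AIC = p * (ln n - 2) for p (an inference, not a reported value)."""
    return (bic - aic) / (math.log(n) - 2)


# Model 2 values from Table 3; p and LL are back-calculated assumptions.
n = 177
p = round(infer_n_params(1817.507, 1861.973, n))  # 14
loglik = -(1817.507 - 2 * p) / 2
print(information_criteria(loglik, p, n))
```

Because BIC's penalty grows with ln n while AIC's does not, BIC favors smaller models more strongly once n exceeds about 8, which is why the two criteria can disagree on the number of profiles.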

The classification precision measures were (a) the average latent profile probabilities for the most likely profile membership, (b) the classification probabilities for the most likely latent profile membership, and (c) entropy. The diagonal entries of both probability matrices represent the proportions of correctly classified cases in each latent profile. Entropy is an overall index of classification certainty and shows whether the estimated profiles are clearly distinguished from one another. Entropy coefficients range from 0 to 1, and values closer to 1 indicate better classification precision (Akaike, 1977; Ramaswamy et al., 1993; Vermunt & Magidson, 2002).
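Two of these precision summaries can be sketched from a matrix of posterior profile probabilities (one row per person). The first function averages the posteriors of people grouped by their most likely profile, which is what the average latent profile probabilities describe. The second computes relative entropy as 1 − ΣᵢΣₖ(−pᵢₖ ln pᵢₖ) / (n ln K), the normalized form that ranges from 0 to 1. Function names and toy inputs are our own; this is a sketch, not the authors' software.

```python
import math


def most_likely(row):
    """Index of the largest posterior probability in one person's row."""
    return max(range(len(row)), key=row.__getitem__)


def average_posteriors_by_modal(posteriors):
    """Row k: mean posterior probabilities among people whose most likely
    profile is k. The diagonal is the average certainty for profile k."""
    n_profiles = len(posteriors[0])
    sums = [[0.0] * n_profiles for _ in range(n_profiles)]
    counts = [0] * n_profiles
    for row in posteriors:
        k = most_likely(row)
        counts[k] += 1
        for j, p in enumerate(row):
            sums[k][j] += p
    # Profiles that nobody is assigned to get NaN rows.
    return [[s / counts[k] if counts[k] else float("nan") for s in sums[k]]
            for k in range(n_profiles)]


def relative_entropy(posteriors):
    """1 - sum_i sum_k(-p_ik ln p_ik) / (n ln K); 1 means certain classification."""
    n, n_profiles = len(posteriors), len(posteriors[0])
    disorder = sum(-p * math.log(p) for row in posteriors for p in row if p > 0)
    return 1.0 - disorder / (n * math.log(n_profiles))


# Toy posteriors for three people and two profiles (not study data).
toy = [[0.9, 0.1], [0.7, 0.3], [0.2, 0.8]]
print(average_posteriors_by_modal(toy))
print(relative_entropy(toy))
```

Entropy equals 1 only when every person's posterior is 0 or 1, and 0 when every posterior is uniform across profiles.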

Finally, latent profiles were further described by comparing factor scores, demographic information, student GPA, course grades, and predicted grades across groups. We compared continuous variables across groups using the Kruskal-Wallis H test. Similarly, we compared the distribution of categorical variables across profiles using the χ2 test. Using the Wilcoxon Signed Ranks Test, we examined differences between predicted grades and final course grades within each group.
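The two rank-based tests named above can be sketched from their textbook formulas: the Kruskal-Wallis H statistic with a correction for ties, and the normal-approximation z for the Wilcoxon signed-rank test, computed from the smaller of the positive and negative rank sums so that z is non-positive (as in Table 7). Zero differences are dropped, and the variance is tie-corrected. This is an illustrative implementation under those assumptions: the function names are ours, p values are omitted (H is referred to a χ2 distribution with k − 1 df), and in practice a library routine such as SciPy's `scipy.stats.kruskal` or `scipy.stats.wilcoxon` would typically be used.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions start..end
        for idx in order[start:end + 1]:
            ranks[idx] = shared
        start = end + 1
    return ranks


def tie_sizes(values):
    """Sizes of groups of tied values (only groups larger than one)."""
    counts = {}
    for v in values:
        counts[v] = counts.get(v, 0) + 1
    return [t for t in counts.values() if t > 1]


def kruskal_wallis_h(*groups):
    """Tie-corrected Kruskal-Wallis H; compare with chi-square on len(groups) - 1 df."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    correction = 1 - sum(t ** 3 - t for t in tie_sizes(pooled)) / (n ** 3 - n)
    return h / correction


def wilcoxon_signed_rank_z(first, second):
    """Normal-approximation z for paired scores, using the smaller rank sum.

    Pairs with a zero difference are dropped; the variance is tie-corrected.
    """
    diffs = [b - a for a, b in zip(first, second) if a != b]
    n = len(diffs)
    magnitudes = [abs(d) for d in diffs]
    ranks = average_ranks(magnitudes)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - sum(t ** 3 - t for t in tie_sizes(magnitudes)) / 48
    return (min(w_plus, w_minus) - mean) / math.sqrt(var)


# Toy data (not study data): three groups, and predicted vs. final grades for five students.
print(kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9]))
print(wilcoxon_signed_rank_z([85, 86, 87, 88, 89], [86, 88, 90, 92, 94]))
```

Rank-based tests were a reasonable fit here because profile sizes were unequal (23 to 92) and course grades clustered near the top of the scale.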

The survey item with the highest mean rating came from the environment structuring scale (Barnard et al., 2009): “I know where I can study most efficiently for online courses.” The item with the lowest mean rating came from the help-seeking scale (Barnard et al., 2009): “I am persistent in getting help from the instructor through e-mail.” Table 1 reports the means and standard deviations of all survey items retained in the final factor solution.

Table 1

Descriptive Statistics and Model Parameter Estimates

| Factor | Cronbach’s alpha | Survey item | M (SD) | Loading | Two-tailed p value |
|---|---|---|---|---|---|
| F1: Goal setting | .826 | I set goals to help me manage my studying time for my online courses. | 5.20 (1.418) | 0.886 | 0.000 |
| | | I set short-term (daily or weekly) goals as well as long-term goals (monthly or for the semester). | 5.19 (1.590) | 0.885 | 0.000 |
| | | I keep a high standard for my learning in my online courses. | 5.59 (1.268) | 0.478 | 0.000 |
| | | I try to schedule the same time every day or every week to study for my online courses, and I observe the schedule. | 4.86 (1.691) | 0.441 | 0.000 |
| F2: Environment management | .899 | I find a comfortable way to study. | 5.62 (1.369) | 0.922 | 0.000 |
| | | I choose the location where I want to study to avoid too much distraction. | 5.58 (1.334) | 0.914 | 0.000 |
| | | I know where I can study most efficiently for online courses. | 5.66 (1.292) | 0.727 | 0.000 |
| | | I choose a time with few distractions for studying for my online courses. | 5.40 (1.419) | 0.678 | 0.000 |
| F3: Peer help-seeking | .893 | I communicate with my classmates to find out how I am doing in my online classes. | 4.51 (1.837) | 0.998 | 0.000 |
| | | I share my problems with my classmates online, so we know what we are struggling with and how to solve our problems. | 4.59 (1.817) | 0.865 | 0.000 |
| | | I communicate with my classmates to find out what I am learning that is different from what they are learning. | 4.37 (1.820) | 0.860 | 0.000 |
| | | I find someone knowledgeable in course content so that I can consult with him or her when I need help. | 4.33 (1.817) | 0.685 | 0.000 |
| | | If needed, I try to meet my classmates face-to-face. | 4.54 (1.974) | 0.574 | 0.000 |
| F4: Task strategies | .786 | I summarize my learning in online courses to examine my understanding of what I learned. | 4.77 (1.476) | 0.757 | 0.000 |
| | | I work extra problems in my online courses in addition to the assigned ones to master the course content. | 3.75 (1.824) | 0.702 | 0.000 |
| | | I prepare my questions before joining the chat room and discussion. | 4.47 (1.758) | 0.674 | 0.000 |
| | | I ask myself a lot of questions about the course material when studying for an online course. | 4.67 (1.468) | 0.623 | 0.000 |
| | | I am persistent in getting help from the instructor through email. | 3.60 (1.800) | 0.464 | 0.000 |
| | | I read aloud instructional materials posted online to fight against distractions. | 4.56 (1.846) | 0.370 | 0.000 |
| Factor correlations | | | | | |
| F2 - F1 | | | | 0.538 | 0.000 |
| F3 - F1 | | | | 0.221 | 0.001 |
| F3 - F2 | | | | 0.228 | 0.000 |
| F4 - F1 | | | | 0.620 | 0.000 |
| F4 - F2 | | | | 0.402 | 0.000 |
| F4 - F3 | | | | 0.441 | 0.000 |

Exploratory factor analysis (EFA) yielded a four-factor solution. We obtained a simple structure after sequentially removing five cross-loading items. Goodness-of-fit indices showed that the final solution fit the data well overall (χ2(101) = 211.255, χ2/df = 2.091, RMSEA = 0.079, CI [0.064, 0.093], CFI = 0.983, TLI = 0.971). Table 1 lists the items included in each factor, their factor loadings, and the associated p values. The first factor, F1, included four items referring to setting learning goals and study time; its Cronbach’s α was .826. The second factor, F2, included four items referring to choosing a suitable environment for studying (α = .899). The third factor, F3, included five items referring to communicating with peers for self-evaluation and help-seeking (α = .893). Finally, the fourth factor, F4, included six items referring to task strategies (α = .786). As indicated in Table 1, all relationships among factors were statistically significant; the strongest were F1 - F4 and F1 - F2. Table 2 presents the factor covariances and correlations.
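As an arithmetic check on the indices above, the χ2/df ratio and the RMSEA point estimate can be recomputed from the reported χ2, df, and N using the usual formula RMSEA = √(max(χ2 − df, 0) / (df(N − 1))). This is a sketch: some programs use N rather than N − 1 in the denominator (both versions round to 0.079 here), and because WLSMV χ2 values are adjusted statistics, software output may not match this simple formula exactly. The function names are ours.

```python
import math


def chi2_df_ratio(chi2, df):
    """Relative chi-square: the model chi-square divided by its degrees of freedom."""
    return chi2 / df


def rmsea(chi2, df, n, use_n_minus_1=True):
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1))).

    Set use_n_minus_1=False for the variant that divides by df * n.
    """
    denom = df * (n - 1 if use_n_minus_1 else n)
    return math.sqrt(max(chi2 - df, 0.0) / denom)


# Values reported for the final four-factor ESEM solution.
chi2, df, n = 211.255, 101, 177
print(round(chi2_df_ratio(chi2, df), 2))
print(round(rmsea(chi2, df, n), 3), round(rmsea(chi2, df, n, use_n_minus_1=False), 3))
```

With these inputs, the ratio is about 2.09 and both RMSEA variants round to 0.079, in line with the values reported above.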

Table 2

Factor Covariances and Correlations

| Factor | F1 | F2 | F3 | F4 |
|---|---|---|---|---|
| Covariance | | | | |
| F1 | 0.921 | | | |
| F2 | 0.559 | 0.891 | | |
| F3 | 0.233 | 0.232 | 0.919 | |
| F4 | 0.666 | 0.419 | 0.434 | 0.901 |
| Correlation | | | | |
| F2 | 0.618** | | | |
| F3 | 0.254** | 0.257** | | |
| F4 | 0.731** | 0.468** | 0.477** | |

Note. ** Significant at α = 0.01 (2-tailed). F1: goal setting; F2: environment management; F3: peer help-seeking; F4: task strategies.
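The correlations in Table 2 follow from the covariances through r_ij = cov_ij / √(var_i · var_j). The sketch below, which transcribes the covariance matrix from Table 2, recomputes them; the results agree with the reported correlations to within about .001, a difference consistent with rounding of the published covariances. The helper name `correlation` is ours.

```python
import math

# Lower-triangular factor covariance matrix transcribed from Table 2
# (rows/columns: F1 goal setting, F2 environment management,
#  F3 peer help-seeking, F4 task strategies).
COV = [
    [0.921],
    [0.559, 0.891],
    [0.233, 0.232, 0.919],
    [0.666, 0.419, 0.434, 0.901],
]


def correlation(cov, i, j):
    """r_ij = cov_ij / sqrt(var_i * var_j), reading a lower-triangular matrix."""
    if i < j:
        i, j = j, i  # only the lower triangle is stored
    return cov[i][j] / math.sqrt(cov[i][i] * cov[j][j])


for i in range(1, len(COV)):
    for j in range(i):
        print(f"F{i + 1} - F{j + 1}: r = {correlation(COV, i, j):.3f}")
```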

We estimated LPA models with two (Model 2), three (Model 3), four (Model 4), and five (Model 5) latent profiles. Although Model 5 had the best fit to the data (Table 3), it included a group of only four individuals (about 2.3% of the sample), which may indicate an overfitted model (DiStefano, 2012; Nylund et al., 2007). Typically, groups that include less than 5% of the sample are considered too small (Nasserinejad et al., 2017).

Table 3

Goodness of Fit and Classification Precision by Model

| Index | Model 2 | Model 3 | Model 4 | Model 5 |
|---|---|---|---|---|
| Entropy | .864 | .816 | .841 | .855 |
| AIC | 1817.507 | 1750.905 | 1724.390 | 1703.768 |
| BIC | 1861.973 | 1814.428 | 1806.969 | 1805.405 |
| Sample-adjusted BIC | 1817.638 | 1751.092 | 1724.632 | 1704.067 |

Note. AIC: Akaike Information Criterion; BIC: Bayesian Information Criterion.

Model 4 included larger groups, provided the most informative solution, and fit the data well; we therefore selected it as the optimal model. Model 4 had adequate classification precision, with an entropy of .841. As illustrated in Figure 1, the largest group (n = 92) had scores close to average on all factors and was labeled average self-regulation (ASR). The second largest group (n = 32) had scores significantly below average on all factors and was labeled below average self-regulation (BASR). The third group (n = 30) had scores significantly above average on all factors and was labeled above average self-regulation (AASR). The fourth latent profile (n = 23) had scores above average on F1 and F2, below average on F3, and close to average on F4; we named this group low peer help-seeking (LPHS). The diagonal average latent profile probabilities and classification probabilities for most likely latent profile membership ranged from 75.7% to 95.5% (Table 4).

Figure 1

Mean Factor Scores by Latent Profile

Table 4

Model 4: Average Latent Profile Probabilities and Classification Probabilities

| Latent Profile Probability | BASR | ASR | LPHS | AASR |
|---|---|---|---|---|
| BASR: Average latent profile | .909 | .091 | .000 | .000 |
| BASR: Classification | .830 | .170 | .000 | .000 |
| ASR: Average latent profile | .053 | .917 | .024 | .006 |
| ASR: Classification | .027 | .955 | .012 | .006 |
| LPHS: Average latent profile | .000 | .101 | .878 | .021 |
| LPHS: Classification | .000 | .178 | .757 | .064 |
| AASR: Average latent profile | .000 | .033 | .049 | .919 |
| AASR: Classification | .000 | .035 | .014 | .951 |

Note. Below average self-regulation (BASR), average self-regulation (ASR), above average self-regulation (AASR), low peer help-seeking (LPHS).

Table 5 reports the latent profile mean factor scores and two-tailed p-values. The Kruskal-Wallis test showed that scores on the four factors differed significantly across latent profiles (Table 6).

Table 5

Mean Factor Scores by Latent Profile

| Profile | Factor | Estimate | Two-tailed p value |
|---|---|---|---|
| BASR (n = 32) | F1 | -1.188 | 0.000 |
| | F2 | -0.790 | 0.000 |
| | F3 | -0.642 | 0.000 |
| | F4 | -0.978 | 0.000 |
| ASR (n = 92) | F1 | -0.045 | 0.693 |
| | F2 | -0.116 | 0.286 |
| | F3 | 0.118 | 0.318 |
| | F4 | 0.000 | 0.999 |
| LPHS (n = 23) | F1 | 1.077 | 0.000 |
| | F2 | 1.038 | 0.000 |
| | F3 | -0.933 | 0.007 |
| | F4 | 0.135 | 0.695 |
| AASR (n = 30) | F1 | 1.640 | 0.000 |
| | F2 | 1.219 | 0.000 |
| | F3 | 1.162 | 0.000 |
| | F4 | 1.729 | 0.000 |

Note. Below average self-regulation (BASR), average self-regulation (ASR), above average self-regulation (AASR), low peer help-seeking (LPHS).

Table 6

Tests of Factor Score Variation Across Latent Profiles

| Statistic | F1 | F2 | F3 | F4 |
|---|---|---|---|---|
| Kruskal-Wallis H | 123.542 | 78.267 | 60.300 | 89.833 |
| df | 3 | 3 | 3 | 3 |
| p value | < .001 | < .001 | < .001 | < .001 |

Note. F1: goal setting; F2: environment management; F3: peer help-seeking; F4: task strategies.

Table 7 reports, for each profile, the demographic distribution, average GPA, predicted grade, final course grade, and the difference between the predicted and final course grades. Significance tests showed that ethnicity (χ2(12) = 6.492, p = .889), gender (χ2(3) = 1.402, p = .705), age (H(3) = 5.874, p = .118), GPA (H(3) = 5.614, p = .132), final course grade (H(3) = 7.500, p = .058), and the difference between the predicted and final course grades (H(3) = .431, p = .934) did not differ significantly across latent profiles. Only the predicted course grade differed significantly across groups (H(3) = 10.886, p = .012), with the highest values for the LPHS group, followed by AASR, ASR, and BASR.

Table 7

Demographics and Student Achievement by Latent Profile

| Variable | Statistic | BASR (n = 32, 18%) | ASR (n = 92, 52%) | LPHS (n = 23, 13%) | AASR (n = 30, 17%) | Total (N = 177, 100%) |
|---|---|---|---|---|---|---|
| Ethnicity | White | 75% | 77% | 66% | 63% | 73% |
| | African American | 22% | 14% | 30% | 37% | 21% |
| | Hispanic | 0% | 5% | 4% | 0% | 3% |
| | Native American | 0% | 1% | 0% | 0% | 1% |
| | Other | 3% | 3% | 0% | 0% | 2% |
| Gender | Male | 9% | 12% | 0% | 7% | 9% |
| | Female | 91% | 88% | 100% | 93% | 91% |
| Age | M (SD) | 24 (6) | 25 (8) | 30 (11) | 23 (6) | 25 (8) |
| | Kruskal-Wallis mean rank | 93.67 | 85.92 | 118.69 | 79.50 | |
| GPA | M (SD) | 3.49 (.41) | 3.49 (.36) | 3.62 (.49) | 3.35 (.40) | 3.49 (.39) |
| | Kruskal-Wallis mean rank | 91.17 | 88.87 | 113.27 | 70.50 | |
| Predicted grade | M (SD) | 86.52 (5.01) | 88.12 (5.62) | 91.85 (3.24) | 88.56 (5.13) | 88.12 (5.42) |
| | Kruskal-Wallis mean rank | 71.50 | 89.22 | 126.04 | 91.70 | |
| Course grade | M (SD) | 89.44 (6.11) | 90.32 (6.33) | 94.47 (3.88) | 90.43 (7.73) | 90.48 (6.39) |
| | Kruskal-Wallis mean rank | 79.10 | 86.63 | 123.85 | 90.60 | |
| Predicted grade - Course grade | M (SD) | -2.92 (5.48) | -2.20 (6.16) | -2.62 (4.72) | -1.87 (8.31) | -2.32 (6.19) |
| | Kruskal-Wallis mean rank | 71.50 | 89.22 | 126.04 | 91.70 | |
| | Wilcoxon signed-rank Z | -2.704 | -3.848 | -1.853 | -1.456 | |
| | Wilcoxon signed-rank p | .007** | .000** | .064 | .145 | |

Note. *Statistically significant at α = .05. ** Statistically significant at α = .01. Below average self-regulation (BASR), average self-regulation (ASR), above average self-regulation (AASR), low peer help-seeking (LPHS).

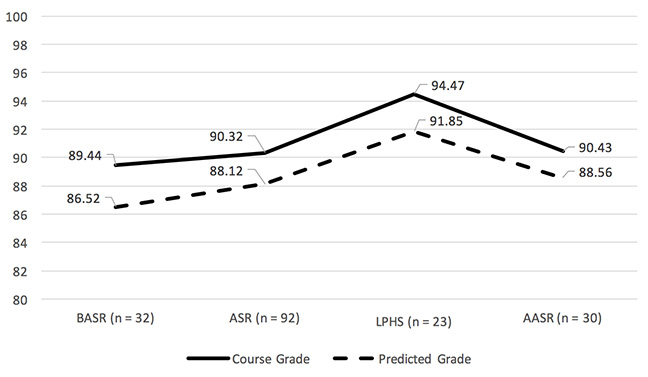

The final course grade followed the same pattern (Figure 2), but differences across groups were not statistically significant. The LPHS profile had the highest average GPA, the highest predicted course grade, and the highest final grade. On average, all groups underestimated their final course grade by approximately two points. Wilcoxon signed-rank tests showed that the difference between predicted and final course grades was significantly different from zero for the ASR and BASR latent profiles, but not for the LPHS and AASR latent profiles. Overall, the size of this difference did not vary significantly across the four latent profiles (Table 7).

Figure 2

Average Course Grade and Predicted Grade by Latent Profile
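The within-group comparison of predicted and final grades in Table 7 uses the Wilcoxon signed-rank test. A minimal sketch of its large-sample normal approximation follows, assuming that zero differences are dropped, tied absolute differences receive average ranks, and no continuity correction is applied. Statistical packages differ on these details and on the sign convention of Z, so the function names and choices here are illustrative, not the authors' exact procedure. With differences taken as predicted minus final grade, underestimation yields a negative Z, consistent with the signs in Table 7.

```python
import math


def wilcoxon_signed_rank_z(x, y):
    """Large-sample Z for the Wilcoxon signed-rank test on paired scores x and y.

    Differences are x - y. Zero differences are dropped, tied absolute
    differences get average ranks, and the variance includes the usual tie
    correction. No continuity correction is applied.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over groups of tied |differences|
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        t = j - i + 1
        tie_term += t ** 3 - t
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)  # sum of positive ranks
    mean = n * (n + 1) / 4.0
    var = n * (n + 1) * (2 * n + 1) / 24.0 - tie_term / 48.0
    return (w_plus - mean) / math.sqrt(var)


def two_tailed_p(z):
    """Two-tailed p-value from the standard normal: 2 * (1 - Phi(|z|))."""
    return math.erfc(abs(z) / math.sqrt(2.0))


# Invented predicted and final grades for five students (illustration only).
predicted = [85, 88, 90, 92, 80]
final = [89, 90, 91, 92, 86]
z = wilcoxon_signed_rank_z(predicted, final)
print(f"Z = {z:.3f}, p = {two_tailed_p(z):.3f}")
```

As a check against the table, `two_tailed_p(-2.704)` is about .007, the value reported for the BASR profile.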

With AASR as the reference group, predicted grade was significantly related to membership in the LPHS profile, but not to membership in the other latent profiles (Table 8). Specifically, each one-point increase in predicted grade was associated with 20.7% higher odds (odds ratio = 1.207) of belonging to the LPHS rather than the AASR profile. With LPHS as the reference group, membership in any other latent profile significantly predicted a lower final course grade. Conversely, with BASR or ASR as the reference group, LPHS membership significantly predicted a higher final grade; with AASR as the reference group, this effect was marginal (p = .051; Table 8).

Table 8

Relationships between Latent Profiles, Predicted Grade, and the Course Grade

| Reference group | Relationship | Estimate | SE | Estimate/SE | Two-tailed p-value | Odds ratio |
|---|---|---|---|---|---|---|
| AASR | Predicted grade → BASR | -0.059 | 0.064 | -0.923 | 0.356 | 0.945 |
| | Predicted grade → ASR | 0.007 | 0.059 | 0.122 | 0.903 | 1.007 |
| | Predicted grade → LPHS | 0.188 | 0.079 | 2.373 | 0.018* | 1.207 |
| AASR | BASR → Final grade | -0.016 | 0.055 | -0.286 | 0.775 | |
| | ASR → Final grade | 0.004 | 0.051 | 0.086 | 0.932 | |
| | LPHS → Final grade | 0.198 | 0.102 | 1.952 | 0.051 | |
| BASR | ASR → Final grade | 0.020 | 0.033 | 0.610 | 0.542 | |
| | LPHS → Final grade | 0.214 | 0.087 | 2.462 | 0.014* | |
| | AASR → Final grade | 0.016 | 0.055 | 0.286 | 0.775 | |
| ASR | BASR → Final grade | -0.020 | 0.033 | -0.610 | 0.542 | |
| | LPHS → Final grade | 0.194 | 0.086 | 2.256 | 0.024* | |
| | AASR → Final grade | -0.004 | 0.051 | -0.086 | 0.932 | |
| LPHS | BASR → Final grade | -0.214 | 0.087 | -2.462 | 0.014* | |
| | ASR → Final grade | -0.194 | 0.086 | -2.256 | 0.024* | |
| | AASR → Final grade | -0.201 | 0.102 | -1.970 | 0.049* | |

Note. * Statistically significant at α = .05. Below average self-regulation (BASR), average self-regulation (ASR), above average self-regulation (AASR), low peer help-seeking (LPHS).
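The odds ratios in Table 8 follow from the multinomial logit estimates: the odds ratio is exp(estimate), the Wald statistic is estimate / SE, and the two-tailed p-value comes from the standard normal distribution. The sketch below recomputes these quantities from the rounded entries printed in Table 8; `logit_summary` is a hypothetical helper, and values recomputed from rounded table entries can differ slightly in the third decimal from those printed.

```python
import math


def logit_summary(estimate, se):
    """Return (odds ratio, Wald z, two-tailed p) for a logit coefficient and its SE."""
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return math.exp(estimate), z, p


# Predicted grade -> profile membership, AASR as the reference profile (Table 8).
table8 = {"BASR": (-0.059, 0.064), "ASR": (0.007, 0.059), "LPHS": (0.188, 0.079)}
for profile, (est, se) in table8.items():
    odds_ratio, z, p = logit_summary(est, se)
    change = (odds_ratio - 1.0) * 100.0  # percent change in odds per one-point increase
    print(f"{profile}: OR = {odds_ratio:.3f} ({change:+.1f}% odds), z = {z:.2f}, p = {p:.3f}")
```

For the LPHS row, exp(0.188) ≈ 1.207: each additional point of predicted grade multiplies the odds of LPHS rather than AASR membership by about 1.207, the 20.7% increase described in the text.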

The current study combined person-centered and variable-centered perspectives (Bergman & Magnusson, 1997; Marsh et al., 2009; von Eye, 2010) and expanded the existing research on OSRL (e.g., Abar & Loken, 2010; Barnard-Brak et al., 2010; Broadbent & Poon, 2015; Cuesta, 2010; Schwinger et al., 2009, 2012). Our results help clarify the complexity of self-regulated learning in the online environment, particularly what constitutes OSRL, how individual differences are reflected in OSRL profiles, and how these profiles relate to academic performance in the online learning environment.

Our first objective was to ascertain the factors of OSRL. Exploratory factor analytic procedures within the ESEM framework yielded four OSRL factors: (a) goal setting (F1); (b) environment management (F2); (c) peer help-seeking (F3); and (d) task strategies (F4). Items included in these four factors had high internal consistency, and the factor structure had a very good model fit. This evidence supported the four-factor solution as the optimal factor structure for our sample. These factors closely resembled four of the online self-regulated learning factors identified by Barnard et al. (2009): goal setting, environment structuring, task strategies, and help-seeking.
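Internal consistency is commonly summarized with Cronbach's alpha, which compares the sum of the item variances with the variance of the total score: α = k / (k - 1) · (1 - Σ s²ᵢ / s²_total). Assuming that index (this section does not name it), a minimal sketch follows; the function name and the Likert responses are invented for illustration.

```python
def cronbach_alpha(items):
    """Cronbach's alpha for k item-score columns measured on the same respondents.

    items: list of k lists, each holding one item's scores for all n respondents.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])

    def sample_variance(xs):
        mean = sum(xs) / len(xs)
        return sum((x - mean) ** 2 for x in xs) / (len(xs) - 1)

    # Total score per respondent: sum across the k items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_var_sum = sum(sample_variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / sample_variance(totals))


# Invented 5-point Likert responses: 3 items x 4 respondents (illustration only).
responses = [[4, 5, 3, 4], [4, 4, 3, 5], [5, 5, 2, 4]]
print(f"alpha = {cronbach_alpha(responses):.3f}")
```

Alpha rises as items covary more strongly, because the total-score variance then grows faster than the sum of the item variances.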

Nevertheless, our factor structure did not include two of the factors identified by Barnard et al. (2009), namely time management and self-evaluation. Items from these scales were either removed because of cross-loadings or loaded on a different factor. For example, two items from the original self-evaluation scale loaded on the task strategies factor (F4), whereas the other two loaded on the peer help-seeking factor (F3). The help-seeking factor was redefined as peer help-seeking (F3). This factor referred only to communicating with peers for self-evaluation and information on class progress and did not include seeking help from the instructor.

Similarly, the environment structuring factor became environment management. This factor referred primarily to selecting or manipulating the environment rather than making changes. All factor correlations and covariances were statistically significant. The strongest relationship was between goal setting (F1) and task strategies (F4), followed by goal setting (F1) and environment management (F2). In contrast, the weakest relationships were between peer help-seeking (F3) and environment management (F2), and between peer help-seeking (F3) and goal setting (F1). Overall, the factor structure identified in this study differed from that reported in previous studies using the same instrument (Barnard et al., 2009). Further research should examine the dimensions of OSRL with larger samples whose demographic distributions are representative of the population. Such studies would provide evidence of external validity for the OSRL factor structure.

The second objective was to identify latent profiles of OSRL. Results from LPA showed that students in our sample employed four types of self-regulation, as described by the BASR, ASR, LPHS, and AASR latent profiles. The BASR, ASR, and AASR latent profiles differed mainly quantitatively, in the overall level of self-regulation. In contrast, the LPHS latent profile showed a pattern of high goal setting and environment management, average task strategies, and low peer help-seeking. This model had good classification precision and fit the data well. Scores on the four factors varied significantly across latent profiles. These results constitute evidence that the four groups represent distinct profiles of online learning self-regulation.
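Classification precision in LPA is commonly summarized by the normed (relative) entropy of the posterior class-membership probabilities, the criterion of Ramaswamy et al. (1993) listed in the references: E = 1 - Σᵢ Σₖ (-pᵢₖ ln pᵢₖ) / (N ln K), which ranges from 0 to 1, with values near 1 indicating clearly separated profiles. Assuming this is the index behind "good classification precision," a minimal sketch follows; in practice the posterior probabilities come from the fitted model, and the rows below are invented.

```python
import math


def relative_entropy(posteriors):
    """Normed entropy of posterior class probabilities (1 = perfect classification).

    posteriors: one row per student, each row holding K probabilities that sum to 1.
    """
    n = len(posteriors)
    k = len(posteriors[0])
    total = 0.0
    for row in posteriors:
        for p in row:
            if p > 0:  # 0 * log(0) is taken as 0
                total -= p * math.log(p)
    return 1.0 - total / (n * math.log(k))


# Invented posterior probabilities for three students over four profiles.
example = [
    [0.95, 0.03, 0.01, 0.01],
    [0.10, 0.85, 0.03, 0.02],
    [0.02, 0.02, 0.90, 0.06],
]
print(f"relative entropy = {relative_entropy(example):.3f}")
```

Certain assignments (one probability of 1 per row) give an entropy of 1, and uniform posteriors give 0, so the index reads as the proportion of classification uncertainty removed by the model.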

The study conducted by Barnard-Brak et al. (2010) identified five profiles of online self-regulated learning: (a) super self-regulators, (b) competent self-regulators, (c) forethought-endorsing self-regulators, (d) performance-reflection self-regulators, and (e) non- or minimal self-regulators. Although the current study used the same data collection instrument, we found a four-profile model optimal for our sample. These four latent profiles were comparable to four of the groups identified by Barnard-Brak et al. The AASR, ASR, and BASR latent profiles identified in the current study described overall online learning self-regulation levels. These groups had profiles similar to the super self-regulators, competent self-regulators, and non- or minimal self-regulators. The LPHS latent profile corresponds to the forethought-endorsing self-regulators. Individuals in this group strongly endorse goal setting and environment structuring as self-regulated learning skills while endorsing task strategies, time management, help-seeking, and self-evaluation to a much lesser extent (Barnard-Brak et al., 2010). We did not identify a group of performance-reflection self-regulators in our sample. A critical difference between the two studies was that items measuring time management and self-evaluation did not load on distinct factors and, consequently, were not used as separate observed indicators for LPA. These survey items loaded on the task strategies factor (F4) and the peer help-seeking factor (F3).

While very informative and directly applicable to online learning, the OSRL latent profiles that we identified in the current study are sample-specific and based on a relatively small group of students. Future studies could continue examining OSRL using cross-sectional designs to determine whether the OSRL latent profiles differ across age groups, educational levels, subject areas, or geographical locations. Replicating the study with larger samples and samples with a more balanced demographic distribution would support the external validity of the OSRL latent profile model. Further, repeating the current study in other subject areas and learning environments would show the extent to which the learning context influences how students approach their learning (Severiens et al., 2001; Wong et al., 2019).

In addition to cross-sectional designs, future research should investigate OSRL longitudinally, because OSRL is a dynamic personal process that continually evolves as students refine existing behaviors and strategies in response to prior success and emerging challenges (Abar & Loken, 2010; Greene, 2018; Severiens et al., 2001; Winne, 1995, 1996, 1997, 2018). Such studies would clarify whether increases in students’ OSRL are associated with gains in academic achievement over time (Barnard et al., 2009). Further, longitudinal studies using latent transition analysis may help determine whether students’ OSRL latent profiles change across time.

Our third objective focused on the relationship between the predicted course grades and the adoption of specific latent profiles of online learning self-regulation. Predicted course grades differed significantly across groups, with the highest values for the LPHS latent profile, followed by the AASR, ASR, and BASR latent profiles. With AASR as the reference group, LPA results showed that a higher predicted grade significantly predicted membership in the LPHS profile. In other words, compared with the AASR group, students who anticipated a higher course grade were more likely to adopt the LPHS self-regulation profile, which is characterized by less peer help-seeking and fewer task strategies. This finding partially confirmed the initial hypothesis that the predicted course grade is a significant predictor of adopting a specific type of online learning self-regulation as described by the online learning self-regulation latent profiles (Barnard-Brak et al., 2010; Stan, 2012; Wang et al., 2013). Our study also examined the differences between students’ predicted course grades and their final course grades. On average, all groups underestimated their final course grades; however, the two groups with higher levels of OSRL (AASR and LPHS) predicted their grades more accurately. These were the only groups with non-significant differences between the predicted grade and the final course grade.

Examining the relationship between expected outcomes and OSRL latent profiles is a unique contribution of our study. To our knowledge, this relationship has not been investigated previously. Therefore, further research is needed to determine whether the current results are sample-specific or replicate in other samples. Future research should also investigate whether the relationship between predicted grades and OSRL strategies varies across age groups and subject areas. Additional covariates representing human factors or individual differences could be included in the latent profile model to examine how they predict OSRL latent profile membership. Such covariates could include self-efficacy, goal orientations, educational level, and prior academic performance.

Our fourth objective was to examine the relationship between latent profiles of online learning self-regulation and student academic performance by including the final course grade as a distal outcome of the self-regulation latent categorical variable. Descriptive statistics showed that groups with higher self-regulation levels had slightly higher final course grades, but variations across groups did not reach statistical significance. Specifically, the LPHS group had the highest course grades, followed by the AASR, ASR, and BASR groups. LPA results showed that, compared to the LPHS latent profile, membership in any of the other latent profiles predicted a lower final course grade. However, final course grades did not differ significantly among the BASR, ASR, and AASR latent profiles.

These findings partially support the hypothesis that academic outcomes differ across self-regulation latent profiles (Broadbent & Poon, 2015; Greene, 2018; Wang et al., 2013). In addition, our study did not replicate the significant differences in student GPA across online learning self-regulation latent profiles reported by Barnard-Brak et al. (2010). Therefore, further research is needed to determine whether OSRL latent profiles are course-specific and how they relate to students’ GPAs. Investigating the predictive power of the OSRL latent profiles on course grades across age groups and subject areas would contribute to a better understanding of how individualized OSRL affects the outcomes of online learning.

The current study expanded the research on establishing profiles of online SRL from undergraduate students (e.g., Barnard et al., 2009; Barnard-Brak et al., 2010; Broadbent & Fuller-Tyszkiewicz, 2018; Broadbent & Poon, 2015) to graduate students. These findings can help online instructors design interventions and instructional techniques that support the self-regulation strategies that have a positive impact on student achievement and avoid unproductive practices (Bruso & Stefaniak, 2016; Greene, 2018; Panadero, 2017; Wang et al., 2013; Winne & Nesbit, 2010). This information is critical in the online learning environment, which may require increased levels of self-regulation to achieve academic success (Barnard et al., 2009). For instance, (a) providing increased opportunities for interaction, (b) including a self-monitoring system, (c) supporting self-efficacy beliefs and optimistic attributions, (d) creating opportunities for cognitive apprenticeship such as coaching, and (e) providing feedback have been shown to support self-regulation in technology-enhanced learning environments (Abar & Loken, 2010; Steffens, 2006; Wong et al., 2019).

The current study aimed to better understand how individual students used self-regulation strategies in the online learning environment. Using both variable-centered and person-centered approaches, we ascertained four dimensions of OSRL, identified four latent profiles of OSRL strategies employed by the students, and examined how the OSRL latent profiles relate to the predicted and the final course grades. Our results showed that the latent self-regulation variable did not necessarily have a continuous distribution. Students employed the various dimensions of self-regulation to different extents, and their profile membership was associated with their predicted performance in the course. The majority of high-performing students used all self-regulation strategies at the highest level (AASR). Nevertheless, a smaller group of high-performing students (LPHS) sought less help from their peers than the other groups and employed fewer task strategies than did the AASR group. Our results also showed that the high-performing groups predicted their course grades more accurately than the low-performing groups: only for the LPHS and AASR groups did predicted and final course grades not differ significantly.

Together with other emerging studies (Abar & Loken, 2010; Barnard-Brak et al., 2010; Broadbent & Poon, 2015; Broadbent & Fuller-Tyszkiewicz, 2018; Hirt et al., 2021; Schwinger et al., 2009, 2012), the current research demonstrated the advantages of using a combined person-centered and variable-centered approach in the research of SRL in the online environment. Our results showed that viewing SRL as a multi-dimensional construct, and particularly as a discrete latent variable, could help us better understand the self-regulation of online learning. Addressing individual difference factors in the study of OSRL would enable us to account for the wide range of personal and contextual factors (Schwam et al., 2021; Wong et al., 2019). More importantly, such studies can inform the development of online learning environments with adaptive support that optimizes learning for individual students.

Abar, B., & Loken, E. (2010). Self-regulated learning and self-directed study in a pre-college sample. Learning and Individual Differences, 20, 25-29. https://doi.org/10.1016/j.lindif.2009.09.002

Akaike, H. (1977). On entropy maximization principle. In P. R. Krishnaiah (Ed.), Applications of statistics (pp. 27-41). Elsevier Science.

Alexander, P. A. (2016). Psychology in learning and instruction. Pearson.

Ally, M. (2004). Foundations of educational theory for online learning. In T. Anderson (Ed.), The theory and practice of online learning (pp. 15-44). Athabasca University Press.

Aristovnik, A., Keržič, D., Ravšelj, D., Tomaževič, N., & Umek, L. (2020). Impacts of the COVID-19 pandemic on life of higher education students: A global perspective. Sustainability, 12(20), 8438. https://doi.org/10.3390/su12208438

Asparouhov, T., & Muthén, B. (2009). Exploratory structural equation modeling. Structural Equation Modeling: A Multidisciplinary Journal, 16, 397-438. https://doi.org/10.1080/10705510903008204

Asparouhov, T., & Muthén, B. (2012). Auxiliary variables in mixture modeling: A 3-step approach using Mplus. Structural Equation Modeling: A Multidisciplinary Journal, 21(3), 329-341. https://doi.org/10.1080/10705511.2014.915181

Asparouhov, T., Muthén, B., & Morin, A. J. (2015). Bayesian structural equation modeling with cross-loadings and residual covariances: Comments on Stromeyer et al. Journal of Management, 41, 1561-1577. https://doi.org/10.1177/0149206315591075

Azevedo, R. (2005). Using hypermedia as a metacognitive tool for enhancing student learning? The role of self-regulated learning. Educational Psychologist, 40(4), 199-209. https://doi.org/10.1207/s15326985ep4004_2

Azevedo, R., & Hadwin, A. F. (2005). Scaffolding self-regulated learning and metacognition—Implications for the design of computer-based scaffolds. Instructional Science, 33(5-6), 367-379. https://doi.org/10.1007/s11251-005-1272-9

Bámaca-Colbert, M. Y., & Gayles, J. G. (2010). Variable-centered and person-centered approaches to studying Mexican-origin mother-daughter cultural orientation dissonance. Journal of Youth and Adolescence, 39(11), 1274-1292. https://doi.org/10.1007/s10964-009-9447-3

Barnard, L., Lan, W. Y., To, Y. M., Paton, V. O., & Lai, S. L. (2009). Measuring self-regulation in online and blended learning environments. Internet and Higher Education, 12(1), 1-6. https://doi.org/10.1016/j.iheduc.2008.10.005

Barnard, L., Paton, V. O., & Lan, W. Y. (2008). Online self-regulatory learning behaviors as a mediator in the relationship between online course perceptions with achievement. International Review of Research in Open and Distributed Learning, 9(2), 1-11.

Barnard-Brak, L., Lan, W. Y., & Paton, V. O. (2010). Profiles in self-regulated learning in the online learning environment. International Review of Research in Open and Distributed Learning, 11(1), 63-80. https://doi.org/10.19173/irrodl.v11i1.769

Bergman, L. R. (1998). A pattern-oriented approach to studying individual development: Snapshots and processes. In R. B. Cairns, L. R. Bergman, & J. Kagan (Eds.), Methods and models for studying the individual (pp. 83-122). Sage Publications, Inc.

Bergman, L. R., & Anderson, H. (2010). The person and the variable in developmental psychology. The Journal of Psychology, 218(3), 155-165. https://doi.org/10.1027/0044-3409/a000025

Bergman, L. R., & Magnusson, D. (1997). A person-oriented approach in research on developmental psychopathology. Development and Psychopathology, 9, 291-319. https://doi.org/10.1017/S095457949700206X

Bergman, L. R., Magnusson, D., & El-Khouri, B. M. (2003). Studying individual development in an interindividual context. Erlbaum.

Boekaerts, M. (1996). Self-regulated learning at the junction of cognition and motivation. European Psychologist, 1(2), 100-112. https://doi.org/10.1027/1016-9040.1.2.100

Boekaerts, M. (1999). Self-regulated learning: Where we are today. International Journal of Educational Research, 31(6), 445-457. https://doi.org/10.1016/S0883-0355(99)00014-2

Boekaerts, M., & Corno, L. (2005). Self-regulation in the classroom: A perspective on assessment and intervention. Applied Psychology: An International Review, 54(2), 199-231. https://doi.org/10.1111/j.1464-0597.2005.00205.x

Broadbent, J., & Fuller-Tyszkiewicz, M. (2018). Profiles in self-regulated learning and their correlates for online and blended learning students. Educational Technology Research and Development, 66, 1435-1455. https://doi.org/10.1007/s11423-018-9595-9

Broadbent, J., & Poon, W. L. (2015). Self-regulated learning strategies and academic achievement in online higher education learning environments: A systematic review. The Internet and Higher Education, 27, 1-13. https://doi.org/10.1016/j.iheduc.2015.04.007

Bruso, J. L., & Stefaniak, J. E. (2016). The use of self-regulated learning measure questionnaires as a predictor of academic success. TechTrends, 60, 577-584. https://doi.org/10.1007/s11528-016-0096-6

Chen, J. A. (2012). Implicit theories, epistemic beliefs, and science motivation: A person-centered approach. Learning and Individual Differences, 22, 724-735. https://doi.org/10.1016/j.lindif.2012.07.013

Cleary, T. J., & Callan, G. L. (2018). Assessing self-regulated learning using microanalytic methods. In D. H. Schunk & J. A. Greene (Eds.), Handbook of self-regulation of learning and performance (pp. 338-351). Routledge/Taylor & Francis Group. https://doi.org/10.4324/9780203839010

Collins, L. M., & Lanza, S. T. (2009). Latent class and latent transition analysis: With applications in the social, behavioral, and health sciences (Vol. 718). John Wiley & Sons.

Cuesta, L. (2010). Metacognitive instructional strategies: A study of e-learners’ self-regulation. In The Fourteenth International CALL Conference Proceedings: Motivation and Beyond. ISBN: 978-9057282973. Retrieved from http://uahost.uantwerpen.be/linguapolis/scuati/proceedings_CALL 2010.pdf

Deimann, M., & Bastiaens, T. (2010). The role of volition in distance education: An exploration of its capacities. International Review of Research in Open and Distributed Learning, 11(1), 1-16. https://doi.org/10.19173/irrodl.v11i1.778

DiStefano, C. (2012). Cluster analysis and latent class clustering techniques. In B. Laursen, T. D. Little, & N. A. Card (Eds.), Handbook of developmental research methods (pp. 645-666). Guilford Press.

DiStefano, C., Zhu, M., & Mindrila, D. (2009). Understanding and using factor scores: Considerations for the applied researcher. Practical Assessment, Research, and Evaluation, 14(1), 20.

Efklides, A. (2011). Interactions of metacognition with motivation and affect in self-regulated learning: The MASRL model. Educational Psychologist, 46(1), 6-25. https://doi.org/10.1080/00461520.2011.538645

Feyerabend, P. (1975). Against method. Wiley.

Finney, S. J., & DiStefano, C. (2006). Non-normal and categorical data in structural equation modeling. In G. R. Hancock & R. O. Mueller (Eds.), Structural equation modeling: A second course (pp. 269-314). Information Age Publishing.

Gerjets, P., Scheiter, K., & Schuh, K. (2008). Information comparisons in example-based hypermedia environments: Supporting learners with processing prompts and an interactive comparison tool. Educational Technology Research and Development, 56, 73-92. https://doi.org/10.1007/s11423-007-9068-z

Greene, J. A. (2018). Self-regulation in education. Routledge.

Greene, J. A., & Azevedo, R. (2007). A theoretical review of Winne and Hadwin’s model of self-regulated learning: New perspectives and directions. Review of Educational Research, 77(3), 334-372. https://doi.org/10.3102/003465430303953

Guo, P. J., & Reinecke, K. (2014). Demographic differences in how students navigate through MOOCs. In Proceedings of the First ACM Conference on Learning@Scale, (pp. 21-30).

Hampson, S. E., & Colman, A. M. (Eds.). (1995). Individual differences and personality. Longman.

Händel, M., de Bruin, A. B., & Dresel, M. (2020). Individual differences in local and global metacognitive judgments. Metacognition and Learning, 15(1), 51-75. https://doi.org/10.1007/s11409-020-09220-0

Hirt, C. N., Karlen, Y., Merki, K. M., & Suter, F. (2021). What makes high achievers different from low achievers? Self-regulated learners in the context of a high-stakes academic long-term task. Learning and Individual Differences, 92, 102085. https://doi.org/10.1016/j.lindif.2021.102085

Hood, N., Littlejohn, A., & Milligan, C. (2015). Context counts: How learners’ contexts influence learning in a MOOC. Computers & Education, 91, 83-91. https://doi.org/10.1016/j.compedu.2015.10.019

Howard, M. C., & Hoffman, M. E. (2017). Variable-centered, person-centered, and person-specific approaches: Where theory meets the method. Organizational Research Methods, 21(4), 846-876. https://doi.org/10.1177/1094428117744021

Kaplan, A. (2017). Academia goes social media, MOOC, SPOC, SMOC, and SSOC: The digital transformation of higher education institutions and universities. In B. Rishi & S. Bandyopadhyay (Eds.), Contemporary issues in social media marketing (pp. 20-31). Routledge.

Kocdar, S., Karadeniz, A., Bozkurt, A., & Buyuk, K. (2018). Measuring self-regulation in self-paced open and distance learning environments. The International Review of Research in Open and Distributed Learning, 19(1). https://doi.org/10.19173/irrodl.v19i1.3255

Laursen, B. P., & Hoff, E. (2006). Person-centered and variable-centered approaches to longitudinal data. Merrill-Palmer Quarterly, 52(3), 377-389. https://doi.org/10.1353/mpq.2006.0029

Lehmann, T., Hähnlein, I., & Ifenthaler, D. (2014). Cognitive, metacognitive and motivational perspectives on preflection in self-regulated online learning. Computers in Human Behavior, 32, 313-323. https://doi.org/10.1016/j.chb.2013.07.051

Lim, D. H., Yoon, W., & Morris, M. L. (2006, February). Instructional and learner factors influencing learning outcomes with online learning environment [paper]. The Academy of Human Resource Development International Conference (AHRD), Columbus, OH.

Lynch, R., & Dembo, M. (2004). The relationship between self-regulation and online learning in a blended learning context. International Review of Research in Open and Distributed Learning, 5(2), 1-16. https://doi.org/10.19173/irrodl.v5i2.189

Marsh, H. W., Lüdtke, O., Trautwein, U., & Morin, A. J. (2009). Classical latent profile analysis of academic self-concept dimensions: Synergy of person- and variable-centered approaches to theoretical models of self-concept. Structural Equation Modeling: A Multidisciplinary Journal, 16(2), 191-225. https://doi.org/10.1080/10705510902751010

Marsh, H. W., Morin, A. J., Parker, P. D., & Kaur, G. (2014). Exploratory structural equation modeling: An integration of the best features of exploratory and confirmatory factor analysis. Annual Review of Clinical Psychology, 10, 85-110. https://doi.org/10.1146/annurev-clinpsy-032813-153700

Molenaar, P. C. (2004). A manifesto on psychology as idiographic science: Bringing the person back into scientific psychology, this time forever. Measurement, 2(4), 201-218. https://doi.org/10.1207/s15366359mea0204_1

Molenaar, P. C., & Campbell, C. G. (2009). The new person-specific paradigm in psychology. Current Directions in Psychological Science, 18(2), 112-117. https://doi.org/10.1111/j.1467-8721.2009.01619.x

Moore, J. L., Dickson-Deane, C., & Galyen, K. (2011). E-learning, online learning, and distance learning environments: Are they the same? The Internet and Higher Education, 14(2), 129-135. https://doi.org/10.1016/j.iheduc.2010.10.001

Morin, A. J. S., & Maiano, C. (2011). Cross-validation of the short form of the physical self-inventory (PSI-S) using exploratory structural equation modeling (ESEM). Psychology of Sport and Exercise, 12, 540-554. https://doi.org/10.1016/j.psychsport.2011.04.003

Morin, A. J. S., Marsh, H. W., & Nagengast, B. (2013). Exploratory structural equation modeling. In G. R. Hancock & R. O. Mueller (Eds.), Structural equation modeling: A second course (2nd ed., pp. 395-436). Information Age Publishing.

Muthén, B. (2004). Latent variable analysis. In The Sage handbook of quantitative methodology for the social sciences (pp. 346-369). Sage. https://doi.org/10.4135/9781412986311

Nasserinejad, K., van Rosmalen, J., de Kort, W., & Lesaffre, E. (2017). Comparison of criteria for choosing the number of classes in Bayesian finite mixture models. PLoS ONE, 12(1), e0168838. https://doi.org/10.1371/journal.pone.0168838

Nunnally, J. C. (1978). Psychometric theory (2nd ed.). McGraw-Hill.

Nylund, K. L., Asparouhov, T., & Muthén, B. O. (2007). Deciding on the number of classes in latent class analysis and growth mixture modeling: A Monte Carlo simulation study. Structural Equation Modeling: A Multidisciplinary Journal, 14(4), 535-569. https://doi.org/10.1080/10705510701793320

Palvia, S., Aeron, P., Gupta, P., Mahapatra, D., Parida, R., Rosner, R., & Sindhi, S. (2018). Online education: Worldwide status, challenges, trends, and implications. Journal of Global Information Technology Management, 21(4), 233-241. https://doi.org/10.1080/1097198X.2018.1542262

Panadero, E. (2017). A review of self-regulated learning: Six models and four directions for research. Frontiers in Psychology, 8, 422. https://doi.org/10.3389/fpsyg.2017.00422

Paris, S. G., & Paris, A. H. (2001). Classroom applications of research on self-regulated learning. Educational Psychologist, 36(2), 89-101. https://doi.org/10.1207/S15326985EP3602_4

Peel, K. (2019). The fundamentals for self-regulated learning: A framework to guide analysis and reflection. Educational Practice and Theory, 41(1), 23-49. https://doi.org/10.7459/ept/41.1.03

Pintrich, P. R. (2004). A conceptual framework for assessing motivation and self-regulated learning in college students. Educational Psychology Review, 16(4), 385-407. https://doi.org/10.1007/s10648-004-0006-x

Pintrich, P. R., & DeGroot, E. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82, 33-40. https://doi.org/10.1037/0022-0663.82.1.33

Pintrich, P. R., & Zusho, A. (2002). The development of academic self-regulation: The role of cognitive and motivational factors. In A. Wigfield & J. Eccles (Eds.), Development of achievement motivation (pp. 249-284). Academic Press.

Puustinen, M., & Pulkkinen, L. (2001). Models of self-regulated learning: A review. Scandinavian Journal of Educational Research, 45(3), 269-286. https://doi.org/10.1080/00313830120074206

Puzziferro, M. (2008). Online technologies self-efficacy and self-regulated learning as predictors of final grade and satisfaction in college-level online courses. The American Journal of Distance Education, 22(2), 72-89. https://doi.org/10.1080/08923640802039024

Ramaswamy, V., DeSarbo, W. S., Reibstein, D. J., & Robinson, W. T. (1993). An empirical pooling approach for estimating marketing mix elasticities with PIMS data. Marketing Science, 12(1), 103-124. https://www.jstor.org/stable/183740

Raufelder, D., Jagenow, D., Hoferichter, F., & Drury, K. M. (2013). The person-oriented approach in the field of educational psychology. Problems of Psychology in the 21st Century, 5, 79-88. https://doi.org/10.33225/ppc/13.05.79

Reimann, P., & Bannert, M. (2018). Self-regulation of learning and performance in computer-supported collaborative learning environments. In D. H. Schunk & J. A. Greene (Eds.), Handbook of self-regulation of learning and performance (pp. 285-304). Routledge.

Schunk, D. H., & Greene, J. A. (Eds.). (2018). Handbook of self-regulation of learning and performance. Routledge.

Schunk, D. H., & Zimmerman, B. J. (Eds.). (2008). Motivation and self-regulated learning: Theory, research, and applications. Erlbaum.

Schwam, D., Greenberg, D., & Li, H. (2021). Individual differences in self-regulated learning of college students enrolled in online college courses. American Journal of Distance Education, 35(2), 133-151. https://doi.org/10.1080/08923647.2020.1829255

Schwinger, M., Steinmayr, R., & Spinath, B. (2009). How do motivational regulation strategies affect achievement: Mediated by effort management and moderated by intelligence. Learning and Individual Differences, 19(4), 621-627. https://doi.org/10.1016/j.lindif.2009.08.006

Schwinger, M., Steinmayr, R., & Spinath, B. (2012). Not all roads lead to Rome—Comparing different types of motivational regulation profiles. Learning and Individual Differences, 22(3), 269-279. https://doi.org/10.1016/j.lindif.2011.12.006

Severiens, S., Ten Dam, G., & Wolters, B. V. H. (2001). Stability of processing and regulation strategies: Two longitudinal studies on student learning. Higher Education, 42(4), 437-453. https://doi.org/10.1023/A:1012227619770

Sitzmann, T., Bell, B. S., Kraiger, K., & Kanar, A. M. (2009). A multilevel analysis of the effect of prompting self-regulation in technology-delivered instruction. Personnel Psychology, 62(4), 697-734. https://doi.org/10.1111/j.1744-6570.2009.01155.x

Stan, E. (2012). The role of grades in motivating students to learn. Procedia - Social and Behavioral Sciences, 69, 1998-2003. https://doi.org/10.1016/j.sbspro.2012.12.156

Steffens, K. (2006). Self-regulated learning in technology-enhanced learning environments: Lessons of a European peer review. European Journal of Education, 41(3), 353-379. https://doi.org/10.1111/j.1465-3435.2006.00271.x

Vermunt, J. K., & Magidson, J. (2002). Latent class cluster analysis. In J. A. Hagenaars & A. L. McCutcheon (Eds.), Applied latent class analysis (pp. 89-106). Cambridge University Press. https://doi.org/10.1017/CBO9780511499531

von Eye, A. (2010). Developing the person-oriented approach—Theory and methods of analysis. Development and Psychopathology, 22, 277-285. https://doi.org/10.1017/s0954579410000052

von Eye, A., & Bogat, G. A. (2006). Person-oriented and variable-oriented research: Concepts, results, and development. Merrill-Palmer Quarterly, 52, 390-420. https://doi.org/10.1353/mpq.2006.0032

Wang, C. H., Shannon, D., & Ross, M. (2013). Students’ characteristics, self-regulated learning, technology self-efficacy, and course outcomes in online learning. Distance Education, 34(3), 302-323. https://doi.org/10.1080/01587919.2013.835779

Winne, P. H. (1995). Self-regulation is ubiquitous, but its forms vary with knowledge. Educational Psychologist, 30(4), 223-228. https://doi.org/10.1207/s15326985ep3004_9

Winne, P. H. (1996). A metacognitive view of individual differences in self-regulated learning. Learning and Individual Differences, 8(4), 327-353. https://doi.org/10.1016/S1041-6080(96)90022-9

Winne, P. H. (1997). Experimenting to bootstrap self-regulated learning. Journal of Educational Psychology, 89(3), 397-410. https://doi.org/10.1037/0022-0663.89.3.397

Winne, P. H. (2018). Theorizing and researching levels of processing in self-regulated learning. British Journal of Educational Psychology, 88(1), 9-20. https://doi.org/10.1111/bjep.12173

Winne, P. H., & Nesbit, J. C. (2010). The psychology of academic achievement. Annual Review of Psychology, 61, 653-678. https://doi.org/10.1146/annurev.psych.093008.100348

Winters, F. I., Greene, J. A., & Costich, C. M. (2008). Self-regulation of learning within computer-based learning environments: A critical analysis. Educational Psychology Review, 20, 429-444. https://doi.org/10.1007/s10648-008-9080-9

Wong, J., Baars, M., Davis, D., Van Der Zee, T., Houben, G., & Paas, F. (2019). Supporting self-regulated learning in online learning environments and MOOCs: A systematic review. International Journal of Human-Computer Interaction, 35(4-5), 356-373. https://doi.org/10.1080/10447318.2018.1543084

Woolfolk, A. (2001). Educational psychology (8th ed.). Allyn and Bacon.

Woolfolk, A., Winne, P. H., & Perry, N. E. (2006). Educational psychology (3rd Canadian ed.). Pearson.

Woolfolk, R. L., Doris, J. M., & Darley, J. M. (2006). Identification, situational constraint, and social cognition: Studies in the attribution of moral responsibility. Cognition, 100(2), 283-301. https://doi.org/10.1016/j.cognition.2005.05.002

Yeh, Y. F., Chen, M. C., Hung, P. H., & Hwang, G. J. (2010). Optimal self-explanation prompt design in dynamic multi-representational learning environments. Computers & Education, 54(4), 1089-1100. https://doi.org/10.1016/j.compedu.2009.10.013

Zhang, W. X., Hsu, Y. S., Wang, C. Y., & Ho, Y. T. (2015). Exploring the impacts of cognitive and metacognitive prompting on students’ scientific inquiry practices within an e-learning environment. International Journal of Science Education, 37(3), 529-553. https://doi.org/10.1080/09500693.2014.996796

Zheng, L. (2016). The effectiveness of self-regulated learning scaffolds on academic performance in computer-based learning environments: A meta-analysis. Asia Pacific Education Review, 17(2), 187-202. https://doi.org/10.1007/s12564-016-9426-9

Zimmerman, B. J. (1989). A social cognitive view of self-regulated academic learning. Journal of Educational Psychology, 81(3), 329-339. https://doi.org/10.1037/0022-0663.81.3.329

Zimmerman, B. J. (1990). Self-regulated learning and academic achievement: An overview. Educational Psychologist, 25(1), 3-17. https://doi.org/10.1207/s15326985ep2501_2

Zimmerman, B. J. (1998). Developing self-fulfilling cycles of academic self-regulation: An analysis of exemplary instructional models. In D. H. Schunk & B. J. Zimmerman (Eds.), Self-regulated learning: From teaching to self-reflective practice (pp. 1-19). Guilford Press.

Zimmerman, B. J. (2008). Investigating self-regulation and motivation: Historical background, methodological developments, and future prospects. American Educational Research Journal, 45(1), 166-183. https://doi.org/10.3102/0002831207312909

Zimmerman, B. J., & Kitsantas, A. (2014). Comparing students’ self-discipline and self-regulation measures and their prediction of academic achievement. Contemporary Educational Psychology, 39, 145-155. https://doi.org/10.1016/j.cedpsych.2014.03.004

Zimmerman, B. J., & Schunk, D. H. (2011). Self-regulated learning and performance: An introduction and an overview. In B. J. Zimmerman & D. H. Schunk (Eds.), Handbook of self-regulation of learning and performance (pp. 1-12). Routledge/Taylor & Francis.

Latent Profiles of Online Self-Regulated Learning: Relationships with Predicted and Final Course Grades by Diana Mindrila and Li Cao is licensed under a Creative Commons Attribution 4.0 International License.