Volume 23, Number 3

Mutlu Şen-Akbulut, Duygu Umutlu, and Serkan Arıkan

Faculty of Education, Bogazici University

Since the mandatory switch to online education due to the COVID-19 outbreak in 2020, technology has gained more importance for online teaching and learning environments. The Community of Inquiry (CoI) is one of the validated frameworks widely used to examine online learning. In this paper, we offer an extension to the CoI framework and survey, arguing that meaningful and appropriate use of technologies has become a requirement in today’s pandemic and post-pandemic educational contexts. With this goal, we propose adding three technology-related sub-dimensions that would fall under each main presence of the CoI framework: (a) technology for teaching, (b) technology for interaction, and (c) technology for learning. Based on exploratory and confirmatory factor analyses, we added 5 items for the technology for teaching sub-dimension, 4 items for the technology for interaction sub-dimension, and 5 items for the technology for learning sub-dimension to the original CoI survey. Further research and practice implications are also discussed in this paper.

Keywords: Community of Inquiry framework, extending the CoI framework, technology sub-dimensions, exploratory factor analysis, confirmatory factor analysis

As a result of the coronavirus outbreak at the beginning of 2020, most educational institutions were forced to switch to fully-online education. Face-to-face instruction was rare, and most teachers had to offer classes online. This brought about a tremendous transformation in education as both teachers and students became dependent on online technologies either to offer or to access instruction. Under these circumstances, the matter of how proficient teachers and students are in using technologies for educational purposes gained more importance.

The role of educators in 21st century classrooms has been changing, particularly when moving from traditional to more technology-enhanced learning environments. These changes were taking place long before the pandemic began. However, the rapid shift due to the pandemic underlined the importance of educational technologies to support and/or transform teaching and learning in distance and online learning environments. Many researchers have been investigating how learning theories can be used to improve the quality of learning and teaching in online learning environments (Mayer, 2019). Frameworks and models, such as technological pedagogical content knowledge (TPACK; Mishra & Koehler, 2006) and the Community of Inquiry model (CoI; Garrison et al., 2000), have been used extensively to design teaching and learning processes in online education (Ní Shé et al., 2019).

Up to now, the CoI framework has been used in several empirical studies (e.g., Choo et al., 2020; Horzum, 2015) to examine online learning environments and enhance learners’ learning experiences. Yet, the contexts where previous studies were conducted were mostly blended learning environments, and online learning activities were mostly based on asynchronous tasks. The CoI framework was originally developed to analyze asynchronous online class discussions (Garrison et al., 2000). Although the framework has been revised on various occasions and several extensions were suggested (Pool et al., 2017; Shea & Bidjerano, 2010), it had not been used to examine fully-online courses until the coronavirus outbreak. After the COVID-19 pandemic started, a combination of synchronous online classes and asynchronous tasks was implemented in many schools in the academic year 2020-2021. Although the original CoI survey is a validated instrument that includes three main presences and 10 sub-dimensions to examine online learning and teaching, the recent developments entailed bringing other sub-dimensions into consideration: how both instructors and students use technology purposefully to teach and learn in a community of inquiry for both synchronous and asynchronous parts of a course. The CoI framework, its presences, sub-dimensions, and previous extensions of the framework are described in the next section.

The CoI (Garrison et al., 2000) is an extensively-used framework for analyzing inquiry processes among learners and instructors and supporting the learning process in online and blended environments (Garrison et al., 2000; Maddrell et al., 2017). The framework is defined as “a group of individuals who collaboratively engage in purposeful critical discourse and reflection to construct personal meaning and confirm mutual understanding” (Garrison, 2017, p. 2). Researchers have been arguing that the CoI framework supports learners’ engagement and communication by providing deep and meaningful learning in online and blended learning environments (Garrison et al., 2000; Maddrell et al., 2017).

The CoI framework includes three main presences: teaching presence (TP), social presence (SP), and cognitive presence (CP). Leveraging these presences, the CoI framework aims to create meaningful and constructive learning experiences for learners in online education (Cleveland-Innes et al., 2018). Within the framework, TP involves skillful orchestration and facilitation of learners’ cognitive and social presences to provide meaningful learning processes. CP refers to how learners are cognitively engaged to construct their own knowledge from the discourse generated within the online community. SP represents learners’ identifying themselves with the online learning community through active participation and communication. Design and organization, facilitation, and direct instruction are the three sub-dimensions of TP. CP has four sub-dimensions: triggering event, exploration, integration, and resolution. SP includes three sub-dimensions: affective expression, open communication, and group cohesion.

Several recent studies (e.g., Caskurlu, 2018; Dempsey & Zhang, 2019; Heilporn & Lakhal, 2020; Ma et al., 2017; Şen-Akbulut et al., 2022) focused on validating the structure of the CoI framework along with its three presences. In the systematic review that Stenbom (2018) conducted, 103 CoI papers published between 2008 and 2017 were examined. Stenbom (2018) found that the CoI survey was reported to be valid and reliable in all the reviewed studies. Out of 103 studies, 83 included the original three presences, whereas 20 studies included either only one or two presences. Ma et al. (2017) validated the Chinese version of the CoI survey with 350 Chinese undergraduate students. They implemented a revised version of the CoI survey that included learning presence. They accepted a 47-item model as the final version (χ2/df = 2.29, NNFI = 0.933, CFI = 0.936, RMSEA = 0.067). Reliability values of the four dimensions were acceptable (all Cronbach’s α > .765). Ma et al. (2017) also found that how learning presence, a partial mediator, is perceived is predicted directly by TP and CP. In another study, Heilporn and Lakhal (2020) investigated reliability and validity of sub-dimensions within each presence. Participants were 763 French-speaking university students taking online courses. They concluded that the sub-dimensions within each presence were reliable (Cronbach’s α ranged from 0.80 to 0.94) and student data supported the structure (CFI = 0.94, RMSEA = 0.050).

Caskurlu (2018) conducted a confirmatory factor analysis on the CoI survey with 310 graduate students at a large university in the midwestern United States and found that each presence had a valid factor solution as the data fit very well with the nine item-three factor SP, thirteen item-three factor TP, and twelve item-four factor CP. Similarly, Kozan (2016) examined the relationships between the CoI presences and investigated which structural equation model fit better with the data. The study was conducted with 320 graduate students at a public university in the midwestern United States. The results showed that there was a statistically higher level of TP than cognitive and social presence, as well as a statistically higher level of CP when compared to SP. The results further revealed that there was either a direct or indirect relationship between TP and CP, and that SP was a mediator between TP and CP. Kozan and Richardson (2014) also conducted confirmatory factor analysis to evaluate the structure of the CoI survey. The data collected from 178 students in the USA resulted in an adequate fit to the data (CFI = 0.980, RMSEA = 0.079).

One of the critiques of the CoI framework is that the model needs additional presences or sub-dimensions to be more comprehensive (Castellanos-Reyes, 2020). Several studies have aimed to revise and extend the CoI framework and have suggested numerous new presences to be included for the refinement of the framework (Anderson, 2016; Pool et al., 2017; Shea & Bidjerano, 2010). Kozan and Caskurlu (2018) conducted a literature review in order to identify the proposed contributions to the CoI framework and arguments behind those suggestions. The researchers included peer-reviewed journal articles written in English from 1996 to 2017 and selected 23 studies for the review. Their study identified suggestions of four types of additional presence and seven types of expansion of the existing presences. Suggested new presences were categorized as autonomy presence, learning presence, emotional presence, and instructor presence. However, Kozan and Caskurlu (2018) further recommended that arguments for the proposed presences and the validation and reliability of the studies should be clearly presented because revising the framework would damage its integrity.

Richardson et al. (2015) conducted a multiple-case study to conceptualize instructor presence and to explore how instructors incorporate instructor presence into their courses. Several further studies supported that within online and blended courses, instructor presence made a difference in engagement and learning (Hanshaw, 2021; Ng & Przybyłek, 2021; Ní Shé et al., 2019; Stone & Springer, 2019). Regarding teaching and social presence, some other studies included presences such as instructor social presence and teacher engagement. However, these studies lacked discussion on how these new presences could be validated and how they would bring any additional research-based contributions to the CoI framework. Some other studies adapted the CoI survey for extending the CoI framework to conceptualize, implement, and evaluate K-12 or graduate-level programs (Kumar & Ritzhaupt, 2014; Wei et al., 2020). These adaptations included rewording, removing, and adding items to the survey so that it could be applied in the specific context of the study. Referring to the studies which used revised versions of the CoI survey, Castellanos-Reyes (2020) stated that although additional presences have been inserted within the CoI framework since the survey was first developed in 2008, none of these new presences has been validated as of 2020.

A large amount of research has indicated that online learning requires both instructors’ and students’ technology competencies more than is the case in traditional settings (Hanshaw, 2021; Ibrahim et al., 2021). However, the original CoI survey does not include any elements related to how technology is used pedagogically for teaching and learning processes and how its use is supported in the original three presences within the framework. To date, none of the aforementioned studies that suggested the expansion of the framework included elements related to technology use within a CoI. To fill this missing component, we suggest that the CoI survey needs a revision in order to include sub-dimensions related to technology use. In this study, we propose the expansion of the existing three presences to include one new sub-dimension under each presence: technology for teaching, technology for interaction, and technology for learning under TP, SP, and CP, respectively.

So far, several technology competency surveys have been developed and implemented to measure educators’ and students’ technology use in educational settings (e.g., Christensen & Knezek, 2017). However, the constant introduction of new technological tools necessitates the expansion of technological competencies that mostly focus on technical skills (Şen-Akbulut & Oner, 2021; Tondeur et al., 2017). Thus, the current study proposes that expansion of the CoI framework should be grounded in approaches and theories that argue technology integration should be made in pedagogically-sound ways because the CoI framework aims to create collaborative-constructivist learning environments (Cleveland-Innes et al., 2018).

To address this need, we adopted a holistic and integrated approach while designing the revised survey with the new sub-dimensions. The formation of these new items related to new sub-dimensions was informed by the original CoI presences, the TPACK (Mishra & Koehler, 2006) framework, and the International Society for Technology in Education (ISTE) standards for students (ISTE, 2016). According to the standards published by ISTE, educators are individuals who design learning experiences for the 21st century, facilitate students’ learning, and exhibit 21st-century skills such as collaboration and critical thinking (ISTE, 2016). Along with Mishra and Koehler’s (2006) seven-construct TPACK framework, other surveys focusing on the aspects of constructivist-oriented TPACK have been used as a theoretical basis for developing the current items (Chai et al., 2012; Graham et al., 2009; Schmidt et al., 2009). To achieve the goal of creating an integrated survey, we inserted the technology for teaching (TFT), technology for interaction (TFI), and technology for learning (TFL) sub-dimensions within the original three presences. Also, we followed the TPACK framework’s integrative approach of interrelated knowledge types and ISTE standards to form the items in these new sub-dimensions. We conceptualize these new sub-dimensions (TFT, TFI, and TFL) as the use of technology by the instructors and students as a tool to create meaningful learning experiences.

The extended CoI survey was sent to undergraduate and graduate students at two public universities in Turkey where the medium of instruction is English. From these two universities, 653 students (44% male, 56% female; 94% undergraduate, 6% graduate) responded. There were no missing responses among the completed surveys. The data were collected at the end of the 2020-2021 fall semester when all courses at these two universities were fully online due to the pandemic. Course activities included both synchronous and asynchronous tasks. Instructors used learning management systems (e.g., Moodle) and video conferencing tools (e.g., Zoom) for course activities. Ethical approval was granted by the universities’ institutional review boards, and students voluntarily completed online questionnaires. Therefore, the sampling method of the current study was convenience sampling. Participants represented a wide range of faculties including architecture, arts and sciences, economics and administrative sciences, education, engineering, and the school of applied disciplines.

The original CoI survey was developed by Arbaugh et al. (2008) to measure three presences (TP, SP, and CP) having a total of 10 sub-dimensions. The researchers conducted exploratory factor analysis (EFA) to develop 34 items for inclusion in the survey. The internal consistency of the survey was 0.94 for TP, 0.91 for SP, and 0.95 for CP.

In the original survey, TP had three sub-dimensions: design and organization (DO; 4 items), facilitation (F; 6 items), and direct instruction (DI; 3 items). SP had three sub-dimensions: affective expression (AE; 3 items), open communication (OC; 3 items), and group cohesion (GC; 3 items). CP included four sub-dimensions: triggering event (TE; 3 items), exploration (E; 3 items), integration (I; 3 items), and resolution (R; 3 items).

To extend the CoI survey based on the TPACK framework (Mishra & Koehler, 2006) and the ISTE standards (ISTE, 2016), we developed 35 new items measuring technological components of online education: 12 items for the TFT sub-dimension under TP, 12 items for the TFI sub-dimension under SP, and 11 items for the TFL sub-dimension under CP. These new items were written in English.

We aimed to meet three criteria while generating the new items: (a) whether the item aligned with the target presence, (b) whether the item was distinctive enough from the items under the target presence of the original survey, and (c) whether the item was clear enough to understand. These 35 new items along with the original CoI survey were sent to two experts. Based on their feedback, some items were revised. The revised 35 items along with the original survey were sent to three undergraduate students from different programs. These student reviews resulted in 32 items for the TFT, TFI, and TFL sub-dimensions. The expert and student review processes are described in detail below.

For the review, two experts were selected: a scholar from the educational technology field who was well-versed in the CoI framework and a scholar from the assessment and evaluation field. Three prompts were given to the expert reviewers: (a) “Is this item clear?” (b) “Is this item relevant to the target presence?” and (c) “Is this item distinctive enough from other original items of related sub-dimensions?” First, the educational technology scholar scrutinized all items based on the three prompts. After getting that reviewer’s comments, we revised five items, mostly through clarification and simplification. For instance, with the item “The instructor used collaborative tools (e.g., Google documents, Padlet) to create meaningful, real-world learning experiences in class,” the reviewer found that the item had two layers, task design and use of collaborative tools, and recommended they be kept separate. Thus, the item was changed to “The instructor successfully incorporated collaborative tools (e.g., Google documents, Padlet) into the course activities.” Afterwards, revised items were sent to the reviewer with expertise in the assessment and evaluation field who recommended changes to wording and sentence structure to make items clearer. For example, the item “Group work during online live class sessions enhanced my participation and engagement” was changed to “I felt more engaged during live class sessions when we had group work.” As a result of the second expert review, 9 items in total were revised.

Following the two expert reviews, we met with three students from different departments to review the items. The meetings were held online using a video-conferencing tool. We asked students to read the items and express what they understood. We also asked the students to give possible examples related to the items to make sure that the students captured the intended meaning. Based on the students’ reviews, some clarifications and simplifications were made to 14 items. For example, the item “The instructor used video-conferencing tools effectively for live classes” was changed to “The instructor used video-conferencing tools (e.g., Zoom and GoogleMeet) effectively for live classes.” Examples of technological tools or online activities were added in parentheses to three items since students indicated it was difficult to understand those. Several items were simplified by changing the sentence structure or wording. For instance, the item “I was able to communicate complex ideas clearly and effectively with my peers by creating or using a variety of digital tools (such as presentations, visualizations, or simulations)” was simplified by deleting the words “clearly” and “creating.”

The survey including 32 new items (see Appendix A) along with the original 34 CoI items (66 in total) was administered to participants within the scope of this study. During the survey administration, items were randomized to avoid any bias due to ordering.
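As an illustration only, the 66 item labels and a randomized presentation order could be generated as in the sketch below. The per-sub-dimension counts for the original items come from the original survey; the counts of 11, 11, and 10 for the new items are inferred from the item labels that appear in Table 1 (TFT35 to TFT45, TFI46 to TFI56, TFL57 to TFL66). The function names and the per-respondent seed are hypothetical, since the paper does not describe how the randomization was implemented.

```python
import random

# (prefix, number of items) in the numbering order of the item labels.
# Counts for TFT/TFI/TFL are inferred from the labels shown in Table 1.
ITEM_COUNTS = [
    ("DO", 4), ("F", 6), ("DI", 3),            # teaching presence (original)
    ("AE", 3), ("OC", 3), ("GC", 3),           # social presence (original)
    ("TE", 3), ("E", 3), ("I", 3), ("R", 3),   # cognitive presence (original)
    ("TFT", 11), ("TFI", 11), ("TFL", 10),     # new technology items
]


def build_item_labels(counts=ITEM_COUNTS):
    """Return labels such as 'DO1' ... 'TFL66', numbered consecutively."""
    labels, number = [], 1
    for prefix, n_items in counts:
        for _ in range(n_items):
            labels.append(f"{prefix}{number}")
            number += 1
    return labels


def randomized_order(labels, seed):
    """Return a shuffled copy of the labels (hypothetical per-respondent seed)."""
    rng = random.Random(seed)
    order = list(labels)
    rng.shuffle(order)
    return order


items = build_item_labels()
order_for_respondent = randomized_order(items, seed=1)
```

Shuffling a copy keeps the canonical label order intact, so responses can be mapped back to the original item numbers before analysis.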

In this study, the reliability of the collected data was evaluated based on Cronbach’s alpha coefficient. A Cronbach’s alpha value between 0.70 and 0.80 is considered “acceptable”; between 0.80 and 0.90 is considered “good”; and above 0.90 is considered “excellent” (George & Mallery, 2003). IBM SPSS Statistics (Version 25.0) was used to estimate the alpha coefficients for the original and extended surveys.
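For readers who want to see how such a coefficient is obtained, the following is a minimal sketch of the standard Cronbach’s alpha formula together with the interpretation bands quoted above. It is not the SPSS procedure used in the study; the function names, the toy data, the handling of values that fall exactly on a boundary, and the label returned below 0.70 are assumptions made for illustration.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])
    item_variances = [pvariance([row[j] for row in responses]) for j in range(k)]
    total_variance = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def alpha_label(alpha):
    """Map alpha to the bands quoted in the text (boundary handling is assumed)."""
    if alpha > 0.90:
        return "excellent"
    if alpha >= 0.80:
        return "good"
    if alpha >= 0.70:
        return "acceptable"
    return "below acceptable"  # placeholder label, not taken from the source


# Toy data: four respondents answering two items (hypothetical values).
toy = [[1, 1], [2, 3], [3, 2], [4, 4]]
toy_alpha = cronbach_alpha(toy)
```

Because the same variance estimator is used for the items and for the total score, using population or sample variances gives the same coefficient.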

To select items for the new sub-dimensions, EFA using principal axis factoring with direct oblimin rotation was conducted. Two types of items were to be discarded (Field, 2013): items whose loading on their primary factor was 0.400 or less, and items that cross-loaded, that is, items for which the difference between the loading on the primary factor and the loading on any other factor was less than 0.100. Then, items that loaded highly on the TP, SP, and CP factors were selected for the final form of the survey, while preserving content representation.
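The discard rule described above can be sketched as a small function. This is an illustrative reading of the rule, not the authors’ procedure: the function name is hypothetical, and comparing absolute values of the loadings is an assumption (the sign of a loading depends on the orientation of the factor).

```python
def retain_item(loadings, min_primary=0.400, min_gap=0.100):
    """Apply the two discard checks to one item's loadings on all factors.

    Assumption: loadings are compared by absolute value.
    """
    magnitudes = sorted((abs(value) for value in loadings), reverse=True)
    primary = magnitudes[0]
    secondary = magnitudes[1] if len(magnitudes) > 1 else 0.0
    if primary <= min_primary:
        return False  # primary loading is 0.400 or less
    if round(primary - secondary, 3) < min_gap:
        return False  # cross-loading: gap to another factor is under 0.100
    return True


# The two loadings reported in Table 1 for TFT41 and TFT42.
tft41_kept = retain_item([0.508, 0.334])
tft42_kept = retain_item([0.467, 0.383])
```

Applied to the two loadings reported for TFT41 in Table 1 (.508 and .334), the gap is .174, so the item passes both checks; for TFT42 (.467 and .383) the gap is .084, so the rule flags it as cross-loading. The rounding step only guards against floating-point noise at the 0.100 boundary.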

After deciding the final form of the extended survey as a result of the EFA, a second-order confirmatory factor analysis (CFA) was conducted to evaluate whether the extended CoI survey’s proposed structure fit students’ responses. Both EFA and CFA were conducted using the same dataset from 653 participants. As a first step, Arbaugh et al.’s (2008) original structure with three presences and ten sub-dimensions was tested using the weighted least squares mean and variance adjusted (WLSMV) estimation method, as questionnaire items were ordinal. Then, the extended framework structure, including three presences and thirteen sub-dimensions, was tested. The fit of each model to the student responses was evaluated by estimating the root mean square error of approximation (RMSEA), comparative fit index (CFI), and Tucker-Lewis index (TLI). A good fit for the data was evaluated with an RMSEA value of less than 0.06, and CFI and TLI values higher than 0.95 (Browne & Cudeck, 1993; Hu & Bentler, 1999; Kline, 2010). Mplus 7.2 (Muthén & Muthén, 2013) was used to conduct the second-order CFA.
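The cutoffs named above can be written as a simple check. The sketch below is illustrative only: the function name is hypothetical and the example values are invented, not results from this study.

```python
def meets_fit_criteria(rmsea, cfi, tli, rmsea_max=0.06, cfi_min=0.95, tli_min=0.95):
    """Compare each index with the good-fit cutoff quoted in the text."""
    return {
        "RMSEA": rmsea < rmsea_max,
        "CFI": cfi > cfi_min,
        "TLI": tli > tli_min,
    }


# Hypothetical index values for two imaginary models.
good_model = meets_fit_criteria(rmsea=0.05, cfi=0.96, tli=0.97)
weak_model = meets_fit_criteria(rmsea=0.07, cfi=0.96, tli=0.94)
```

Returning one flag per index, instead of a single pass or fail, makes it visible when a model satisfies some cutoffs but narrowly misses another.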

In this study, the reliability coefficients of the original survey and the extended survey were estimated by Cronbach’s alpha. For the original survey with 34 items, the Cronbach’s alpha was calculated to be 0.96 for TP, 0.92 for SP, and 0.94 for CP. For the new survey with 66 items, the Cronbach’s alpha coefficients were 0.97, 0.95, and 0.97 for TP, SP, and CP respectively. These values indicate excellent internal consistency of the data (George & Mallery, 2003). All corrected item-total correlations were above 0.400, indicating the items were related to each other in related presences.
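A corrected item-total correlation is the correlation between one item and the sum of the remaining items in the same scale. The sketch below shows one common way to compute it; the function names and the toy data are hypothetical, and it is not the SPSS routine used in the study.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def corrected_item_total(responses, item_index):
    """Correlate one item with the sum of all other items in the scale."""
    item = [row[item_index] for row in responses]
    rest = [sum(row) - row[item_index] for row in responses]
    return pearson(item, rest)


# Toy data: four respondents answering three items (hypothetical values).
toy = [[1, 1, 2], [2, 3, 2], [3, 2, 4], [4, 4, 5]]
r_first_item = corrected_item_total(toy, 0)
```

Excluding the item from the total avoids inflating the correlation with the item’s own variance, which is why the coefficient is called corrected.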

EFA was conducted with a total of 66 items (34 original and 32 new items; see Table 1). EFA results showed a Kaiser-Meyer-Olkin measure of sampling adequacy value of 0.977, indicating that the sampling adequacy was “marvelous.” Bartlett’s test of sphericity (p < .05) showed that the correlation matrix was different from an identity matrix. Therefore, the questionnaire data were appropriate for conducting the EFA. Additionally, there were 8 factors that had eigenvalues higher than 1. These factors explained 69% of the total variance in the dataset.

Factors 1, 2, and 3 consisted mainly of TP, SP, and CP items, respectively. These three factors explained 59% of the total variance. Although factors 4, 5, 6, and 7 explained lower percentages of variance, these factors also clearly represented remaining parts of CP, SP, TP, and SP, respectively. Factor 8 did not form a unique factor, as its factor loadings were less than 0.40 or the difference between an item’s loading on its primary factor and its loading on another factor was less than 0.100. As the purpose of the study was to extend the CoI framework with new items measuring technological sub-dimensions for TP, SP, and CP, new items that loaded highly on TP, SP, and CP were selected based on both EFA results and our content evaluations.

To add a new technology sub-dimension under the TP domain, items TFT44, TFT45, TFT41, and TFT43 (see Appendix A for all technology-related items) were selected, as these items were highly loaded to factor 1. Additionally, TFT36 was selected as this item also represented the technological sub-dimension of TP. TFT36 was the highest loaded item of factor 6 which also consisted of TP items. Therefore, TFT36 was included in the final form, and we named this new sub-dimension technology for teaching.

For the new technology sub-dimension under the SP domain, items TFI55, TFI56, TFI49, and TFI50 were selected, as these items highly loaded to factor 2. Content evaluation supported that these items represented a wide range of the technology sub-dimension under the SP domain. We named this the technology for interaction sub-dimension.

For the new technology sub-dimension under the CP domain, items TFL61, TFL59, TFL60, TFL66, and TFL65 were selected, as these items were highly loaded to factor 3. Content evaluation supported that these items were related to the technology sub-dimension under the CP domain. We named this the technology for learning sub-dimension.

Table 1

Exploratory Factor Analysis of 66 Prospective Items for the Extended CoI Survey

| Item | Factor loadings (factors 1–8) |
| --- | --- |
| DI12 | .575 |
| TFT44 | .570 |
| TFT45 | .539 |
| TFT41 | .508, .334 |
| F10 | .502 |
| DI13 | .496 |
| TFT43 | .489, .333 |
| TFT42 | .467, .383 |
| F8 | .456 |
| F7 | .454 |
| F5 | .448 |
| TFT38 | .444, .352 |
| DI11 | .405 |
| F9 | .394 |
| F6 | .377 |
| E28 | .325 |
| TFI55 | .604 |
| TFI56 | .520 |
| TFI49 | .508 |
| AE14 | .503 |
| AE15 | .485 |
| TFI50 | .472 |
| TFL63 | .464, -.309 |
| TFI48 | .451 |
| TFL62 | .380, -.371 |
| AE16 | .378 |
| TFI47 | .357 |
| TFL61 | -.854 |
| TFL59 | -.837 |
| TFL60 | -.817 |
| TFL66 | -.772 |
| TFL65 | -.726 |
| TFL58 | -.711 |
| TFL64 | -.698 |
| TFL57 | -.624 |
| R33 | -.577, -.307 |
| R32 | -.533, -.339 |
| R34 | -.450, -.326 |
| E26 | -.367 |
| E27 | .305, -.356 |
| I29 | .343, -.350 |
| I30 | -.321 |
| TE24 | -.582 |
| TE25 | -.539 |
| TE23 | -.522 |
| TFI53 | .852 |
| TFI52 | .842 |
| TFT36 | .871 |
| TFT35 | .821 |
| TFT37 | .599 |
| DO1 | .564 |
| DO4 | .559 |
| DO2 | .510 |
| DO3 | .444 |
| TFT40 | .390 |
| TFT39 | .351, .381 |
| OC18 | -.857 |
| OC17 | -.777 |
| OC19 | -.722 |
| TFI54 | .453, -.528 |
| TFI46 | -.430 |
| GC21 | -.411, -.336 |
| GC22 | .355, -.401 |
| TFI51 | |
| GC20 | -.378, -.416 |
| I31 | |

Note. N = 653. The extraction method was principal axis factoring with oblique (direct oblimin) rotation. AE = affective expression; DI = direct instruction; DO = design and organization; E = exploration; F = facilitation; GC = group cohesion; I = integration; OC = open communication; R = resolution; TE = triggering event; TFI = technology for interaction; TFL = technology for learning; TFT = technology for teaching. Items selected for inclusion in the extended survey are in bold.

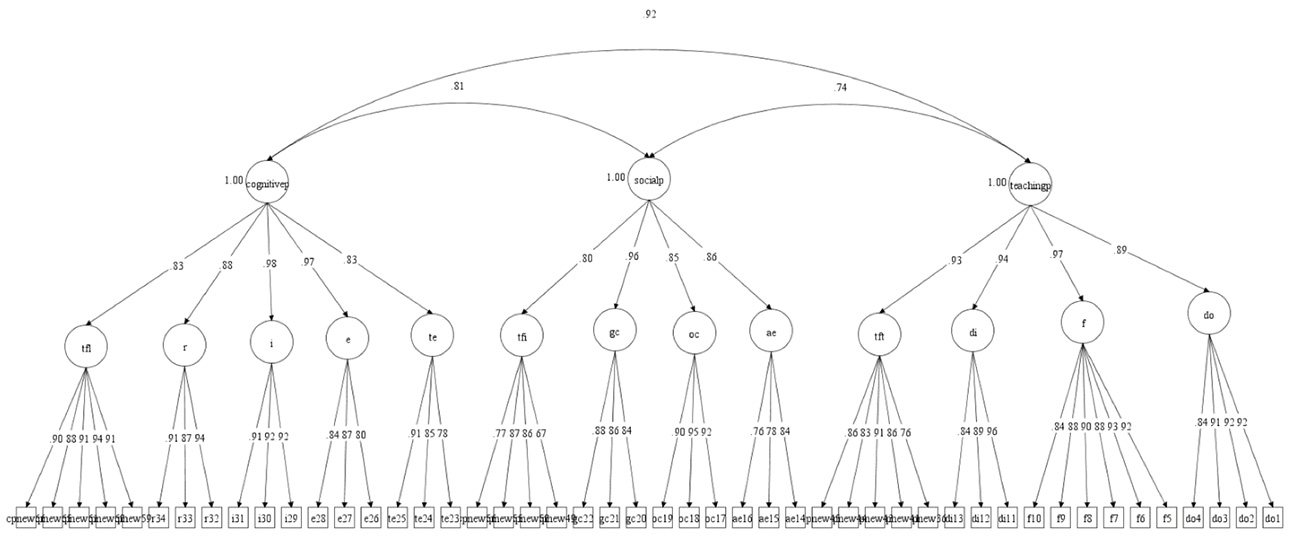

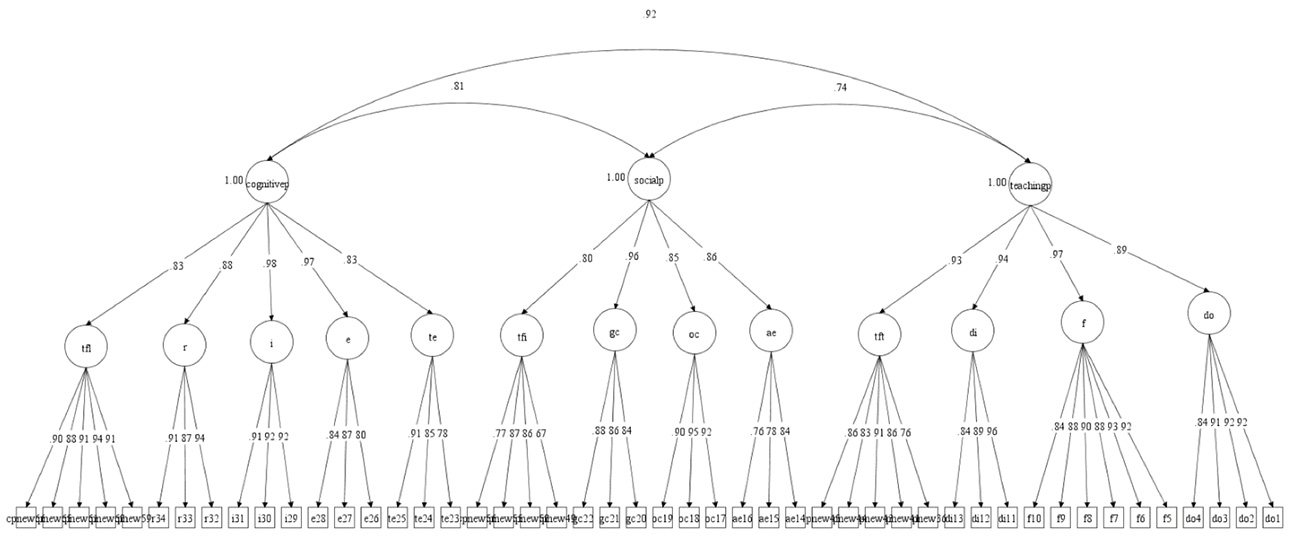

After constructing the final form of the extended CoI survey, two second-order CFAs were conducted. In the first, CFA was conducted on the original CoI survey (3 presences, 10 sub-dimensions, 34 items) and in the second, CFA was conducted on the extended CoI survey (3 presences, 13 sub-dimensions, 48 items). The CFA results of both are presented in Table 2 and Figure 1. The results show that the proposed second-order structure of the extended framework was supported by the student responses (CFI > .950; TLI > .950; RMSEA around .060). Compared to the original survey, the extended survey structure provided a better value in terms of the RMSEA and χ2/df values. Standardized factor loadings are provided in Table 3. All standardized factor loadings were adequately high. These findings support the claim that technological sub-dimensions added under TP, SP, and CP were distinct.

Table 2

Results of Second-Order Confirmatory Factor Analysis for the Two Frameworks

| Framework | χ2 | df | χ2/df | CFI | TLI | RMSEA | RMSEA 90% CI |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Original | 2177.618*** | 514 | 4.24 | .966 | .963 | .070 | [.067, .073] |
| Extended | 3775.517*** | 1064 | 3.55 | .955 | .953 | .062 | [.060, .065] |

Note. CFI = comparative fit index; TLI = Tucker-Lewis index; RMSEA = root-mean-square error of approximation; CI = confidence interval. ***p < .001
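As a quick arithmetic check, the χ2/df column of Table 2 can be recomputed from the reported χ2 and df values. The variable names in this sketch are hypothetical; the numbers are those reported in Table 2.

```python
# (chi-square, degrees of freedom) as reported in Table 2.
table2 = {
    "Original": (2177.618, 514),
    "Extended": (3775.517, 1064),
}

# Ratio of chi-square to degrees of freedom, rounded to two decimals.
ratios = {name: round(chi2 / df, 2) for name, (chi2, df) in table2.items()}
```

The recomputed ratios are 4.24 for the original structure and 3.55 for the extended structure, matching the values reported in the table.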

Figure 1

Second-Order Confirmatory Factor Analysis for the Extended CoI Framework

Note. AE = affective expression; cognitivep = cognitive presence; DI = direct instruction; DO = design and organization; E = exploration; F = facilitation; GC = group cohesion; I = integration; OC = open communication; R = resolution; socialp = social presence; TE = triggering event; teachingp = teaching presence; TFI = technology for interaction; TFL = technology for learning; TFT = technology for teaching.

Table 3

Standardized Factor Loadings of All Items Included in the Extended CoI Survey

| Presence | Sub-dimension | Item | Standardized loading |
| --- | --- | --- | --- |
| Teaching | Design and organization | DO1 | .924 |
| | | DO2 | .921 |
| | | DO3 | .905 |
| | | DO4 | .839 |
| | Facilitation | F5 | .918 |
| | | F6 | .929 |
| | | F7 | .883 |
| | | F8 | .901 |
| | | F9 | .882 |
| | | F10 | .841 |
| | Direct instruction | DI11 | .962 |
| | | DI12 | .887 |
| | | DI13 | .836 |
| | Technology for teaching | TFT36 | .759 |
| | | TFT41 | .864 |
| | | TFT43 | .906 |
| | | TFT44 | .832 |
| | | TFT45 | .860 |
| Social | Affective expression | AE14 | .841 |
| | | AE15 | .781 |
| | | AE16 | .758 |
| | Open communication | OC17 | .924 |
| | | OC18 | .946 |
| | | OC19 | .896 |
| | Group cohesion | GC20 | .845 |
| | | GC21 | .860 |
| | | GC22 | .877 |
| | Technology for interaction | TFI49 | .673 |
| | | TFI50 | .862 |
| | | TFI55 | .866 |
| | | TFI56 | .766 |
| Cognitive | Triggering event | TE23 | .778 |
| | | TE24 | .853 |
| | | TE25 | .908 |
| | Exploration | E26 | .795 |
| | | E27 | .867 |
| | | E28 | .840 |
| | Integration | I29 | .917 |
| | | I30 | .922 |
| | | I31 | .911 |
| | Resolution | R32 | .941 |
| | | R33 | .867 |
| | | R34 | .910 |
| | Technology for learning | TFL59 | .906 |
| | | TFL60 | .943 |
| | | TFL61 | .912 |
| | | TFL65 | .883 |
| | | TFL66 | .895 |

Note. AE = affective expression; DI = direct instruction; DO = design and organization; E = exploration; F = facilitation; GC = group cohesion; I = integration; OC = open communication; R = resolution; TE = triggering event; TFI = technology for interaction; TFL = technology for learning; TFT = technology for teaching.

The capacity to use technology is becoming an increasingly important skill because of expectations of 21st-century students and the growth of learning technologies. In online environments, purposeful, meaningful, and pedagogical use of technology should be an indispensable component for teaching and learning processes. Resonating with this perspective, we added technology components as distinct sub-dimensions to the CoI framework after extensive investigation.

In their efforts to extend the CoI framework, some studies focused on proposing new dimensions whereas others suggested new presences. We developed these new items as sub-dimensions for the three original main presences. It is important to examine how technology can be used to support TP, SP, and CP effectively for online learning since the use of technology is a vital component that connects all three types of presence (Hanshaw, 2021; Thompson et al., 2017).

The current study aimed to extend the CoI framework by adding technology-related sub-dimensions to the original presences as follows: the TFT sub-dimension for TP, the TFI sub-dimension for SP, and the TFL sub-dimension for CP. This study is novel since none of the previous CoI surveys assessed meaningful use of technology for teaching and learning. Strictly following the guidelines of scale development, we added 5 new items to the TFT sub-dimension, 4 new items to the TFI sub-dimension, and 5 new items to the TFL sub-dimension. In this way, the original CoI survey structure (3 presences, 10 sub-dimensions, and 34 items) was extended to 3 presences, 13 sub-dimensions, and 48 items.

This study shows that with the suggested technology sub-dimensions, the CoI framework provides a research-based theoretical model for systematically selecting tools and effectively incorporating them into teaching practices in online learning environments (Thompson et al., 2017). By exploring meaningful use of technology through the CoI framework, we expect that instructors and practitioners will gain an in-depth understanding of how to make the most of technology to promote student learning in an online environment. We argue that the technology sub-dimensions suggested in this study will be useful for fully online, blended, or hybrid learning environments, for both synchronous and asynchronous tasks, since the sub-dimensions are applicable to different types of interaction: between instructors and students, among students, and between students and content. Communicating and interacting with students and content by using technology tools is crucial not only for creating a strong instructor presence (Hanshaw, 2021) but also for promoting meaningful learning, especially through online activities.

In this study, the data were collected from two universities where the medium of instruction is English. Both universities admit students who score highly on the national university entrance examination. Therefore, the sample does not represent all university students. We suggest testing the extended CoI structure with other samples, both in this country and in others.

The original CoI survey is an instrument that has been in use for more than ten years. It has functioned well for exploring teaching and learning in online environments as processes of collaborative inquiry. In this study, we introduced a new version of the CoI survey that adds items on meaningful use of technology under three new sub-dimensions (TFT, TFI, and TFL). The extended survey demonstrated good levels of reliability and validity. Reliability coefficients were .97, .95, and .97 for TP, SP, and CP, respectively, which shows a high level of consistency among the items in each presence. Second-order confirmatory factor analysis confirmed that the TFT, TFI, and TFL sub-dimensions added under TP, SP, and CP are distinct sub-dimensions (CFI > .950; TLI > .950; RMSEA around .060). Thus, the data collected support the newly proposed factor structure. All 32 items developed for the technology sub-dimensions, including the 14 retained in the extended CoI survey, are shown in Appendix 1.
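To make the idea of consistency among items concrete, the following is a minimal sketch of how an internal-consistency coefficient can be computed. It assumes the coefficient is Cronbach's alpha, which is an assumption on our part, since this section does not name the coefficient used. The function name `cronbach_alpha` and the toy responses are hypothetical and are not data from this study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents' item scores.

    responses: list of rows, one row per respondent, one score per item.
    Assumes every row has the same number of items (at least two).
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_variance_sum = sum(variance(column) for column in zip(*responses))
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical toy data: 5 respondents, 3 items on a 5-point Likert scale.
toy_responses = [
    [5, 5, 4],
    [4, 4, 4],
    [3, 3, 2],
    [2, 2, 2],
    [1, 2, 1],
]
alpha = cronbach_alpha(toy_responses)
print(f"alpha = {alpha:.2f}")
```

Values close to 1 indicate that respondents who score high on one item tend to score high on the others, which is how coefficients such as those reported for TP, SP, and CP are usually read.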

With this extension, the maximum sub-score for TP is 90 points (18 items rated on a 5-point Likert scale). For SP, the maximum sub-score is 65 (13 items), and for CP it is 85 (17 items). To sum up, the items in the TFT sub-dimension highlight the instructor’s use of technology to enhance course management, student learning, and communication and interaction among students, and to provide feedback on student work. TFI items capture how students use technology to communicate and interact with their peers to be socially present in online environments. The items in the TFL sub-dimension address how students can use technologies to engage in higher-order thinking and active learning.
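The maximum sub-scores above follow directly from the item counts. The short sketch below reproduces the arithmetic. The per-presence counts for the original survey (13, 9, and 12 items) are inferred from the item numbering and the 34-item total rather than stated in this section, and the names used here are illustrative only.

```python
# Items per presence in the original CoI survey (inferred from item numbering:
# items 1-13 for TP, 14-22 for SP, 23-34 for CP; 34 items in total).
ORIGINAL_ITEMS = {"TP": 13, "SP": 9, "CP": 12}
# Technology items added in this study (TFT, TFI, and TFL, respectively).
ADDED_ITEMS = {"TP": 5, "SP": 4, "CP": 5}
LIKERT_MAX = 5  # highest response option on the 5-point scale


def max_subscores(original, added, scale_max):
    """Return the highest possible sub-score for each presence."""
    return {
        presence: (original[presence] + added[presence]) * scale_max
        for presence in original
    }


total_items = sum(ORIGINAL_ITEMS.values()) + sum(ADDED_ITEMS.values())
subscores = max_subscores(ORIGINAL_ITEMS, ADDED_ITEMS, LIKERT_MAX)
print(total_items)  # 48
print(subscores)    # {'TP': 90, 'SP': 65, 'CP': 85}
```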

Since the beginning of the COVID-19 pandemic, evidence that online classes will be a permanent part of our educational systems has been accumulating. Research suggests that educators generally have basic sets of technology skills and that meaningful use of technology is still a complex process in all types of learning environments (Christensen & Knezek, 2017). In this sense, the extended CoI survey appears to be a valid instrument for designing and assessing online learning experiences with meaningful use of technology.

Anderson, T. (2016, January 4). A fourth presence for the Community of Inquiry model? Virtual Canuck. https://virtualcanuck.ca/2016/01/04/a-fourth-presence-for-the-community-of-inquiry-model/

Arbaugh, J. B., Cleveland-Innes, M., Diaz, S. R., Garrison, D. R., Ice, P., Richardson, J. C., & Swan, K. P. (2008). Developing a community of inquiry instrument: Testing a measure of the Community of Inquiry framework using a multi-institutional sample. The Internet and Higher Education, 11(3-4), 133-136. http://dx.doi.org/10.1016/j.iheduc.2008.06.003

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 137-162). Sage.

Caskurlu, S. (2018). Confirming the subdimensions of teaching, social, and cognitive presences: A construct validity study. The Internet and Higher Education, 39, 1-12. https://doi.org/10.1016/j.iheduc.2018.05.002

Castellanos-Reyes, D. (2020). 20 years of the Community of Inquiry framework. TechTrends, 64, 557-560. https://doi.org/10.1007/s11528-020-00491-7

Chai, C. S., Koh, J. H. L., Ho, H. N. J., & Tsai, C.-C. (2012). Examining preservice teachers’ perceived knowledge of TPACK and cyberwellness through structural equation modeling. Australasian Journal of Educational Technology, 28(6), 1000-1019. https://doi.org/10.14742/ajet.807

Choo, J., Bakir, N., Scagnoli, N. I., Ju, B., & Tong, X. (2020). Using the Community of Inquiry framework to understand students’ learning experience in online undergraduate business courses. TechTrends, 64(1), 172-181. https://doi.org/10.1007/s11528-019-00444-9

Christensen, R., & Knezek, G. (2017). Validating the technology proficiency self-assessment questionnaire for 21st-century learning (TPSA C-21). Journal of Digital Learning in Teacher Education, 33(1), 20-31.

Cleveland-Innes, M., Garrison, D. R., & Vaughan, N. (2018). The Community of Inquiry theoretical framework: Implications for distance education and beyond. In M. G. Moore & W. C. Diehl (Eds.), Handbook of distance education (4th ed., pp. 67-78). Routledge.

Dempsey, P. R., & Zhang, J. (2019). Re-examining the construct validity and causal relationships of teaching, cognitive, and social presence in Community of Inquiry framework. Online Learning, 23(1), 62-79. https://doi.org/10.24059/olj.v23i1.1419

Field, A. (2013). Discovering statistics using IBM SPSS statistics. Sage.

Garrison, D. (2017). E-learning in the 21st century: A framework for research and practice (3rd ed.). Routledge.

Garrison, D. R., Anderson, T., & Archer, W. (2000). Critical inquiry in a text-based environment: Computer conferencing in higher education. The Internet and Higher Education, 2(2-3), 87-105. https://doi.org/10.1016/s1096-7516(00)00016-6

George, D., & Mallery, P. (2003). SPSS for Windows step by step: A simple guide and reference: 11.0 update (4th ed.). Allyn & Bacon.

Graham, R. C., Burgoyne, N., Cantrell, P., Smith, L., St. Clair, L., & Harris, R. (2009). Measuring the TPACK confidence of inservice science teachers. TechTrends, 53(5), 70-79. https://link.springer.com/content/pdf/10.1007/s11528-009-0328-0.pdf

Hanshaw, G. (2021). Use technology to engage students and create a stronger instructor presence. In C. L. Jennings (Ed.), Ensuring adult and non-traditional learners’ success with technology, design, and structure (pp. 97-110). IGI Global. https://www.doi.org/10.4018/978-1-7998-6762-3.ch006

Heilporn, G., & Lakhal, S. (2020). Investigating the reliability and validity of the Community of Inquiry framework: An analysis of categories within each presence. Computers & Education, 145, Article 103712. https://doi.org/10.1016/j.compedu.2019.103712

Horzum, M. B. (2015). Online learning students’ perceptions of the community of inquiry based on learning outcomes and demographic variables. Croatian Journal of Education, 17(2), 535-567. https://doi.org/10.15516/cje.v17i2.607

Hu, L.-T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1-55.

Ibrahim, R., Wahid, F. N., Norman, H., Nordin, N., Baharudin, H., & Tumiran, M. A. (2021). Students’ technology competency levels for online learning using MOOCs during the COVID-19 pandemic. International Journal of Advanced Research in Education and Society, 3(4), 137-145. https://myjms.mohe.gov.my/index.php/ijares/article/view/16670

International Society for Technology in Education [ISTE]. (2016). ISTE standards: Students. https://www.iste.org/standards/for-students

Kline, R. B. (2010). Principles and practice of structural equation modeling. Guilford Publications.

Kozan, K. (2016). A comparative structural equation modeling investigation of the relationships among teaching, cognitive, and social presence. Online Learning, 20(3), 210-227. http://dx.doi.org/10.24059/olj.v20i3.654

Kozan, K., & Caskurlu, S. (2018). On the Nth presence for the Community of Inquiry framework. Computers & Education, 122, 104-118. http://dx.doi.org/10.1016/j.compedu.2018.03.010

Kozan, K., & Richardson, J. C. (2014). Interrelationships between and among social, teaching, and cognitive presence. The Internet and Higher Education, 21, 68-73. https://doi.org/10.1016/j.iheduc.2013.10.007

Kumar, S., & Ritzhaupt, A. D. (2014). Adapting the Community of Inquiry survey for an online graduate program: Implications for online programs. E-Learning and Digital Media, 11(1), 59-71. https://doi.org/10.2304/elea.2014.11.1.59

Ma, Z., Wang, J., Wang, Q., Kong, L., Wu, Y., & Yang, H. (2017). Verifying causal relationships among the presences of the Community of Inquiry framework in the Chinese context. International Review of Research in Open and Distributed Learning, 18(6), 213-230. https://doi.org/10.19173/irrodl.v18i6.3197

Maddrell, J. A., Morrison, G. R., & Watson, G. S. (2017). Presence and learning in a community of inquiry. Distance Education, 38(2), 245-258. https://doi.org/10.1080/01587919.2017.1322062

Mayer, R. E. (2019). Thirty years of research on online learning. Applied Cognitive Psychology, 33(2), 152-159. https://doi.org/10.1002/acp.3482

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017-1054. https://www.tcrecord.org/content.asp?contentid=12516

Muthén, L. K., & Muthén, B. O. (2013). Mplus user’s guide (7th ed.). Muthén and Muthén.

Ng, Y. Y., & Przybyłek, A. (2021). Instructor presence in video lectures: Preliminary findings from an online experiment. IEEE Access, 9, 36485-36499. https://doi.org/10.1109/ACCESS.2021.3058735

Ní Shé, C., Farrell, O., Brunton, J., Costello, E., Donlon, E., Trevaskis, S., & Eccles, S. (2019). Teaching online is different: Critical perspectives from the literature. Dublin City University. https://doi.org/10.5281/zenodo.3479402

Pool, J., Reitsma, G., & van den Berg, D. (2017). Revised Community of Inquiry framework: Examining learning presence in a blended mode of delivery. Online Learning, 21(3), 153-165. https://doi.org/10.24059/olj.v21i3.866

Richardson, J. C., Koehler, A. A., Besser, E. D., Caskurlu, S., Lim, J., & Mueller, C. M. (2015). Conceptualizing and investigating instructor presence in online learning environments. The International Review of Research in Open and Distributed Learning, 16(3). https://doi.org/10.19173/irrodl.v16i3.2123

Schmidt, D. A., Baran, E., Thompson, A. D., Mishra, P., Koehler, M. J., & Shin, T. S. (2009). Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for preservice teachers. Journal of Research on Technology in Education, 42(2), 123-149. https://doi.org/10.1080/15391523.2009.10782544

Shea, P., & Bidjerano, T. (2010). Learning presence: Towards a theory of self-efficacy, self-regulation, and the development of a communities of inquiry in online and blended learning environments. Computers & Education, 55(4), 1721-1731. https://doi.org/10.1016/j.compedu.2010.07.017

Stenbom, S. (2018). A systematic review of the Community of Inquiry survey. The Internet and Higher Education, 39, 22-32. https://doi.org/10.1016/j.iheduc.2018.06.001

Stone, C., & Springer, M. (2019). Interactivity, connectedness and “teacher-presence”: Engaging and retaining students online. Australian Journal of Adult Learning, 59(2), 146-169. https://search.informit.org/doi/pdf/10.3316/aeipt.224048?download=true

Şen-Akbulut, M., & Oner, D. (2021). Developing pre-service teachers’ technology competencies: A project-based learning experience. Cukurova University Faculty of Education Journal, 50(1), 247-275. https://dergipark.org.tr/en/pub/cuefd/issue/59484/753044

Şen-Akbulut, M., Umutlu, D., Oner, D., & Arıkan, S. (2022). Exploring university students’ learning experiences in the Covid-19 semester through the Community of Inquiry framework. Turkish Online Journal of Distance Education, 23(1), 1-18. https://doi.org/10.17718/tojde.1050334

Thompson, P., Vogler, J. S., & Xiu, Y. (2017). Strategic tooling: Technology for constructing a community of inquiry. Journal of Educators Online, 14(2). https://files.eric.ed.gov/fulltext/EJ1150675.pdf

Tondeur, J., Aesaert, K., Pynoo, B., Braak, J., Fraeyman, N., & Erstad, O. (2017). Developing a validated instrument to measure preservice teachers’ ICT competencies: Meeting the demands of the 21st-century. British Journal of Educational Technology, 48(2), 462-472. https://doi.org/10.1111/bjet.12380

Wei, L., Hu, Y., Zuo, M., & Luo, H. (2020). Extending the CoI framework to K-12 education: Development and validation of a learning experience questionnaire. In S. Cheung, R. Li, K. Phusavat, N. Paoprasert, & L. Kwok (Eds.), Blended learning: Education in a smart learning environment. ICBL 2020 (pp. 315-325). Springer. https://doi.org/10.1007/978-3-030-51968-1_26

TFT35: The instructor clearly set up the course page on the learning management system (e.g., Moodle, Canvas, Blackboard, itslearning).

TFT36: The instructor clearly kept the course page updated on the learning management system (e.g., Moodle, Canvas, Blackboard, itslearning).

TFT37: The instructor used video-conferencing tools (e.g., Zoom, GoogleMeet) effectively for live classes.

TFT38: The instructor successfully incorporated collaborative tools (e.g., Google documents, Padlet) into the course activities.

TFT39: The instructor facilitated synchronous class activities (e.g., live class discussions) effectively.

TFT40: The instructor facilitated asynchronous class activities (e.g., Moodle/Blackboard forum discussions) effectively.

TFT41: The instructor used digital tools and resources to maximize student learning.

TFT42: The instructor successfully used technology to assess our learning.

TFT43: The instructor effectively communicated ideas or information via digital tools.

TFT44: The instructor used technology to support interaction among course participants.

TFT45: The instructor effectively used technology to provide feedback on our tasks or assignments.

TFI46: I was able to express my ideas through chat during live class sessions.

TFI47: Use of digital tools (such as Kahoot and Mentimeter) during live sessions encouraged me to participate in classes.

TFI48: I was able to communicate complex ideas clearly with my classmates by using a variety of digital tools (such as presentations, visualizations, or simulations).

TFI49: I used collaborative technologies (e.g., Google documents, Zoom) to work with my classmates outside of class time.

TFI50: Being able to communicate and collaborate with classmates anywhere and anytime digitally is an advantage for me.

TFI51: Features of video-conferencing tools (e.g., “raise virtual hand” option and chats) helped me to speak up and participate in online live classes.

TFI52: I felt more comfortable sharing my ideas during live classes when my camera was on.

TFI53: I felt more comfortable sharing my ideas during live classes when other course participants’ cameras were on.

TFI54: It was easy for me to share my ideas during online live classes.

TFI55: Working with my classmates outside the live class time on digital platforms motivated me to prepare for course-related tasks.

TFI56: I felt more engaged during live class sessions when we had group work.

TFL57: Exploring course topics via digital tools/resources increased my interest in the course.

TFL58: I was able to build up my knowledge by actively exploring real-world problems via digital tools/resources.

TFL59: Digital tools/resources helped me to examine problems from multiple viewpoints.

TFL60: Digital tools/resources helped me brainstorm ideas to complete course tasks.

TFL61: Digital tools/resources helped me to further investigate course topics.

TFL62: Online forums where I was able to explore my classmates’ ideas enhanced my learning in the course.

TFL63: Peer interaction on online platforms helped me construct my knowledge better.

TFL64: I was able to collect information from resources using a variety of digital tools.

TFL65: Digital tools/resources helped me generate new information to answer questions raised during classes.

TFL66: Digital tools/resources helped me think deeply about the course content.

Extending the Community of Inquiry Framework: Development and Validation of Technology Sub-Dimensions by Mutlu Şen-Akbulut, Duygu Umutlu, and Serkan Arıkan is licensed under a Creative Commons Attribution 4.0 International License.