Volume 24, Number 2

Veenita Shah, Sahana Murthy, and Sridhar Iyer

IDP in Educational Technology, IIT Bombay, Mumbai, India

MOOCs are widely used to support the diverse learning needs of participants across the globe. However, the literature reports well-known scepticism about MOOC pedagogy, questioning the effectiveness of the educational experience it offers. One way to ensure the quality of MOOCs is through systematic evaluation of their pedagogy with the goal of improving it over time. Most existing MOOC quality evaluation methods do not account for the increasing significance of learner-centric pedagogy in providing a richer learning experience. This paper presents a MOOC evaluation framework (MEF), designed with a strong pedagogical basis underpinned by theory and MOOC design practices, which evaluates the integration of learner-centric pedagogy in MOOCs. Using mixed-methods research, we conducted internal validation through expert reviews (N = 2) and external validation in the field (N = 13) to test the framework’s usability and usefulness. The framework was classified as “good” (SUS: 78.46) in terms of usability. A high perception of usefulness (84%-92%) was observed for the framework as a formative evaluation tool for assessing the integration of learner-centric pedagogy and bringing a positive change in MOOC design. Different participants acknowledged new learning from different dimensions of the framework. Participants also recognized that the scores obtained using the MEF reflected the effort they had invested in incorporating learner-centric design strategies in the evaluated dimensions. The framework focuses on learner-centric evaluation of MOOC design with the goal of facilitating improved pedagogy.

Keywords: massive open online course, pedagogical quality of MOOCs, instructional design, quality evaluation methods, formative evaluation of MOOC pedagogy

MOOCs have been reported to benefit varied stakeholders, including students, academicians, and corporate professionals (Egloffstein & Ifenthaler, 2017; Konrad, 2017). Additionally, higher education institutions have increasingly accepted MOOCs under varied models (Burd et al., 2015). However, such MOOC initiatives also raise concerns, as the quality of the learning experience in MOOCs remains debatable (Lowenthal & Hodges, 2015; Margaryan et al., 2015; Toven-Lindsey et al., 2015). Research points to known limitations in the design of MOOCs and their inability to cater to a diversity of learners with varied motivations and learning requirements (Hew, 2018; Yousef et al., 2014). This calls into question the meaningfulness and effectiveness of the educational experience that MOOCs offer. Therefore, MOOCs are expected to meet certain quality standards in their pedagogical design.

Numerous MOOC design guidelines and frameworks in the literature enumerate important learning support elements to address the pedagogical quality of MOOCs (Conole, 2013; Hew, 2018; Lee et al., 2016; Pilli & Admiraal, 2017). However, the literature reports that these principles are, overall, poorly integrated into MOOCs (Lowenthal & Hodges, 2015; Margaryan et al., 2015; Watson et al., 2017). This indicates that although guiding pedagogical frameworks and principles exist, clear direction for improving the instructional quality of MOOCs is lacking. A recent literature review on MOOC quality also recommended deeper research on the subject and the design of new guidelines that ensure quality (Stracke & Trisolini, 2021).

Since quality is the output of a systematic process of design and evaluation, one way to ensure the quality of MOOCs is to evaluate these courses with the goal of improving them over time (Alturkistani et al., 2020; Jansen et al., 2017). A few existing e-learning quality approaches, some specific to MOOCs, provide a useful overview of and guide to certain quality issues (Ossiannilsson et al., 2015). However, most of these methods need to be enriched to effectively evaluate the instructional design of MOOCs that implement active learning pedagogies (Aloizou et al., 2019). This study presents an enhanced learner-centric instructional framework, termed the MOOC Evaluation Framework (MEF), which MOOC creators or instructors can use for formative evaluation of their MOOCs in order to improve their pedagogical design. In addition, the study evaluates the usability and usefulness of the MEF from the perspective of MOOC creators in the field, their experience with the MEF in terms of new learning, and their perception of its usefulness in evaluating the integration of a learner-centric approach in MOOCs.

Over the years, studies have presented strategies such as collaboration, peer interaction, feedback, and learner-instructor connection to enhance students’ engagement, improve academic achievement, and lower attrition rates in MOOCs (Hew, 2018; Pilli & Admiraal, 2017; Yousef et al., 2014). Though the proposed strategies differ in detail, a remarkably consistent theme is the call to emphasise learner-centric instructional strategies in MOOCs, such as active learning, problem-centric learning, and instructor accessibility. Learner-centric instructional design encourages interactions with peers and instructors and focuses on recurrent learning activities and feedback mechanisms. This approach engages learners in creating their own learning experience and helps them become independent and critical thinkers (Bransford et al., 2000).

Several MOOC design models and frameworks guide the development of MOOCs (Conole, 2013; Fidalgo-Blanco et al., 2015; Lee et al., 2016) towards an enhanced learner experience and higher quality. A recently presented learner-centric model for MOOC design also established the role of learner-centric pedagogy in attaining active learner participation and higher completion rates in MOOCs (Shah et al., 2022). However, there is an identified research gap in quality assurance methods for MOOCs that implement active learning strategies (Aloizou et al., 2019). Most existing quality evaluation methods for MOOCs do not account for the increasing significance of learner-centric pedagogy, which aims to provide a richer learning experience for participants.

Most studies that evaluate the pedagogy of MOOCs use instructional design principles or standardized frameworks. This section provides a brief overview of some of these evaluation approaches, along with a few observations on their quality criteria and applications.

Merrill’s first principles of instruction, abstracted from key instructional design theories and models, were supplemented by five additional principles abstracted from the literature (Margaryan et al., 2015; Merrill, 2002). A MOOC evaluation study used these principles to analyse the instructional design quality of 76 MOOCs (Margaryan et al., 2015). The majority of MOOCs scored poorly on most instructional design principles but highly on the organisation and presentation of course material. This indicated that although most MOOCs were well packaged, their instructional design quality was low. A similar evaluation of 27 open courses using the first principles of instruction showed parallel results, with poor instructional design (Chukwuemeka et al., 2015). By contrast, another study applied Merrill’s first principles of instruction to review nine MOOCs and found that the principles were generally well incorporated into the course design (Watson et al., 2017). However, the MOOCs in that evaluation specifically targeted attitudinal change.

A recent evaluation of six courses, using Chickering and Gamson’s principles (Chickering & Gamson, 1987) and part of the Quality Online Course Initiative rubric, showed that these courses needed further enhancement to support active learning (Yilmaz et al., 2017). Another recent study used Clark and Mayer’s e-learning guidelines (Clark & Mayer, 2016) to evaluate the pedagogical design of 40 MOOCs (Oh et al., 2020). The findings indicated relatively low application of these principles in general, except for those related to the organization and presentation of content. Principles that scored particularly low included practice, worked examples, and feedback.

Different organisations have developed standard models or frameworks for checking the quality of e-learning, some specifically for MOOCs. One of these is OpenupEd (Rosewell & Jansen, 2014), initiated by the European Association of Distance Teaching Universities (EADTU). It comprises 11 course-level and 21 institutional-level benchmarks covering six areas: strategic management, curriculum design, course design, course delivery, staff support, and student support. Though OpenupEd promotes features that put the learner at the centre, its quick scan needs to be fleshed out through a more detailed self-assessment process (Jansen et al., 2017). Another quality assurance model with a similar approach is that of the European Foundation for Quality in e-Learning (EFQUEL), which operates the UNIQUe certification (Creelman et al., 2014). These models are mainly intended for certification, accreditation, benchmarking, or labelling as a frame of reference (Ossiannilsson et al., 2015).

Read and Rodrigo (2014) also reported a quality model for the MOOCs of Spain’s National Distance Education University; however, it presented only high-level guidelines on course design aspects such as topic, reuse of existing content, overall duration, and course structure. This work was based largely on MOOCs adapted from existing courses. Quality Matters (QM), though not designed specifically for MOOCs, is another quality assurance system used to evaluate online courses, including MOOCs (Shattuck, 2015). The framework consists of 47 specific criteria organised under eight general standards. In one study, none of the six courses reviewed using QM achieved the passing score of 85% (Lowenthal & Hodges, 2015). The study also noted the framework’s tendency to focus heavily on aspects of course design and not enough on instructional approaches for active engagement, communication, and collaboration. Another framework, the Quality Reference Framework (QRF), was developed by the European Alliance for the Quality of MOOCs, called MOOQ (Stracke et al., 2018). The framework consists of two quality instruments, with action items for potential activities and leading questions to assist in MOOC design and development. It is a generic framework that can be adapted to specific contexts to improve MOOC design and development and the evaluation of created MOOCs (Stracke, 2019). However, no evidence from testing the framework in the field has yet been reported.

Hence, the application of existing instructional design principles often remains limited, and most quality evaluation models and frameworks are oriented towards certification and accreditation, with high-level guidelines. A recent study that evaluated three mature quality analysis tools (the 10-principle framework, OpenupEd, and Quality Matters) called for clear and simple questions that assess specific elements of active learning pedagogies, so that accurate conclusions about MOOC quality can be drawn (Aloizou et al., 2019). According to another recent systematic review, pedagogical practices are one of the least studied aspects in evaluating MOOC effectiveness (Alturkistani et al., 2020). The goal of our research was therefore to create an evaluation framework focused on the pedagogical perspective of MOOC design, with a learner-centric approach at its core.

The MEF distinguishes itself from other MOOC evaluation measures by focusing primarily on the evaluation of learner-centric pedagogy in MOOC design. Though a few existing frameworks evaluate certain learner-centric components of online courses (Rosewell & Jansen, 2014; Shattuck, 2015; Stracke, 2019), they place greater emphasis on constructs such as learning objectives, learning activities, and assessment, with broad guidelines. While broad course guidelines can help evaluate and improve MOOC quality to some extent, they do not address specific pedagogical challenges such as poor learner engagement, interaction, collaboration, and feedback (Lowenthal & Hodges, 2015; Margaryan et al., 2015; Oh et al., 2020; Yilmaz et al., 2017). The MEF offers evaluation and guidance on incorporating learner-centric practices, which have been shown to address some of these pedagogical challenges (Shah et al., 2022). Rather than a crisp checklist of questions or high-level recommendations, the framework provides comprehensive indicators for MOOC creators or reviewers. It also enables formative evaluation of MOOC design in a structured and comprehensive manner. Formative evaluation, a term coined by Scriven (1967), is the process of reviewing courses at the pilot stage to determine their strengths and weaknesses before the programme of instruction is finalized (Tessmer, 2013). In this setting, formative evaluation through the MEF allows instructors to continuously monitor the integration of learner-centric activities during the development phase of the MOOC. It provides constant feedback and suggests ways to improve through reflective, easy-to-comprehend design indicators organised in different dimensions.

The MEF is grounded in a number of theoretical approaches. Following cognitive load theory (Paas & Sweller, 2014; Sweller et al., 2011) and the theory of multimedia learning (Mayer, 2019), the framework evaluates design elements to ensure ease in processing learning content and to reduce extraneous processing. Cognitivists hold that the learning process becomes meaningful when information is organised into structured, smaller chunks. In the context of MOOCs, chunking concepts into short videos with in-video activities makes knowledge meaningful and connects new information with prior knowledge (Shah et al., 2022). The framework evaluates such design interventions related to video content.

Based on constructivist approaches to learning (Mayer, 2019), the MEF evaluates whether MOOC content builds learner knowledge rather than encouraging passive consumption of information. Learning activities are evaluated for whether they promote active participation, in which students construct new knowledge based on their prior knowledge. Attention is given to learner diversity, individual differences, and the presence of multiple visual representations. The theory of social constructivism (Vygotsky & Cole, 1978) emphasises the importance of social interactions in constructing one’s own learning; accordingly, the framework evaluates aspects such as peer interactions, collaborative learning, and the building of a learner community. Through evaluation of immediate and constructive feedback in learning activities, assignments, and forum tasks, the framework also incorporates reflection (Bransford et al., 2000).

The framework incorporates the principle of constructive alignment where curriculum objectives, teaching-learning activities, and assessment tasks are aligned with each other (Biggs, 1999). Moreover, the evaluation criteria are also drawn from the knowledge of first principles of instruction and other research-based practices (Hew, 2018; Margaryan et al., 2015; Merrill, 2002), keeping a focus on learner-centric design.

The MEF evaluates the pedagogical design of MOOCs through a learner-centric lens, aiming at active learner participation, stronger learner connection with the course content and team, and effective collaboration. The framework focuses on xMOOCs, characterised by structured learning components such as videos, learning activities, assessment, discussion forums, and additional learning resources (Conole, 2013). Aspects outside the scope of this framework include institutional policies and the evaluation of technological platforms. The framework is also not designed to evaluate the effectiveness of MOOCs in terms of learning outcomes, learner retention, or learner experience.

The framework organises the integral learning components and pedagogical features of a MOOC into eight dimensions. As shown in Figure 1, the MEF includes five structural dimensions (D2, D3, D4, D5, and D6) and three operational dimensions (D1, D7, and D8). The structural dimensions of the framework are integral learning components for most xMOOCs, while the operational dimensions have evolved in view of the need to keep the course learner-centric in its content, practices and offering.

Figure 1

Types of Dimensions in the MEF

Each dimension consists of quality criteria related to various aspects of that dimension (Table 1). The quality of each criterion is described by a set of benchmark indicators, which define the actions that need to be fulfilled to achieve a high-quality pedagogical design. It is by means of these indicators that the framework aids in the formative evaluation of pedagogical features to bring a positive change in MOOC design.

Table 1

List of all Dimensions and Criteria in the MEF

| Dimension | Criteria |
| --- | --- |
| D1: Course structure and expectations | Course framework and content; Prerequisites for the course; Comprehending course components; Guidelines for learner interactions with content and peers; Exams and grading policy; Communication with course team |
| D2: Video content | Video content appropriateness; Video chunk length; Presence of in-video activities; Purpose of in-video activities; Positioning and time span of in-video activities; Feedback on in-video activity and its nature; Video content presentation |
| D3: Learning resources | Offering of supplementary learning resources; Addressing diverse learner needs and interests; Ensuring learner engagement with resources |
| D4: Discussion forum | Opportunities and goals of interaction activities on the forum; Design of peer interaction activities; Moderator support; Feedback on forum; Clear communication; Integration of technology tools |
| D5: Synchronous interactions | Opportunities for synchronous interactions; Purpose of synchronous interactions; Update on upcoming interaction; Effective conduct of interaction; Ease of technology for participation; Availability of interaction videos |
| D6: Assessment (formative and summative) | Presence of formative assessment activities; Frequency of assessment opportunities; Format of assessment activities; Pedagogical role of assessment activities; Feedback on assessment; Grading of assessment activities; Grading strategies |
| D7: Content alignment and integrity | Constructive alignment; Alignment of technology and pedagogy; Academic integrity |
| D8: Learner connection practices | Prompt communication; Motivating learners; Support for learner agency; Community building; Understanding learner difficulties; Learner feedback |

The MEF toolkit (https://mef22.github.io/etiitb-vs) supports MOOC evaluation. The toolkit, consisting of 44 criteria configured into eight dimensions, can be employed to perform formative evaluation of a MOOC to gain insights on its pedagogical strengths and weaknesses. An overall judgement can be made on the extent to which a benchmark indicator is achieved. Each indicator is rated on a 4-point scale (missing, inadequate, adequate, or proficient) ranging from 0 to 3, demonstrating the level of performance. Figure 2 illustrates a part of dimension 3, listing two criteria: “Offering of supplementary learning resources” and “Addressing diverse learner needs and interests,” with multiple indicators. Some indicators entail numerous items (each denoted by a hyphen). In such cases, the greater the number of items fulfilled, the closer an indicator will be to the proficient level of performance.
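As a minimal sketch, the structure in Table 1 can be encoded as data, for instance to drive a self-built scoring sheet. The `Dimension` class and the `MEF_DIMENSIONS` name are hypothetical and are not part of the MEF toolkit; the criterion names are copied from Table 1, and the structural/operational labels follow the classification of dimensions described earlier. Counting the criteria reproduces the total of 44 across eight dimensions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    code: str                  # e.g. "D2"
    name: str
    kind: str                  # "structural" or "operational"
    criteria: tuple[str, ...]  # criterion names copied from Table 1


# Hypothetical encoding of Table 1 (illustrative, not the toolkit's format).
MEF_DIMENSIONS = (
    Dimension("D1", "Course structure and expectations", "operational", (
        "Course framework and content", "Prerequisites for the course",
        "Comprehending course components",
        "Guidelines for learner interactions with content and peers",
        "Exams and grading policy", "Communication with course team")),
    Dimension("D2", "Video content", "structural", (
        "Video content appropriateness", "Video chunk length",
        "Presence of in-video activities", "Purpose of in-video activities",
        "Positioning and time span of in-video activities",
        "Feedback on in-video activity and its nature",
        "Video content presentation")),
    Dimension("D3", "Learning resources", "structural", (
        "Offering of supplementary learning resources",
        "Addressing diverse learner needs and interests",
        "Ensuring learner engagement with resources")),
    Dimension("D4", "Discussion forum", "structural", (
        "Opportunities and goals of interaction activities on the forum",
        "Design of peer interaction activities", "Moderator support",
        "Feedback on forum", "Clear communication",
        "Integration of technology tools")),
    Dimension("D5", "Synchronous interactions", "structural", (
        "Opportunities for synchronous interactions",
        "Purpose of synchronous interactions",
        "Update on upcoming interaction", "Effective conduct of interaction",
        "Ease of technology for participation",
        "Availability of interaction videos")),
    Dimension("D6", "Assessment (formative and summative)", "structural", (
        "Presence of formative assessment activities",
        "Frequency of assessment opportunities",
        "Format of assessment activities",
        "Pedagogical role of assessment activities",
        "Feedback on assessment", "Grading of assessment activities",
        "Grading strategies")),
    Dimension("D7", "Content alignment and integrity", "operational", (
        "Constructive alignment", "Alignment of technology and pedagogy",
        "Academic integrity")),
    Dimension("D8", "Learner connection practices", "operational", (
        "Prompt communication", "Motivating learners",
        "Support for learner agency", "Community building",
        "Understanding learner difficulties", "Learner feedback")),
)

if __name__ == "__main__":
    total = sum(len(d.criteria) for d in MEF_DIMENSIONS)
    print(len(MEF_DIMENSIONS), total)  # 8 44
    print([d.code for d in MEF_DIMENSIONS if d.kind == "structural"])
```

Keeping the criteria in immutable tuples means a scoring script cannot accidentally add or drop a criterion at run time.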

Figure 2

Screenshot of the MEF Toolkit Displaying a Part of Dimension 3

The MEF calculates an average score for every criterion as well as every dimension, based on the user’s selections for the corresponding indicators. Based on the average dimension score, the user receives feedback distributed over six score bands: 0-0.9 (missing or minimal); 1.0-1.4 (inadequate); 1.5-1.9 (towards adequate); 2.0-2.4 (adequate); 2.5-2.9 (towards proficient); and 3 (proficient). These score bands act as a standard for measuring the strength of learner-centric design in a particular dimension. However, the choice of such benchmarks is inevitably arbitrary, and the effects of prevalence and bias on the score must be considered when judging its implications (Sim & Wright, 2005). Hence, even though these score bands help in interpreting the obtained score, some individual judgement and the circumstances of each course should also be taken into account.
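The scoring rule described above can be sketched in Python. This is an illustrative reconstruction, not the toolkit’s code: the function names are hypothetical, the dimension score is assumed to be the mean of the criterion averages (the text does not state whether indicators or criteria are averaged at the dimension level), and the bands are treated as half-open intervals so that scores falling between the published one-decimal boundaries (e.g. 0.95) still receive feedback.

```python
from statistics import mean

# Indicator ratings on the MEF's 4-point scale (0-3), as described in the text.
RATING = {"missing": 0, "inadequate": 1, "adequate": 2, "proficient": 3}

# Feedback bands keyed by lower bound, checked from highest to lowest.
# Assumption: bands are half-open intervals, so 0.95 -> "missing or minimal"
# and 2.45 -> "adequate"; the published bands list one-decimal limits only.
BANDS = (
    (3.0, "proficient"),
    (2.5, "towards proficient"),
    (2.0, "adequate"),
    (1.5, "towards adequate"),
    (1.0, "inadequate"),
    (0.0, "missing or minimal"),
)


def criterion_score(ratings: list[str]) -> float:
    """Average of the indicator ratings selected for one criterion."""
    return mean(RATING[r] for r in ratings)


def dimension_score(criteria: dict[str, list[str]]) -> float:
    """Assumption: a dimension score is the mean of its criterion averages."""
    return mean(criterion_score(r) for r in criteria.values())


def feedback_band(score: float) -> str:
    """Map an average dimension score (0-3) to its feedback band."""
    if not 0.0 <= score <= 3.0:
        raise ValueError("score must lie between 0 and 3")
    for lower, label in BANDS:
        if score >= lower:
            return label
    raise AssertionError("unreachable: 0.0 is the last lower bound")


if __name__ == "__main__":
    # Hypothetical selections for the three criteria of dimension 3.
    d3 = {
        "Offering of supplementary learning resources": ["adequate", "proficient"],
        "Addressing diverse learner needs and interests": ["inadequate", "adequate", "adequate"],
        "Ensuring learner engagement with resources": ["proficient"],
    }
    score = dimension_score(d3)
    print(round(score, 2), feedback_band(score))  # 2.39 adequate
```

Because the bands are fixed cut-offs, changing a single indicator rating can move a dimension across a boundary, which is consistent with the advice above to combine the band with individual judgement.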

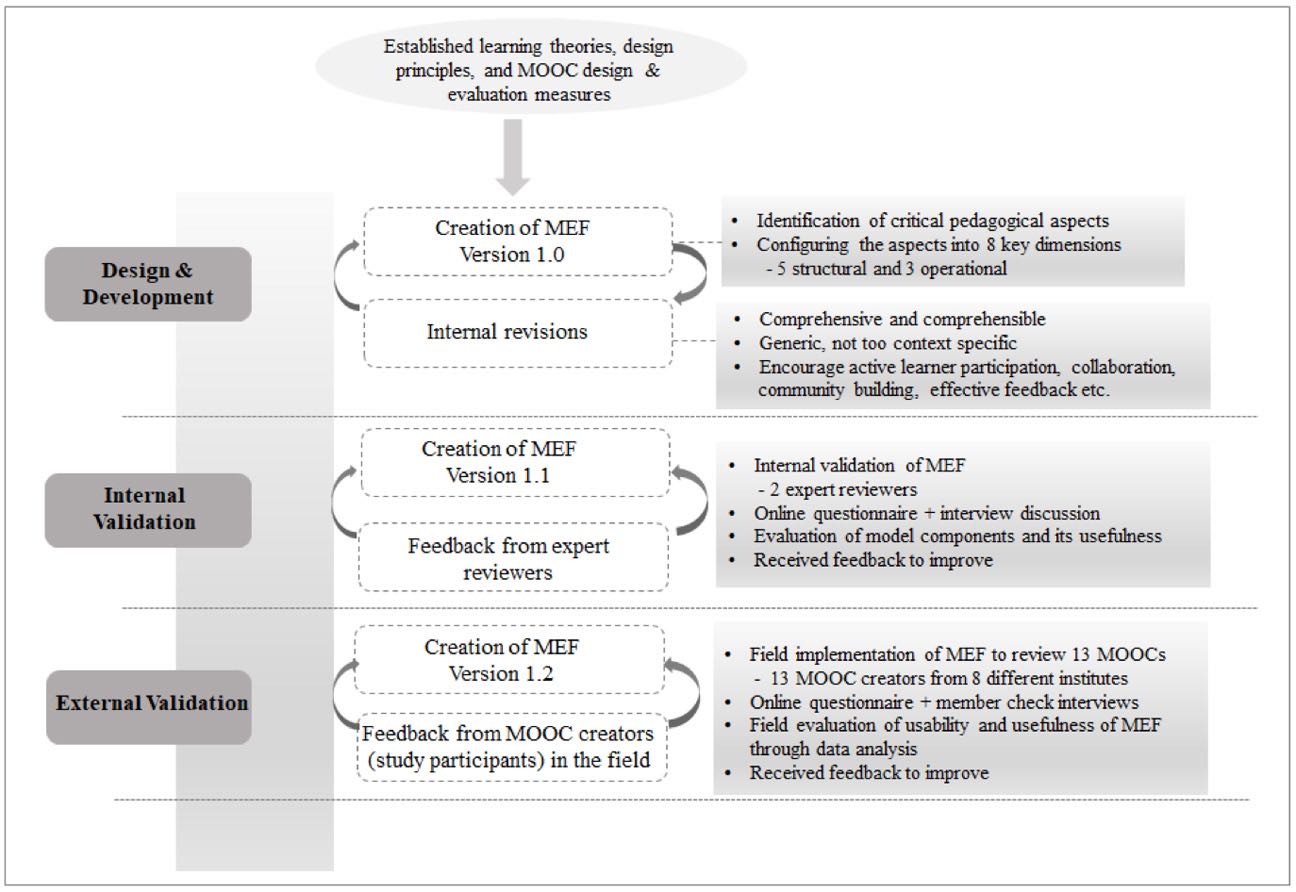

A schematic outline of the steps involved in developing and validating the framework is shown in Figure 3. A total of 15 MOOC creators, who are responsible for the overall vision, content creation, design, and orchestration of their courses, participated in this study. Of these, two experts conducted the internal validation of the framework. These reviewers had expertise not only in instructional design but also in model development and learner-centric pedagogy. Thirteen MOOC creators participated in the external validation of the framework. All participants were provided with the MEF toolkit along with detailed guidelines for performing their respective MOOC evaluations. The usability and usefulness of the framework were evaluated through mixed-methods research using quantitative and qualitative analyses, drawing on the strengths of both approaches. A convergent design was used: quantitative and qualitative data were collected at around the same time and then analysed together.

Figure 3

Schematic Outline of the Steps in Development and Validation of the MEF

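Internal validation studies were performed to evaluate the components and processes of MEF creation, whereas the external validation study investigated three research questions: (1) how usable and useful MOOC creators find the MEF; (2) what new learning MOOC creators gain from the MEF, and how they perceive making it available for formative evaluation; and (3) how MOOC creators relate the scores obtained with the MEF to their efforts to integrate a learner-centric approach in their MOOCs.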

An internal validation study was conducted through expert review using a questionnaire followed by an interview. The questionnaire included questions (10 multiple-choice and nine open-ended) about the model’s components and use. These questions were designed to address factors pertinent to internal validation (Richey, 2006). In addition, some questions were derived from an instrument created for validating the model theorization process (Lee et al., 2016). Together, the questions addressed six items: synthesis of the literature for creating the framework, use of appropriate terminology, comprehensibility, comprehensiveness, validity, and usefulness. Interviews were conducted to gain a thorough understanding of the experts’ suggestions on certain aspects of the framework.

Based on recommendations in the literature (Richey, 2006), the external validation study examined the usability and usefulness of the framework through questions addressing aspects such as: Do MOOC creators find the MEF useful in meeting their MOOC evaluation needs? Why should the MEF be made available (or not) to MOOC creators for formative evaluation? The questionnaire comprised 13 multiple-choice and eight open-ended questions. Ten multiple-choice questions, adapted with slight modification from the original System Usability Scale (SUS) (Bangor et al., 2008), focused mainly on the usability of the framework. The remaining questions addressed the strong and weak points of the MEF and asked for suggestions for improvement, enabling a detailed evaluation of its effectiveness.

For the internal validation study, the experts’ quantitative ratings were analysed for the content validity index (CVI) and inter-rater agreement (IRA) following the methodology of Rubio et al. (2003). For the external validation study, the SUS score was calculated to determine the usability of the framework (Brooke, 1996). Quantitative questionnaire responses on the usefulness of the framework were examined through frequency analysis of the Likert-scale responses, yielding percentages.
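For reference, the standard SUS scoring procedure (Brooke, 1996) converts each respondent’s ten ratings on a 1-5 scale into a 0-100 score, and a group usability figure such as the one reported here is a mean of those scores. The sketch below assumes that the slightly modified items kept the standard alternation of positively worded (odd-numbered) and negatively worded (even-numbered) statements; the function name and respondents are hypothetical.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Standard SUS score (Brooke, 1996) for one respondent.

    `responses` holds the ten item ratings in questionnaire order, each on
    a 1-5 scale (1 = strongly disagree, 5 = strongly agree).
    """
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("expected ten ratings between 1 and 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        if item % 2 == 1:
            total += rating - 1  # odd items: positively worded
        else:
            total += 5 - rating  # even items: negatively worded
    return total * 2.5  # rescale the 0-40 sum to 0-100


if __name__ == "__main__":
    # Hypothetical respondents (not study data).
    group = [
        [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],  # each item contributes 3 -> 75.0
        [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],  # each item contributes 4 -> 100.0
        [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],  # each item contributes 2 -> 50.0
    ]
    scores = [sus_score(r) for r in group]
    print(scores, mean(scores))  # [75.0, 100.0, 50.0] 75.0
```

Reversing the even-numbered items before summing is what makes a high SUS score mean "usable" regardless of how each statement was worded.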

The qualitative data from the interviews with the internal validation experts were used as feedback to revise the framework. For the external validation study, the qualitative data provided a detailed description of factors affecting the ease and complexity of use and the degree of usefulness of the framework. After the questionnaire was completed, member checks were conducted in the form of interviews to discuss certain remarks in more depth. Inductive thematic analysis (Braun & Clarke, 2006) of the responses was performed to understand and classify participants’ perceptions of the usability and usefulness of the MEF in evaluating the integration of learner-centric pedagogy in MOOC design.

Two learner-centric pedagogy experts reviewed the MEF on six items in order to validate the model components and assess its usefulness. The mean scores for the different components ranged from 4.5 to 5, and both the CVI and the IRA were 1 for all six items. Because the CVI and IRA exceeded 0.8, this suggests strong content validity and reliability (Rubio et al., 2003). In addition, data from the open-ended questions and the expert interviews were used to revise the framework, strengthening it further and resulting in Version 1.1.
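A minimal sketch of how CVI and IRA can be computed from a matrix of expert ratings follows. It uses one common formulation, assumed here rather than taken from the study: each rating is dichotomized as endorsing the item or not, the item CVI is the share of experts endorsing the item, the scale CVI is the mean of the item CVIs, and IRA is the share of items on which all experts fall on the same side. Because the reported means (4.5 to 5) suggest a 5-point scale, the endorsement threshold of 4 is an assumption, and the ratings are hypothetical.

```python
# Assumptions: ratings on a 1-5 scale; a rating >= threshold endorses the item.


def endorses(rating: int, threshold: int = 4) -> bool:
    return rating >= threshold


def content_validity_index(ratings: list[list[int]], threshold: int = 4) -> float:
    """Scale CVI: mean over items of the share of experts endorsing each item.

    ratings[e][i] is expert e's rating of item i.
    """
    items = list(zip(*ratings))  # regroup ratings by item
    item_cvis = [sum(endorses(r, threshold) for r in col) / len(col) for col in items]
    return sum(item_cvis) / len(item_cvis)


def inter_rater_agreement(ratings: list[list[int]], threshold: int = 4) -> float:
    """Share of items on which all experts agree after dichotomizing."""
    items = list(zip(*ratings))
    agreed = sum(len({endorses(r, threshold) for r in col}) == 1 for col in items)
    return agreed / len(items)


if __name__ == "__main__":
    # Two experts x six items; hypothetical ratings, not the study's data.
    ratings = [
        [5, 5, 4, 5, 5, 5],
        [4, 5, 5, 5, 5, 4],
    ]
    print(content_validity_index(ratings), inter_rater_agreement(ratings))  # 1.0 1.0
```

With only two experts, a single disagreement on one of six items would drop the IRA from 1 to 5/6 (about 0.83), so values near the 0.8 cut-off are sensitive to individual ratings.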

Of the whole group (N = 13), 10 participants were faculty members and three were final-year PhD students who had been active participants in MOOC creation and offering (Table 2). All participants had created one or more MOOCs and belonged to eight different recognised educational institutes in India. The 13 MOOCs evaluated in this research using the MEF, spanning six disciplines, had been created by the participants at their respective institutions. All participants evaluated their MOOCs on all eight dimensions of the framework.

Table 2

Details of the External Validation Study Conducted on MOOC Creators

| Study feature | Details |
| --- | --- |
| Participant profile | N = 13; Number of faculty = 10; Number of final year PhD scholars = 3; Number of institutes involved = 8 |
| Evaluated MOOC disciplines | N = 6: Computer science, instructional design, chemistry, management, analytics, math |
| Employment of MEF dimensions | All participants used all 8 dimensions for their MOOC evaluation |

We addressed our first research question through quantitative analysis of the usability and usefulness of the MEF for MOOC creators. The mean SUS score across all participants was 78.46. Hence, based on the classification of mean SUS scores (Bangor et al., 2008), MOOC creators rated the MEF as “good” in terms of usability. A high level of agreement was observed in the perceived usefulness of each dimension, ranging from 84% to 100% (average 97%). MOOC creators also showed a strong positive perception of the use of the MEF in: (a) evaluating the integration of learner-centric approaches in MOOCs (84%); (b) serving as a formative evaluation tool (92%); and (c) bringing a positive change in the pedagogical design of MOOCs (92%). These results, shown in Figure 4, were based on participants’ experience with the framework while evaluating their own MOOCs.
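The frequency analysis behind usefulness percentages of this kind can be sketched as follows. The sketch assumes a 5-point Likert scale on which ratings of 4 (agree) and 5 (strongly agree) count as agreement; the function name, the scale points, and the ratings are hypothetical, since the questionnaire’s exact response options are not reproduced here.

```python
from collections import Counter


def agreement_percentage(responses: list[int], positive: tuple[int, ...] = (4, 5)) -> float:
    """Percentage of respondents whose Likert rating falls in `positive`."""
    if not responses:
        raise ValueError("no responses")
    counts = Counter(responses)  # frequency of each scale point
    agreeing = sum(counts[level] for level in positive)
    return 100 * agreeing / len(responses)


if __name__ == "__main__":
    # Hypothetical ratings from 13 respondents for one questionnaire item.
    item = [5, 5, 5, 5, 5, 5, 5, 4, 4, 4, 4, 4, 3]
    print(sorted(Counter(item).items()))         # [(3, 1), (4, 5), (5, 7)]
    print(round(agreement_percentage(item), 1))  # 92.3
```

With 13 respondents, each response shifts a percentage by about 7.7 points (100/13), so the attainable values form a coarse grid.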

Additionally, open-ended questions on MEF components that were missing or difficult to comprehend yielded insightful feedback. The participants’ responses were analysed and categorised into two groups: (a) useful suggestions, which helped improve clarity and resulted in Version 1.2 of the MEF; and (b) limitations of the framework, which are acknowledged in the discussion section.

Figure 4

Perception Results on the A) Overall Usefulness of the MEF and B) Usefulness of its Individual Dimensions

To answer our second research question, participants were asked to provide (a) examples of new learning from the MEF and (b) their perception of making the framework available to MOOC creators for formative evaluation. Notably, different participants (n = 12) acknowledged different aspects from various dimensions as new learning. Content analysis of these responses showed that new learning came from all dimensions except dimension 1, which many may consider fundamental (Figure 5). Some learning aspects were emphasised by several participants. The most frequently cited aspect was the pedagogical design of video content and the associated in-video activities.

Figure 5

Visual Distribution of New Learning Aspects, as Identified by the Study Participants, From Different Dimensions of the MEF

Participants (n = 12) explained their rationale for providing the MEF to MOOC creators for formative evaluation of their courses. Inductive thematic analysis generated two themes describing the potential use of the MEF in the field: (a) as a comprehensive guide for MOOC creators, and (b) for prompting reflection and improving the MOOC experience (Table 3). Participants recognised the framework as a comprehensive guide that provides effective pedagogical direction for planning, creating, and evaluating MOOCs. They also perceived that the framework prompts reflection on various aspects of course design and learner connection, thus improving the MOOC experience for both instructors and students.

Table 3

Illustrative Examples for the Two Themes Identified From Learners’ Perceptions on the Potential Use of the MEF in the Field

| Themes | Excerpts from learners’ perceptions |
| --- | --- |
| Comprehensive guide for MOOC creators | “The framework will give an idea of what a course instructor needs to consider when preparing for a MOOC. I would say even when the instructor has begun the preparation, he/she can revisit the framework to keep a careful check on the different criteria.” “Yes. It is a comprehensive list, and it will help me at all stages of MOOCs development like planning, production, post production, delivering, and managing the course.” |
| Bringing reflections and improving MOOC experience | “I looked at my course design in retrospect and realised inadequacies of some components that would have made the MOOC better.” “It will help improve the experience of both instructors and students if used before offering the course.” |

Participants’ Reflections on Their MOOC Scores Obtained Using the MEF

Employing the MEF, participants obtained dimension-based scores for their respective courses. To answer our third research question, participants were asked a focused question: “Can you provide one example from your MOOC scores to reflect on your experience with the MEF in evaluating integration of a learner-centric approach?” Participants (n = 12) provided examples, expressing ways in which they could reflect on the differences in scores obtained for different dimensions and how that related to their corresponding design efforts (Table 4).

Table 4

Illustrative Examples of Reflections From MOOC Creators on Their Scores Obtained From the MEF and its Correlation to Their Corresponding Design Efforts

| Evaluated MOOC discipline | Excerpts from learners’ reflections |
| --- | --- |
| Computer science | “We spent a lot of time thinking about the videos, and how to structure content. So we scored well in dimension 2. Towards the end, we did not have the bandwidth to think deeply about discussion forums, learner connection, and formative assessments. These dimensions received a lower score in MEF.” |
| Learning analytics | “For videos, we used the learner-centric MOOC model to develop the content, so we got better scores in dimension 2. However, we didn’t focus on dimension 8, i.e., learner connection practices during our course offering which got a low score.” |
| Instructional design | “In my MOOC, ‘video content’ dimension scored highest (3) and learner connection practices scored 2.1. This was because most indicators under video content dimension were adequately addressed in my MOOC, but with respect to learner connection dimension, indicators related to community building were either missing or inadequately addressed.” |

As observed in Table 4, MOOC creators agreed that the scores their MOOCs obtained using the MEF aligned with the effort they had put into pedagogy design. Dimensions in which they had included more learner-centric interventions scored higher than dimensions that were less learner-centric or not learner-centric at all. This suggests that the framework is effective in evaluating the integration of learner-centric pedagogy in MOOCs.
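Table 4 quotes dimension scores such as 3 for video content and 2.1 for learner connection, but this section does not spell out how the MEF aggregates indicator ratings into a dimension score. The Python sketch below assumes, hypothetically, that each indicator is rated on a 0-3 scale and that a dimension score is the plain mean of its indicator ratings; it then flags dimensions below an arbitrary revision threshold, lowest first, as candidates for the next design iteration. The ratings, the `dimension_scores` and `weakest_dimensions` helpers, and the 2.5 threshold are illustrative assumptions, chosen only so that the means reproduce the two scores quoted in Table 4.

```python
from statistics import mean

# Hypothetical indicator ratings on an assumed 0-3 scale for two MEF dimensions.
# Illustrative values only; they are not data from the study.
ratings = {
    "video content": [3, 3, 3, 3],
    "learner connection": [3, 2, 2, 1, 3, 2, 2, 2, 2, 2],
}


def dimension_scores(ratings_by_dimension):
    """Assumed aggregation: mean indicator rating per dimension, one decimal place."""
    return {
        dim: round(mean(values), 1)
        for dim, values in ratings_by_dimension.items()
    }


def weakest_dimensions(scores, threshold=2.5):
    """Dimensions scoring below a hypothetical revision threshold, lowest first."""
    return sorted((d for d, s in scores.items() if s < threshold), key=scores.get)


scores = dimension_scores(ratings)
print(scores)
print(weakest_dimensions(scores))
```

Under these assumptions the sketch prints `{'video content': 3, 'learner connection': 2.1}` and flags only learner connection, mirroring the pattern participants described: the least learner-centric dimension surfaces first for revision.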

Systematic evaluation of pedagogical practices is one way of bringing about positive change in MOOC design (Jansen et al., 2017). Several existing quality evaluation approaches provide a useful overview and guide for e-learning design (Ossiannilsson et al., 2015; Rosewell & Jansen, 2014; Shattuck, 2015). The evaluation framework proposed in this study complements these methods by assessing the incorporation of learner-centric pedagogy in MOOCs.

The MEF focuses on formative evaluation of the pedagogical quality of all critical input elements (Jansen et al., 2017) of an xMOOC. Its detailed indicators go beyond superficial checks against prescribed criteria and enable the identification of strengths and weaknesses in different dimensions of a MOOC. Hence, the MEF not only supports quality evaluation but also provides reflective indicators for quality enhancement. Recent literature (Aloizou et al., 2019) has called for evaluation methods of this kind, which can facilitate higher pedagogical quality in MOOCs.

We examined the usability and usefulness of the MEF from the perspective of MOOC creators in the field. Quantitative results showed good usability of the MEF for MOOC creators (N = 13), with an average SUS score of 78.46. The quantitative analysis also showed a high level of agreement on the usefulness of all dimensions of the MEF and of the framework’s use (a) in evaluating the integration of learner-centric approaches in MOOCs, (b) as a formative evaluation tool, and (c) in bringing about positive change in the pedagogy design of MOOCs.
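The usability figure above is a System Usability Scale score. Under Brooke’s (1996) standard scoring, each of the ten items is answered on a 1-5 scale; odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the summed contributions (0-40) are multiplied by 2.5 to give a score from 0 to 100. A sample’s reported score is the mean of the individual scores. The Python sketch below implements that scoring; the respondent data are hypothetical, not the study’s, and mapping the mean onto an adjective label such as “good” is outside its scope.

```python
from statistics import mean


def sus_score(responses):
    """Score one completed SUS questionnaire (standard scoring, Brooke, 1996).

    responses: ten answers on a 1-5 scale, in questionnaire order (item 1 first).
    Odd-numbered items are positively worded: contribution = response - 1.
    Even-numbered items are negatively worded: contribution = 5 - response.
    The summed contributions (0-40) are scaled by 2.5 to a 0-100 score.
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly ten items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1
        else:
            total += 5 - response
    return total * 2.5


def mean_sus(all_responses):
    """Average SUS score across respondents, as reported for a study sample."""
    return mean(sus_score(r) for r in all_responses)


# Hypothetical respondents (not the study's data).
sample = [
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],  # most favourable answers
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],  # neutral throughout
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],  # mildly favourable
]
print([sus_score(r) for r in sample])
print(round(mean_sus(sample), 2))
```

Because every individual SUS score is a multiple of 2.5, a mean of 78.46 over 13 respondents implies a total of 1020 points (1020 / 13 ≈ 78.46), which is a handy sanity check when reproducing a reported average.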

Participants described what they learned from the framework and its potential use in the field for formative evaluation of MOOCs. Notably, participants acknowledged new learning from different dimensions of the framework. Given the scale and diversity of MOOC creators, this suggests that each dimension is potentially useful. New learning about the pedagogical design of video content and associated in-video activities was emphasised most often. This is not surprising considering the central role of video content in MOOCs and existing literature on the challenge of low learner engagement with MOOC videos (Geri et al., 2017).

Participants expressed a positive perception of the framework’s potential use as a comprehensive guide, prompting reflection and improving the experience of instructors and students. The promising uses of the MEF that emerged in this analysis align with literature recommendations for new frameworks whose quality indicators clearly assess specific elements of active learning pedagogies, with an emphasis on reflection (Aloizou et al., 2019; Jansen et al., 2017). Regarding the scores obtained using the MEF, participants agreed that their scores correctly reflected the efforts taken to integrate learner-centric strategies in the evaluated dimensions. This suggests that the MEF is effective in evaluating the learner-centric pedagogy design of MOOCs across varied disciplines.

In examining learner-centric pedagogy, the MEF attempts to assess the opportunities provided for learner engagement in MOOCs (Deng et al., 2020). Opportunities for emotional engagement are assessed by evaluating the presence of learner interactions in videos and of constructive feedback mechanisms. Opportunities for cognitive engagement are assessed by evaluating the design of learning resources and assessment activities at varied cognitive levels. Opportunities for social engagement are assessed by evaluating the presence of collaborative activities and peer interactions.
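The paragraph above maps each type of engagement to the evidence the MEF looks for, which can be pictured as a small lookup structure. The Python sketch below encodes that mapping with hypothetical evidence labels paraphrased from the paragraph and reports which engagement types a course design shows no evidence for. The names `ENGAGEMENT_EVIDENCE`, `engagement_coverage`, and `missing_engagement` are illustrative, and a present/absent check is a deliberate simplification; it is not the MEF’s indicator-rating procedure.

```python
# Hypothetical evidence labels per engagement type, paraphrased from the text.
# A simplification for illustration, not the MEF's actual indicator set.
ENGAGEMENT_EVIDENCE = {
    "emotional": {"in-video learner interactions", "constructive feedback"},
    "cognitive": {
        "resources at varied cognitive levels",
        "assessments at varied cognitive levels",
    },
    "social": {"collaborative activities", "peer interactions"},
}


def engagement_coverage(course_features):
    """For each engagement type, list the expected evidence the course provides."""
    present = set(course_features)
    return {
        kind: sorted(evidence & present)
        for kind, evidence in ENGAGEMENT_EVIDENCE.items()
    }


def missing_engagement(course_features):
    """Engagement types for which the course shows no evidence at all."""
    return [
        kind
        for kind, found in engagement_coverage(course_features).items()
        if not found
    ]


# Hypothetical course offering in-video interactions and varied-level assessments only.
course = {"in-video learner interactions", "assessments at varied cognitive levels"}
print(missing_engagement(course))
```

For the hypothetical course above, the sketch reports `['social']`, pointing the creator towards collaborative activities and peer interaction as the gap to address.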

In terms of limitations, first, the framework does not specifically address learners with disabilities in its design, although dimensions 3 and 8 include some provisions for diverse learner needs. Second, large-scale MOOC enrolment may complicate the evaluation of a few indicators related to collaboration-based activities. Third, two MOOC creators pointed out that evaluating all dimensions at once using the MEF toolkit is time-consuming. Although reflection may take longer during a first encounter with the MEF, it is a comprehensive tool that can then be used for structured and straightforward formative evaluation of future MOOC offerings.

In terms of generalizability, the MEF is not restricted to MOOCs; it may also be used to evaluate the pedagogy of other online or blended courses that follow a similar course structure. However, the current study was limited to a small sample in a local context and did not evaluate the use of the MEF with a large and diverse population of MOOC creators or MOOC providers. To address this, following this initial validation study, the framework will be re-examined by diverse users during a large-scale field implementation to establish its generalizability. Given a recent field study (Kizilcec et al., 2020) that emphasised the context-dependent effects of interventions in MOOCs, it will be valuable to examine the influence of the MEF in different contexts. Future studies will also investigate the impact of the MEF on the effectiveness of MOOCs and the learning experience they offer.

This study presents the MOOC Evaluation Framework (MEF), which evaluates the integration of learner-centric pedagogy in MOOC design. The framework enables MOOC creators to formatively evaluate their pedagogy so as to promote active learner participation and enhance learners’ engagement with content, the course team, and peers. Thirteen MOOC creators from eight educational institutes used the MEF to evaluate their courses in six disciplines. The framework was found useful as a formative evaluation tool for assessing the integration of learner-centric approaches and for bringing about positive change in the pedagogy design of MOOCs. The benefits of the framework expressed by MOOC creators aligned with literature recommendations for new MOOC pedagogy evaluation measures, that is, to assess specific elements of active learning pedagogy, detect weaknesses in course elements, and acquire important learning for designing or redesigning a MOOC. The MEF appears to be an important step forward for MOOC creators and MOOC providers seeking to ensure a learner-centric approach in pedagogy design, with the broader goals of facilitating active learner participation, enhancing learner engagement, and lowering attrition rates in MOOCs.

We would like to thank Next Education Research Lab for financial support of the project. We are thankful to Mr. Danish Shaikh for the design of the web-based application of the MEF. We would also like to thank Prof. Sameer Sahasrabudhe and Dr. Jayakrishnan Warriem for their support as experts in the internal validation study. We would like to express our gratitude to all faculty and research scholar participants from different institutes who participated in the external validation study. Special thanks to Dr. Jayakrishnan Warriem for helping us approach many of these participants. We would also like to thank Mr. Jatin Ambasana for insightful discussions on the literature related to existing MOOC evaluation approaches. We are also thankful to Prof. Ramkumar Rajendran, Mr. David John, and Mr. Herold PC for their advice on various aspects of the web-based design of the MEF toolkit.

Aloizou, V., Villagrá Sobrino, S. L., Martínez Monés, A., Asensio-Pérez, J. I., & García-Sastre, S. (2019). Quality assurance methods assessing instructional design in MOOCs that implement active learning pedagogies: An evaluative case study. In M. Calise, C. Delgado Kloos, C. Mongenet, J. Reich, J. A. Ruipérez-Valiente, G. Shimshon, T. Staubitz, & M. Wirsing (Eds.), EMOOCs 2019 work in progress papers of research, experience and business tracks (pp. 14-19). Sun SITE CEUR. http://ceur-ws.org/Vol-2356/research_short3.pdf

Alturkistani, A., Lam, C., Foley, K., Stenfors, T., Blum, E. R., Van Velthoven, M. H., & Meinert, E. (2020). Massive open online course evaluation methods: Systematic review. Journal of Medical Internet Research, 22(4), Article e13851. https://doi.org/10.2196/13851

Bangor, A., Kortum, P. T., & Miller, J. T. (2008). An empirical evaluation of the system usability scale. International Journal of Human-Computer Interaction, 24(6), 574-594. https://doi.org/10.1080/10447310802205776

Biggs, J. (1999). What the student does: Teaching for enhanced learning. Higher Education Research & Development, 18(1), 57-75. https://doi.org/10.1080/0729436990180105

Bransford, J. D., Brown, A. L., & Cocking, R. R. (Eds.). (2000). How people learn: Brain, mind, experience, and school. National Academy Press. http://www.csun.edu/~SB4310/How%20People%20Learn.pdf

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77-101. https://doi.org/10.1191/1478088706qp063oa

Brooke, J. (1996). SUS: A “quick and dirty” usability scale. In P. W. Jordan, B. Thomas, I. L. McClelland, & B. Weerdmeester (Eds.), Usability evaluation in industry (chapter 21). Taylor & Francis. https://www.taylorfrancis.com/chapters/edit/10.1201/9781498710411-35/sus-quick-dirty-usability-scale-john-brooke

Burd, E. L., Smith, S. P., & Reisman, S. (2015). Exploring business models for MOOCs in higher education. Innovative Higher Education, 40(1), 37-49. https://doi.org/10.1007/s10755-014-9297-0

Chickering, A. W., & Gamson, Z. F. (1987, March). Seven principles for good practice in undergraduate education. AAHE Bulletin, 3-7. https://eric.ed.gov/?id=ed282491

Chukwuemeka, E. J., Yoila, A. O., & Iscioglu, E. (2015). Instructional design quality: An evaluation of Open Education Europa Networks’ open courses using the first principles of instruction. International Journal of Science and Research, 4(11), 878-884. https://www.ijsr.net/get_abstract.php?paper_id=NOV151323

Clark, R. C., & Mayer, R. E. (Eds.). (2016). E-learning and the science of instruction: Proven guidelines for consumers and designers of multimedia learning. John Wiley & Sons. https://doi.org/10.1002/9781119239086

Conole, G. G. (2013). MOOCs as disruptive technologies: Strategies for enhancing the learner experience and quality of MOOCs. Revista de Educación a Distancia (RED), 39. https://revistas.um.es/red/article/view/234221

Creelman, A., Ehlers, U., & Ossiannilsson, E. (2014). Perspectives on MOOC quality: An account of the EFQUEL MOOC Quality Project. INNOQUAL-International Journal for Innovation and Quality in Learning, 2(3), 78-87. https://www.lunduniversity.lu.se/lup/publication/225285a4-84b4-49d4-9f64-59ec16b15a83

Deng, R., Benckendorff, P., & Gannaway, D. (2020). Learner engagement in MOOCs: Scale development and validation. British Journal of Educational Technology, 51(1), 245-262. https://doi.org/10.1111/bjet.12810

Egloffstein, M., & Ifenthaler, D. (2017). Employee perspectives on MOOCs for workplace learning. TechTrends, 61(1), 65-70. https://doi.org/10.1007/s11528-016-0127-3

Fidalgo-Blanco, Á., Sein-Echaluce, M. L., & García-Peñalvo, F. J. (2015). Methodological approach and technological framework to break the current limitations of the MOOC model. Journal of Universal Computer Science, 21(5), 712-734. https://repositorio.grial.eu/bitstream/grial/426/1/jucs_21_05_0712_0734_blanco.pdf

Geri, N., Winer, A., & Zaks, B. (2017). Challenging the six-minute myth of online video lectures: Can interactivity expand the attention span of learners? Online Journal of Applied Knowledge Management (OJAKM), 5(1), 101-111. https://doi.org/10.36965/OJAKM.2017.5(1)101-111

Hew, K. F. (2018). Unpacking the strategies of ten highly rated MOOCs: Implications for engaging students in large online courses. Teachers College Record, 120(1), 1-40. https://doi.org/10.1177/016146811812000107

Jansen, D., Rosewell, J., & Kear, K. (2017). Quality frameworks for MOOCs. In M. Jemni, Kinshuk, & M. Khribi (Eds.), Open education: From OERs to MOOCs (pp. 261-281). Springer. https://doi.org/10.1007/978-3-662-52925-6_14

Kizilcec, R. F., Reich, J., Yeomans, M., Dann, C., Brunskill, E., Lopez, G., Turkay, S., Williams, J. J., & Tingley, D. (2020). Scaling up behavioral science interventions in online education. Proceedings of the National Academy of Sciences, 117(26), 14900-14905. https://doi.org/10.1073/pnas.1921417117

Konrad, A. (2017, December 20). Coursera fights to keep the promise of MOOCs alive with corporate customer push. Forbes. https://www.forbes.com/sites/alexkonrad/2017/12/20/coursera-goes-corporate-to-keep-alive-promise-of-moocs/?sh=1c5e03b2543c

Lee, G., Keum, S., Kim, M., Choi, Y., & Rha, I. (2016). A study on the development of a MOOC design model. Educational Technology International, 17(1), 1-37. https://koreascience.kr/article/JAKO201612359835226.pdf

Lowenthal, P. R., & Hodges, C. B. (2015). In search of quality: Using quality matters to analyze the quality of massive, open, online courses (MOOCs). The International Review of Research in Open and Distributed Learning, 16(5), 83-101. https://doi.org/10.19173/irrodl.v16i5.2348

Margaryan, A., Bianco, M., & Littlejohn, A. (2015). Instructional quality of massive open online courses (MOOCs). Computers & Education, 80, 77-83. https://doi.org/10.1016/j.compedu.2014.08.005

Mayer, R. E. (2019). Thirty years of research on online learning. Applied Cognitive Psychology, 33(2), 152-159. https://doi.org/10.1002/acp.3482

Merrill, M. D. (2002). First principles of instruction. Educational Technology Research and Development, 50(3), 43-59. https://doi.org/10.1007/BF02505024

Oh, E. G., Chang, Y., & Park, S. W. (2020). Design review of MOOCs: Application of e-learning design principles. Journal of Computing in Higher Education, 32(3), 455-475. https://doi.org/10.1007/s12528-019-09243-w

Ossiannilsson, E., Williams, K., Camilleri, A. F., & Brown, M. (2015). Quality models in online and open education around the globe: State of the art and recommendations. International Council for Open and Distance Education. https://doi.org/10.25656/01:10879

Paas, F., & Sweller, J. (2014). Implications of cognitive load theory for multimedia learning. In R. E. Mayer (Ed.), The Cambridge handbook of multimedia learning (2nd ed., pp. 27-42). Cambridge University Press. https://doi.org/10.1017/CBO9781139547369.004

Pilli, O., & Admiraal, W. F. (2017). Students’ learning outcomes in Massive Open Online Courses (MOOCs): Some suggestions for course design. Journal of Higher Education, 7(1), 46-71. https://scholarlypublications.universiteitleiden.nl/access/item%3A2903812/view

Read, T., & Rodrigo, C. (2014). Towards a quality model for UNED MOOCs. Proceedings of the European MOOC Stakeholder Summit, 282-287. https://www.oerknowledgecloud.org/record686

Richey, R. C. (2006). Validating instructional design and development models. In J. M. Spector, C. Ohrazda, A. Van Schaack, & D. A. Wiley (Eds.), Innovations in instructional technology (pp. 171-185). Routledge. https://doi.org/10.4324/9781410613684

Rosewell, J., & Jansen, D. (2014). The OpenupEd quality label: Benchmarks for MOOCs. INNOQUAL: The International Journal for Innovation and Quality in Learning, 2(3), 88-100. http://oro.open.ac.uk/41173/

Rubio, D. M., Berg-Weger, M., Tebb, S. S., Lee, E. S., & Rauch, S. (2003). Objectifying content validity: Conducting a content validity study in social work research. Social Work Research, 27(2), 94-104. https://doi.org/10.1093/swr/27.2.94

Scriven, M. (1967). The methodology of evaluation. In R. W. Tyler, R. M. Gagne, & M. Scriven (Eds.), Perspectives of curriculum evaluation (pp. 39-83). Rand McNally. https://www.scirp.org/(S(czeh2tfqw2orz553k1w0r45))/reference/referencespapers.aspx?referenceid=3081112

Shah, V., Murthy, S., Warriem, J., Sahasrabudhe, S., Banerjee, G., & Iyer, S. (2022). Learner-centric MOOC model: A pedagogical design model towards active learner participation and higher completion rates. Educational Technology Research and Development, 70(1), 263-288. https://link.springer.com/article/10.1007/s11423-022-10081-4

Shattuck, K. (2015). Research inputs and outputs of Quality Matters: Update to 2012 and 2014 versions of What We’re Learning from QM-Focused Research. Quality Matters. https://ascnet.osu.edu/storage/meeting_documents/1384/FINAL-9_18_15%20update-QM%20Research.pdf

Sim, J., & Wright, C. C. (2005). The kappa statistic in reliability studies: Use, interpretation, and sample size requirements. Physical Therapy, 85(3), 257-268. https://doi.org/10.1093/ptj/85.3.257

Stracke, C. M. (2019). Quality frameworks and learning design for open education. The International Review of Research in Open and Distributed Learning, 20(2). https://doi.org/10.19173/irrodl.v20i2.4213

Stracke, C. M., Tan, E., Teixeira, A. M., do Carmo Pinto, M., Vassiliadis, B., Kameas, A., Sgouropoulou, C., & Vidal, G. (2018). Quality reference framework (QRF) for the quality of massive open online courses (MOOCs): Developed by MOOQ in close collaboration with all interested parties worldwide. MOOQ. https://research.ou.nl/en/publications/quality-reference-framework-qrf-for-the-quality-of-massive-open-o

Stracke, C. M., & Trisolini, G. (2021). A systematic literature review on the quality of MOOCs. Sustainability, 13(11), Article 5817. https://doi.org/10.3390/su13115817

Sweller, J., Ayres, P., & Kalyuga, S. (2011). Measuring cognitive load. In Cognitive load theory (pp. 71-85). Springer. https://link.springer.com/chapter/10.1007/978-1-4419-8126-4_6

Tessmer, M. (1993). Planning and conducting formative evaluations. Routledge. https://doi.org/10.4324/9780203061978

Toven-Lindsey, B., Rhoads, R. A., & Lozano, J. B. (2015). Virtually unlimited classrooms: Pedagogical practices in massive open online courses. The Internet and Higher Education, 24, 1-12. https://doi.org/10.1016/j.iheduc.2014.07.001

Vygotsky, L. S. (1978). Mind in society: Development of higher psychological processes (M. Cole, V. John-Steiner, S. Scribner, & E. Souberman, Eds.). Harvard University Press. https://www.hup.harvard.edu/catalog.php?isbn=9780674576292

Watson, W. R., Watson, S. L., & Janakiraman, S. (2017). Instructional quality of massive open online courses: A review of attitudinal change MOOCs. International Journal of Learning Technology, 12(3), 219-240. https://doi.org/10.1504/IJLT.2017.088406

Yilmaz, A. B., Ünal, M., & Çakir, H. (2017). Evaluating MOOCs according to instructional design principles. Journal of Learning and Teaching in Digital Age, 2(2), 26-35. https://dergipark.org.tr/en/pub/joltida/issue/55467/760086

Yousef, A. M. F., Chatti, M. A., Schroeder, U., & Wosnitza, M. (2014, July). What drives a successful MOOC? An empirical examination of criteria to assure design quality of MOOCs. In 2014 IEEE 14th International Conference on Advanced Learning Technologies (pp. 44-48). IEEE. https://doi.org/10.1109/ICALT.2014.23

Is My MOOC Learner-Centric? A Framework for Formative Evaluation of MOOC Pedagogy by Veenita Shah, Sahana Murthy, and Sridhar Iyer is licensed under a Creative Commons Attribution 4.0 International License.