Volume 24, Number 2

Ünal Çakıroğlu1, Merve Aydın2, Yılmaz Bahadır Kurtoğlu3, and Ümit Cebeci4

1,2Trabzon University, Turkey; 3Recep Tayyip Erdoğan University, Turkey; 4Karabük University, Turkey

Higher education instructors tried to find the best ways to teach during the pandemic. Instructors faced with emergency situations used various technologies to deliver their courses. In this study, an online survey was used to ask instructors about their experiences regarding the development of their technological pedagogical content knowledge (TPACK) during emergency remote teaching (ERT); 231 responses were received from instructors in faculties of education. The survey was a five-point Likert-type scale covering the dimensions of content knowledge, pedagogical knowledge, technological knowledge, technological content knowledge, pedagogical content knowledge, technological pedagogical knowledge, and technological pedagogical content knowledge. Instructors rated their non-technological knowledge (pedagogical knowledge, content knowledge, and pedagogical content knowledge) relatively higher than their technology-related knowledge (technological knowledge, technological pedagogical knowledge, and technological content knowledge). The findings indicate that instructors had consistently high levels of perceived knowledge in all TPACK dimensions. Regarding the development of instructors’ TPACK, several suggestions are made, including novel technologies and pedagogies specialized for ERT.

Keywords: emergency remote teaching, ERT, technological pedagogical content knowledge, TPACK, instructors, instructor’s component

The widespread closing of schools due to the COVID-19 outbreak shocked the educational community. The global pandemic dramatically affected higher education institutions worldwide as campuses around the globe were forced to close their doors. Instructors had to remain at home from the spring of 2020 onward, and a temporary shift from in-person instructional delivery to an alternate delivery mode was required.

Emergency remote teaching required urgent solutions for delivering instruction through online teaching tools (Barbour et al., 2020). This situation forced instructors at higher education institutions to find the best ways to plan their instruction effectively, deliver courses, and assess both students’ learning and their own teaching (Hodges et al., 2020). This crisis-driven shift in instructional delivery involved the use of fully remote teaching solutions, referred to as emergency remote teaching (ERT). Instructors also needed to cope with organizational issues, and many adapted their courses for delivery via a learning management system (LMS). However, some instructors encountered technological and pedagogical challenges during this period (Ferri et al., 2020), and some were caught unprepared for this new form of teaching and learning (Tanak, 2019). Implementing pedagogical strategies remotely requires specific skills; instructors therefore must adopt new technologies and integrate them with their content knowledge.

The challenges of online learning generally originate from instructors’ lack of knowledge about technology use and from their need to learn appropriate pedagogy for technology integration, engage students online via materials such as videos, images, and animations, and assess learning and instruction in an online context (Verawardina et al., 2020). Thomas and Rogers (2020) state that technological challenges result mainly from lack of access to technology, online teaching platforms, and/or the Internet. Instructors’ technological knowledge includes the efficient use of various digital tools in the online teaching process. In addition to technological knowledge, teachers must master pedagogical and content knowledge to identify, integrate, manage, and evaluate learners’ performance during teaching (Valtonen et al., 2017). Social challenges, such as a lack of peer support and inadequate instructor-student interaction, also exist.

In sum, instructors found themselves facing these challenging tasks during ERT. The emergency required that instructors be able to plan, organize, teach, and sustain online courses holistically. Thus, during the COVID-19 pandemic, technological pedagogical content knowledge (TPACK) became essential for remote teaching and for increasing instructors’ capacity to teach online. This study attempts to understand this complexity by examining how instructors integrated three areas of knowledge (pedagogical, technological, and content knowledge) within the TPACK framework (Koehler et al., 2013) during the pandemic.

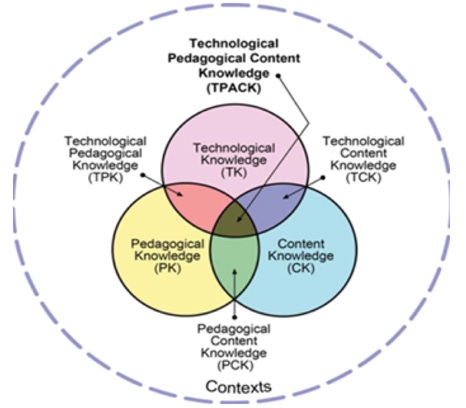

TPACK involves an understanding of technology integration in an educational context that helps align technology, pedagogy, and content (Giannakos et al., 2015; Harris & Hofer, 2009; Koehler et al., 2013), as well as the complex relationships among students, teachers, content, technologies, and practices (Oliver, 2011; Sang et al., 2016; Voogt et al., 2013). Building on Shulman’s (1986) pedagogical content knowledge framework, Koehler and Mishra (2009) define TPACK as the connections and interactions among three types of knowledge: content knowledge (subject matter), technological knowledge (computers, the Internet, digital video, etc.), and pedagogical knowledge (practices, processes, strategies, procedures, and methods of teaching and learning) (Figure 1).

Figure 1

Technological Pedagogical Content Knowledge (TPACK) Model

Note. From “What is technological pedagogical content knowledge (TPACK)?” by M. Koehler and P. Mishra, 2009, Contemporary Issues in Technology and Teacher Education, 9(1), p. 63 (https://www.learntechlib.org/primary/p/29544/). Copyright 2009 by Society for Information Technology & Teacher Education.

In the model, technological pedagogical knowledge (TPK) includes the teacher’s knowledge of technologies and their uses in teaching within appropriate pedagogy (Koehler & Mishra, 2009). Technological content knowledge (TCK) involves understanding affordances of technologies within a subject matter to be taught (Mishra & Koehler, 2009). Pedagogical content knowledge (PCK) refers to knowledge of the content to be taught and the pedagogy, including effective teaching strategies to guide instructors (Koehler & Mishra, 2009).
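Read as a Venn diagram, the seven knowledge domains in Figure 1 correspond to the non-empty combinations of the three base components (technology, pedagogy, content), which is why the model has exactly 2^3 - 1 = 7 domains. The Python sketch below enumerates them; the single-letter encoding and the function name `tpack_domains` are our own illustrative choices, not part of Koehler and Mishra’s (2009) formulation.

```python
from itertools import combinations

# The three base components of the TPACK model.
COMPONENTS = ("T", "P", "C")


def tpack_domains() -> list[str]:
    """List the seven TPACK domains as the non-empty subsets of {T, P, C}.

    One component gives TK, PK, CK; two give TPK, TCK, PCK; all three give TPACK.
    """
    domains = []
    for size in range(1, len(COMPONENTS) + 1):
        for combo in combinations(COMPONENTS, size):
            # The full intersection is conventionally written "TPACK", not "TPCK".
            name = "TPACK" if size == len(COMPONENTS) else "".join(combo) + "K"
            domains.append(name)
    return domains


print(tpack_domains())  # ['TK', 'PK', 'CK', 'TPK', 'TCK', 'PCK', 'TPACK']
```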

Previous TPACK studies have investigated teachers’ TPACK by examining lesson plans (Canbazoglu Bilici et al., 2016), tasks, and TPACK surveys (Cheng, 2017; Ciptaningrum, 2017; Getenet et al., 2016; Giannakos et al., 2015). Different versions of the TPACK model have been applied to understanding both pre-service and in-service teachers’ knowledge of and skills in integrating technology into teaching, and the model has also been used in ERT contexts (Lamminpää, 2021).

During the pandemic, instructors needed to cope with unforeseen problems to meet students’ needs. One of the biggest disruptions they faced was transforming their traditional in-person teaching into remote teaching. Because of the emergency, they began this transformation by devising their own ways of integrating technology to deliver instruction (Arcueno et al., 2021). A lack of TPACK and related skills among teachers can lead to ineffective student learning. In such cases, it is essential to help instructors notice and appreciate their strengths as educators (Can & Silman-Karanfil, 2022). Accordingly, TPACK may be an important element of teachers’ knowledge and of great significance for their professional development in ERT.

The COVID-19 outbreak placed new demands on instructors in terms of intensive technology use (Ferri et al., 2020) and their ability to use such technology in remote teaching (Ahtiainen et al., 2022). Before the pandemic, no clear guidelines existed for educators in this regard. Thus, direction for sustainable education in these unprecedented times is needed. Understanding instructors’ experiences may provide valuable insights into how individuals responded and can inform future course design, institutional responses, and support structures for instructors, students, and organizers.

In addition, by identifying instructors’ TPACK, this study raises awareness of the importance of TPACK in ERT. There are studies of TPACK in face-to-face teaching (Tyarakanita, 2020) and a limited number of studies of TPACK in online teaching, which suggest that TPACK was beneficial to instructors’ professional development and useful for assessing instructors’ skills (Archambault & Crippen, 2009; Haviz et al., 2020; Juanda et al., 2021). However, gaps remain because of the lack of TPACK assessment in ERT studies. Thus, this study focuses on instructors’ experiences during ERT to understand their integration process and the technological and pedagogical conditions under which it took place.

The purpose of this study is to investigate how ERT due to the COVID-19 pandemic affected instructors’ development of TPACK within their teaching experiences.

Guided by our main research question, “How does ERT affect instructors’ ability to use TPACK?” we also addressed the following questions:

This study examines instructors’ TPACK emerging from their exposure to ERT. Data were gathered through a descriptive survey.

The study participants were chosen via purposeful sampling. They were 231 instructors from 20 education faculties at higher education institutions in Turkey. The instructors ranged in age from 25 to over 60; 48.5% identified as male and 51.5% as female. Participants’ demographic data are provided in Table 1.

Table 1

Participant Demographics

| Characteristic | Category | f | % |
|---|---|---|---|
| Gender | Female | 119 | 51.5 |
| | Male | 112 | 48.5 |
| Age | 25-34 | 42 | 18.2 |
| | 35-44 | 102 | 44.2 |
| | 45-60 | 73 | 31.6 |
| | 60+ | 14 | 6.1 |
| Years in profession | 0-10 | 61 | 26.4 |
| | 11-20 | 86 | 37.2 |
| | 21-30 | 56 | 24.2 |
| | 30+ | 28 | 12.1 |

The participants used various LMSs and virtual classrooms as online teaching platforms during the pandemic period. The reported platforms are presented in Table 2.

Table 2

Online Teaching Platforms Used by Institutions During the COVID-19 Pandemic

| Virtual classroom | LMS | LMS and virtual classroom | Other teaching tools |
|---|---|---|---|
| Google Meet, Microsoft Teams, BigBlueButton, Perculus, Zoom, Adobe Connect | Moodle, ALMS, ToteltekLMS, Google Classroom, Yeri Uzem Portal, Olive, Canvas | Blackboard, Mergen | Microsoft 365, Safe Exam, Cisco, Screencasts, generic online teaching tools |

We used the technological pedagogical content knowledge scale developed by Horzum et al. (2014) to determine the instructors’ TPACK. This is a five-point Likert-type scale with the following ratings: 5 = completely agree; 4 = agree; 3 = undecided; 2 = disagree; 1 = strongly disagree. It has a reliability coefficient of 0.98. The participants’ TPACK levels were interpreted according to their scores on the scale’s dimensions. The TPACK scale has 7 subdimensions consisting of 51 items in total: 8 items about content knowledge (CK), 7 items about pedagogical knowledge (PK), 6 items about technological knowledge (TK), 6 items about technological content knowledge (TCK), 8 items about pedagogical content knowledge (PCK), 8 items about technological pedagogical knowledge (TPK), and 8 items about technological pedagogical content knowledge (TPACK). Responses to the items were interpreted to identify how participants thought the period of ERT had affected their information and communication technology skills. Respondents who thought their skills had changed could specify whether they had improved or declined, and they could also describe their experiences with ERT in their own words.
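As an illustration, a respondent’s seven subdimension scores could be computed from the 51 item ratings as in the following Python sketch. It assumes, as the 1.00-5.00 interpretation intervals suggest, that each subdimension score is the unweighted mean of its item ratings and that no items are reverse-scored; the item ranges follow the numbering in Tables 3-9, and the names `SUBSCALES` and `subscale_means` are hypothetical.

```python
from statistics import mean

# Item numbers per subdimension, following Tables 3-9 (51 items in total).
SUBSCALES = {
    "TK": range(1, 7),       # items 1-6
    "PK": range(7, 14),      # items 7-13
    "CK": range(14, 22),     # items 14-21
    "TCK": range(22, 28),    # items 22-27
    "PCK": range(28, 36),    # items 28-35
    "TPK": range(36, 44),    # items 36-43
    "TPACK": range(44, 52),  # items 44-51
}


def subscale_means(responses: dict[int, int]) -> dict[str, float]:
    """Average one respondent's 1-5 ratings within each subdimension.

    `responses` maps item number (1-51) to a Likert rating
    (5 = completely agree ... 1 = strongly disagree).
    """
    missing = [item for item in range(1, 52) if item not in responses]
    if missing:
        raise ValueError(f"missing items: {missing}")
    for item, rating in responses.items():
        if not 1 <= rating <= 5:
            raise ValueError(f"item {item} has rating {rating}, expected 1-5")
    # Assumption: a subdimension score is the unweighted mean of its items.
    return {name: float(mean(responses[i] for i in items))
            for name, items in SUBSCALES.items()}


# Hypothetical respondent: "agree" (4) on every item, "completely agree" (5) on CK.
example = {item: 4 for item in range(1, 52)}
example.update({item: 5 for item in SUBSCALES["CK"]})
print(subscale_means(example))
```

Keeping the item-to-dimension mapping in one dictionary makes it easy to check against the scale’s published structure (6 + 7 + 8 + 6 + 8 + 8 + 8 = 51 items).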

The TPACK scale was used to gather data. Cronbach’s alpha (α) reliability coefficient of the scale for this study was 0.972. A normality test was applied to the total TPACK score, and the results indicate that the TPACK scores met the normality assumption. Five intervals were used to describe the scale scores: 1.00-1.79 = very low; 1.80-2.59 = low; 2.60-3.39 = moderate; 3.40-4.19 = high; and 4.20-5.00 = very high. An independent-samples t-test was used to determine whether TPACK scores differed significantly by gender, and one-way analysis of variance (ANOVA) was used to determine whether TPACK scores differed significantly by respondents’ occupation, seniority, and age.
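The reliability coefficient and the five interpretation bands can both be sketched in a few lines of Python. Cronbach’s alpha is computed with its standard formula, α = k / (k − 1) × (1 − Σ item variances / variance of total scores); the band cut-points come from the text, each band being (5 − 1) / 5 = 0.80 wide. Treating the cut-points as half-open intervals (so that a value such as 4.195, which falls between the printed bands, counts as “high”) is our assumption, as are the function names.

```python
from statistics import pvariance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[j][i] is respondent i's rating on item j.

    Population variances are used throughout; the n / (n - 1) correction
    would cancel in the ratio anyway.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one rating per respondent")
    totals = [sum(item[i] for item in items) for i in range(n)]
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    item_var = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - item_var / total_var)


# Exclusive upper bounds of the interpretation bands, each 0.80 wide.
BANDS = [(1.80, "very low"), (2.60, "low"), (3.40, "moderate"), (4.20, "high")]


def level(score: float) -> str:
    """Map a mean score on the 1-5 scale to its verbal interpretation band."""
    if not 1.0 <= score <= 5.0:
        raise ValueError(f"score {score} is outside 1.00-5.00")
    for upper, label in BANDS:
        if score < upper:
            return label
    return "very high"


print(level(4.04), level(4.51))  # high very high
```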

In presenting the survey results, we first describe the scores for each TPACK dimension and then address the relationships among the dimensions and between the scores and the demographic variables. In general, instructors were found to have consistently high levels of perceived knowledge in all TPACK domains.

The participants’ perspectives regarding TK (means and standard deviations) are shown in Table 3.

Table 3

Technological Knowledge Scores

| Item | Statement | X̄ | SD |
|---|---|---|---|
| 1 | I follow new technologies. | 4.16 | 0.840 |
| 2 | I know how to solve problems related to technology. | 3.84 | 0.884 |
| 3 | I have sufficient knowledge about using the technologies I need. | 3.99 | 0.808 |
| 4 | I have the technological knowledge necessary to access information. | 4.26 | 0.728 |
| 5 | I have the necessary technological knowledge to use the information in the resources I access. | 4.19 | 0.749 |
| 6 | I have enough knowledge to support students in my class when they have problems with technology use. | 3.83 | 0.930 |

The scores for all TK items are relatively high, with an average of 4.04. Item 4, on the technological knowledge required to access information, received the highest score (4.26), whereas item 6, on supporting students who have problems with technology use, was scored lower on average (3.83) than the other items.

Table 4 shows the mean values of instructors’ responses to the PK items. The items on class management and on methods and techniques for ensuring effective student participation (items 11 and 12) have the highest averages, at 4.58. Item 13, “I can make students evaluate each other,” has a noticeably lower-than-average score of 3.74.

Table 4

Pedagogical Knowledge Scores

| Item | Statement | X̄ | SD |
|---|---|---|---|
| 7 | I can adapt my teaching depending on the learning levels of the students. | 4.34 | 0.728 |
| 8 | I know how to measure student performance. | 4.45 | 0.609 |
| 9 | I can adapt the teaching process for students with different learning styles. | 4.22 | 0.767 |
| 10 | I use appropriate teaching strategies, methods, and techniques according to the characteristics of the class. | 4.35 | 0.668 |
| 11 | In my class, I manage the class as needed. | 4.58 | 0.569 |
| 12 | I know the necessary methods and techniques to ensure effective participation of students. | 4.58 | 0.599 |
| 13 | I can make students evaluate each other. | 3.74 | 1.079 |

Table 4 shows that, except for item 13 (3.74), the PK items have high average scores, ranging from 4.22 to 4.58. Participants’ PK level, with an average score of 4.32, is considerably higher than their TK level (4.04).

The descriptive statistics of the instructors’ responses on CK are shown in Table 5.

Table 5

Content Knowledge Scores

| Item | Statement | X̄ | SD |
|---|---|---|---|
| 14 | I decide on the scope of the topics I will lecture. | 4.64 | 0.565 |
| 15 | I learn new and changing information about my field. | 4.58 | 0.569 |
| 16 | I follow the developments in my field. | 4.56 | 0.635 |
| 17 | I know the current classification of information in my field. | 4.48 | 0.617 |
| 18 | I know the terms related to my field. | 4.64 | 0.525 |
| 19 | I know the sources of information regarding my field. | 4.61 | 0.523 |
| 20 | I know the appropriate resources to direct my students regarding my field. | 4.58 | 0.561 |
| 21 | I know how to improve myself in my field. | 4.64 | 0.525 |

All items regarding content knowledge were scored very high, at 4.48 or above. The average of the items about following developments in one’s field, knowing sources and terms, and classifying information was 4.59, the highest average of all the knowledge domains.

The descriptive statistics for the 231 participants’ responses to each TCK item are provided in Table 6.

Table 6

Technological Content Knowledge Scores

| Item | Statement | X̄ | SD |
|---|---|---|---|
| 22 | I have the necessary technological knowledge to access, organize, and use resources related to my field. | 4.38 | 0.680 |
| 23 | I can use available content related to my field. | 4.09 | 0.842 |
| 24 | I follow the updates and changes about programs related to my field by using the Internet. | 4.43 | 0.668 |
| 25 | I enable my students to use technologies related to my field. | 4.15 | 0.757 |
| 26 | I can benefit from social networks where experts in my field come together to develop professionally. | 4.11 | 0.902 |
| 27 | I have the necessary technological knowledge and skills to improve my knowledge in my field. | 4.24 | 0.752 |

The average score for the TCK dimension is high, at 4.23. Item 24, “I follow the updates and changes about programs related to my field by using the Internet,” scored the highest (4.43). Item 23, “I can use available content related to my field,” has a relatively lower average score (4.09) than the other items.

PCK scores are shown in Table 7.

Table 7

Pedagogical Content Knowledge Scores

| Item | Statement | X̄ | SD |
|---|---|---|---|
| 28 | I can easily prepare lesson plans for the lesson I will teach. | 4.58 | 0.568 |
| 29 | I can choose the most appropriate teaching strategy to teach a particular concept. | 4.53 | 0.588 |
| 30 | I can distinguish the correctness of attempts of my students in problem-solving. | 4.45 | 0.601 |
| 31 | I know the misconceptions that students may have about a particular subject and I teach accordingly. | 4.39 | 0.657 |
| 32 | I can choose the appropriate teaching approach necessary to lead my students to think and learn. | 4.55 | 0.564 |
| 33 | I can use teaching strategies appropriate to the topics I teach. | 4.50 | 0.611 |
| 34 | I know the subjects that students find difficult to learn in my field. | 4.54 | 0.609 |
| 35 | I can appropriately order the concepts that I will explain. | 4.60 | 0.541 |

The average score for the PCK items is 4.51. Item 35, “I can appropriately order the concepts that I will explain,” has the highest score (4.60). Items 28 and 32, which concern preparing lesson plans and choosing appropriate teaching approaches, also have high average scores (4.58 and 4.55, respectively). Item 31, “I know the misconceptions that students may have about a particular subject and I teach accordingly,” has the lowest average score among the PCK items (4.39).

The average score for the TPK items is high (X̄ = 4.17). The mean and standard deviation for each item are given in Table 8.

Table 8

Technological Pedagogical Knowledge Scores

| Item | Statement | X̄ | SD |
|---|---|---|---|
| 36 | I can use technologies that will enable students to acquire new knowledge and skills. | 4.22 | 0.714 |
| 37 | I have the knowledge and skills to select and use technologies appropriate for students’ development in order to enable them to learn effectively. | 4.16 | 0.763 |
| 38 | I know how the technologies and teaching approaches that I will use affect each other. | 4.13 | 0.761 |
| 39 | I can choose technologies that can enable my students to learn better. | 4.15 | 0.727 |
| 40 | I can use technology to create richer learning environments. | 4.26 | 0.707 |
| 41 | I have enough knowledge to discuss how I can use technology in my lessons. | 4.05 | 0.873 |
| 42 | I use technology to improve my teaching performance when necessary. | 4.26 | 0.693 |
| 43 | I can adapt new technologies while using different methods in my teaching. | 4.20 | 0.725 |

The average scores of all items in the TPK dimension are similar. Items 40 and 42, which concern creating richer learning environments and using technology to improve teaching performance, have the highest average score (4.26). Item 41, “I have enough knowledge to discuss how I can use technology in my lessons,” has the lowest average score (4.05), and item 38, about how the technologies and teaching approaches used affect each other, is also relatively low (4.13).

The average score in the TPACK dimension was 4.13. The mean scores for each item are shown in Table 9.

Table 9

Technological Pedagogical Content Knowledge Scores

| Item | Statement | X̄ | SD |
|---|---|---|---|
| 44 | I can use technology to determine students’ level of skill and understanding about a particular subject. | 4.15 | 0.760 |
| 45 | I can choose and use the strategy, method, and technology appropriate for the course content. | 4.33 | 0.689 |
| 46 | I can lead my colleagues in the selection and use of appropriate methods and technologies. | 3.66 | 1.033 |
| 47 | I can develop teaching materials suitable for the subject area, teaching method, and technology. | 4.03 | 0.844 |
| 48 | I can use technologies that will provide a better understanding of the subject while teaching. | 4.25 | 0.688 |
| 49 | I can use methods and technologies that will enable students to learn more effectively according to the subject I teach. | 4.26 | 0.685 |
| 50 | I enable students to use technologies suitable for the teaching method to learn the subject better. | 4.13 | 0.707 |
| 51 | I can choose teaching methods and technologies that will enable students to study the subject more willingly. | 4.29 | 0.653 |

Item 46, “I can lead my colleagues in the selection and use of appropriate methods and technologies,” has a below-average score of 3.66. In contrast, item 45, “I can choose and use the strategy, method, and technology appropriate for the course content,” which concerns aligning teaching strategies, methods, and technology with course content, has the highest average score in the TPACK dimension (4.33).

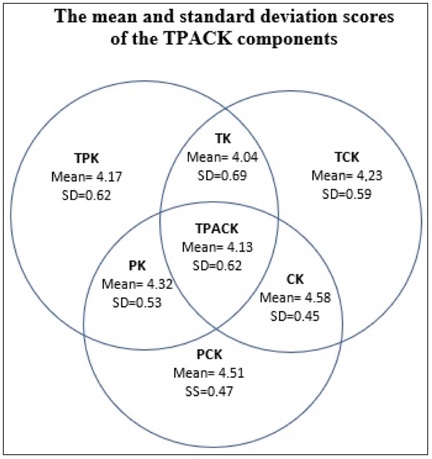

Figure 2 illustrates the mean and standard deviation scores of the TPACK components regarding technology, pedagogy, and content both solely and combined.

Figure 2

Mean Scores in All Dimensions of the Technological Pedagogical Content Knowledge Survey

Note. TPK = technological pedagogical knowledge; TK = technological knowledge; TCK = technological content knowledge; TPACK = technological pedagogical content knowledge; PK = pedagogical knowledge; CK = content knowledge; PCK = pedagogical content knowledge

CK received the highest average score (4.59), followed by PCK (4.51), and TK had the lowest (4.04). It is remarkable that the instructors’ mean TK score is lower than their scores in all other dimensions. The non-technological knowledge domains (CK, PCK, and PK) have the highest average scores.
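Because each dimension score is an average of its items, the dimension averages can be cross-checked against the item means printed in Tables 3-9. The Python sketch below takes the unweighted mean of those rounded item means (an approximation: averages computed from the raw responses may differ in the second decimal) and ranks the seven dimensions. The names `ITEM_MEANS` and `ranked_dimensions` are illustrative.

```python
from statistics import mean

# Item means (X̄) as printed in Tables 3-9.
ITEM_MEANS = {
    "TK": [4.16, 3.84, 3.99, 4.26, 4.19, 3.83],
    "PK": [4.34, 4.45, 4.22, 4.35, 4.58, 4.58, 3.74],
    "CK": [4.64, 4.58, 4.56, 4.48, 4.64, 4.61, 4.58, 4.64],
    "TCK": [4.38, 4.09, 4.43, 4.15, 4.11, 4.24],
    "PCK": [4.58, 4.53, 4.45, 4.39, 4.55, 4.50, 4.54, 4.60],
    "TPK": [4.22, 4.16, 4.13, 4.15, 4.26, 4.05, 4.26, 4.20],
    "TPACK": [4.15, 4.33, 3.66, 4.03, 4.25, 4.26, 4.13, 4.29],
}


def ranked_dimensions(item_means: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Average each dimension's item means and sort the dimensions, highest first."""
    averages = {dim: mean(values) for dim, values in item_means.items()}
    return sorted(averages.items(), key=lambda pair: pair[1], reverse=True)


for dim, avg in ranked_dimensions(ITEM_MEANS):
    print(f"{dim:<6} {avg:.3f}")
```

With these inputs, CK comes out highest (about 4.59) and TK lowest (about 4.045), with PCK, PK, TCK, TPK, and TPACK in between.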

Pearson’s correlation coefficient was used to examine the relationships among the TPACK components, whose scores had previously been tested for reliability and normality. The results of the analysis are shown in Table 10.

Table 10

Relationships Between Average Scores of TPACK Components

| Variable | TK | PK | CK | TCK | PCK | TPK | TPACK |
|---|---|---|---|---|---|---|---|
| TK | — | 0.448** | 0.314** | 0.728** | 0.311** | 0.779** | 0.740** |
| PK | | — | 0.656** | 0.565** | 0.750** | 0.540** | 0.579** |
| CK | | | — | 0.574** | 0.753** | 0.464** | 0.462** |
| TCK | | | | — | 0.469** | 0.800** | 0.724** |
| PCK | | | | | — | 0.507** | 0.527** |
| TPK | | | | | | — | 0.875** |
| TPACK | | | | | | | — |

Note. n = 231. Pearson’s correlation coefficient was used. TPACK = technological pedagogical content knowledge; TK = technological knowledge; PK = pedagogical knowledge; CK = content knowledge; TCK = technological content knowledge; PCK = pedagogical content knowledge; TPK = technological pedagogical knowledge.

** p < .001.

Table 10 shows statistically significant positive relationships between all pairs of domains (r = 0.311 to 0.875, p < .001). When the domains were analysed pairwise, the highest correlation was found between TPK and TPACK (r = 0.875, p < 0.001), and the lowest between PCK and TK (r = 0.311, p < 0.001).
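For readers who want to reproduce coefficients like those in Table 10, Pearson’s r and the usual t statistic for testing whether r differs from zero, t = r √((n − 2) / (1 − r²)) with n − 2 degrees of freedom, can be computed as in this Python sketch (function names are illustrative). Applied to the weakest correlation in Table 10 (r = 0.311, n = 231), it gives t ≈ 4.95 with 229 degrees of freedom, comfortably beyond the two-tailed critical value for p < .001.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson's product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * sqrt((n - 2) / (1 - r ** 2))


# Weakest correlation in Table 10: PCK-TK, r = 0.311, n = 231.
print(round(r_to_t(0.311, 231), 2))  # 4.95
```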

An independent-samples t-test was used to determine whether the instructors’ TPACK levels differed by gender (Table 11).

Table 11

TPACK Scores in Terms of Gender

| Gender | n | X̄ | SD | p |
|---|---|---|---|---|
| Female | 119 | 4.35 | 0.4347 | 0.56 |
| Male | 112 | 4.23 | 0.4847 | |

Note. TPACK = technological pedagogical content knowledge.

The test result showed that the difference between female and male instructors’ TPACK scores was not statistically significant (p > 0.05). However, analysis of each TPACK subdimension showed statistically significant gender differences in PK, CK, and PCK (p < 0.05), whereas the difference in TK was not statistically significant. In addition, ANOVA was applied to determine whether TPACK scores differed significantly by seniority and age (Table 12).
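The gender comparison rests on the independent-samples t statistic. The source does not say whether the pooled-variance (Student) or unequal-variance (Welch) version was used; the Python sketch below assumes the pooled version and returns only the statistic and its degrees of freedom. The p-value would then be read from the t distribution (for example, with a statistics package). The function name and the example scores are hypothetical and are not the study’s data.

```python
from math import sqrt
from statistics import mean, variance


def independent_t(a: list[float], b: list[float]) -> tuple[float, int]:
    """Student's independent-samples t statistic with pooled variance.

    Returns (t, degrees of freedom).
    """
    na, nb = len(a), len(b)
    if na < 2 or nb < 2:
        raise ValueError("each group needs at least two observations")
    # Pooled variance weights each group's sample variance by its df.
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    std_err = sqrt(pooled_var * (1 / na + 1 / nb))
    if std_err == 0:
        raise ValueError("both groups are constant; t is undefined")
    return (mean(a) - mean(b)) / std_err, na + nb - 2


# Hypothetical mean TPACK scores for two small groups (not the study's data).
group_a = [4.4, 4.1, 4.6, 4.3, 4.5]
group_b = [4.2, 4.0, 4.5, 4.1, 4.3]
t, df = independent_t(group_a, group_b)
print(f"t({df}) = {t:.2f}")
```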

Table 12

TPACK Scores in Terms of Seniority and Age

| Variable | Source | Sum of squares | df | Mean square | F | p |
|---|---|---|---|---|---|---|
| Age | Between groups | 239.368 | 75 | 3.192 | 0.910 | 0.673 |
| | Within groups | 543.706 | 155 | 3.508 | | |
| | Total | 783.074 | 230 | | | |
| Seniority | Between groups | 268.573 | 75 | 3.581 | 1.162 | 0.217 |
| | Within groups | 477.713 | 155 | 3.082 | | |
| | Total | 746.286 | 230 | | | |

Note. TPACK = technological pedagogical content knowledge.

In addition, ANOVA was applied to determine whether TPACK scores differed significantly by communication type (Table 13).

Table 13

TPACK Scores in Terms of Communication Type

| Communication type | Sum of squares | df | Mean square | F | p |
|---|---|---|---|---|---|
| Between groups | 73.567 | 75 | 0.981 | 1.163 | 0.215 |
| Within groups | 130.701 | 155 | 0.843 | | |
| Total | 204.268 | 230 | | | |

Note. TPACK = technological pedagogical content knowledge.

The results showed that average TPACK scores did not differ significantly by the communication type used in online teaching (synchronous, asynchronous, or both synchronous and asynchronous).
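The F ratios in Tables 12 and 13 follow directly from the reported sums of squares and degrees of freedom, F = (SS_between / df_between) / (SS_within / df_within). The Python sketch below shows the one-way ANOVA decomposition from raw group data and a helper that recomputes F from a reported table; the p-value would then come from the F distribution with (df_between, df_within) degrees of freedom, which is not computed here. Function names are illustrative.

```python
from statistics import mean


def one_way_anova(groups: list[list[float]]) -> dict[str, float]:
    """One-way ANOVA decomposition for k independent groups."""
    values = [v for g in groups for v in g]
    k, n = len(groups), len(values)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    # Between-groups SS: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-groups SS: spread of observations around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    if ss_within == 0:
        raise ValueError("within-group variation is zero; F is undefined")
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return {"ss_between": ss_between, "ss_within": ss_within,
            "df_between": df_between, "df_within": df_within, "F": f}


def f_from_table(ss_between: float, df_between: int,
                 ss_within: float, df_within: int) -> float:
    """Recompute F = MS_between / MS_within from reported sums of squares."""
    return (ss_between / df_between) / (ss_within / df_within)


# Age rows of Table 12: SS = 239.368 (df 75) and 543.706 (df 155).
print(round(f_from_table(239.368, 75, 543.706, 155), 3))
```

Recomputing F this way for the age, seniority, and communication-type rows reproduces the reported values of 0.910, 1.162, and 1.163 to three decimals.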

This study investigated instructors’ TPACK development during the COVID-19 pandemic. The survey data show that while some types of TPACK were more developed, others were limited.

Data analysis shows that approximately 73% of participating instructors expressed positive perspectives about their PK. Notably, most of them also reported developments in CK and PCK, which indicates that their non-technological knowledge developed positively. For the technology-related dimensions, 69.2% gave positive scores for TPK, 73% for TCK, and 60% for TPACK. Surprisingly, approximately 58.8% of participants believed their TK had improved during the pandemic, whereas the remainder felt their skills had stayed the same. Some researchers suggest that technological knowledge levels also reflect how often teachers keep up with technological developments (Dalal et al., 2017; Holland & Piper, 2016; Koh & Chai, 2016). Some instructors may have found it difficult to search for and find appropriate technological tools to deliver their courses. As Li et al. (2015) have suggested, having few opportunities to deal with technological issues might limit knowledge about integrating technology.
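The percentages above describe the share of instructors who responded positively. The source does not state how a “positive” response was operationalized; the Python sketch below assumes it means a rating of 4 (agree) or 5 (completely agree) and exposes the threshold as a parameter so other definitions can be tried. The function name and the example ratings are hypothetical.

```python
def percent_positive(ratings: list[int], threshold: int = 4) -> float:
    """Share (in %) of ratings at or above `threshold` on the 1-5 scale.

    Assumption: "positive" means 4 (agree) or 5 (completely agree).
    """
    if not ratings:
        raise ValueError("no ratings given")
    positive = sum(1 for r in ratings if r >= threshold)
    return 100 * positive / len(ratings)


# Hypothetical ratings from ten respondents on one item.
print(percent_positive([5, 4, 4, 3, 5, 2, 4, 4, 3, 5]))  # 70.0
```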

To teach concepts appropriately, instructors need PK, including knowledge of different course delivery methods. With it, instructors can use different methods to design their courses, including collaborative, interactive online activities for effective student learning (Ferdig, 2006). Because this knowledge is a prerequisite for developing TPACK, instructors must master it (Tanak, 2019). In this study, almost all instructors reported positive experiences in developing their PK and CK. This result was unexpected because the curriculum did not change during the pandemic period. CK includes knowledge of concepts, facts, procedures, and theories; knowledge to combine and organize ideas; and knowledge of scientific evidence and facts (Mishra & Koehler, 2006). The majority of the instructors stated that they showed particular improvement in CK.

It may therefore be that the digital materials in different formats, and the content of the materials that instructors used in their online teaching, contributed to the development of their CK beyond content delivery. That the instructors perceived themselves as relatively less developed in the TPK, TCK, and TPACK dimensions suggests that they may not have had enough time to learn new technologies or to evaluate how they would teach students with these technologies during the two terms of teaching they experienced during the pandemic. Another reason may be that they relied on their existing technological knowledge, adapting the technologies they already knew or used. Hsu et al. (2013) have also suggested that instructors with good training experience use various technologies. Thus, instructors may not have regarded their use of these technologies as development, because they already knew how to use them before the pandemic and did not compare their previous use with their use during the pandemic.

Instructors expressed positive perspectives, with averages above 4.00 in all dimensions of TPACK. CK had the highest average score (4.59), followed by PCK (4.51), and TK had the lowest (4.04). Although the instructors work in different departments of faculties of education, their positive perspectives on preparing and presenting content for online learning, using technologies for online teaching, and conducting their lessons in this way during the pandemic might result from their recognizing online teaching as an opportunity to teach differently. Different institutions or departments likely offered different training. However, instructors’ evaluations of themselves as capable of conducting their courses online, even without such training, may have resulted from organizational principles, the instructors’ internal motivation, and students’ demands. In addition, quick in-service training on platforms such as Zoom, Google Meet, Microsoft Teams, Moodle, and Blackboard, along with institutions’ technological support for online teaching, may have played a role in their positive evaluations.

Within the TPACK framework, the instructors’ evaluations can help determine the methods and technologies that enable students to learn effectively and where technologies should be used in the planning, practice, and assessment stages of teaching. Overall, instructors perceived their development within the TPACK framework to be high.

The fact that instructors needed to rely on such evaluations may have prevented them from seeking new ways to improve themselves during the pandemic. Moreover, responses to item 46, “I can lead my colleagues in the selection and use of appropriate methods and technologies,” scored lower than the other items. Instructors in faculties of education may also tend to apply new ways of learning by combining them with their existing theoretical knowledge. However, an important reason why faculty members did not evaluate themselves positively as leaders of their colleagues regarding TPACK may be that they did not have enough time to test their own TPACK during this period, and the results of their practices were not yet clear.

Moreover, instructors’ positive evaluations of TCK and TPK may be related to their ability to use both their existing knowledge of online teaching technologies and newly learned technologies to teach relevant content. This can be interpreted to mean that they used technology not only to present content but also to build a student-centred environment. Because PCK is defined as knowledge of the material, the reasons for choosing it, and plans for teaching it to students (Dunlosky et al., 2013; Magnusson et al., 1999), this dimension involves no direct interaction with technology. Thus, instructors’ previous experience could carry over into ERT. In this respect, it appears that during the pandemic period instructors were able to use the teaching strategies they had already developed across many types of knowledge. Due to the static nature of CK, it was likely not easy for the instructors to develop CK in the context of the pandemic. Mourlam et al. (2021) have stated that prior knowledge (PCK) may not adequately meet the needs of a new context; however, the instructors who responded to this study may in some cases have used available digital materials instead of creating their own digital content in order to deliver lectures quickly. Therefore, either the instructors’ PCK at the time was sufficient to present the relevant content, or it was reconstructed in a positive way during the pandemic. When content is mostly operational and practice-oriented, instructors might use various Web 2.0 tools to deliver it; when content is more static and theoretical, fewer tools are available for delivering it. Thus, the type of content may have indirectly affected which technologies participants used to present it.

In many TPACK studies, the subdimensions influence one another, and some may be prerequisites for others. Our findings accord with previous studies in that all components have a moderately positive relationship with each other (Tseng et al., 2022). When the components are examined separately, the weakest relationship is between PCK and TK, which suggests that how content is taught does not change much with new technologies. In some studies, instructors’ seniority has been shown to correlate positively (Akturk & Saka Ozturk, 2019) or negatively (Karakaya & Avgin, 2016) with TPACK. In this study, it is noteworthy that the seniority of the instructors did not result in significant differences in any component of TPACK. As Archambault and Crippen (2009) have suggested, instructors without online teaching experience were in the process of learning how to teach online. Instructors continued to look for what worked best and were determined to keep trying different methods and strategies to find it. One reason for this may be that the principles higher education institutions set for the pandemic period improved the instructors’ TPACK to some extent. The institutions used different software, such as Blackboard, BigBlueButton, Cisco, and other generic tools. In addition, there was no significant difference in the TPACK components between instructors who delivered courses synchronously and those who delivered them asynchronously. Within this structure, many institutions specified the LMS and live course environment to be used and provided a framework for the digital materials to be developed. Therefore, instructors with low TPACK may not have needed to improve themselves, and those already at a high level may not have needed extra development to conduct lessons, as there were predetermined frameworks and tools for online teaching.

Some prior studies have focused on the dimension of interaction in online learning and found that instructors should develop knowledge to enhance interaction (Evans & Myrick, 2015; Hew & Cheung, 2014). In this study, it is noteworthy that participants gave high ratings to the TPACK items about technologies that would provide a better understanding of the subject, the use of technologies suited to the teaching method, and technologies that would make students more willing to study. Considering the interaction between students’ understanding and motivation, the positive answers to these items may also reflect instructors’ belief that they had made progress in online teaching. These findings concur with the results of previous work (Breslow et al., 2013; Koutropoulos et al., 2012; Liu et al., 2005) emphasizing the creation of a supportive online learning environment. Instructors might have mastered the basic skills needed to use an online platform, which mainly concern knowing how to use a variety of tools to strengthen instructor-student interaction and carry out more diverse online activities (Li et al., 2015). However, differences between these findings and those of earlier studies might be explained by the fact that instructors in the previous studies carried out online teaching freely and by personal choice, while in this study, the pandemic forced instructors to find quick solutions.

Overall, the instructors positively evaluated the improvements in their TK, CK, PK, TPK, TCK, and PCK during the pandemic. The positive mean scores in these dimensions indicate that instructors perceived their knowledge to be high with respect to their ability to use a variety of teaching strategies, create materials, and plan the scope and sequence of topics within their courses. This finding is consistent with that of Elçi (2020), who reported that the compulsory and urgent transition process does not seem to differ much from other transitions. In this study, important reasons for this finding include the outcomes of instructors’ use of online tools, organizational factors such as the motivation to succeed, and students’ motivation to learn. Can and Silman-Karanfil (2022) suggest that instructors became their own champions by developing their TPACK and practice in a limited time.

This study helps explain instructors’ experiences of the transition from their traditional classrooms to a novel online setting for which they were likely not prepared (Mourlam et al., 2021). An obvious limitation is that the sample size was relatively small. Future research could examine this population in more depth by linking instructors’ self-reported knowledge to their experiences during the pandemic.

The purpose of this study was to examine the perspectives of instructors in faculties of education on their knowledge within the TPACK conceptual framework. Their ratings of their own knowledge in non-technological areas (PK, CK, and PCK) were relatively higher than their ratings of knowledge involving technology (TK, TPK, and TCK). The results show that instructors felt positive about issues related to TPACK. For these instructors in Turkish educational institutions, the scores reflecting their perspectives were positively correlated across components, as TPACK is by nature a form of teaching knowledge. In the COVID-19 emergency, several factors, such as using new tools, seeking new teaching approaches, and facing new and unfamiliar situations, likely affected instructors’ skills to teach online in multiple ways.

Understanding how instructors’ pedagogical and technological knowledge affects technology adoption is critical for facilitating the effective integration of technology after the pandemic. In this study, during ERT, instructors to varying degrees reconstructed their TPACK, adapted it, or left their previous TPACK unchanged when planning lessons, using teaching strategies to convey content, and evaluating students’ work. In this context, our results again confirm that TPACK is a suitable framework for examining instructors’ knowledge of teaching online with technologies that are not only new but also unfamiliar. Overall, it can be concluded that the pandemic has been an opportunity to practise ERT and to evaluate the challenges that emerge during emergencies, including those that may arise in the future.

Ultimately, instructors need sufficient knowledge of technology, pedagogy, and content to teach online effectively. Training instructors in the TPACK framework emerges as a key factor for effective ERT, given the changes it requires relative to conventional online teaching practices. Therefore, a systematic training initiative should be provided to develop, holistically, the TPACK that instructors need to deliver their courses efficiently in emergencies. Moreover, TPACK and its components can also support instructors’ decision-making in emergencies that require them to take action to deliver effective courses in changing situations and environments. We hope this study offers new insights into current developments in instructors’ TPACK and helps clarify the demanding circumstances of emergency teaching situations.

Ahtiainen, R., Eisenschmidt, E., Heikonen, L., & Meristo, M. (2022). Leading schools during the COVID-19 school closures in Estonia and Finland. European Educational Research Journal, 1-18.

Akturk, A. O., & Saka Ozturk, H. (2019). Teachers’ TPACK levels and students’ self-efficacy as predictors of students’ academic achievement. International Journal of Research in Education and Science (IJRES), 5(1), 283-294.

Archambault, L., & Crippen, K. (2009). Examining TPACK among K-12 online distance educators in the United States. Contemporary Issues in Technology and Teacher Education, 9(1), 71-88. https://www.learntechlib.org/primary/p/29332/

Arcueno, G., Arga, H., Manalili, T. A., & Garcia, J. A. (2021, July 7-9). TPACK and ERT: Understanding teacher decisions and challenges with integrating technology in planning lessons and instructions. DLSU Research Congress 2021, De La Salle University, Manila, Philippines. https://login.easychair.org/publications/preprint_download/STc4

Barbour, M. K., LaBonte, R., Hodges, C. B., Moore, S., Lockee, B. B., Trust, T., Bond, M. A., Hill, P., & Kelly, K. (2020, December). Understanding pandemic pedagogy: Differences between emergency remote, remote, and online teaching. State of the Nation: K-12 e-Learning in Canada. http://hdl.handle.net/10919/101905

Breslow, L., Pritchard, D. E., DeBoer, J., Stump, G. S., Ho, A. D., & Seaton, D. T. (2013). Studying learning in the worldwide classroom: Research into edX’s first MOOC. Journal of Research & Practice in Assessment, 8, 13-25. https://eric.ed.gov/?id=EJ1062850

Can, I., & Silman-Karanfil, L. (2022). Insights into emergency remote teaching in EFL. ELT Journal, 76(1), 34-43. https://doi.org/10.1093/elt/ccab073

Canbazoglu Bilici, S., Guzey, S. S., & Yamak, H. (2016). Assessing pre-service science teachers’ technological pedagogical content knowledge (TPACK) through observations and lesson plans. Research in Science and Technological Education, 34(2), 237-251. https://doi.org/10.1080/02635143.2016.1144050

Cheng, K.-H. (2017). A survey of native language teachers’ technological pedagogical and content knowledge (TPACK) in Taiwan. Computer Assisted Language Learning, 30(7), 692-708. https://doi.org/10.1080/09588221.2017.1349805

Ciptaningrum, D. S. (2017). The development of the survey of technology use, teaching, and technology-related learning experiences among pre-service English language teachers in Indonesia. Journal of Foreign Language Teaching & Learning, 2(2), 11-26. https://doi.org/10.18196/ftl.2220

Dalal, M., Archambault, L., & Shelton, C. (2017). Professional development for international teachers: Examining TPACK and technology integration decision making. Journal of Research on Technology in Education, 49(3-4), 117-133. https://doi.org/10.1080/15391523.2017.1314780

Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., & Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychological Science in the Public Interest, 14(1), 4-58. https://doi.org/10.1177/1529100612453266

Elçi, A. (2020). An urgency for change in roles: A cross analysis of digital teaching and learning environments from students and faculty perspective. In E. Alqurashi (Ed.), Handbook of research on fostering student engagement with instructional technology in higher education (pp. 462-483). IGI Global. https://doi.org/10.4018/978-1-7998-0119-1.ch025

Evans, S., & Myrick, J. G. (2015). How MOOC instructors view the pedagogy and purposes of massive open online courses. Distance Education, 36(3), 295-311. https://doi.org/10.1080/01587919.2015.1081736

Ferdig, R. E. (2006). Assessing technologies for teaching and learning: Understanding the importance of technological pedagogical content knowledge. British Journal of Educational Technology, 37(5), 749-760. https://doi.org/10.1111/j.1467-8535.2006.00559.x

Ferri, F., Grifoni, P., & Guzzo, T. (2020). Online learning and emergency remote teaching: Opportunities and challenges in emergency situations. Societies, 10(4), Article 86. https://doi.org/10.3390/soc10040086

Getenet, S. T., Beswick, K., & Callingham, R. (2016). Professionalizing in-service teachers’ focus on technological pedagogical and content knowledge. Education and Information Technologies, 21(1), 19-34. https://doi.org/10.1007/s10639-013-9306-4

Giannakos, M. N., Doukakis, S., Pappas, I. O., Adamopoulos, N., & Giannopoulou, P. (2015). Investigating teachers’ confidence on technological pedagogical and content knowledge: An initial validation of TPACK scales in K-12 computing education context. Journal of Computers in Education, 2, 43-59. https://doi.org/10.1007/s40692-014-0024-8

Harris, J., & Hofer, M. (2009). Instructional planning activity types as vehicles for curriculum-based TPACK development. In I. Gibson, R. Weber, K. McFerrin, R. Carlsen, & D. Willis (Eds.), Proceedings of SITE 2009—Society for Information Technology & Teacher Education International Conference (pp. 4087-4095). Association for the Advancement of Computing in Education (AACE). https://www.learntechlib.org/p/31298/

Haviz, M., Maris, I. M., & Herlina, E. (2020). Relationships between teaching experience and teaching ability with TPACK: Perceptions of mathematics and science lecturers at an Islamic university. Journal of Science Learning, 4(1), 1-7. https://eric.ed.gov/?id=EJ1281833

Hew, K. F., & Cheung, W. S. (2014). Students’ and instructors’ use of massive open online courses (MOOCs): Motivations and challenges. Educational Research Review, 12, 45-58. https://doi.org/10.1016/j.edurev.2014.05.001

Hodges, C. B., & Fowler, D. J. (2020). COVID-19 crisis and faculty members in higher education: From emergency remote teaching to better teaching through reflection. International Journal of Multidisciplinary Perspectives in Higher Education, 5(1), 118-122. https://doi.org/10.32674/jimphe.v5i1.2507

Holland, D. D., & Piper, R. T. (2016). A technology integration education (TIE) model for millennial preservice teachers: Exploring the canonical correlation relationships among attitudes, subjective norms, perceived behavioral controls, motivation, and technological, pedagogical, and content knowledge (TPACK) competencies. Journal of Research on Technology in Education, 48(3), 212-226. https://doi.org/10.1080/15391523.2016.1172448

Horzum, M. B., Akgün, Ö. E., & Öztürk, E. (2014). The psychometric properties of the technological pedagogical content knowledge scale. International Online Journal of Educational Sciences, 6(3), 544-557. https://doi.org/10.15345/iojes.2014.03.004

Hsu, C. Y., Liang, J. C., Chai, C. S., & Tsai, C. C. (2013). Exploring preschool teachers’ technological pedagogical content knowledge of educational games. Journal of Educational Computing Research, 49(4), 461-479. https://doi.org/10.2190/EC.49.4.c

Juanda, A., Shidiq, A. S., & Nasrudin, D. (2021). Teacher learning management: Investigating biology teachers’ TPACK to conduct learning during the COVID-19 outbreak. Jurnal Pendidikan IPA Indonesia / Indonesian Journal of Science Education, 10(1), 48-59. https://doi.org/10.15294/jpii.v10i1.26499

Karakaya, F., & Avgin, S. S. (2016). Investigation of teacher science discipline self-confidence about their technological pedagogical content knowledge (TPACK). European Journal of Education Studies, 2(9). https://doi.org/10.5281/zenodo.165850

Koehler, M., & Mishra, P. (2009). What is technological pedagogical content knowledge (TPACK)? Contemporary Issues in Technology and Teacher Education, 9(1), 60-70. https://www.learntechlib.org/primary/p/29544/

Koehler, M. J., Mishra, P., & Cain, W. (2013). What is technological pedagogical content knowledge (TPACK)? Journal of Education, 193(3), 13-19. https://doi.org/10.1177/002205741319300303

Koh, J. H. L., & Chai, C. S. (2016). Seven design frames that teachers use when considering technological pedagogical content knowledge (TPACK). Computers & Education, 102, 244-257. https://doi.org/10.1016/j.compedu.2016.09.003

Koutropoulos, A., Gallagher, M. S., Abajian, S. C., de Waard, I., Hogue, R. J., Keskin, N. Ö., & Rodriguez, C. O. (2012). Emotive vocabulary in MOOCs: Context and participant retention. European Journal of Open, Distance and E-Learning, 15(1). https://eric.ed.gov/?id=EJ979609

Lamminpää, M. (2021). Exploring Finnish EFL teachers’ perceived technological pedagogical content knowledge (TPACK) following emergency remote teaching: A quantitative approach [Master’s thesis, University of Turku]. UTUPub. https://urn.fi/URN:NBN:fi-fe2021050428691

Li, Y., Zhang, M., Bonk, C. J., & Guo, Y. (2015). Integrating MOOC and flipped classroom practice in a traditional undergraduate course: Students’ experience and perceptions. International Journal of Emerging Technologies in Learning, 10(6), 4-10. https://doi.org/10.3991/ijet.v10i6.4708

Liu, X., Bonk, C. J., Magjuka, R. J., Lee, S., & Su, B. (2005). Exploring four dimensions of online instructor roles: A program level case study. Online Learning, 9(4), 29-48. https://doi.org/10.24059/olj.v9i4.1777

Magnusson, S. J., Borko, H., & Krajcik, J. S. (1999). Nature, sources, and development of pedagogical content knowledge for science teaching. In J. Gess-Newsome & N. Lederman (Eds.), Examining pedagogical content knowledge (pp. 95-132). Kluwer Academic Publishers.

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017-1054. https://doi.org/10.1111/j.1467-9620.2006.00684.x

Mourlam, D., Chesnut, S., & Bleecker, H. (2021). Exploring preservice teacher self-reported and enacted TPACK after participating in a learning activity types short course. Australasian Journal of Educational Technology, 37(3), 152-169. https://doi.org/10.14742/ajet.6310

Oliver, M. (2011). Handbook of technological pedagogical content knowledge (TPCK) for educators. Learning, Media and Technology, 36(1), 91-93. https://doi.org/10.1080/17439884.2011.549829

Sang, G., Tondeur, J., Chai, C. S., & Dong, Y. (2016). Validation and profile of Chinese pre-service teachers’ technological pedagogical content knowledge scale. Asia-Pacific Journal of Teacher Education, 44(1), 49-65. https://doi.org/10.1080/1359866X.2014.960800

Shulman, L. S. (1986). Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2), 4-14. https://doi.org/10.3102/0013189X015002004

Supiano, B. (2020, April 30). Why you shouldn’t try to replicate your classroom teaching online. The Chronicle of Higher Education. https://www.chronicle.com/newsletter/teaching/2020-04-30

Tanak, A. (2019). Designing TPACK-based course for preparing student teachers to teach science with technological pedagogical content knowledge. Kasetsart Journal of Social Sciences, 41(1), 53-59. https://doi.org/10.1016/j.kjss.2018.07.012

Thomas, M. S., & Rogers, C. (2020). Education, the science of learning, and the COVID-19 crisis. Prospects, 49, 87-90. https://doi.org/10.1007/s11125-020-09468-z

Tseng, J. J., Chai, C. S., Tan, L., & Park, M. (2022). A critical review of research on technological pedagogical and content knowledge (TPACK) in language teaching. Computer Assisted Language Learning, 35(4), 948-971. https://doi.org/10.1080/09588221.2020.1868531

Tyarakanita, A. (2020). A case study of pre-service teachers’ enabling TPACK knowledge: Lesson design projects. ELS Journal on Interdisciplinary Studies in Humanities, 3(2), 158-169.

Valtonen, T., Sointu, E., Kukkonen, J., Kontkanen, S., Lambert, M. C., & Mäkitalo-Siegl, K. (2017). TPACK updated to measure pre-service teachers’ twenty-first century skills. Australasian Journal of Educational Technology, 33(3). https://doi.org/10.14742/ajet.3518

Verawardina, U., Asnur, L., Lubis, A. L., Hendriyani, Y., Ramadhani, D., Dewi, I. P., & Sriwahyuni, T. (2020). Reviewing online learning facing the COVID-19 outbreak. Journal of Talent Development and Excellence, 12, 385-392.

Voogt, J., Fisser, P., Pareja Roblin, N., Tondeur, J., & van Braak, J. (2013). Technological pedagogical content knowledge—A review of the literature. Journal of Computer Assisted Learning, 29(2), 109-121. https://doi.org/10.1111/j.1365-2729.2012.00487.x

How Instructors’ TPACK Developed During Emergency Remote Teaching: Evidence From Instructors in Faculties of Education by Ünal Çakıroğlu, Merve Aydın, Yılmaz Bahadır Kurtoğlu, and Ümit Cebeci is licensed under a Creative Commons Attribution 4.0 International License.