Volume 24, Number 4

Craig E. Shepherd¹, Doris U. Bolliger²*, and Courtney McKim³

¹University of Memphis, ²Texas Tech University, ³University of Wyoming; *corresponding author

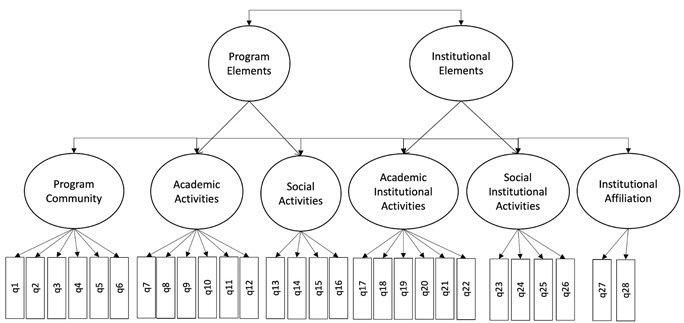

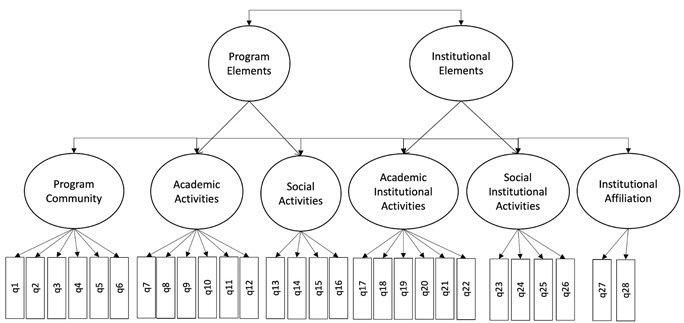

This study focused on the development and validation of the Sense of Online Community Scale (SOCS), which includes 28 Likert-type items across six subscales: (a) program community, (b) program academic activities, (c) program social activities, (d) institutional academic activities, (e) institutional social activities, and (f) affiliation. The validation process included an implementation with 293 learners enrolled in online programs at a higher education institution in the eastern United States. The model was evaluated with and without outliers, and results showed acceptable alignment between the data and the hypothesized SOCS model. The means of all items except one exceeded 3.5 on a 5-point scale ranging from 1 (strongly disagree) to 5 (strongly agree). Time in an online program was not a statistically significant predictor of the latent constructs in the validation model, although most participants were in the first or second year of their degree programs. Findings demonstrate that the SOCS is a reliable and valid instrument that other researchers may use to investigate community in online environments at both the program and institutional levels.

Keywords: online community, online students, online learning, confirmatory factor analysis, higher education

As higher education institutions increasingly turn to online delivery to grow enrollment, expand access to university programs, and meet the interests of learners who have become more amenable to distance education since the COVID-19 pandemic, they also seek ways to combat feelings of isolation among students and faculty, increase retention, and better prepare learners for career advancement and promotion. One common approach is to establish a sense of community. Community has been defined as a sense of membership, belonging, and trust (Glazer et al., 2013; McMillan & Chavis, 1986; Rovai, 2001). As faculty and students interact and collaborate with each other over time, relationships strengthen, mutual goals form, and all parties benefit (Jason et al., 2015; Jiang, 2016; Wenger et al., 2002).

However, most community research focuses on implementations within courses (Palloff & Pratt, 2007; Rockinson-Szapkiw & Wendt, 2015; Shepherd & Bolliger, 2023a). This focus is problematic because courses may lack the duration required for sustained community development (Bellah et al., 1985; Liu et al., 2007; Smith et al., 2017); course-based communities place additional responsibility on instructors for their implementation and maintenance (Shepherd & Bolliger, 2019); and a focus on course practices ignores the larger program, institutional, and professional endeavors that influence the retention and success of students and faculty members (Lee & Choi, 2011; Milman et al., 2015; Shepherd & Bolliger, 2023a; Tinto, 1999, 2012). Based on these concerns, researchers are beginning to broaden community foci (Bolliger et al., 2019; Glazer et al., 2013; Jason et al., 2015; Shepherd & Bolliger, 2019). Indeed, Shepherd and Bolliger (2022) defined community as “a feeling of belonging, affiliation, purpose, and interdependence that exists among instructors, support staff, students, alumni, and program, college, or university friends as they collaborate and progress on shared learning goals and activities over time” (p. 2). Although online programs are beginning to consider community development from both micro and macro perspectives, little research has identified which events and activities promote a sense of community among learners. The purpose of this study was to develop and validate an instrument to help higher education institutions determine which academic and social events influence students’ perceptions of community.

Although most research focuses on course-based communities in online degree programs (O’Shea et al., 2015; Rockinson-Szapkiw & Wendt, 2015; Speiser et al., 2022), the concept of extending community to other settings is not new. Before the advent of online learning, researchers discussed the need to extend community concepts beyond proximate, physical spaces to consider dispersed relationships (McMillan & Chavis, 1986; Wellman, 1979). These concepts anticipated views of community in online settings (Rovai, 2001) and pointed to broader foci of community establishment. Hill (1996) indicated that communities look and function differently in classrooms and programs. Wenger et al. (2002) stated that community members are often associated with myriad communities, some of which reflect larger social perspectives. In higher education settings, some researchers highlight the individual, the course, and the institution as separate community layers (Bolliger et al., 2019; Glazer et al., 2013; Palloff & Pratt, 2007; Shepherd & Bolliger, 2019). Jason et al. (2015) referred to these layers as micro and macro elements of community. In these instances, a sense of community was associated with individual, instructor, and institutional factors. Shepherd and Bolliger (2023a) further included a professional dimension that begins within programs but extends beyond degree attainment. The following sections describe these layers.

Higher education institutions invest considerable time and energy in making their campuses welcoming and inviting. Physical buildings and manicured grounds provide a sense of prestige (Nathan, 2005). Recreation facilities, updated housing, museums, and other amenities attract students. Yet, community often begins before students set foot on campus. Decades of academic tradition, collegiate sports, university colors, mascots, and logos brand institutions of higher education (Dennis et al., 2016). These resources provide a sense of affiliation and shared interest among individuals on and off campus. Indeed, many aspects of higher education promote community. Admission requirements formalize membership and instill a sense of belonging (Shepherd & Bolliger, 2023a; Tinto, 1999). Administrative policies and procedures articulate shared governance among students, faculty members, and professionals while encouraging continued and sustained interaction to promote learning among groups with similar interests and goals (Smith et al., 2017; Tinto, 2012; Wenger et al., 2002). These interactions over time foster trust, a sense of belonging and value, and interdependence, and they add to the shared history that influences the institution’s reputation and brand (Dennis et al., 2016). The role of the institution for online students follows a similar trajectory. Although physical grounds and buildings may be less proximate, student ties to a physical, storied location influence a sense of membership. Indeed, most online students live within an hour of campus (Seaman et al., 2018; Shepherd & Bolliger, 2023b; Xu & Jaggars, 2013) and are aware of its physical and social presence in the surrounding community. Existing policies and procedures also provide a guide for community entrance, interaction, and goal attainment among online students (Shepherd & Bolliger, 2023a; Wenger et al., 2002).

Institutions play a large role in helping students enter and feel that they belong in learning communities (Lee & Choi, 2011; Tinto, 1999). Academic and social programs help students enter and maintain success in the community. Academic supports such as writing centers, libraries, and career and counseling centers provide needed services for diverse students (Kang & Pak, 2023; Milman et al., 2015; Trespalacios et al., 2023). So, too, do the myriad student groups, clubs, and activities that help students locate others with similar interests and aid the transition to university life (Exter et al., 2009; Nathan, 2005; Shepherd & Bolliger, 2019; Tinto, 1999). These resources promote a sense of belonging and safety. They also promote trust that individual goals will be attainable at the institution.

One focus of higher education is to help students narrow or refine their learning interests to those that match degree programs. Although university services focus on the broader community, program areas support more specific academic and professional goals. Within programs, learners find course sequences and action plans designed to help them enter or progress in a desired profession. There, they interact with others who have similar goals and interests and devote their time and energy to extending learning, collaborating with others, and strengthening professional relationships. Program policies and procedures provide guidance about how to navigate the environment successfully and how to deepen involvement with the learning community (Tinto, 1999).

A portion of community building occurs in courses. Within courses, students become better acquainted with each other and their instructors. They also gain additional knowledge regarding program and professional interests. Group projects, discussions, instructor feedback, and other activities are often cited as community promoters (Milman et al., 2015; O’Shea et al., 2015; Rockinson-Szapkiw & Wendt, 2015; Shepherd & Bolliger, 2019, 2023b). Although courses are useful for community promotion, they may be insufficient to develop sustained communities, particularly ones that extend beyond graduation and help students enter a larger profession (Shepherd & Bolliger, 2023a, 2023b). Courses are limited in duration, ranging from a few weeks to a few months. These short durations may provide insufficient interaction to yield the perceptions of trust, safety, belonging, and interdependence needed for sustained community formation (Bellah et al., 1985; Liu et al., 2007; Smith et al., 2017). Course-based communities put the onus of development and maintenance on individual faculty members. This onus is rarely supported in institutions of higher education and may not be sustainable (Bolliger et al., 2019; Fong et al., 2016; Larson & James, 2022). Additional services are required to sustain interaction, develop shared goals and histories, bolster a sense of belonging, and promote interdependence (Jason et al., 2015; Lee & Choi, 2011; Milman et al., 2015; Shepherd & Bolliger, 2019, 2023b).

Like the institutional level, program support consists of academic and social endeavors. Academic endeavors provide the background knowledge students need to enter the learning community more fully, extend opportunities to collaborate and grow the knowledge base over time, and facilitate entrance or access to professional communities (Aldosemani et al., 2016; Wenger et al., 2002). These services (e.g., individual and group advising, research participation, reading groups, service learning opportunities, intern- and externships) extend interactions among students, faculty, and others over time; are not limited to course enrollments; and may broaden community foci (Shepherd & Bolliger, 2023b). Thus, programs represent a microcommunity within higher education institutions as well as within related professions.

Yet, social activities are also important for community formation and maintenance (Shepherd & Bolliger, 2019, 2023b). Social events promote shared experiences in non-threatening environments. These experiences provide easy access to faculty and other professionals who may otherwise seem beyond reach to online students (Exter et al., 2009). Additionally, social events need not be limited to online activities. Whereas some research suggests that reminders of on-campus activities further alienate distance students by highlighting the comparatively limited services available to them (O’Shea et al., 2015), other studies suggest that campus-specific social events are helpful for community formation because most online students live close to campus (Shepherd & Bolliger, 2023b).

Regardless of the services provided for community facilitation and maintenance, students play an integral role in community formation. Most online students are nontraditional learners (Kang & Pak, 2023; Seaman et al., 2018). They are more likely to be older, have dependent care responsibilities, and have years of experience in an occupation (Milman et al., 2015; Seaman et al., 2018). These students bring myriad support communities with them. While the majority indicate they want a sense of community within their degree program, a smaller number state they have no desire for student and faculty interaction or the endeavors that promote it (Exter et al., 2009; O’Shea et al., 2015; Shepherd & Bolliger, 2023b). Indeed, when faculty members were surveyed about the importance of community in online degree programs, several indicated that limited student attendance made them second-guess their efforts (Bolliger et al., 2019). Expressed interest in community formation may not translate into actual participation. Community partners may need to better educate students regarding the purposes behind event creation and the role of community during and after graduation (Shepherd & Bolliger, 2023b). Additionally, it is likely that time in the program influences the desire for specific institutional and program activities. Those entering the program may desire social events as opportunities to get to know faculty members, community partners, and colleagues (Baker & Pifer, 2011; Pifer & Baker, 2016). More established students may desire opportunities to co-construct knowledge and network with the larger profession. Strengthening professional networks and communities may be a larger focus of those nearing graduation. Yet, little research has examined the temporal aspects of community formation (Smith et al., 2017).

The purposes of this study were to develop the Sense of Online Community Scale (SOCS), based on the framework developed by Shepherd and Bolliger (2023a), who focused on academic and social activities offered on the program and institutional levels, and to establish the instrument’s reliability and construct validity. More specifically, the objectives of the study were to (a) develop an instrument to measure students’ sense of community in online academic programs, (b) validate the instrument by verifying its factor structure, and (c) examine the relationships of the latent variables to time in an online program.

The instrument was developed in two phases. First, the researchers drafted items in several categories, and a panel of experts reviewed the first draft. Second, the instrument was validated through a confirmatory factor analysis.

Scale items were developed after an extensive literature search. Thirty items rated on a 5-point Likert-type scale (1 = strongly disagree, 2 = disagree, 3 = neither agree nor disagree, 4 = agree, and 5 = strongly agree) were developed for all factors. A “not applicable” response category was also included for all items. To ensure face validity, a panel of four experts reviewed the instrument prior to data collection. These individuals had at least six years of online teaching experience in higher education and were active researchers in online learning environments. All experts had terminal degrees; three faculty members held the rank of associate professor, and one person held the rank of professor emerita.

Panel members received instructions and the instrument via email. They were informed about the aim of the instrument and were instructed to review all items and categories for clarity and fit, modify items, and add or delete statements. Based on the reviewers’ feedback, several items were revised, two items were deleted, and items that addressed connectedness, trust, and affiliations were grouped into categories. The final version of the instrument included 28 Likert-type statements, one open-ended question, seven questions pertaining to participants’ academic programs, and three demographic questions. Categories included the importance of program community, affiliation, and program and institutional elements. Program and institutional elements were divided into two subcategories: academic and social elements.

After approvals from all relevant Institutional Review Boards were obtained, data were collected from undergraduate and graduate students at one midsize, urban university located in the southern United States during the fall 2021 and spring 2022 semesters. All students in online degree programs were invited to participate in the study via a mailing list. The invitation included the purpose of the study, its voluntary nature, and the benefits and limitations of participation. A link to an anonymous online survey was embedded in the email invitation, and completers were informed that they could enter a drawing for one of ten $10 gift cards by providing their names and email addresses in a Google form. To increase the response rate, three reminder and “thank you” emails were sent (Dillman et al., 2014).

A total of 319 students responded to the survey. Twenty-six cases with one third or more of their data missing were deleted, leaving 293 valid cases (a 14.4% response rate). The dataset included 16 outliers (|z| ≥ 3.0); these cases were retained when testing the initial fit of the model but deleted for the majority of analyses (N = 277). Frequencies, mean scores, and standard deviations were generated.
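The two screening steps described above (deleting cases with one third or more missing data, then flagging outliers) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' procedure: it assumes that "not applicable" and skipped answers are both coded as missing (None), computes z-scores item by item from each item's observed values using the sample standard deviation, and flags a case if any of its item z-scores has an absolute value of 3.0 or more. The function names and the toy data are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Optional

Case = List[Optional[float]]  # one respondent's item scores; None = missing or "not applicable"


def drop_incomplete(cases: List[Case], max_missing_share: float = 1 / 3) -> List[Case]:
    """Keep only cases missing less than max_missing_share of their items."""
    kept = []
    for case in cases:
        missing = sum(1 for value in case if value is None)
        if missing / len(case) < max_missing_share:
            kept.append(case)
    return kept


def flag_outliers(cases: List[Case], cutoff: float = 3.0) -> List[int]:
    """Return indices of cases with at least one item |z| >= cutoff."""
    flagged = set()
    for item in range(len(cases[0])):
        observed = [(i, case[item]) for i, case in enumerate(cases) if case[item] is not None]
        values = [value for _, value in observed]
        if len(values) < 2:
            continue  # a standard deviation needs at least two observations
        item_mean, item_sd = mean(values), stdev(values)
        if item_sd == 0:
            continue  # constant item: z-scores are undefined
        for i, value in observed:
            if abs((value - item_mean) / item_sd) >= cutoff:
                flagged.add(i)
    return sorted(flagged)


# Hypothetical data: 19 typical respondents plus one extreme answer on item 1.
cases = [[4, 3 + k % 3] for k in range(19)] + [[1, 4]]
screened = drop_incomplete(cases)
outliers = flag_outliers(screened)
analysis_set = [case for i, case in enumerate(screened) if i not in outliers]
print(outliers, len(analysis_set))
```

On this toy data the last case has a z-score of about −4.2 on item 1, so the script prints `[19] 19`. Note that with very small samples no case can reach |z| ≥ 3 (the maximum possible |z| with n cases is (n − 1)/√n), so the cutoff is only meaningful for samples of moderate size.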

Data were screened before analysis to confirm that parametric and multivariate assumptions were met. Bivariate correlations were examined to check for multicollinearity and to assess whether relationships among the subscales matched a priori expectations. There were no issues regarding multicollinearity, and correlations supported a priori expectations. All bivariate correlations between the subscales Program Community (items 1–6), Academic Program Activities (items 7–12), Social Program Activities (items 13–16), Academic Institutional Activities (items 17–22), Social Institutional Activities (items 23–26), and Institutional Affiliation (items 27 and 28) were significant, providing initial support for the validation model.

Table 1 reports means and standard deviations computed from the data with outliers removed. All item means exceeded 3.5 on the 5-point scale (1 = strongly disagree, 2 = disagree, 3 = neither agree nor disagree, 4 = agree, and 5 = strongly agree) except for item 13, in-person social activities within the program (M = 3.49). Within the Program Community subscale, the item with the highest mean was item 5 (M = 4.42, SD = 0.68), which pertained to the feeling of belonging to the academic program. Completing program milestones (item 8) was the highest rated item in the Academic Program Activities subscale (M = 4.21, SD = 0.85). Item 14, virtual or remote social activities, was the highest rated item in the Social Program Activities subscale (M = 3.81, SD = 1.02). Academic support services provided by the institution (item 21) had the highest mean in the Academic Institutional Activities subscale (M = 4.14, SD = 0.86). Institution-wide initiatives regarding equity and inclusion (item 23) had the highest mean in the Social Institutional Activities subscale (M = 3.87, SD = 0.93). With regard to Institutional Affiliation, students agreed most strongly with having a strong sense of affiliation with their current university (item 28; M = 3.76, SD = 1.06). Subscale means ranged from 3.69 to 4.11: Program Community had the highest mean (M = 4.11, SD = 0.61), whereas Social Program Activities had the lowest (M = 3.69, SD = 0.88).

Internal consistency reliability coefficients (Cronbach’s alpha) were adequate for all subscales: (a) Program Community, α = .80; (b) Academic Program Activities, α = .80; (c) Social Program Activities, α = .89; (d) Academic Institutional Activities, α = .84; (e) Social Institutional Activities, α = .87; and (f) Institutional Affiliation, α = .88. According to Kline (2016) and Nunnally and Bernstein (1994), reliability coefficients of .80 or greater are considered adequate. The correlation coefficients among the six factors ranged from .37 to .74.
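For readers less familiar with the statistic, Cronbach’s alpha for a subscale with k items is α = [k / (k − 1)] × (1 − Σ s²ᵢ / s²ₜ), where s²ᵢ is the variance of item i and s²ₜ is the variance of respondents’ summed scores. The Python sketch below implements this textbook formula for complete cases only; it is an illustration, not the software the authors used, and the toy data are hypothetical.

```python
from statistics import variance
from typing import List, Sequence


def cronbach_alpha(rows: List[Sequence[float]]) -> float:
    """Cronbach's alpha for complete cases; each row holds one respondent's k item scores."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variances are used throughout; the n - 1 denominators cancel in the ratio.
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 3-respondent, 2-item examples.
print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))  # items agree perfectly
print(cronbach_alpha([[1, 2], [2, 1], [3, 3]]))  # items agree only partly
```

The first call returns 1.0 because the two items are identical; the second returns about 0.67 because the items only partly covary. Real SOCS data would also need a decision about "not applicable" responses (e.g., listwise deletion) before the formula applies.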

Table 1

Descriptive Information for Survey Items and Subscales (N = 277)

| Category and item | M | SD |
|---|---|---|
| Program community (Cronbach’s α = .80) | | |
| 1. Having a sense of community in my program (e.g., a sense of belonging, interconnection, trust) is important to me | 3.96 | 1.03 |
| The following are important to develop a sense of program community: | | |
| 2. Trusting others in my program | 4.23 | 0.72 |
| 3. Having similar interests with others in my program | 3.95 | 0.90 |
| 4. Having similar experiences with others in my program | 3.70 | 0.99 |
| 5. Feeling that I belong in my program | 4.42 | 0.68 |
| 6. Feeling that I am affiliated with my program | 4.37 | 0.76 |
| Descriptives for program community category | 4.11 | 0.61 |
| Academic program activities (Cronbach’s α = .80) | | |
| The following elements help me feel like I am part of a program community: | | |
| 7. Program advising activities (e.g., orientations, retreats, guidance regarding course selection) | 4.02 | 0.98 |
| 8. Completion of program milestones (e.g., portfolios, exams, defenses) | 4.21 | 0.85 |
| 9. Opportunities to participate in faculty research (e.g., research groups, presentations, publications) | 3.82 | 0.93 |
| 10. Opportunities to attend academic program events outside of courses (e.g., guest lectures, internships, field trips, professional meetings) | 3.97 | 0.95 |
| 11. Professional resource sharing with others in my program (e.g., job postings, conference announcements, calls for proposal, professional services) | 4.14 | 0.84 |
| 12. Taking required courses that include students from outside the program (e.g., statistics, writing) | 3.61 | 1.04 |
| Descriptives for academic program activities category | 3.96 | 0.66 |
| Social program activities (Cronbach’s α = .89) | | |
| The following elements help me feel like I am part of a program community: | | |
| 13. In-person social activities within my program (e.g., picnics, parties, get-togethers) | 3.49 | 1.08 |
| 14. Virtual or remote social activities within my program (e.g., social media posts, online games, chat rooms) | 3.81 | 1.02 |
| 15. Student-initiated social activities within my program | 3.67 | 1.02 |
| 16. Professor-initiated social activities within my program | 3.80 | 0.94 |
| Descriptives for social program activities category | 3.69 | 0.88 |
| Academic institutional activities (Cronbach’s α = .84) | | |
| The following institutional elements help me feel like I am part of a program community: | | |
| 17. Academic events for multiple programs (e.g., capstone meetings, retreats, guest speakers, research days/symposia) | 3.82 | 0.90 |
| 18. Student organizations associated with my program | 3.86 | 0.88 |
| 19. Institutional wellness supports (e.g., personal counseling, health centers, fitness centers) | 3.84 | 0.97 |
| 20. Institutional career services (e.g., career counseling, interview support, résumé building) | 4.11 | 0.85 |
| 21. Institutional academic supports (e.g., writing centers, tutoring, library and research services) | 4.14 | 0.86 |
| 22. Voluntary interest groups (e.g., social media groups, study or research groups) | 3.78 | 0.93 |
| Descriptives for academic institutional activities category | 3.92 | 0.67 |
| Social institutional activities (Cronbach’s α = .87) | | |
| The following institutional elements help me feel like I am part of a program community: | | |
| 23. Institution-wide initiatives regarding equity and inclusion | 3.87 | 0.93 |
| 24. In-person or remote institution-wide sporting events (e.g., football, basketball, soccer) | 3.65 | 1.06 |
| 25. In-person or remote institution-wide fine and performing arts events (e.g., plays, concerts, ballets, art galleries) | 3.71 | 1.03 |
| 26. In-person or remote institution-wide celebrations (e.g., homecoming, graduation, Veteran’s Day celebrations) | 3.79 | 0.95 |
| Descriptives for social institutional activities category | 3.76 | 0.84 |
| Institutional affiliation (Cronbach’s α = .88) | | |
| 27. I have a strong affiliation (e.g., sense of membership) with my current online program | 3.74 | 1.05 |
| 28. I have a strong sense of affiliation with my current university | 3.76 | 1.06 |
| Descriptives for institutional affiliation category | 3.75 | 0.99 |

Note. Scale items ranged from 1 (strongly disagree) to 5 (strongly agree).
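As a consistency check on Table 1, the sketch below averages the reported item means within each subscale and lists items whose means do not exceed 3.5. It assumes that a subscale score is the unweighted mean of its items; because item means are rounded to two decimals and respondents could mark items "not applicable," the recomputed values should agree with the reported subscale means only to within about 0.01.

```python
from statistics import mean

# Item means transcribed from Table 1, keyed by item number.
ITEM_MEANS = {
    1: 3.96, 2: 4.23, 3: 3.95, 4: 3.70, 5: 4.42, 6: 4.37,
    7: 4.02, 8: 4.21, 9: 3.82, 10: 3.97, 11: 4.14, 12: 3.61,
    13: 3.49, 14: 3.81, 15: 3.67, 16: 3.80,
    17: 3.82, 18: 3.86, 19: 3.84, 20: 4.11, 21: 4.14, 22: 3.78,
    23: 3.87, 24: 3.65, 25: 3.71, 26: 3.79,
    27: 3.74, 28: 3.76,
}

# Subscale membership and the subscale means reported in Table 1.
SUBSCALES = {
    "Program community": (range(1, 7), 4.11),
    "Academic program activities": (range(7, 13), 3.96),
    "Social program activities": (range(13, 17), 3.69),
    "Academic institutional activities": (range(17, 23), 3.92),
    "Social institutional activities": (range(23, 27), 3.76),
    "Institutional affiliation": (range(27, 29), 3.75),
}

# Unweighted mean of item means per subscale (an assumption about how scores were formed).
recomputed = {name: mean(ITEM_MEANS[i] for i in items) for name, (items, _) in SUBSCALES.items()}
low_items = [i for i, m in ITEM_MEANS.items() if m <= 3.5]

for name, (_, reported) in SUBSCALES.items():
    print(f"{name}: recomputed {recomputed[name]:.3f}, reported {reported:.2f}")
print("Items with means of 3.5 or below:", low_items)
```

All six recomputed means fall within 0.005 of the reported values, and item 13 is the only item whose mean does not exceed 3.5, matching the text.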

A confirmatory factor analysis of the hypothesized model was conducted in Mplus (Muthén & Muthén, 2010). Model fit and parameter estimates are presented below. The model represents the conceptual framework presented earlier in the paper and includes six subscales (Program Community, Academic Program Activities, Social Program Activities, Academic Institutional Activities, Social Institutional Activities, and Institutional Affiliation). Program Community, Academic Program Activities, and Academic Institutional Activities comprised six items each; Social Program Activities and Social Institutional Activities each contained four items; and Institutional Affiliation had two items. Academic Program Activities and Social Program Activities served as indicators of an overarching construct called Program Elements, and Academic Institutional Activities and Social Institutional Activities served as indicators of an overarching construct called Institutional Elements.
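The measurement structure described above can be written down compactly. The sketch below records which items load on which first-order factor and which first-order factors load on the two overarching constructs, checks that every one of the 28 items is assigned exactly once, and emits the loadings in lavaan’s `=~` model syntax for readers who work in R. The short factor names and the item labels i1 to i28 are hypothetical; the study itself used Mplus, and the sketch specifies only the loadings named in the text, leaving covariances among factors unspecified.

```python
from typing import Dict, List

# Hypothetical short names; items are labeled i1..i28 by their SOCS item numbers.
FIRST_ORDER: Dict[str, range] = {
    "ProgComm": range(1, 7),    # Program Community
    "AcadProg": range(7, 13),   # Academic Program Activities
    "SocProg": range(13, 17),   # Social Program Activities
    "AcadInst": range(17, 23),  # Academic Institutional Activities
    "SocInst": range(23, 27),   # Social Institutional Activities
    "InstAff": range(27, 29),   # Institutional Affiliation
}
SECOND_ORDER: Dict[str, List[str]] = {
    "ProgElem": ["AcadProg", "SocProg"],  # Program Elements
    "InstElem": ["AcadInst", "SocInst"],  # Institutional Elements
}


def check_structure(first: Dict[str, range], second: Dict[str, List[str]], n_items: int = 28) -> None:
    """Raise ValueError unless every item loads on exactly one first-order factor."""
    assigned = [i for items in first.values() for i in items]
    if sorted(assigned) != list(range(1, n_items + 1)):
        raise ValueError("each item must load on exactly one first-order factor")
    used = [f for factors in second.values() for f in factors]
    if len(used) != len(set(used)) or not set(used) <= set(first):
        raise ValueError("second-order constructs must use distinct, defined first-order factors")


def to_lavaan(first: Dict[str, range], second: Dict[str, List[str]]) -> str:
    """Render the loadings as lavaan measurement lines (factor =~ indicators)."""
    lines = [f"{name} =~ " + " + ".join(f"i{i}" for i in items) for name, items in first.items()]
    lines += [f"{name} =~ " + " + ".join(factors) for name, factors in second.items()]
    return "\n".join(lines)


check_structure(FIRST_ORDER, SECOND_ORDER)
print(to_lavaan(FIRST_ORDER, SECOND_ORDER))
```

Keeping the item-to-factor map in one place makes it easy to confirm the 6-6-4-6-4-2 item counts stated above before any model is estimated.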

We evaluated model fit with several indices: the Comparative Fit Index (CFI; values above 0.95 indicate very good fit, and those at or above 0.90 indicate reasonable fit; Bentler, 1990), Steiger’s Root Mean Square Error of Approximation (RMSEA; values below 0.05 indicate very good fit, and those at or below 0.10 indicate reasonable fit; Steiger, 1990), and the Standardized Root Mean Square Residual (SRMR; values close to .08 or below indicate good fit; Hu & Bentler, 1999). For the model with outliers, the RMSEA and SRMR indicated acceptable fit, whereas the CFI fell slightly below the 0.90 benchmark (χ² = 810.93, df = 342; CFI = 0.88; RMSEA = 0.08; SRMR = 0.07).

We also ran the model with outliers removed, defining outliers as responses with an absolute z-score of 3.0 or greater. Fit estimates for this validation model followed the same pattern (χ² = 812.78, df = 340; CFI = 0.86; RMSEA = 0.08; SRMR = 0.07).
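To make the stated cutoffs concrete, the minimal sketch below encodes the CFI and RMSEA bands given in the text and applies them to the reported values for both models. SRMR is deliberately omitted, the bands are rules of thumb rather than formal tests, and the function names are illustrative only.

```python
def classify_cfi(cfi: float) -> str:
    """CFI bands as stated in the text (Bentler, 1990)."""
    if cfi > 0.95:
        return "very good"
    if cfi >= 0.90:
        return "reasonable"
    return "below the reasonable-fit threshold"


def classify_rmsea(rmsea: float) -> str:
    """RMSEA bands as stated in the text (Steiger, 1990)."""
    if rmsea < 0.05:
        return "very good"
    if rmsea <= 0.10:
        return "reasonable"
    return "above the reasonable-fit threshold"


# Fit values reported in the text for the two models.
REPORTED = {
    "with outliers": {"CFI": 0.88, "RMSEA": 0.08},
    "outliers removed": {"CFI": 0.86, "RMSEA": 0.08},
}

for model, fit in REPORTED.items():
    print(f"{model}: CFI {classify_cfi(fit['CFI'])}; RMSEA {classify_rmsea(fit['RMSEA'])}")
```

For both models, RMSEA falls in the reasonable band, while CFI sits just under 0.90; this is why overall fit is best described as broadly acceptable rather than uniformly good.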

Because both models exhibited broadly acceptable fit to the data, we present the model with outliers removed (Figure 1). We then interpreted the estimated relationships among the model variables. Estimated path coefficients were largely consistent with hypothesized linkages (all coefficients reported are standardized values and significant at p < .05). The strongest indicator of Program Community was item 6 (0.89), “Feeling that I am affiliated with my program,” followed by item 5 (0.838), “Feeling that I belong in my program.” For Academic Program Activities, the strongest indicator was item 10 (0.802), “Opportunities to attend academic program events outside of courses.” Item 15 (0.907), “Student-initiated social activities within my program,” was the strongest indicator of Social Program Activities. Item 22 (0.774), “Voluntary interest groups,” was the strongest indicator of Academic Institutional Activities, and item 24 (0.867), “In-person or remote institution-wide sporting events,” was the strongest indicator of Social Institutional Activities. Item 28 (0.888), “I have a strong sense of affiliation with my current university,” was the strongest indicator of Institutional Affiliation. Academic Program Activities loaded more strongly on Program Elements (0.920) than did Social Program Activities, and Academic Institutional Activities loaded very strongly on Institutional Elements (0.999). The correlation coefficients among the six factors ranged from 0.37 to 0.74 (Table 2), suggesting shared variance among the factors.

Figure 1

Confirmatory Factor Analysis Model

Table 2

Bivariate Correlation Coefficients Among the Six Factors

| Factor | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1. Program community | | | | | |
| 2. Academic program activities | 0.59 | | | | |
| 3. Social program activities | 0.55 | 0.65 | | | |
| 4. Academic institutional activities | 0.52 | 0.66 | 0.60 | | |
| 5. Social institutional activities | 0.46 | 0.57 | 0.54 | 0.74 | |
| 6. Institutional affiliation | 0.44 | 0.43 | 0.37 | 0.37 | 0.38 |
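Squaring a correlation gives the proportion of variance two factors share, which makes the shared-variance reading of Table 2 concrete. The sketch below transcribes the table and reports the weakest and strongest pairs; it is a descriptive illustration only and adds no new analysis.

```python
FACTORS = [
    "Program community",
    "Academic program activities",
    "Social program activities",
    "Academic institutional activities",
    "Social institutional activities",
    "Institutional affiliation",
]

# Lower triangle of Table 2: LOWER[i][j] is the correlation between factors i and j (j < i).
LOWER = [
    [],
    [0.59],
    [0.55, 0.65],
    [0.52, 0.66, 0.60],
    [0.46, 0.57, 0.54, 0.74],
    [0.44, 0.43, 0.37, 0.37, 0.38],
]

pairs = [(FACTORS[i], FACTORS[j], r) for i, row in enumerate(LOWER) for j, r in enumerate(row)]
weakest = min(pairs, key=lambda p: p[2])
strongest = max(pairs, key=lambda p: p[2])

for a, b, r in (weakest, strongest):
    # r squared is the proportion of variance the two factors share.
    print(f"{a} / {b}: r = {r:.2f}, shared variance = {r * r:.1%}")
```

The 15 factor pairs share between roughly 14% (r = 0.37) and 55% (r = 0.74, the two institutional activity factors) of their variance.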

To examine the relationships of the latent variables to time in an online program, a regression analysis was performed. We created two constructs, (a) Academic Activities and (b) Social Activities, each combining the program and institutional levels. Results suggested that time in an online program was not a statistically significant predictor of the latent constructs in the validation model (standardized coefficient = 0.113). However, because most students reported being in the first or second year of their program (M = 1.63, SD = 0.84), there was little variability in the sample regarding time in program. Correlations between time in program and each construct (Program Community, Academic Activities, and Social Activities) were also examined; all were small and nonsignificant (Program Community, r = 0.063; Academic Activities, r = 0.114; Social Activities, r = 0.032).
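The nonsignificant correlations can be checked with the standard t test for a Pearson correlation, t = r√(n − 2) / √(1 − r²). This is a simpler technique than the latent-variable regression reported above, and the sketch assumes n = 277 (the sample after outlier removal) because the text does not state the sample size for this analysis. It compares |t| with 1.96; for roughly 275 degrees of freedom the exact two-tailed critical value is slightly larger, so any |t| below 1.96 is also nonsignificant under the t distribution.

```python
import math


def correlation_t(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


# Correlations with time in program reported in the text; N = 277 is an assumption.
REPORTED_R = {"Program Community": 0.063, "Academic Activities": 0.114, "Social Activities": 0.032}
N = 277

for construct, r in REPORTED_R.items():
    t = correlation_t(r, N)
    # Large-sample approximation: |t| < 1.96 means p > .05 (two-tailed).
    print(f"{construct}: r = {r:.3f}, t({N - 2}) = {t:.2f}, significant: {abs(t) >= 1.96}")
```

The largest statistic (Academic Activities) is about 1.90; using n = 293 instead raises it only to just under 1.96, so the conclusion does not depend on which sample size applies.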

Readers should be aware of several limitations in this study. First, only one institution was selected to participate, and the selection process was not random. The responses of participants who completed the survey may differ systematically from those of students who did not respond to the invitation to participate. Second, because only one large, research-intensive, public institution in the southeast United States participated, the study is geographically limited. Future researchers may include a variety of sites selected by criteria such as location, type, and size of institution. Last, because all data were self-reported, social desirability may have influenced some responses.

Overall, participants agreed that having a sense of program community was important to them (Program Community subscale M = 4.11). Feelings of affiliation and belonging received the highest mean scores within this subscale (M = 4.37 and 4.42, respectively) and were its strongest indicators. This finding is unsurprising, as these factors (along with trust) are commonly included as components of community formation (Glazer et al., 2013; McMillan & Chavis, 1986; Rovai, 2001; Shepherd & Bolliger, 2023a). Mean scores for the Program Community subscale surpassed those of the Institutional Affiliation subscale (M = 3.75). This, too, is expected, because programs are more tailored to the personal and professional interests of their students than institutions are (Tinto, 1999, 2012). It is surprising, however, that participants did not rate institutional affiliation more highly, given their status as first- and second-year students. Several researchers claim that institutions play a large role in helping students enter and feel welcomed in a new learning community (Baker & Pifer, 2011; Lee & Choi, 2011; Pifer & Baker, 2016; Tinto, 1999, 2012). As students enter unfamiliar social systems, institutional supports can help them acclimate to the new environment. Yet participants appeared to place less value on overall affiliation with the institution. This lower interest in institutional affiliation may reflect the nontraditional nature of participants. They may have had existing support groups and been able to remain in familiar living environments as they progressed through their degree programs. Additionally, they were more likely to have specific personal and professional goals associated with degree attainment, reducing the need for brand affiliation (Dennis et al., 2016). Regardless, program community is desired by most online students and is supported by institutional and program activities.

Across institutional and program events, participants rated academic activities higher than social activities for helping them feel like part of a program community. This finding is not surprising given the goal of degree attainment and the nontraditional makeup of most online students, who often juggle family, professional, and personal responsibilities alongside their education (Milman et al., 2015; Stephen et al., 2020). Notably, although students rated completion of program milestones (M = 4.21), professional resource sharing (M = 4.14), and program advising activities (M = 4.02) higher than opportunities to attend academic program events outside of courses (M = 3.97), the latter item was the most predictive of the Academic Program Activities subscale. Similarly, although five of the six items in the Academic Institutional Activities subscale had higher mean scores than voluntary interest groups, the latter was the best predictor. Although all of these endeavors are useful for community formation and student persistence (Lee & Choi, 2011; Milman et al., 2015; Trespalacios et al., 2023), voluntary interest groups and extracurricular events may better capture student initiative to participate in community events, because advising sessions, orientations, writing centers, and possibly research participation may be expected or mandated. Alternatively, activities such as orientations, advising, program milestones, and resource sharing may be expected norms in online programs and therefore less indicative of program community efforts.

Note, however, that the focus of this instrument was to move online community-building conversations beyond course activities. Thus, items regarding the role of courses in program community development were minimized. Although extracurricular events are the most predictive of Academic Program Activities, this does not mean that course activities are not useful for community formation. Ample research suggests that course endeavors play a major role in community formation (O’Shea et al., 2015; Rockinson-Szapkiw & Wendt, 2015; Rovai, 2001; Speiser et al., 2022). This instrument focuses on the additional activities that contribute to community formation.

Similarly, although institutional and program social activities were rated lower than academic activities, participants still rated them as moderately useful for the formation of program community (M = 3.76 and 3.69, respectively). Interestingly, student-initiated activities emerged as the best predictor of the Social Program Activities subscale, even though professor-initiated social activities and virtual-remote social activities received higher mean scores. Again, the best predictive item may have captured student willingness and initiative to promote community. Additionally, although research suggests that most online students live within 50 miles of their institution (Seaman et al., 2018; Xu & Jaggars, 2013), participants rated in-person social activities lowest of all items in the Social Program Activities subscale. This finding aligns with those of O’Shea et al. (2015), who reported that campus activities isolated some distance-based students by reminding them of opportunities unavailable to them. Nevertheless, the best predictor of the Institutional Social Activities subscale was in-person or remote institution-wide sporting events, even though this item had the smallest mean score on the subscale (behind institution-wide celebrations, equity and inclusion initiatives, and fine and performing arts events).

This finding regarding sporting events may reflect the desire of some students to align their educational interests and pursuits with the perceived prestige and branding of the institution (Dennis et al., 2016; Nathan, 2005). Students may want to tie their degree to an established and storied institution, believing that it provides greater value than a degree from an online-only institution. More research is needed to test this interpretation. Our study took place among online degree programs at a traditional brick-and-mortar institution. Students self-selected to attend this institution, and their views may not represent those of students who enroll in fully online institutions. Moreover, the institution competes in the top tier of collegiate athletics; at fully online institutions, university sports may not be a consideration. Yet the predictive strength of institution-wide sporting events raises other questions. The United States has a long history of broadcasting collegiate sporting events to viewers across the nation via radio, television, and the Internet. Indeed, the means of providing these social activities at a distance predate most distance education programs. The same may not be said of other social activities: performing arts events, gallery exhibitions, student association events, and similar endeavors do not receive comparable broadcast coverage. Thus, sporting events may best predict Institutional Social Activities because they are the only social events consistently provided at a distance. Additional research is needed at fully online institutions, as well as at institutions without collegiate sporting programs, to determine how perceptions of institutional social activities compare at those locations.

Pifer and Baker (2016) suggested that time is a factor that affects learner perceptions of community. As students become more familiar with norms and values, and as they progress in their academic programs by completing coursework and milestones, their desire for academic and social opportunities to connect with others in their program may change (Baker & Pifer, 2011; Pifer & Baker, 2016). However, in this study, learners’ time in program was not a statistically significant predictor. Because most participants were in their first or second year of studies, the dataset offered limited variation in time in program. Future research should use samples that are more representative in terms of time in program and that include both undergraduate and graduate students.

Evidence from the validation model in this study supports inferences drawn from SOCS scores. Results show that the instrument has good reliability and a sound factor structure that supports the theoretical framework developed by Shepherd and Bolliger (2023a). Correlations among most of the subscales were moderate or low. However, a strong correlation was found between two subscales, Academic Institutional Activities and Social Institutional Activities (r = 0.74), so researchers should exercise caution when using these subscales individually. We therefore recommend administering the entire scale.

In conclusion, the SOCS is a reliable and valid instrument that other researchers may use to investigate community in online environments at the program and institutional levels. Practitioners and administrators may use the instrument to design academic and social activities and to provide resources that help online learners form and sustain community.

We would like to express our gratitude to the following individuals who volunteered their time and expertise to the review of the instrument: Michael Barbour, Touro University; Jered Borup, George Mason University; Fethi Inan, Texas Tech University; and Suzanne Young, University of Wyoming. The thoughtful comments and suggestions of these individuals improved the final version of the instrument.

Aldosemani, T. I., Shepherd, C. E., Gashim, I., & Dousay, T. (2016). Developing third places to foster sense of community in online instruction. British Journal of Educational Technology, 47(6), 1020-1031. https://doi.org/10.1111/bjet.12315

Baker, V. L., & Pifer, M. J. (2011). The role of relationships in the transition from doctoral student to independent scholar. Studies in Continuing Education, 33(1), 5-17. https://doi.org/10.1080/0158037X.2010.515569

Bellah, R. N., Madsen, R., Sullivan, W. M., Swidler, A., & Tipton, S. M. (1985). Habits of the heart: Individualism and commitment in American life. Harper & Row.

Bentler, P. M. (1990). Comparative fit indexes in structural models. Psychological Bulletin, 107(2), 238-246. https://doi.org/10.1037/0033-2909.107.2.238

Bolliger, D. U., Shepherd, C. E., & Bryant, H. V. (2019). Faculty members’ perceptions of online program community and their efforts to sustain it. British Journal of Educational Technology, 50(6), 3283-3299. https://doi.org/10.1111/bjet.12734

Dennis, C., Papagiannidis, S., Alamanos, E., & Bourlakis, M. (2016). The role of brand attachment strength in higher education. Journal of Business Research, 69(8), 3049-3057. https://doi.org/10.1016/j.jbusres.2016.01.020

Dillman, D. A., Smyth, J. D., & Christian, L. M. (2014). Internet, phone, mail, and mixed-mode surveys: The tailored design method (4th ed.). John Wiley & Sons.

Exter, M. E., Korkmaz, N., Harlin, N. M., & Bichelmeyer, B. A. (2009). Sense of community within a fully online program: Perspectives of graduate students. Quarterly Review of Distance Education, 10(2), 177-194. https://www.infoagepub.com/qrde-issue.html?i=p54c3c7d4c7e8b

Fong, B. L., Wang, M., White, K., & Tipton, R. (2016). Assessing and serving the workshop needs of graduate students. Journal of Academic Librarianship, 42(5), 569-580. https://doi.org/10.1016/j.acalib.2016.06.003

Glazer, H. R., Breslin, M., & Wanstreet, C. E. (2013). Online professional and academic learning communities: Faculty perspectives. Quarterly Review of Distance Education, 14(3), 123-130. https://www.infoagepub.com/qrde-issue.html?i=p54c3c36640621

Hill, J. L. (1996). Psychological sense of community: Suggestions for future research. Journal of Community Psychology, 24(4), 431-438. https://doi.org/10.1002/(SICI)1520-6629(199610)24:4<431::AID-JCOP10>3.0.CO;2-T

Hu, L.-t., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1-55. https://doi.org/10.1080/10705519909540118

Jason, L. A., Stevens, E., & Ram, D. (2015). Development of a three-factor psychological sense of community scale. Journal of Community Psychology, 43(8), 973-985. https://doi.org/10.1002/jcop.21726

Jiang, Y. W. (2016). Exploring the determinants of perceived irreplaceability in online community. Open Journal of Social Sciences, 4(2), 39-46. https://doi.org/10.4236/jss.2016.42008

Kang, H.-Y., & Pak, Y. (2023). Student engagement in online graduate program in education: A mixed-methods study. American Journal of Distance Education. Advance online publication. https://doi.org/10.1080/08923647.2023.2175560

Kline, R. B. (2016). Principles and practice of structural equation modeling (4th ed.). Guilford Press.

Larson, A., & James, T. (2022). A sense of belonging in Australian higher education: The significance of self-efficacy and the student-educator relationship. Journal of University Teaching and Learning Practice, 19(4), Article 05. https://ro.uow.edu.au/jutlp/vol19/iss4/05

Lee, Y., & Choi, J. (2011). A review of online course dropout research: Implications for practice and future research. Educational Technology Research and Development, 59(5), 593-618. https://doi.org/10.1007/s11423-010-9177-y

Liu, X., Magjuka, R. J., Bonk, C. J., & Lee, S.-H. (2007). Does sense of community matter? An examination of participants’ perceptions of building learning communities in online courses. Quarterly Review of Distance Education, 8(1), 9-24. https://www.infoagepub.com/qrde-issue.html?i=p54c3c9c5901c7

McMillan, D. W., & Chavis, D. M. (1986). Sense of community: A definition and theory. Journal of Community Psychology, 14(1), 6-23. https://doi.org/10.1002/1520-6629(198601)14:1<6::AID-JCOP2290140103>3.0.CO;2-I

Milman, N. B., Posey, L., Pintz, C., Wright, K., & Zhou, P. (2015). Online master’s students’ perceptions of institutional supports and resources: Initial survey results. Online Learning, 19(4), 45-66. https://doi.org/10.24059/olj.v19i4.549

Muthén, L. K., & Muthén, B. O. (2010). Mplus user’s guide (6th ed.). Muthén & Muthén. https://www.statmodel.com/download/usersguide/Mplus%20Users%20Guide%20v6.pdf

Nathan, R. (2005). My freshman year: What a professor learned by becoming a student. Cornell University Press.

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory (3rd ed.). McGraw-Hill.

O’Shea, S., Stone, C., & Delahunty, J. (2015). “I ‘feel’ like I am at university even though I am online.” Exploring how students narrate their engagement with higher education institutions in an online learning environment. Distance Education, 36(1), 41-58. https://doi.org/10.1080/01587919.2015.1019970

Palloff, R. M., & Pratt, K. (2007). Building online learning communities: Effective strategies for the virtual classroom. Jossey-Bass.

Pifer, M. J., & Baker, V. L. (2016). Stage-based challenges and strategies for support in doctoral education: A practical guide for students, faculty members, and program administrators. International Journal of Doctoral Studies, 11, 15-34. http://ijds.org/Volume11/IJDSv11p015-034Pifer2155.pdf

Rockinson-Szapkiw, A., & Wendt, J. (2015). Technologies that assist in online group work: A comparison of synchronous and asynchronous computer mediated communication technologies on students’ learning and community. Journal of Educational Media and Hypermedia, 24(3), 263-279. https://www.learntechlib.org/primary/p/147266/

Rovai, A. P. (2001). Building classroom community at a distance: A case study. Educational Technology Research and Development, 49(4), 33-48. https://doi.org/10.1007/BF02504946

Seaman, J. E., Allen, E., & Seaman, J. (2018). Grade increase: Tracking distance education in the United States. Babson Survey Research Group. https://www.bayviewanalytics.com/reports/gradeincrease.pdf

Shepherd, C. E., & Bolliger, D. U. (2019). Online graduate student perceptions of program community. Journal of Educators Online, 16(2). https://www.thejeo.com/archive/2019_16_2~2/shepherd__bollinger

Shepherd, C. E., & Bolliger, D. U. (2022). Extending online community beyond courses: Making the case for program community. In J. M. Spector (Ed.), Routledge encyclopedia of education (pp. 1-6). Routledge. https://doi.org/10.4324/9781138609877-REE154-1

Shepherd, C. E., & Bolliger, D. U. (2023a). Institutional, program, and professional community: A framework for online higher education. Educational Technology Research and Development, 71(3), 1233-1252. https://doi.org/10.1007/s11423-023-10214-3

Shepherd, C. E., & Bolliger, D. U. (2023b). Online university students’ perceptions of institution and program community and the activities that support them [Unpublished manuscript]. University of Memphis.

Smith, S. U., Hayes, S., & Shea, P. (2017). A critical review of Wenger’s Community of Practice (CoP) theoretical framework in online and blended learning research, 2000-2014. Online Learning, 21(1), 209-237. https://doi.org/10.24059/olj.v21i1.963

Speiser, R., Chen-Wu, H., & Lee, J. S. (2022). Developing an “inclusive learning tree”: Reflections on promoting a sense of community in remote instruction. Journal of Educators Online, 19(2). https://doi.org/10.9743/JEO.2022.19.2.11

Steiger, J. H. (1990). Structural model evaluation and modification: An interval estimation approach. Multivariate Behavioral Research, 25(2), 173-180. https://doi.org/10.1207/s15327906mbr2502_4

Stephen, J. S., Rockinson-Szapkiw, A. J., & Dubay, C. (2020). Persistence model of non-traditional online learners: Self-efficacy, self-regulation, and self-direction. American Journal of Distance Education, 34(4), 306-321. https://doi.org/10.1080/08923647.2020.1745619

Tinto, V. (1999). Leaving college: Rethinking the causes and cures of student attrition (2nd ed.). University of Chicago Press.

Tinto, V. (2012). Enhancing student success: Taking the classroom seriously. International Journal of the First Year in Higher Education, 3(1), 1-8. https://doi.org/10.5204/intjfyhe.v3i1.119

Trespalacios, J., Uribe-Flórez, L., Lowenthal, P. R., Lowe, S., & Jensen, S. (2023). Students’ perceptions of institutional services and online learning self-efficacy. American Journal of Distance Education, 37(1), 38-52. https://doi.org/10.1080/08923647.2021.1956836

Wellman, B. (1979). The community question: The intimate networks of East Yorkers. American Journal of Sociology, 84(5), 1201-1231. https://doi.org/10.1086/226906

Wenger, E., McDermott, R., & Snyder, W. M. (2002). Cultivating communities of practice: A guide to managing knowledge. Harvard Business Review Press.

Xu, D., & Jaggars, S. S. (2013). The impact of online learning on students’ course outcomes: Evidence from a large community and technical college system. Economics of Education Review, 37, 46-57. https://doi.org/10.1016/j.econedurev.2013.08.001

Development and Validation of the Sense of Online Community Scale by Craig E. Shepherd, Doris U. Bolliger, and Courtney McKim is licensed under a Creative Commons Attribution 4.0 International License.