Volume 25, Number 1

Hanane Sebbaq* and Nour-eddine El Faddouli

RIME Team, MASI Laboratory, E3S Research Center, Mohammadia School of Engineers (EMI), Mohammed V University in Rabat, Morocco; *Corresponding author

MOOCs (massive open online courses), because of their scale and accessibility, have become a major area of interest in contemporary education. However, despite their growing popularity, their quality remains a central concern, partly because there is no consensus on the criteria that define such quality. This study set out to fill this gap by carrying out a systematic review of the existing literature on MOOC quality and proposing a quality assurance framework at the micro level. The methodology consisted of a careful analysis of MOOC success factors using Biggs’ classification scheme, covering studies published from 2018 to 2022. The results highlighted the need to consider various indicators across presage, process, and product variables when designing and evaluating MOOCs. This implies paying particular attention to pedagogical quality, from both the learner’s and the teacher’s points of view. The resulting quality framework offers guidance to MOOC designers, learners, and researchers, giving them an in-depth understanding of the key elements that contribute to MOOC quality and supporting its continuous improvement. In addition, this study identified directions for future research, including large-scale automated evaluation of MOOCs. By focusing on pedagogical quality, MOOCs can play a vital role in providing meaningful learning experiences, maximizing learner satisfaction, and ensuring their success as innovative educational systems adapted to the changing needs of contemporary education.

Keywords: MOOC quality, quality assurance, pedagogical quality framework, MOOC success factors

The educational landscape has undergone rapid transformation, with a pronounced shift towards online learning models, seen most notably in the widespread use of massive open online courses (MOOCs) by universities. However, defining excellence in these diverse online courses poses a considerable challenge, impacting the design and quality of pedagogical content. The multifaceted nature of MOOCs demands a comprehensive assessment of their quality, in harmony with participants’ varied motivations, goal orientations, and behaviors (Littlejohn & Hood, 2018).

Recognizing the crucial role of quality in effective learning within online systems, this systematic literature review delved into the complex area of pedagogical quality in MOOCs. The lack of precise consensus on what constitutes quality in these courses highlights the complexities of the educational framework, prompting a closer look at its various dimensions (Chansanam et al., 2021).

This research sought to understand and evaluate the multifaceted dimensions of pedagogical quality in MOOCs, focusing specifically on the micro level of quality management. The main objective was to explore quality assurance as a fundamental approach to maintaining high standards in these constantly evolving online learning environments.

In the rapidly evolving online education landscape, a systematic review of this kind is timely. By examining pedagogical quality in MOOCs, it aimed to unravel the complexities surrounding quality assessment and to fill important gaps in the existing literature. The results offer insights into effective quality assessment and improvement in MOOCs, contributing to knowledge in the field of online education. The practical implications of this study are also intended to benefit educators, policy makers, and institutions striving to raise the quality standards of MOOC-based education.

This section outlines our analysis of existing literature reviews on the quality, success, and effectiveness of MOOCs. Few systematic scientific publications have addressed quality in MOOCs. Most investigated the factors influencing the success and effectiveness of MOOCs but did not assess their pedagogical quality (Albelbisi et al., 2018, p. 5486). Some studies proposed classification schemes based on factors taken from previous reviews rather than on their own review of existing work. Other reviews did not go as far as proposing classification schemes (Chansanam et al., 2021; Suwita et al., 2019). The limited scope and small number of articles reviewed are also important limitations of some studies. For their part, Stracke and Trisolini (2021) reviewed a large number of articles (n = 103) and established a categorization scheme for the different dimensions, but their classification did not take into account the learner and the teacher as main inputs. The main difference between our review and these previous reviews lies in the depth of our analysis and the longer period covered. The overall aim of our review was to carry out a systematic review of the academic literature on quality in MOOCs published between 2018 and 2022, and to analyze the aspects of pedagogical quality in MOOCs addressed in this literature.

In this section, we detail the research methodology used for this literature review, following Kitchenham’s guidelines (Kitchenham et al., 2010) and incorporating the so-called snowballing procedure proposed by Wohlin (2014). Our systematic literature review aimed to achieve several objectives: (a) summarize empirical evidence, (b) identify research gaps, and (c) provide a contextual framework for future investigations. Specifically, our study focused on examining existing quality assurance frameworks and criteria influencing the success and quality of MOOCs. By conducting an extensive database search, we identified several quality assurance methods and carefully selected those that corresponded to the criteria defined for the study.

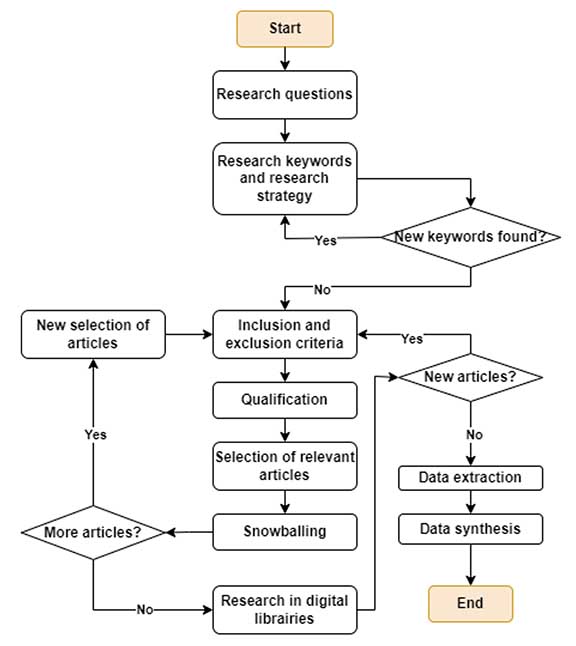

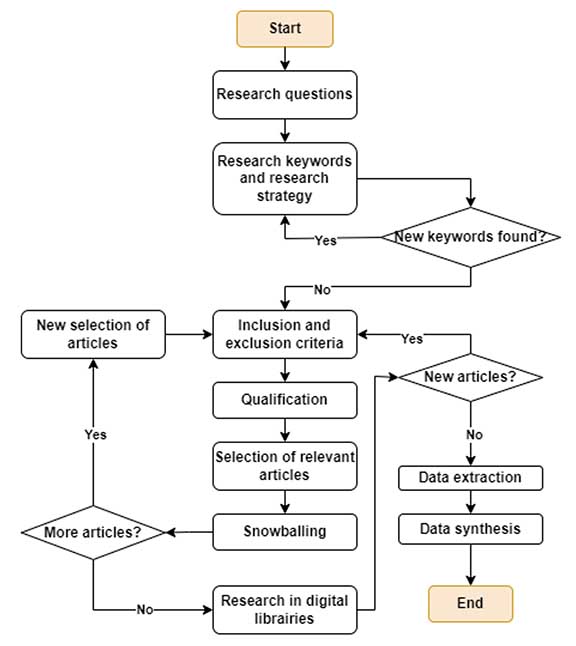

Figure 1

Steps in the Literature Review Process

The study rigorously followed a structured three-phase process for its literature review—planning, implementation, and reporting—as illustrated in Figure 1. Planning defined the research questions and developed a methodological protocol. Implementation identified relevant sources, assessed their quality, and extracted data for in-depth analysis. Finally, the reporting phase synthesized the results into a comprehensive report, offering a clear view of the research journey.

This comprehensive review encompassed both theoretical and empirical contributions and sought to address the following research questions.

Our article selection process was guided by the inclusion and exclusion criteria outlined below. We included (a) theoretical and empirical works on factors influencing the quality or success of MOOCs; and (b) theoretical and empirical works that proposed frameworks for quality assurance or improvement in MOOCs, in particular for pedagogical quality.

We excluded items according to the following criteria:

We conducted a comprehensive manual search using various permutations of keywords related to our research, such as (a) assurance, (b) improvement, (c) pedagogical quality, (d) quality framework, (e) MOOCs quality, (f) pedagogical quality in MOOCs, (g) instructional design quality assurance in MOOCs, and (h) success MOOC. We searched for papers containing one or more of these keywords in their titles or abstracts. Although manual searching is labor-intensive, we judged it more reliable than automated methods because it could capture keywords in article titles, abstracts, and occasionally the article content itself. We used Boolean operators (e.g., OR, AND) to refine our searches and expanded our keyword list as the investigation advanced.
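To make the Boolean logic of this search concrete, the sketch below combines the listed keywords with OR and screens titles and abstracts for a match. It is an illustration only, not the protocol the review actually ran: the `Record` type, the `build_query`/`matches`/`screen` helpers, and the extra `AND MOOC` scope term are hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import List, Sequence

# Search terms as listed in the text. The "AND MOOC" scope term used in
# build_query is an illustrative assumption, not part of the reported protocol.
KEYWORDS = [
    "assurance",
    "improvement",
    "pedagogical quality",
    "quality framework",
    "MOOCs quality",
    "pedagogical quality in MOOCs",
    "instructional design quality assurance in MOOCs",
    "success MOOC",
]


@dataclass
class Record:
    """A search hit reduced to the two fields screened in the review (hypothetical type)."""
    title: str
    abstract: str


def build_query(keywords: Sequence[str], scope_term: str = "MOOC") -> str:
    """Join the keywords with OR, then restrict the whole expression with AND."""
    any_keyword = " OR ".join(f'"{kw}"' for kw in keywords)
    return f"({any_keyword}) AND {scope_term}"


def matches(record: Record, keywords: Sequence[str]) -> bool:
    """True if at least one keyword appears in the title or abstract (case-insensitive)."""
    text = f"{record.title} {record.abstract}".lower()
    return any(kw.lower() in text for kw in keywords)


def screen(records: Sequence[Record], keywords: Sequence[str]) -> List[Record]:
    """Keep only the records whose title or abstract mentions a keyword."""
    return [r for r in records if matches(r, keywords)]
```

For example, `build_query(["assurance", "improvement"])` yields the string `("assurance" OR "improvement") AND MOOC`. Substring matching is a deliberate simplification: a real database search applies its own tokenization and field rules.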

To ensure the completeness of our search, we used reputable computer science and information technology citation databases such as Springer, IEEE Xplore, ACM Digital Library, Scopus, and Science Direct. Our study focused on publications from 2018 to 2022 to capture recent advances and emerging trends. The analysis covered both empirical studies and theoretical articles.

In the first phase, we identified an initial set of 97 sources, then applied rigorous criteria to retain 69 relevant sources. We also checked whether the authors had produced other publications related to the subject.

We then applied the snowballing method to the sources retained in the first iteration, which yielded another eight sources in the second iteration. This procedure resulted in a comprehensive and up-to-date collection of relevant sources.
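The iterative logic of snowballing (Wohlin, 2014) can be sketched as follows. Each round follows the reference lists of newly included papers (backward snowballing) and the papers that cite them (forward snowballing), keeps the candidates that pass the selection criteria, and stops when a round adds nothing new. The `references` and `citations` mappings and the `is_relevant` predicate are hypothetical inputs. In practice those lookups and the relevance judgment were made manually by the reviewers.

```python
from typing import Callable, Dict, Iterable, Set


def snowball(
    start: Set[str],
    references: Dict[str, Iterable[str]],   # hypothetical: paper -> papers it cites
    citations: Dict[str, Iterable[str]],    # hypothetical: paper -> papers citing it
    is_relevant: Callable[[str], bool],     # stands in for the inclusion/exclusion criteria
    max_iterations: int = 10,
) -> Dict[int, Set[str]]:
    """Return the papers added in each iteration; iteration 1 is the database search."""
    included = set(start)
    frontier = set(start)
    added = {1: set(start)}
    for iteration in range(2, max_iterations + 1):
        candidates: Set[str] = set()
        for paper in frontier:
            candidates.update(references.get(paper, ()))  # backward snowballing
            candidates.update(citations.get(paper, ()))   # forward snowballing
        new = {p for p in candidates - included if is_relevant(p)}
        if not new:  # saturation: nothing new passes the criteria
            break
        added[iteration] = new
        included |= new
        frontier = new  # only newly added papers are expanded next round
    return added
```

Only the newly added papers are expanded in each round, so the procedure terminates once the citation neighbourhood yields no further relevant sources.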

We used Zotero to extract data from the search results and to organize detailed bibliographic information to facilitate the article selection stage. An Excel file was used to summarize and classify the various contributions selected.

The initial search yielded 97 research papers. After applying the inclusion/exclusion and qualification criteria to titles and abstracts, and adding the sources found through snowballing, 77 were classified as relevant sources. Table 1 shows the results of searches in academic databases.

Table 1

Results of Searches in Academic Databases

| Academic data source | Number of relevant papers |
|---|---|
| IEEE | 9 |
| Springer | 6 |
| ACM | 2 |
| Science Direct | 19 |
| Taylor & Francis | 2 |
| International Review of Research in Open and Distributed Learning | 3 |
| Google Scholar | 36 |

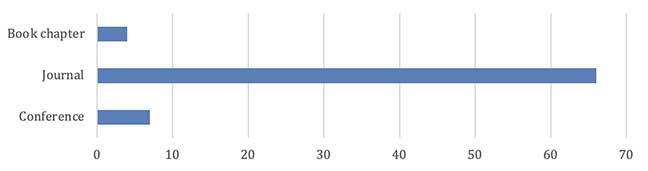

Figure 2

Distribution of Selected Publications

Figure 2 shows the distribution of selected publications by type. Of the 77 studies found, 66 were in journals, while just seven were conference papers and four were book chapters.

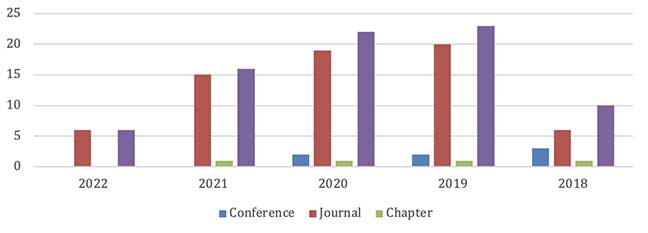

Figure 3

Annual Breakdown of Literature by Type

Figure 3 shows that work on quality assurance in MOOCs grew up to 2020.

An in-depth study of the literature on MOOCs revealed complex interactions among their components. To explore quality in MOOCs, we adopted, adapted, and applied Biggs’ 3P model (Biggs, 1993). This model depicts educational ecosystems in terms of presage (i.e., foreshadowing), process, and product variables (Gibbs, 2010). Our analysis redefines these variables to better understand how they interrelate; because MOOCs are learning ecosystems, reassessing their composition is crucial. The systematic search for key factors in the literature allowed a methodical classification into these three categories of variables, as detailed in Table 2.

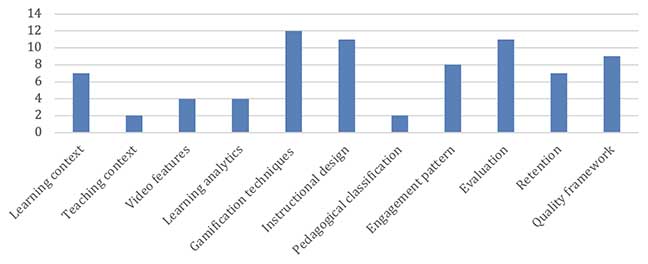

Figure 4

Number of Studies per Factor

Figure 4 shows that gamification techniques were the most addressed factor, followed by evaluation and instructional design, then quality frameworks. Pedagogical classification and teacher context were the least addressed in the literature.

Table 2

Classification Scheme for Studies Included in the Review

| Variable | Factor | Study |
|---|---|---|
| Presage | Learner context | Chen et al. (2019); Costello, Brunton et al. (2018); Demetriadis et al. (2018); Gamage et al. (2020); Sun & Bin (2018); Sun et al. (2019) |
| | Teacher context | Bonk et al. (2018); Ray (2019) |
| Process | Technological dimensions | |
| | Video features | Fassbinder et al. (2019); Lemay & Doleck (2022); Stoica et al. (2021); van der Zee et al. (2018) |
| | Learning analytics | Cross et al. (2019); Hooda (2020); İnan & Ebner (2020); Shukor & Abdullah (2019) |
| | Gamification techniques | Aparicio et al. (2019); Bai et al. (2020); Buchem et al. (2020); Danka (2020); Jarnac de Freitas & Mira da Silva (2020); Khalil et al. (2018); Osuna-Acedo (2021); Rahardja et al. (2019); Rincón-Flores et al. (2020); Romero-Rodriguez et al. (2019); Sezgin & Yüzer (2022); Tjoa & Poecze (2020) |
| | Pedagogical dimensions | |
| | Instructional design | Anyatasia et al. (2020); Giasiranis & Sofos (2020); Guerra et al. (2022); Julia et al. (2021); Jung et al. (2019); Littlejohn & Hood (2018); Nie et al. (2021); Sabjan et al. (2021); Smyrnova-Trybulska et al. (2019); Wang, Lee, et al. (2021); Wong (2021) |
| | Pedagogical classification | Davis et al. (2018); Xing (2018) |
| | Engagement pattern | Alemayehu & Chen (2021); Dai et al. (2020); Deng et al. (2020); Estrada-Molina & Fuentes-Cancell (2022); Guajardo Leal et al. (2019); Liu et al. (2022); Wang et al. (2019); Xing (2018) |
| | Assessment | Alcarria et al. (2018); Alexandron et al. (2020); Avgerinos & Karageorgiadis (2020); Bogdanova & Snoeck (2018); Costello, Holland, et al. (2018); Douglas et al. (2020); Farrow et al. (2021); Gamage et al. (2018, 2021); Nanda et al. (2021); Pilli et al. (2018); Xiao et al. (2019) |
| Product | Retention or completion rate | Bingöl et al. (2019); Dalipi et al. (2018); Goel & Goyal (2020); Gregori et al. (2018); Hew et al. (2020); Mrhar et al. (2021); Wang, Khan, et al. (2021) |
| | MOOC quality: Framework for pedagogical quality assurance | Aloizou et al. (2019); Li et al. (2022); Nie et al. (2021); OpenupEd (n.d.); Ossiannilsson (2020); Quality Assurance Agency for Higher Education (n.d.); Quality Matters (n.d.); Stracke et al. (2018); Su et al. (2021); Yuniwati et al. (2020); Zhou & Li (2020) |

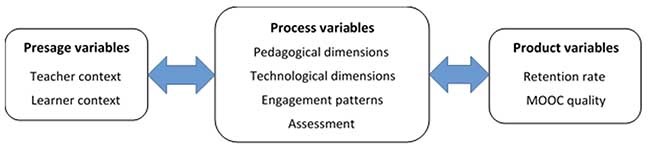

Figure 5

Framework for MOOC Quality Measurement

Traditional measures of presage variables include teacher quality and learner quality. MOOCs disrupt these traditional measures and call for new measures of quality. These new measures have important implications for process and product variables. Figure 5 shows the framework for MOOC quality measurement. In the following, we detail the results of our literature review according to this framework.

Learner Context. In MOOCs, the learner is involved in three types of interaction: (a) learner interaction with activities and content, (b) teacher-learner interaction, and (c) learner-learner interaction and collaboration. Studies on the role of interactivity in MOOC quality assurance have drawn on frameworks such as the academically productive talk (APT) framework, used to integrate a conversational agent that facilitates learner-learner interactivity (Costello, Brunton et al., 2018). Another study (Sun & Bin, 2018) was based on the local community detection framework.

Teacher Context. The literature is divided between studies showing that the role of the teacher in MOOCs is not paramount and those that consider it necessary. Research on the teacher context in MOOC quality assurance includes studies of activities, tools, approaches, and resources that help teachers develop their teaching experience (Askeroth & Richardson, 2019; Bonk et al., 2018; Ray, 2019).

Pedagogical Design for MOOCs. Several studies have examined the importance of defining a homogeneous, coherent, and integrative course structure, taking into account constraints on the number of modules and the time between them.

Most work on the quality of pedagogical scripting in MOOCs has been based on learner questionnaires (Sabjan et al., 2021). Some studies looked at a single MOOC (Giasiranis & Sofos, 2020), while others examined several MOOCs (Julia et al., 2021). The analysis and review of MOOC design has often drawn on frameworks such as ADDIE (analysis, design, development, implementation, evaluation; Smyrnova-Trybulska et al., 2019), the educational scalability framework (Julia et al., 2021), or the 10-principle framework (Wang, Lee, et al., 2021). Some works were limited to manual analysis (e.g., Giasiranis & Sofos, 2020), while others relied on sentiment analysis and automated tools such as the course scan questionnaire (Wang, Lee, et al., 2021).

Pedagogical Classification of MOOCs. Various studies have focused on improving the pedagogical design of MOOCs by comparing different pedagogies suitable for large-scale learning and teaching. However, analyzing and classifying MOOCs has been challenging due to their content, structures, designs, and variety of providers. Researchers have taken different approaches to understand these variations and identify pedagogical models in MOOC instructional design.

Several descriptive frameworks and evaluation tools have been proposed to categorize and assess MOOCs, for example a 10-dimension MOOC pedagogy assessment tool (AMP) (Quintana & Tan, 2019).

Some researchers have used machine learning algorithms like k-nearest neighbor (k-NN) and k-means to automatically classify MOOCs based on their design features and pedagogical approaches (Davis et al., 2018; Xing, 2018). Other studies incorporated theoretical foundations to guide their classification and evaluation processes. Overall, these efforts aimed to help educators and designers make informed decisions about MOOC pedagogy, enhance learner engagement, and improve the quality of MOOC experiences.
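As an illustration of the clustering approach mentioned above, here is a minimal, dependency-free k-means sketch. The two design features per course (share of lecture video and share of collaborative activities, both scaled to [0, 1]) are hypothetical. This is not the feature set used by Davis et al. (2018) or Xing (2018). Initialization simply takes the first k courses as centroids, which is a simplification: k-means++ is the more common choice.

```python
import math
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def _nearest(point: Vector, centroids: Sequence[Vector]) -> int:
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))


def kmeans(points: Sequence[Vector], k: int, iterations: int = 100) -> Tuple[List[int], List[Vector]]:
    """Cluster feature vectors into k groups; returns (labels, centroids)."""
    centroids: List[Vector] = [tuple(p) for p in points[:k]]  # simplified initialization
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each course goes to its nearest centroid.
        new_labels = [_nearest(p, centroids) for p in points]
        # Update step: each centroid moves to the mean of its members.
        new_centroids: List[Vector] = []
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_labels == labels and new_centroids == centroids:
            break  # converged
        labels, centroids = new_labels, new_centroids
    return labels, centroids


if __name__ == "__main__":
    # Hypothetical courses: (share of lecture video, share of collaborative activities)
    courses = [(0.9, 0.1), (0.85, 0.15), (0.95, 0.05), (0.2, 0.8), (0.3, 0.9), (0.25, 0.7)]
    print(kmeans(courses, k=2))
```

On this toy data the video-heavy and the collaboration-heavy courses end up in separate clusters. A real classification would first have to define and measure pedagogically meaningful features.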

Several studies have examined the technological quality of online educational resources. Our literature review identified three main technological dimensions: (a) video characteristics, (b) gamification, and (c) the use of learning analytics.

Fassbinder et al. (2019) proposed models for high-quality video-based open educational resources in MOOCs, with the aim of improving learning experiences. Lemay and Doleck (2022) used neural networks to predict student performance in MOOCs by analyzing video viewing behavior, highlighting the role of motivation and active viewing. Stoica et al. (2021) explored the prediction of learning success in MOOC videos, while van der Zee et al. (2018) advocated active learning strategies, demonstrating their positive impact on student quiz performance in online education.

Gamification techniques have been used in e-learning and have yielded positive results in improving learner engagement and motivation (Khalil et al., 2018). Sezgin and Yüzer (2022) studied the contribution of adaptive gamification to e-learning and concluded that this approach facilitated high-quality interactive learning experiences for distance learners. Osuna-Acedo (2021) confirmed that integrating gamification into MOOC models positively affected learner motivation and engagement. In the same vein, Rincón-Flores et al. (2020) evaluated the effect of gamification in a MOOC. Of 4,819 participants, 621 completed the course and 647 took up the gamification challenge. The results showed that over 90% of participants experienced greater motivation and stimulation than with conventional teaching methods. Other studies (Bai et al., 2020; Danka, 2020) confirmed the contribution of gamification to the success and quality of MOOCs.
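Expressing the Rincón-Flores et al. (2020) counts as shares of all participants shows how small the completing group was relative to enrollment. The small helper below only does that arithmetic. It assumes that all 4,819 participants form the denominator, and it does not try to reconstruct the base of the reported 90% figure.

```python
# Counts reported for the gamified MOOC studied by Rincón-Flores et al. (2020).
participants = 4819
completed = 621
took_challenge = 647


def rate(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole


completion_rate = rate(completed, participants)
challenge_rate = rate(took_challenge, participants)
print(f"Completion rate: {completion_rate:.1f}%")
print(f"Gamification challenge uptake: {challenge_rate:.1f}%")
```

Both rates come out at roughly 13% of participants (12.9% and 13.4%).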

Learning analytics have shown potential for supporting learner and teacher engagement, and promoting the quality of the teaching and learning experience, by providing information that can be useful for both teacher and learner. İnan and Ebner (2020) analyzed various forms of learning analytics in MOOCs, ranging from data mining to analysis and visualization. Hooda (2020) examined learning analytics and educational data mining, and demonstrated the impact on the learner and instructor in different learning environments. Similarly, Shukor and Abdullah (2019) demonstrated the positive effect of learning analytics on the quality of instructional scripting.

Studies on MOOCs have attempted to better understand the different types of learner behavior by analyzing patterns of persistence, perseverance, and interaction. Given the heterogeneity of learner profiles in MOOCs, however, these studies need to distinguish between profiles. To this end, several studies adopted a statistical approach, carrying out in-depth analyses to gain insight into learners’ motivations and to classify learners by type and degree of engagement. Learner engagement has many definitions, depending on one’s perspective. In the MOOC context, engagement refers to the learner’s interactions with peers, the teacher, content, and activities. These interactions can take many forms and occur throughout the teaching/learning process. To improve the quality of this process, it is therefore necessary to consider the different forms of engagement when designing and delivering a MOOC.
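The definition above (interactions with peers, the teacher, content, and activities) can be turned into a simple per-learner engagement profile. The sketch assumes a hypothetical event log of `(learner_id, target)` pairs, where `target` is one of those four interaction types. It reports what share of each learner's interactions falls into each type. A profile like this could then feed a statistical or clustering analysis of engagement patterns.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Dict, Iterable, Tuple

# The four interaction targets named in the definition of engagement above.
TARGETS = ("peer", "teacher", "content", "activity")


def engagement_profiles(events: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Share of each learner's interactions per target; events are hypothetical (learner_id, target) pairs."""
    counts: DefaultDict[str, Counter] = defaultdict(Counter)
    for learner, target in events:
        if target not in TARGETS:
            raise ValueError(f"unknown interaction target: {target}")
        counts[learner][target] += 1
    profiles: Dict[str, Dict[str, float]] = {}
    for learner, per_target in counts.items():
        total = sum(per_target.values())
        profiles[learner] = {t: per_target[t] / total for t in TARGETS}
    return profiles
```

Normalizing by each learner's total separates the form of engagement from its volume. The volume (total interactions) can be kept as a separate variable if the analysis also needs degree of engagement.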

We found two literature reviews that addressed the role of engagement in MOOC quality assurance. Guajardo Leal et al. (2019) conducted a systematic literature mapping to explore the concept of academic engagement in massive and open online learning. Estrada-Molina and Fuentes-Cancell (2022) analyzed 40 studies published between 2017 and 2021. Their results showed that the main variables were (a) the design of e-activities, (b) intrinsic and extrinsic motivation, and (c) communication between students. This review confirmed that the main challenges in guaranteeing engagement in MOOCs are individualized tutoring, interactivity, and feedback. The evaluation, measurement, and classification of engagement patterns have been the subject of various research studies. Liu et al. (2022) used a BERT-CNN model (bidirectional encoder representations from transformers, BERT, combined with convolutional neural networks, CNN) to analyze the discussions of 8,867 learners. Structural equation modeling indicated that emotional and cognitive engagement interacted and had a combined effect on learning outcomes.

There are several types of assessment: (a) formative assessment, during the learning process; (b) summative assessment, at the end of the course; and (c) an initial test to check learners’ knowledge before the course begins. In MOOCs, tests are generally self-assessments or peer assessments, in which participants examine and evaluate the work of other learners. In their review of the literature published between 2014 and 2020, Gamage et al. (2021) provided summary statistics and a review of methods across the corpus. They highlighted three directions for improving the use of peer assessment in MOOCs: the need to (a) focus on scaling learning through peer assessments, (b) scale and optimize team submissions in team peer assessments, and (c) integrate a social peer assessment process.
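One way to make peer assessment workable at scale is to aggregate several peer grades per submission and escalate unreliable cases. The sketch below uses the median grade and flags submissions whose peer grades disagree strongly. Median aggregation is a common, simple choice that we assume here for illustration. It is not the method of any study cited above, and the `min_reviews` and `max_spread` thresholds are hypothetical.

```python
from statistics import median
from typing import Dict, List, Optional, Tuple


def aggregate_peer_grades(
    grades: Dict[str, List[float]],
    min_reviews: int = 3,      # hypothetical minimum number of peer reviews
    max_spread: float = 2.0,   # hypothetical tolerated gap between highest and lowest grade
) -> Dict[str, Tuple[Optional[float], str]]:
    """Map each submission to (aggregated grade or None, status)."""
    results: Dict[str, Tuple[Optional[float], str]] = {}
    for submission, scores in grades.items():
        if len(scores) < min_reviews:
            results[submission] = (None, "needs more reviews")
            continue
        spread = max(scores) - min(scores)
        status = "flag for instructor review" if spread > max_spread else "ok"
        # The median resists a single outlying (overly harsh or lenient) reviewer.
        results[submission] = (median(scores), status)
    return results
```

For example, grades of 2, 9, and 8 still aggregate to 8, but the submission is flagged because the reviewers disagree by 7 points.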

Retention Rate. Traditional indicators of learning quality are not appropriate for measuring the quality of MOOCs, as completion is not the goal of all learners. Course completion does not always correspond to learning satisfaction or success, so conventional measures such as retention and completion rates cannot by themselves guarantee quality in MOOCs.

Bingöl et al. (2019) identified success factors and completion elements for MOOCs, focusing on instructors, course design, and personal factors. Dalipi et al. (2018) examined dropout prediction in MOOCs using machine learning, while Goel and Goyal (2020) explored dropout reduction strategies, using semi-supervised learning. Mrhar et al. (2021) proposed a dropout prediction model for MOOCs using neural networks. Wang, Khan, et al. (2021) studied user satisfaction and reuse intentions in MOOCs during the COVID-19 pandemic using the unified theory of acceptance and use of technology (UTAUT) model, highlighting critical factors influencing user behavior.
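To show what dropout prediction involves in its simplest form, the sketch below trains a logistic regression by batch gradient descent on early-activity features. It stands in for the machine learning, semi-supervised, and neural network models used in the studies above and does not reproduce any of them. The two features (share of week-1 videos watched, normalized week-1 quiz attempts) and the toy data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(
    X: Sequence[Sequence[float]], y: Sequence[int], lr: float = 0.5, epochs: int = 2000
) -> Tuple[List[float], float]:
    """Fit weights and bias by batch gradient descent on the log-loss (y = 1 means dropout)."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predict_dropout(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Estimated probability that a learner with features x drops out."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


if __name__ == "__main__":
    # Hypothetical learners: (share of week-1 videos watched, normalized week-1 quiz attempts)
    X = [(0.9, 1.0), (0.8, 0.9), (0.7, 0.8), (0.1, 0.0), (0.2, 0.1), (0.0, 0.2)]
    y = [0, 0, 0, 1, 1, 1]
    w, b = train_logistic(X, y)
    print(predict_dropout(w, b, (0.1, 0.1)), predict_dropout(w, b, (0.85, 0.9)))
```

Predicting dropout from early activity is useful for intervention. As the text argues, though, a predicted dropout is not necessarily a quality failure, since completion is not every learner's goal.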

MOOC Quality: Framework for Pedagogical Quality Assurance. Our literature review identified several proposals for quality assurance frameworks (e.g., Quality Matters, n.d.). In the Quality Matters framework, quality management is a process of peer review and faculty development based on eight dimensions. Although it is well suited to online learning in general, it does not specifically address the MOOC context. Aloizou et al. (2019) conducted a literature review to identify the most mature existing MOOC quality assurance methods. They selected the two quality assurance frameworks that met most of their criteria, OpenupEd (n.d.) and Quality Matters (n.d.), and then carried out an evaluative case study applying these methods to a MOOC implementing active learning pedagogies. Yuniwati et al. (2020) developed an evaluation instrument to measure platform quality, using the Plomp model, which consists of five phases: (a) preliminary investigation; (b) design; (c) construction; (d) test, evaluation, and revision; and (e) implementation.

Other studies have relied on learner data (e.g., their comments and feedback) to carry out manual or automatic analyses that reveal the factors affecting MOOC quality. For example, Zhou and Li (2020) used the BERT model to classify student comments, while Li et al. (2022) relied on sentiment analysis of learner feedback to measure MOOC quality.
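The idea of deriving a quality signal from learner comments can be illustrated with a lexicon-based scorer. This is a deliberately simple substitute for the BERT classifier and the sentiment analysis used in the cited studies. The tiny lexicon is hypothetical, and the scorer ignores negation ("not clear") and sarcasm, which trained models handle far better.

```python
import re
from typing import Dict, Iterable

# Tiny hypothetical lexicon; real studies use trained models (e.g., BERT) or curated lexicons.
LEXICON: Dict[str, int] = {
    "clear": 1, "helpful": 1, "engaging": 1, "excellent": 2,
    "confusing": -1, "boring": -1, "outdated": -1, "terrible": -2,
}


def comment_score(comment: str) -> int:
    """Sum of lexicon polarities over the comment's lowercase word tokens."""
    tokens = re.findall(r"[a-z']+", comment.lower())
    return sum(LEXICON.get(token, 0) for token in tokens)


def course_sentiment(comments: Iterable[str]) -> float:
    """(positive comments - negative comments) / all comments, in the range [-1, 1]."""
    comments = list(comments)
    if not comments:
        raise ValueError("no comments to score")
    scores = [comment_score(c) for c in comments]
    positive = sum(1 for s in scores if s > 0)
    negative = sum(1 for s in scores if s < 0)
    return (positive - negative) / len(comments)
```

A course-level index like this can support comparisons across many MOOCs, which is the kind of large-scale, automated evaluation the review later calls for. Its validity as a quality measure would need to be checked against expert judgments.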

This paper set out to establish a micro-level framework for quality assurance in MOOCs that links the diverse factors influencing MOOC quality and success. To this end, we conducted an extensive literature review, analyzing and categorizing publications on quality in MOOCs to gain deeper insight into the factors that affect MOOC success. Following systematic literature review methodology, we identified and examined the critical factors that contribute to MOOC success.

By focusing our literature review on the facets influencing quality in MOOCs, particularly pedagogical quality, we were able to classify these factors using a scheme inspired by Biggs’ framework (Biggs, 1993). Our quantitative analysis revealed that research interest in MOOC quality peaked in 2020 and remains active. Moreover, the 77 studies were unevenly distributed across the dimensions of our classification scheme, reflecting distinct research interests and emphases.

The primary outcome of our study was a quality framework in the form of a classification scheme that covers key aspects of MOOC quality and clearly distinguishes three dimensions: presage, process, and product. The presage dimension was addressed in a total of nine studies, arranged in two hierarchical levels: seven primary studies concerning the learner context and two secondary studies addressing the teacher context. The process dimension was explored in 33 studies. Across all factors, gamification techniques were addressed most, followed by evaluation and instructional design, then quality frameworks; pedagogical classification and teacher context were addressed least.

The three factors of learner context, instructional design, and engagement patterns emerged as the most frequently discussed in the literature. This prevalence can be attributed to the predominant focus on quality from the learner’s perspective. Conversely, the presage factor related to the teacher’s context received the least attention.

The qualitative analysis explored various variables relevant to MOOC quality. Learner context came to the fore, highlighting the importance of peer interaction and community detection in fostering enriching learning experiences. The teacher context also sparked debate, with studies examining tools, resources, and approaches that help instructors improve their pedagogical strategies in online learning environments. Process variables highlighted the importance of pedagogical dimensions, such as instructional scripting and design frameworks, as well as technological aspects, including the use of video features and gamification techniques to promote learner engagement and motivation. In addition, engagement models, which reveal the complexity of learner behaviors, proved crucial for adapting pedagogical strategies and course designs.

In terms of product variables, studies focused on retention rates, addressing dropout prediction, and factors influencing user satisfaction, particularly during the COVID-19 pandemic. In addition, the assessment of quality assurance frameworks provided insights into the evolution of quality assessment in MOOCs, calling for comprehensive assessment tools and peer-reviewed frameworks.

These analyses elucidated the dynamic nature of MOOC quality assurance, highlighting the pivotal role of learner-teacher interactions, structured instructional designs, and technological innovations in creating effective online learning environments. These findings offer opportunities for educators, designers, and policy makers to refine pedagogical strategies, exploit technological advances, and develop comprehensive quality assessment frameworks for MOOCs.

Another crucial takeaway from this systematic literature review is the need to consider several key indicators of MOOC design and quality across all three dimensions when conducting research on MOOC quality. While most studies emphasized quality from the learner’s viewpoint, all three dimensions (i.e., presage, process, and product) are relevant and instrumental in shaping the design and quality of MOOCs. A comprehensive approach therefore requires considering each dimension when designing MOOCs.

In conclusion, we have highlighted promising avenues for future research in this domain. We advocate for the incorporation of two product variables, namely completion rates and MOOC quality, in assessing MOOC success. According to our literature review, relying solely on completion rates is inadequate to measure MOOC success, necessitating the development of a comprehensive quality measurement framework. Existing frameworks, while present in the literature, often lacked automatic evaluation tools and encompassed only a limited number of MOOCs. Consequently, we recommend further research focusing on large-scale, automated evaluations of MOOCs as an area with significant potential.

We believe that this systematic literature review and its findings are pertinent to both MOOC designers and learners, who can use them to identify the quality categories that align with their objectives and to select the most suitable MOOC methods. The principal outcome of this review, the quality framework for MOOCs, should serve as a resource for future MOOC research, with applications in MOOC design guidelines, discussion and benchmarking among MOOC design teams, and standardized descriptions and assessments of MOOC quality. It can also serve as a foundation for future systematic reviews of the literature.

Moreover, like any e-learning initiative, the successful adoption of MOOCs hinges on the active participation of all stakeholders, particularly instructors and learners. Special attention should be paid to ensuring pedagogical quality in MOOCs from their inception, with ongoing support for educators. The findings of this research should be applied and evaluated in the development of new MOOCs, offering insights into the feasibility of the four dimensions and their quality indicators. Mixed-methods research can foster a more comprehensive understanding and support improved strategies for the design, implementation, and evaluation of future MOOCs, ultimately enhancing their quality. Incorporating these elements should help ensure that MOOCs are designed and delivered effectively, promoting meaningful learning experiences, enhancing learner satisfaction, and contributing to the success of MOOC systems.

Albelbisi, N., Yusop, F. D., & Salleh, U. K. M. (2018). Mapping the factors influencing success of massive open online courses (MOOC) in higher education. EURASIA Journal of Mathematics, Science and Technology Education, 14(7). https://doi.org/10.29333/ejmste/91486

Alcarria, R., Bordel, B., Martín de Andrés, D., & Robles, T. (2018). Enhanced peer assessment in MOOC evaluation through assignment and review analysis. International Journal of Emerging Technologies in Learning, 13(1), 206. https://doi.org/10.3991/ijet.v13i01.7461

Alemayehu, L., & Chen, H.-L. (2021). Learner and instructor-related challenges for learners’ engagement in MOOCs: A review of 2014–2020 publications in selected SSCI indexed journals. Interactive Learning Environments, 31, 1-23. https://doi.org/10.1080/10494820.2021.1920430

Alexandron, G., Wiltrout, M. E., Berg, A., & Ruipérez-Valiente, J. A. (2020, March). Assessment that matters: Balancing reliability and learner-centered pedagogy in MOOC assessment. In Proceedings of the Tenth International Conference on Learning Analytics & Knowledge (pp. 512-517). https://doi.org/10.1145/3375462.3375464

Aloizou, V., Sobrino, S. L. V., Monés, A. M., & Sastre, S. G. (2019). Quality assurance methods assessing instructional design in MOOCs that implement active learning pedagogies: An evaluative case study. CEUR Workshop Proceedings. https://uvadoc.uva.es/handle/10324/38662

Anyatasia, F. N., Santoso, H. B., & Junus, K. (2020). An evaluation of the Udacity MOOC based on instructional and interface design principles. Journal of Physics: Conference Series, 1566(1), 012053. https://doi.org/10.1088/1742-6596/1566/1/012053

Aparicio, M., Oliveira, T., Bacao, F., & Painho, M. (2019). Gamification: A key determinant of massive open online course (MOOC) success. Information & Management, 56(1), 39-54. https://doi.org/10.1016/j.im.2018.06.003

Askeroth, J. H., & Richardson, J. C. (2019). Instructor perceptions of quality learning in MOOCs they teach. Online Learning, 23(4), 135-159. https://doi.org/10.24059/olj.v23i4.2043

Avgerinos, E., & Karageorgiadis, A. (2020). The importance of formative assessment and the different role of evaluation in MOOCs. 2020 IEEE Learning With MOOCS (pp. 168-173). https://doi.org/10.1109/LWMOOCS50143.2020.9234320

Bai, S., Hew, K. F., & Huang, B. (2020). Does gamification improve student learning outcome? Evidence from a meta-analysis and synthesis of qualitative data in educational contexts. Educational Research Review, 30, 100322. https://doi.org/10.1016/j.edurev.2020.100322

Biggs, J. B. (1993). From theory to practice: A cognitive systems approach. Higher Education Research & Development, 12(1), Article 1. https://doi.org/10.1080/0729436930120107

Bingöl, I., Kursun, E., & Kayaduman, H. (2019). Factors for success and course completion in massive open online courses through the lens of participant types. Open Praxis, 12(2), 223. https://doi.org/10.5944/openpraxis.12.2.1067

Bogdanova, D., & Snoeck, M. (2018). Using MOOC technology and formative assessment in a conceptual modelling course: An experience report. Proceedings of the 21st ACM/IEEE International Conference on Model Driven Engineering Languages and Systems: Companion Proceedings (pp. 67-73). https://doi.org/10.1145/3270112.3270120

Bonk, C. J., Zhu, M., Kim, M., Xu, S., Sabir, N., & Sari, A. R. (2018). Pushing toward a more personalized MOOC: Exploring instructor selected activities, resources, and technologies for MOOC design and implementation. The International Review of Research in Open and Distributed Learning, 19(4), Article 4. https://doi.org/10.19173/irrodl.v19i4.3439

Buchem, I., Carlino, C., Amenduni, F., & Poce, A. (2020). Meaningful gamification in MOOCs: Designing and examining learner engagement in the open virtual mobility learning hub. Proceedings of the 14th International Technology, Education and Development Conference (pp. 9529-9534). https://doi.org/10.21125/inted.2020.1661

Chansanam, W., Poonpon, K., Manakul, T., & Detthamrong, U. (2021). Success and challenges in MOOCs: A literature systematic review technique. TEM Journal, 10(4), 1728-1732. https://doi.org/10.18421/TEM104-32

Chen, Y., Gao, Q., Yuan, Q., & Tang, Y. (2019). Facilitating students’ interaction in MOOCs through timeline-anchored discussion. International Journal of Human–Computer Interaction, 35(19), 1781-1799. https://doi.org/10.1080/10447318.2019.1574056

Costello, E., Brunton, J., Brown, M., & Daly, L. (2018). In MOOCs we trust: Learner perceptions of MOOC quality via trust and credibility. International Journal of Emerging Technologies in Learning, 13(06), 214. https://doi.org/10.3991/ijet.v13i06.8447

Costello, E., Holland, J., & Kirwan, C. (2018). The future of online testing and assessment: Question quality in MOOCs. International Journal of Educational Technology in Higher Education, 15(1), 42. https://doi.org/10.1186/s41239-018-0124-z

Cross, J. S., Keerativoranan, N., Carlon, M. K. J., Tan, Y. H., Rakhimberdina, Z., & Mori, H. (2019). Improving MOOC quality using learning analytics and tools. 2019 IEEE Learning With MOOCS (pp. 174-179). https://doi.org/10.1109/LWMOOCS47620.2019.8939617

Dai, H. M., Teo, T., & Rappa, N. A. (2020). Understanding continuance intention among MOOC participants: The role of habit and MOOC performance. Computers in Human Behavior, 112, 106455. https://doi.org/10.1016/j.chb.2020.106455

Dalipi, F., Imran, A. S., & Kastrati, Z. (2018). MOOC dropout prediction using machine learning techniques: Review and research challenges. 2018 IEEE Global Engineering Education Conference (pp. 1007-1014). https://doi.org/10.1109/EDUCON.2018.8363340

Danka, I. (2020). Motivation by gamification: Adapting motivational tools of massively multiplayer online role-playing games (MMORPGs) for peer-to-peer assessment in connectivist massive open online courses (cMOOCs). International Review of Education, 66, 75-92. https://doi.org/10.1007/s11159-020-09821-6

Davis, D., Seaton, D., Hauff, C., & Houben, G.-J. (2018). Toward large-scale learning design: Categorizing course designs in service of supporting learning outcomes. Proceedings of the Fifth Annual ACM Conference on Learning at Scale (pp. 1-10). https://doi.org/10.1145/3231644.3231663

Demetriadis, S., Tegos, S., Psathas, G., Tsiatsos, T., Weinberger, A., Caballe, S., Dimitriadis, Y., Sanchez, E. G., Papadopoulos, P. M., & Karakostas, A. (2018). Conversational agents as group-teacher interaction mediators in MOOCs. 2018 Learning With MOOCS (pp. 43-46). https://doi.org/10.1109/LWMOOCS.2018.8534686

Deng, R., Benckendorff, P., & Gannaway, D. (2020). Learner engagement in MOOCs: Scale development and validation. British Journal of Educational Technology, 51(1), 245-262. https://doi.org/10.1111/bjet.12810

Douglas, K. A., Merzdorf, H. E., Hicks, N. M., Sarfraz, M. I., & Bermel, P. (2020). Challenges to assessing motivation in MOOC learners: An application of an argument-based approach. Computers & Education, 150, 103829. https://doi.org/10.1016/j.compedu.2020.103829

Estrada-Molina, O., & Fuentes-Cancell, D.-R. (2022). Engagement and desertion in MOOCs: Systematic review. Comunicar, 30(70), 111-124. https://doi.org/10.3916/C70-2022-09

Farrow, R., Ferguson, R., Weller, M., Pitt, R., Sanzgiri, J., & Habib, M. (2021). Assessment and recognition of MOOCs: The state of the art. Journal of Innovation in PolyTechnic Education, 3(1), 15-26.

Fassbinder, M., Fassbinder, A., Fioravanti, M. L., & Barbosa, E. F. (2019). Towards an educational design pattern language to support the development of open educational resources in videos for the MOOC context. Proceedings of the 26th Conference on Pattern Languages of Programs (pp. 1-10). https://dl.acm.org/doi/abs/10.5555/3492252.3492274

Gamage, D., Perera, I., & Fernando, S. (2020). MOOCs lack interactivity and collaborativeness: Evaluating MOOC platforms. International Journal of Engineering Pedagogy, 10(2), 94. https://doi.org/10.3991/ijep.v10i2.11886

Gamage, D., Staubitz, T., & Whiting, M. (2021). Peer assessment in MOOCs: Systematic literature review. Distance Education, 42(2), 268-289. https://doi.org/10.1080/01587919.2021.1911626

Gamage, D., Whiting, M. E., Perera, I., & Fernando, S. (2018). Improving feedback and discussion in MOOC peer assessment using introduced peers. 2018 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (pp. 357-364). https://doi.org/10.1109/TALE.2018.8615307

Giasiranis, S., & Sofos, L. (2020). The influence of instructional design and instructional material on learners’ motivation and completion rates of a MOOC course. Open Journal of Social Sciences, 8(11), 190-206. https://doi.org/10.4236/jss.2020.811018

Gibbs, G. (2010, September). Dimensions of quality. The Higher Education Academy. https://support.webb.uu.se/digitalAssets/91/a_91639-f_Dimensions-of-Quality.pdf

Goel, Y., & Goyal, R. (2020). On the effectiveness of self-training in MOOC dropout prediction. Open Computer Science, 10(1), 246-258. https://doi.org/10.1515/comp-2020-0153

Gregori, E. B., Zhang, J., Galván-Fernández, C., & Fernández-Navarro, F. de A. (2018). Learner support in MOOCs: Identifying variables linked to completion. Computers & Education, 122, 153-168. https://doi.org/10.1016/j.compedu.2018.03.014

Guajardo Leal, B. E., Navarro-Corona, C., & Valenzuela González, J. R. (2019). Systematic mapping study of academic engagement in MOOC. The International Review of Research in Open and Distributed Learning, 20(2). https://doi.org/10.19173/irrodl.v20i2.4018

Guerra, E., Kon, F., & Lemos, P. (2022). Recommended guidelines for effective MOOCs based on a multiple-case study. arXiv:2204.03405. https://doi.org/10.48550/arXiv.2204.03405

Hew, K. F., Hu, X., Qiao, C., & Tang, Y. (2020). What predicts student satisfaction with MOOCs: A gradient boosting trees supervised machine learning and sentiment analysis approach. Computers & Education, 145, 103724. https://doi.org/10.1016/j.compedu.2019.103724

Hooda, M. (2020). Learning analytics lens: Improving quality of higher education. International Journal of Emerging Trends in Engineering Research, 8(5), 1626-1646. https://doi.org/10.30534/ijeter/2020/24852020

İnan, E., & Ebner, M. (2020). Learning analytics and MOOCs. In P. Zaphiris & A. Ioannou (Eds.), Learning and collaboration technologies: Designing, developing and deploying learning experiences (Vol. 12205, pp. 241-254). Springer International Publishing. https://doi.org/10.1007/978-3-030-50513-4_18

Jarnac de Freitas, M., & Mira da Silva, M. (2020). Systematic literature review about gamification in MOOCs. Open Learning: The Journal of Open, Distance and e-Learning, 38(1), 1-23. https://doi.org/10.1080/02680513.2020.1798221

Julia, K., Peter, V. R., & Marco, K. (2021). Educational scalability in MOOCs: Analysing instructional designs to find best practices. Computers & Education, 161, 104054. https://doi.org/10.1016/j.compedu.2020.104054

Jung, E., Kim, D., Yoon, M., Park, S., & Oakley, B. (2019). The influence of instructional design on learner control, sense of achievement, and perceived effectiveness in a supersize MOOC course. Computers & Education, 128, 377-388. https://doi.org/10.1016/j.compedu.2018.10.001

Khalil, M., Wong, J., de Koning, B., Ebner, M., & Paas, F. (2018). Gamification in MOOCs: A review of the state of the art. 2018 IEEE Global Engineering Education Conference (pp. 1629-1638). https://doi.org/10.1109/EDUCON.2018.8363430

Kitchenham, B., Pretorius, R., Budgen, D., Brereton, O. P., Turner, M., Niazi, M., & Linkman, S. (2010). Systematic literature reviews in software engineering: A tertiary study. Information and Software Technology, 52(8), 792-805. https://doi.org/10.1016/j.infsof.2010.03.006

Lemay, D. J., & Doleck, T. (2022). Predicting completion of massive open online course (MOOC) assignments from video viewing behavior. Interactive Learning Environments, 30(10), 1782-1793. https://doi.org/10.1080/10494820.2020.1746673

Li, L., Johnson, J., Aarhus, W., & Shah, D. (2022). Key factors in MOOC pedagogy based on NLP sentiment analysis of learner reviews: What makes a hit. Computers & Education, 176, 104354. https://doi.org/10.1016/j.compedu.2021.104354

Littlejohn, A., & Hood, N. (2018). Reconceptualising learning in the digital age. Springer. https://doi.org/10.1007/978-981-10-8893-3_5

Liu, S., Liu, S., Liu, Z., Peng, X., & Yang, Z. (2022). Automated detection of emotional and cognitive engagement in MOOC discussions to predict learning achievement. Computers & Education, 181, 104461. https://doi.org/10.1016/j.compedu.2022.104461

Mrhar, K., Douimi, O., & Abik, M. (2021). A dropout predictor system in MOOCs based on neural networks. Journal of Automation, Mobile Robotics and Intelligent Systems, 14(4), 72-80. https://doi.org/10.14313/JAMRIS/4-2020/48

Nanda, G., Douglas, K. A., Waller, D. R., Merzdorf, H. E., & Goldwasser, D. (2021). Analyzing large collections of open-ended feedback from MOOC learners using LDA topic modeling and qualitative analysis. IEEE Transactions on Learning Technologies, 14(2), 146-160. https://doi.org/10.1109/TLT.2021.3064798

Nie, Y., Luo, H., & Sun, D. (2021). Design and validation of a diagnostic MOOC evaluation method combining AHP and text mining algorithms. Interactive Learning Environments, 29(2), 315-328. https://doi.org/10.1080/10494820.2020.1802298

OpenupEd. (n.d.). OpenupEd quality label. Retrieved October 7, 2022, from https://www.openuped.eu/quality-label

Ossiannilsson, E. (2020). Quality models for open, flexible, and online learning. Journal of Computer Science Research, 2(4). https://doi.org/10.30564/jcsr.v2i4.2357

Osuna-Acedo, S. (2021). Gamification and MOOCs. In D. Frau-Meigs, S. Osuna-Acedo, & C. Marta-Lazo (Eds.), MOOCs and the participatory challenge: From revolution to reality (pp. 89-101). Springer International. https://doi.org/10.1007/978-3-030-67314-7_6

Pilli, O., Admiraal, W., & Salli, A. (2018). MOOCs: Innovation or stagnation? Turkish Online Journal of Distance Education, 19(3), 169-181. https://doi.org/10.17718/tojde.445121

Quality Assurance Agency for Higher Education. (n.d.). The quality code. Retrieved October 7, 2022, from https://www.qaa.ac.uk/quality-code

Quality Matters. (n.d.). Quality matters. Retrieved November 22, 2022, from https://www.qualitymatters.org/

Quiliano-Terreros, R., Ramirez-Hernandez, D., & Barniol, P. (2019). Systematic mapping study 2012-2017: Quality and effectiveness measurement in MOOC. Turkish Online Journal of Distance Education, 223-247. https://doi.org/10.17718/tojde.522719

Quintana, R. M., & Tan, Y. (2019). Characterizing MOOC pedagogies: Exploring tools and methods for learning designers and researchers. Online Learning, 23(4), Article 4. https://doi.org/10.24059/olj.v23i4.2084

Rahardja, U., Aini, Q., Graha, Y. I., & Tangkaw, M. R. (2019). Gamification framework design of management education and development in industrial revolution 4.0. Journal of Physics: Conference Series, 1364. https://doi.org/10.1088/1742-6596/1364/1/012035

Ray, S. (2019, February). A quick review of machine learning algorithms. In 2019 International conference on machine learning, big data, cloud and parallel computing (COMITCon; pp. 35-39). IEEE. https://doi.org/10.1109/COMITCon.2019.8862451

Rincón-Flores, E. G., Mena, J., & Montoya, M. S. R. (2020). Gamification: A new key for enhancing engagement in MOOCs on energy? International Journal on Interactive Design and Manufacturing, 14, 1379-1393. https://doi.org/10.1007/s12008-020-00701-9

Romero-Rodriguez, L. M., Ramirez-Montoya, M. S., & Gonzalez, J. R. V. (2019). Gamification in MOOCs: Engagement application test in energy sustainability courses. IEEE Access, 7, 32093-32101. https://doi.org/10.1109/ACCESS.2019.2903230

Sabjan, A., Abd Wahab, A., Ahmad, A., Ahmad, R., Hassan, S., & Wahid, J. (2021). MOOC quality design criteria for programming and non-programming students. Asian Journal of University Education, 16(4), 61. https://doi.org/10.24191/ajue.v16i4.11941

Sezgin, S., & Yüzer, T. V. (2022). Analysing adaptive gamification design principles for online courses. Behaviour & Information Technology, 41(3), 485-501. https://doi.org/10.1080/0144929X.2020.1817559

Shukor, N. A., & Abdullah, Z. (2019). Using learning analytics to improve MOOC instructional design. International Journal of Emerging Technologies in Learning, 14(24), 6. https://doi.org/10.3991/ijet.v14i24.12185

Smyrnova-Trybulska, E., McKay, E., Morze, N., Yakovleva, O., Issa, T., & Issa, T. (2019). Develop and implement MOOCs unit: A pedagogical instruction for academics, case study. In E. Smyrnova-Trybulska, P. Kommers, N. Morze, & J. Malach (Eds.), Universities in the networked society (Critical Studies of Education, Vol. 10, pp. 103-132). Springer. https://doi.org/10.1007/978-3-030-05026-9_7

Stoica, A. S., Heras, S., Palanca, J., Julián, V., & Mihaescu, M. C. (2021). Classification of educational videos by using a semi-supervised learning method on transcripts and keywords. Neurocomputing, 456, 637-647. https://doi.org/10.1016/j.neucom.2020.11.075

Stracke, C. M., Tan, E., Moreira Texeira, A., Texeira Pinto, M. do C., Vassiliadis, B., Kameas, A., & Sgouropoulou, C. (2018). Gap between MOOC designers’ and MOOC learners’ perspectives on interaction and experiences in MOOCs: Findings from the global MOOC quality survey. 2018 IEEE 18th International Conference on Advanced Learning Technologies (pp. 1-5). https://doi.org/10.1109/ICALT.2018.00007

Stracke, C. M., & Trisolini, G. (2021). A systematic literature review on the quality of MOOCs. Sustainability, 13(11), 5817. https://doi.org/10.3390/su13115817

Su, P.-Y., Guo, J.-H., & Shao, Q.-G. (2021). Construction of the quality evaluation index system of MOOC platforms based on the user perspective. Sustainability, 13(20), 11163. https://doi.org/10.3390/su132011163

Sun, G., & Bin, S. (2018). Topic interaction model based on local community detection in MOOC discussion forums and its teaching. Educational Sciences: Theory & Practice, 18(6).

Sun, Y., Ni, L., Zhao, Y., Shen, X., & Wang, N. (2019). Understanding students’ engagement in MOOCs: An integration of self‐determination theory and theory of relationship quality. British Journal of Educational Technology, 50(6), 3156-3174. https://doi.org/10.1111/bjet.12724

Suwita, J., Kosala, R., Ranti, B., & Supangkat, S. H. (2019). Factors considered for the success of the massive open online course in the era of smart education: Systematic literature review. 2019 International Conference on ICT for Smart Society (pp. 1-5). https://doi.org/10.1109/ICISS48059.2019.8969844

Tjoa, A. M., & Poecze, F. (2020). Gamification as an enabler of quality distant education: The need for guiding ethical principles towards an education for a global society leaving no one behind. Proceedings of the 22nd International Conference on Information Integration and Web-Based Applications & Services (pp. 340-346). https://doi.org/10.1145/3428757.3429145

van der Zee, T., Davis, D., Saab, N., Giesbers, B., Ginn, J., van der Sluis, F., Paas, F., & Admiraal, W. (2018). Evaluating retrieval practice in a MOOC: How writing and reading summaries of videos affects student learning. Proceedings of the 8th International Conference on Learning Analytics and Knowledge (pp. 216-225). https://doi.org/10.1145/3170358.3170382

Wang, Q., Khan, M. S., & Khan, M. K. (2021). Predicting user perceived satisfaction and reuse intentions toward massive open online courses (MOOCs) in the COVID-19 pandemic: An application of the UTAUT model and quality factors. International Journal of Research in Business and Social Science, 10(2), 1-11. https://doi.org/10.20525/ijrbs.v10i2.1045

Wang, W., Guo, L., He, L., & Wu, Y. J. (2019). Effects of social-interactive engagement on the dropout ratio in online learning: Insights from MOOC. Behaviour & Information Technology, 38(6), 621-636. https://doi.org/10.1080/0144929X.2018.1549595

Wang, X., Lee, Y., Lin, L., Mi, Y., & Yang, T. (2021). Analyzing instructional design quality and students’ reviews of 18 courses out of the Class Central Top 20 MOOCs through systematic and sentiment analyses. The Internet and Higher Education, 50, 100810. https://doi.org/10.1016/j.iheduc.2021.100810

Wohlin, C. (2014). Guidelines for snowballing in systematic literature studies and a replication in software engineering. Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering (pp. 1-10). https://doi.org/10.1145/2601248.2601268

Wong, B. T. (2021). A survey on the pedagogical features of language massive open online courses. Asian Association of Open Universities Journal, 16(1), 116-128. https://doi.org/10.1108/AAOUJ-03-2021-0028

Xiao, C., Qiu, H., & Cheng, S. M. (2019). Challenges and opportunities for effective assessments within a quality assurance framework for MOOCs. Journal of Hospitality, Leisure, Sport & Tourism Education, 24, 1-16. https://doi.org/10.1016/j.jhlste.2018.10.005

Xing, W. (2018). Exploring the influences of MOOC design features on student performance and persistence. Distance Education, 40(1), 98-113. https://doi.org/10.1080/01587919.2018.1553560

Yuniwati, I., Yustita, A. D., Hardiyanti, S. A., & Suardinata, I. W. (2020). Development of assessment instruments to measure quality of MOOC-platform in engineering mathematics 1 course. Journal of Physics: Conference Series, 1567(2), 022102. https://doi.org/10.1088/1742-6596/1567/2/022102

Zhou, Y., & Li, M. (2020). Online course quality evaluation based on BERT. 2020 International Conference on Communications, Information System and Computer Engineering (pp. 255-258). https://doi.org/10.1109/CISCE50729.2020.00057

Towards Quality Assurance in MOOCs: A Comprehensive Review and Micro-Level Framework by Hanane Sebbaq and Nour-eddine El Faddouli is licensed under a Creative Commons Attribution 4.0 International License.