Volume 25, Number 4

Daniela Castellanos-Reyes1, Sandra Liliana Camargo Salamanca2, and David Wiley3

1Department of Teacher Education and Learning Sciences, North Carolina State University, Raleigh, North Carolina; 2Department of Educational Psychology, College of Education, University of Illinois Urbana-Champaign, Illinois; 3Lumen Learning, Portland, Oregon

Open educational resources (OER) have been praised for revolutionizing education. However, practitioners and instructors struggle to keep OER updated and to measure their impact on students’ performance. Few studies have analyzed the improvement of OER over time in relation to achievement. This longitudinal study uses learning analytics through the open-source Resource Inspection, Selection, and Enhancement (RISE) analysis framework to assess the impact of continuous improvement cycles on students’ outcomes. Panel data (i.e., performance and use) from 190 learning objectives of OER of an introductory sociology course were analyzed using a hierarchical linear model. Results show that more visits to an OER do not improve student achievement, but continuous improvement cycles of targeted OER do. Iterative implementation of the RISE analysis for resource improvement in combination with practitioners’ expertise is key for students’ learning. Given that the RISE classification accounted for 65% of the growth of students’ performance, suggesting a moderate to large effect, we speculate that the RISE analysis could be generalized to other contexts and result in greater student gain. Institutions and practitioners can improve OER’s impact by introducing learning analytics as a decision-making tool for instructional designers. Yet, user-friendly implementation of learning analytics in a “click-and-go” application is necessary for generalizability and scaling of continuous improvement cycles of OER and tangible improvement of learning outcomes. Finally, in this article, we identify the need for efficient applications of learning analytics that focus more on “learning” and less on analytics.

Keywords: open educational resources, OER, student performance, longitudinal analysis, learning analytics, higher education, RISE analysis

Open educational resources (OER) have been extensively used to create more equitable environments with the promise of closing the gap between historically underserved students and their peers (Caswell et al., 2008; Van Allen & Katz, 2020). Yet, students might find outdated OER that do not support actual learning despite the best efforts of instructional designers, practitioners, and institutions creating a sea of OER to share knowledge freely. Although practitioners can use, reuse, and remix OER to avoid reinventing the wheel, tools to help them identify which resources are updated or need attention are scant (Wiley, 2018). Ultimately, OER’s sustainability is challenged by a lack of a streamlined continuous improvement process that can identify which OER need further work (Avila et al., 2020; Wiley, 2007). Despite researchers finding evidence of OER’s effect on students’ performance (Colvard et al., 2018; Grimaldi et al., 2019; Tlili et al., 2023), practitioners have difficulty measuring the impact of OER’s continuous improvement on students’ learning. This ultimately creates greater challenges in securing funding for OER sustainability.

In response to instructional designers’, instructors’, and practitioners’ challenges in improving OER, Bodily et al. (2017) proposed the Resource Inspection, Selection, and Enhancement (RISE) framework, which leverages analytics data and achievement data to categorize OER based on how effectively they support students’ performance and then informs instructional designers and content developers which OER need enhancement. Despite the promise to advance sustainability and continuous improvement of OER, its evaluation is still bound to cross-sectional analysis, which highlights a lack of knowledge about RISE’s long-term effects. Knight et al. (2017) called for greater emphasis on temporal analysis (e.g., longitudinal studies) in analytics-driven studies because educational research takes the passage of time as self-evident in learning processes but rarely conceptualizes or operationalizes it.

Accounting for time in analytics-supported educational research is crucial to estimate learners’ interactions and eventually move to predictions that support learning (Castellanos-Reyes et al., 2023). In the field of OER, Wiley (2012) first introduced the concept of “continuous improvement cycles” to OER to account for temporality in resource improvement. Specifically, continuous improvement responds to the static perspective that quality assurance in educational resources is a one-time snapshot without accounting for “constantly getting better” (Wiley, 2012, para. 2).

Furthermore, existing assessment indicators of OER have focused on proxy measures of quality such as authors’ credentials or interactivity rather than actual student learning. Avila et al. (2020) highlighted that most learning analytics work focuses on students’ interactions with platforms and learning management systems (LMS), overlooking the role of the resources themselves. Moreover, little work has applied learning analytics to enhance OER and examine their relationship to students’ performance. Therefore, it is essential to show practitioners and researchers the value of adopting learning analytic approaches that translate to efficient use of resources (i.e., labor and time) while evidencing gains in student achievement.

The purpose of this study was to explore how the continuous improvement of OER over time influences students’ performance by using panel data of OER evaluation metrics through a growth curve model. The goal was to investigate how OER evolve through time when the RISE analysis is applied.

OER have been praised for revolutionizing education by providing open access to information at a minimal cost to students (Winitzky-Stephens & Pickavance, 2017). Institutional implementation of OER instead of traditional textbooks translates into millions of dollars of savings (Martin et al., 2017) for already-in-debt college students. However, the openness of OER does not mean that they are without cost. Griffiths et al. (2020) calculated that OER cost institutions an average of $65 per student. Moreover, Barbier (2021) criticized that OER production comes with a high cost of free labor from instructors, who often underestimate production costs (Aesoph, 2018).

An even more overlooked figure is the price of updating OER. The boom of open education in the early 2000s made educational institutions jump and devote resources to creating OER. Nowadays, countless digital repositories are developed at the highest quality in which academic rigor and resources are devoted to developing OER (Richardson et al., 2023). However, how these resources continue thriving and remain top-notch is a challenge. The production cycle that editorial houses and for-profit companies use to keep publishing updated and improved versions is well ahead of open-access counterparts. Therefore, it is crucial that instructors have the ability to estimate OER’s impact not only on students’ access to education but also on their performance to secure institutional and governmental funding.

Wiley (2012) questioned the sustainability of OER, specifically, the extent to which institutions devote funds to continue working on them after depletion and whether sustainability is at all part of the design and proposal of OER. If affordable continuous improvement is not part of the planning, OER and their repositories are unfortunately doomed to oblivion (Aesoph, 2018). Another risk of not accounting for the sustainability of OER is that institutions might fund endless OER and their repositories and create new products from scratch instead of improving what they already have (Müller, 2021)—in other words, reinventing the wheel. In part because developers need to show new products as proof of the value of their work and in part because it is challenging for institutions to show how the continuous improvement of OER ultimately benefits students’ performance, it is essential for institutions to direct measures of OER quality on how they relate to students’ actual learning. In response to these challenges, researchers and practitioners have proposed automated and learning analytic techniques to identify which OER need to be updated (Avila et al., 2017; Müller, 2021).

Educational researchers and practitioners have used a variety of instruments to identify the need to update OER, such as user experience surveys and peer expert identification (Richardson et al., 2023). However, these processes are resource intensive due to the low survey response rate, the cost of paying experts to review OER, and the time it takes to complete the process. One popular way to overcome this obstacle is to use large-scale data, an approach known as learning analytics, to identify educational resources “at risk” and needing improvement, eventually increasing students’ performance (Ifenthaler, 2015). For example, Giannakos et al. (2015) combined clickstream data, achievement data, and student log data from an LMS to identify which copyrighted videos needed to be updated. Likewise, Avila et al. (2017) first introduced the ATCE tool that focuses on creating and evaluating accessible OER. The ATCE tool supports teachers in developing OER by leveraging automatic accessibility evaluation, expert evaluation, and LMS capabilities (Avila et al., 2020). These data are later condensed in a dashboard that informs teachers on how accessible the OER are. However, the ATCE evaluation focuses on proxy quality measures rather than actual student usage or performance.

Researchers have made similar efforts to apply learning analytics to improve OER. However, there is still a need to evaluate how OER specifically impact students’ achievement and, even more so, whether instructional designers’ efforts to update OER are related to student performance. Contemporary to the work of Avila et al. (2017) with the ATCE tool, Bodily et al. (2017) highlighted the need to provide OER quality assurance tools that are independent from an LMS. Independence from an LMS allows instructors and practitioners in under-resourced institutions to provide the highest quality of education with already available free platforms (Castellanos-Reyes et al., 2021). Bodily et al.’s (2017) answer to this need was the RISE framework and the subsequent publication of an open-source analytical package (Wiley, 2018) detailed in the following section.

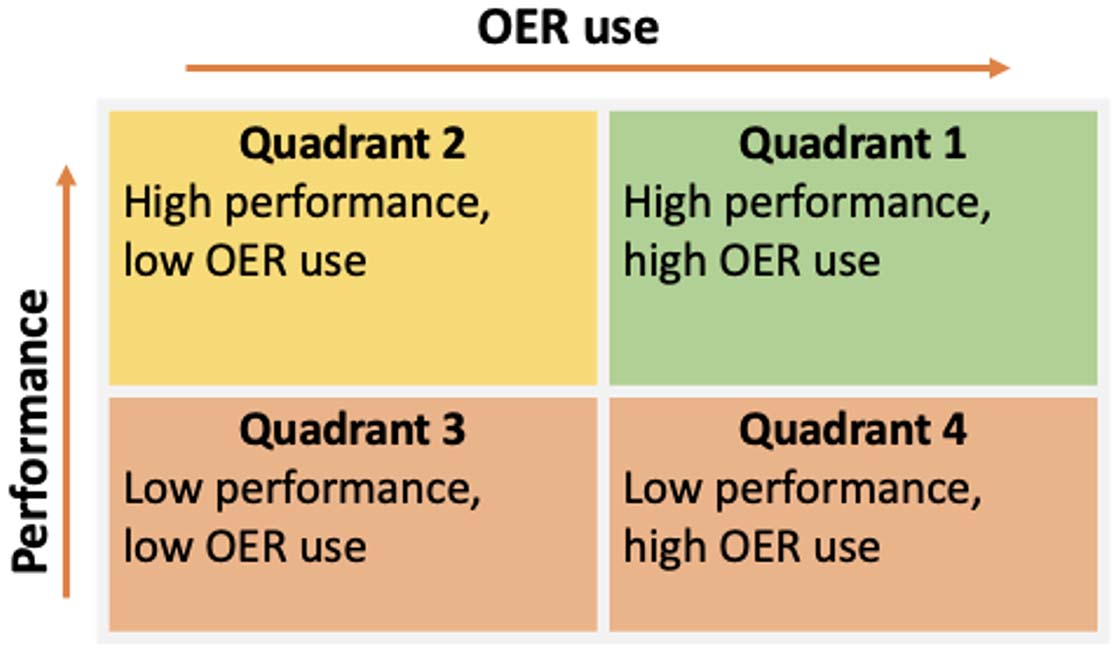

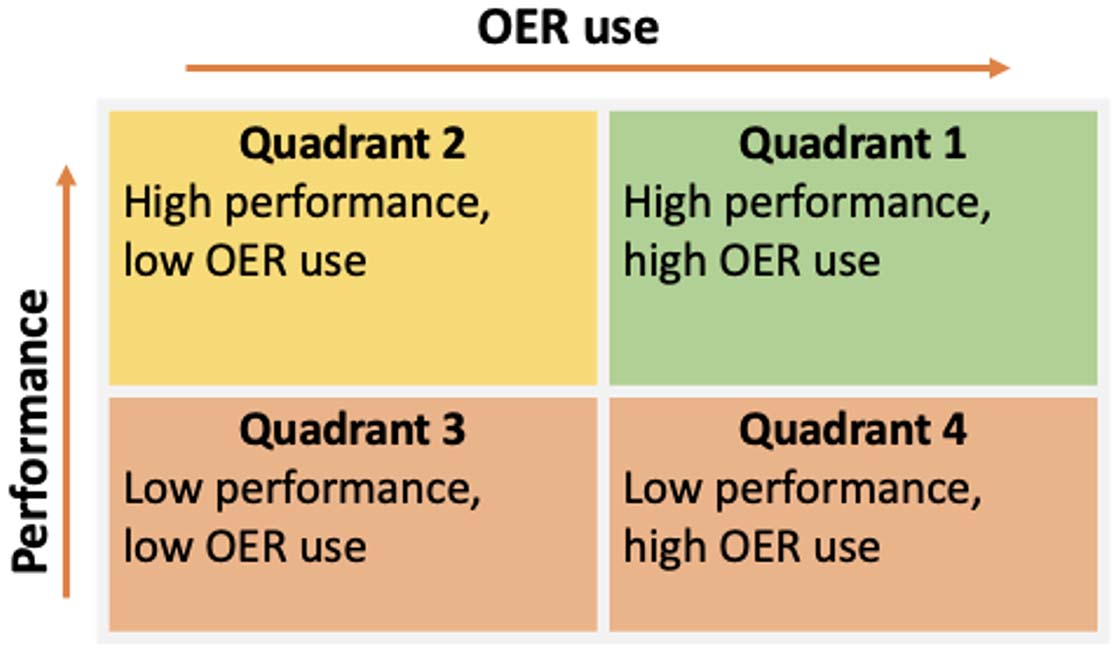

Bodily et al. (2017) proposed a learning analytic approach, called the RISE framework, that leverages digital trace data (i.e., number of views) and achievement data (i.e., scores in activities aligned to learning objectives) to identify resources that need further revision. The RISE framework is a two-by-two quadrant that classifies OER based on the relationship between students’ performance and use. The y-axis represents students’ performance on assessments aligned to a specific learning outcome (i.e., high vs. low grades), and the x-axis represents students’ interaction metrics with resources aligned to the same outcome (e.g., page views, time, and OER ratings). Quadrant 1 includes OER with greater than average use and greater than average grades. This is the ideal situation for any OER because it implies that the resource supported the student to achieve the outcome and that practitioners’ work on alignment between assessment and content was on target (Bodily et al., 2017). Quadrant 2 has OER with less than average use and greater than average grades. Potential explanations on why resources are in this quadrant include high student entry skills and knowledge, assessment was too easy (i.e., the difficulty parameter is below 0), and/or the resource failed to catch student attention (i.e., OER use) but allowed students to obtain a passing score (i.e., performance). Quadrant 3 identifies OER with less than average use and grades. Resources in this quadrant may indicate a lack of student engagement or challenging assessment items. Quadrant 3 may also indicate that resources were insufficient to support student assessment. Finally, quadrant 4 represents greater than average use and less than average grades. Although the RISE analysis is not interpreted as a continuum, resources in quadrant 4 are the ones that need priority attention from instructional designers as they indicate poor alignment between OER and assessment (Bodily et al., 2017). 
Figure 1 shows the RISE framework and its quadrants.
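The quadrant logic described above can be illustrated with a short Python sketch. This is a minimal illustration under stated assumptions, not the implementation in the rise package: the function name, the sample learning objectives, and the decision to treat values exactly equal to the mean as "not greater than average" are all hypothetical.

```python
from statistics import mean


def rise_quadrant(use, grade, mean_use, mean_grade):
    """Return the RISE quadrant (1-4) for one learning objective.

    Assumption: a value exactly equal to the mean counts as "not greater".
    """
    high_use = use > mean_use
    high_grade = grade > mean_grade
    if high_grade and high_use:
        return 1  # greater than average use and grades
    if high_grade:
        return 2  # less than average use, greater than average grades
    if not high_use:
        return 3  # less than average use and grades
    return 4      # greater than average use, less than average grades


# Hypothetical (use, grade) pairs for four learning objectives.
objectives = {
    "LO-A": (1.2, 0.90),
    "LO-B": (0.4, 0.88),
    "LO-C": (0.3, 0.60),
    "LO-D": (1.1, 0.62),
}
mean_use = mean(u for u, _ in objectives.values())
mean_grade = mean(g for _, g in objectives.values())
quadrants = {
    name: rise_quadrant(u, g, mean_use, mean_grade)
    for name, (u, g) in objectives.items()
}
print(quadrants)
```

In this toy data each learning objective lands in a different quadrant, with LO-D (high use, low grades) being the kind of resource that would need priority attention.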

Simply falling into one of the quadrants that flag a need for further attention (quadrants 2–4) does not merit deploying resources for a continuous improvement cycle. On top of classifying an OER’s learning objective into a quadrant, the RISE analysis also uses an additive z-score approach to identify outliers and trigger an intervention (Bodily et al., 2017). A cycle of continuous improvement is completed each time an OER is identified through the quadrant classification as an outlier needing further development and is then modified. Practitioners and researchers can use the RISE framework via the open R package (rise) (Wiley, 2018) to identify OER that need improvement based on the quadrant classification and apply an iterative approach towards OER sustainability.
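One way to picture the additive z-score step is the sketch below. It assumes that the additive score is the sum of the absolute z-scores for use and grades, and that only quadrant 4 resources are ranked; the exact rule used by the rise package may differ, and the function names, the `top_n` parameter, the use of the population standard deviation, and the sample data are hypothetical.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values using the population standard deviation (an assumption)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def flag_quadrant4(names, use, grades, top_n=1):
    """Rank quadrant 4 objectives (high use, low grades) by |z_use| + |z_grade|."""
    z_use, z_grade = z_scores(use), z_scores(grades)
    scored = [
        (abs(zu) + abs(zg), name)
        for name, zu, zg in zip(names, z_use, z_grade)
        if zu > 0 and zg < 0  # quadrant 4 only
    ]
    # Largest additive score first: the most extreme outliers.
    return [name for _, name in sorted(scored, reverse=True)[:top_n]]


# Hypothetical data for five learning objectives.
names = ["A", "B", "C", "D", "E"]
use = [1.0, 2.0, 3.0, 4.0, 5.0]
grades = [0.90, 0.80, 0.85, 0.70, 0.50]
print(flag_quadrant4(names, use, grades, top_n=2))
```

Ranking within a quadrant rather than flagging every member reflects the point made above: quadrant membership alone does not justify a continuous improvement cycle.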

Despite the practical application of the RISE analysis, Bodily et al. (2017) clarified that the framework focuses on flagging resources that need evaluation rather than providing pedagogical recommendations on how to improve them. It is up to the instructional designers’ expertise to diagnose why the OER is not supporting students’ outcomes as expected. The RISE analysis, as with most learning analytics approaches, does not provide an all-knowing ultimate verdict on learning (Pardo et al., 2015; Romero-Ariza et al., 2023) and, therefore, should be used with caution and evaluated on its effectiveness over time in students’ learning.

Figure 1

RISE Framework for Continuous Improvement of OER

Note. RISE = resource inspection, selection, and enhancement; OER = open educational resources.

Although we know that the RISE analysis estimates OER’s need for improvement (Bodily et al., 2017), we do not know whether applying the RISE analysis to improve OER significantly improves students’ achievement over time. Remediating this gap is urgent as OER are significant instruments of social transformation in education (Caswell et al., 2008) and more so with the accelerated need to transition to digital education (Tang, 2021). Furthermore, estimating the effectiveness of the RISE analysis would augment its generalizability and application to other OER. Such need opens these questions:

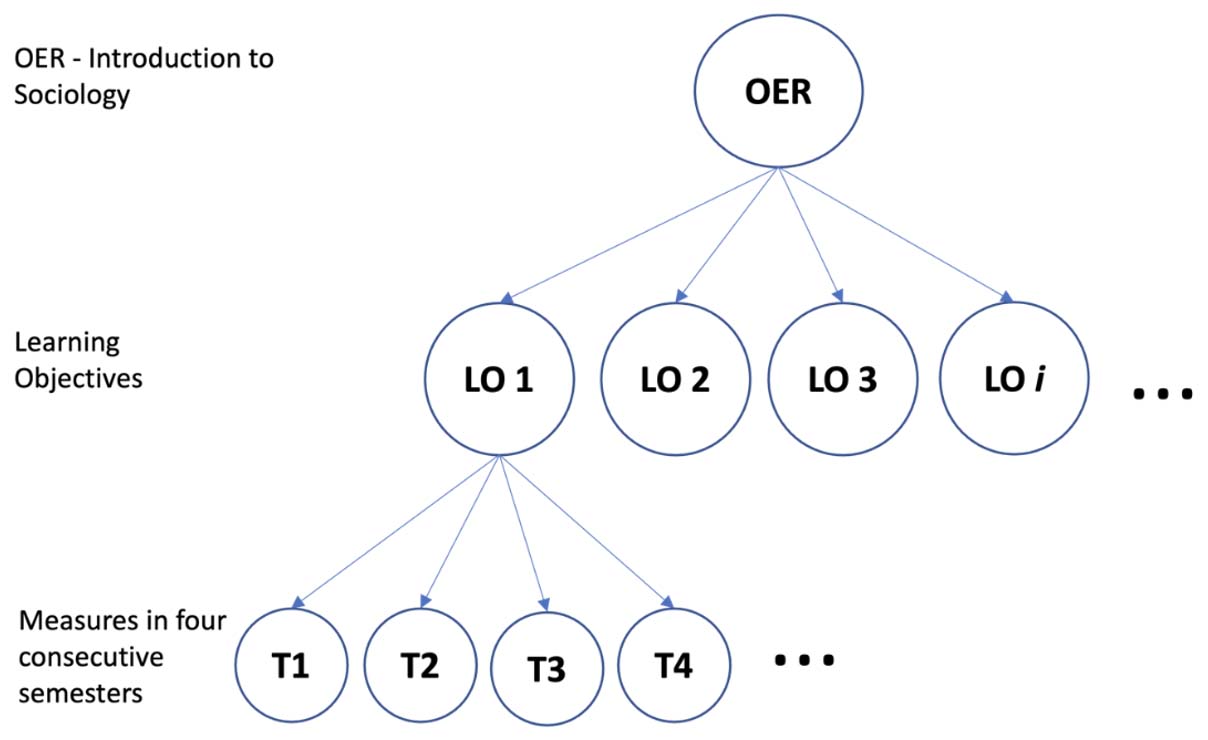

We used a multilevel modeling (MLM) approach, also known as hierarchical linear modeling, to estimate the effect of the RISE analysis on students’ achievement over time. MLM is a type of regression analysis in which researchers can establish nested analyses that could be hierarchical (e.g., students within classes within schools). Researchers also use MLM to conduct longitudinal analysis in which the same units of analysis are followed over time, creating a nested sample. Such an approach is called a growth curve model (GCM; Raudenbush & Bryk, 2002). GCM for longitudinal analyses is useful to account for individual variations (i.e., growth) over time and to identify the predictors of growth using a multiple-time point design. Ultimately, longitudinal analysis aims to “understand and characterize changes in an assessment measure over time” (Boscardin et al., 2022). Unlike other longitudinal approaches, such as the repeated measures analysis of variance (ANOVA), GCM is a flexible approach, tolerant to discrete variables, missing data (Boscardin et al., 2022), unequal time point measurements, and time-varying covariates (Curran et al., 2010). Figure 2 shows the nested data structure.

Figure 2

Illustration of a Growth Curve Model as a Special Application of a Multilevel Model

Note. OER = open educational resources; LO = learning objective; T = time-wave.

Data was collected from the OER-based curriculum of an undergraduate course titled Introduction to Sociology (https://lumenlearning.com/courses/introduction-to-sociology/) provided by the educational company Lumen over four consecutive semesters from spring 2020 to fall 2021. The units of analysis were 190 learning objectives associated with the OER used in the course. No granular student data was used in this study. Data was obtained from users’ interaction (i.e., visits to an OER) and achievement (i.e., quizzes) with the OER associated with each learning objective. The course had 18 modules covering topics such as “Stratification and Inequality,” “Population, Urbanization, and the Environment,” and “Work and the Economy.” Each module had between two and four learning objectives, with three being the most common number per module. Sample learning objectives included “Explain global stratification,” “Distinguish mechanical solidarity from organic solidarity,” and “Describe demographic measurements like fertility and mortality rates.” All OER used in the course were aligned with individual learning objectives. Assessment items were multiple-choice questions and fill-in-the-blank questions. Many of the materials used in this course were based on the OpenStax Sociology 2e text (https://openstax.org/books/introduction-sociology-2e/pages/1-introduction-to-sociology).

The spring 2020 run of the course had 127 learning objectives, after which 63 learning objectives were added to the remaining runs of the course for a total of 190. Sample learning objectives added included “Discuss how symbolic interactionists view culture and technology” and “Define globalization and describe its manifestation in modern society.” The remaining three runs of the course had all 190 learning objectives. Although the first wave of data had fewer learning objectives, we decided to include it in the analysis to increase statistical power and improve precision (Raudenbush & Liu, 2001). All materials were made available with the Creative Commons license CC BY 4.0, which indicates that users can share and adapt the material under the attribution terms. Readers can request access to the full course content and outcomes via the Lumen website (https://lumenlearning.com/courses/introduction-to-sociology/).

Students’ quiz scores related to each OER were used as performance data and were collected through an OER courseware platform. Assessment data from all questions aligned with the same learning objective was aggregated to obtain the average score. For each learning objective, the dataset contained an average student performance per academic semester (see Time–Wave).

The pageviews that an OER received were used as a proxy measure for OER interaction and were collected through Google Analytics. Given that pageviews alone do not measure actual student use, OER’s pageviews were normalized “by the number of students who attempted assessment” (Bodily et al., 2017, p. 111) for a learning objective. For each learning objective, there was a single value of OER use.
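The normalization described above amounts to a simple ratio. The following is a minimal sketch; the function name and the sample counts are hypothetical and do not come from the study’s data.

```python
def normalized_use(pageviews, students_attempted):
    """Pageviews per student who attempted the aligned assessment."""
    if students_attempted <= 0:
        raise ValueError("students_attempted must be positive")
    return pageviews / students_attempted


# Hypothetical counts for one learning objective in one semester.
use = normalized_use(pageviews=420, students_attempted=500)
print(round(use, 2))
```

Dividing by the number of students who attempted the assessment keeps a learning objective in a large section from looking more heavily used than one in a small section simply because more students were enrolled.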

OER were assessed over four consecutive semesters from spring 2020 to fall 2021. Spring 2020 was used as the reference wave. Our analysis focused on the OER aggregated end-of-semester metrics. The metrics collected at the end of each semester served to flag which OER needed further attention (i.e., update) before the start of the next semester. OER review and subsequent updates/changes were considered a continuous improvement cycle.

RISE analysis classifies the learning objectives in the quadrants explained previously (see Figure 1 and Bodily et al., 2017). For this study, the RISE quadrant is a categorical variable. For the analyses below, dummy variables encoded membership in a particular quadrant (i.e., quadrants 2, 3, and 4). The reference category for all the dummy variables, encoded by zero, was quadrant 1.
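The dummy coding described above can be sketched as follows. The function and key names are hypothetical; the only element taken from the text is that quadrant 1 is the reference category, encoded by zeros on all three dummy variables.

```python
def quadrant_dummies(quadrant):
    """Encode a RISE quadrant as three 0/1 indicators, with quadrant 1 as reference."""
    if quadrant not in (1, 2, 3, 4):
        raise ValueError("quadrant must be 1, 2, 3, or 4")
    return {f"quadrant{q}": int(quadrant == q) for q in (2, 3, 4)}


print(quadrant_dummies(1))  # reference category: all zeros
print(quadrant_dummies(4))
```

With this coding, each quadrant coefficient in the models is read as a difference from quadrant 1.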

In this study, we used GCMs to follow the change in students’ performance on each learning objective over time with respect to OER use (RQ1, see Figure 2) and RISE analysis classification (RQ2). The R package “rise” version 1.0.4 (Wiley, 2018) was used for the RISE analysis classification. Two sequences of models, differing in their respective full models, were developed, one per research question. Both sequences shared the same base null model, a model where average performance varies among OER. With this model, we sought to determine how much of the variance in students’ performance lies between learning objectives. The following was the equation for the null model:

$$\text{Performance}_{ij} = \beta_{00} + r_{0j} + \varepsilon_{ij} \tag{1}$$

where $\text{Performance}_{ij}$ was the average performance in learning objective $j$ at wave $i$; $\beta_{00}$ was the average performance across all learning objectives and waves; $r_{0j}$ was the difference between the average performance of learning objective $j$ and the overall average; and $\varepsilon_{ij}$ was the residual for learning objective $j$ at wave $i$.

The two sequences also shared the growth model, in which time was incorporated. The growth model sought to identify the change in performance over the four semesters. The equation that represents this model was:

$$\text{Performance}_{ij} = \beta_{00} + \beta_{10}\text{Wave}_{i} + r_{0j} + r_{ij}\text{Wave}_{i} + \varepsilon_{ij} \tag{2}$$

where $\text{Wave}_{i}$ was the wave, $\beta_{10}$ was the average growth rate across all learning objectives, and $r_{ij}$ was the difference between the growth rate of learning objective $j$ and the average growth rate. All other terms are defined as in Equation 1, the null model.

The full OER use model was defined to determine to what extent OER use is related to a systematic growth in students’ achievement across four consecutive semesters. It was obtained by adding a fixed slope for the standardized OER use measure and its interaction with the wave to the growth model. The resulting equation for this full model was:

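$$\text{Performance}_{ij} = \beta_{00} + \beta_{10}\text{Wave}_{i} + \beta_{01}\text{OERuse}_{j} + \beta_{11}(\text{OERuse}_{j} \times \text{Wave}_{i}) + r_{0j} + r_{ij}\text{Wave}_{i} + \varepsilon_{ij} \tag{3}$$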

where $\beta_{01}$ was the fixed effect of OER use of learning objective $j$ on performance and $\beta_{11}$ was the fixed effect of the interaction of OER use of learning objective $j$ and wave $i$ on performance. All the other terms were defined in Equations 1 and 2.

The full RISE model sought to identify to what extent the RISE analysis of OER is related to a systematic growth in students’ performance across four consecutive semesters. The equation for this model was:

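$$
\begin{aligned}
\text{Performance}_{ij} = {} & \beta_{00} + \beta_{10}\text{Wave}_{i} + \beta_{02}\text{Quadrant2}_{j} + \beta_{03}\text{Quadrant3}_{j} + \beta_{04}\text{Quadrant4}_{j} \\
& + \beta_{12}(\text{Quadrant2}_{j} \times \text{Wave}_{i}) + \beta_{13}(\text{Quadrant3}_{j} \times \text{Wave}_{i}) + \beta_{14}(\text{Quadrant4}_{j} \times \text{Wave}_{i}) \\
& + r_{0j} + r_{ij}\text{Wave}_{i} + \varepsilon_{ij}
\end{aligned} \tag{4}
$$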

where $\beta_{02}$, $\beta_{03}$, and $\beta_{04}$ were the fixed effects of the learning objective being classified in RISE quadrants 2, 3, or 4 on performance. Quadrant 1 served as the reference group in the model. The terms $\beta_{12}$, $\beta_{13}$, and $\beta_{14}$ were the interactions between learning objective $j$ being classified in one of the RISE quadrants and wave $i$ on performance. All other terms were defined in Equations 1, 2, and 3.

A combined model using OER use and RISE classification was not developed because OER use and RISE classification are highly collinear, as OER use is part of the RISE classification analysis.

The RISE analysis following learning objectives over time allowed us to identify which learning objectives had consistently low performance. Line charts represented student performance in OER associated with specific learning objectives. The worst performing learning objectives fell in the outermost region of quadrant 4 based on the additive z-score approach implemented in the RISE analysis (Wiley, 2018). Potential multicollinearity issues were mitigated given that variables were standardized.

Table 1 shows the descriptive statistics per wave and the percentage of learning objectives classified in each quadrant. The OER use and performance columns in Table 1 show that the highest OER use was in spring 2020; however, this was also the semester with the lowest performance, which could be explained by the pandemic outbreak. It is worth noting that fall 2021 had the lowest OER use average without sacrificing performance average, indicating an improvement in OER efficiency for student performance. Preliminary examination of resource classification using the RISE analysis indicates greater variation in quadrants 2, 3, and 4. The percentage of resources classified in quadrant 1 is somewhat stable over time. Unlike quadrant 1, the other quadrants show variations in the percentage of resources classified. Quadrant 2 was roughly stable in 2020, increased in spring 2021, and decreased slightly in fall 2021. Quadrant 3 showed a decrease in fall 2020 and then an upward trend until the last wave. Quadrant 4 indicated an increase from wave 1 to 2 and then a sharp decrease between waves 3 and 4.

Table 1

Descriptive Statistics Per Wave and Rate of Classified Learning Objectives Per Quadrant

| Wave | N | OER use M (SD) | Performance M (SD) | Q1 % | Q2 % | Q3 % | Q4 % |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Spring 2020 | 127 | 0.84 (0.26) | 0.79 (0.13) | 29.13 | 23.62 | 19.68 | 27.55 |
| Fall 2020 | 190 | 0.74 (0.29) | 0.80 (0.09) | 30.00 | 23.15 | 16.84 | 30.00 |
| Spring 2021 | 190 | 0.72 (0.33) | 0.82 (0.08) | 27.89 | 26.84 | 20.52 | 24.73 |
| Fall 2021 | 190 | 0.33 (0.14) | 0.81 (0.08) | 29.47 | 25.26 | 25.78 | 19.47 |

Note. OER use and Performance are standardized. OER = open educational resources; Q1 = quadrant 1; Q2 = quadrant 2; Q3 = quadrant 3; Q4 = quadrant 4. M = mean. SD = standard deviation.

Table 2 shows the correlations among variables per wave. Waves 1 and 3, corresponding to spring 2020 and spring 2021 respectively, are displayed below the diagonal. Waves 2 and 4, corresponding to fall 2020 and fall 2021, are above the diagonal. Preliminary inspection of correlations suggests that the largest negative correlations are between performance and quadrant 4 and between OER use and quadrant 1. As expected, the largest positive correlations are between performance and quadrant 1.

Table 2

Correlations Among Variables During Waves 1 to 4

| | Spring 2020 (Wave 1) – Fall 2020 (Wave 2) | | | | | | Spring 2021 (Wave 3) – Fall 2021 (Wave 4) | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Variable | 1 | 2 | 3 | 4 | 5 | 6 | 1 | 2 | 3 | 4 | 5 | 6 |
| 1. Perf. | — | -.22 | .53 | .34 | -.38 | -.54 | — | .12 | .45 | .42 | -.58 | -.34 |
| 2. Use | -.21 | — | -.59 | .45 | -.37 | .48 | -.16 | — | -.46 | .55 | -.49 | .48 |
| 3. Q1 | .54 | -.54 | — | -.36 | -.29 | -.43 | .53 | -.51 | — | -.38 | -.38 | -.32 |
| 4. Q2 | .39 | .43 | -.36 | — | -.25 | -.36 | .35 | .48 | -.38 | — | -.34 | -.29 |
| 5. Q3 | -.37 | -.45 | -.32 | -.28 | — | -.29 | -.35 | -.42 | -.32 | -.31 | — | -.29 |
| 6. Q4 | -.59 | .54 | -.40 | -.34 | -.31 | — | -.59 | .44 | -.36 | -.35 | -.29 | — |

Note. The results for waves 1 (spring 2020) and 3 (spring 2021) are shown below the diagonal, while waves 2 (fall 2020) and 4 (fall 2021) are above. Perf. = performance; Use = OER use; Q1 = quadrant 1; Q2 = quadrant 2; Q3 = quadrant 3; Q4 = quadrant 4.

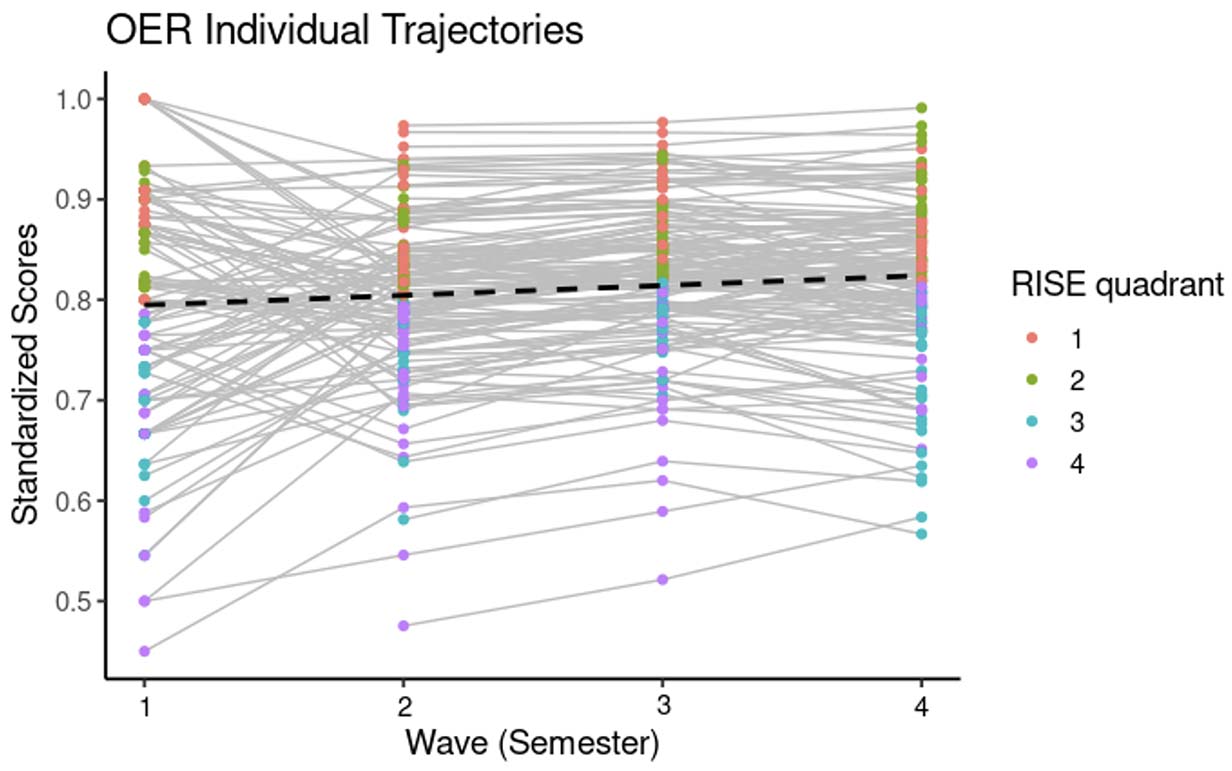

Of the 190 learning objectives, 135 changed quadrant at least once. Figure 3 shows a line plot of the learning objectives that changed quadrant over time in relation to student performance. Colors indicate quadrant change. The dashed line shows the linear pattern that the data follow, indicating that over time there was a slight increase in student performance. Ideally, learning objectives should move down quadrants over time, indicating that continuous improvement cycles triggered by the RISE analysis are improving OER quality. Visual examination of the fourth wave of data shows a decrease in learning objectives classified in quadrant 4, suggesting quality improvement.

Figure 3

Trajectory of Learning Objectives that Changed RISE Quadrant Over Time (N = 135)

Note. RISE = resource inspection, selection, and enhancement; OER = open educational resources.

The modelling results showing average score growth in the Introduction to Sociology course are displayed in Table 3.

The average performance among learning objectives was 0.80, p < .001. The intraclass correlation (ICC) calculated with the null model shows that the learning objectives explained 77% of the variance in performance. The larger the variation, the more appropriate the use of a GCM. A variation of 77.2% justifies the nesting effect of the individual learning objectives over time. In other words, individual differences in performance per the learning objectives exist.
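For readers less familiar with the ICC, the sketch below shows the standard definition for a two-level null model: the between-unit variance divided by the total variance. The function name and the input values are hypothetical and are chosen only to make the arithmetic easy to follow; they are not the variance components estimated in this study.

```python
def intraclass_correlation(between_variance, within_variance):
    """Share of total variance that lies between units (here, learning objectives)."""
    total = between_variance + within_variance
    if total <= 0:
        raise ValueError("total variance must be positive")
    return between_variance / total


# Hypothetical variance components, for illustration only.
icc = intraclass_correlation(between_variance=3.0, within_variance=1.0)
print(icc)
```

An ICC close to 1 means that most of the variation is between learning objectives rather than across waves within a learning objective, which is the situation in which a multilevel model is most useful.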

As summarized in Table 3 for the growth model (unconditional growth model), the mean growth rate was statistically different from zero [β10 = 0.0074, t(189) = 3.172, p = .002]. The average performance for learning objectives was 0.796, p < .001, and it increased significantly by 0.0074 points every semester.

OER Use Model

The results of the OER use model showed that the mean performance for learning objectives was 0.837 [β00 = 0.837, t(189) = 46.92, p < .001]. For every one-unit increase in OER use, performance per learning objective decreased on average by 0.06 points [β01 = -0.059, t(189) = -2.772, p = .006]. The mean growth rate of performance decreased on average for every semester by 0.01. However, this decrease was not statistically significant [β10 = -0.01, t(189) = -1.806]. The student performance of learning objectives with higher OER use increased, on average, by 0.0296 per semester [β11 = 0.0296, t(189) = 3.766, p < .001].

In the OER use model, OER use per learning objective accounted for 4% of students’ performance variance and 0.01% of students’ performance growth rates variance. Although OER use is significant in explaining the initial point differences and variation in growth, the practical significance is small. It is likely that the significance was due to the large sample size, and therefore, the contribution of OER use to increased student performance over time is trivial.

RISE Model

The results of the RISE model focused on the relation between the RISE analysis classification and performance. Results showed that the mean performance was 0.859 [β00 = 0.8588, t(189) = 113.27, p < .001]. On average, performance decreased over time by 0.0014 per semester, although this decrease was not statistically significant [β10 = -0.0014, t(189) = -.474, p = .635]. Quadrant 1 (i.e., high performance, high use) served as the reference category to compare the effect of the RISE analysis classification on performance. As expected, the effect of the RISE analysis on performance was negative for specific quadrants. Quadrants 3 {i.e., low performance, low use; [β03 = -0.1325, t(189) = -13.29, p < .001]} and 4 {i.e., low performance, high use; [β04 = -0.1312, t(189) = -13.16, p < .001]} were significantly associated with lower starting points in students’ performance. However, the interaction between the RISE analysis classification and time showed that performance on the learning objectives classified in quadrant 3 significantly increased by 0.0148 per semester [β13 = 0.0148, t(189) = 3.22, p < .01] with respect to learning objectives in quadrant 1. Likewise, performance on learning objectives classified in quadrant 4 also increased significantly by 0.0221 per semester [β14 = 0.0221, t(189) = 4.78, p < .001]. The results indicate that the classification of learning objectives using the RISE analysis triggered continuous improvement cycles of the OER, addressing quality issues and resulting in better student performance over time.
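To make the interaction terms concrete, the sketch below plugs the fixed-effect estimates reported in Table 3 for the RISE model into the fixed part of Equation 4. Two assumptions are ours and are not stated in the text: waves are coded 0 to 3 with spring 2020 as 0, and a learning objective is held in the same quadrant across waves, which is an illustrative simplification because many learning objectives changed quadrant. Random effects are ignored.

```python
# Fixed-effect estimates copied from Table 3 (RISE model).
RISE_FIXED = {
    "intercept": 0.8588,
    "wave": -0.0014,
    "quadrant": {1: 0.0, 2: -0.0112, 3: -0.1325, 4: -0.1312},
    "quadrant_x_wave": {1: 0.0, 2: 0.0026, 3: 0.0148, 4: 0.0221},
}


def predicted_performance(quadrant, wave):
    """Fixed-effects prediction; wave coded 0-3 (assumed), random effects ignored."""
    f = RISE_FIXED
    slope = f["wave"] + f["quadrant_x_wave"][quadrant]
    return f["intercept"] + f["quadrant"][quadrant] + slope * wave


for q in (1, 4):
    trajectory = [round(predicted_performance(q, w), 4) for w in range(4)]
    print(f"Quadrant {q}: {trajectory}")

gap_start = predicted_performance(1, 0) - predicted_performance(4, 0)
gap_end = predicted_performance(1, 3) - predicted_performance(4, 3)
print(round(gap_start, 4), round(gap_end, 4))
```

Under these assumptions, the predicted gap between quadrant 1 and quadrant 4 narrows from about 0.13 at the first wave to about 0.065 at the fourth, which is one way to read the positive quadrant 4 by wave interaction.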

In the RISE model, the data showed that using the RISE analysis classification in the model accounts for 65% of the variation in students’ performance over time. Therefore, we conclude that the effect of the RISE analysis and subsequent continuous improvement cycles is moderate to large.
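The percentage above can be approximately reproduced from Table 3 if it is read as a proportional reduction in the random-intercept variance relative to the growth model. That reading is an assumption on our part, since the formula is not stated in the text; the function name is hypothetical, and the variance components are copied from Table 3.

```python
def variance_explained(baseline_variance, conditional_variance):
    """Proportional reduction in a variance component after adding predictors."""
    return (baseline_variance - conditional_variance) / baseline_variance


# Intercept variance components from Table 3.
GROWTH_INTERCEPT_VAR = 0.0109    # growth model
OER_USE_INTERCEPT_VAR = 0.01047  # OER use model
RISE_INTERCEPT_VAR = 0.0038      # RISE model

rise_share = variance_explained(GROWTH_INTERCEPT_VAR, RISE_INTERCEPT_VAR)
oer_use_share = variance_explained(GROWTH_INTERCEPT_VAR, OER_USE_INTERCEPT_VAR)
print(f"RISE model: {rise_share:.1%}")
print(f"OER use model: {oer_use_share:.1%}")
```

Under this assumption the RISE model yields roughly 65% and the OER use model roughly 4%, in line with the figures reported for the two models.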

Table 3

Linear Model of Growth in Average Standardized Score by OER Use and RISE Analysis Quadrants

| Effect | Parameter | Null model (Model 1) | | Growth (Model 2) | | OER use (Model 3) | | RISE (Model 4) | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Fixed | | Coefficient | SE | Coefficient | SE | Coefficient | SE | Coefficient | SE |
| Performance (intercept) | β00 | 0.8081*** | 0.0062 | 0.7960*** | 0.007 | 0.837*** | 0.0178 | 0.8588*** | 0.0075 |
| OER use | β01 | | | | | -0.0587** | 0.0211 | | |
| Time–wave | β10 | | | 0.0074** | 0.002 | -0.0108 | 0.0059 | -0.0014 | 0.003 |
| OER use ✕ wave | β11 | | | | | 0.0296** | 0.0078 | | |
| Quadrant 2 | β02 | | | | | | | -0.0112 | 0.010 |
| Quadrant 3 | β03 | | | | | | | -0.1325*** | 0.009 |
| Quadrant 4 | β04 | | | | | | | -0.1312*** | 0.009 |
| Quadrant 2 ✕ wave | β12 | | | | | | | 0.0026 | 0.004 |
| Quadrant 3 ✕ wave | β13 | | | | | | | 0.0148** | 0.004 |
| Quadrant 4 ✕ wave | β14 | | | | | | | 0.0221*** | 0.004 |
| Random | | Variance component | SD | Variance component | SD | Variance component | SD | Variance component | SD |
| Individual intercept variance (between learning objectives) | r0j | 0.0067 | 0.0826 | 0.0109 | 0.1044 | 0.01047 | 0.1023 | 0.0038 | 0.06181 |
| Wave variance (within learning objectives) | rij | | | 0.0006 | 0.0255 | 0.0006 | 0.0255 | 0.0002 | 0.01515 |
| Model summary | | | | | | | | | |
| ICC | | 72.2% | | 84.1% | | 84.2% | | 70.1% | |
| AIC | | -1711.9 | | -1822.9 | | -1835.1 | | -2148.2 | |
| BIC | | -1698.3 | | -1795.6 | | -1798.7 | | -2093.6 | |

Note. The number of observations was n = 697. Intraclass correlation coefficient = .52. Model 1 is an unconditional growth model. Model 2 adds the main effect of OER use per learning objective. Model 3 uses RISE analysis as a predictor only. Model 4 shows interaction between RISE analysis and time. The ICC for Model 1 is 0.84. In Model 1, β00 was the average performance across all learning objectives and waves. In Models 2 and 3, β10 was the average growth rate across all learning objectives. In Model 3, β11 was the fixed effect of the interaction of OER use of learning objective j and wave i on performance. In Model 4, β02, β03, and β04 were the fixed effects of the learning objective being classified in RISE quadrants 2, 3, or 4 on performance. In Model 4, β12, β13, and β14 were the interactions between a learning objective being classified in one of the RISE quadrants j and the wave i on performance. The random effect r0j was the difference between the average performance of learning objective j and the overall average, and rij was the difference between the growth rate of learning objective j and the average growth rate. OER = open educational resources; RISE = resource inspection, selection, and enhancement; ICC = intraclass correlation coefficient; AIC = Akaike information criterion; BIC = Bayesian information criterion; SD = standard deviation; SE = standard error. ✕ = interaction term. *p < .05. **p < .01. ***p < .001.

This study examined the role of OER use on students’ performance over time and how the RISE analysis classification was related to a growing trend in students’ performance. A longitudinal multilevel model (i.e., GCM) was used to investigate growth trends based on data gathered at the end of four consecutive semesters. The results suggest that there is an inverse relationship between OER quality based on the RISE analysis classification and OER use. Furthermore, certain OER classifications based on the RISE analysis resulted in increased student performance over time. We assume that this improvement is due to practitioners’ efforts to improve OER quality through continuous improvement cycles. However, although the models demonstrated improvements in student performance, other moderator variables may also account for them.

Resource use (i.e., accessing content, watching videos) is traditionally associated with higher performance (i.e., grades; Bonafini et al., 2017). In this study, OER use had a small but positive effect on students’ outcomes at the initial measurement time, but the effect of OER use became negative over time. Although this study showed a negative association between OER use and students’ performance, this result is not necessarily negative. The results may indicate that students who revisit OER need extra support and, potentially, that the OER alone are not enough to support the learner. We speculate that there is a need to shift the conversation from “mindless” engagement, in which more is better, to “meaningful engagement,” in which efficiency is measured by reduced use. Although previous learning analytics work has used page views as the metric for user engagement (Bonafini et al., 2017) and as a proxy for OER quality, we argue that future research would benefit from questioning the meaning of student engagement regarding OER. Foundational work in the field of distance learning opened the conversation about meaningful ways to approach students’ engagement and interaction by positing that “interaction is not enough” (Garrison & Cleveland-Innes, 2005, p. 133). Echoing their perspective, we now shift the focus to “views are not enough” in OER.

Unsurprisingly, quadrants 3 and 4 showed a negative effect on students’ performance. However, the interaction between quadrants 3 and 4 and time increased students’ performance over semesters, suggesting that OER classified in these two quadrants were efficiently improved. Rather than making causal claims, we speculate that the RISE analysis classification could be positively related to students’ performance when intentionally applied over time and when developers and instructional designers deploy their expertise for OER improvement. Assuming that flagged OER are intentionally improved, the RISE analysis helps practitioners and authors to focus their efforts on quality improvement activities. Furthermore, this is a cost-saving opportunity for educational institutions that often struggle with budgetary constraints, even more so with open education initiatives. Altogether, our findings in Model 3 suggest that RISE analysis classification is positively correlated with students’ performance when accounting for time, specifically with quadrants 3 and 4. Considering that only quadrants 3 and 4 showed statistically significant improvement, we suggest that a potential simplification of the RISE framework that includes only three categories could be worth examining. Future work on the RISE analysis could focus on more algorithm-driven classifications through unsupervised machine learning to validate or improve the current classification.

As educational interventions tend to account for minor variances in performance (Hattie et al., 2014), we are confident that the RISE analysis is an efficient and cost-effective intervention for OER implementation that directly influences students’ learning. Given that the RISE classification accounts for 65% of the growth of students’ performance, suggesting a moderate to large effect, we speculate that the RISE analysis could be generalized to other contexts and result in greater student gain. Our findings are consistent with the premise that RISE analysis improves OER quality and, eventually, students’ learning (Bodily et al., 2017). We believe the RISE analysis answers the call from researchers in the learning analytics community who urge researchers and developers to focus more on “learning” and less on the analytics.

We call on researchers to develop a user-friendly (i.e., click-and-go) application in which practitioners can run the RISE analysis in a cross-sectional and longitudinal fashion to evaluate whether the continuous improvement efforts are fruitful. Like the ATCE tool proposed by Avila et al. (2017), RISE aims to inform practitioners and educators about OER quality. Although Avila et al.’s (2017) work focused on using platforms for quality improvement of OER, those platforms are often targeted at specific learning management systems and include an array of variables. The RISE analysis is an efficient system that is already open access, can be adapted to a plethora of platforms, and requires minimal guidance. However, RISE analyses of OER are still subject to back-end data preprocessing before practitioners can use them. Even though RISE analysis is an open-source package, practitioners still need familiarity and data literacy skills to exploit its full potential. Therefore, future work for practitioners and researchers can focus on building integrated dashboards and applications to respond to such needs. This need is critical because RISE analysis is not meaningful if practitioners do not have buy-in to implement it in their everyday workflow.

Finally, in agreement with previous work that calls for temporal analysis of educational data (Castellanos-Reyes et al., 2023; Knight et al., 2017), this study calls for integrating granular temporal data that allows for continuous feedback on OER quality. In this way, researchers and practitioners could address improvement opportunities faster, meeting the learning analytics claim of informing educational decision-making in real time (Ifenthaler, 2015).

Given that the RISE framework includes students’ performance to make the learning objective classification, we addressed potential threats of multicollinearity by inspecting the correlations among variables and investigating model estimation changes with and without adding the RISE classification. Specifically, in Model 3 (OER use), the intercept and the growth rate output reflected the same results as Model 2 (RISE), showing that multicollinearity does not have a particularly adverse effect on these measures. This study focused on data from the humanities. Therefore, further exploration needs to be done in STEM disciplines. No interactions at level two were explored in this GCM model, given the limited number of independent variables and repeated measures. Future work could explore the role of different types of learning objectives following a taxonomy to observe the interaction between potential second-level predictors. Although taxonomies for learning objectives have been criticized (Owen Wilson, 2016), practitioners widely implement them.

More visits to OER do not increase performance per the learning objectives, but continuous improvement over time of flagged OER does. This study shows strong evidence that the iterative RISE analysis using learning analytics is related to improving students’ performance over time. Further studies might investigate whether the trend holds when accounting for the interaction between the type of OER learning objective and student performance. OER significantly benefit students who have been historically underserved in higher education (Colvard et al., 2018), such as racialized minorities and students in developing regions (Castellanos-Reyes et al., 2021; Castellanos-Reyes et al., 2022). We believe that a closer look at leveraging the continuous improvement of OER will ultimately benefit the populations systematically priced out of access to high-quality education and educational resources (Spurrier et al., 2021).

Aesoph, L. M. (2018). Self-publishing guide. BCcampus. https://opentextbc.ca/selfpublishguide/

Avila, C., Baldiris, S., Fabregat, R., & Graf, S. (2017). ATCE: An analytics tool to trace the creation and evaluation of inclusive and accessible open educational resources. In I. Molenaar, X. Ochoa, & S. Dawson (Chairs), LAK ’17: Proceedings of the Seventh International Learning Analytics & Knowledge Conference (pp. 183-187). ACM. https://dl.acm.org/doi/10.1145/3027385.3027413

Avila, C., Baldiris, S., Fabregat, R., & Graf, S. (2020). Evaluation of a learning analytics tool for supporting teachers in the creation and evaluation of accessible and quality open educational resources. British Journal of Educational Technology, 51(4), 1019-1038. https://doi.org/10.1111/bjet.12940

Barbier, S. (2021, October 18). The hidden costs of open educational resources. Inside Higher Ed. https://www.insidehighered.com/views/2021/10/19/arguments-favor-oer-should-go-beyond-cost-savings-opinion

Bodily, R., Nyland, R., & Wiley, D. (2017). The RISE Framework: Using learning analytics for the continuous improvement of open educational resources. The International Review of Research in Open and Distributed Learning, 18(2). https://doi.org/10.19173/irrodl.v18i2.2952

Bonafini, F. C., Chae, C., Park, E., & Jablokow, K. W. (2017). How much does student engagement with videos and forums in a MOOC affect their achievement? Online Learning, 21(4). https://doi.org/10.24059/olj.v21i4.1270

Boscardin, C. K., Sebok-Syer, S. S., & Pusic, M. V. (2022). Statistical points and pitfalls: Growth modeling. Perspectives on Medical Education, 11(2), 104-107. https://doi.org/10.1007/S40037-022-00703-1

Castellanos-Reyes, D., Maeda, Y., & Richardson, J. C. (2021). The relationship between social network sites and perceived learning and satisfaction: A systematic review and meta-analysis. In M. Griffin & C. Zinskie (Eds.), Social media: Influences on education (pp. 231-263). Information Age Publishing. https://www.researchgate.net/publication/354935042_The_relationship_between_social_network_sites_and_perceived_learning_and_satisfaction_A_systematic_review_and_meta-analysis

Castellanos-Reyes, D., Romero-Hall, E., Vasconcelos, L., & García, B. (2022). Mobile learning in emergency situations: Four design cases from Latin America. In V. Dennen, C. Dickson-Deane, X. Ge, D. Ifenthaler, S. Murthy, & J. Richardson (Eds.), Global perspective on educational innovations for emergency solutions (1st ed., pp. 89-98). Springer Cham. https://doi.org/10.1007/978-3-030-99634-5

Castellanos-Reyes, D., Koehler, A. A., & Richardson, J. C. (2023). The i-SUN process to use social learning analytics: A conceptual framework to research online learning interaction supported by social presence. Frontiers in Communication, 8, 1212324. https://doi.org/10.3389/fcomm.2023.1212324

Caswell, T., Henson, S., Jensen, M., & Wiley, D. (2008). Open content and open educational resources: Enabling universal education. The International Review of Research in Open and Distributed Learning, 9(1). https://doi.org/10.19173/irrodl.v9i1.469

Colvard, N. B., Watson, C. E., & Park, H. (2018). The impact of open educational resources on various student success metrics. International Journal of Teaching and Learning in Higher Education, 30(2), 262-276. https://www.isetl.org/ijtlhe/pdf/IJTLHE3386.pdf

Curran, P. J., Obeidat, K., & Losardo, D. (2010). Twelve frequently asked questions about growth curve modeling. Journal of Cognition and Development, 11(2), 121-136. https://doi.org/10.1080/15248371003699969

Garrison, D. R., & Cleveland-Innes, M. (2005). Facilitating cognitive presence in online learning: Interaction is not enough. American Journal of Distance Education, 19(3), 133-148. https://doi.org/10.1207/s15389286ajde1903_2

Giannakos, M. N., Chorianopoulos, K., & Chrisochoides, N. (2015). Making sense of video analytics: Lessons learned from clickstream interactions, attitudes, and learning outcome in a video-assisted course. The International Review of Research in Open and Distributed Learning, 16(1). https://doi.org/10.19173/irrodl.v16i1.1976

Griffiths, R., Mislevy, J., Wang, S., Shear, L., Ball, A., & Desrochers, D. (2020). OER at scale: The academic and economic outcomes of Achieving the Dream’s OER Degree Initiative. SRI International. https://www.sri.com/publication/education-learning-pubs/oer-at-scale-the-academic-and-economic-outcomes-of-achieving-the-dreams-oer-degree-initiative/

Grimaldi, P. J., Basu Mallick, D., Waters, A. E., & Baraniuk, R. G. (2019). Do open educational resources improve student learning? Implications of the access hypothesis. PLOS ONE, 14(3), Article e0212508. https://doi.org/10.1371/journal.pone.0212508

Hattie, J., Rogers, H. J., & Swaminathan, H. (2014). The role of meta-analysis in educational research. In A. D. Reid, E. P. Hart, & M. A. Peters (Eds.), A companion to research in education (pp. 197-208). https://doi.org/10.1007/978-94-007-6809-3

Ifenthaler, D. (2015). Learning analytics. In J. M. Spector (Ed.), The SAGE encyclopedia of educational technology (Vol. 2, pp. 447-451). SAGE.

Knight, S., Friend Wise, A., & Chen, B. (2017). Time for change: Why learning analytics needs temporal analysis. Journal of Learning Analytics, 4(3), 7-17. https://doi.org/10.18608/jla.2017.43.2

Martin, M. T., Belikov, O. M., Hilton, J., III, Wiley, D., & Fischer, L. (2017). Analysis of student and faculty perceptions of textbook costs in higher education. Open Praxis, 9(1), 79-91. https://doi.org/10.5944/openpraxis.9.1.432

Müller, F. J. (2021). Say no to reinventing the wheel: How other countries can build on the Norwegian model of state-financed OER to create more inclusive upper secondary schools. Open Praxis, 13(2), 213-227. https://doi.org/10.5944/openpraxis.13.2.125

Owen Wilson, L. (2016). Anderson and Krathwohl Bloom’s Taxonomy revised. Quincy College. https://quincycollege.edu/wp-content/uploads/Anderson-and-Krathwohl_Revised-Blooms-Taxonomy.pdf

Pardo, A., Ellis, R. A., & Calvo, R. A. (2015). Combining observational and experiential data to inform the redesign of learning activities. In J. Baron, G. Lynch, & N. Maziarz (Chairs), LAK ’15: Proceedings of the Fifth International Conference on Learning Analytics And Knowledge (pp. 305-309). ACM. https://doi.org/10.1145/2723576.2723625

Raudenbush, S. W., & Bryk, A. S. (2002). Hierarchical linear models: Applications and data analysis methods. Sage.

Raudenbush, S. W., & Liu, X.-F. (2001). Effects of study duration, frequency of observation, and sample size on power in studies of group differences in polynomial change. Psychological Methods, 6(4), 387-401. https://doi.org/10.1037/1082-989X.6.4.387

Richardson, J. C., Castellanos Reyes, D., Janakiraman, S., & Duha, M. S. U. (2023). The process of developing a digital repository for online teaching using design-based research. TechTrends: For Leaders in Education & Training, 67(2), 217-230. https://doi.org/10.1007/s11528-022-00795-w

Romero-Ariza, M., Abril Gallego, A. M., Quesada Armenteros, A., & Rodríguez Ortega, P. G. (2023). OER interoperability educational design: Enabling research-informed improvement of public repositories. Frontiers in Education, 8, Article 1082577. https://doi.org/10.3389/feduc.2023.1082577

Spurrier, A., Hodges, S., & Schiess, J. O. (2021). Priced out of public schools: District lines, housing access, and inequitable educational options. Bellwether Education Partners. https://bellwether.org/publications/priced-out/

Tang, H. (2021). Implementing open educational resources in digital education. Educational Technology Research and Development, 69(1), 389-392. https://doi.org/10.1007/s11423-020-09879-x

Tlili, A., Garzón, J., Salha, S., Huang, R., Xu, L., Burgos, D., Denden, M., Farrell, O., Farrow, R., Bozkurt, A., Amiel, T., McGreal, R., López-Serrano, A., & Wiley, D. (2023). Are open educational resources (OER) and practices (OEP) effective in improving learning achievement? A meta-analysis and research synthesis. International Journal of Educational Technology in Higher Education, 20(1), Article 54. https://doi.org/10.1186/s41239-023-00424-3

Van Allen, J., & Katz, S. (2020). Teaching with OER during pandemics and beyond. Journal for Multicultural Education, 14(3-4), 209-218. https://doi.org/10.1108/JME-04-2020-0027

Wiley, D. (2007). On the sustainability of open educational resource initiatives in higher education. Center for Educational Research and Innovation, The Organization for Economic Co-operation and Development.

Wiley, D. (2012, October 16). OER quality standards. Improving Learning. https://opencontent.org/blog/archives/2568

Wiley, D. (2018). RISE: An R package for RISE analysis. Journal of Open Source Software, 3(28), Article 846. https://doi.org/10.21105/joss.00846

Winitzky-Stephens, J. R., & Pickavance, J. (2017). Open educational resources and student course outcomes: A multilevel analysis. The International Review of Research in Open and Distributed Learning, 18(4). https://doi.org/10.19173/irrodl.v18i4.3118

The Impact of OER's Continuous Improvement Cycles on Students' Performance: A Longitudinal Analysis of the RISE Framework by Daniela Castellanos-Reyes, Sandra Liliana Camargo Salamanca, David Wiley is licensed under a Creative Commons Attribution 4.0 International License.