Volume 25, Number 3

Hanwei Wu1, Yunsong Wang2, and Yongliang Wang3,*

1School of Foreign Studies, Hunan Normal University, Changsha, China; 2School of Foreign Languages and Cultures, Nanjing Normal University, Nanjing, China; 3School of Foreign Studies, North China University of Water Resources and Electric Power, Zhengzhou, China; *corresponding author

Artificial intelligence (AI) offers new possibilities for English as a foreign language (EFL) learners to enhance their learning outcomes, provided that they have access to AI applications. However, little is written about the factors that influence their intention to use AI in distributed EFL learning contexts. This mixed-methods study, based on the technology acceptance model (TAM), examined the determinants of behavioral intention to use AI among 464 Chinese EFL college learners. As to quantitative data, a structural equation modelling (SEM) approach using IBM SPSS Amos (Version 24) produced some important findings. First, it was revealed that perceived ease of use significantly and positively predicts perceived usefulness and attitude toward AI. Second, attitude toward AI significantly and positively predicts behavioral intention to use AI. However, contrary to the TAM assumptions, perceived usefulness does not significantly predict either attitude toward AI or behavioral intention to use AI. Third, mediation analyses suggest that perceived ease of use has a significant and positive impact on students’ behavioral intention to use AI through their attitude toward AI, rather than through perceived usefulness. As to qualitative data, semi-structured interviews with 15 learners, analyzed by the software MAXQDA 2022, provide a nuanced understanding of the statistical patterns. This study also discusses the theoretical and pedagogical implications and suggests directions for future research.

Keywords: artificial intelligence, AI, EFL college learner, behavioral intention, distributed learning

AI uses computational algorithms to perform cognitive tasks or solve complex problems that normally require human intelligence (Chen et al., 2020). In the last 30 years, AI has become a powerful tool to create new paradigms in many domains, including EFL education (Jiang, 2022; Rezai, 2023). AI techniques, such as natural language processing and machine learning, have enabled various applications for language learning, such as ChatGPT (Kohnke et al., 2023a), Pigai (Yang et al., 2023), Duolingo (Shortt et al., 2023), and so forth. These applications provide EFL learners with adaptive materials, instant feedback, and automatic diagnosis (Chassignol et al., 2018; Zhang & Aslan, 2021). Moreover, they allow EFL learners to access academic resources at their convenience, beyond the limits of traditional classes. These benefits have made AI-assisted EFL learning popular in the distributed learning context where flexibility, accessibility, and cost-effectiveness are highlighted (Janbi et al., 2023; Kuddus, 2022; Namaziandost, Razmi, et al., 2021).

Despite the advancement of AI computing technologies, effective learning is not guaranteed by the mere use of AI tools (Castañeda & Selwyn, 2018; Selwyn, 2016). For AI-assisted language learning, EFL learners’ willingness to adopt AI applications is a key factor (Kelly et al., 2023). Nevertheless, the existing literature on this topic tends to overlook EFL learners and concentrate on either the outcomes of a single AI system (Chen & Pan, 2022; Dizon, 2020; Muftah et al., 2023) or the attitudes of EFL teachers (Kohnke et al., 2023b; Ulla et al., 2023). The TAM (Davis, 1989) is a widely used framework that investigates how people’s behavior is affected by their perceptions of technology, encompassing perceived usefulness, perceived ease of use, and attitude toward technology. Because AI is an emerging technology whose adoption depends on such perceptions, the TAM is well suited to studying it. The TAM can therefore provide insights into the factors that shape EFL learners’ intention to use AI in the distributed learning context.

AI applications have the potential to facilitate EFL learning for college students in China, who have more access to electronic devices than K–12 students (Gao et al., 2014). Drawing on the TAM, this mixed-methods study investigated the factors influencing college students’ intention to adopt AI applications for EFL learning. Our findings have implications for various stakeholders. Educators can leverage these findings to enhance college students’ EFL achievement by promoting the use of AI applications, particularly in the distributed learning context where AI applications can provide flexible learning opportunities. AI application developers can use these findings to improve their design to cater to EFL learners and achieve business success.

AI has implications for education, as it can enhance outcomes, personalize instruction, and streamline activities (Bearman et al., 2023; Namaziandost, Hashemifardnia, et al., 2021; Ouyang & Jiao, 2021; Zhang & Aslan, 2021). In EFL contexts, AI applications that employ methods such as machine learning and natural language processing are prevalent. The majority of research has evaluated the effectiveness of AI in advancing EFL learners’ writing and speaking skills (Chen & Pan, 2022; Klimova et al., 2023). Researchers have also explored the perceptions of EFL teachers and students on AI and the determinants of their AI adoption (An et al., 2022; Liu & Wang, 2024; Wang et al., 2023).

Based on the role of learners, the extant literature on the effectiveness of AI practices in EFL contexts can be roughly divided into two categories: learner-as-beneficiary (LB) and learner-as-partner (LP). LB research investigates the impact of automated essay scoring (AES) systems on EFL learners’ writing skills (Chen & Pan, 2022; Muftah et al., 2023). These systems, such as Aim Writing and WRITER, are rooted in behaviorist principles and provide feedback on spelling, grammar, and sentence structure. The conclusions of previous studies have been inconsistent. For example, Chen and Pan (2022) recommended a hybrid model of AES and instructor feedback, as they found that Aim Writing’s feedback, although useful, was insufficient for students. However, in Muftah et al. (2023), the effectiveness of AI-assisted writing was confirmed. Their study indicated that WRITER users improved in all writing skills except organization, compared to traditional method users. This inconsistency may imply that different AES systems have different strengths and weaknesses.

LP research, which follows a constructivist approach and engages EFL learners in dialogue with AI, focuses on EFL learners’ speaking skills (Ayedoun et al., 2019; Dizon, 2020). This type of research employs technologies such as intelligent personal assistants and dialogue management systems. Previous studies have shown that AI systems can enhance learners’ interactional competence. For example, Ayedoun et al. (2019) developed a model of an embodied conversational agent that employs communication strategies and affective backchannels to enhance EFL learners’ willingness to communicate. The results showed that the embodied conversational agent with both strategies and backchannels increased communication more than the agent with either one alone. Likewise, Dizon (2020) compared the speaking skills of Alexa users and non-users and found that the former group improved more than the latter. A possible explanation for this effectiveness is that AI practices for EFL speaking can motivate learners by providing natural dialogue and alleviate their anxiety by creating a safe environment. Unlike real humans, AI does not judge learners’ oral mistakes.

Most studies have reported that EFL teachers have a positive and consistent view of AI and its benefits for their students’ learning. For example, Kohnke et al. (2023b) conducted semi-structured interviews with 12 EFL teachers and found that they were familiar with and confident in using AI-driven teaching tools such as Siri and Alexa. Similarly, Ulla et al. (2023) interviewed 17 EFL teachers and explored their perspectives on ChatGPT in their teaching practices. The interviewees expressed positive attitudes toward ChatGPT and recognized its various applications, such as lesson preparation and language activity creation. Other studies have explored the psychological factors that affect EFL teachers’ technology adoption. Zhi et al. (2023) surveyed 214 EFL teachers and found that both emotional intelligence (EI) and self-efficacy were significant and positive predictors of technology adoption, with EI being a stronger predictor.

Some studies have examined the factors that influence EFL teachers’ behavioral intention to use AI (An et al., 2022; Liu & Wang, 2024). An et al. (2022) investigated 470 Chinese EFL teachers and found that performance expectancy, social influence, and AI language technological knowledge had direct effects on behavioral intention, while effort expectancy, facilitating conditions, and AI technological pedagogical knowledge had indirect effects. However, only a few studies have investigated the influencing factors of EFL learners’ intention to use AI (An et al., 2023; Liu & Ma, 2023). An et al. (2023) discovered that the intention to use AI among 452 Chinese students in secondary schools was determined by three common factors: their performance expectancy, their interest in AI culture, and their goal attainment expectancy. Despite the significance of their research, Chinese pre-college students may benefit less from AI in distributed learning contexts, as they are banned from using electronic devices at school. Chinese college students, on the other hand, do not face such restrictions, so they can use AI tools for EFL learning more easily.

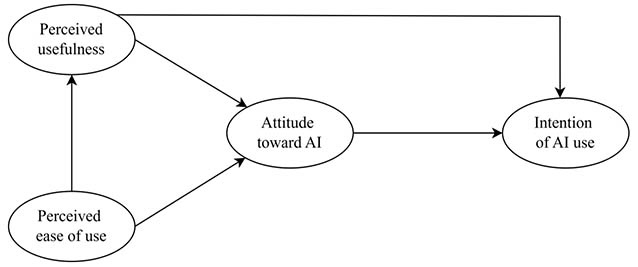

The TAM is a framework that explains users’ technology adoption (Davis, 1989; Venkatesh & Bala, 2008). The TAM suggests that users’ intention to use technology depends on three factors: perceived usefulness, perceived ease of use, and attitude toward use (Davis, 1989). Intention is the main outcome in the TAM, as it predicts actual usage (Davis, 1989; Teo et al., 2017). Perceived usefulness is the degree to which users believe a technology will improve their performance, while perceived ease of use is the degree to which users believe a technology will require little effort. Both perceived usefulness and perceived ease of use affect users’ attitude toward use, which is how users feel about using the technology. Attitude toward use and perceived usefulness also influence users’ intention (Huang & Liaw, 2005). We used the TAM as the basis for our study to explore how EFL learners perceive and adopt AI for language learning in distributed learning contexts. See Figure 1.

Figure 1

The TAM-Based Model for EFL Learners’ Behavioral Intention to Use AI

Previous studies on AI in education have examined its impact on learning outcomes, teacher and student perceptions, and implementation and evaluation challenges. However, these studies mainly focused on EFL teaching contexts and ignored the factors affecting students’ intention to use AI for EFL learning. Moreover, most previous studies targeted EFL pre-college learners with limited access to AI applications, leaving a gap in the knowledge of EFL college learners’ views and behaviors. This study used the TAM as a theoretical framework to explore how EFL college learners’ intention to use AI for language learning is shaped by their perceived ease of use, perceived usefulness, and attitude toward AI. Based on the TAM, we tested the following research hypotheses among EFL college learners in distributed learning contexts:

H1: Perceived ease of use positively predicts perceived usefulness and attitude toward AI.

H2: Perceived usefulness positively predicts attitude toward AI and behavioral intention to use AI.

H3: Attitude toward AI positively predicts behavioral intention to use AI.

H4: Perceived usefulness positively predicts behavioral intention via attitude toward AI.

H5: Perceived ease of use positively predicts behavioral intention to use AI via attitude toward AI.

H6: Perceived ease of use positively predicts behavioral intention to use AI via perceived usefulness and attitude toward AI.

H7: Perceived ease of use positively predicts behavioral intention to use AI via perceived usefulness.

This study adopted an explanatory sequential design (Creswell, 2014) that combined quantitative and qualitative methods to investigate college students’ use of AI for EFL learning. College students were chosen as the target population because they do not face the same restrictions as pre-college students, who are prohibited from using electronic devices at school, as discussed earlier. Five colleges in China were selected for the sampling, and 557 students from various majors participated in the survey. After removing 93 duplicate responses, the final sample size was 464, comprising 248 freshmen, 91 sophomores, 116 juniors, and 9 seniors. Most participants were female (74.14%, n = 344) and aged between 18 and 23 (M = 19.18, SD = 1.29). The qualitative data were collected through interviews with 15 students from three colleges (H, N, and J). Table 1 shows the demographic data of the interview participants. A translation and back-translation procedure was applied to ensure the validity of the self-reported scales related to AI constructs (Klotz et al., 2023). The scales were translated from English to Chinese and then back to English by three bilingual researchers and two experts. The participants were given both versions of the scales.

Table 1

Demographic Information of Interviewees

| Student | University | Gender | Age | Grade |
|---|---|---|---|---|
| S1 | H | Female | 21 | junior |
| S2 | N | Male | 22 | senior |
| S3 | J | Female | 21 | sophomore |
| S4 | H | Male | 20 | junior |
| S5 | H | Female | 19 | sophomore |
| S6 | J | Female | 18 | freshman |
| S7 | H | Female | 21 | senior |
| S8 | H | Female | 22 | junior |
| S9 | N | Female | 21 | sophomore |
| S10 | N | Female | 19 | freshman |
| S11 | N | Male | 22 | junior |
| S12 | J | Female | 22 | junior |
| S13 | H | Male | 22 | junior |
| S14 | N | Female | 21 | sophomore |
| S15 | J | Female | 19 | freshman |

The scales of perceived ease of use and perceived usefulness from Venkatesh et al. (2003) were used to measure students’ perceptions of the AI system. The scales have five items each, rated on a 5-point Likert scale. The items were adapted to the EFL context. For example, one item for perceived ease of use is “Learning to operate the AI system to assist my English learning would be easy for me.” One item for perceived usefulness is “Using the AI system would enhance my effectiveness in English learning.” The scales had Cronbach’s α coefficients of 0.748 and 0.935 in this study.

The General Attitudes Towards Artificial Intelligence Scale by Schepman and Rodway (2022) was employed to assess students’ attitude toward AI. The scale has 12 items, rated on a 5-point Likert scale. Higher scores indicate a more positive attitude. Some items are reverse-coded to reduce bias. The items, modified for the EFL context, included statements such as “I am interested in using AI systems in my English learning.” The scale had a high Cronbach’s α coefficient of 0.857 in this study.

The scale by Ayanwale et al. (2022) was adopted to measure students’ intention to use AI for EFL learning. The scale has five items, modified for the EFL context, such as “I intend to use AI to assist my English learning.” The items are rated on a 6-point Likert scale. A higher score means a stronger intention. The scale had a high Cronbach’s α coefficient of 0.893 in this study.
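The reliability coefficients reported for the scales above can, in principle, be reproduced from raw item responses. The following is a minimal sketch rather than the procedure used in the study: the function names `reverse_code` and `cronbach_alpha` and the toy responses are hypothetical, and reverse-coding is assumed to follow the usual rule of mirroring each score around the midpoint of the scale.

```python
from statistics import variance


def reverse_code(score, scale_max=5, scale_min=1):
    """Flip a negatively worded item so that higher always means more positive."""
    return scale_max + scale_min - score


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])  # number of items in the scale
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item (column) across respondents.
    item_vars = [variance(col) for col in zip(*responses)]
    # Variance of the summed scale score across respondents.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point responses from five students to a three-item scale.
toy = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2], [4, 4, 5]]
alpha = cronbach_alpha(toy)
```

Reverse-coded items would be passed through `reverse_code` before the responses are handed to `cronbach_alpha`, so that all items point in the same direction.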

Semi-structured interviews were conducted with 15 students who had participated in our questionnaire survey. The interviews lasted about 20 minutes each. There were two main objectives: to further verify the roles of the variables from the quantitative stage in determining intention to use AI, and to understand the reasons for the quantitative results. Therefore, we chose an inductive semi-structured interview method for these exploratory purposes. The following interview questions were carefully designed based on a pilot test with two students:

With the help of EFL teachers, 464 Chinese college students from different majors were recruited. English is a mandatory course at all colleges in China. A survey tool (http://www.wjx.cn) was used to collect quantitative data online. The participants were asked to give their demographic information and complete four scales. For the qualitative data, 15 randomly selected students were interviewed. The AI tools and distributed learning were explained to them before the interview. The questionnaires and interviews were in Chinese so that participants could understand the questions and express themselves easily. A consent form was signed by participants before the study. The study was approved by the Ethics Committee of Hunan Normal University.

The analysis of quantitative data was done using IBM SPSS Amos (Version 24). The measurement model was verified first, following Kline’s (2016) two-stage SEM approach, and the reliability and validity of the constructs were assessed. Several indices were used to evaluate the goodness-of-fit of the models, including chi-square divided by degrees of freedom, comparative fit index, Tucker-Lewis index, and the root-mean-square error of approximation (Hu & Bentler, 1999). The data were checked for skewness, kurtosis, and descriptive statistics to ensure normality. The “maximum likelihood” method was used to test the structural model. The indirect effects were tested using bootstrapping with 5,000 iterations (Shrout & Bolger, 2002). The indirect effects were considered significant if 0 was not in the 95% confidence interval (Hayes, 2013).
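The bootstrap test of indirect effects described above can be illustrated outside Amos. The sketch below is a simplification under stated assumptions: it applies ordinary least squares to observed scores with a single mediator rather than the latent-variable SEM used in the study, the data are synthetic, and the names `indirect_effect` and `bootstrap_ci` are hypothetical. It shows only the percentile logic: resample respondents with replacement, re-estimate the product a × b each time, and check whether 0 falls inside the middle 95% of the estimates.

```python
import random


def _cross(u, v):
    """Sum of centered cross-products of two equally long sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))


def indirect_effect(x, m, y):
    """a*b, where a is the slope of M on X and b the slope of Y on M given X."""
    sxx, smm = _cross(x, x), _cross(m, m)
    sxm, sxy, smy = _cross(x, m), _cross(x, y), _cross(m, y)
    a = sxm / sxx
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)
    return a * b


def bootstrap_ci(x, m, y, n_boot=5000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents
        try:
            estimates.append(indirect_effect([x[i] for i in idx],
                                             [m[i] for i in idx],
                                             [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # skip a degenerate resample with no variation
    estimates.sort()
    lower = estimates[int((1 - level) / 2 * len(estimates))]
    upper = estimates[int((1 + level) / 2 * len(estimates)) - 1]
    return lower, upper


# Synthetic data (an assumption for illustration): true indirect effect 0.8 * 0.5.
_rng = random.Random(1)
x = [_rng.gauss(0, 1) for _ in range(150)]
m = [0.8 * xi + _rng.gauss(0, 0.5) for xi in x]
y = [0.5 * mi + _rng.gauss(0, 0.5) for mi in m]
ci = bootstrap_ci(x, m, y, n_boot=1000)
significant = not (ci[0] <= 0 <= ci[1])
```

The default of 5,000 resamples mirrors the number of iterations reported above; the example call uses fewer only to keep the run short.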

The interviews were transcribed and translated by two linguistics professors, resulting in 12,303 Chinese characters and 9,842 English words. The qualitative data were coded and categorized using MAXQDA 2022, following the thematic analysis of Braun and Clarke (2006) with an inductive approach. To check interrater reliability, the first and corresponding authors coded the data separately and then calculated their agreement. For example, “portability” was the code for “Dictionaries are too bulky to carry around, so I prefer to use apps to learn vocabulary.” The authors agreed on 115 of 123 codes (93.5%) and resolved their disagreement on the remaining codes through discussion.

A confirmatory factor analysis was performed with four latent variables: perceived ease of use, perceived usefulness, attitude toward AI, and intention to use AI. The factor loadings of the items were checked using unstandardized and standardized estimates, and items with non-significant or low loadings were removed. Items ATA8 and ATA9 were deleted for p-values > 0.05, and items ATA1, ATA3, ATA6, ATA10, and PU6 were deleted for standardized loadings < 0.45 (Kline, 2016). The modification indices were then inspected, and the changes that matched the theory were made.

The measurement model fit the data acceptably, as shown by the following indices: χ2/df = 3.424 (3–5: acceptable, < 3: excellent), CFI = 0.920 (> 0.9: acceptable, > 0.95: excellent), TLI = 0.908 (> 0.9: acceptable, > 0.95: excellent), RMSEA = 0.072 (< 0.08: acceptable), and SRMR = 0.062 (< 0.08: acceptable) (Hu & Bentler, 1999).

The discriminant validity and composite reliability (CR) of each construct are presented in Table 2. All constructs met the criteria of CR > 0.7 and AVE > 0.5, and had maximum shared variance (MSV) values lower than their AVE, demonstrating good reliability and convergent validity. The Fornell-Larcker criterion indicated that all factors were interrelated, with strong correlations among perceived usefulness, perceived ease of use, and attitude toward AI. The discriminant validity was verified by the fact that the square root of AVE for each construct (the bold values in the table) exceeded its correlations with other factors (Fornell & Larcker, 1981).

Table 2

Composite Reliability and Convergent and Discriminant Validity of Factors Determining EFL Students’ Intention to Use AI

| Factor | CR | AVE | MSV | Fornell-Larcker criterion: 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|---|---|
| 1. PEU | 0.766 | 0.790 | 0.712 | **0.899** | | | |
| 2. PU | 0.936 | 0.744 | 0.712 | 0.844* | **0.863** | | |
| 3. ATA | 0.862 | 0.690 | 0.675 | 0.822* | 0.708* | **0.831** | |
| 4. IUA | 0.896 | 0.633 | 0.377 | 0.560* | 0.479* | 0.613* | **0.796** |

Note. PEU = perceived ease of use; PU = perceived usefulness; ATA = attitude toward AI; IUA = behavioral intention to use AI; CR = composite reliability; MSV = maximum shared variance. Figures in bold show the square root of AVE for each construct.

* p < .001.
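The two discriminant validity checks described for Table 2 reduce to simple comparisons. The sketch below, offered as an illustration rather than the authors' procedure, takes the AVE values and inter-construct correlations from Table 2, recomputes the maximum shared variance as the largest squared correlation for each construct, and tests whether the square root of AVE exceeds every correlation involving that construct. The names `ave`, `corr`, `max_shared_variance`, and `fornell_larcker_ok` are hypothetical.

```python
import math

# AVE per construct and pairwise correlations, as reported in Table 2.
ave = {"PEU": 0.790, "PU": 0.744, "ATA": 0.690, "IUA": 0.633}
corr = {
    ("PEU", "PU"): 0.844,
    ("PEU", "ATA"): 0.822,
    ("PU", "ATA"): 0.708,
    ("PEU", "IUA"): 0.560,
    ("PU", "IUA"): 0.479,
    ("ATA", "IUA"): 0.613,
}


def max_shared_variance(construct):
    """Largest squared correlation between this construct and any other."""
    return max(r ** 2 for pair, r in corr.items() if construct in pair)


def fornell_larcker_ok(construct):
    """True if sqrt(AVE) exceeds every correlation involving the construct."""
    root = math.sqrt(ave[construct])
    return all(root > abs(r) for pair, r in corr.items() if construct in pair)


results = {c: (round(max_shared_variance(c), 3), fornell_larcker_ok(c)) for c in ave}
```

Because the correlations in the table are rounded to three decimals, the recomputed MSV values can differ from the reported ones in the last digit.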

The reliability and validity were verified before the data in the measurement model were analyzed. The descriptive statistics are shown in Table 3. The SEM assumptions were met by the data, as the absolute values of skewness and kurtosis of all items were < 2 (Noar, 2003).
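The normality screening described above can be sketched as follows. This is an illustration under assumptions, not the software routine used in the study: it uses the conventional bias-adjusted sample formulas for skewness and excess kurtosis and their standard errors, which depend only on the sample size, and the function names are hypothetical. With N = 464, the two standard errors round to 0.113 and 0.226.

```python
import math


def se_skewness(n):
    """Standard error of sample skewness for sample size n."""
    return math.sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))


def se_kurtosis(n):
    """Standard error of sample excess kurtosis for sample size n."""
    return 2 * se_skewness(n) * math.sqrt((n * n - 1) / ((n - 3) * (n + 5)))


def sample_skewness(xs):
    """Bias-adjusted sample skewness."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    return (m3 / m2 ** 1.5) * math.sqrt(n * (n - 1)) / (n - 2)


def sample_excess_kurtosis(xs):
    """Bias-adjusted sample excess kurtosis."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    g2 = m4 / m2 ** 2 - 3
    return (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)


def roughly_normal(xs, bound=2.0):
    """Apply the |skewness| < 2 and |kurtosis| < 2 screening rule to one item."""
    return abs(sample_skewness(xs)) < bound and abs(sample_excess_kurtosis(xs)) < bound
```

Calling `roughly_normal` on the responses to each item applies the cut-off of 2 cited above.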

Table 3

Descriptive Statistics of all Items of the Constructs

| Construct | Min | Max | M | SD | Skewness | SE (skewness) | Kurtosis | SE (kurtosis) |
|---|---|---|---|---|---|---|---|---|
| PEU | 1.000 | 5.000 | 2.966 to 3.776 | 0.813 to 0.942 | -0.608 to -0.090 | 0.113 | -0.291 to 0.882 | 0.226 |
| PU | 1.000 | 5.000 | 3.653 to 3.683 | 0.773 to 0.812 | -0.483 to -0.336 | 0.113 | 0.507 to 0.939 | 0.226 |
| ATA | 1.000 | 5.000 | 3.543 to 3.866 | 0.714 to 0.801 | -0.512 to 0.175 | 0.113 | -0.136 to 0.741 | 0.226 |
| IUA | 1.000 | 5.000 | 4.004 to 4.362 | 0.851 to 0.961 | -0.535 to -0.323 | 0.113 | 0.293 to 1.559 | 0.226 |

Note. N = 464. PEU = perceived ease of use; PU = perceived usefulness; ATA = attitude toward AI; IUA = behavioral intention to use AI.

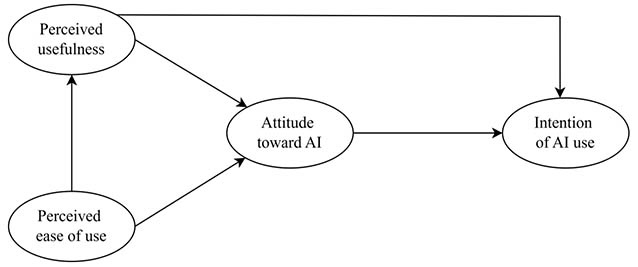

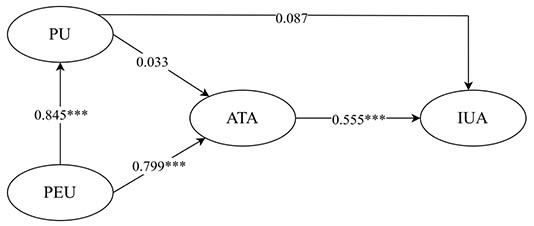

The underlying mechanisms among the four constructs of our study were examined using regression analysis with SEM to address our hypotheses. The structural model of this analysis is depicted in Figure 2.

Figure 2

The Structural Model With Standardized Estimates

Note. PEU = perceived ease of use; PU = perceived usefulness; ATA = attitude toward AI; IUA = behavioral intention to use AI.

*** p < 0.001

As shown in Figure 2, H1 was supported by the significant positive effects of perceived ease of use on both perceived usefulness (β = 0.845, p < .001) and attitude toward AI (β = 0.799, p < .001). Moreover, H3 was confirmed by the significant positive effect of attitude toward AI on behavioral intention to use AI (β = 0.555, p < .001). However, perceived usefulness did not significantly affect either behavioral intention to use AI (β = 0.087, p > .05) or attitude toward AI (β = 0.033, p > .05), so H2 was rejected.

The indirect path analysis results are shown in Table 4. H5 was supported, as the link between perceived ease of use and behavioral intention to use AI was mediated by attitude toward AI (β = 0.444, 95% CI [0.297, 0.656]). However, the other three mediation paths were not supported by the data, as 0 was in their 95% CI. Thus, H4, H6, and H7 were rejected, which contradicts the TAM assumptions. This discrepancy led to the subsequent interviews for deeper understanding.

Table 4

Bootstrapping Analyses of Results of Indirect Effects

| Indirect path | B | β | SE | 95% CI | p |
|---|---|---|---|---|---|
| 1. PU → ATA → IUA | 0.018 | 0.018 | 0.097 | [-0.172, 0.145] | .882 |
| 2. PEU → ATA → IUA | 0.126 | 0.074 | 0.071 | [-0.044, 0.190] | .303 |
| 3. PEU → ATA → IUA | 0.758 | 0.444 | 0.107 | [0.297, 0.656] | .001 |
| 4. PEU → PU → ATA → IUA | 0.026 | 0.015 | 0.083 | [-0.150, 0.118] | .872 |
| 5. PEU → PU → IUA | 0.129 | 0.074 | 0.070 | [-0.042, 0.193] | .255 |

Note. PU = perceived usefulness; ATA = attitude toward AI; IUA = behavioral intention to use AI; PEU = perceived ease of use; B = unstandardized path coefficient; CI = confidence interval.
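In a standardized recursive path model, each indirect effect equals the product of the standardized coefficients along its path, so the β values for the four hypothesized mediation paths can be cross-checked against the direct paths reported for Figure 2. The sketch below does this arithmetic; the dictionary `paths` and the helper `indirect` are hypothetical names, and differences in the third decimal are expected because the inputs are rounded.

```python
# Standardized direct paths as reported for the structural model (Figure 2).
paths = {
    ("PEU", "PU"): 0.845,
    ("PEU", "ATA"): 0.799,
    ("PU", "ATA"): 0.033,
    ("PU", "IUA"): 0.087,
    ("ATA", "IUA"): 0.555,
}


def indirect(*chain):
    """Multiply the direct coefficients along a chain of constructs."""
    effect = 1.0
    for src, dst in zip(chain, chain[1:]):
        effect *= paths[(src, dst)]
    return effect


h4 = indirect("PU", "ATA", "IUA")          # about 0.018
h5 = indirect("PEU", "ATA", "IUA")         # about 0.443
h6 = indirect("PEU", "PU", "ATA", "IUA")   # about 0.015
h7 = indirect("PEU", "PU", "IUA")          # about 0.074
```

Only the path through attitude toward AI (H5) yields a product of substantial size, which is consistent with it being the only indirect effect whose confidence interval excludes 0.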

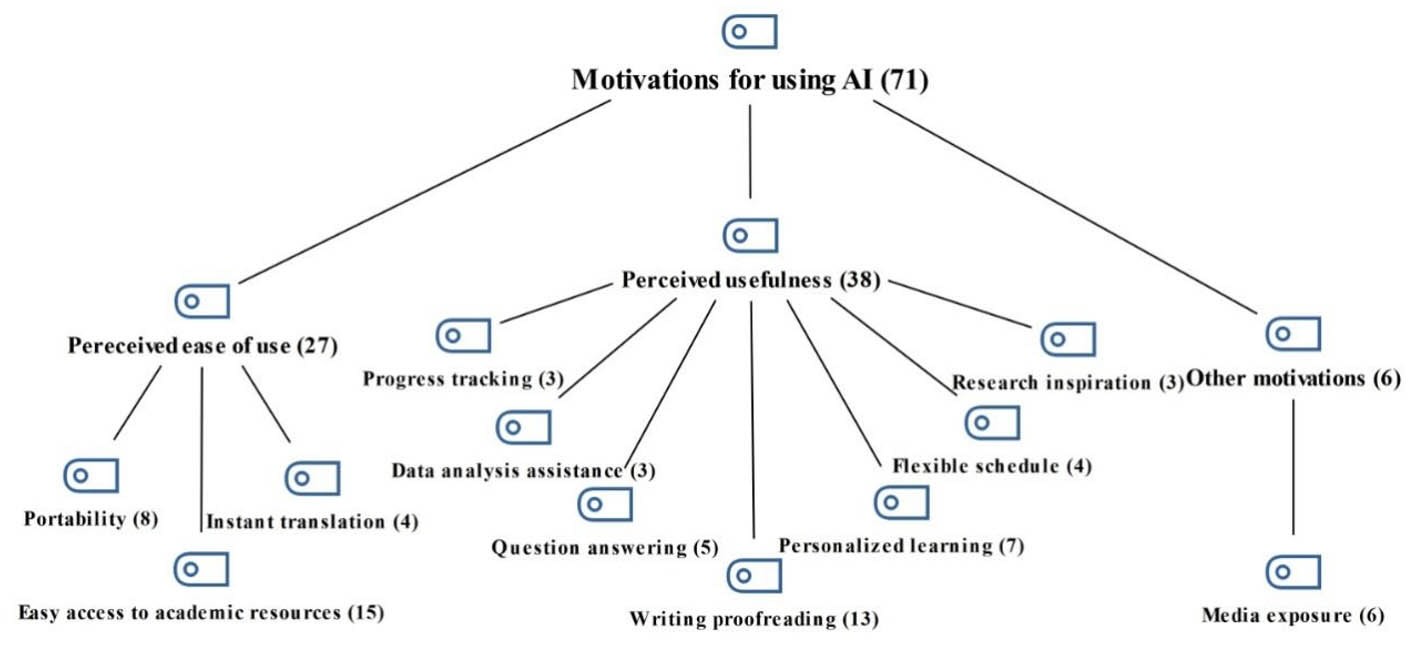

The interview data were analyzed to investigate the non-significant results of H2, H4, H6, and H7. The analysis showed that all interviewees had a positive attitude toward AI for EFL learning. Regarding their motivations, we extracted 71 codes, 11 categories, and 3 themes. The thematic map is presented in Figure 3.

Figure 3

Thematic Map of Motivations for Using AI for EFL Learning

Figure 3 illustrates the dominant themes: perceived ease of use (27 codes) and perceived usefulness (38 codes). Perceived ease of use had three categories: easy access to academic resources (15), portability (8), and instant translation (4). Perceived usefulness had seven categories: writing proofreading (13), personalized learning (7), question answering (5), flexible schedule (4), data analysis (3), research inspiration (3), and progress tracking (3).

We give one example from the interviews for each main theme; underlined text shows key words we used to extract categories. Student 13 described the connection between AI and proofreading, one category of motivation found under perceived usefulness: “First of all, regarding writing, ChatGPT and Grammarly can help me improve my writing skills, fix grammar errors, and increase clarity. Grammarly, in particular, can also offer real-time feedback, making my writing more consistent.” On the other hand, Student 5 described the connection between using AI and issues of portability, a category of motivation found under perceived ease of use: “Dictionaries are too bulky to carry around, so I prefer to use apps like Baicizhan and Bubei Danci to learn vocabulary more frequently” (Baicizhan and Bubei Danci are two popular mobile applications for learning English in China). Besides the two main themes, another motivation for using AI to facilitate EFL learning was media exposure (6), which influenced students’ awareness of and interest in AI. Overall, the two major themes indicate the main motivations for college students to use AI for EFL learning, which aligns with the TAM framework.

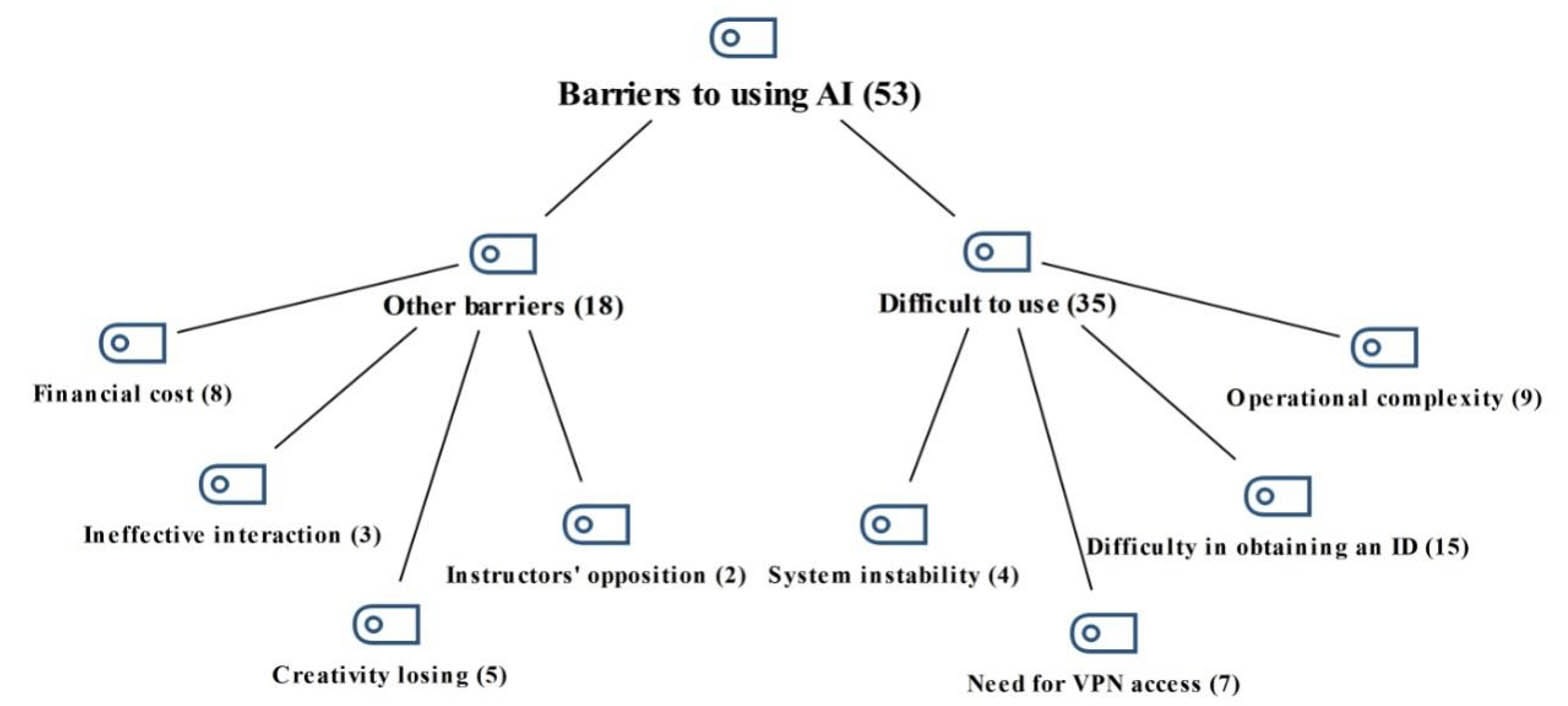

From the interview data, we identified 53 codes, 8 categories, and 2 themes related to the barriers that prevent college students from using AI to support their EFL learning. Figure 4 shows the thematic map of this analysis.

Figure 4

Thematic Map of Barriers to Using AI for EFL Learning

Figure 4 shows the dominant theme of difficult to use (35 codes), which included four categories: difficulty in obtaining an ID (15), operational complexity (9), need for VPN access (7), and system instability (4). Other barriers were financial cost (8), creativity loss (5), ineffective interaction (3), and instructors’ opposition (2). We give one example from the interviews for each main theme; underlined text shows key words we used to extract categories. Student 11 explained how operational complexity makes AI applications difficult to use. “Some AI applications have technical difficulties that prevent me from using them. For example, some applications have complex interface designs, which require me to spend a lot of time learning various functions and settings.” Student 9 spoke about the barriers that result from financial considerations. “Some AI applications for learning English are paid, and they are not very cost-effective when I use them. I cannot stick to them as much as I imagine, so it is not worth buying a membership.”

While we found evidence that ease of use and usefulness motivate students to use AI for EFL learning, our analysis of the barriers indicates that these factors are not enough to keep students using AI applications. The students said they would stop using AI for EFL learning if they had problems or frustrations with the apps, regardless of their usefulness. In other words, ease of use and usefulness initiate AI-assisted EFL learning, but difficulty of use (that is, low ease of use) ends it. This finding matches our quantitative results, which stressed the key role of ease of use in affecting the behavioral intention to use AI in distributed learning contexts.

Drawing on the TAM, we employed a mixed-methods approach to examine the mechanisms underlying the relationship between perceived ease of use, perceived usefulness, attitude toward AI, and behavioral intention to use AI among EFL college learners in distributed learning contexts. In the quantitative analysis, we first verified the reliability and validity of the instruments used in the study. Afterward, we conducted SEM to examine the proposed hypotheses.

The quantitative results of our study support H1 (perceived ease of use → perceived usefulness, perceived ease of use → attitude toward AI), H3 (attitude toward AI → behavioral intention to use AI), and H5 (perceived ease of use → attitude toward AI → behavioral intention to use AI). Perceived ease of use has a positive effect on both perceived usefulness and attitude toward AI, which indicates that EFL college learners who perceive AI as easy to use are more likely to view AI as useful and have a favorable attitude toward AI. Moreover, EFL college learners with a positive attitude toward AI have a higher intention to use AI. Additionally, the mediated path from perceived ease of use to behavioral intention to use AI through attitude toward AI implies that EFL college learners who perceive AI as easy to use develop a more positive attitude toward AI, which in turn encourages them to use AI for their language learning.

These findings corroborate the TAM, which asserts that perceived ease of use is a key factor for users’ perceived usefulness and attitude toward a technology, and that attitude toward a technology influences behavioral intention to use it (Ursavaş, 2022). Moreover, these results are consistent with the existing literature in general education, which has confirmed the mediating role of attitude toward a specific AI tool in the link between perceived ease of use and behavioral intention to use that AI tool among both college students (Gado et al., 2022; Li, 2023) and teachers (Huang & Teo, 2020; Siyam, 2019). However, few studies have examined these relationships in AI-assisted EFL learning contexts, thus demonstrating the originality and importance of our study. In conclusion, our study validates both the TAM and previous studies in general education, particularly regarding the mediating effect of attitude toward AI on the use of AI among EFL college learners.

The quantitative results of this study are surprising, as they do not support H2 (perceived usefulness → attitude toward AI, perceived usefulness → behavioral intention to use AI), H4 (perceived usefulness → attitude toward AI → behavioral intention), H6 (perceived ease of use → perceived usefulness → attitude toward AI → behavioral intention to use AI), and H7 (perceived ease of use → perceived usefulness → behavioral intention to use AI). It was found that perceived usefulness had no significant effect on attitude toward AI or behavioral intention to use AI. Therefore, attitude toward AI does not mediate the relationship between perceived usefulness and behavioral intention to use AI. Furthermore, perceived usefulness does not mediate the relationship between perceived ease of use and behavioral intention to use AI, either by itself or together with attitude toward AI. This suggests that college students’ perception of AI’s usefulness for EFL learning is not associated with their attitude or intention to use AI.

The results of this study contradict the TAM, which suggests that perceived usefulness influences attitude and behavioral intention to use technology directly or indirectly (Ursavaş, 2022). Moreover, the results diverge from the findings of Wang et al. (2022) and Liu and Ma (2023), who verified the mediating effect of perceived usefulness, either alone or together with attitude, on the relationship between perceived ease of use and behavioral intention to use a specific AI tool among EFL learners. A possible reason for this discrepancy is that users may have varying degrees of familiarity with AI in general and specific AI tools. For instance, in Liu and Ma’s (2023) study, EFL learners’ perception was limited to a particular AI tool, ChatGPT, which is one of the most advanced and powerful AI applications in the world, with high levels of ease of use and usefulness. Consequently, most of its users, including EFL learners, may have a favorable impression of it due to their positive user experience and media exposure.

However, EFL learners’ evaluation of AI in general, as revealed in our qualitative analysis, depends on their user experience of the specific AI tools that they are familiar with. Unlike ChatGPT, these AI tools vary in quality and performance. Specifically, college students were motivated to adopt AI for EFL learning mainly by perceived ease of use and perceived usefulness. However, these factors were not enough to ensure their continued use of AI applications. The students reported that they would stop using an AI application for EFL learning if they encountered difficulties or dissatisfaction with it, even if they recognized its usefulness. Consequently, they would seek other AI tools for EFL learning. This qualitative finding suggests that perceived ease of use and perceived usefulness are the initial facilitators of AI-assisted EFL learning, but perceived difficulty of use, or a low level of perceived ease of use, is a major barrier to long-term use. In other words, given that the students all agree that AI can improve their EFL learning (Betal, 2023; Divekar et al., 2022), the key determinant of their intention to use AI is not its usefulness but its ease of use.

This qualitative finding supports our quantitative finding that perceived usefulness does not play a significant role in predicting EFL college learners’ attitude toward AI and their intention to use AI for language learning. Although it contradicts the assumptions of the TAM, this finding is still reasonable. Currently, a variety of AI applications are available for college students to facilitate their language learning, such as ChatGPT (Kohnke et al., 2023a), Duolingo (Shortt et al., 2023), Grammarly (Barrot, 2022), Pigai (Yang et al., 2023), and so forth. With such a wide range of options, it is plausible that college students tend to opt for the one that they consider most user-friendly. It is also noteworthy that this finding may imply that the components of the TAM remain sound when the model is applied to a single technology. However, in a specific learning context with various technological tools available, a tool’s ease of use may be the most decisive factor for users’ intention to use it.

This mixed-methods study investigated the factors influencing Chinese EFL college learners’ intention to adopt AI. The quantitative results reveal several key findings. First, students’ perceived ease of use of AI has a positive effect on their perceived usefulness and attitude toward AI. Second, students’ attitude toward AI is a positive predictor of their intention to use AI. However, in contrast to the TAM propositions, students’ perceived usefulness of AI does not significantly influence their attitude toward AI or their intention to use AI. Third, students’ perceived ease of use of AI positively influences their intention to use AI through their attitude toward AI, rather than through their perceived usefulness of AI. According to the qualitative results, perceived ease of use and perceived usefulness are the main factors that facilitate college learners’ behavioral intention to use AI for EFL learning. However, only perceived difficulty of use, or a lack of perceived ease of use, hinders their sustained use of AI, confirming the crucial role of perceived ease of use as revealed by the quantitative results.

This study contributes to both theory and practice. On the theoretical level, our study reveals that perceived ease of use is the main determinant of college learners’ intention to use AI for EFL learning, which contradicts the TAM proposition that perceived usefulness is more influential. This indicates the distinctiveness of the EFL college learning context where various AI-assisted language applications are accessible and the necessity of TAM adaptations. On the practical level, our findings suggest that educators can foster EFL learners’ use of AI applications by providing them with training and guidance. This can lower the perceived difficulty and boost the confidence of learners in using AI applications, particularly in distributed learning environments where learners have greater flexibility and autonomy over time and space. Moreover, AI application developers can enhance their products by improving the usability and user-friendliness of AI applications for EFL learning. They can also solicit feedback from college learners to satisfy target users’ preferences.

This study has several limitations that should be acknowledged. First, it is a cross-sectional study that cannot establish causal relationships among the constructs over time. Future research could use a cross-lagged panel design to examine the longitudinal causality among the constructs in this study (Derakhshan et al., 2023). Second, the sample is not sufficiently representative, as it only included participants from two provinces of China. Future studies could expand the sample size and diversity by recruiting participants from more provinces. Third, the study only focused on college learners. However, other populations, such as primary school learners and workers, may also greatly benefit from AI-assisted language learning. These populations could be selected as participants in future research to verify the generalizability of our findings. Fourth, the study only tested the constructs in the TAM, ignoring factors from similar theories, such as effort expectancy and performance expectancy in the unified theory of acceptance and use of technology (Venkatesh et al., 2003). Future studies could explore more variables that could affect EFL learners’ behavioral intention to use AI.

This study was sponsored by the Teacher Education Project of the Foundation of Henan Educational Committee, entitled “Using Online Learning Resources to Promote English as a Foreign Language Teachers’ Professional Development in the Chinese Middle School Context” (Grant No.: 2022-JSJYYB-027).

An, X., Chai, C. S., Li, Y., Zhou, Y., Shen, X., Zheng, C., & Chen, M. (2022). Modeling English teachers’ behavioral intention to use artificial intelligence in middle schools. Education and Information Technologies, 28, 5187-5208. https://doi.org/10.1007/s10639-022-11286-z

An, X., Chai, C. S., Li, Y., Zhou, Y., & Yang, B. (2023). Modeling students’ perceptions of artificial intelligence assisted language learning. Computer Assisted Language Learning, 1-22. https://doi.org/10.1080/09588221.2023.2246519

Ayanwale, M. A., Sanusi, I. T., Adelana, O. P., Aruleba, K. D., & Oyelere, S. S. (2022). Teachers’ readiness and intention to teach artificial intelligence in schools. Computers and Education: Artificial Intelligence, 3, Article 100099. https://doi.org/10.1016/j.caeai.2022.100099

Ayedoun, E., Hayashi, Y., & Seta, K. (2019). Adding communicative and affective strategies to an embodied conversational agent to enhance second language learners’ willingness to communicate. International Journal of Artificial Intelligence in Education, 29(1), 29-57. https://doi.org/10.1007/s40593-018-0171-6

Barrot, J. S. (2022). Integrating technology into ESL/EFL writing through Grammarly. RELC Journal, 53(3), 764-768. https://doi.org/10.1177/0033688220966632

Bearman, M., Ryan, J., & Ajjawi, R. (2023). Discourses of artificial intelligence in higher education: A critical literature review. Higher Education, 86(2), 369-385. https://doi.org/10.1007/s10734-022-00937-2

Betal, A. (2023). Enhancing second language acquisition through artificial intelligence (AI): Current insights and future directions. Journal for Research Scholars and Professionals of English Language Teaching, 7(39). https://doi.org/10.54850/jrspelt.7.39.003

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77-101. https://doi.org/10.1191/1478088706qp063oa

Castañeda, L., & Selwyn, N. (2018). More than tools? Making sense of the ongoing digitizations of higher education. International Journal of Educational Technology in Higher Education, 15, Article 22. https://doi.org/10.1186/s41239-018-0109-y

Chassignol, M., Khoroshavin, A., Klimova, A., & Bilyatdinova, A. (2018). Artificial intelligence trends in education: A narrative overview. Procedia Computer Science, 136, 16-24. https://doi.org/10.1016/j.procs.2018.08.233

Chen, H., & Pan, J. (2022). Computer or human: A comparative study of automated evaluation scoring and instructors’ feedback on Chinese college students’ English writing. Asian-Pacific Journal of Second and Foreign Language Education, 7, Article 34. https://doi.org/10.1186/s40862-022-00171-4

Chen, X., Xie, H., & Hwang, G.-J. (2020). A multi-perspective study on artificial intelligence in education: Grants, conferences, journals, software tools, institutions, and researchers. Computers and Education: Artificial Intelligence, 1, Article 100005. https://doi.org/10.1016/j.caeai.2020.100005

Creswell, J. W. (2014). A concise introduction to mixed methods research. SAGE Publications.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319-340. https://doi.org/10.2307/249008

Derakhshan, A., Wang, Y., Wang, Y., & Ortega-Martín, J. L. (2023). Towards innovative research approaches to investigating the role of emotional variables in promoting language teachers’ and learners’ mental health. International Journal of Mental Health Promotion, 25(7), 823-832. https://doi.org/10.32604/ijmhp.2023.029877

Divekar, R. R., Drozdal, J., Chabot, S., Zhou, Y., Su, H., Chen, Y., Zhu, H., Hendler, J. A., & Braasch, J. (2022). Foreign language acquisition via artificial intelligence and extended reality: Design and evaluation. Computer Assisted Language Learning, 35(9), 2332-2360. https://doi.org/10.1080/09588221.2021.1879162

Dizon, G. (2020). Evaluating intelligent personal assistants for L2 listening and speaking development. Language Learning & Technology, 24(1), 16-26. https://doi.org/10125/44705

Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39-50. https://doi.org/10.2307/3151312

Gado, S., Kempen, R., Lingelbach, K., & Bipp, T. (2022). Artificial intelligence in psychology: How can we enable psychology students to accept and use artificial intelligence? Psychology Learning & Teaching, 21(1), 37-56. https://doi.org/10.1177/14757257211037149

Gao, Q., Yan, Z., Zhao, C., Pan, Y., & Mo, L. (2014). To ban or not to ban: Differences in mobile phone policies at elementary, middle, and high schools. Computers in Human Behavior, 38, 25-32. https://doi.org/10.1016/j.chb.2014.05.011

Hayes, A. F. (2013). Introduction to mediation, moderation, and conditional process analysis: A regression based approach. Guilford Press.

Hu, L.-t., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1-55. https://doi.org/10.1080/10705519909540118

Huang, F., & Teo, T. (2020). Influence of teacher-perceived organisational culture and school policy on Chinese teachers’ intention to use technology: An extension of technology acceptance model. Educational Technology Research and Development, 68(3), 1547-1567. https://doi.org/10.1007/s11423-019-09722-y

Huang, H.-M., & Liaw, S.-S. (2005). Exploring users’ attitudes and intentions toward the web as a survey tool. Computers in Human Behavior, 21(5), 729-743. https://doi.org/10.1016/j.chb.2004.02.020

Janbi, N., Katib, I., & Mehmood, R. (2023). Distributed artificial intelligence: Taxonomy, review, framework, and reference architecture. Intelligent Systems With Applications, 18, Article 200231. https://doi.org/10.1016/j.iswa.2023.200231

Jiang, R. (2022). How does artificial intelligence empower EFL teaching and learning nowadays? A review on artificial intelligence in the EFL context. Frontiers in Psychology, 13, Article 1049401. https://doi.org/10.3389/fpsyg.2022.1049401

Kelly, S., Kaye, S.-A., & Oviedo-Trespalacios, O. (2023). What factors contribute to the acceptance of artificial intelligence? A systematic review. Telematics and Informatics, 77, Article 101925. https://doi.org/10.1016/j.tele.2022.101925

Klimova, B., Pikhart, M., Benites, A. D., Lehr, C., & Sanchez-Stockhammer, C. (2023). Neural machine translation in foreign language teaching and learning: A systematic review. Education and Information Technologies, 28(1), 663-682. https://doi.org/10.1007/s10639-022-11194-2

Kline, R. B. (2016). Principles and practice of structural equation modeling (4th ed.). Guilford Press.

Klotz, A. C., Swider, B. W., & Kwon, S. H. (2023). Back-translation practices in organizational research: Avoiding loss in translation. Journal of Applied Psychology, 108(5), 699-727. https://doi.org/10.1037/apl0001050

Kohnke, L., Moorhouse, B. L., & Zou, D. (2023a). ChatGPT for language teaching and learning. RELC Journal, 54(2), 537-550. https://doi.org/10.1177/00336882231162868

Kohnke, L., Moorhouse, B. L., & Zou, D. (2023b). Exploring generative artificial intelligence preparedness among university language instructors: A case study. Computers and Education: Artificial Intelligence, 5, Article 100156. https://doi.org/10.1016/j.caeai.2023.100156

Kuddus, K. (2022). Artificial intelligence in language learning: Practices and prospects. In A. Mire, S. Malik, & A. K. Tyagi (Eds.), Advanced analytics and deep learning models (pp. 3-18). Wiley. https://doi.org/10.1002/9781119792437.ch1

Li, K. (2023). Determinants of college students’ actual use of AI-based systems: An extension of the technology acceptance model. Sustainability, 15(6), Article 5221. https://www.mdpi.com/2071-1050/15/6/5221

Liu, G., & Ma, C. (2024). Measuring EFL learners’ use of ChatGPT in informal digital learning of English based on the technology acceptance model. Innovation in Language Learning and Teaching, 18(2), 125-138. https://doi.org/10.1080/17501229.2023.2240316

Liu, G. L., & Wang, Y. (2024). Modeling EFL teachers’ intention to integrate informal digital learning of English (IDLE) into the classroom using the theory of planned behavior. System, 120, Article 103193. https://doi.org/10.1016/j.system.2023.103193

Muftah, M., Al-Inbari, F. A. Y., Al-Wasy, B. Q., & Mahdi, H. S. (2023). The role of automated corrective feedback in improving EFL learners’ mastery of the writing aspects. Psycholinguistics, 34(2), 82-109. https://doi.org/10.31470/2309-1797-2023-34-2-82-109

Namaziandost, E., Hashemifardnia, A., Bilyalova, A. A., Fuster-Guillén, D., Palacios Garay, J. P., Diep, L. T. N., Ismail, H., Sundeeva, L. A., Hibana, & Rivera-Lozada, O. (2021). The effect of WeChat-based online instruction on EFL learners’ vocabulary knowledge. Education Research International, 2021, Article 8825450. https://doi.org/10.1155/2021/8825450

Namaziandost, E., Razmi, M. H., Atabekova, A., Shoustikova, T., & Kussanova, B. H. (2021). An account of Iranian intermediate EFL learners’ vocabulary retention and recall through spaced and massed distribution instructions. Journal of Education, 203(2), 275-284. https://doi.org/10.1177/00220574211031949

Noar, S. M. (2003). The role of structural equation modeling in scale development. Structural Equation Modeling: A Multidisciplinary Journal, 10(4), 622-647. https://doi.org/10.1207/S15328007SEM1004_8

Ouyang, F., & Jiao, P. (2021). Artificial intelligence in education: The three paradigms. Computers and Education: Artificial Intelligence, 2, Article 100020. https://doi.org/10.1016/j.caeai.2021.100020

Rezai, A. (2023). Investigating the association of informal digital learning of English with EFL learners’ intercultural competence and willingness to communicate: A SEM study. BMC Psychology, 11, Article 314. https://doi.org/10.1186/s40359-023-01365-2

Schepman, A., & Rodway, P. (2022). The General Attitudes towards Artificial Intelligence Scale (GAAIS): Confirmatory validation and associations with personality, corporate distrust, and general trust. International Journal of Human-Computer Interaction, 39(13), 2724-2741. https://doi.org/10.1080/10447318.2022.2085400

Selwyn, N. (2016). Is technology good for education? Polity Press.

Shortt, M., Tilak, S., Kuznetcova, I., Martens, B., & Akinkuolie, B. (2023). Gamification in mobile-assisted language learning: A systematic review of Duolingo literature from public release of 2012 to early 2020. Computer Assisted Language Learning, 36(3), 517-554. https://doi.org/10.1080/09588221.2021.1933540

Shrout, P. E., & Bolger, N. (2002). Mediation in experimental and nonexperimental studies: New procedures and recommendations. Psychological Methods, 7(4), 422-445.

Siyam, N. (2019). Factors impacting special education teachers’ acceptance and actual use of technology. Education and Information Technologies, 24(3), 2035-2057. https://doi.org/10.1007/s10639-018-09859-y

Teo, T., Huang, F., & Hoi, C. K. W. (2017). Explicating the influences that explain intention to use technology among English teachers in China. Interactive Learning Environments, 26(4), 460-475. https://doi.org/10.1080/10494820.2017.1341940

Ulla, M. B., Perales, W. F., & Busbus, S. O. (2023). ‘To generate or stop generating response’: Exploring EFL teachers’ perspectives on ChatGPT in English language teaching in Thailand. Learning: Research and Practice, 9(2), 168-182. https://doi.org/10.1080/23735082.2023.2257252

Ursavaş, Ö. F. (2022). Technology acceptance model: History, theory, and application. In Ö. F. Ursavaş (Ed.), Conducting technology acceptance research in education: Theory, models, implementation, and analysis (pp. 57-91). Springer International Publishing. https://doi.org/10.1007/978-3-031-10846-4_4

Venkatesh, V., & Bala, H. (2008). Technology acceptance model 3 and a research agenda on interventions. Decision Sciences, 39(2), 273-315. https://doi.org/10.1111/j.1540-5915.2008.00192.x

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425-478. https://doi.org/10.2307/30036540

Wang, Y., Yu, L., & Yu, Z. (2022). An extended CCtalk technology acceptance model in EFL education. Education and Information Technologies, 27, 6621-6640. https://doi.org/10.1007/s10639-022-10909-9

Wang, Y. L., Wang, Y. X., Pan, Z. W., & Ortega-Martín, J. L. (2023). The predicting role of EFL students’ achievement emotions and technological self-efficacy in their technology acceptance. The Asia-Pacific Education Researcher, 2023. https://doi.org/10.1007/s40299-023-00750-0

Yang, H., Gao, C., & Shen, H.-z. (2023). Learner interaction with, and response to, AI-programmed automated writing evaluation feedback in EFL writing: An exploratory study. Education and Information Technologies, 29, 3837-3858. https://doi.org/10.1007/s10639-023-11991-3

Zhang, K., & Aslan, A. B. (2021). AI technologies for education: Recent research and future directions. Computers and Education: Artificial Intelligence, 2, Article 100025. https://doi.org/10.1016/j.caeai.2021.100025

Zhi, R., Wang, Y., & Wang, Y. (2023). The role of emotional intelligence and self-efficacy in EFL teachers’ technology adoption. The Asia-Pacific Education Researcher, 2023. https://doi.org/10.1007/s40299-023-00782-6

"To Use or Not to Use?" A Mixed-Methods Study on the Determinants of EFL College Learners' Behavioral Intention to Use AI in the Distributed Learning Context by Hanwei Wu, Yunsong Wang, and Yongliang Wang is licensed under a Creative Commons Attribution 4.0 International License.