Volume 25, Number 3

Jingyu Xiao1, Goudarz Alibakhshi2,*, Alireza Zamanpour3, Mohammad Amin Zarei4, Shapour Sherafat5, and Seyyed-Fouad Behzadpoor6

1Guangdong University of Foreign Studies, Guangzhou, China; 2Allameh Tabataba'i University, Iran; 3Islamic Azad University Science and Research Branch, Tehran, Iran; 4Allameh Tabataba'i University; 5University of Tehran, Iran; 6Azarbaijan Shahid Madani University, Tabriz, Iran; *Corresponding author

Artificial intelligence (AI) has contributed to various facets of human life for decades. Teachers and students must be competent in AI and AI-empowered applications, particularly when using online electronic platforms such as learning management systems (LMS). This study investigates the structural relationship between AI literacy, academic well-being, and educational attainment of Iranian undergraduate students. Using a convenience sampling approach, we selected 400 undergraduate students from virtual universities equipped with LMS platforms and facilities. We collected data using three instruments (an AI literacy scale, an academic well-being scale, and an educational attainment scale) and analyzed the data using Smart-PLS3 software. Results showed that the hypothetical model had acceptable psychometric properties (divergent and convergent validity, internal consistency, and composite reliability) and that the overall model had good fit. The study thus confirms the direct effect of AI literacy on academic well-being and educational attainment. Through the mediating role of academic well-being, we also show that AI literacy among students in China and Iran significantly affects educational attainment. These findings have implications for students, teachers, and educational administrators of universities and higher education institutes, providing knowledge about the educational uses of AI applications.

Keywords: AI, AI applications, academic well-being, AI literacy, educational attainment, undergraduate students

Open and distributed learning, often referred to as distance education or online learning, is a flexible educational approach that transcends traditional classroom boundaries (Bozkurt et al., 2015). Unlike conventional face-to-face instruction, open and distributed learning uses digital technologies to deliver educational content and facilitate interactions among learners and instructors across geographical locations and time zones (Alavi et al., 2002). This approach allows learners to engage with course materials, participate in discussions, and complete assignments remotely, typically through online platforms or learning management systems (LMS; Alavi et al., 2002).

Complementing this online learning approach, the rapid evolution of technology, particularly the advent of artificial intelligence (AI), has become a transformative force in shaping various facets of our daily lives. The proliferation of smart devices and applications embedded with AI has ushered in an era in which individuals are transitioning from being mere AI immigrants to being proficient AI natives. This paradigm shift has had a multifaceted impact on society, influencing how people work, learn, and interact.

The swift advancement of e-learning platforms is a notable global trend in higher education. Even before the COVID-19 pandemic, universities worldwide were increasingly exploring and adopting online teaching and learning methods. However, the global health crisis significantly accelerated this transition, pushing many educational institutions to pivot rapidly to online modalities. Hodges et al. (2020) observe this transformative shift, noting how the pandemic has compelled universities to make online education the primary mode of instruction. Consequently, the integration of online learning platforms within higher education has become a significant global phenomenon and reshaped the landscape of academic delivery. The accelerated adoption of online education has profound implications for students, educators, and institutions. As universities grapple with the challenges and opportunities presented by online learning, it becomes imperative to discern the factors contributing to students’ academic success in virtual classrooms. The study by Hodges et al. (2020) underscores the urgency of this examination.

Simultaneously, the rapid integration of AI into various aspects of our lives necessitates a critical evaluation of our readiness to navigate the challenges posed by the “AI era” (Davenport & Ronanki, 2018). The term “AI natives” reflects a new reality wherein individuals are not merely users but proficient navigators of AI-driven technologies. This shift prompts a reevaluation of the skills and competencies required in the modern world. Consequently, competence in effectively using AI is increasingly recognized as essential. Researchers such as Kandlhofer et al. (2016) and Tarafdar et al. (2019) emphasize the urgent need to enhance people’s capacity to interact with and harness the potential of AI.

Emerging AI systems, exemplified by technologies like IBM’s Watson, demonstrate remarkable capabilities in learning and self-improvement. This advancement has led to their use in knowledge-based tasks that were traditionally exclusive to human white-collar workers. Tasks once deemed resistant to automation are now within the purview of AI systems (Wladawsky-Berger, 2017). The intelligence of AI technologies is rapidly evolving, positioning them as semi-autonomous decision-makers across a growing array of intricate contexts (Davenport & Kirby, 2016).

While Jarrahi (2018) and Stembert and Harbers (2019) have emphasized the benefits of high AI competence in human-AI interaction, other scholars have stressed the need to improve AI competence. Long and Magerko (2020) have attempted to delineate core competencies for using AI technology. However, a comprehensive framework or tool for assessing such competence has yet to be developed. To fill this gap, we suggest using “AI literacy” to describe people’s proficiency with AI. AI literacy is the ability to correctly recognize, operate, and assess AI-related products in accordance with ethical guidelines. Comparable to other forms of literacy, such as digital literacy (Ala-Mutka, 2011; Calvani et al., 2008) and computer literacy (Hoffman & Blake, 2003), AI literacy emphasizes proficiency and appropriate use rather than requiring knowledge of the underlying theory.

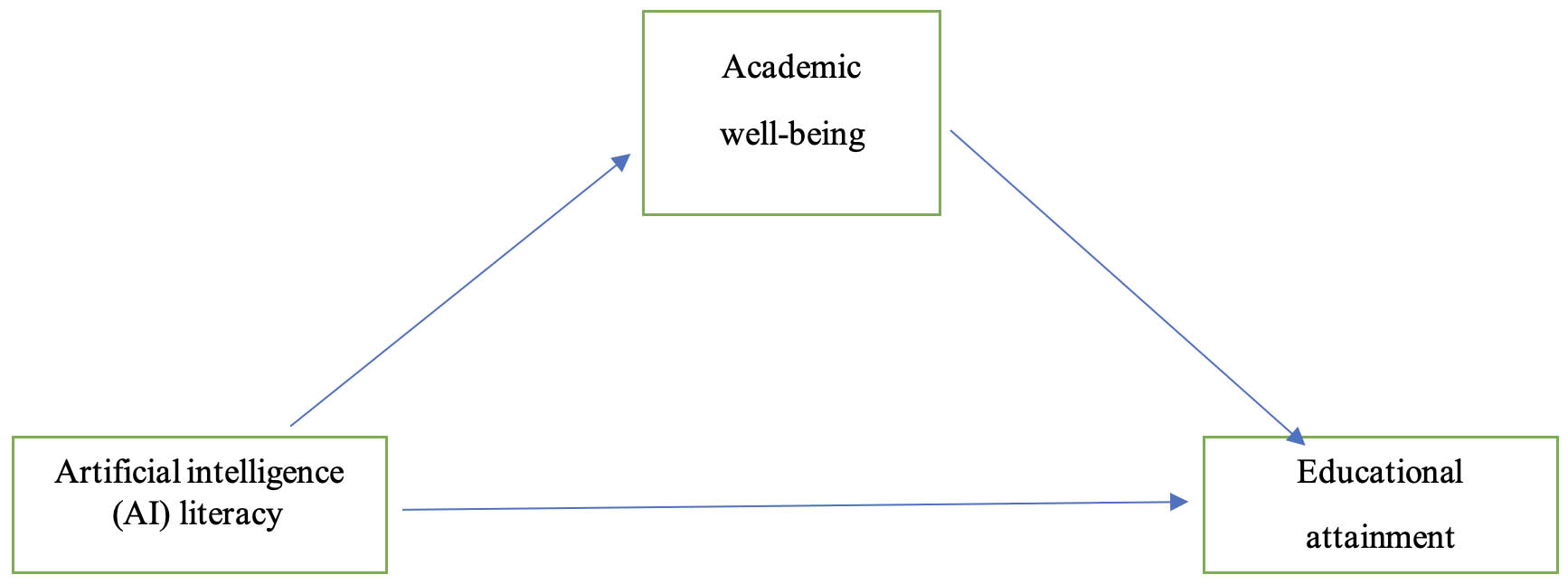

Research on AI literacy is pivotal for three main reasons. Firstly, AI literacy contributes to understanding ongoing research in human-AI interaction. An individual’s level of literacy regarding a product shapes their mental model (Rosling & Littlemore, 2011), which is crucial in interaction processes (Norman, 2013). Research on AI literacy may therefore elucidate variations in people’s behavior during AI interactions. Secondly, previous studies measured participants’ AI competence through prior experience and usage frequency (Lee & Choi, 2017; Luo et al., 2019; Metelskaia et al., 2018). Thirdly, in contrast to such proxies, AI literacy provides a more comprehensive measurement of competence that considers the multifaceted aspects of AI use. However, the structural relationship between AI literacy, academic well-being, and educational attainment of university students in online classes with learning management systems (LMS) still needs to be explored. This study seeks to validate a structural equation model illustrating the interplay of AI literacy, academic well-being, and educational attainment of Iranian undergraduate students in online classes with an LMS, as depicted in Figure 1.

Figure 1

Hypothetical Model of the Study

Based on the model, we propose the following five hypotheses:

H1: AI literacy has a significant effect on undergraduate students’ academic well-being.

H2: Undergraduate students’ academic well-being significantly affects their educational attainment.

H3: Undergraduate students’ AI literacy has a significant direct effect on their educational attainment.

H4: Undergraduate students’ AI literacy has a significant indirect effect on their educational attainment through the mediating role of academic well-being.

H5: The hypothetical structural model of undergraduate students’ AI literacy, educational attainment, and academic well-being has good fit.

One of the defining features of open and distributed learning is its accessibility, as it offers educational opportunities to individuals who may face barriers to attending traditional brick-and-mortar institutions (Bozkurt et al., 2015; Lea & Nicoll, 2013). This accessibility is particularly beneficial for non-traditional students—such as working professionals, parents, individuals with disabilities, or people residing in remote areas—who may find it challenging to pursue higher education through conventional means (Chen et al., 2021). By breaking down geographical constraints and temporal limitations, open and distributed learning democratizes access to education, promoting lifelong learning and continuous skill development (Alavi et al., 2002).

Furthermore, open and distributed learning fosters a learner-centered approach, allowing individuals to personalize their learning experiences according to their preferences, pace, and learning styles (Kop et al., 2011). Through asynchronous and synchronous communication tools, learners can engage with course content at their convenience, collaborate with peers, and receive timely feedback from instructors (Matheos & Archer, 2004). This flexibility empowers learners to take ownership of their education, cultivate self-discipline, and enhance digital literacy skills, which are increasingly vital in today’s technology-driven society (Matheos & Archer, 2004).

In the context of AI literacy, open and distributed learning presents unique challenges and opportunities (Mühlenbrock et al., 1998). On the one hand, the digital nature of open and distributed learning means students must be proficient in navigating online platforms, interacting with AI-driven tools and resources, and critically evaluating AI-generated content. Learners must develop AI literacy skills to discern between reliable and misleading information, understand the ethical implications of AI technologies, and harness AI tools effectively to support their learning objectives (Mühlenbrock et al., 1998). On the other hand, open and distributed learning platforms can use AI-driven algorithms to personalize learning experiences, adapt instructional content to individual needs, and provide targeted interventions to support struggling learners (Mühlenbrock et al., 1998). AI technologies embedded within LMS platforms can analyze learner data, identify patterns of engagement and performance, and offer personalized recommendations for optimizing learning outcomes (Mühlenbrock et al., 1998). Thus, open and distributed learning environments serve as fertile grounds for exploring the intersection of AI literacy, educational attainment, and academic well-being among diverse student populations, including Iranian university students.

The inception of AI in education dates back to the 1970s, marked by initial attempts to replace teachers with supercomputers. Pivotal research between 1982 and 1984 demonstrated that students receiving both human and AI instruction outperformed those exposed solely to traditional teaching methods (Hao, 2019; Kay, 2012). Integrating AI into education offers unique prospects for hands-on learning and for enhancing technology-based educational settings. However, despite the potential of AI for education, many professionals in technology and education still seek guidance in establishing effective procedures and frameworks (Kay, 2012; Li et al., 2024).

As Mollman (2022) notes, the release of the ChatGPT AI tool in November 2022, based on the generative pre-trained transformer (GPT) language model, significantly increased public awareness of AI. This sophisticated chatbot attracted over one million users within a week of its launch, showcasing its rapid adoption. Beyond its technical capabilities, ChatGPT has the potential to revolutionize various aspects of society, including the generation of scholarly articles and assistance with complex tasks such as writing academic papers (Liu et al., 2021). The emergence of AI, represented by ChatGPT, poses challenges and opportunities for educators and students, emphasizing the need for specialized digital skills and literacy in the era of information technologies (Budzianowski & Vulić, 2019; Cote & Milliner, 2018).

AI involves the development of machines capable of tasks traditionally requiring human intelligence, ranging from learning and reasoning to problem-solving and pattern recognition (Davenport & Kalakota, 2019; Terra et al., 2023). Its applications extend to healthcare, where AI demonstrates equal or superior performance in tasks such as disease diagnosis (Gómez-Trigueros et al., 2019).

Wang and Wang (2022) have conducted a study addressing the need for a standardized tool to measure artificial intelligence anxiety. They developed an AI anxiety scale through a rigorous process, confirming the reliability and validity of the instrument with a sample of 301 respondents and contributing to the understanding of artificial intelligence anxiety and associated behaviors. In addition, Wang and Wang (2022) introduced the concept of AI literacy, identifying core constructs: awareness, use, evaluation, and ethics. They developed a 12-item scale through a rigorous process, confirming its adequacy. The study revealed significant associations between AI literacy, digital literacy, attitudes toward robots, and daily AI usage, providing insights into human-AI interaction and application design.

AI constitutes a dynamic and interdisciplinary field that spans computer science, information science, mathematics, psychology, sociology, linguistics, and philosophy, as highlighted by Russell and Norvig (2010). This convergence of diverse disciplines accentuates the nuanced distinctions between AI and digital literacy. While digital literacy encompasses proficiency in conventional digital technologies, AI literacy involves a more intricate understanding of systems endowed with biological and social attributes, as elucidated by Poria et al. (2017) and Tao and Tan (2005).

Users’ perceptions of the AI landscape are notably distinct from their perceptions of conventional digital technology. They tend to attribute more human-like and social qualities to AI entities, reflecting a cognitive shift in how they see and interact with technology. This shift departs from conventional digital literacy, where users engage primarily with tools that lack the social and biological dimensions they perceive as inherent in AI systems (Poria et al., 2017). When users engage with AI, particularly in human-robot interaction, their cognitive processes often lean toward social logic rather than machine logic, as Vossen and Hagemann (2010) have observed. This underscores a fascinating aspect of AI literacy: users navigate and interpret AI systems using mental models that incorporate social and relational dynamics, mirroring human interactions. In contrast, users typically approach conventional digital technologies with a more transactional and utilitarian mindset (Tao & Tan, 2005).

In essence, the interdisciplinary nature of AI not only reflects the convergence of diverse scientific and philosophical domains but also gives rise to a distinct cognitive landscape. The delineation between AI literacy and digital literacy is more than just a matter of technical proficiency. It encompasses a profound shift in how individuals conceptualize and engage with technology, particularly regarding its perceived social and biological dimensions. This shift in mental models emphasizes the need for a nuanced approach to literacy education that encompasses the multifaceted dimensions of contemporary technological landscapes (Davenport & Kalakota, 2019).

Consequently, users employ distinct criteria to evaluate AI and digital products. AI literacy cannot be directly equated with digital literacy: a high school student may be digitally literate yet unfamiliar with AI concepts. This makes digital literacy instruments unsuitable for assessing AI literacy. However, the digital literacy framework can guide AI literacy development. The next section explores essential digital literacy concepts for a more nuanced understanding of AI literacy (Wang & Wang, 2022).

Academic well-being refers to a comprehensive assessment of a student’s academic experiences, comprising their engagement in learning, sense of belonging, and satisfaction with school. It has been identified as a significant factor influencing students’ academic achievement (Seligman et al., 2009). Educational attainment refers to a person’s level of education and is frequently used as a gauge of their success in school. This literature review aims to identify the factors that affect academic well-being and to explore the relationship between well-being and educational attainment. Academic well-being and educational attainment are positively correlated: Suldo et al. (2008) found that students’ grades and test scores were higher when they reported higher levels of academic well-being, which included positive emotions, a solid academic self-concept, and supportive relationships. In addition, Salmela-Aro and Upadyaya (2014) found that students who reported higher academic well-being were more likely to complete secondary school and pursue higher education.

Several factors influence the relationship between academic well-being and educational attainment. One critical factor is social support, that is, how much students feel encouraged by their parents, teachers, and peers to pursue their academic goals; students with robust social networks are more likely to succeed academically. Moreover, research indicates that a positive school climate, marked by high expectations, nurturing relationships, and avenues for active participation, is pivotal in fostering academic well-being and the achievement of educational goals (Marsh, 1990). Student motivation, particularly intrinsic motivation, has also been identified as a positive correlate of academic well-being and educational attainment (Salmela-Aro & Upadyaya, 2014). Finally, academic self-concept, representing an individual’s belief in their capabilities, is a crucial factor influencing academic well-being and educational achievement (Yeager & Dweck, 2012).

In light of the comprehensive literature review encompassing the theoretical underpinnings of open and distributed learning, the intersections of AI literacy, and the dynamics of academic well-being and educational attainment, this study aims to address a critical gap in understanding the complex relationships among these variables within the context of Iranian university students’ experiences in online classes with LMS. While previous research has extensively explored the individual components of AI literacy, academic well-being, and educational attainment, very few empirical investigations have examined the interplay between these constructs, particularly within the framework of open and distributed learning. Moreover, the evolving landscape of AI technologies, coupled with the unprecedented challenges posed by the COVID-19 pandemic, underscores the need for a nuanced examination of how undergraduate students navigate AI-driven online learning environments and the implications of this for their academic well-being and educational outcomes.

The participants were selected from Guangdong University of Foreign Studies in China and from Payame Noor University and the Tehran University e-Learning Center in Iran, and they came from diverse academic disciplines. Participants were selected through a random sampling technique to ensure a representative cross-section of the universities’ student populations. To determine the sample size for this investigation, we conducted a power analysis and sample size calculation.

Considering a preset significance level and power, the power analysis sought to determine the minimum sample size necessary for identifying statistically significant differences in the study’s results. The effect size, obtained from earlier studies on similar subjects, was included in this computation, and the significance level was fixed at 0.05. Statistical power was set at 0.80, a standard level in social science research. The study included 400 participants, 220 (55%) of whom identified as female and 180 (45%) as male. The participants’ ages ranged from 20 to 35 years, with a mean of 28 years (SD = 5). Half of the participants (50%) were aged 20–23, 25% were aged 23–26, and those aged 26–30 and 30–35 each made up 12.5%. The variation in the sample concerning age, gender, and field of study improves the applicability of the study’s conclusions to a larger group of young adults. Table 1 presents the participants’ demographic data.
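The power analysis described above can be illustrated with a minimal sketch. The study does not state which statistical test or effect size it used, so this example assumes, for illustration only, that the target effect is a bivariate correlation and applies the Fisher z approximation with a two-tailed α = 0.05 and power = 0.80. The function name `min_sample_size_correlation` and the effect sizes passed to it are hypothetical; this is not the authors' procedure.

```python
import math
from statistics import NormalDist


def min_sample_size_correlation(r: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate minimum n to detect a population correlation r (two-tailed test).

    Fisher z approximation: n = ((z_{1-alpha/2} + z_{power}) / C)^2 + 3,
    where C = 0.5 * ln((1 + r) / (1 - r)).
    """
    if not 0 < abs(r) < 1:
        raise ValueError("r must be non-zero and strictly between -1 and 1")
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-tailed test
    z_beta = z.inv_cdf(power)           # quantile corresponding to the desired power
    c = 0.5 * math.log((1 + abs(r)) / (1 - abs(r)))  # Fisher z-transform of r
    return math.ceil(((z_alpha + z_beta) / c) ** 2 + 3)


# Hypothetical effect sizes; the study does not report the value it used.
for r in (0.1, 0.3, 0.5):
    print(r, min_sample_size_correlation(r))
```

Under this approximation, detecting r = 0.3 requires about 85 participants, and smaller assumed effects drive the required sample size up quickly.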

Table 1

Demographic Profile of Participants

| Variable | Category | Number of participants | Percentage |
|---|---|---|---|
| Gender | Female | 220 | 55 |
| | Male | 180 | 45 |
| Age (years) | 20–23 | 200 | 50 |
| | 23–26 | 100 | 25 |
| | 26–30 | 50 | 12.5 |
| | 30–35 | 50 | 12.5 |
| Field of study | Basic sciences | 120 | 30 |
| | Humanities | 140 | 35 |
| | Engineering | 140 | 35 |

This study implemented a cross-sectional survey design, with structural equation modeling (SEM) as the analytical method. Students from the participating institutions were selected for the study and voluntarily completed the survey, which consisted of self-report measures designed to assess their academic achievement, academic well-being, and AI literacy. The cross-sectional design captured information from the student body at a single point in time. This made it easier to investigate the connections between the variables being studied, providing important information about the dynamics occurring in the given setting. The study’s main goal was to examine the variables affecting the student cohort’s educational attainment and academic well-being.

The chosen students completed a comprehensive survey. After the data collection process was finished, SEM analysis was conducted to examine the complex relationships between the variables of interest. SEM is a sophisticated statistical technique that makes it possible to evaluate complex relationships by considering both direct and indirect effects. We evaluated the proposed model’s overall fit and methodically tested the proposed relationships using SEM. This methodology thoroughly and systematically investigates the complex relationships between academic achievement, AI literacy, and student well-being.
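The decomposition into direct and indirect effects can be sketched for the simplest case of one predictor X (here, AI literacy), one mediator M (academic well-being), and one outcome Y (educational attainment). This is a minimal sketch, assuming observed standardized scores and ordinary least squares path estimates computed from the three pairwise correlations; it is a simplification, since PLS-SEM estimates paths between latent constructs. The function name `mediation_effects` and the input correlations are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MediationEffects:
    a: float         # path X -> M
    b: float         # path M -> Y, controlling for X
    direct: float    # path X -> Y, controlling for M (c')
    indirect: float  # a * b
    total: float     # direct + indirect


def mediation_effects(r_xm: float, r_xy: float, r_my: float) -> MediationEffects:
    """Standardized OLS path coefficients for a single-mediator model X -> M -> Y."""
    if abs(r_xm) >= 1:
        raise ValueError("X and M must not be perfectly correlated")
    a = r_xm  # with one standardized predictor, the slope equals the correlation
    denom = 1 - r_xm ** 2
    b = (r_my - r_xy * r_xm) / denom       # effect of M on Y holding X constant
    direct = (r_xy - r_my * r_xm) / denom  # effect of X on Y holding M constant
    indirect = a * b
    return MediationEffects(a, b, direct, indirect, direct + indirect)


effects = mediation_effects(r_xm=0.5, r_xy=0.4, r_my=0.5)  # hypothetical correlations
print(effects)
```

With standardized variables the total effect reproduces the X-Y correlation, which serves as a quick sanity check on the decomposition.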

In this study, three instruments were used to assess the study variables. The primary tool was the AI literacy scale developed by Wang et al. (2023), which comprises 12 items organized into four factors: awareness, usage, evaluation, and ethics. The items are designed to measure participants’ proficiency in these areas, yielding a comprehensive picture of AI literacy.

The second tool was the academic well-being scale, a self-report instrument for assessing students’ overall academic well-being. Its score is based on three primary dimensions: academic engagement, positive emotions, and sense of purpose. It uses a five-point scoring system, with a maximum score of 100. Higher scores indicate higher levels of academic well-being, providing a quantitative measure for evaluating and comparing participants’ well-being across these dimensions.

The third tool was the educational attainment scale, which focused explicitly on grade point average (GPA). This scale involved the meticulous consideration of students’ GPA for the present semester and the cumulative GPA encompassing all courses and semesters. Notably, in Iranian higher education, GPA is computed based on the grades acquired in individual classes. The standard GPA scale in this educational setting spans from 0 to 20, with 20 representing the pinnacle of achievement. Using this instrument, we could obtain a comprehensive overview of participants’ academic performance using a standardized metric widely recognized in the educational domain.
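The paragraph above states that GPA is computed from the grades obtained in individual classes on a 0 to 20 scale. A common way to do this is a credit-weighted mean; the sketch below assumes that weighting, which the source does not specify, and the `Course` type and `weighted_gpa` function are hypothetical. The cumulative GPA is the same calculation applied to all courses from all semesters.

```python
from dataclasses import dataclass


@dataclass
class Course:
    grade: float    # course grade on the 0-20 scale
    credits: float  # credit units (assumed here to be the weighting factor)


def weighted_gpa(courses: list[Course]) -> float:
    """Credit-weighted mean grade; a hypothetical helper, not an official formula."""
    total_credits = sum(c.credits for c in courses)
    if total_credits <= 0:
        raise ValueError("at least one course with positive credits is required")
    for c in courses:
        if not 0 <= c.grade <= 20:
            raise ValueError(f"grade {c.grade} is outside the 0-20 scale")
    return sum(c.grade * c.credits for c in courses) / total_credits


# Hypothetical transcript for one student.
semester_1 = [Course(17, 3), Course(15, 2), Course(19, 2)]
semester_2 = [Course(14, 3), Course(16, 3)]

semester_gpa = weighted_gpa(semester_2)                 # current-semester GPA
cumulative_gpa = weighted_gpa(semester_1 + semester_2)  # all courses, all semesters
print(round(semester_gpa, 2), round(cumulative_gpa, 2))
```

For this toy transcript the current-semester GPA is 15.0 and the cumulative GPA is about 16.08.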

Data for this study were acquired by administering a comprehensive survey questionnaire encompassing self-report measures targeting AI literacy, academic well-being, and educational attainment. The survey, designed for electronic delivery, was disseminated to the student cohort during a specified time frame. The anonymity and privacy of participants were meticulously safeguarded. The survey was structured to be anonymous, assuring respondents that their input would remain confidential. This method created an atmosphere in which students felt free to respond honestly and completely. Participants were more inclined to give frank feedback when worries about identification or consequences were removed.

An electronic invitation with detailed instructions and a hyperlink to the survey was sent to the selected students. Students gave their informed consent after being informed of the study’s goal and the importance of their involvement in supporting academic research. To encourage participation, students were told about potential rewards, including gift cards and participation certificates. Students could complete the survey at any convenient time within the allotted period. They used their own electronic devices, were provided with Internet connectivity, and were asked to respond thoughtfully, offering their viewpoints and firsthand accounts of their AI literacy, academic well-being, and educational attainment. This methodology aimed to obtain a nuanced and rich dataset that reflected the varied experiences and perspectives of the participating students.

A systematic statistical analysis was performed on the dataset to investigate the relationships between the key variables: AI literacy, academic well-being, and educational attainment. The analysis involved several steps. First, we cleaned the data to ensure the accuracy and completeness of the survey answers. Second, we maintained the dataset’s integrity by handling erroneous or missing data points through imputation or removal. Third, we calculated descriptive statistics, such as means and standard deviations, to summarize the central tendency, variability, and distribution of the key variables. We then carried out a reliability analysis to evaluate the internal consistency of the self-report measures, calculating Cronbach’s alpha coefficient for every scale in the survey. After that, we performed a bivariate correlation analysis, using Pearson’s correlation coefficient to determine the strength and direction of the associations between variables. Finally, SEM was used to test the proposed relationships between variables, allowing the evaluation of both direct and indirect effects within the model. To assess the adequacy of the proposed model, we examined model fit indices, including chi-square, the comparative fit index, the root mean square error of approximation, and the standardized root mean square residual.
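The reliability and correlation steps can be sketched with two textbook formulas: Cronbach's alpha, α = k/(k - 1) × (1 - Σ item variances / variance of total scores), and Pearson's r. This minimal sketch uses only the Python standard library and a small hypothetical item matrix (rows are respondents, columns are items); the function names are illustrative and this is not the software the authors used.

```python
from statistics import mean, variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix."""
    k = len(responses[0])  # number of items
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need a rectangular matrix with at least two items")
    items = list(zip(*responses))                          # transpose to item columns
    item_vars = sum(variance(col) for col in items)        # sample variance per item, summed
    total_var = variance([sum(row) for row in responses])  # variance of total scores
    return k / (k - 1) * (1 - item_vars / total_var)


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("samples must have equal length of at least 2")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


# Hypothetical 5-point responses from four respondents on three items.
scores = [[4, 5, 4], [2, 3, 2], [5, 5, 4], [3, 3, 3]]
print(round(cronbach_alpha(scores), 3))
print(round(pearson_r([1, 2, 3, 4], [2, 4, 5, 8]), 3))
```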

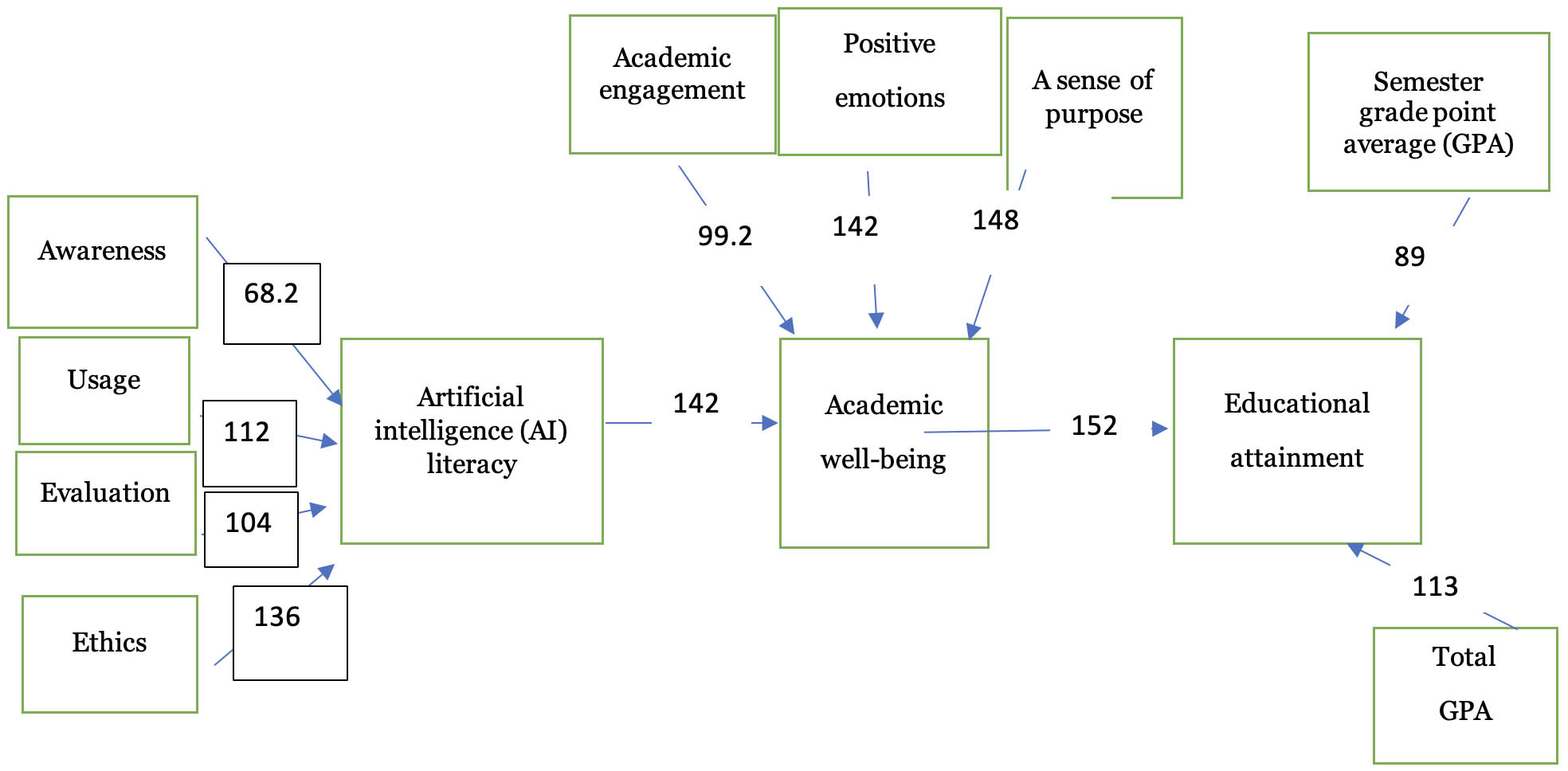

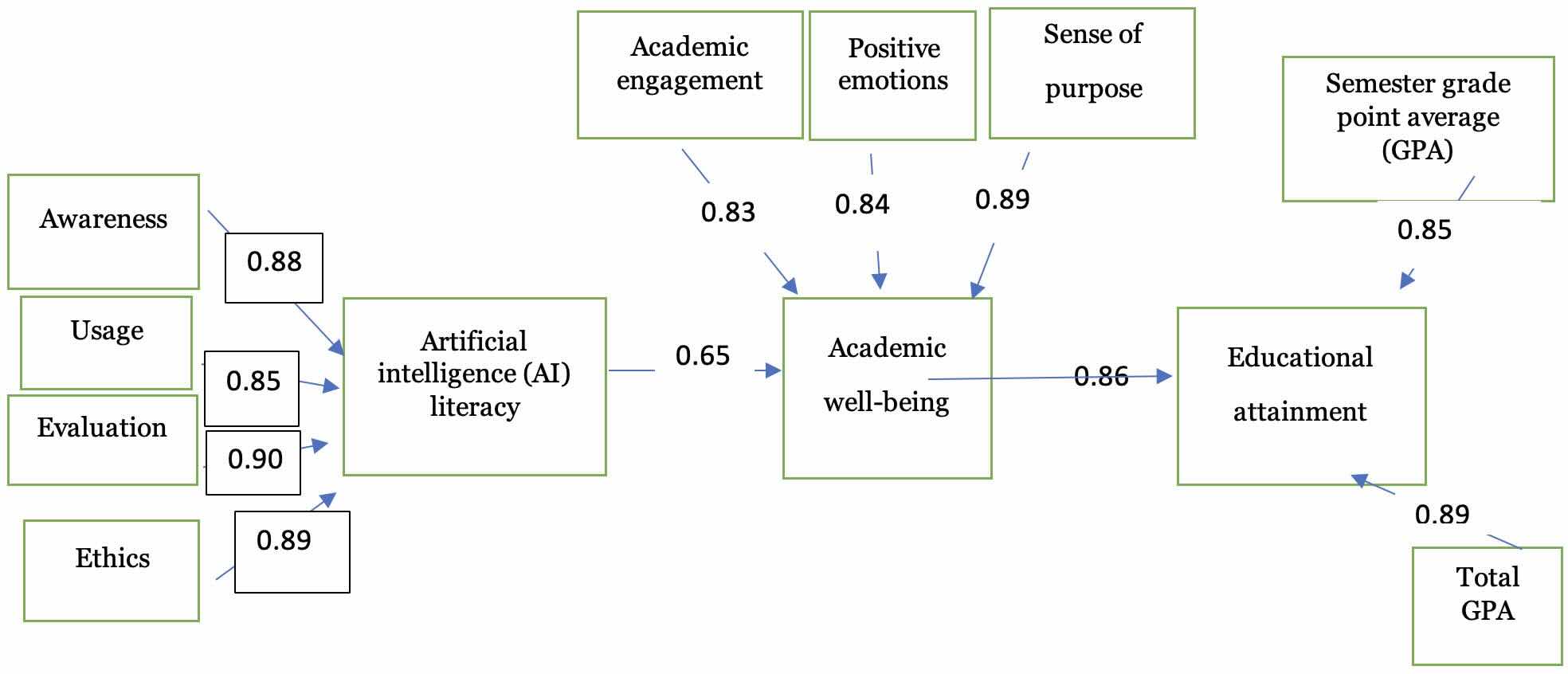

The study outcomes are presented in two parts: an assessment of the model and the results of the hypothesis tests. The theoretical model was appraised using multivariate analysis and SEM in Smart-PLS3 software. This choice was driven by the non-normal distribution of the data and the relatively modest sample size. Figures 2 and 3 present the model’s results, illustrating non-standard coefficients (indicating significance) and standard coefficients (reflecting effect coefficients), respectively.

Figure 2

Evaluation of Hypothetical Model Based on Non-Standard Coefficients (Significant Coefficients)

Figure 3

Evaluation of Hypothetical Model Based on Standard Coefficients

In general, when evaluating structural equation models with Smart-PLS3 software, researchers examine three models: the outer model, the inner model, and the overall empirical model. Similar to the measurement model in conventional SEM, the outer model shows the relationships between manifest (observed) variables, here represented as indicators, and latent (unobservable) variables, both independent and dependent. The inner model, which corresponds to the structural model or path analysis in SEM, examines the relationships among the latent variables. Finally, the overall empirical model evaluates the model’s global fit. Accordingly, we evaluated the measurement (outer) model, the structural (path) model, and the overall model of students’ educational attainment. Table 2 summarizes the findings on the measurement (outer) model.

Table 2

Psychometrics (Validity and Reliability) of the Hypothetical Model

| Construct | Indicator | FL | AVE | Fornell-Larcker criterion | Cronbach’s alpha | Rho-A | Composite reliability |
|---|---|---|---|---|---|---|---|
| Artificial intelligence (AI) literacy | Awareness | 0.826 | 0.740 | 0.870 | 0.920 | 0.940 | 0.910 |
| | Usage | 0.714 | | | | | |
| | Evaluation | 0.820 | | | | | |
| | Ethics | 0.923 | | | | | |
| Academic well-being | Academic engagement | 0.842 | 0.920 | 0.920 | 0.930 | 0.936 | 0.948 |
| | Positive emotions | 0.907 | | | | | |
| | Sense of purpose | 0.983 | | | | | |
| Educational attainment | Semester grade point average (GPA) | 0.84 | 0.880 | 0.9 | 0.940 | 0.9 | 0.930 |
| | Total GPA | 0.86 | | | | | |

Note. FL = factor loading; AVE = average variance extracted. FL and AVE assess convergent validity, the Fornell-Larcker criterion assesses divergent validity, and Cronbach’s alpha, Rho-A, and composite reliability assess reliability.

Table 2 and Figure 3 show that the factor loadings of the indicators on their respective constructs (AI literacy, academic well-being, and educational attainment) were statistically significant at the 0.01 level (p < 0.01) and exceeded the acceptable threshold of 0.7. This implies acceptable correlations between the latent variables and their observed indicators. Additionally, all constructs had average variance extracted (AVE) values greater than the acceptable cutoff of 0.5, demonstrating convergent validity. Furthermore, as indicated by Table 2, the square root of the AVE for each construct exceeds its correlations with the other constructs (the Fornell-Larcker criterion), confirming acceptable discriminant validity: each construct is more strongly associated with its own indicators than with other constructs. Lastly, the internal consistency (reliability) results in Table 2 show that Cronbach’s alpha for all research constructs exceeds the acceptable threshold of 0.7. Composite reliability and the Rho-A coefficient also exceed 0.7 for all constructs, indicating satisfactory reliability.
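The convergent-validity and reliability quantities discussed above follow standard formulas for standardized loadings λ: AVE is the mean of the squared loadings, and composite reliability is (Σλ)² / ((Σλ)² + Σ(1 - λ²)). The Fornell-Larcker criterion then checks that the square root of a construct's AVE exceeds its correlations with the other constructs. The sketch below implements these formulas with hypothetical function names and illustrative loadings, applying the cutoffs stated in the text; it is not a re-analysis of Table 2.

```python
import math


def ave(loadings: list[float]) -> float:
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings: list[float]) -> float:
    """Composite reliability, treating 1 - lambda^2 as each indicator's error variance."""
    s = sum(loadings)
    error = sum(1 - lam ** 2 for lam in loadings)
    return s ** 2 / (s ** 2 + error)


def fornell_larcker_ok(construct_ave: float, correlations_with_others: list[float]) -> bool:
    """True if sqrt(AVE) exceeds the absolute correlation with every other construct."""
    root = math.sqrt(construct_ave)
    return all(root > abs(r) for r in correlations_with_others)


def meets_thresholds(loadings: list[float], loading_min=0.7, ave_min=0.5, cr_min=0.7) -> bool:
    """Apply the cutoffs used in the text: loadings > 0.7, AVE > 0.5, CR > 0.7."""
    return (min(loadings) > loading_min
            and ave(loadings) > ave_min
            and composite_reliability(loadings) > cr_min)


loadings = [0.8, 0.8, 0.8]  # illustrative values, not taken from Table 2
print(ave(loadings), composite_reliability(loadings))
print(fornell_larcker_ok(ave(loadings), [0.5, 0.7]))
```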

This section evaluates all paths in the inner (structural) model, which represent the relationships between constructs specified by the research hypotheses, using t-tests for statistical significance. A path is considered statistically significant at the 95% confidence level if its t-value exceeds 1.96, and at the 99% confidence level if it exceeds 2.58. As illustrated in Figure 2, all hypotheses were supported, with t-values significant at the 99% or 95% confidence level (p < 0.01 or p < 0.05, respectively).
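PLS-SEM software such as SmartPLS typically obtains these t-values by bootstrapping: the path estimate is divided by the standard deviation of that estimate across resamples of the cases. The sketch below illustrates the idea for a single predictor, where the standardized path coefficient reduces to Pearson's correlation. It is a simplification rather than the full PLS algorithm, and the helper names, number of resamples, seed, and simulated data are all assumptions.

```python
import numpy as np


def bootstrap_t(x, y, n_boot=2000, seed=0):
    """Return (estimate, t) for the standardized slope of y on x, where
    t = full-sample estimate / bootstrap standard error (cases resampled with replacement)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)

    def std_slope(a, b):
        # With one predictor, the standardized slope equals Pearson's r.
        return float(np.corrcoef(a, b)[0, 1])

    estimate = std_slope(x, y)
    n = len(x)
    boot = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample case indices
        boot[i] = std_slope(x[idx], y[idx])
    se = boot.std(ddof=1)
    return estimate, estimate / se


def significance(t):
    """Two-tailed decision using the critical values 2.58 and 1.96."""
    t = abs(t)
    if t > 2.58:
        return "p < 0.01"
    if t > 1.96:
        return "p < 0.05"
    return "not significant"


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    x = rng.normal(size=400)            # simulated predictor scores (hypothetical)
    y = 0.5 * x + rng.normal(size=400)  # simulated outcome with a moderate effect
    est, t = bootstrap_t(x, y)
    print(f"estimate={est:.3f}, t={t:.2f}, {significance(t)}")
```

With 400 simulated cases and a moderate relationship, the t-value lands well above 2.58; the data are purely illustrative and say nothing about the study's own estimates.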

The coefficient of determination (R²) for the dependent variable (educational attainment), which indicates the proportion of its variance explained by the independent and mediating variables in the structural model, is 0.983, an exceptionally high value. Furthermore, the independent variable (AI literacy) explains 96.1% of the variance in the mediating variable (academic well-being).
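R² is one minus the ratio of residual to total sum of squares, and the f² effect size commonly reported alongside PLS path estimates compares R² with and without a given predictor. The sketch below uses ordinary least squares on observed scores as a simplified stand-in for the structural model; the function names, simulated data, and coefficients are hypothetical.

```python
import numpy as np


def r_squared(y, X):
    """R^2 of an ordinary least squares fit of y on the columns of X (intercept added)."""
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    ss_res = np.sum(resid ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)


def f_squared(r2_included, r2_excluded):
    """Cohen's f^2 for one predictor: the drop in R^2 when it is removed,
    relative to the variance left unexplained by the full model."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x1 = rng.normal(size=200)  # hypothetical predictor
    x2 = rng.normal(size=200)  # hypothetical second predictor
    y = 0.6 * x1 + 0.3 * x2 + rng.normal(scale=0.5, size=200)
    r2_full = r_squared(y, np.column_stack([x1, x2]))
    r2_without_x2 = r_squared(y, x1)
    print(f"R2 = {r2_full:.3f}, f2(x2) = {f_squared(r2_full, r2_without_x2):.3f}")
```

By Cohen's conventional benchmarks, f² values of about 0.02, 0.15, and 0.35 indicate small, medium, and large effects.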

The stated hypotheses were tested, and the results are presented in Table 3.

Table 3

Evaluation of the Stated Hypotheses

| Research hypothesis | Non-standard coefficient | p | Standard coefficient (effect size f) | Result |
|---|---|---|---|---|
| H1: AI literacy has a significant effect on undergraduate students' academic well-being. | 9.823 | p < 0.01 | 0.145 | Accepted |
| H2: Undergraduate students' academic well-being significantly affects their educational attainment. | 1.941 | p < 0.05 | 0.067 | Accepted |
| H3: Undergraduate students' AI literacy has a significant direct effect on their educational attainment. | 3.711 | p < 0.01 | 0.677 | Accepted |
| H4: Undergraduate students' AI literacy has a significant indirect effect on their educational attainment through the mediating role of academic well-being. | 21.070 | p < 0.01 | 0.778 | Accepted |
| H5: The hypothetical structural model of undergraduate students' AI literacy, educational attainment and academic well-being has a goodness of fit. | 21.070 | p < 0.01 | 0.778 | Accepted |
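Hypotheses H3 and H4 separate the direct effect of AI literacy from its indirect effect through academic well-being. In a linear mediation model, the indirect effect is the product of path a (AI literacy to academic well-being) and path b (academic well-being to educational attainment), and the total effect is the direct effect plus that product. PLS-SEM studies usually test the indirect effect by bootstrapping a×b; the sketch below swaps in the simpler closed-form Sobel test for illustration. All coefficients and standard errors are hypothetical, not the values in Table 3.

```python
import math


def indirect_effect(a: float, b: float) -> float:
    """Indirect effect of X on Y through mediator M: path a (X -> M) times path b (M -> Y)."""
    return a * b


def total_effect(c_prime: float, a: float, b: float) -> float:
    """Total effect in a linear mediation model: direct effect c' plus indirect effect a*b."""
    return c_prime + a * b


def sobel_z(a: float, se_a: float, b: float, se_b: float) -> float:
    """Sobel z for a*b, using the first-order standard error sqrt(b^2*se_a^2 + a^2*se_b^2)."""
    se_ab = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    return (a * b) / se_ab


if __name__ == "__main__":
    # Hypothetical path estimates and standard errors, for illustration only.
    a, se_a = 0.50, 0.05  # AI literacy -> academic well-being
    b, se_b = 0.40, 0.05  # academic well-being -> educational attainment
    c_prime = 0.30        # direct effect of AI literacy on attainment
    print(indirect_effect(a, b), total_effect(c_prime, a, b), round(sobel_z(a, se_a, b, se_b), 2))
```

The Sobel statistic assumes the product a×b is approximately normal, which is often not the case in small samples; that is the main reason bootstrapped confidence intervals are preferred in PLS-SEM.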

Finally, a comprehensive assessment of the model using the RMS Theta index, which registered a value of 0.623, suggests that the estimated model bears a relatively close resemblance to the theoretical model. Thus, the overarching research hypothesis, grounded in the structural and measurement models describing the impact of AI literacy on students' educational attainment with academic well-being as the mediating variable, is supported.

The findings of the study resonate strongly with the theoretical framework of open and distributed learning, as they highlight the transformative potential of online education in breaking down traditional barriers to learning (Bozkurt et al., 2015; Alavi et al., 2002). The accessibility provided by open and distributed learning aligns with the study’s emphasis on democratizing education, particularly for non-traditional students facing geographical or temporal constraints (Lea & Nicoll, 2013; Chen et al., 2021). Moreover, the learner-centered approach facilitated by online platforms reflects the empowerment of students to personalize their learning experiences, a key feature emphasized in the theoretical framework (Kop et al., 2011; Matheos & Archer, 2004). The integration of AI literacy within open and distributed learning environments represents both an opportunity and a challenge, echoing the study’s exploration of the intersection between AI literacy and educational attainment (Mühlenbrock et al., 1998). By using AI-driven algorithms to personalize learning experiences and support struggling learners, open and distributed learning platforms exemplify the innovative potential of technology to enhance educational outcomes, consistent with the study’s findings on the role of AI in education. Overall, the study underscores the significance of open and distributed learning in promoting lifelong learning, skill development, and academic well-being among diverse student populations, aligning closely with the principles espoused in the theoretical framework.

Moreover, the findings of this study align with the broader discourse on the evolving landscape of education, particularly in the context of the integration of AI and the changing nature of literacy. The distinction between emergency remote teaching and online learning, as highlighted by Hodges et al. (2020), becomes crucial in understanding the implications of AI literacy for academic well-being and educational attainment.

In a similar vein, the study underlines the significant impact of AI literacy on the academic well-being of undergraduate students. Insights from Davenport and Kalakota (2019) regarding the potential of artificial intelligence in healthcare resonate with the idea that AI literacy may contribute positively to students’ overall well-being in an academic setting. As educational environments increasingly incorporate AI-driven tools and resources, students with higher AI literacy skills may experience enhanced well-being, potentially stemming from increased efficacy in navigating and using AI technologies (Lee & Choi, 2017).

Moreover, the influence of AI literacy on academic well-being aligns with Ala-Mutka’s (2011) conceptualization of digital competence and Yeager and Dweck’s (2012) acknowledgment of the multifaceted nature of student success. The ability to effectively engage with AI technologies is a modern facet of digital competence, impacting students’ overall well-being and shaping their experiences in educational settings.

The study also establishes a significant connection between academic well-being and educational attainment. This finding is consistent with the broader literature emphasizing the intricate relationship between psychological well-being and academic success (Suldo et al., 2008; Salmela-Aro & Upadyaya, 2014). Positive emotions, dedication, and absorption, as captured by the Schoolwork Engagement Inventory (Salmela-Aro & Upadyaya, 2014), are crucial components of academic well-being that contribute to sustained effort and focus, ultimately influencing educational outcomes.

The direct effect of AI literacy on educational attainment underscores the role of AI literacy as a determinant of academic success. This is in line with Jarrahi's (2018) work on AI's impact on organizational decision-making, suggesting that proficiency in AI may translate into better-informed decision-making in academic contexts. The significant indirect effect of AI literacy on educational attainment, mediated by academic well-being, adds nuance to this relationship: students with higher AI literacy appear to benefit not only directly from their skills but also indirectly through the positive impact of those skills on their academic well-being. Long and Magerko's (2020) account of AI literacy competencies supports this holistic view of how AI literacy influences educational outcomes.

Integrating AI literacy into educational curricula becomes imperative, as Kandlhofer et al. (2016) suggest, to prepare students for the evolving technological landscape. The findings of the study by Kandlhofer et al. (2016) support the idea that AI literacy is not merely a technical skill but a factor influencing students’ well-being and educational achievements. Considering the challenges Stembert and Harbers (2019) identify in designing with AI, educational institutions need to foster a balance that accounts for the human aspects of AI integration. Our study contributes to understanding how AI literacy intersects with academic well-being and educational attainment among undergraduate students (Hao, 2019; Kay, 2012). The implications extend to educational practices, emphasizing the need for AI literacy education and holistic support for students to thrive in an AI-driven educational landscape. Future research could delve into specific AI literacy components and explore interventions that promote both AI literacy and well-being in educational settings (Liu et al., 2021; Mollman, 2022).

The implications of this study also extend to various stakeholders in the field of education, including language teachers, materials developers, and policymakers. For language teachers, the findings highlight the importance of integrating AI literacy into language instruction to equip students with the necessary skills to navigate and critically evaluate AI-driven language learning tools and resources. Language teachers can use AI technologies to personalize instruction, adapt materials to individual student needs, and provide targeted interventions to support language learning outcomes. Additionally, language teachers can use online platforms and LMS to create interactive and engaging learning experiences that foster student engagement and autonomy in language learning. Materials developers can use AI-driven algorithms to develop adaptive language learning materials that cater to learners’ diverse needs and preferences, ensuring accessibility and inclusivity in language education. Furthermore, policymakers can use the findings to advocate for the integration of AI literacy and online learning platforms into language education policies and curricula, promoting lifelong learning and continuous skill development in language learners. Policymakers can also invest in teacher training programs to enhance language teachers’ proficiency in using AI technologies effectively in language instruction, thereby fostering innovation and excellence in language education. Overall, the study underscores the transformative potential of AI and online learning in language education and highlights the need for collaborative efforts among language teachers, materials developers, and policymakers to harness these technologies for the benefit of language learners.

This study sheds light on the complex relationships among AI literacy, academic well-being, and educational attainment, and offers insight into the changing educational environment. The results highlight the significant influence of AI literacy on the academic well-being of undergraduate students, consistent with the broader discussion of how artificial intelligence can be used to improve a range of fields. Students with higher levels of AI literacy are likely to be more adept at navigating the growing number of AI-driven tools being integrated into educational environments, which is likely to support their success and well-being in the digital age. The observed relationship between academic well-being and educational attainment is consistent with existing literature on the complex relationship between psychological well-being and academic success. Positive emotions, dedication, and absorption, which are essential elements of academic well-being, strongly affect sustained effort and concentration, which in turn shape learning outcomes. In line with current discussions of AI's impact on decision-making, the direct relationship between AI literacy and educational attainment highlights AI literacy's critical role as a predictor of academic success.

The findings of this study have broad implications for educational practice, highlighting the need to include AI literacy in curricula not only as a technical skill but also as a critical factor in students' academic performance and well-being. The study also emphasizes the importance of a balanced approach, one that accounts for human elements, when designing with AI. By clarifying how AI literacy relates to academic well-being and educational attainment, this research highlights the value of comprehensive AI literacy education and holistic support for students to thrive in an AI-driven educational landscape. Future investigations could examine particular elements of AI literacy, as well as interventions that jointly advance AI literacy and student well-being in educational environments.

The authors confirm that the manuscript is original. Some standard statistical terms (such as structural equation modeling, confirmatory factor analysis, and average variance extracted) might be flagged by plagiarism checkers. We also acknowledge that we used the AI-empowered applications Poe and Grammarly to check the accuracy of the manuscript's language.

Ala-Mutka, K. (2011). Mapping digital competence: Towards a conceptual understanding (Report no. JRC67075). Institute for Prospective Technological Studies. https://www.researchgate.net/publication/340375234

Alavi, M., Marakas, G. M., & Yoo, Y. (2002). A comparative study of distributed learning environments on learning outcomes. Information Systems Research, 13(4), 404-415. https://doi.org/10.1287/isre.13.4.404.72

Bozkurt, A., Akgun-Ozbek, E., Yilmazel, S., Erdogdu, E., Ucar, H., Guler, E., Sezgin, S., Karadeniz, A., Sen-Ersoy, N., Goksel-Canbek, N., Deniz Dincer, G., Ari, S., & Aydin, C. H. (2015). Trends in distance education research: A content analysis of journals 2009-2013. International Review of Research in Open and Distributed Learning, 16(1), 330-363. https://doi.org/10.19173/irrodl.v16i1.1953

Budzianowski, P., & Vulić, I. (2019). Hello, it’s GPT-2—how can I help you? Towards the use of pre-trained language models for task-oriented dialogue systems. arXiv :1907.05774. https://doi.org/10.48550/arXiv.1907.05774

Calvani, A., Cartelli, A., Fini, A., & Ranieri, M. (2009). Models and instruments for assessing digital competence at school. Journal of E-Learning and Knowledge Society, 4(3). https://doi.org/10.20368/1971-8829/288

Chen, M., Gündüz, D., Huang, K., Saad, W., Bennis, M., Feljan, A. V., & Poor, H. V. (2021). Distributed learning in wireless networks: Recent progress and future challenges. IEEE Journal on Selected Areas in Communications, 39(12), 3579-3605. https://doi.org/10.1109/JSAC.2021.3118346

Cote, T., & Milliner, B. (2018). A survey of EFL teachers’ digital literacy: A Report from a Japanese University. Teaching English with Technology, 18(4), 71-89. https://tewtjournal.org/download/6-a-survey-of-efl-teachers-digital-literacy-a-report-from-a-japanese-university-by-travis-cote-and-brett-milliner/

Davenport, T., & Kalakota, R. (2019). The potential for artificial intelligence in healthcare. Future Healthcare Journal, 6(2), 94-98. https://doi.org/10.7861/futurehosp.6-2-94

Davenport, T. H., & Ronanki, R. (2018). Artificial intelligence for the real world. Harvard Business Review, 96, 108-116.

Gómez-Trigueros, I. M., Ruiz-Bañuls, M., & Ortega-Sánchez, D. (2019). Digital literacy of teachers in training: Moving from ICTs (information and communication technologies) to LKTs (learning and knowledge technologies). Education Sciences, 9(4), 274. https://doi.org/10.3390/educsci9040274

Hao, K. (2019, August 2). China has started a grand experiment in AI education. It could reshape how the world learns. MIT Technology Review. https://www.technologyreview.com/2019/08/02/131198/china-squirrel-has-started-a-grand-experiment-in-ai-education-it-could-reshape-how-the/

Hodges, C., Moore, S., Lockee, B., Trust, T., & Bond, A. (2020, March 27). The difference between emergency remote teaching and online learning. EDUCAUSE Review. https://er.educause.edu/articles/2020/3/the-difference-between-emergency-remote-teaching-and-online-learning

Hoffman, M., & Blake, J. (2003). Computer literacy: Today and tomorrow. Journal of Computing Sciences in Colleges, 18(5), 221-233.

Jarrahi, M. H. (2018). Artificial intelligence and the future of work: Human-AI symbiosis in organizational decision making. Business Horizons, 61(4), 577-586. https://doi.org/10.1016/j.bushor.2018.03.007

Kandlhofer, M., Steinbauer, G., Hirschmugl-Gaisch, S., & Huber, P. (2016). Artificial intelligence and computer science in education: From kindergarten to university. In IEEE Frontiers in Education Conference (FIE) (pp. 1-9). IEEE. https://doi.org/10.1109/FIE.2016.7757570

Kay, J. (2012). AI and education: Grand challenges. IEEE Intelligent Systems, 27(5), 66-69. https://doi.org/10.1109/MIS.2012.92

Kop, R., Fournier, H., & Mak, J. S. F. (2011). A pedagogy of abundance or a pedagogy to support human beings? Participant support on massive open online courses. International Review of Research in Open and Distributed Learning, 12(7), 74-93. https://doi.org/10.19173/irrodl.v12i7.1041

Lea, M. R., & Nicoll, K. (Eds.). (2013). Distributed learning: Social and cultural approaches to practice. Routledge. https://doi.org/10.4324/9781315014500

Lee, S., & Choi, J. (2017). Enhancing user experience with conversational agent for movie recommendation: Effects of self-disclosure and reciprocity. International Journal of Human-Computer Studies. https://doi.org/10.1016/j.ijhcs.2017.02.005

Li, G., Zarei, M. A., Alibakhshi, G., & Labbafi, A. (2024). Teachers and educators' experiences and perceptions of artificial-powered interventions for autism groups. BMC Psychology, 12. https://doi.org/10.1186/s40359-024-01664-2

Liu, X., Zheng, Y., Du, Z., Ding, M., Qian, Y., Yang, Z., & Tang, J. (2021). GPT understands, too. arXiv:2103.10385. https://doi.org/10.48550/arXiv.2103.10385

Long, D., & Magerko, B. (2020, April). What is AI literacy? Competencies and design considerations. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1-16). Association for Computing Machinery. https://aiunplugged.lmc.gatech.edu/wp-content/uploads/sites/36/2020/08/CHI-2020-AI-Literacy-Paper-Camera-Ready.pdf

Luo, X., Tong, S., Fang, Z., & Qu, Z. (2019). Frontiers: Machines vs. humans: The impact of artificial intelligence chatbot disclosure on customer purchases. Marketing Science, 38(6), 937-947. https://doi.org/10.1287/mksc.2019.1192

Marsh, H. W. (1990). The structure of academic self-concept: The Marsh/Shavelson model. Journal of Educational Psychology, 82(4), 623-636. https://doi.org/10.1037/0022-0663.82.4.623

Matheos, K., & Archer, W. (2004). From distance education to distributed learning: Surviving and thriving. Online Journal of Distance Learning Administration, 7(4), 1-13.

Metelskaia, I., Ignatyeva, O., Denef, S., & Samsonowa, T. (2018). A business model template for AI solutions. In Proceedings of the International Conference on Intelligent Science and Technology (pp. 35-41). Association for Computing Machinery.

Mollman, S. (2022). ChatGPT gained 1 million users in under a week. https://www.yahoo.com/video/chatgpt-gained-1-million-followers-224523258.html

Mühlenbrock, M., Tewissen, F., & Hoppe, U. (1998). A framework system for intelligent support in open distributed learning environments. International Journal of Artificial Intelligence in Education, 9, 256-274.

Norman, D. (2013). The design of everyday things: Revised and expanded edition. Basic Books.

Poria, S., Cambria, E., Bajpai, R., & Hussain, A. (2017). A review of affective computing: From unimodal analysis to multimodal fusion. Information Fusion, 37, 98-125. https://doi.org/10.1016/j.inffus.2017.02.003

Rosling, A., & Littlemore, K. (2011). Improving student mental models in a new university information setting. In Digitisation Perspectives (pp. 89-101). Brill.

Russell, S. J., & Norvig, P. (2010). Artificial intelligence: A modern approach. Pearson.

Salmela‐Aro, K., & Upadyaya, K. (2014). School burnout and engagement in the context of demands—resources model. British Journal of Educational Psychology, 84(1), 137-151. https://doi.org/10.1111/bjep.12018

Seligman, M. E. P., Ernst, R. M., Gillham, J., Reivich, K., & Linkins, M. (2009). Positive Education: Positive psychology and classroom interventions. Oxford Review of Education, 35(3), 293-311. https://doi.org/10.1080/03054980902934563

Stembert, N., & Harbers, M. (2019). Accounting for the human when designing with AI: Challenges identified. In CHI '19 Extended Abstracts, Glasgow, Scotland, UK, May 4-9, 2019.

Suldo, S. M., Shaunessy, E., & Hardesty, R. (2008). Relationships among stress, coping, and mental health in high-achieving high school students. Psychology in the Schools, 45(4), 273-290. https://doi.org/10.1002/pits.20300

Tao, J., & Tan, T. (2005). Affective computing: A review. In International Conference on Affective Computing and Intelligent Interaction (pp. 981-995). Springer.

Tarafdar, M., Beath, C. M., & Ross, J. W. (2019, June 11). Using AI to enhance business operations. MIT Sloan Management Review. https://sloanreview.mit.edu/article/using-ai-to-enhance-business-operations/

Terra, M., Baklola, M., Ali, S., & El-Bastawisy, K. (2023). Opportunities, applications, challenges and ethical implications of artificial intelligence in psychiatry: A narrative review. Egyptian Journal of Neurology, Psychiatry and Neurosurgery, 59(1), 80-90. https://doi.org/10.1186/s41983-023-00681-z

Vossen, G., & Hagemann, S. (2010). Unleashing Web 2.0: From concepts to creativity. Elsevier.

Wang, B., Rau, P. L. P., & Yuan, T. (2023). Measuring user competence in using artificial intelligence: Validity and reliability of artificial intelligence literacy scale. Behaviour & Information Technology, 42(9), 1324-1337. https://doi.org/10.1080/0144929X.2022.2072768

Wang, Y. Y., & Wang, Y. S. (2022). Development and validation of an artificial intelligence anxiety scale: An initial application in predicting motivated learning behavior. Interactive Learning Environments, 30(4), 619-634. https://doi.org/10.1080/10494820.2019.1674887

Wladawsky-Berger, I. (2017, February 22). The emerging, unpredictable age of AI. MIT Initiative on the Digital Economy. http://ide.mit.edu/news-blog/blog/emergingunpredictable-age-ai

Yeager, D. S., & Dweck, C. S. (2012). Mindsets that promote resilience: When students believe that personal characteristics can be developed. Educational Psychologist, 47(4), 302-314. https://doi.org/10.1080/00461520.2012.722805

How AI Literacy Affects Students’ Educational Attainment in Online Learning: Testing a Structural Equation Model in Higher Education Context by Jingyu Xiao, Goudarz Alibakhshi, Alireza Zamanpour, Mohammad Amin Zarei, Shapour Sherafat, and Seyyed-Fouad Behzadpoor is licensed under a Creative Commons Attribution 4.0 International License.