Ining Tracy Chao, Tami Saj, and Doug Hamilton

Royal Roads University, Canada

The issue of quality is becoming front and centre as online and distance education moves into the mainstream of higher education. Many believe collaborative course development is the best way to design quality online courses. This research uses a case study approach to probe into the collaborative course development process and the implementation of quality standards at a Canadian university. Four cases are presented to discuss the effects of the faculty member/instructional designer relationship on course quality, as well as the issues surrounding the use of quality standards as a development tool. Findings from the study indicate that the extent of collaboration depends on the degree of course development and revision required, the nature of the established relationship between the faculty member and designer, and the level of experience of the faculty member. Recommendations for the effective use of quality standards using collaborative development processes are provided.

Keywords: Course development; course development team; online course quality; quality standards; instructional design standards; distance education; online learning; online education

The issue of quality is becoming front and centre as online and distance education moves into the mainstream of higher education (Sloan Consortium, 2004). Recent studies have determined that regarding students’ academic performance, online learning can be as effective as face-to-face learning and, in some cases, more effective (Sachar & Neumann, 2010; Tsai, 2009; U.S. Department of Education, 2009). Despite these promising and illuminating findings, universities and colleges that offer online programs must reassure various stakeholders, including learners, that engaging in online studies will be an effective and rewarding learning experience and that they will acquire the necessary skills and knowledge a particular program promises to deliver. To help provide these reassurances to stakeholders, many institutions and regional bodies have developed or adopted quality-related principles or standards that serve to define quality, but the debate remains on how to best assess quality when the new forms of education are emerging and changing rapidly (Middlehurst, 2001).

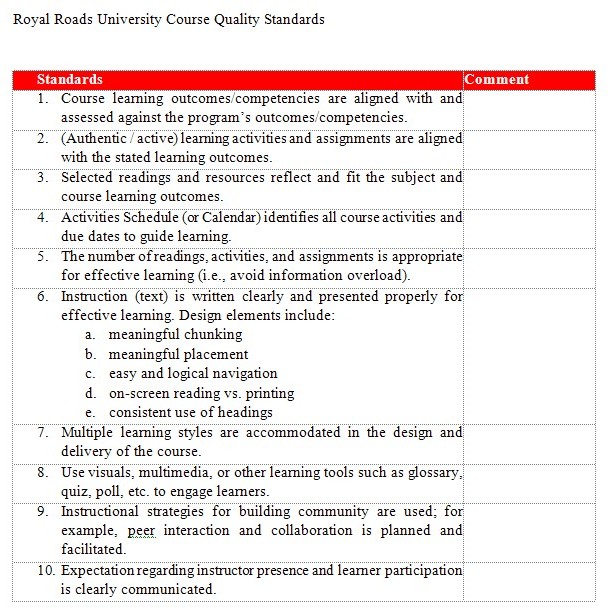

Royal Roads University (RRU) is one such institution offering applied and professional programs that feature substantive online study. Combining face-to-face residencies of one to four weeks with online courses in a cohort model, RRU’s programs have attracted many learners who appreciate the flexibility of a mixed model of delivery, especially if they are continuing to work full-time while taking a degree or certificate program. With over 600 courses being developed or revised annually, Royal Roads University needs to use a systematic approach to course development. All faculty members, including contract instructors, are supported by instructional designers in a centrally operated unit called the Centre for Teaching and Educational Technologies (CTET). This means each course must be designed and developed under the guidance of an academic lead and an instructional designer to ensure alignment with program outcomes and the university-wide instructional design quality standards, compiled and published by CTET in 2004 (Chao, Saj, & Tessier, 2004; see Appendix A). These standards consist of criteria related to learning outcomes and instructional strategies.

The instructional design quality standards have served primarily as a formative tool, with the use of the standards varying from one instructional designer to another. In addition, since the release of the quality standards, the University has formalized its curriculum and course quality assurance process by creating a university-wide, peer-based curriculum review and approval process, administered by the Curriculum Committee. As a result, it became necessary for CTET’s instructional design process to be aligned with this new process. A close examination of the course development process with the use of the instructional design quality standards is crucial in mapping a path forward to enhance the design and development of high-quality courses.

In most conventional higher education institutions, course design and development is accomplished by individual instructors. They draw up their course outlines based on their knowledge of a subject, without significant assistance from other university staff members. Thus, overall, the process of developing courses in higher education is a solitary one without consultation. The emergence of distance and online learning has contributed to a change in this process. A shared process of course development, referred to by Daniel (2009) as an industrial model of labour division for course development, has emerged in many higher education institutions. Instructional designers and technical personnel take part in the design and development of courses while instructors provide the subject matter expertise.

Instructional designers in CTET, like many practitioners in the field, advocate a collaborative course development model for quality online learning (Kidney, Cummings, & Boehm, 2007; Oblinger & Hawkins, 2006; Wang, Gould, & King, 2009). The main argument for adopting a collaborative development model is that designing a high-quality online course requires various sources of expertise not usually possessed by one person. Quite often, the development of an online course takes longer than the development of its face-to-face equivalent and requires the rethinking of pedagogy (Caplan, 2008; Knowles & Kalata, 2007). Proponents of distance and online education argue that the “lone ranger” model, in which an instructor learns how to design and teach an online course by himself or herself, is not scalable and does not lend itself to the diffusion of innovative practice in an organization (Bates, 2000, p. 2). The days of the star faculty member who can do it all are long gone. Staff with instructional design expertise, technical knowledge, and subject matter knowledge must collaborate to produce quality courses on a consistent basis (Oblinger & Hawkins, 2006).

Researchers have begun to investigate the relationship between course development and course quality. The Institute for Higher Education Policy identified seven categories of quality measures: institutional support, course development, teaching and learning, course structure, student support, faculty support, and evaluation and assessment. Under the course development category, an institution should establish minimum standards and continuous reviews to ensure quality (Merisotis & Phipps, 2000). A similar effort was made in Canada with the publication of the Canadian Recommended E-learning Guidelines. These guidelines defined quality outcomes with a strong emphasis on learner-centred curricula and customer-oriented services. They did not suggest a development model to achieve those outcomes but did imply the importance of routine review and evaluation of course content, design, teaching, student achievements, policies and management practices, and learner support (Barker, 2002). The Sloan Consortium’s framework also proposes five pillars of quality: learning effectiveness, cost effectiveness, access, faculty satisfaction, and student satisfaction. Again, among a myriad of measures, the Sloan-C framework proposes a collaborative approach to curriculum design. It states that “effective design involves resources inside and outside of the institution, engaging the perspectives of many constituents... [and] aiming to use the experience of learners, teachers, and designers” (Moore, 2002, p. 17).

Many higher-education institutions now have instructional designers at the centre of curriculum design and development activities. Instructional design as a discipline came from skill-based training in the military during World War II (Reiser, 2001). Generally, instructional design practice did not have a significant presence on university campuses until the late 1980s and early 1990s when Internet technology and the resulting advances in online learning models and practices became prevalent. This enhanced presence did not necessarily equate with success. The common practice of systematic design, such as the ADDIE model, simply did not fit well with the academic culture (Moore & Kearsley, 2004; Magnussen, 2005). Over the past two decades, instructional designers in higher education have needed to redefine their role and practice. The role of a change agent emerged as instructional designers worked side by side with faculty to rethink their teaching in order to integrate technology into course design and delivery (Campbell, Schwier, & Kenny, 2007). Not only do instructional designers play the role of advisers to faculty and departments on issues of curriculum and course quality, they also play a vital role in faculty development and institutional change when it comes to researching and implementing new learning technologies. Undoubtedly, instructional designers in higher education need to modify their approach and design models to fulfill their widening role and to make meaningful contributions. New design prototypes have evolved through field experience in higher education (Power, 2009), and role-based design has been proposed to transform the field of instructional design (Hokanson, Miller, & Hooper, 2008).

In summary, the literature cited reveals several important trends in course development. First, quality standards are receiving more attention as online education moves into the mainstream. Increasingly, universities and colleges are using standards to define quality. Second, instructional design is undergoing a transformation with the designer’s role changing to fit the shifting needs of higher education. Designers are (and could be) viewed as change agents. Team-based collaborative course development is highly regarded in the field. However, collaborative course development with the use of quality standards is in need of close examination in terms of its effectiveness and applicability in the large-scale production required by an online learning institution such as Royal Roads University. As Liston (1999) pointed out, building an effective quality culture requires, in part, prudent management of key processes.

This research investigates the course development process through the analysis of several case studies; as well, it explores the implications of collaboration on the enhancement of online course quality.

The study had three purposes: (1) determining how quality standards can be effectively used and implemented by faculty and instructional designers; (2) determining what kinds of collaborative processes involving faculty and instructional design staff best support the implementation of quality review processes; and (3) ascertaining how to make the development process as effective as possible by examining both the important elements of course quality and the key elements of collaboration.

Key questions in the research process are presented below:

(1) Elements of quality

(2) Elements of productive collaboration in course development

(3) Optimal development process

The research used a case study approach to examine how quality standards can be effectively implemented with a collaborative course development strategy. The case study is well established as a qualitative research method in the social sciences (Bromley, 1977). In each of the four cases selected for the study, an instructional designer worked with a faculty member to create and implement a collaborative process for using the quality standards to design and review an online course.

The four cases were selected, through purposive sampling, from different program areas to increase the breadth of the inquiry. This sampling process ensured that a diversity of courses, both new and those in revision, were examined. The faculty member’s level of experience with online courses was also taken into consideration during the sampling process. The small sample size also allowed an in-depth look into the course development process and the working relationship a faculty member forged with an instructional designer. All courses were offered within three months of one another and were of the same duration with a similar amount of content.

The four cases are listed below:

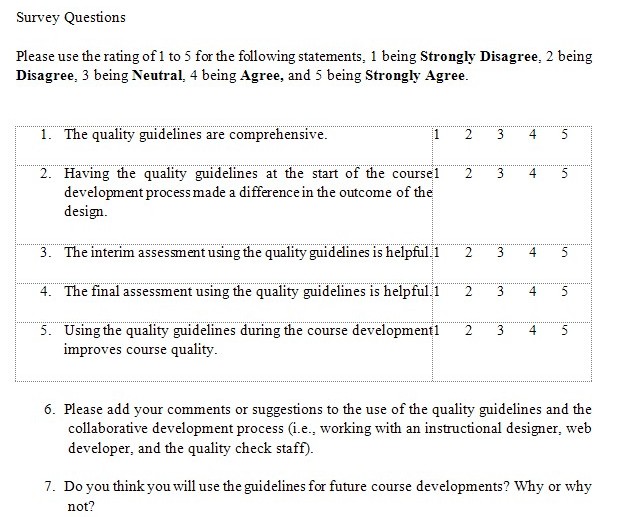

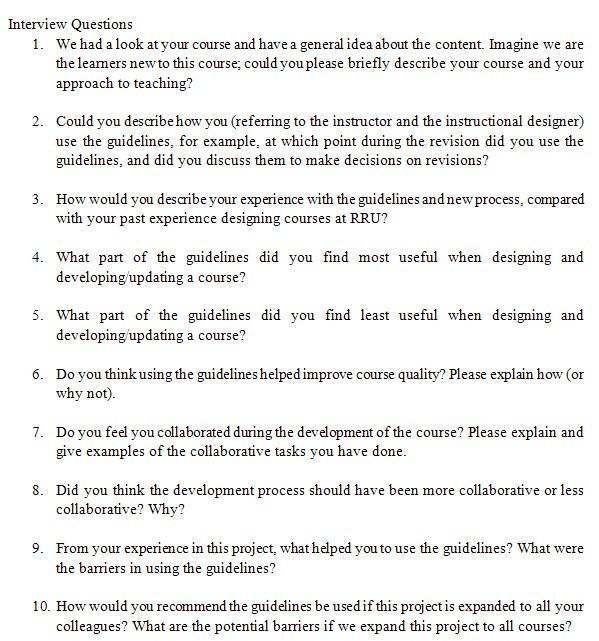

Both Yin (1984) and Stake (1995; 1998) argue that the use of multiple data-gathering strategies enhances the richness of the case analysis and increases the credibility of the reporting. Therefore, multiple data-gathering strategies in this study include document analysis, a survey, and semi-structured interviews. These three data-gathering strategies are described briefly below:

All interview transcripts and survey results were subjected to a thematic analysis of their content by the research team. Then these analyses were compared and re-examined until a common set of themes had been determined and agreed upon. These themes were used to code data from the transcripts using an inductive analytical approach as described by Huberman, Miles, and Lincoln (1994) and Mason (1996). As a form of interpretive research, the study placed emphasis on exploring the subjective and inter-subjective meanings that participants articulated as they reflected on their involvement in the course development process (Guba & Lincoln, 1994).

The research findings integrate the data gathered through the interviews and the open-ended survey responses.

It was clear that each faculty member and instructional designer focused on different quality standards as they took notes during the development. Interviews frequently referenced discussions that took place about what constitutes a quality course. Both the faculty members and the instructional designers felt that certain standards demanded more attention than others. For example, criteria related to learning outcomes and assessments were viewed as quite important. One faculty member said, “There are some guidelines that lend themselves well to the very early conceptualization of the course and the overall design.”

However, assessments of the value of specific guidelines varied among the development teams. Some teams considered the criteria related to learning outcomes most important, while others emphasized the criteria related to student workload and learning styles because those details tended to be overlooked in the course development process.

All participants indicated in the survey and in the interviews that the quality guidelines were helpful.

However, one instructional designer and one faculty member felt that using the guidelines at the start of the development process did not make much difference in the quality outcome of the course. All participants agreed that the guidelines were helpful at the end of the process as a checklist: “I used [the guidelines] when I first received them, starting the development, and then I used them again when I was finishing up [the last details].”

Some participants also stressed that the guidelines were only helpful if they could be adapted based on the needs of the course, of the instructional designer, and of the faculty member, and that they could not be used in isolation. One designer stated, “I would not recommend using [the guidelines] without a discussion of how they apply to each specific course.”

A faculty member wrote, “Guidelines can’t be separated from the conversations that occur with the instructional designer—they won’t be effective on their own.”

Even though the guidelines were used in different ways in the four cases, several participants commented that the guidelines provided an objective, outside perspective on what was important in the course development process and helped to expand their overall development toolkit.

On a university-wide level, the findings provided some interesting insights into how course development relates to other entities within the university. In particular, the participants indicated that the guidelines helped them to better prepare for the Curriculum Committee review process:

…in my previous experience with [the] Curriculum Committee, instructors go into it by themselves, never quite sure what to include or leave out [in their curriculum submissions]. With [these guidelines], they’d get far more guidance and help to produce something valuable.

The guidelines also served to provide an institutional definition of course quality for faculty and for learners. The following comment illustrates such a viewpoint: “Sometimes instructors, I think, don’t realize what goes on behind the scenes, [that] what they are doing is part of a larger process…this reminded me of that.”

The survey data and interviews suggested that the participants’ views on the usefulness of quality guidelines depended on their level of experience. For a relatively new faculty member, the guidelines served as an orientation and helped to clarify how to create a successful course. The instructional designer who was relatively new to Royal Roads commented that the guidelines helped to establish consistency in the development process.

One experienced faculty member indicated that the guidelines complemented existing training and experience and were a positive reinforcement of faculty members’ pre-existing competency. Faculty also characterized the guidelines as a “reminder,” a “reference,” and a “checklist.” The guidelines were used as a validation step to gauge the robustness of the instructional design qualities of the course, which provided the faculty member with more confidence that he or she was “doing the right things” while helping to ensure that he or she “didn’t miss anything.” One faculty member said that it helped to “refresh my memory.” In other words, the guidelines were seen as a positive and empowering tool in the course development process, highlighting how much the faculty member and the instructional designer already knew.

A couple of responses touched on the time pressures that faculty members face during the course development process, indicating that the guidelines were more helpful when not dealing with short timelines and acute time pressures, leading to speculation that the use of the specific standards would need to be prioritized or used selectively.

Having rapport is a crucial factor in collaboration. This means that the instructional designer and the faculty member are familiar with each other’s working styles. For the instructional designer, the rapport comes from her familiarity with the course content and the faculty member’s teaching philosophy. One instructional designer said, “We’ve known each other for a long time so we [have] already established that rapport working together.”

Another commented, “...it comes down to building relationships, having the time, having that strong foundation.”

A faculty member further commented:

… [the instructional designer] knew the program very well… it didn’t take me too long to explain… with a certain understanding with content, because she knew exactly what the structure and the overall structure of the process and the overall rationale of the program. It helps a great deal.

It seems easier to take a collaborative approach to course design when the relationship between the instructional designer and faculty member has already been established. This relationship may be strengthened at the personal level when the pair has known each other for a long time and has a history of successful collaboration.

Without the history of working together, however, the faculty member and the instructional designer appear to become a productive team if they have enough time to establish expectations up front and if they allow themselves to move at a pace that gives them room to listen to feedback and to reflect. Collaboration was fostered by what an instructional designer called “early conversations.” She commented, “The first conversation was really all-encompassing; I think it’s not just the design, but it’s the goal and how we approach this and the underlying teaching philosophy.”

Another instructional designer described the exchange she had with an instructor during their first meeting for their first course project together:

[the instructor] has some strong feelings [about] participation marks. So after hearing him talk about it, I could see his point and see his reasoning, and I think my biggest advice to you was to make it clear up front what you think and why you think that.

These conversations, whether face-to-face, by phone, or by email, created a sense of team solidarity because they helped create a shared understanding and vision. Also, having an upfront discussion about vision and goals for a course helped to set the stage for further discussions related to the elements of course quality. One instructional designer said,

[there is] value in actually having that first conversation to get a better understanding of what your objectives are in terms of revisions, what you want to see out of the course, and how you want to improve the experience.

Using the guidelines facilitated a team approach to course revision. For the faculty members, this was a positive experience because it seemed as though there was shared responsibility among various people for enhancing the course (e.g., faculty members themselves, instructional designers, web developers, even the Curriculum Committee). But one faculty member did comment that he felt “vulnerable” having so many eyes looking in on his course, that he had to get used to this team approach, but that he came to appreciate it by the end. There is no doubt that a faculty member’s willingness to be open to feedback is very important in the collaborative process as well as an instructional designer’s investment in building rapport and in understanding an individual faculty member’s teaching approach.

Several factors related to collaboration could hinder the development of a quality course. Participants seemed to agree that introducing all the guidelines at once could be overwhelming, especially when the development timeframe is short.

For example, in one case, the instructional designer used the guidelines as a template to provide feedback. The faculty member reported feeling overwhelmed by the amount of detailed comments beside many of the criteria and thought all comments needed to be addressed before the course went to the Curriculum Committee. Further discussion with the instructional designer revealed that this was not the case, leading the faculty member to feel that using the guidelines in this way confused matters.

It became apparent to faculty members and instructional designers that different criteria were important at different stages of the course development. Also, faculty members and instructional designers felt that they should have the freedom to adapt the guidelines to their level of experience and to the circumstances of the course development project. According to the study participants, early and clear communication about how the guidelines were going to be used was also important. One instructional designer said that unclear expectations and information overload risked damaging a positive working relationship.

Everyone seemed to view collaboration as a positive experience and a necessary step in producing quality courses. However, it is a double-edged sword, as one instructional designer indicated:

The downside is it’s labour intensive...But we got a much better outcome, and that much better outcome saves us a lot of time down the road. Because we’ll be better received by learners, it’ll be a much better experience for them…So if you look at the whole picture, I think it’s better.

Participants’ responses indicated that collaboration is viewed as time consuming. However, if the team establishes shared meaning, a shared vision, and a productive working relationship early enough, collaboration can reduce the time and work spent later on fixing problems that would otherwise compromise the quality of a course.

Overall, the participants felt that it wasn’t necessary to introduce the guidelines in a formal and artificial way when their collaborative work “naturally flowed.” They used parts of the guidelines when they needed to and in a way that suited their workflow.

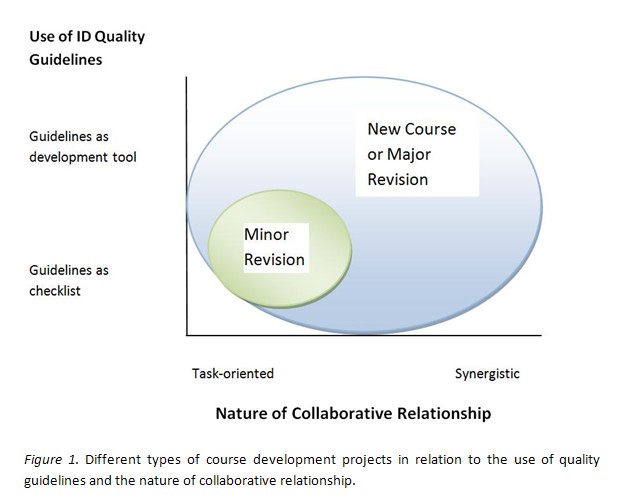

In addition to the faculty member’s level of experience, the nature of the course development project affected the way the instructional designer and faculty member worked together. In the cases of a new course or major revision, collaboration played an important role, requiring relationship building and visioning to create synergy in the team. If the course required a minor revision, the nature of the collaboration became task-oriented, rather than based on building a vision and relationship. One instructional designer commented on the importance of collaboration when developing a new course:

…it’s more effective and it really helps the course quality if the guideline is used in conjunction with a very collaborative approach. And that’s why I find it takes that initial discussion, the overarching discussion we have about teaching design— because [the guidelines document] is an additional tool, on top of a very strong collaboration approach, just brings so much more value and will no doubt produce much better course quality.

In contrast, the instructional designer who worked on a minor revision said:

I don’t know that we did a lot of collaboration. I mean, we did updates based on past experience of the course. I reviewed the course…We’re not finished as well because we’ll look to the web developer coming in and looking over images. I think there’s going to be more opportunities to look at the course again….What [the faculty member] intends with the images …we didn’t have those conversations about the course.

There is no doubt that faculty members and instructional designers have different levels of experience and different working styles. Each course project has unique characteristics. All of these factors influence the collaborative process.

Furthermore, there was strong agreement among the participants that the quality standards need to be used flexibly in different course development situations to accommodate unique course development needs, individual teaching styles, and differing program contexts. As well, participants referred to the need for an “evolving” use of the standards during the course development process, which would allow them to make the different standards as meaningful as possible when they were most relevant in the course development process.

From the interview and survey results reported, it is evident that the instructional design quality guidelines were valued by faculty members and instructional designers as being informative in the course development process. The degree of helpfulness of the guidelines, however, appears to be influenced by the experience level of the faculty member involved. There was strong agreement among participants that the guidelines are more helpful for new and less-experienced faculty members. In all four cases, however, the participants indicated that they valued the guidelines as part of the overall review process before the course was launched. As a whole, participants placed the most value on the guidelines related to outcomes and assessment, although this perspective varied among the four development teams.

The four cases revealed different patterns of collaboration between the faculty member and the instructional designer. Establishing rapport early in the course development process was important and was made easier when a strong relationship had been established between the faculty member and instructional designer. Having sufficient time, or creating opportunities to dedicate time, for the mutual and respectful exchange of expectations/reflections about the course early in the development process was important in developing a shared understanding of what revisions were required and how the development process was to proceed.

All participants viewed the collaboration between the faculty member and the instructional designer to be a positive experience. Nevertheless, participants were able to cite factors related to the collaboration that hindered or potentially hindered producing a course that met the quality standards. Addressing all of the quality standards at the same time appeared to be overwhelming to faculty members and, therefore, limited the usefulness of the guidelines as both a course development tool and as a checklist on course quality. The responses of participants indicated that, based on their collective experience, the standards should be viewed as a set of guidelines that are flexibly and systematically introduced, along with a discussion of how to make the best use of them throughout the course development process. How the guidelines are used should depend on the nature of the course, the working relationship between the instructor and instructional designers, and the experience level of the instructor.

The study has a limitation, however. This research examined the relationship between faculty members and instructional designers in the four case studies but did not take into account the perspectives of other personnel who might have played important roles in the course development process, such as the program head and web developer.

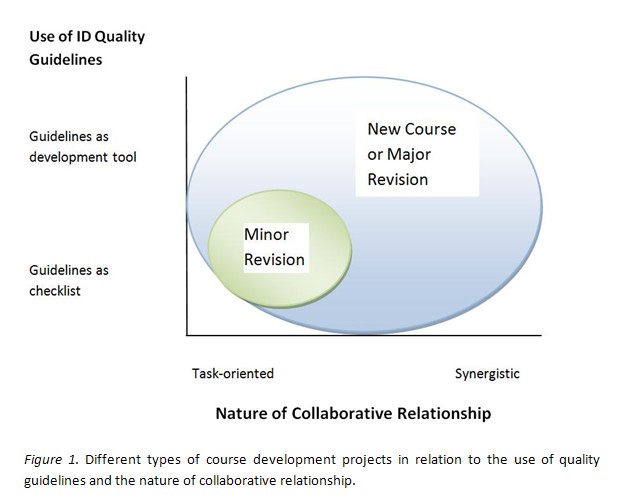

Despite this limitation, a distinction between two types of specific uses of the quality guidelines has clearly emerged. Understanding these uses among the four cases sheds light on the degree and nature of the collaborative relationship that is most helpful in improving the course development process. Figure 1 illustrates the type of course development in relation to the implementation of the standards (i.e., guidelines used as a checklist vs. guidelines used as a development tool) and the nature of the collaborative relationship between the faculty member and the instructional designer (task-oriented vs. synergistic relationship).

In cases B, C, and D, where the courses had been designed and taught before, the team used the guidelines as a checklist. The faculty member and the instructional designer took a task-oriented approach. There was not as much time invested in discussing high-level design questions, nor was there much time dedicated to developing the relationship between the two team members. In addition, when an instructional designer and a faculty member already have a strong rapport, the revision is quite efficient as the team shares an understanding of the course’s pedagogy and each other’s working styles. On the other hand, in new courses or courses requiring extensive revisions, such as Course A in the study, faculty members and instructional designers were willing to invest time and effort in relationship-building activities that helped the team members develop a common vision for the course. Thus, the instructional design standards were a development tool used to set expectations, guide teamwork, and create opportunities for dialogue about the expectations for the course.

Taking all the findings together, there seems to be a need to better define the scope of course development required in individual courses and the level of collaboration necessary to produce a high-quality course. It is clear that the need for an elaborate collaboration process is the greatest when a new course is being developed. Therefore, new courses may benefit from a highly collaborative process, more so than courses requiring less extensive development or re-development. The cases suggest that a collaborative development process that integrates the use of quality standards throughout the process would produce quality courses, primarily when the development work is complex and extensive. Such an approach has an added value of maintaining consistent quality at the institutional level, orienting new faculty members and instructional designers, and rejuvenating course development teams as the guidelines remind them of what is important in a quality course.

The cases also revealed a distinction between the extent of collaboration required to effectively support new course development and the extent required to support revision-based course development. Thus, it would be useful to find a better way of judging the scope of a course development project from the outset so that different and more efficient processes could be implemented while ensuring that the quality standards are met.

Finding an optimal development process and a clear distinction between new course development and revision-based development has implications for an efficient, large-scale course development operation at an educational institution with extensive online course offerings, such as Royal Roads University. In the Sloan Consortium’s quality framework, cost-effectiveness is a pillar equal to all other measures (Sloan Consortium, 2004). It implies that quality is a value determined by the ratio of benefits to cost. In other words, are the resources devoted to the elaborate collaboration justified in terms of producing the highest quality? Do all courses, regardless of the development scope, require a highly collaborative process? These remain crucial yet unanswered questions, even though the consensus in the field is to use collaborative approaches and to utilize the skills of instructional designers, web developers, graphic designers, and other IT personnel on a development team (Caplan & Graham, 2008; Knowles & Kalata, 2007).

Finally, our findings and conclusions from the four cases warrant the following recommendations, which course development teams may wish to consider in using quality standards effectively:

Barker, K. (2002). Canadian recommended e-learning guidelines. Retrieved from http://www.futured.com/pdf/CanREGs%20Eng.pdf.

Bates, A. W. (2000). Managing technological change: Strategies for college and university leaders. San Francisco, CA: Jossey-Bass, Inc.

Bromley, D. B. (1977). The case-study method in psychology and related disciplines. Chichester, UK: Wiley.

Campbell, K., Schwier, R., & Kenny, R. (2007). The critical, relational practice of instructional design in higher education: An emerging model of change agency. Educational Technology Research & Development, 57(5), 645-663.

Caplan, D., & Graham, R. (2008). The development of online courses. In T. Anderson (Ed.), Theory and practice of online education. Athabasca, Alberta: AU Press.

Chao, T., Saj, T., & Tessier, F. (2006). Establishing a quality review for online courses. Educause Quarterly, 29(3), 32-39. Retrieved from http://www.educause.edu/apps/eq/eqm06/eqm0635.asp.

Daniel, S. J. (2009). Is e-learning true to the principles of technology? Presented at the World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education 2009. Retrieved from http://www.editlib.org/p/33043.

Guba, E. G., & Lincoln, Y. S. (1994). Competing paradigms in qualitative research. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (pp. 105-117). Thousand Oaks, CA: Sage Publications.

Hokanson, B., Miller, C., & Hooper, S. R. (2008). Role-based design: A contemporary perspective for innovation in instructional design. TechTrends: Linking Research & Practice to Improve Learning, 52(6), 36-43.

Huberman, A. M., & Miles, M. B. (1994). Data management and analysis methods. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (pp. 428-444). Thousand Oaks, CA: Sage Publications.

Kidney, G., Cummings, L., & Boehm, A. (2007). Towards a quality assurance approach to e-learning courses. International Journal on E-Learning, 6(1), 17-30.

Knowles, E., & Kalata, K. (2007). A model for enhancing online course development. Innovate, 4(2). Retrieved from http://innovateonline.info/index.php?view=article&id=45.

Liston, C. (1999). Managing quality and standards. Buckingham, UK: SRHE/Open University Press.

Magnusson, J. L. (2005). Information and communications technology: Plugging Ontario higher education into the knowledge society. Encounters on Education, 6(Fall), 119-135.

Mason, J. (1996). Qualitative researching. Thousand Oaks, CA: Sage Publications.

Merisotis, J. P., & Phipps, R. A. (2000). Quality on the line: Benchmarks for success in internet-based distance education. Retrieved from http://www.ihep.org/Publications/publications-detail.cfm?id=69.

Middlehurst, R. (2001). Quality assurance implications of new forms of higher education: Part 1: A typology. Retrieved from http://www.enqa.eu/files/newforms.pdf.

Moore, J. C. (2002). Elements of quality: The Sloan-C framework. U.S.A.: The Sloan Consortium.

Moore, M. G., & Kearsley, G. (2004). Distance education: A systems view (2nd ed.). Belmont, CA: Wadsworth.

Oblinger, D., & Hawkins, B. (2006). The myth about online course development. Educause Review, January/February, 14-15.

Power, M. (2009). A designer’s log: Case studies in instructional design. Edmonton, AB: Athabasca University Press.

Reiser, R. (2001). A history of instructional design and technology: Part II: A history of instructional design. Educational Technology Research and Development, 49(2), 57-67.

Sachar, M., & Neumann, Y. (2010). Twenty years of research on the academic performance differences between traditional and distance learning: Summative meta-analysis and trend examination. MERLOT Journal of Online Learning and Teaching, 6(2), 318-334.

Stake, R. E. (1995). The art of case study research. Thousand Oaks, CA: Sage Publications.

Stake, R. E. (1998). Case studies. In N. K. Denzin & Y. S. Lincoln (Eds.), Strategies of qualitative inquiry (pp. 86-109). Thousand Oaks, CA: Sage Publications.

The Sloan Consortium. (2004). Elements of quality online education: Into the mainstream. Retrieved from http://www.sloan-c.org/intothemainstream_wisdom.

Tsai, M.-J. (2009). The model of strategic e-learning: Understanding and evaluating student e-learning from metacognitive perspectives. Educational Technology & Society, 12(1), 34-48.

U.S. Department of Education. (2009). Evaluation of evidence-based practices in online learning: A meta-analysis and review of online learning studies. Retrieved from http://www.ed.gov/rschstat/eval/tech/evidence-based-practices/finalreport.pdf.

Wang, H., Gould, L. V., & King, D. (2009). Positioning faculty support as a strategy in assuring quality online education. Innovate, 5(6). Retrieved from http://innovateonline.info/index.php?view=article&id=626&action=login.

Yin, R. K. (1984). Case study research: Design and methods. Thousand Oaks, CA: Sage Publications.